PyTorch Advanced: Visualization

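Contents

1. Visualizing the network structure

1.1 Installing torchinfo

2. Visualizing CNN convolutional layers

2.1 Understanding detach()

2.2 Visualizing CNN feature maps

2.3 Visualizing CNNs with class activation maps (CAM)

2.4 CNN visualization with FlashTorch

3. Visualization with TensorBoard

3.1 Installing TensorBoard

3.2 How TensorBoard visualization works

3.3 Configuring and launching TensorBoard

3.4 Visualizing images in TensorBoard

3.5 Visualizing continuous variables

3.6 Visualizing parameter distributions

3.7 Problems encountered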

1. Visualizing the network structure

# PyTorch visualization
import torch
import torchvision.models as models
from torchinfo import summary

# pretrained=True is deprecated in newer torchvision (>= 0.13); weights= loads the ImageNet weights
model = models.vgg11(weights=models.VGG11_Weights.DEFAULT)

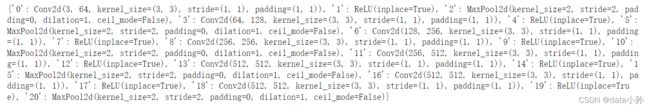

Printing with print(dict(model.features.named_children())) or print(model) only shows how the model is assembled from its layers. It does not show the output shape of each layer. torchinfo fills this gap.

1.1 Installing torchinfo

pip install torchinfo

# PyTorch visualization
import torch
import torchvision.models as models
from torchinfo import summary

model = models.vgg11(weights=models.VGG11_Weights.DEFAULT)
# print(dict(model.features.named_children()))
summary(model, (1, 3, 224, 224))  # 1 = batch_size, 3 = image channels, 224 = image height and width

For every layer, the output gives its type, output shape and number of parameters. It then reports the model's total parameter count, its size, and the memory one forward/backward pass needs.

2. Visualizing CNN convolutional layers

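import matplotlib.pyplot as plt

# print(dict(model.features.named_children()))  # list the layer names to choose one
conv1 = dict(model.features.named_children())['3']  # layer '3' of vgg11.features is Conv2d(64, 128)
# detach() returns the weights as a tensor that autograd does not track, so they can be plotted
kernel_set = conv1.weight.detach()
num = len(kernel_set)  # number of kernels (output channels)
print(kernel_set.shape)  # torch.Size([128, 64, 3, 3])
for i in range(0, num):  # opens one figure per kernel
    i_kernel = kernel_set[i]  # one kernel: 64 filters (one per input channel) of size 3x3
    plt.figure(figsize=(20, 17))
    if len(i_kernel) > 1:
        for idx, filt in enumerate(i_kernel):
            plt.subplot(9, 9, idx + 1)  # a 9x9 grid holds all 64 filters
            plt.axis('off')
            plt.imshow(filt[:, :], cmap='bwr')

2.1 Understanding detach()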

import torch

a = torch.tensor([1, 2, 3.], requires_grad=True)

print(a.grad)

out = a.sigmoid()

print(out)

# After detach(), c has requires_grad=False

c = out.detach()

print(c)

print(id(out))

print(id(c))

# c has not been modified, so backward() is unaffected

out.sum().backward()

print(a.grad)

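According to the official documentation, detach() returns a new tensor that is cut off from the current computation graph. The different id() values show that it is a new object. It never requires gradients (requires_grad=False), so no gradient flows back through it. The new tensor shares storage with the original, so an in-place change to either one shows up in the other. In-place operations that change the detached tensor's size, stride or storage, such as resize_, resize_as_, set_ and transpose_, raise an error.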
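The example above only works because c is left untouched. The minimal sketch below uses the same tensors and shows the other side. An in-place change made through c is visible through out. Autograd also notices that the output saved by sigmoid for its backward pass was overwritten, so backward() fails.

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
out = a.sigmoid()
c = out.detach()

# c is cut off from the graph ...
c_requires_grad = c.requires_grad                # False
# ... but it points at the same memory as out
shares_memory = c.data_ptr() == out.data_ptr()   # True

c.zero_()    # in-place change through c ...
print(out)   # ... is visible through out: all zeros now

# sigmoid's backward uses its saved output, which c.zero_() overwrote;
# the version counter shared by c and out detects this
try:
    out.sum().backward()
    backward_failed = False
except RuntimeError as err:
    backward_failed = True
    print("backward failed:", err)
```

If a detached tensor has to be modified freely, c = out.detach().clone() gives it its own memory.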

2.2 Visualizing CNN feature maps

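Feature maps complement the convolution kernels. A feature map is the data produced each time the input image passes through a convolution. Visualizing the kernels shows which features the model extracts. Visualizing the feature maps shows what those extracted features look like for a given input. Below, a forward hook records a chosen layer's input and output during the forward pass.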

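import torch
import matplotlib.pyplot as plt
from torchvision import transforms
from torchvision.models import vgg11
from PIL import Image
import numpy as np

class Hook(object):
    def __init__(self):
        self.module_name = []
        self.features_in_hook = []
        self.features_out_hook = []

    # __call__ lets an instance be called like a function; PyTorch calls it after the layer's forward
    def __call__(self, module, fea_in, fea_out):
        print('hooker working', self)
        self.module_name.append(module.__class__)
        self.features_in_hook.append(fea_in)    # tuple of the layer's inputs
        self.features_out_hook.append(fea_out)  # the layer's output tensor
        return None

def plot_feature(model, idx, inputs):
    hh = Hook()  # create a hook object
    handle = model.features[idx].register_forward_hook(hh)  # register it on layer idx
    model.eval()
    with torch.no_grad():
        _ = model(inputs)  # forward pass; the hook records layer idx's input and output
    handle.remove()  # unregister so later forward passes are not recorded again
    print(hh.module_name)
    print(hh.features_in_hook[0][0].shape)
    print(hh.features_out_hook[0].shape)

    out1 = hh.features_out_hook[0]
    total_ft = out1.shape[1]  # number of feature maps (channels)
    first_item = out1[0].cpu().clone()  # feature maps of the first image in the batch

    plt.figure(figsize=(20, 17))
    for ftidx in range(total_ft):
        if ftidx > 99:  # show at most 100 maps in a 10x10 grid
            break
        ft = first_item[ftidx]
        plt.subplot(10, 10, ftidx + 1)
        plt.axis('off')
        plt.imshow(ft[:, :].detach())

# Example usage (assumes an RGB image of your own at img_path)
model = vgg11(weights="DEFAULT")
transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])
img_path = 'C:/Users/CHDT/Desktop/cat.jpg'
plot_feature(model, 0, transform(Image.open(img_path).convert('RGB')).unsqueeze(0))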

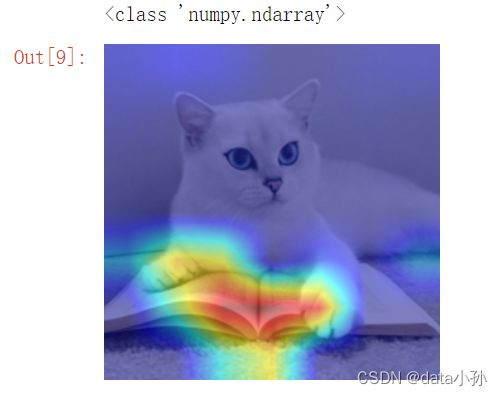
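The sketch below strips the hook mechanism down to its core. It uses a hypothetical tiny randomly initialised network (TinyCNN is made up for illustration) instead of vgg11, so it needs no weights and no image. It shows what a forward hook receives and why the handle returned by register_forward_hook should be removed afterwards.

```python
import torch
import torch.nn as nn

# Hypothetical tiny CNN standing in for vgg11 (random weights)
class TinyCNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
        )

    def forward(self, x):
        return self.features(x)

captured = {}

def save_output(module, fea_in, fea_out):
    # Same (module, input, output) signature as Hook.__call__ above;
    # fea_in is a tuple of positional inputs, fea_out is the output tensor
    captured["in"] = fea_in[0].detach()
    captured["out"] = fea_out.detach()

model = TinyCNN().eval()
handle = model.features[0].register_forward_hook(save_output)
with torch.no_grad():
    model(torch.randn(1, 3, 32, 32))
handle.remove()  # later forward passes no longer trigger save_output

print(captured["in"].shape)   # torch.Size([1, 3, 32, 32])
print(captured["out"].shape)  # torch.Size([1, 8, 32, 32]); padding=1 keeps 32x32
```

Each channel of captured["out"][0] is one 32x32 feature map, and that is what plot_feature draws in its 10x10 grid.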

2.3 Visualizing CNNs with class activation maps (CAM)

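CAM shows which parts of the input the model relies on when it predicts a given class. Many variants have grown out of it, such as Grad-CAM.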

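import torch
import matplotlib.pyplot as plt
from torchvision.models import vgg11
from PIL import Image
import numpy as np

model = vgg11(weights="DEFAULT")  # ImageNet-pretrained weights (replaces pretrained=True)
img_path = 'C:/Users/CHDT/Desktop/cat.jpg'
# convert('RGB') guards against images with an alpha channel (4 channels, e.g. some PNGs)
img = Image.open(img_path).convert('RGB').resize((608, 608))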

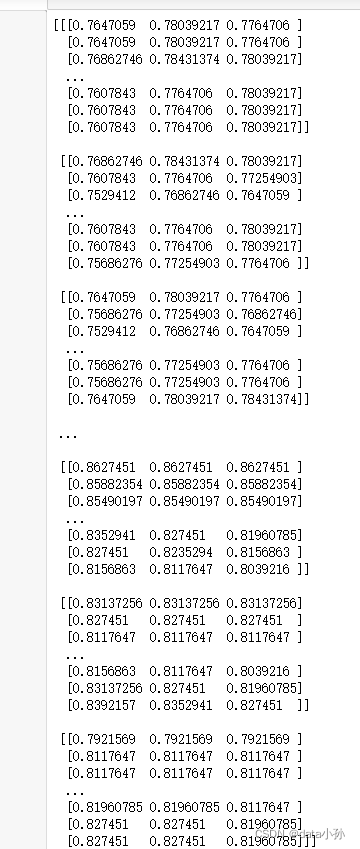

rgb_img = np.float32(img) / 255  # float32 array with values in [0, 1]
plt.imshow(img)

from pytorch_grad_cam import GradCAM, ScoreCAM, GradCAMPlusPlus, AblationCAM, XGradCAM, EigenCAM, FullGrad
from pytorch_grad_cam.utils.model_targets import ClassifierOutputTarget
from pytorch_grad_cam.utils.image import show_cam_on_image, preprocess_image

# Normalize with the ImageNet mean/std and add a batch dimension
img_tensor = preprocess_image(rgb_img, mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
target_layers = [model.features[-1]]  # last layer of the convolutional part
# Pick a CAM method; ScoreCAM and AblationCAM also need a batch size (set via cam.batch_size)
cam = GradCAM(model=model, target_layers=target_layers)
# The class to explain must be set: an index into the outputs (0-999 for ImageNet's 1000 classes), here 200
targets = [ClassifierOutputTarget(200)]
grayscale_cam = cam(input_tensor=img_tensor, targets=targets)
grayscale_cam = grayscale_cam[0, :]  # heatmap of the first (only) image
cam_img = show_cam_on_image(rgb_img, grayscale_cam, use_rgb=True)  # overlay the heatmap on the image
print(type(cam_img))
Image.fromarray(cam_img)
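To show what GradCAM computes, here is a from-scratch sketch of the Grad-CAM weighting. It is not the pytorch_grad_cam internals. The steps are: take the target layer's activations A, weight each channel by its spatially averaged gradient of the class score, sum over channels, apply ReLU, and upsample. The tiny network (features, head) is hypothetical and randomly initialised.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical tiny classifier (random weights) standing in for vgg11
features = nn.Sequential(
    nn.Conv2d(3, 16, 3, padding=1), nn.ReLU(),
    nn.Conv2d(16, 32, 3, padding=1), nn.ReLU(),
)
head = nn.Sequential(nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(32, 10))

def grad_cam(x, target_class):
    acts = features(x)                    # A: (1, K, h, w), output of the "target layer"
    acts.retain_grad()                    # keep d(score)/dA after backward
    score = head(acts)[0, target_class]   # y_c, the raw class score (before softmax)
    score.backward()
    alpha = acts.grad.mean(dim=(2, 3), keepdim=True)       # alpha_k: averaged gradient per channel
    cam = F.relu((alpha * acts).sum(dim=1, keepdim=True))  # ReLU(sum_k alpha_k * A_k)
    cam = F.interpolate(cam, size=x.shape[2:], mode="bilinear", align_corners=False)
    cam = cam - cam.min()
    return (cam / (cam.max() + 1e-8))[0, 0].detach()       # (H, W) heatmap in [0, 1]

heatmap = grad_cam(torch.randn(1, 3, 32, 32), target_class=3)
print(heatmap.shape)  # torch.Size([32, 32])
```

This head is global average pooling followed by a single Linear layer, so the gradients are constant over space. In that case Grad-CAM reduces to the original CAM, with each channel weighted by its Linear weight. With vgg11's deeper classifier the two differ.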

2.4 CNN visualization with FlashTorch

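# Quick CNN visualization with the FlashTorch tool
import matplotlib.pyplot as plt
from PIL import Image
import torchvision.models as models
from flashtorch.utils import apply_transforms, load_image
from flashtorch.saliency import Backprop

model = models.alexnet(weights="DEFAULT")
backprop = Backprop(model)
img_path = 'C:/Users/CHDT/Desktop/cat.jpg'
img = Image.open(img_path).convert('RGB').resize((224, 224))  # make sure the image has 3 channels
# rgb_img = np.float32(img)/255
owl = apply_transforms(img)  # resize, crop, normalize and add a batch dimension
target_class = 24  # ImageNet class index to explain (24 = great grey owl)
backprop.visualize(owl, target_class, guided=True, use_gpu=True)  # use_gpu=True requires a CUDA device

One problem I ran into: running this on my own local image raises an error. I am still investigating and have not solved it yet.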
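Backprop produces a saliency map: the gradient of the target class score with respect to the input pixels. With guided=True it uses guided backpropagation, which additionally filters out negative gradients at the ReLUs. The sketch below shows only the plain (vanilla) gradient version. It runs on a hypothetical tiny random model, and the helper saliency_map is made up for illustration; FlashTorch's own post-processing may differ.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical tiny classifier (random weights) standing in for alexnet
model = nn.Sequential(
    nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(4), nn.Flatten(), nn.Linear(8 * 4 * 4, 5),
).eval()

def saliency_map(model, image, target_class):
    """Vanilla gradient saliency: |d score_c / d input|, max over colour channels."""
    image = image.clone().requires_grad_(True)  # make the input a leaf tensor with a gradient
    model.zero_grad()
    score = model(image)[0, target_class]
    score.backward()
    return image.grad.abs().max(dim=1).values[0]  # (H, W)

sal = saliency_map(model, torch.randn(1, 3, 24, 24), target_class=2)
print(sal.shape)  # torch.Size([24, 24])
```

Bright pixels in sal are those where a small change would move the class score the most.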

2.4.1 Visualizing convolution filters

# Visualizing convolution filters
import matplotlib.pyplot as plt
from PIL import Image
import torchvision.models as models
from flashtorch.utils import apply_transforms, load_image
from flashtorch.saliency import Backprop
from flashtorch.activmax import GradientAscent

model = models.vgg16(weights="DEFAULT")
g_ascent = GradientAscent(model.features)  # generates images that maximally activate chosen filters
conv5_1 = model.features[24]  # first conv layer of block 5 in VGG16
conv5_1_filters = [45, 271, 363, 489]  # indices of the filters to visualize
g_ascent.visualize(conv5_1, conv5_1_filters, title='VGG16: conv5_1')

3. Visualization with TensorBoard

3.1 Installing TensorBoard

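pip install tensorboardX

tensorboardX only writes the log files. Viewing them needs the tensorboard package itself (pip install tensorboard).

3.2 How TensorBoard visualization works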

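While the program runs, we record whatever we want to inspect, such as model weights and the training loss. TensorBoard then displays the recorded content as a web page.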

3.3 Configuring and launching TensorBoard

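# TensorBoard
from tensorboardX import SummaryWriter
writer = SummaryWriter('./runs')

This creates a SummaryWriter instance named writer that writes to the ./runs directory. Everything recorded afterwards, such as model weights, per-epoch values and the loss, is saved there. To view it, launch TensorBoard on that directory with tensorboard --logdir=./runs and open the address it prints (http://localhost:6006 by default).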

# TensorBoard model visualization
import torch.nn as nn
import torchvision.models as models

net = models.vgg11()
print(net)

3.4 Visualizing images in TensorBoard

# TensorBoard image visualization
import torchvision
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
from tensorboardX import SummaryWriter
from tensorboard import notebook

transforms_train = transforms.Compose(
    [transforms.ToTensor()])  # convert images to tensors
transforms_test = transforms.Compose(
    [transforms.ToTensor()])
# CIFAR10 training and test sets, downloaded to the current directory
train_data = datasets.CIFAR10(".", train=True, download=True, transform=transforms_train)
test_data = datasets.CIFAR10(".", train=False, download=True, transform=transforms_test)
train_loader = DataLoader(train_data, batch_size=64, shuffle=True)  # shuffle, 64 images per batch
test_loader = DataLoader(test_data, batch_size=64)
images, labels = next(iter(train_loader))

# Write a single image
writer = SummaryWriter('./pytorch_tb')
writer.add_image('images[0]', images[0])
writer.close()

# Start TensorBoard inside the notebook; --logdir must point at the directory the writer used.
# (An earlier error turned out to be caused by TensorBoard not having been started, hence this line.)
notebook.start("--logdir ./pytorch_tb")

# Write several images at once (an N x C x H x W batch)
writer = SummaryWriter('./pytorch_tb')
writer.add_images('images', images, global_step=0)
writer.close()

# Stitch several images into one grid image
writer = SummaryWriter('./pytorch_xs')
img_grid = torchvision.utils.make_grid(images)
writer.add_image('image_grid', img_grid)
writer.close()

3.5 Visualizing continuous variables

# Visualizing continuous variables (scalars)
writer = SummaryWriter('./xiao')
for i in range(500):
    x = i
    y = x * 2
    writer.add_scalar("x", x, i)  # tag, value, global step
    writer.add_scalar("y", y, i)
writer.close()

3.6 Visualizing parameter distributions

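# Visualizing parameter distributions
import torch
import numpy as np
from tensorboardX import SummaryWriter

def norm(mean, std):
    # 100 x 20 samples from a normal distribution with the given mean and std
    t = std * torch.randn((100, 20)) + mean
    return t

writer = SummaryWriter('./Desktop/xiaosun')
for step, mean in enumerate(range(-10, 10, 1)):
    w = norm(mean, 1)
    writer.add_histogram("w", w, step)  # one histogram per step; the mean moves from -10 to 9
    writer.flush()
writer.close()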

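In short, everything goes through a SummaryWriter: create one for a log directory, then record data with its add_xxx() methods (add_image, add_images, add_scalar, add_histogram, ...).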

3.7 Problems encountered

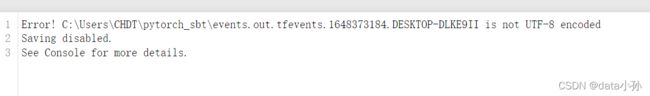

When I opened the log files repeatedly and ran TensorBoard, it always showed this same image. The display never changed.


Running the programs above on my own local images raised an error. This is still unresolved.

To be continued.

https://zhuanlan.zhihu.com/p/389738863

https://github.com/datawhalechina