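Notes on 《Web安全之深度学习实战》 (Web Security: Deep Learning in Practice), Chapter 11: Webshell Detection

This section uses machine learning algorithms to identify webshells; the relatively new concept here is the opcode.

I. WebShell

A WebShell is a command execution environment that exists in the form of a web page file such as ASP, PHP, JSP, or CGI; it can also be called a web backdoor. After compromising a website, an attacker typically mixes an ASP or PHP backdoor file in with the normal page files under the web server's web directory, then accesses the backdoor through a browser to obtain a command execution environment and thereby control the web server. As the name suggests, "Web" means the server must provide a web service, and "Shell" means obtaining some degree of operating privilege on the server. Intruders often use a WebShell to gain some degree of control over a web server through the website's service port.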

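In the kill chain model, an attack proceeds through the following steps (see Figure 11-1):

1) Reconnaissance

2) Weaponization

3) Delivery

4) Exploitation

5) Installation

6) C2 (command and control)

7) Actions

In attacks against websites, the attacker typically exploits a file upload vulnerability to upload a WebShell and then uses it to take further control of the web server. This corresponds to the Installation and C2 stages of the kill chain.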

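Common WebShell detection methods include the following:

· Static detection: finds WebShells by matching signatures, characteristic values, and dangerous function calls. It can only find known WebShells, and both its false positive and false negative rates tend to be high. Tightening the rules lowers the false positive rate, but the false negative rate then inevitably rises.

· Dynamic detection: looks at behavior exhibited at execution time, such as database operations and reads of sensitive files.

· Syntax detection: follows the way PHP scans and compiles source code, separates code from comments, and analyzes variables, functions, strings, and language constructs to catch key dangerous functions. This is reported to solve the false negative problem, but false positives remain an issue [3].

· Statistical detection: uses measures such as information entropy, longest word, index of coincidence, and compression ratio [4].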
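The statistical measures named in the last item can be sketched in a few lines. This is only an illustration under my own assumptions: the function names are hypothetical, the features are computed over raw bytes, and zlib stands in for whatever compressor a real detector would use. It is not the method of [4].

```python
import math
import zlib
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte (0.0 for empty input)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def index_of_coincidence(data: bytes) -> float:
    """Probability that two bytes drawn without replacement are equal."""
    n = len(data)
    if n < 2:
        return 0.0
    counts = Counter(data)
    return sum(c * (c - 1) for c in counts.values()) / (n * (n - 1))


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (zlib, default level)."""
    if not data:
        return 0.0
    return len(zlib.compress(data)) / len(data)


def longest_word(data: bytes) -> int:
    """Length of the longest whitespace-delimited token."""
    return max((len(word) for word in data.split()), default=0)
```

Encoded or packed payloads tend to push entropy and longest-word length up, which is why such features are used as signals; any thresholds would have to be tuned on real samples.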

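II. Dataset

The dataset contains 2616 WebShell samples and 9035 PHP files from open source software. The WebShell samples are common ones found on the Internet, collected from related projects on GitHub (see Figure 11-2); for ease of demonstration, only PHP-based WebShell samples are used. The benign (white) samples come mainly from common open source software written in PHP.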

Loading the source files

    import os

    def load_files_re(dir):
        # Recursively collect the contents of every .php/.txt file under dir
        files_list = []
        g = os.walk(dir)
        for path, d, filelist in g:
            for filename in filelist:
                if filename.endswith('.php') or filename.endswith('.txt'):
                    fulepath = os.path.join(path, filename)
                    try:
                        t = load_file(fulepath)
                        files_list.append(t)
                        #print("Load %s" % fulepath)
                    except Exception:
                        # e.g. files that are not valid UTF-8
                        print('failed:', fulepath)
        return files_list

Each file is processed as follows:

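    def load_file(file_path):
        # Read the file as one string; lines are joined without a separator
        t=""
        with open(file_path, encoding='utf-8') as f:
            for line in f:
                line=line.strip('\n')
                t+=line
        return t

Based on this, the black (WebShell) and white (benign) samples are loaded as follows: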

    webshell_dir="../data/webshell/webshell/PHP/"
    whitefile_dir="../data/webshell/normal/php/"

    # WebShell samples are labeled 1
    webshell_files_list = load_files_re(webshell_dir)
    y1=[1]*len(webshell_files_list)
    black_count=len(webshell_files_list)

    # Benign samples are labeled 0
    wp_files_list =load_files_re(whitefile_dir)
    y2=[0]*len(wp_files_list)
    white_count=len(wp_files_list)

III. Feature extraction

(I) Vocabulary (word set)

    def get_features_by_tf():
        global max_document_length
        global white_count
        global black_count
        x=[]
        y=[]

        webshell_files_list = load_files_re(webshell_dir)
        y1=[1]*len(webshell_files_list)
        black_count=len(webshell_files_list)

        wp_files_list =load_files_re(whitefile_dir)
        y2=[0]*len(wp_files_list)
        white_count=len(wp_files_list)

        x=webshell_files_list+wp_files_list
        y=y1+y2

        # Map each document to a fixed-length sequence of word ids
        vp=tflearn.data_utils.VocabularyProcessor(max_document_length=max_document_length,
                                                  min_frequency=0,
                                                  vocabulary=None,
                                                  tokenizer_fn=None)
        x=vp.fit_transform(x, unused_y=None)
        x=np.array(list(x))
        return x,y

(II) TF-IDF

    def get_feature_by_bag_tfidf():
        global white_count
        global black_count
        global max_features
        print("max_features=%d" % max_features)
        x=[]
        y=[]

        webshell_files_list = load_files_re(webshell_dir)
        y1=[1]*len(webshell_files_list)
        black_count=len(webshell_files_list)

        wp_files_list =load_files_re(whitefile_dir)
        y2=[0]*len(wp_files_list)
        white_count=len(wp_files_list)

        x=webshell_files_list+wp_files_list
        y=y1+y2

        # Count 2- to 4-grams of word tokens, then reweight the counts with TF-IDF
        CV = CountVectorizer(ngram_range=(2, 4), decode_error="ignore",max_features=max_features,
                             token_pattern = r'\b\w+\b',min_df=1, max_df=1.0)
        x=CV.fit_transform(x).toarray()

        transformer = TfidfTransformer(smooth_idf=False)
        x_tfidf = transformer.fit_transform(x)
        x = x_tfidf.toarray()
        return x,y

Note: in this experiment, all the machine learning algorithms performed poorly with this feature set.

(三)OP-CODE

1、OPCODE简介

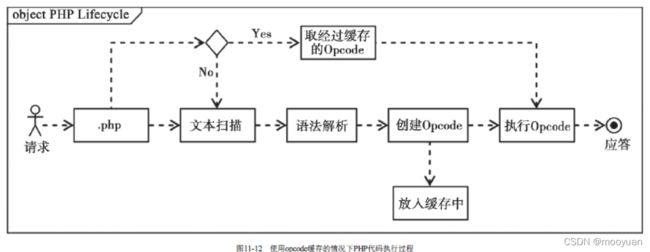

opcode是计算机指令中的一部分,用于指定要执行的操作,指令的格式和规范由处理器的指令规范指定。除了指令本身以外通常还有指令所需要的操作数,可能有的指令不需要显式的操作数。这些操作数可能是寄存器中的值、堆栈中的值、某块内存的值或者I/O端口中的值等。通常opcode还有另一种称谓—字节码(byte codes)。例如Java虚拟机(JVM)和.NET的通用中间语言(Common IntermeditateLanguage,CIL)等。PHP中的opcode则属于前面介绍中的后者,PHP是构建在Zend虚拟机(Zend VM)之上的。PHP代码处理流程如下所示:

2. Installing the opcode tool

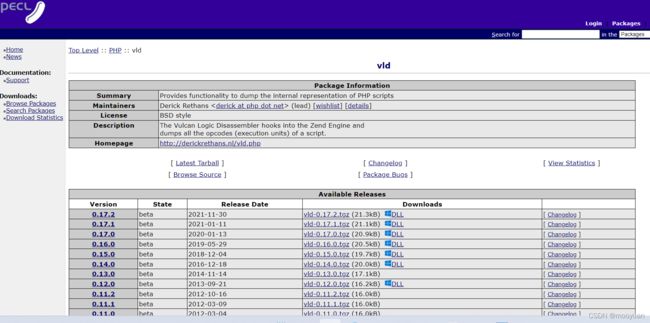

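This section explains how to install VLD, the opcode dumping tool, on Windows.

(1) Download VLD from the following page:

PECL :: Package :: vld

Choose the VLD version that matches the PHP version you use.

In my environment, phpstudy is set to use PHP 7.3.30, and I use VLD 0.17.1. My phpstudy PHP build is the thread-safe (TS) one, so I downloaded the matching TS build from:

PECL :: Package :: vld 0.17.1 for Windows

(2) Put the VLD file into the ext folder of that PHP version.

(3) Add the extension to php.ini:

extension=php_vld.dll

(4) Run the VLD opcode command to check the installation

First start PHP (in phpstudy, click Start) and confirm that the PHP version is 7.3.30 TS. To confirm the version, open the site in a browser and check that the version is correct and that the site runs normally.

On the command line, change to the PHP home directory. Assume the PHP file to analyze is stored under d:\ and named a.php.

Run the following command to view the opcodes through PHP's VLD extension; vld.active=1 activates VLD:

php -d vld.active=1 d:\a.php

Adding vld.execute=0 makes PHP only parse the file without executing it:

php -d vld.active=1 -dvld.execute=0 d:\a.php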

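VLD prints the following information about the intermediate code generated from the PHP source:

Branch analysis from position: mostly used when analyzing arrays.

Return found: whether the code returns; this is almost always present.

filename: the name of the analyzed file.

function name: the function name. VLD emits a separate block of this kind for each function; this field shows the name of the current function.

number of ops: the number of operations generated.

compiled vars: compile-time variables. They were added in PHP 5 as a caching optimization and are marked IS_CV in the PHP source.

op list: the listing of the generated intermediate code.

3. Source code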

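During development and debugging, the same PHP files are parsed repeatedly to obtain their opcodes, so PHP's opcode cache can be used to improve efficiency. Opcode caching removes unnecessary compilation steps and reduces CPU and memory consumption. Normally, executing PHP code involves scanning the text, parsing the syntax, generating opcodes, and executing the opcodes, as shown in Figure 11-11.

With opcode caching enabled, the opcodes of a PHP file that has already been parsed are cached, so a file with the same content can go straight to the opcode execution stage, as shown in Figure 11-12.

Enabling the opcode cache is straightforward: from PHP 5.5.0 onward, pass --enable-opcache when compiling PHP from source.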

    def load_files_opcode_re(dir):
        global min_opcode_count
        files_list = []
        g = os.walk(dir)
        for path, d, filelist in g:
            for filename in filelist:
                if filename.endswith('.php') :
                    fulepath = os.path.join(path, filename)
                    print("Load %s opcode" % fulepath)
                    t = load_file_opcode(fulepath)
                    # Keep only files whose opcode string is long enough
                    if len(t) > min_opcode_count:
                        files_list.append(t)
                    else:
                        print("Load %s opcode failed" % fulepath)
                    #print("Add opcode %s" % t)
        return files_list

Each file is processed as follows:

    def load_file_opcode(file_path):
        global php_bin
        t=""
        cmd=php_bin+" -dvld.active=1 -dvld.execute=0 "+file_path
        print ("exec "+cmd)
        #status,output=commands.getstatusoutput(cmd)
        status,output = subprocess.check_output(cmd)
        t=output
        #print(t)
        tokens=re.findall(r'\s(\b[A-Z_]+\b)\s',output)
        t=" ".join(tokens)
        print("opcode count %d" % len(t))
        return t

Note that php_bin is set as follows:

php_bin=r"D:\web\phpstudy_pro\Extensions\php\php7.3.30ts\bin"
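Because the command is later built by string concatenation, a path containing spaces would be split into several arguments. One possible alternative, sketched here with a hypothetical helper name (build_vld_command is not part of the author's code), is to pass subprocess an argument list instead of a single string; the two -d flags are the ones used in the source.

```python
def build_vld_command(php_bin: str, file_path: str) -> list:
    """Return the argv list that dumps opcodes without executing the file.

    php_bin is assumed to be the path of the php executable itself.
    """
    return [php_bin, "-dvld.active=1", "-dvld.execute=0", file_path]


# Hypothetical paths, for illustration only.
example = build_vld_command(r"C:\php\php.exe", r"d:\my samples\a.php")
```

The resulting list can be handed directly to subprocess.check_output, which then takes care of quoting each argument.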

Calling the following function at run time fails:

x, y = get_feature_by_opcode()

The output, ending in the error, is:

max_features=10000 webshell_dir=../data/webshell/webshell/PHP/ whitefile_dir=../data/webshell/normal/php/

Load ../data/webshell/webshell/PHP/mattiasgeniar\php-exploit-scripts-master\exp4php\hadsky.php opcode

exec D:\web\phpstudy_pro\Extensions\php\php7.3.30ts\php -dvld.active=1 -dvld.execute=0 ../data/webshell/webshell/PHP/mattiasgeniar\php-exploit-scripts-master\exp4php\hadsky.php

Finding entry points

Branch analysis from position: 0

2 jumps found. (Code = 47) Position 1 = 10, Position 2 = 12

Branch analysis from position: 10

2 jumps found. (Code = 43) Position 1 = 13, Position 2 = 14

Branch analysis from position: 13

1 jumps found. (Code = 79) Position 1 = -2

Branch analysis from position: 14

2 jumps found. (Code = 43) Position 1 = 20, Position 2 = 21

Branch analysis from position: 20

1 jumps found. (Code = 79) Position 1 = -2

Branch analysis from position: 21

2 jumps found. (Code = 43) Position 1 = 23, Position 2 = 70

Branch analysis from position: 23

2 jumps found. (Code = 43) Position 1 = 66, Position 2 = 69

Branch analysis from position: 66

1 jumps found. (Code = 42) Position 1 = 70

Branch analysis from position: 70

2 jumps found. (Code = 43) Position 1 = 72, Position 2 = 110

Branch analysis from position: 72

2 jumps found. (Code = 43) Position 1 = 107, Position 2 = 109

Branch analysis from position: 107

1 jumps found. (Code = 42) Position 1 = 110

Branch analysis from position: 110

1 jumps found. (Code = 62) Position 1 = -2

Branch analysis from position: 109

1 jumps found. (Code = 62) Position 1 = -2

Branch analysis from position: 110

Branch analysis from position: 69

2 jumps found. (Code = 43) Position 1 = 72, Position 2 = 110

Branch analysis from position: 72

Branch analysis from position: 110

Branch analysis from position: 70

Branch analysis from position: 12

filename: C:\Users\liujiannan\PycharmProjects\pythonProject\Web安全之深度学习实战\data\webshell\webshell\PHP\mattiasgeniar\php-exploit-scripts-master\exp4php\hadsky.php

function name: (null)

number of ops: 111

compiled vars: !0 = $die, !1 = $argv, !2 = $argc, !3 = $poc, !4 = $ch, !5 = $url, !6 = $out, !7 = $start, !8 = $end, !9 = $output, !10 = $error, !11 = $errorpos

line #* E I O op fetch ext return operands

-------------------------------------------------------------------------------------

9 0 E > FETCH_DIM_R ~12 !1, 0

1 ROPE_INIT 5 ~15 '%0Ausage%3Aphp+'

2 ROPE_ADD 1 ~15 ~15, ~12

11 3 FETCH_DIM_R ~13 !1, 0

4 ROPE_ADD 2 ~15 ~15, '+target+%5BpayloadURL%5D%0A%0AEg%3A+php+'

5 ROPE_ADD 3 ~15 ~15, ~13

6 ROPE_END 4 ~14 ~15, '+http%3A%2F%2Fwww.google.com%2FHadSky%2F+%5Bhttp%3A%2F%2FyourServer%2Fpayload.txt%5D%0A%0Aif+you+dont+set+the+payloadURL%2CThis+exp+will+read+the+site%60s+config.php+by+default.%0A'

7 ASSIGN !0, ~14

16 8 IS_SMALLER ~19 !2, 2

9 > JMPNZ_EX ~19 ~19, ->12

10 > IS_SMALLER ~20 3, !2

11 BOOL ~19 ~20

12 > > JMPZ ~19, ->14

18 13 > > EXIT !0

20 14 > ASSIGN !3, '%3Fc%3Dpage%26filename%3D.%2Fpuyuetian%2Fmysql%2Fconfig.php'

21 15 INIT_FCALL_BY_NAME 'curl_init'

16 DO_FCALL 0 $22

17 ASSIGN !4, $22

22 18 BOOL_NOT ~24 !4

19 > JMPZ ~24, ->21

24 20 > > EXIT 'Dont+support+curl%21'

27 21 > IS_EQUAL ~25 !2, 2

22 > JMPZ ~25, ->70

29 23 > FETCH_DIM_R ~26 !1, 1

24 CONCAT ~27 ~26, !3

25 ASSIGN !5, ~27

30 26 INIT_FCALL_BY_NAME 'curl_setopt'

27 SEND_VAR_EX !4

28 FETCH_CONSTANT ~29 'CURLOPT_URL'

29 SEND_VAL_EX ~29

30 SEND_VAR_EX !5

31 DO_FCALL 0

31 32 INIT_FCALL_BY_NAME 'curl_setopt'

33 SEND_VAR_EX !4

34 FETCH_CONSTANT ~31 'CURLOPT_RETURNTRANSFER'

35 SEND_VAL_EX ~31

36 SEND_VAL_EX 1

37 DO_FCALL 0

32 38 INIT_FCALL_BY_NAME 'curl_setopt'

39 SEND_VAR_EX !4

40 FETCH_CONSTANT ~33 'CURLOPT_HEADER'

41 SEND_VAL_EX ~33

42 SEND_VAL_EX 0

43 DO_FCALL 0

33 44 INIT_FCALL_BY_NAME 'curl_exec'

45 SEND_VAR_EX !4

46 DO_FCALL 0 $35

47 ASSIGN !6, $35

34 48 INIT_FCALL 'strpos'

49 SEND_VAR !6

50 SEND_VAL '%24_G%5B%27MYSQL%27%5D'

51 DO_ICALL $37

52 ASSIGN !7, $37

35 53 INIT_FCALL 'strpos'

54 SEND_VAR !6

55 SEND_VAL '%24_G%5B%27MYSQL%27%5D%5B%27CHARSET%27%5D'

56 DO_ICALL $39

57 ASSIGN !8, $39

36 58 INIT_FCALL 'substr'

59 SEND_VAR !6

60 SEND_VAR !7

61 SUB ~41 !8, !7

62 SEND_VAL ~41

63 DO_ICALL $42

64 ASSIGN !9, $42

37 65 > JMPZ !9, ->69

39 66 > ECHO '%0D%0Aoh+yeah%2Cgot+the+result%0D%0A%0D%0A'

40 67 ECHO !9

68 > JMP ->70

44 69 > ECHO 'oops%2Cseems+the+config+file+has+been+renamed%21'

47 70 > IS_EQUAL ~44 !2, 3

71 > JMPZ ~44, ->110

49 72 > FETCH_DIM_R ~45 !1, 1

73 CONCAT ~46 ~45, '%3Fc%3Dpage%26filename%3D'

74 FETCH_DIM_R ~47 !1, 2

75 CONCAT ~48 ~46, ~47

76 ASSIGN !5, ~48

50 77 INIT_FCALL_BY_NAME 'curl_setopt'

78 SEND_VAR_EX !4

79 FETCH_CONSTANT ~50 'CURLOPT_URL'

80 SEND_VAL_EX ~50

81 SEND_VAR_EX !5

82 DO_FCALL 0

51 83 INIT_FCALL_BY_NAME 'curl_setopt'

84 SEND_VAR_EX !4

85 FETCH_CONSTANT ~52 'CURLOPT_RETURNTRANSFER'

86 SEND_VAL_EX ~52

87 SEND_VAL_EX 1

88 DO_FCALL 0

52 89 INIT_FCALL_BY_NAME 'curl_setopt'

90 SEND_VAR_EX !4

91 FETCH_CONSTANT ~54 'CURLOPT_HEADER'

92 SEND_VAL_EX ~54

93 SEND_VAL_EX 0

94 DO_FCALL 0

53 95 INIT_FCALL_BY_NAME 'curl_exec'

96 SEND_VAR_EX !4

97 DO_FCALL 0 $56

98 ASSIGN !6, $56

54 99 ASSIGN !10, '%E6%9C%AA%E6%89%BE%E5%88%B0%E7%9A%84%E6%A8%A1%E6%9D%BF%E6%96%87%E4%BB%B6%EF%BC%81'

55 100 INIT_FCALL 'strpos'

101 SEND_VAR !6

102 SEND_VAR !10

103 DO_ICALL $59

104 ASSIGN !11, $59

56 105 IS_IDENTICAL ~61 !11,

106 > JMPZ ~61, ->109

58 107 > ECHO 'Done%2Cur+code+has+been++excuted+successfully%21'

108 > JMP ->110

62 109 > ECHO 'Failed%21'

65 110 > > RETURN 1

Traceback (most recent call last):

File "Web安全之深度学习实战/code/11 webshell(Web安全之深度学习实战).py", line 107, in load_file_opcode

status,output = subprocess.check_output(cmd)

ValueError: too many values to unpack (expected 2)

Process finished with exit code 1

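The error message gives the cause: a ValueError, too many values to unpack (expected 2). subprocess.check_output returns only the captured output, not a (status, output) pair, so the call should be:

output = subprocess.check_output(cmd, stderr=subprocess.STDOUT)

Even so, the regular expression that extracts the tokens still fails, because output is a bytes object and cannot be matched against a string pattern directly. The error is: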

exec D:\web\phpstudy_pro\Extensions\php\php7.3.30ts\php -dvld.active=1 -dvld.execute=0 d:/a.php

Traceback (most recent call last):

File "Web安全之深度学习实战/code/11 webshell(Web安全之深度学习实战).py", line 460, in

load_file_opcode('d:/a.php')

File "Web安全之深度学习实战/code/11 webshell(Web安全之深度学习实战).py", line 108, in load_file_opcode

tokens = re.findall(r'\s(\b[A-Z_]+\b)\s', output)

File "C:\ProgramData\Anaconda3\lib\re.py", line 223, in findall

return _compile(pattern, flags).findall(string)

TypeError: cannot use a string pattern on a bytes-like object

b"Finding entry points\nBranch analysis from position: 0\n1 jumps found. (Code = 62) Position 1 = -2\nfilename: D:\\a.php\nfunction name: (null)\nnumber of ops: 4\ncompiled vars: !0 = $a\nline #* E I O op fetch ext return operands\n-------------------------------------------------------------------------------------\n 2 0 E > ECHO 'Hello+World'\n 3 1 ASSIGN !0, 2\n 4 2 ECHO !0\n 5 3 > RETURN 1\n\nbranch: # 0; line: 2- 5; sop: 0; eop: 3; out0: -2\npath #1: 0, \n"

Process finished with exit code 1
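The bytes-versus-str mismatch in the traceback above can be reproduced without PHP. The sketch below reuses the captured dump for a.php (with the dashed separator line shortened) and the same regular expression as the source. The drop_column_flags helper is my own assumption rather than the author's method: it discards single-letter matches such as the E and O column flags, on the premise that real opcode names are longer than one character.

```python
import re

# VLD dump for a.php as returned by check_output (bytes); separator abridged.
raw = (
    b"Finding entry points\nBranch analysis from position: 0\n"
    b"1 jumps found. (Code = 62) Position 1 = -2\nfilename: D:\\a.php\n"
    b"function name: (null)\nnumber of ops: 4\ncompiled vars: !0 = $a\n"
    b"line #* E I O op fetch ext return operands\n"
    b"----------\n"
    b" 2 0 E > ECHO 'Hello+World'\n 3 1 ASSIGN !0, 2\n 4 2 ECHO !0\n"
    b" 5 3 > RETURN 1\n\n"
    b"branch: # 0; line: 2- 5; sop: 0; eop: 3; out0: -2\npath #1: 0, \n"
)

OPCODE_RE = re.compile(r"\s(\b[A-Z_]+\b)\s")  # same pattern as in the source


def extract_tokens(output: bytes) -> list:
    """Decode the dump first, then apply the str pattern."""
    text = output.decode("utf-8", errors="replace")
    return OPCODE_RE.findall(text)


def drop_column_flags(tokens: list) -> list:
    """Hypothetical cleanup: remove one-letter header/flag matches."""
    return [tok for tok in tokens if len(tok) > 1]


tokens = extract_tokens(raw)
opcodes = drop_column_flags(tokens)
```

Whether the flag letters should be filtered depends on the model; they appear in every dump, so they carry little information either way.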

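So the output is decoded from bytes to str, and a try/except is added to catch exceptions:

    def load_file_opcode(file_path):
        global php_bin
        t=""
        cmd=php_bin+" -dvld.active=1 -dvld.execute=0 "+file_path
        print ("exec "+cmd)
        try:
            # Merge stderr into stdout so that the VLD dump is captured
            output = subprocess.check_output(cmd, stderr=subprocess.STDOUT)
            # check_output returns bytes; decode before applying a str regex
            output = output.decode()
            tokens = re.findall(r'\s(\b[A-Z_]+\b)\s', output)
            print(tokens)
            t = " ".join(tokens)
            print("opcode count %d" % len(t))
            return t
        except Exception:
            # Any failure yields a blank document
            return " "

Now run the example from step (4) of Section III (III) with the following code: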

load_file_opcode('d:/a.php')

The output is:

exec D:\web\phpstudy_pro\Extensions\php\php7.3.30ts\php -dvld.active=1 -dvld.execute=0 d:/a.php

['E', 'O', 'E', 'ECHO', 'ASSIGN', 'ECHO', 'RETURN']

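opcode count 29

The printed count is len(t), the character length of the joined string, not the number of tokens.

One point deserves special attention: if the code is written as below, without stderr=subprocess.STDOUT, the run fails, and debugging shows that the cause is opcode count 0.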

    def load_file_opcode(file_path):
        global php_bin
        t=""
        cmd=php_bin+" -dvld.active=1 -dvld.execute=0 "+file_path
        print ("exec "+cmd)
        try:
            # stderr is not captured here, so output is empty
            output = subprocess.check_output(cmd)
            output = output.decode()
            tokens = re.findall(r'\s(\b[A-Z_]+\b)\s', output)
            print(tokens)
            t = " ".join(tokens)
            print("opcode count %d" % len(t))
            return t
        except Exception:
            return " "

The cause is that the opcode sequence VLD produces is emitted as error output rather than normal output, so check_output does not capture it. To capture it with check_output, set stderr=subprocess.STDOUT. The dump is then no longer treated as error output and is simply delivered as standard output.
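The effect of stderr=subprocess.STDOUT can be checked without PHP. In this sketch a child Python process stands in for php with VLD (an assumption made purely for the demo): it writes one line to stdout and one to stderr. In the non-merged case stderr is discarded with subprocess.DEVNULL to keep the demo quiet, whereas the original code lets it pass through to the console.

```python
import subprocess
import sys

# Stand-in for the PHP/VLD process: writes "out" to stdout and "err" to stderr.
CHILD = [
    sys.executable,
    "-c",
    "import sys; sys.stdout.write('out\\n'); sys.stderr.write('err\\n')",
]


def capture(merge_stderr: bool) -> str:
    """Return what check_output captures, with or without merging stderr."""
    stderr = subprocess.STDOUT if merge_stderr else subprocess.DEVNULL
    return subprocess.check_output(CHILD, stderr=stderr).decode()


merged = capture(merge_stderr=True)       # contains both lines
stdout_only = capture(merge_stderr=False)  # contains only "out"
```

Only the merged variant contains the text written to stderr, which matches the author's observation that the opcode count drops to 0 when the argument is omitted.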

(IV) OPCODE-TF

    def get_feature_by_opcode_tf():
        global white_count
        global black_count
        global max_document_length
        x=[]
        y=[]

        # Reuse cached features if both pickle files exist
        if os.path.exists(data_pkl_file) and os.path.exists(label_pkl_file):
            with open(data_pkl_file, 'rb') as f:
                x = pickle.load(f)
            with open(label_pkl_file, 'rb') as f:
                y = pickle.load(f)
        else:
            webshell_files_list = load_files_opcode_re(webshell_dir)
            y1=[1]*len(webshell_files_list)
            black_count=len(webshell_files_list)

            wp_files_list =load_files_opcode_re(whitefile_dir)
            y2=[0]*len(wp_files_list)
            white_count=len(wp_files_list)

            x=webshell_files_list+wp_files_list
            y=y1+y2

            vp=tflearn.data_utils.VocabularyProcessor(max_document_length=max_document_length,
                                                      min_frequency=0,
                                                      vocabulary=None,
                                                      tokenizer_fn=None)
            x=vp.fit_transform(x, unused_y=None)
            x=np.array(list(x))

            # Cache the features and labels for later runs
            with open(data_pkl_file, 'wb') as f:
                pickle.dump(x, f)
            with open(label_pkl_file, 'wb') as f:
                pickle.dump(y, f)
        return x,y
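To make the vocabulary step concrete, here is a simplified stand-in for what the VocabularyProcessor call does to opcode strings: each distinct token gets an integer id, and every document becomes a sequence of ids padded or truncated to max_document_length. This is my own sketch, not tflearn's implementation; in particular it splits on whitespace, and it assumes id 0 is reserved for padding and unknown tokens.

```python
def fit_vocabulary(documents):
    """Assign ids in first-seen order, starting at 1 (0 = padding/unknown)."""
    vocab = {}
    for doc in documents:
        for token in doc.split():
            if token not in vocab:
                vocab[token] = len(vocab) + 1
    return vocab


def encode(doc, vocab, max_document_length):
    """Map tokens to ids, then truncate or zero-pad to a fixed length."""
    ids = [vocab.get(token, 0) for token in doc.split()][:max_document_length]
    return ids + [0] * (max_document_length - len(ids))


docs = ["ECHO ASSIGN ECHO RETURN", "INIT_FCALL SEND_VAL DO_ICALL"]
vocab = fit_vocabulary(docs)
first = encode(docs[0], vocab, 6)
```

Unlike n-gram counts, this representation keeps the order of the opcodes, which is what the CNN and RNN models later consume through an embedding layer.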

(V) OPCODE-WordBag

    def get_feature_by_opcode():
        global white_count
        global black_count
        global max_features
        global webshell_dir
        global whitefile_dir
        print("max_features=%d webshell_dir=%s whitefile_dir=%s" % (max_features,webshell_dir,whitefile_dir))
        x=[]
        y=[]

        webshell_files_list = load_files_opcode_re(webshell_dir)
        y1=[1]*len(webshell_files_list)
        black_count=len(webshell_files_list)

        wp_files_list =load_files_opcode_re(whitefile_dir)
        y2=[0]*len(wp_files_list)
        white_count=len(wp_files_list)

        x=webshell_files_list+wp_files_list
        y=y1+y2

        # Bag of 2- to 4-grams over the opcode sequence
        CV = CountVectorizer(ngram_range=(2, 4), decode_error="ignore",max_features=max_features,
                             token_pattern = r'\b\w+\b',min_df=1, max_df=1.0)
        x=CV.fit_transform(x).toarray()
        return x,y
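The effect of ngram_range=(2, 4) on an opcode string can be illustrated in pure Python. This is a rough stand-in for the counting step only, not scikit-learn's implementation: it ignores max_features, min_df, and max_df, and it assumes the default lowercasing that CountVectorizer applies. The token pattern is the one from the source.

```python
import re
from collections import Counter

TOKEN_RE = re.compile(r"\b\w+\b")  # same token_pattern as in the source


def ngram_counts(doc, min_n=2, max_n=4):
    """Count all word n-grams with min_n <= n <= max_n in one document."""
    tokens = TOKEN_RE.findall(doc.lower())
    counts = Counter()
    for n in range(min_n, max_n + 1):
        for i in range(len(tokens) - n + 1):
            counts[" ".join(tokens[i:i + n])] += 1
    return counts


counts = ngram_counts("ECHO ASSIGN ECHO ASSIGN")
```

Note that single opcodes never become features with this range; only sequences of two to four consecutive opcodes do.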

(VI) OPCODE-TFIDF

    def get_feature_by_opcode_tfidf():
        global white_count
        global black_count
        global max_features
        global webshell_dir
        global whitefile_dir
        print("max_features=%d webshell_dir=%s whitefile_dir=%s" % (max_features,webshell_dir,whitefile_dir))
        x=[]
        y=[]

        # Reuse cached features if both pickle files exist
        if os.path.exists(tfidf_data_pkl_file) and os.path.exists(tfidf_label_pkl_file):
            with open(tfidf_data_pkl_file, 'rb') as f:
                x = pickle.load(f)
            with open(tfidf_label_pkl_file, 'rb') as f:
                y = pickle.load(f)
        else:
            webshell_files_list = load_files_opcode_re(webshell_dir)
            y1=[1]*len(webshell_files_list)
            black_count=len(webshell_files_list)

            wp_files_list =load_files_opcode_re(whitefile_dir)
            y2=[0]*len(wp_files_list)
            white_count=len(wp_files_list)

            x=webshell_files_list+wp_files_list
            y=y1+y2

            CV = CountVectorizer(ngram_range=(2, 4), decode_error="ignore",max_features=max_features,
                                 token_pattern = r'\b\w+\b',min_df=1, max_df=1.0)
            x=CV.fit_transform(x).toarray()

            transformer = TfidfTransformer(smooth_idf=False)
            x_tfidf = transformer.fit_transform(x)
            x = x_tfidf.toarray()

            # Cache the features and labels for later runs
            with open(tfidf_data_pkl_file, 'wb') as f:
                pickle.dump(x, f)
            with open(tfidf_label_pkl_file, 'wb') as f:
                pickle.dump(y, f)
        return x,y
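For reference, the weighting that TfidfTransformer(smooth_idf=False) applies to a count matrix can be written out by hand. The sketch below follows the formula documented by scikit-learn for that setting, idf(t) = ln(n / df(t)) + 1, followed by L2 normalization of each row. The function name is hypothetical, and giving an all-zero column an idf of 0 is my own choice; such columns do not occur when the counts come from a vectorizer fitted on the same documents.

```python
import math


def tfidf_rows(count_rows):
    """TF-IDF with idf = ln(n/df) + 1 and L2-normalized rows."""
    n_docs = len(count_rows)
    n_terms = len(count_rows[0])
    # Document frequency: in how many rows each term occurs at least once
    df = [sum(1 for row in count_rows if row[j] > 0) for j in range(n_terms)]
    idf = [math.log(n_docs / d) + 1.0 if d else 0.0 for d in df]
    result = []
    for row in count_rows:
        weighted = [count * weight for count, weight in zip(row, idf)]
        norm = math.sqrt(sum(v * v for v in weighted))
        result.append([v / norm if norm else 0.0 for v in weighted])
    return result


weights = tfidf_rows([[1, 0], [1, 1]])
```

A term that occurs in every document keeps idf 1 rather than 0 under this formula, so it is down-weighted relative to rarer terms but never removed.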

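One thing to stress: when I ran the wordbag and tfidf models from the author's source code, performance was extremely poor whichever of these feature extraction methods I used. This is probably caused by an unsuitable CountVectorizer configuration, so this part is worth re-tuning to get better performance.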

IV. Model building

(I) NB

    def do_nb(x,y):
        x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.4, random_state=0)
        gnb = GaussianNB()
        gnb.fit(x_train, y_train)
        y_pred = gnb.predict(x_test)
        do_metrics(y_test,y_pred)

(II) SVM

    def do_svm(x,y):
        x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.4, random_state=0)
        clf = svm.SVC()
        clf.fit(x_train, y_train)
        y_pred = clf.predict(x_test)
        do_metrics(y_test,y_pred)

(III) XGBOOST

    def do_xgboost(x,y):
        x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.4, random_state=0)
        print("xgboost")
        xgb_model = xgb.XGBClassifier().fit(x_train, y_train)
        y_pred = xgb_model.predict(x_test)
        print(classification_report(y_test, y_pred))
        print(metrics.confusion_matrix(y_test, y_pred))

(IV) RF (random forest)

    def do_rf(x,y):
        x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.4, random_state=0)
        rf = RandomForestClassifier(n_estimators=50)
        rf.fit(x_train, y_train)
        y_pred = rf.predict(x_test)
        do_metrics(y_test,y_pred)

(V) MLP

    def do_mlp(x,y):
        #mlp
        clf = MLPClassifier(solver='lbfgs',
                            alpha=1e-5,
                            hidden_layer_sizes=(5, 2),
                            random_state=1)
        x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.4, random_state=0)
        clf.fit(x_train, y_train)
        y_pred = clf.predict(x_test)
        do_metrics(y_test,y_pred)

(VI) CNN

    def do_cnn(x,y):
        global max_document_length
        print("CNN and tf")
        trainX, testX, trainY, testY = train_test_split(x, y, test_size=0.4, random_state=0)
        y_test=testY

        trainX = pad_sequences(trainX, maxlen=max_document_length, value=0.)
        testX = pad_sequences(testX, maxlen=max_document_length, value=0.)
        # Converting labels to binary vectors
        trainY = to_categorical(trainY, nb_classes=2)
        testY = to_categorical(testY, nb_classes=2)

        # Building convolutional network
        network = input_data(shape=[None,max_document_length], name='input')
        network = tflearn.embedding(network, input_dim=1000000, output_dim=128)
        branch1 = conv_1d(network, 128, 3, padding='valid', activation='relu', regularizer="L2")
        branch2 = conv_1d(network, 128, 4, padding='valid', activation='relu', regularizer="L2")
        branch3 = conv_1d(network, 128, 5, padding='valid', activation='relu', regularizer="L2")
        network = merge([branch1, branch2, branch3], mode='concat', axis=1)
        network = tf.expand_dims(network, 2)
        network = global_max_pool(network)
        network = dropout(network, 0.8)
        network = fully_connected(network, 2, activation='softmax')
        network = regression(network, optimizer='adam', learning_rate=0.001,
                             loss='categorical_crossentropy', name='target')

        model = tflearn.DNN(network, tensorboard_verbose=0)
        model.fit(trainX, trainY,
                  n_epoch=5, shuffle=True, validation_set=0.1,
                  show_metric=True, batch_size=100,run_id="webshell")

        y_predict_list=model.predict(testX)
        y_predict=[]
        for i in y_predict_list:
            print(i[0])
            if i[0] > 0.5:
                y_predict.append(0)
            else:
                y_predict.append(1)
        print('y_predict:')
        print(y_predict)
        print('y_test:')
        print(y_test)
        do_metrics(y_test, y_predict)
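The final loop of do_cnn turns each softmax row into a class label by thresholding the class-0 probability. The same rule can be written as a small helper (hypothetical name, shown only to make the rule explicit); note that an exact tie at 0.5 falls to class 1.

```python
def probabilities_to_labels(rows, threshold=0.5):
    """Label 0 when the class-0 probability exceeds the threshold, else 1."""
    return [0 if row[0] > threshold else 1 for row in rows]


labels = probabilities_to_labels([[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]])
```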

Here is the result with TF-IDF feature extraction. The model predicts class 1 for every sample, so the accuracy is dismal:

y_predict:

[1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]

y_test:

[0, 0, 1, 0, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 1, 1]

metrics.accuracy_score:

0.47884187082405344

metrics.confusion_matrix:

[[ 0 234]

[ 0 215]]

metrics.precision_score:

0.47884187082405344

metrics.recall_score:

1.0

metrics.f1_score:

0.6475903614457832

Process finished with exit code 0
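The reported figures can be re-derived by hand from the confusion matrix above, which helps show why an accuracy near 0.48 together with a recall of 1.0 means the model has collapsed to a single class. The sketch assumes scikit-learn's layout for confusion_matrix (rows are true labels, columns are predicted labels) and uses a hypothetical helper name; it does not reproduce the author's do_metrics.

```python
def binary_metrics(confusion):
    """Accuracy, precision, recall, F1 from [[tn, fp], [fn, tp]]."""
    (tn, fp), (fn, tp) = confusion
    total = tn + fp + fn + tp
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1


# Matrix from the TF-IDF run above: every sample is predicted as class 1.
accuracy, precision, recall, f1 = binary_metrics([[0, 234], [0, 215]])
```

With all 449 test samples predicted as class 1, accuracy and precision both equal the share of positive samples, 215/449.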

Features extracted with the tf vocabulary (word set) method work far better than TF-IDF. The results are:

y_predict:

[0, 0, 1, 0, 1, 1, 0, 0, 1, 1, 1, 1, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 1, 1]

y_test:

[0, 0, 1, 0, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 1, 1]

metrics.accuracy_score:

0.9665924276169265

metrics.confusion_matrix:

[[232 2]

[ 13 202]]

metrics.precision_score:

0.9901960784313726

metrics.recall_score:

0.9395348837209302

metrics.f1_score:

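0.9642004773269689

The OPCODE-wordbag and OPCODE-tfidf features both perform extremely poorly, just like the earlier tfidf features; here the model predicts class 0 for every sample. The results are: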

y_predict:

[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

y_test:

[1, 1, 0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1]

metrics.accuracy_score:

0.5213270142180095

metrics.confusion_matrix:

[[220 0]

[202 0]]

metrics.precision_score:

0.0

metrics.recall_score:

0.0

metrics.f1_score:

0.0

With the opcode-tf vocabulary features, the results are acceptable:

y_predict:

[1, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1]

y_test:

[1, 1, 0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1]

metrics.accuracy_score:

0.7914691943127962

metrics.confusion_matrix:

[[219 1]

[ 87 115]]

metrics.precision_score:

0.9913793103448276

metrics.recall_score:

0.5693069306930693

metrics.f1_score:

0.7232704402515724

(VII) RNN

    def do_rnn(x,y):
        global max_document_length
        print("RNN")
        trainX, testX, trainY, testY = train_test_split(x, y, test_size=0.4, random_state=0)
        y_test=testY

        trainX = pad_sequences(trainX, maxlen=max_document_length, value=0.)
        testX = pad_sequences(testX, maxlen=max_document_length, value=0.)
        # Converting labels to binary vectors
        trainY = to_categorical(trainY, nb_classes=2)
        testY = to_categorical(testY, nb_classes=2)

        # Network building
        net = tflearn.input_data([None, max_document_length])
        net = tflearn.embedding(net, input_dim=10240000, output_dim=128)
        net = tflearn.lstm(net, 128, dropout=0.8)
        net = tflearn.fully_connected(net, 2, activation='softmax')
        net = tflearn.regression(net, optimizer='adam', learning_rate=0.001,
                                 loss='categorical_crossentropy')

        # Training
        model = tflearn.DNN(net, tensorboard_verbose=0)
        model.fit(trainX, trainY, validation_set=0.1, show_metric=True,
                  batch_size=10,run_id="webshell",n_epoch=5)

        y_predict_list=model.predict(testX)
        y_predict=[]
        for i in y_predict_list:
            if i[0] > 0.5:
                y_predict.append(0)
            else:
                y_predict.append(1)
        do_metrics(y_test, y_predict)

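V. Summary

The biggest takeaway from this chapter is how to use opcodes. The author's source code raises many errors when run under Python 3, and I learned a lot while fixing and debugging it. During testing, however, every model built on bag-of-words or TF-IDF features performed extremely poorly.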