Video Processing: Video Object Detection with Frame Differencing, Optical Flow, and Background Subtraction


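1. Opening the camera

Function 1: cv2.VideoCapture()

Parameter: an integer device index (0, 1, ...) selects a camera attached to the computer, with 0 usually being the default one; a string is treated as the path to a video file.

Function 2: ret, frame = cap.read()

Description: cap.read() reads the video one frame at a time and returns two values:

- ret: a boolean. It is True when a frame was read correctly and False otherwise, for example when the end of a file has been reached;

- frame: the image of the current frame, a three-dimensional array (height × width × channels, in BGR order).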

    import cv2

    cap = cv2.VideoCapture(0)  # 0 selects the default camera

    while True:
        # Grab the next frame
        ret, frame = cap.read()
        if not ret:
            # No frame available (camera missing or disconnected)
            break
        # Optional: convert to grayscale
        # gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        cv2.imshow('frame', frame)
        # Press "q" to stop; waitKey(1) waits up to 1 ms for a key press
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

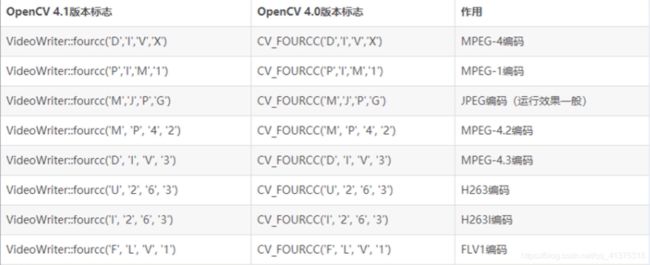

2. Saving video

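    import cv2

    # Open the camera with cv2.VideoCapture; 0 selects the system camera
    cap = cv2.VideoCapture(0)

    # Choose the codec (FourCC)
    # mp4: 'X','V','I','D' (many builds expect 'm','p','4','v' instead); avi: 'M','J','P','G' or 'P','I','M','1'; flv: 'F','L','V','1'
    # Which codecs actually work depends on the OpenCV build and the platform
    fourcc = cv2.VideoWriter_fourcc('F', 'L', 'V', '1')

    # Create the VideoWriter: 20.0 is the frame rate and (640, 480) is (width, height),
    # which must match the size of the frames passed to write().
    # Passing False as a fifth argument (isColor) saves grayscale frames instead.
    out = cv2.VideoWriter('output_1.flv', fourcc, 20.0, (640, 480))

    # Read and write frames in a loop
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break
        out.write(frame)
        cv2.imshow('frame', frame)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()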
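The codec passed to the writer above is a FourCC: four characters packed into one 32-bit integer, lowest byte first. The sketch below reproduces that packing in plain Python so the value returned by cv2.VideoWriter_fourcc is easier to interpret. The helper names fourcc_code and fourcc_text are made up for this illustration and are not part of OpenCV.

```python
def fourcc_code(c1: str, c2: str, c3: str, c4: str) -> int:
    """Pack four characters into a little-endian 32-bit FourCC integer."""
    chars = (c1, c2, c3, c4)
    return sum((ord(ch) & 0xFF) << (8 * i) for i, ch in enumerate(chars))


def fourcc_text(code: int) -> str:
    """Unpack a FourCC integer back into its four characters."""
    return "".join(chr((code >> (8 * i)) & 0xFF) for i in range(4))


if __name__ == "__main__":
    code = fourcc_code('M', 'J', 'P', 'G')
    print(code, fourcc_text(code))
```

Unpacking is handy when reading cap.get(cv2.CAP_PROP_FOURCC) from an existing file, since that property comes back as a number (a float that has to be converted with int() first) rather than as text.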

3. Converting the video format

    import cv2

    cap = cv2.VideoCapture('output_1.flv')
    fourcc = cv2.VideoWriter_fourcc('M', 'J', 'P', 'G')

    # Frame rate of the source video
    fps = cap.get(cv2.CAP_PROP_FPS)
    print(fps)
    # Frame width
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    # Frame height
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    print(frame_width)
    print(frame_height)

    # Create the VideoWriter with the same frame rate and size as the source
    out = cv2.VideoWriter('output_1_new.avi', fourcc, fps, (frame_width, frame_height))

    while True:
        ret, frame = cap.read()
        if not ret:
            break
        # Flip horizontally
        frame = cv2.flip(frame, 1)
        out.write(frame)
        cv2.imshow('frame', frame)
        if cv2.waitKey(25) & 0xFF == ord('q'):
            break

    out.release()
    cap.release()
    cv2.destroyAllWindows()

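4. Frame differencing

- Frame differencing marks moving objects by taking the difference between adjacent frames of a video.

- When something moves in the video, adjacent frames (two, or three in the three-frame variant) differ in gray level. Taking the absolute value of the gray-level difference between two frames gives 0 wherever the scene is static and non-zero values where the gray level changed, which happens mainly along the outline of the moving object.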
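Before the full OpenCV program, the idea can be checked on two tiny synthetic frames. This is a minimal NumPy sketch, not the article's implementation: the helpers frame_difference_mask and make_frame, the 8×8 frame size, and the threshold of 25 are assumptions chosen for illustration.

```python
import numpy as np


def frame_difference_mask(prev_gray, curr_gray, threshold=25):
    """Return a 0/255 mask of pixels whose gray level changed by more than threshold."""
    # Widen to int16 first so the uint8 subtraction cannot wrap around
    delta = np.abs(curr_gray.astype(np.int16) - prev_gray.astype(np.int16))
    return np.where(delta > threshold, 255, 0).astype(np.uint8)


def make_frame(x, size=8, width=3, value=200):
    """Synthetic frame: a bright vertical bar starting at column x on a black background."""
    frame = np.zeros((size, size), dtype=np.uint8)
    frame[:, x:x + width] = value
    return frame


if __name__ == "__main__":
    prev_frame = make_frame(1)  # bar covers columns 1..3
    curr_frame = make_frame(2)  # bar moved one column to the right: columns 2..4
    mask = frame_difference_mask(prev_frame, curr_frame)
    print(mask[0])
```

Only the column the bar left and the column it entered are flagged; the columns covered in both frames stay 0, which matches the description that the response is concentrated on the outline of the moving object.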

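    # Import the required package
    import cv2

    # Open the input video file
    filename = "move_detect.flv"
    camera = cv2.VideoCapture(filename)

    # Output video settings
    out_fps = 12.0  # frame rate of the output files
    fourcc = cv2.VideoWriter_fourcc('M', 'P', '4', '2')
    out1 = cv2.VideoWriter('E:/video/v1.avi', fourcc, out_fps, (500, 400))
    out2 = cv2.VideoWriter('E:/video/v2.avi', fourcc, out_fps, (500, 400))

    # Previous frame, not yet initialized
    lastFrame = None

    # Loop over the frames of the video
    while camera.isOpened():
        # Read the next frame
        (ret, frame) = camera.read()
        # If no frame could be grabbed, we have reached the end of the video
        if not ret:
            break
        # Resize the frame
        frame = cv2.resize(frame, (500, 400), interpolation=cv2.INTER_CUBIC)
        # Initialize the previous frame on the first iteration
        if lastFrame is None:
            lastFrame = frame
            continue
        # Absolute difference between the previous frame and the current frame
        frameDelta = cv2.absdiff(lastFrame, frame)
        # The current frame becomes the previous frame for the next iteration
        lastFrame = frame.copy()
        # Convert the difference image to grayscale
        thresh = cv2.cvtColor(frameDelta, cv2.COLOR_BGR2GRAY)
        # Binarize the image
        thresh = cv2.threshold(thresh, 25, 255, cv2.THRESH_BINARY)[1]
        # Optional noise removal: erode, then dilate (similar to a morphological opening)
        # thresh = cv2.erode(thresh, None, iterations=1)
        # thresh = cv2.dilate(thresh, None, iterations=2)
        # Find contours in the thresholded image; [-2:] works with both the
        # three-value (OpenCV 3.x) and two-value (OpenCV 4.x) return signatures
        cnts, hierarchy = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2:]
        # Loop over the contours
        for c in cnts:
            # Ignore small contours to suppress noise
            if cv2.contourArea(c) < 300:
                continue
            # Compute the bounding box of the contour and draw it on the current frame
            (x, y, w, h) = cv2.boundingRect(c)
            cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        # Show the current frame, the difference image and the binary mask
        cv2.imshow("frame", frame)
        cv2.imshow("frameDelta", frameDelta)
        cv2.imshow("thresh", thresh)
        # Save the videos
        out1.write(frame)
        out2.write(frameDelta)
        # Leave the loop when "q" is pressed
        if cv2.waitKey(20) & 0xFF == ord('q'):
            break

    # Release resources and close the open windows
    out1.release()
    out2.release()
    camera.release()
    cv2.destroyAllWindows()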

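Advantages:

- The algorithm is simple to implement and the program has low design complexity;

- It is not very sensitive to scene changes such as lighting, because only adjacent frames are compared; it adapts to a wide range of dynamic environments and is fairly stable.

Disadvantages:

- It cannot extract the complete region of an object; the interior of the object is left with "holes";

- It extracts only the boundary, and the resulting outline is thick, often larger than the actual object;

- For fast-moving objects, ghosting tends to appear, and one object may even be detected as two separate moving objects;

- For slow-moving objects, nothing is detected when the object almost completely overlaps itself in two consecutive frames.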
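The drawbacks listed above can be reproduced on a single synthetic image row. The sketch below is an assumption-laden toy (a uniform 4-pixel bar on a 20-pixel row, threshold 25, hypothetical helpers moving_bar and changed_pixels) that reports which pixels two-frame differencing flags for different speeds.

```python
import numpy as np


def moving_bar(start, width=4, length=20, value=200):
    """One image row with a uniform bright bar of the given width starting at index start."""
    row = np.zeros(length, dtype=np.uint8)
    row[start:start + width] = value
    return row


def changed_pixels(shift, threshold=25):
    """Indices flagged by two-frame differencing when the bar moves by shift pixels."""
    prev_row = moving_bar(2)
    curr_row = moving_bar(2 + shift)
    delta = np.abs(curr_row.astype(np.int16) - prev_row.astype(np.int16))
    return np.flatnonzero(delta > threshold).tolist()


if __name__ == "__main__":
    for shift in (0, 1, 8):
        print(shift, changed_pixels(shift))
```

With a shift of 0 nothing is flagged (the slow-object case). With a shift of 1 only the two edge pixels are flagged and the interior is a hole. With a shift of 8, larger than the bar itself, the old and new positions form two separate runs, which a contour search would report as two objects.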

5. Optical flow

    import cv2 as cv
    import numpy as np

    # 3x3 elliptical kernel for the morphological opening below
    kernel = cv.getStructuringElement(cv.MORPH_ELLIPSE, (3, 3))

    cap = cv.VideoCapture("move_detect.flv")
    # Read the first frame and convert it to grayscale
    frame1 = cap.read()[1]
    prvs = cv.cvtColor(frame1, cv.COLOR_BGR2GRAY)
    # HSV image used to visualize the flow, with saturation fixed at the maximum
    hsv = np.zeros_like(frame1)
    hsv[..., 1] = 255

    # Output video settings
    out_fps = 12.0  # frame rate of the output files
    fourcc = cv.VideoWriter_fourcc('M', 'P', '4', '2')
    sizes = (int(cap.get(cv.CAP_PROP_FRAME_WIDTH)), int(cap.get(cv.CAP_PROP_FRAME_HEIGHT)))
    out1 = cv.VideoWriter('E:/video/v6.avi', fourcc, out_fps, sizes)
    out2 = cv.VideoWriter('E:/video/v8.avi', fourcc, out_fps, sizes)

    while True:
        (ret, frame2) = cap.read()
        # Stop at the end of the video
        if not ret:
            break
        nxt = cv.cvtColor(frame2, cv.COLOR_BGR2GRAY)
        # Dense Farneback optical flow: pyr_scale=0.5, levels=3, winsize=15,
        # iterations=3, poly_n=5, poly_sigma=1.2, flags=0
        flow = cv.calcOpticalFlowFarneback(prvs, nxt, None, 0.5, 3, 15, 3, 5, 1.2, 0)
        # Convert the (dx, dy) flow vectors to magnitude and angle
        mag, ang = cv.cartToPolar(flow[..., 0], flow[..., 1])
        # Hue encodes direction (8-bit OpenCV hue spans 0..179), value encodes magnitude
        hsv[..., 0] = ang * 180 / np.pi / 2
        hsv[..., 2] = cv.normalize(mag, None, 0, 255, cv.NORM_MINMAX)
        bgr = cv.cvtColor(hsv, cv.COLOR_HSV2BGR)
        # Grayscale, opening and threshold turn the flow image into a binary motion mask
        draw = cv.cvtColor(bgr, cv.COLOR_BGR2GRAY)
        draw = cv.morphologyEx(draw, cv.MORPH_OPEN, kernel)
        draw = cv.threshold(draw, 25, 255, cv.THRESH_BINARY)[1]
        # [-2:] works with both the OpenCV 3.x and 4.x return signatures
        contours, hierarchy = cv.findContours(draw.copy(), cv.RETR_EXTERNAL, cv.CHAIN_APPROX_SIMPLE)[-2:]
        for c in contours:
            # Ignore small contours
            if cv.contourArea(c) < 500:
                continue
            (x, y, w, h) = cv.boundingRect(c)
            cv.rectangle(frame2, (x, y), (x + w, y + h), (255, 255, 0), 2)
        cv.imshow('frame2', bgr)
        cv.imshow('draw', draw)
        cv.imshow('frame1', frame2)
        out1.write(bgr)
        out2.write(frame2)
        k = cv.waitKey(20) & 0xff
        # Esc or "q" quits; "s" saves the current frame and its flow image
        if k == 27 or k == ord('q'):
            break
        elif k == ord('s'):
            cv.imwrite('opticalfb.png', frame2)
            cv.imwrite('opticalhsv.png', bgr)
        # The current frame becomes the previous frame for the next iteration
        prvs = nxt

    out1.release()
    out2.release()
    cap.release()
    cv.destroyAllWindows()
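The program above colors the flow field by writing direction into the hue channel and speed into the value channel. The NumPy sketch below mimics that mapping on a hand-made flow field so the encoding can be inspected without a video. The function name flow_to_hue_value is hypothetical, and the rounding is an assumption of this sketch; it may differ by one level from what the OpenCV calls produce.

```python
import numpy as np


def flow_to_hue_value(flow):
    """Map an (H, W, 2) array of (dx, dy) flow vectors to hue and value planes."""
    dx, dy = flow[..., 0], flow[..., 1]
    mag = np.hypot(dx, dy)
    # arctan2 returns angles in [-pi, pi]; wrap them into [0, 2*pi)
    ang = np.mod(np.arctan2(dy, dx), 2 * np.pi)
    # Halve the angle in degrees because 8-bit OpenCV hue spans 0..179
    hue = (np.rint(np.degrees(ang) / 2) % 180).astype(np.uint8)
    # Min-max scale the magnitudes to 0..255 (a constant field maps to 0)
    span = mag.max() - mag.min()
    scaled = np.zeros_like(mag) if span == 0 else (mag - mag.min()) / span * 255
    return hue, np.rint(scaled).astype(np.uint8)


if __name__ == "__main__":
    # Four pixels: no motion, 1 px right, 2 px down, 1 px left
    flow = np.array([[[0, 0], [1, 0], [0, 2], [-1, 0]]], dtype=np.float32)
    hue, value = flow_to_hue_value(flow)
    print(hue, value)
```

Because image rows grow downward, a positive dy means downward motion and lands at hue 45 (90 degrees), while leftward motion lands at hue 90 (180 degrees). The fastest pixel always receives value 255, so brightness is relative to each frame pair rather than an absolute speed.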

6. Background subtraction

    import cv2

    # Read the video
    cap = cv2.VideoCapture('move_detect.flv')

    # Create the MOG2 background subtractor
    fgbg = cv2.createBackgroundSubtractorMOG2(
        history=500, varThreshold=100, detectShadows=False)


    def getPerson(image, opt=1):
        # Get the foreground mask for this frame
        mask = fgbg.apply(image)
        # Remove noise with a morphological opening (1x5 rectangular kernel)
        line = cv2.getStructuringElement(cv2.MORPH_RECT, (1, 5), (-1, -1))
        mask = cv2.morphologyEx(mask, cv2.MORPH_OPEN, line)
        cv2.imshow("mask", mask)
        # Find the external contours; [-2:] works with both the OpenCV 3.x and 4.x return signatures
        contours, hierarchy = cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2:]
        for c in contours:
            # Skip small contours
            if cv2.contourArea(c) < 150:
                continue
            # Fit a rotated rectangle, draw the ellipse inscribed in it and mark its center
            rect = cv2.minAreaRect(c)
            cv2.ellipse(image, rect, (0, 255, 0), 2, 8)
            cv2.circle(image, (int(rect[0][0]), int(rect[0][1])), 2, (255, 0, 0), 2, 8, 0)
        return image, mask


    while True:
        ret, frame = cap.read()
        # Stop at the end of the video
        if not ret:
            break
        cv2.imwrite("input.png", frame)
        cv2.imshow('input', frame)
        result, m_ = getPerson(frame)
        cv2.imshow('result', result)
        k = cv2.waitKey(20) & 0xff
        # On Esc, save the result and the mask, then quit
        if k == 27:
            cv2.imwrite("result.png", result)
            cv2.imwrite("mask.png", m_)
            break

    cap.release()
    cv2.destroyAllWindows()
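MOG2 models each pixel with a mixture of Gaussians. To show the general principle of background subtraction without that machinery, the sketch below swaps in a much simpler technique, an exponential running average of past frames. It is a stand-in for illustration only, not what cv2.createBackgroundSubtractorMOG2 does, and the class name RunningAverageBackground, the learning rate and the threshold are all assumptions.

```python
import numpy as np


class RunningAverageBackground:
    """Toy background model: exponential running average of past frames."""

    def __init__(self, learning_rate=0.05, threshold=30):
        self.learning_rate = learning_rate
        self.threshold = threshold
        self.background = None  # float32 estimate of the static scene

    def apply(self, gray):
        """Return a 0/255 foreground mask for gray, then update the background."""
        frame = gray.astype(np.float32)
        if self.background is None:
            # The first frame initializes the model; nothing is foreground yet
            self.background = frame.copy()
            return np.zeros(gray.shape, dtype=np.uint8)
        diff = np.abs(frame - self.background)
        mask = np.where(diff > self.threshold, 255, 0).astype(np.uint8)
        # Blend the new frame into the background estimate
        self.background += self.learning_rate * (frame - self.background)
        return mask


if __name__ == "__main__":
    model = RunningAverageBackground()
    empty = np.full((6, 6), 50, dtype=np.uint8)
    for _ in range(10):
        model.apply(empty)      # learn the static scene
    scene = empty.copy()
    scene[2:4, 2:4] = 220       # a bright object appears
    print(model.apply(scene))
```

Unlike frame differencing, the whole 2×2 object is flagged rather than only its edges, and it keeps being flagged on the following frames while it stands still, until the running average has absorbed it into the background.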