OpenCV 4 Image Processing and Video Analysis Hands-On Tutorial: Notes

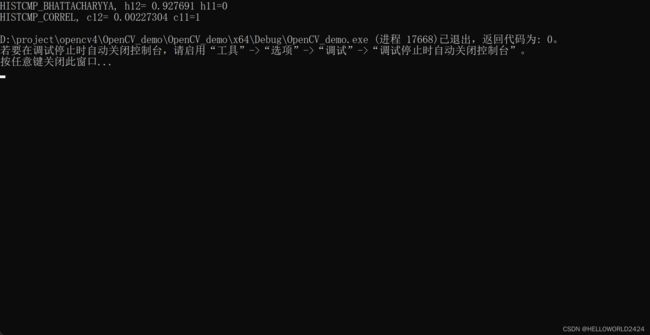

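14. Comparing image histograms

Compare the Bhattacharyya distance and the correlation coefficient (HISTCMP_CORREL computes Pearson correlation, not cosine similarity).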

void hist_compare() {

Mat src1 = imread("D:/images/hist_01.jpg");

Mat src2 = imread("D:/images/hist_02.jpg");

Mat hist1, hist2;

int channels[] = { 0, 1, 2 };

int histSize[] = { 256, 256, 256 };

// calcHist treats each upper bound as exclusive, so 256 is needed to count the value 255

float c1[] = { 0, 256 };

float c2[] = { 0, 256 };

float c3[] = { 0, 256 };

const float* histRanges[] = { c1, c2, c3 };

calcHist(&src1, 1, channels, Mat(), hist1, 3, histSize, histRanges, true, false);

calcHist(&src2, 1, channels, Mat(), hist2, 3, histSize, histRanges, true, false);

// Normalize

normalize(hist1, hist1, 0, 1.0, NORM_MINMAX, -1, Mat());

normalize(hist2, hist2, 0, 1.0, NORM_MINMAX, -1, Mat());

double h12 = compareHist(hist1, hist2, HISTCMP_BHATTACHARYYA);

double h11 = compareHist(hist1, hist1, HISTCMP_BHATTACHARYYA);

cout << "HISTCMP_BHATTACHARYYA, h12= " << h12 << " h11=" << h11 << endl;

double c12 = compareHist(hist1, hist2, HISTCMP_CORREL);

double c11 = compareHist(hist1, hist1, HISTCMP_CORREL);

cout << "HISTCMP_CORREL, c12= " << c12 << " c11=" << c11 << endl;

}
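To make the two scores printed by hist_compare easier to interpret, here is a minimal sketch of both metrics on plain vectors. It assumes the formulas that OpenCV documents for HISTCMP_CORREL and HISTCMP_BHATTACHARYYA; the function names `hist_correlation` and `hist_bhattacharyya` are hypothetical and are not part of OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Pearson correlation of two histograms with the same number of bins.
// 1 means identical shape, -1 means opposite shape.
double hist_correlation(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size() && !a.empty());
    const double n = static_cast<double>(a.size());
    double mean_a = 0.0, mean_b = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        mean_a += a[i];
        mean_b += b[i];
    }
    mean_a /= n;
    mean_b /= n;
    double num = 0.0, var_a = 0.0, var_b = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double da = a[i] - mean_a;
        const double db = b[i] - mean_b;
        num += da * db;
        var_a += da * da;
        var_b += db * db;
    }
    const double denom = std::sqrt(var_a * var_b);
    // Convention of this sketch: two flat histograms count as fully correlated.
    return denom > 0.0 ? num / denom : 1.0;
}

// Bhattacharyya (Hellinger) distance: 0 for identical histograms,
// 1 for histograms that share no occupied bin.
double hist_bhattacharyya(const std::vector<double>& a, const std::vector<double>& b) {
    assert(a.size() == b.size() && !a.empty());
    double sum_a = 0.0, sum_b = 0.0, overlap = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        sum_a += a[i];
        sum_b += b[i];
        overlap += std::sqrt(a[i] * b[i]);
    }
    const double norm = std::sqrt(sum_a * sum_b);
    if (norm <= 0.0) {
        return 1.0;  // an empty histogram overlaps with nothing
    }
    return std::sqrt(std::max(0.0, 1.0 - overlap / norm));
}
```

Comparing a histogram with itself should give a distance of 0 and a correlation of 1, which matches what h11 and c11 are expected to print.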

15. Using a LUT (lookup table)

void Advance::lut(Mat& src) {

Mat color = imread("D:/images/lut.png");

Mat lut = Mat::zeros(256, 1, CV_8UC3);

for (size_t i = 0; i < 256; ++i) {

lut.at<Vec3b>(i, 0) = color.at<Vec3b>(10, i);

}

imshow("color", color);

Mat dst;

LUT(src, lut, dst);

imshow("lut", dst);

applyColorMap(src, dst, COLORMAP_DEEPGREEN);

imshow("default colormap", dst);

}

19. Custom filtering

void Advance::filter2d(Mat& src) {

// Mean (box) filter

int k = 15;

Mat mkernel = Mat::ones(k, k, CV_32F) / (float)(k*k);

Mat dst;

filter2D(src, dst, -1, mkernel, Point(-1, -1), 0, BORDER_DEFAULT);

imshow("2d filter", dst);

// Non-mean filter (Roberts cross kernel)

Mat robot = (Mat_<int>(2, 2) << 1, 0, 0, -1);

Mat result;

filter2D(src, result, CV_32F, robot, Point(-1, -1), 127, BORDER_DEFAULT);

convertScaleAbs(result, result);

imshow("Roberts filter", result);

}
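As a rough picture of what filter2D does with these kernels, the sketch below slides a kernel over a small grayscale grid and sums the element-wise products (correlation), anchoring the kernel at its centre and mirroring indices at the border. The `Grid` alias and the `reflect101` and `correlate` helpers are hypothetical names for illustration; the border rule is assumed to mirror the default reflect-101 mode.

```cpp
#include <cassert>
#include <vector>

using Grid = std::vector<std::vector<float>>;

// Mirror an out-of-range index back into [0, n) without repeating the edge
// sample: ... c b | a b c d | c b ...
int reflect101(int i, int n) {
    if (n == 1) {
        return 0;
    }
    while (i < 0 || i >= n) {
        i = (i < 0) ? -i : 2 * (n - 1) - i;
    }
    return i;
}

// Correlate src with kernel; the kernel anchor is its centre (size / 2).
Grid correlate(const Grid& src, const Grid& kernel) {
    assert(!src.empty() && !src[0].empty());
    assert(!kernel.empty() && !kernel[0].empty());
    const int rows = static_cast<int>(src.size());
    const int cols = static_cast<int>(src[0].size());
    const int krows = static_cast<int>(kernel.size());
    const int kcols = static_cast<int>(kernel[0].size());
    const int ay = krows / 2;
    const int ax = kcols / 2;
    Grid dst(rows, std::vector<float>(cols, 0.0f));
    for (int y = 0; y < rows; ++y) {
        for (int x = 0; x < cols; ++x) {
            float acc = 0.0f;
            for (int ky = 0; ky < krows; ++ky) {
                for (int kx = 0; kx < kcols; ++kx) {
                    const int sy = reflect101(y + ky - ay, rows);
                    const int sx = reflect101(x + kx - ax, cols);
                    acc += kernel[ky][kx] * src[sy][sx];
                }
            }
            dst[y][x] = acc;
        }
    }
    return dst;
}
```

A kernel whose weights sum to 1, such as the k-by-k mean kernel, leaves flat regions unchanged, while a difference kernel such as the Roberts pair responds only where neighbouring pixels differ.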

20. Image gradients

Three gradient operators are compared: Roberts, Sobel, and Scharr.

void Advance::gradient(Mat& src) {

// Roberts operator

Mat robot_x = (Mat_<int>(2,2) << 1, 0, 0, -1);

Mat robot_y = (Mat_<int>(2,2) << 0, 1, -1, 0);

Mat grad_x, grad_y;

filter2D(src, grad_x, CV_32F, robot_x, Point(-1, -1), 0, BORDER_DEFAULT);

filter2D(src, grad_y, CV_32F, robot_y, Point(-1, -1), 0, BORDER_DEFAULT);

convertScaleAbs(grad_x, grad_x);

convertScaleAbs(grad_y, grad_y);

Mat result;

add(grad_x, grad_y, result);

imshow("Roberts gradient", result);

// Sobel operator

Sobel(src, grad_x, CV_32F, 1, 0);

Sobel(src, grad_y, CV_32F, 0, 1);

convertScaleAbs(grad_x, grad_x);

convertScaleAbs(grad_y, grad_y);

Mat result2;

addWeighted(grad_x, 0.5, grad_y, 0.5, 0, result2);

imshow("sobel, add weight", result2);

// Scharr operator

Mat result3;

Scharr(src, grad_x, CV_32F, 1, 0);

Scharr(src, grad_y, CV_32F, 0, 1);

convertScaleAbs(grad_x, grad_x);

convertScaleAbs(grad_y, grad_y);

addWeighted(grad_x, 0.5, grad_y, 0.5, 0, result3);

imshow("scharr", result3);

}

The code above shows the gradient extraction results of the different operators.

21. The Laplacian operator and image sharpening

The Laplacian operator is quite sensitive to image noise.

void Advance::laplacian(Mat& src) {

Mat dst;

// Laplacian (second-derivative) gradient extraction; very sensitive to noise

Laplacian(src, dst, -1, 3, 1.0, 0, BORDER_DEFAULT);

imshow("Laplacian demo", dst);

// Image sharpening

Mat dst2;

Mat filter = (Mat_<int>(3, 3) << 0, -1, 0,

-1, 5, -1,

0, -1, 0);

filter2D(src, dst2, CV_32F, filter, Point(-1, -1), 0, BORDER_DEFAULT);

convertScaleAbs(dst2, dst2);

imshow("sharpen demo", dst2);

}

22. USM (unsharp mask) sharpening

sharp_image = blur - laplacian

void Advance::USM(Mat& src) {

Mat blur;

GaussianBlur(src, blur, Size(3, 3), 0);

Mat lap;

Laplacian(src, lap, -1, 1, 1.0, 0, BORDER_DEFAULT);

Mat usm;

addWeighted(blur, 1.0, lap, -1.0, 0, usm);

namedWindow("usm", WINDOW_AUTOSIZE);

imshow("usm", usm);

}

23. Image noise

Two kinds of noise are covered: salt-and-pepper noise and Gaussian noise.

void Advance::Noise(Mat& src) {

Mat src2 = src.clone();

RNG rng(12345);

// Salt-and-pepper noise

int nums = 10000;

int w = src.cols;

int h = src.rows;

cout << w << " " << h << endl;

for (int i = 0; i < nums; ++i) {

int x = rng.uniform(0, w);

int y = rng.uniform(0, h);

if (i % 2 == 0) {

src.at<Vec3b>(y, x) = Vec3b(0, 0, 0);

}

else {

src.at<Vec3b>(y, x) = Vec3b(255, 255, 255);

}

}

imshow("salt-and-pepper noise", src);

Mat dst;

Mat noise = Mat::zeros(src2.size(), src2.type());

randn(noise, Scalar(25, 25, 25), Scalar(10, 10, 10));

imshow("gaussian noise", noise);

addWeighted(src2, 1.0, noise, 1.0, 0, dst);

imshow("image with gaussian noise", dst);

}

24. Image denoising

Two denoising filters are tried: the median filter and the Gaussian filter.

void Advance::median_blur(Mat& src) {

RNG rng(12345);

// Salt-and-pepper noise

int nums = 10000;

int w = src.cols;

int h = src.rows;

cout << w << " " << h << endl;

for (int i = 0; i < nums; ++i) {

int x = rng.uniform(0, w);

int y = rng.uniform(0, h);

if (i % 2 == 0) {

src.at<Vec3b>(y, x) = Vec3b(0, 0, 0);

}

else {

src.at<Vec3b>(y, x) = Vec3b(255, 255, 255);

}

}

imshow("salt-and-pepper noise", src);

Mat dst;

medianBlur(src, dst, 5);

imshow("median blur denoise", dst);

GaussianBlur(src, dst, Size(5,5), 0);

imshow("gaussian blur denoise", dst);

}

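The Gaussian filter removes salt-and-pepper noise less effectively than the median filter. The variant below applies both filters to Gaussian noise instead.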

void Advance::median_blur(Mat& src) {

Mat noise = Mat::zeros(src.size(), src.type());

randn(noise, Scalar(50, 60, 70), Scalar(55, 50, 50));

addWeighted(src, 1.0, noise, 0.7, 0, src);

imshow("gaussian noise added", src);

Mat dst;

medianBlur(src, dst, 5);

imshow("median blur denoise", dst);

GaussianBlur(src, dst, Size(5, 5), 0);

imshow("gaussian blur denoise", dst);

}

Gaussian noise is much harder to remove than salt-and-pepper noise; both filters give only mediocre results on it.
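The difference between the two filters on salt-and-pepper noise can be seen on a one-dimensional signal. The sketch below is an illustration only: `median_filter_1d` and `mean_filter_1d` are hypothetical helpers, the mean filter stands in for any linear smoothing filter such as the Gaussian, and borders are handled by clamping the index.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Replace each sample by the median of a window of width 2 * radius + 1.
std::vector<int> median_filter_1d(const std::vector<int>& signal, int radius) {
    assert(radius >= 0);
    const int n = static_cast<int>(signal.size());
    std::vector<int> out(signal.size());
    std::vector<int> window;
    for (int i = 0; i < n; ++i) {
        window.clear();
        for (int k = -radius; k <= radius; ++k) {
            const int j = std::min(std::max(i + k, 0), n - 1);  // clamp at the borders
            window.push_back(signal[j]);
        }
        std::nth_element(window.begin(), window.begin() + radius, window.end());
        out[i] = window[radius];
    }
    return out;
}

// Replace each sample by the (integer) mean of the same window.
std::vector<int> mean_filter_1d(const std::vector<int>& signal, int radius) {
    assert(radius >= 0);
    const int n = static_cast<int>(signal.size());
    std::vector<int> out(signal.size());
    for (int i = 0; i < n; ++i) {
        int sum = 0;
        for (int k = -radius; k <= radius; ++k) {
            const int j = std::min(std::max(i + k, 0), n - 1);
            sum += signal[j];
        }
        out[i] = sum / (2 * radius + 1);
    }
    return out;
}
```

An isolated 0 or 255 never reaches the middle of a sorted window, so the median discards it entirely, whereas averaging spreads the outlier over its neighbours. Gaussian noise perturbs every sample, so neither approach can single it out.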

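25. Edge-preserving filtering

Two methods are covered: the Gaussian bilateral filter and the non-local means filter. Non-local means runs very slowly, and grayscale and color images use separate APIs.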

void Advance::bilateral_filter(Mat& src) {

Mat noise = Mat::zeros(src.size(), src.type());

randn(noise, Scalar(50, 60, 70), Scalar(55, 50, 50));

addWeighted(src, 1.0, noise, 0.7, 0, src);

imshow("gaussian noise added", src);

// Gaussian bilateral filter

Mat dst;

bilateralFilter(src, dst, 0, 200, 0);

imshow("bilateral filter", dst);

// Non-local means filter

// This variant works on grayscale images

cvtColor(src, src, COLOR_BGR2GRAY);

fastNlMeansDenoising(src, dst, 15, 10, 30);

imshow("non-local means filter", dst);

}

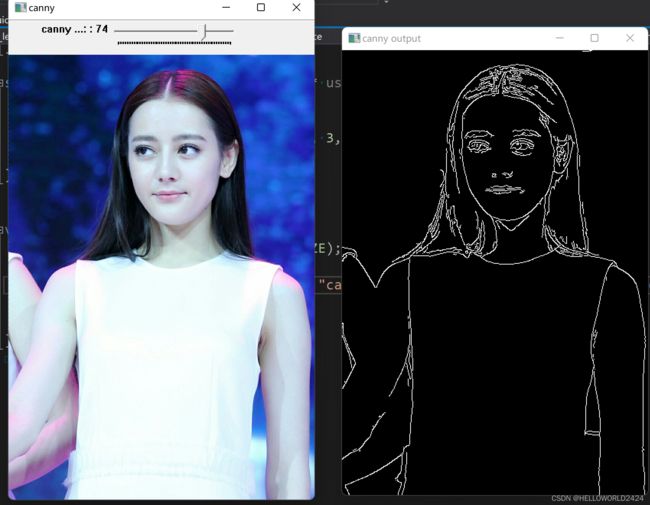

26. Canny edge detection with a trackbar demo

static void canny_demo(int thres, void* user_data) {

Mat src = *((Mat *)user_data);

Mat edges;

Canny(src, edges, thres, thres * 3, 3, false);

imshow("canny output", edges);

}

void Advance::canny(Mat& src) {

namedWindow("canny", WINDOW_AUTOSIZE);

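// static, so the pointer handed to createTrackbar stays valid after this function returns

static int thres = 50;

createTrackbar("canny threshold: ", "canny", &thres, 100, canny_demo, (void*)(&src));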

imshow("canny", src);

canny_demo(thres, &src);

}

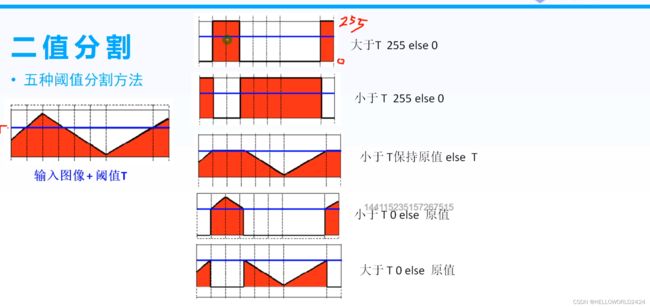

27. Binary images

void Advance::threshold_demo(Mat& src) {

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 127, 255, THRESH_BINARY);

imshow("THRESH_BINARY", binary);

threshold(gray, binary, 127, 255, THRESH_BINARY_INV);

imshow("THRESH_BINARY_INV", binary);

threshold(gray, binary, 127, 255, THRESH_TRUNC);

imshow("THRESH_TRUNC", binary);

threshold(gray, binary, 127, 255, THRESH_TOZERO);

imshow("THRESH_TOZERO", binary);

threshold(gray, binary, 127, 255, THRESH_TOZERO_INV);

imshow("THRESH_TOZERO_INV", binary);

}

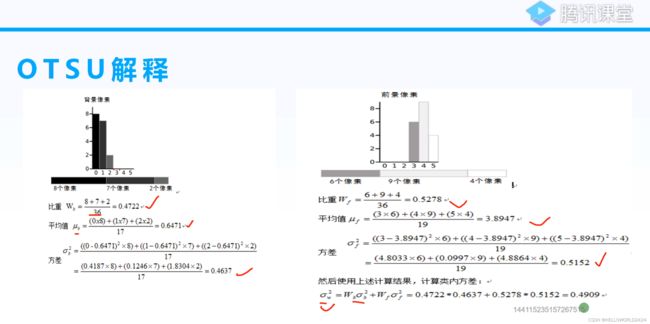

28. Global thresholding

void Advance::global_threshold(Mat& src) {

Mat gray, binary;

cvtColor(src, gray, COLOR_BGR2GRAY);

// Use the mean gray level as the threshold

Scalar m = mean(gray);

threshold(gray, binary, m[0], 255, THRESH_BINARY);

imshow("mean", binary);

// OTSU

double t1 = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("OTSU", binary);

cout << "OTSU threshold: " << t1 << endl;

// Triangle method

double t2 = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_TRIANGLE);

imshow("triangle", binary);

cout << "triangle threshold: " << t2 << endl;

}
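For reference, Otsu's method chooses the threshold that maximizes the between-class variance of the two resulting pixel groups. The sketch below is a minimal version over a flat list of 8-bit pixels; `otsu_threshold` is a hypothetical helper, and its tie-breaking (the first maximum wins) is an assumption that may differ from what THRESH_OTSU returns.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Returns t in [0, 255] such that splitting pixels into {<= t} and {> t}
// maximizes the between-class variance w0 * w1 * (mu0 - mu1)^2.
int otsu_threshold(const std::vector<std::uint8_t>& pixels) {
    assert(!pixels.empty());
    std::array<long long, 256> hist{};
    for (std::uint8_t p : pixels) {
        ++hist[p];
    }
    const double total = static_cast<double>(pixels.size());
    double sum_all = 0.0;
    for (int v = 0; v < 256; ++v) {
        sum_all += v * static_cast<double>(hist[v]);
    }
    double w0 = 0.0;    // number of pixels at or below t
    double sum0 = 0.0;  // sum of their gray levels
    double best_var = -1.0;
    int best_t = 0;
    for (int t = 0; t < 256; ++t) {
        w0 += static_cast<double>(hist[t]);
        sum0 += t * static_cast<double>(hist[t]);
        if (w0 == 0.0) {
            continue;  // nothing in the dark class yet
        }
        const double w1 = total - w0;
        if (w1 == 0.0) {
            break;  // nothing left for the bright class
        }
        const double mu0 = sum0 / w0;
        const double mu1 = (sum_all - sum0) / w1;
        const double var = w0 * w1 * (mu0 - mu1) * (mu0 - mu1);
        if (var > best_var) {
            best_var = var;
            best_t = t;
        }
    }
    return best_t;
}
```

For a clearly bimodal image the returned value falls between the two peaks, which is why no manual threshold has to be passed when the OTSU flag is set.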

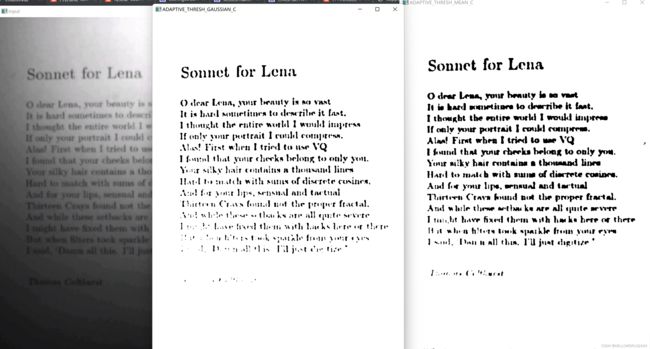

29. Adaptive thresholding

void Advance::adjust_threshold(Mat& src) {

Mat dst, gray;

cvtColor(src, gray, COLOR_BGR2GRAY);

adaptiveThreshold(gray, dst, 255, ADAPTIVE_THRESH_GAUSSIAN_C, THRESH_BINARY, 25, 10);

imshow("ADAPTIVE_THRESH_GAUSSIAN_C", dst);

adaptiveThreshold(gray, dst, 255, ADAPTIVE_THRESH_MEAN_C, THRESH_BINARY, 25, 10);

imshow("ADAPTIVE_THRESH_MEAN_C", dst);

}

30. Connected-component labeling

void Advance::connect(Mat& src) {

Mat gray, binary;

GaussianBlur(src, src, Size(3, 3), 0);

cvtColor(src, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu", binary);

Mat labels = Mat::zeros(binary.size(), CV_32S);

int num_labels = connectedComponents(binary, labels, 8, CV_32S, CCL_DEFAULT);

printf("num of objects %d", num_labels-1);

RNG rng(123);

vector<Vec3b> colors(num_labels);

colors[0] = Vec3b(0, 0, 0);

for (int i = 1; i < num_labels; ++i) {

colors[i] = Vec3b(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255));

}

Mat result = Mat::zeros(src.size(), src.type());

for (int h = 0; h < binary.rows; ++h) {

for (int w = 0; w < binary.cols; ++w) {

int label = labels.at<int>(h, w);

result.at<Vec3b>(h, w) = colors[label];

}

}

putText(result, format("num of objects %d", num_labels - 1), Point(50, 50), FONT_HERSHEY_PLAIN, 2, Scalar(0, 255, 0), 2, 8);

imshow("colorful", result);

}
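What connectedComponents computes can be sketched with a breadth-first flood fill over a binary grid. This is an illustration rather than OpenCV's actual algorithm; `label_components` is a hypothetical helper that uses 8-connectivity and, like the code above, reserves label 0 for the background.

```cpp
#include <cassert>
#include <queue>
#include <utility>
#include <vector>

// Labels every 8-connected group of non-zero cells.
// Returns the label count including background label 0, so the number of
// objects is the return value minus 1.
int label_components(const std::vector<std::vector<int>>& binary,
                     std::vector<std::vector<int>>& labels) {
    const int rows = static_cast<int>(binary.size());
    const int cols = rows > 0 ? static_cast<int>(binary[0].size()) : 0;
    labels.assign(rows, std::vector<int>(cols, 0));
    int next_label = 1;
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            if (binary[r][c] == 0 || labels[r][c] != 0) {
                continue;  // background, or already part of a component
            }
            std::queue<std::pair<int, int>> frontier;
            frontier.push(std::make_pair(r, c));
            labels[r][c] = next_label;
            while (!frontier.empty()) {
                const std::pair<int, int> cell = frontier.front();
                frontier.pop();
                for (int dy = -1; dy <= 1; ++dy) {
                    for (int dx = -1; dx <= 1; ++dx) {
                        const int ny = cell.first + dy;
                        const int nx = cell.second + dx;
                        if (ny < 0 || ny >= rows || nx < 0 || nx >= cols) {
                            continue;
                        }
                        if (binary[ny][nx] != 0 && labels[ny][nx] == 0) {
                            labels[ny][nx] = next_label;
                            frontier.push(std::make_pair(ny, nx));
                        }
                    }
                }
            }
            ++next_label;
        }
    }
    return next_label;
}
```

With 8-connectivity, pixels that touch only diagonally belong to the same component; with 4-connectivity they would be counted separately.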

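31. Connected-component labeling with statistics

The statistics include each component's centroid, the top-left corner of its bounding rectangle, the rectangle's width and height, and the component's area.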

void Advance::ccl_statistic_demo(Mat &image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu", binary);

Mat labels = Mat::zeros(binary.size(), CV_32S);

Mat stats, centroids;

int num_labels = connectedComponentsWithStats(binary, labels, stats, centroids, 8, CV_32S, CCL_DEFAULT);

// Generate a different color for each label

RNG rng(123);

vector<Vec3b> colors(num_labels);

colors[0] = Vec3b(0, 0, 0);

for (int i = 1; i < num_labels; ++i) {

colors[i] = Vec3b(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255));

}

Mat result = Mat::zeros(image.size(), CV_8UC3);

for (int h = 0; h < binary.rows; ++h) {

for (int w = 0; w < binary.cols; ++w) {

int label = labels.at<int>(h, w);

result.at<Vec3b>(h, w) = colors[label];

}

}

for (int i = 1; i < num_labels; ++i) {

int cx = centroids.at<double>(i, 0);

int cy = centroids.at<double>(i, 1);

circle(result, Point(cx, cy), 3, Scalar(0, 255, 0), 2, LINE_8, 0);

int x = stats.at<int>(i, CC_STAT_LEFT);

int y = stats.at<int>(i, CC_STAT_TOP);

int height = stats.at<int>(i, CC_STAT_HEIGHT);

int width = stats.at<int>(i, CC_STAT_WIDTH);

int area = stats.at<int>(i, CC_STAT_AREA);

Rect rect(x, y, width, height);

rectangle(result, rect, Scalar(0, 0, 255), 2, LINE_8, 0);

putText(result, format("%d", area), Point(x, y), FONT_HERSHEY_PLAIN, 2, Scalar(255, 0, 0), 1, LINE_8);

}

putText(result, format("num of objects %d", num_labels - 1), Point(10, 10), FONT_HERSHEY_PLAIN, 1, Scalar(0, 255, 0), 2, 8);

imshow("colorful", result);

}

32. Finding and drawing contours

void Advance::find_contours(Mat &image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("otsu", binary);

vector<vector<Point>> contours;

vector<Vec4i> hirerachy;

findContours(binary, contours, hirerachy, RETR_TREE, CHAIN_APPROX_SIMPLE, Point());

drawContours(image, contours, -1, Scalar(0, 255, 0), 2, LINE_8);

imshow("find contours", image);

}

33. Contour measurements

void Advance::cal_contours(Mat &image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU);

imshow("otsu", binary);

vector<vector<Point>> contours;

vector<Vec4i> hirerachy;

findContours(binary, contours, hirerachy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());

for (size_t i = 0; i < contours.size(); ++i) {

double area = contourArea(contours[i]);

double len = arcLength(contours[i], true);

if (area < 100 || len < 10) {

continue;

}

Rect box = boundingRect(contours[i]);

//drawContours(image, contours, i, Scalar(0, 255, 0), 2, LINE_8);

//rectangle(image, box, Scalar(0, 255, 0), 2);

RotatedRect rrt = minAreaRect(contours[i]);

// Draw the ellipse inscribed in the minimum-area rotated rectangle

//ellipse(image, rrt, Scalar(255, 0, 0), 2);

// Draw the minimum-area rotated rectangle

Point2f pts[4];

rrt.points(pts);

for (int j = 0; j < 4; ++j) {

line(image, pts[j], pts[(j + 1) % 4], Scalar(255,0, 255), 2);

}

double angle = rrt.angle;

printf("index: %zu, area: %.1f, length: %.1f, angle: %.1f\n", i, area, len, angle);

}

imshow("find contours", image);

}

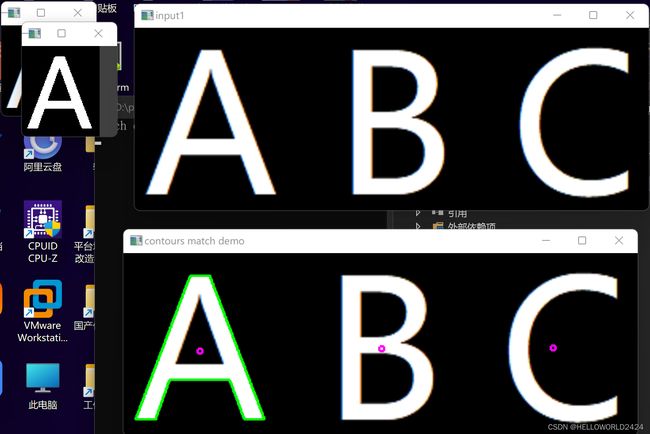

34. Contour matching

void contour_info(Mat &image, vector<vector<Point>> &contours) {

Mat dst, gray, binary;

GaussianBlur(image, dst, Size(3, 3), 0);

cvtColor(dst, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("binary", binary);

vector<Vec4i> hirearchy;

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

}

void Advance::match_contours() {

Mat src1 = imread("D:/images/abc.png");

Mat src2 = imread("D:/images/a5.png");

if (src1.empty() || src2.empty()) {

printf("could not load image");

return;

}

imshow("input1", src1);

imshow("input2", src2);

vector<vector<Point>> contours1, contours2;

contour_info(src1, contours1);

contour_info(src2, contours2);

Moments mm2 = moments(contours2[0]);

Mat hu2;

HuMoments(mm2, hu2);

for (size_t i = 0; i < contours1.size(); ++i) {

Moments mm = moments(contours1[i]);

double cx = mm.m10 / mm.m00;

double cy = mm.m01 / mm.m00;

circle(src1, Point(cx, cy), 3, Scalar(255, 0, 255), 2);

Mat hu;

HuMoments(mm, hu);

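// matchShapes expects contours (or grayscale images) and derives the Hu moments itself

double dist = matchShapes(contours1[i], contours2[0], CONTOURS_MATCH_I1, 0);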

if (dist < 1.0) {

printf("match distance value: %.2f\n", dist);

drawContours(src1, contours1, i, Scalar(0, 255, 0), 2);

}

}

imshow("contours match demo", src1);

}

35. Contour approximation and fitting

Approximating a contour with line segments

void Advance::approx_contours(Mat &image) {

Mat dst;

GaussianBlur(image, dst, Size(3, 3), 0);

Mat gray;

cvtColor(dst, gray, COLOR_BGR2GRAY);

Mat binary;

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("binary", binary);

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

for (size_t i = 0; i < contours.size(); ++i) {

Mat result;

approxPolyDP(contours[i], result, 4, true);

printf("corners: %d\n", result.rows);

double c = arcLength(contours[i], true);

double s = contourArea(contours[i]);

// Compute the centroid

Moments mm = moments(contours[i]);

double cx = mm.m10 / mm.m00;

double cy = mm.m01 / mm.m00;

if (result.rows == 3) {

putText(image, format("triangle, c=%.0f, s=%.0f", c, s), Point(cx-10, cy-10), FONT_HERSHEY_PLAIN, 1, Scalar(255, 0, 255));

circle(image, Point(cx, cy), 3, Scalar(0, 255, 0));

}

}

imshow("approx contours", image);

}


Approximating a contour with an ellipse

void Advance::approx_contours2(Mat &image) {

Mat dst;

GaussianBlur(image, dst, Size(3, 3), 0);

Mat gray;

cvtColor(dst, gray, COLOR_BGR2GRAY);

Mat binary;

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("binary", binary);

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

findContours(binary, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

//drawContours(image, contours, 0, Scalar(0, 255, 0), 2);

RotatedRect rrt = fitEllipse(contours[0]);

ellipse(image, rrt, Scalar(255, 0, 0), 2);

imshow("approx contours", image);

}

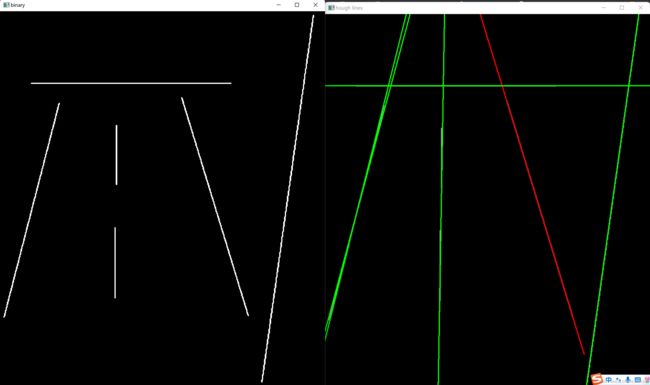

36. Hough line detection

void Advance::hough_lines(Mat &image) {

Mat dst;

GaussianBlur(image, dst, Size(3, 3), 0);

Mat gray;

cvtColor(dst, gray, COLOR_BGR2GRAY);

Mat binary;

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("binary", binary);

// Hough line detection

vector<Vec3f> lines;

HoughLines(binary, lines, 1, CV_PI / 180.0, 250, 0, 0);

Point pt1, pt2;

for (size_t i = 0; i < lines.size(); ++i) {

float rho = lines[i][0]; // distance from the origin

float theta = lines[i][1]; // angle in radians

float acc = lines[i][2]; // accumulator votes

double a = cos(theta);

double b = sin(theta);

double x0 = a * rho, y0 = b * rho;

pt1.x = cvRound(x0 + 1000 * (-b));

pt1.y = cvRound(y0 + 1000 * a);

pt2.x = cvRound(x0 - 1000 * (-b));

pt2.y = cvRound(y0 - 1000 * a);

int angle = round(theta / CV_PI * 180);

if (angle > 90) {

line(image, pt1, pt2, Scalar(0, 0, 255), 2, 8, 0);

}

else {

line(image, pt1, pt2, Scalar(0, 255, 0), 2, 8, 0);

}

printf("rho: %.2f, theta: %.2f, acc: %.2f, angle: %d\n", rho, theta, acc, angle);

}

imshow("hough lines", image);

}
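The endpoint arithmetic in the loop above follows from the polar form of a line, x*cos(theta) + y*sin(theta) = rho. The point (rho*cos(theta), rho*sin(theta)) is the point on the line closest to the origin, and (-sin(theta), cos(theta)) is the line's direction. A minimal sketch, where `Pt`, `Segment`, and `line_from_polar` are hypothetical names:

```cpp
#include <cassert>
#include <cmath>

struct Pt {
    double x;
    double y;
};

struct Segment {
    Pt a;
    Pt b;
};

// Convert a Hough line (rho, theta) into a drawable segment that extends
// half_length units on each side of the point closest to the origin.
Segment line_from_polar(double rho, double theta, double half_length) {
    assert(half_length > 0.0);
    const double c = std::cos(theta);
    const double s = std::sin(theta);
    const Pt foot{c * rho, s * rho};  // closest point to the origin
    const Pt a{foot.x - half_length * s, foot.y + half_length * c};
    const Pt b{foot.x + half_length * s, foot.y - half_length * c};
    return Segment{a, b};
}
```

The value 1000 in the code above plays the role of `half_length`: it only has to be large enough for the segment to cross the whole image.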

37. Hough line detection 2 (probabilistic Hough transform)

void Advance::hough_linesp(Mat &image) {

Mat binary;

Canny(image, binary, 80, 160, 3, false);

imshow("canny", binary);

vector<Vec4i> lines;

HoughLinesP(binary, lines, 1, CV_PI / 180, 80, 200, 10);

Mat result = Mat::zeros(image.size(), image.type());

for (int i = 0; i < lines.size(); ++i) {

line(result, Point(lines[i][0], lines[i][1]), Point(lines[i][2], lines[i][3]), Scalar(0, 255, 0), 1);

}

imshow("hough linesp demo", result);

}

38. Hough circle detection

Hough-type algorithms are very sensitive to noise, so apply a Gaussian blur before using them.

void Advance::hough_circle(Mat &image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

GaussianBlur(gray, gray, Size(9, 9), 2, 2);

imshow("gray", gray);

vector<Vec3f> circles;

int min_dis = 20, min_r = 10, max_r = 50;

HoughCircles(gray, circles, HOUGH_GRADIENT, 2, min_dis, 100, 100, min_r, max_r);

for (int t = 0; t < circles.size(); ++t) {

Point centre(circles[t][0], circles[t][1]);

double radius = round(circles[t][2]);

circle(image, centre, radius, Scalar(0, 255, 0), 2);

}

imshow("hough circle", image);

}

With Gaussian blur

Without Gaussian blur

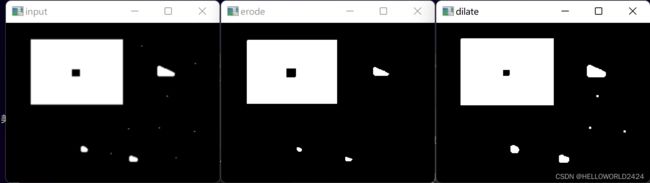

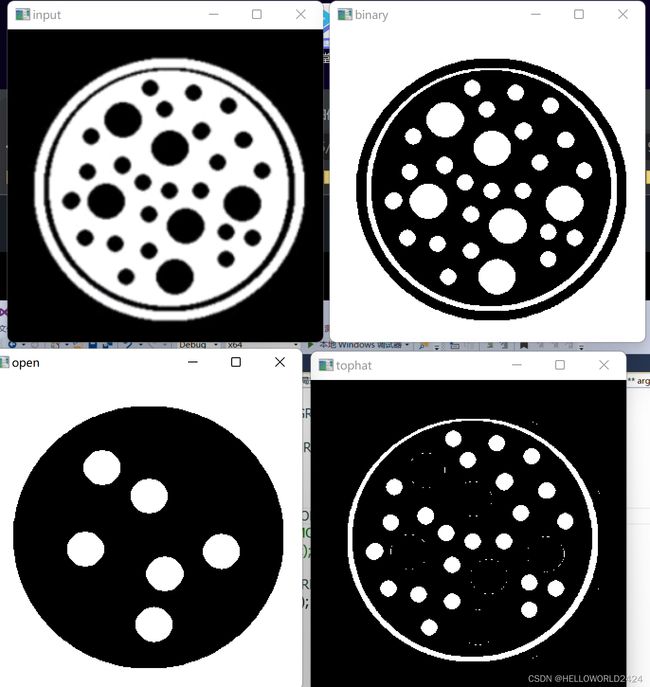

39. Morphological operations

Dilation and erosion

void Advance::erode_dilate(Mat &image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

Mat dst1, dst2;

// Erosion

Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

erode(binary, dst1, kernel);

imshow("erode", dst1);

// Dilation

dilate(binary, dst2, kernel);

imshow("dilate", dst2);

}

40. Opening and closing

void Advance::open_close(Mat &image) {

// Opening and closing

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

Mat dst1, dst2;

// Closing: dilate, then erode; removes small black objects

//Mat kernel = getStructuringElement(MORPH_ELLIPSE, Size(25, 25), Point(-1, -1));

//morphologyEx(binary, dst1, MORPH_CLOSE, kernel, Point(-1, -1), 1);

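//imshow("close", dst1);

// Opening: erode, then dilate; removes small white objects (in all OpenCV morphological operations, white is the foreground and black is the background)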

Mat kernel1 = getStructuringElement(MORPH_RECT, Size(30, 1), Point(-1, -1));

morphologyEx(binary, dst2, MORPH_OPEN, kernel1, Point(-1, -1));

imshow("open", dst2);

}

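41. Morphological gradient

Basic gradient: the dilated image minus the eroded image

Inner gradient: the original image minus the eroded image

Outer gradient: the dilated image minus the original image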

void Advance::morph_gradient(Mat &image) {

Mat gray;

cvtColor(image, gray, COLOR_BGR2GRAY);

Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

Mat basic_grad;

// Basic gradient: dilated image minus eroded image

morphologyEx(gray, basic_grad, MORPH_GRADIENT, kernel, Point(-1, -1));

imshow("basic gradient", basic_grad);

// Inner gradient: original image minus eroded image

Mat dst1, inner_grad;

morphologyEx(gray, dst1, MORPH_ERODE, kernel);

subtract(gray, dst1, inner_grad);

imshow("inner grad", inner_grad);

// Outer gradient: dilated image minus original image

Mat dst2, outter_grad;

morphologyEx(gray, dst2, MORPH_DILATE, kernel);

subtract(dst2, gray, outter_grad);

imshow("outter grad", outter_grad);

}
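The three gradient definitions can be checked on a tiny grid without OpenCV. The sketch below assumes a 3x3 rectangular structuring element and simply skips neighbours that fall outside the image; `Gray`, `morph3x3`, `subtract_images`, and `morph_gradients` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using Gray = std::vector<std::vector<int>>;

// 3x3 minimum (erosion) or maximum (dilation); out-of-image neighbours are skipped.
Gray morph3x3(const Gray& src, bool take_max) {
    const int rows = static_cast<int>(src.size());
    const int cols = rows > 0 ? static_cast<int>(src[0].size()) : 0;
    Gray dst = src;
    for (int y = 0; y < rows; ++y) {
        for (int x = 0; x < cols; ++x) {
            int best = src[y][x];
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int ny = y + dy;
                    const int nx = x + dx;
                    if (ny < 0 || ny >= rows || nx < 0 || nx >= cols) {
                        continue;
                    }
                    best = take_max ? std::max(best, src[ny][nx])
                                    : std::min(best, src[ny][nx]);
                }
            }
            dst[y][x] = best;
        }
    }
    return dst;
}

// Element-wise a - b for two grids of the same size.
Gray subtract_images(const Gray& a, const Gray& b) {
    assert(a.size() == b.size());
    Gray out = a;
    for (std::size_t y = 0; y < a.size(); ++y) {
        assert(a[y].size() == b[y].size());
        for (std::size_t x = 0; x < a[y].size(); ++x) {
            out[y][x] = a[y][x] - b[y][x];
        }
    }
    return out;
}

struct Gradients {
    Gray basic;  // dilated - eroded
    Gray inner;  // original - eroded
    Gray outer;  // dilated - original
};

Gradients morph_gradients(const Gray& src) {
    const Gray eroded = morph3x3(src, false);
    const Gray dilated = morph3x3(src, true);
    return Gradients{subtract_images(dilated, eroded),
                     subtract_images(src, eroded),
                     subtract_images(dilated, src)};
}
```

Because eroded <= original <= dilated at every pixel, none of the three differences is negative, and the basic gradient equals the sum of the inner and outer gradients.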

42. More morphological operations

// Top hat: the original image minus the result of opening

void Advance::other_morph(Mat &image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU);

imshow("binary", binary);

// Top hat: the original image minus the result of opening

Mat kernel = getStructuringElement(MORPH_ELLIPSE, Size(25, 25));

Mat tophat, open;

morphologyEx(binary, tophat, MORPH_TOPHAT, kernel);

morphologyEx(binary, open, MORPH_OPEN, kernel, Point(-1, -1));

imshow("open", open);

imshow("tophat", tophat);

}

void Advance::other_morph(Mat &image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("binary", binary);

// Black hat: the result of closing minus the original image

Mat kernel = getStructuringElement(MORPH_ELLIPSE, Size(25, 25));

Mat blackhat, close;

morphologyEx(binary, blackhat, MORPH_BLACKHAT, kernel);

morphologyEx(binary, close, MORPH_CLOSE, kernel, Point(-1, -1));

imshow("close", close);

imshow("blackhat", blackhat);

}

void Advance::other_morph(Mat &image) {

Mat gray, binary;

cvtColor(image, gray, COLOR_BGR2GRAY);

threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("binary", binary);

// hit and miss

Mat kernel = getStructuringElement(MORPH_CROSS, Size(25, 25));

Mat hitmiss;

morphologyEx(binary, hitmiss, MORPH_HITMISS, kernel);

imshow("hitmiss", hitmiss);

}

43. Exercise: finding island contours in a satellite image

void Advance::image_process_demo(Mat &image) {

Mat gray;

cvtColor(image, gray, COLOR_BGR2GRAY);

Mat blur;

GaussianBlur(gray, blur, Size(3, 3), 0);

Mat binary;

// Binarize the blurred image (the original thresholded gray and left blur unused)

threshold(blur, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

imshow("binary", binary);

// Closing, used to fill the largest white region

Mat morph;

Mat kernel = getStructuringElement(MORPH_RECT, Size(15, 15), Point(-1, -1));

morphologyEx(binary, morph, MORPH_CLOSE, kernel);

imshow("morph", morph);

vector<vector<Point>> contours;

vector<Vec4i> hirerachy;

findContours(morph, contours, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

// Nothing to measure if no contour was found

if (contours.empty()) {

return;

}

Mat output = image.clone();

double max_area = 0.;

size_t max_index = 0;

for (size_t i = 0; i < contours.size(); ++i) {

double area = contourArea(contours[i]);

printf("index: %zu, area: %.1f\n", i, area);

if (area > max_area) {

max_index = i;

max_area = area;

}

drawContours(output, contours, i, Scalar(0, 255, 0), 2);

}

Mat result = Mat::zeros(image.size(), image.type());

drawContours(result, contours, max_index, Scalar(0, 0, 255), 2);

Mat pts;

// Polygon approximation

approxPolyDP(contours[max_index], pts, 4, true);

for (int i = 0; i < pts.rows; ++i) {

Vec2i pt = pts.at<Vec2i>(i, 0);

circle(result, Point(pt[0], pt[1]), 2, Scalar(0, 255, 0), 2, 8, 0);

}

printf("max index: %zu", max_index);

imshow("output", output);

imshow("result", result);

}

44. Video read/write API

Read a video's properties and save the video to a new file.

void Advance::video_capture() {

VideoCapture capture("D:/images/bike.avi");

if (!capture.isOpened()) {

printf("video is not opened");

return;

}

namedWindow("frame", WINDOW_AUTOSIZE);

int height = capture.get(CAP_PROP_FRAME_HEIGHT);

int width = capture.get(CAP_PROP_FRAME_WIDTH);

int fps = capture.get(CAP_PROP_FPS);

int type = capture.get(CAP_PROP_FOURCC);

printf("height: %d, width: %d, fps: %d", height, width, fps);

VideoWriter writer("D:/test.mp4", type, fps, Size(width, height), true);

while (true) {

char c = waitKey(50);

if (c == 27) {

break;

}

Mat frame;

bool ret = capture.read(frame);

if (!ret) {

break;

}

writer.write(frame);

imshow("frame", frame);

}

capture.release();

writer.release();

destroyAllWindows();

}

45. Color space conversion

Use a color space conversion to key out a green screen.

void Advance::color_space() {

VideoCapture capture("D:/images/01.mp4");

while (true) {

char c = waitKey(50);

if (c == 27) {

break;

}

Mat frame, hsv, lab;

bool ret = capture.read(frame);

if (!ret) {

break;

}

cvtColor(frame, hsv, COLOR_BGR2HSV);

//cvtColor(frame, lab, COLOR_BGR2Lab);

//imshow("hsv", hsv);

//imshow("lab", lab);

Mat mask,dst;

inRange(hsv, Scalar(35, 43, 46), Scalar(77, 255, 255), mask);

//imshow("mask", mask);

bitwise_not(mask, mask);

bitwise_and(frame, frame, dst, mask);

imshow("front", dst);

}

}
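The keying step boils down to a per-pixel test in HSV space. The sketch below approximates the 8-bit BGR-to-HSV convention used above (H stored as degrees divided by 2, S and V in 0 to 255) and applies the same green bounds as the inRange call. `Hsv`, `bgr_to_hsv`, and `is_green_screen` are hypothetical helpers, and the rounding may differ by one unit from what cvtColor produces.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Hsv {
    int h;  // 0..179 (degrees / 2)
    int s;  // 0..255
    int v;  // 0..255
};

// Approximate 8-bit BGR -> HSV conversion.
Hsv bgr_to_hsv(int b, int g, int r) {
    assert(b >= 0 && b <= 255 && g >= 0 && g <= 255 && r >= 0 && r <= 255);
    const int v = std::max({b, g, r});
    const int mn = std::min({b, g, r});
    const int delta = v - mn;
    const int s = (v == 0) ? 0 : static_cast<int>(std::lround(255.0 * delta / v));
    double hue_deg = 0.0;
    if (delta != 0) {
        if (v == r) {
            hue_deg = 60.0 * (g - b) / delta;
        } else if (v == g) {
            hue_deg = 120.0 + 60.0 * (b - r) / delta;
        } else {
            hue_deg = 240.0 + 60.0 * (r - g) / delta;
        }
        if (hue_deg < 0.0) {
            hue_deg += 360.0;
        }
    }
    const int h = static_cast<int>(std::lround(hue_deg / 2.0)) % 180;
    return Hsv{h, s, v};
}

// Same bounds as inRange(hsv, Scalar(35, 43, 46), Scalar(77, 255, 255), mask).
bool is_green_screen(const Hsv& p) {
    return p.h >= 35 && p.h <= 77 && p.s >= 43 && p.v >= 46;
}
```

A pixel is kept as foreground exactly when `is_green_screen` is false, which corresponds to the bitwise_not applied to the mask before the bitwise_and.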

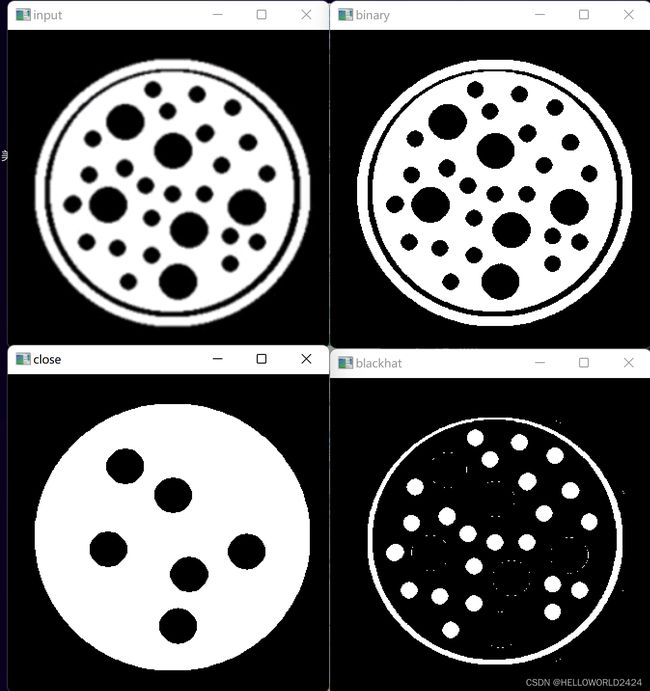

46. Histogram back projection

void Advance::back_project() {

Mat src = imread("D:/images/hand.jpg");

imshow("src", src);

Mat sample = imread("D:/images/shand.png");

imshow("sample", sample);

Mat hsv, sample_hsv;

cvtColor(src, hsv, COLOR_BGR2HSV);

cvtColor(sample, sample_hsv, COLOR_BGR2HSV);

int hbins = 48, s_bins = 48;

int hist_size[] = { hbins, s_bins };

int channels[] = { 0, 1 };

Mat roi_hist;

float h_ranges[] = { 0, 180 };

float s_ranges[] = { 0, 256 };

const float* ranges[] = { h_ranges, s_ranges };

// Build the model histogram from the sample patch, not from the target image

calcHist(&sample_hsv, 1, channels, Mat(), roi_hist, 2, hist_size, ranges, true, false);

normalize(roi_hist, roi_hist, 0, 255, NORM_MINMAX);

MatND backproj;

// Project the sample histogram back onto the target image

calcBackProject(&hsv, 1, channels, roi_hist, backproj, ranges, 1.0);

imshow("back projection demo", backproj);

}

47. Harris corner detection

void Advance::harris_corner() {

VideoCapture capture("D:/images/bike.avi");

while (true) {

if (!capture.isOpened()) {

return;

}

int n = waitKey(50);

if (n == 27) {

break;

}

Mat frame;

bool ret = capture.read(frame);

if (!ret) {

break;

}

Mat gray;

cvtColor(frame, gray, COLOR_BGR2GRAY);

Mat dst;

double k = 0.04;

int block_size = 2;

int ksize = 3;

cornerHarris(gray, dst, block_size, ksize, k);

Mat dst_norm = Mat::zeros(frame.size(), frame.type());

normalize(dst, dst_norm, 0, 255, NORM_MINMAX, -1, Mat());

convertScaleAbs(dst_norm, dst_norm);

RNG rng(143);

for (int row = 0; row < frame.rows; ++row) {

for (int col = 0; col < frame.cols; ++col) {

int rsp = dst_norm.at<uchar>(row, col);

if (rsp > 150) {

int b = rng.uniform(0, 255);

int g = rng.uniform(0, 255);

int r = rng.uniform(0, 255);

circle(frame, Point(col, row), 3, Scalar(b, g, r));

}

}

}

imshow("harris corner", frame);

}

}


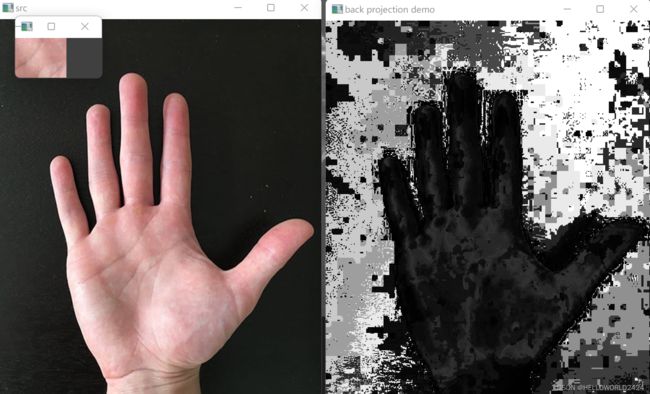

48. Shi-Tomasi corner detection

void Advance::shitomas_corner_detector() {

// Shi-Tomasi corner detection

VideoCapture capture("D:/images/bike.avi");

while (true) {

if (!capture.isOpened()) {

return;

}

int key = waitKey(50);

if (key == 27) {

break;

}

Mat frame;

bool ret = capture.read(frame);

if (!ret) {

break;

}

Mat gray;

cvtColor(frame, gray, COLOR_BGR2GRAY);

vector<Point2f> corners;

RNG rng(123);

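// quality_level = 0.01 rejects corners whose response is below 1% of the strongest response (for example, below 10 when the strongest is 1000)

double quality_level = 0.01;

goodFeaturesToTrack(gray, corners, 50, quality_level, 3, Mat());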

Mat shitomas = frame.clone();

for (size_t i = 0; i < corners.size(); ++i) {

int b = rng.uniform(0, 255);

int g = rng.uniform(0, 255);

int r = rng.uniform(0, 255);

circle(shitomas, corners[i], 3, Scalar(b, g, r));

}

imshow("frame", frame);

imshow("shitomas", shitomas);

}

}

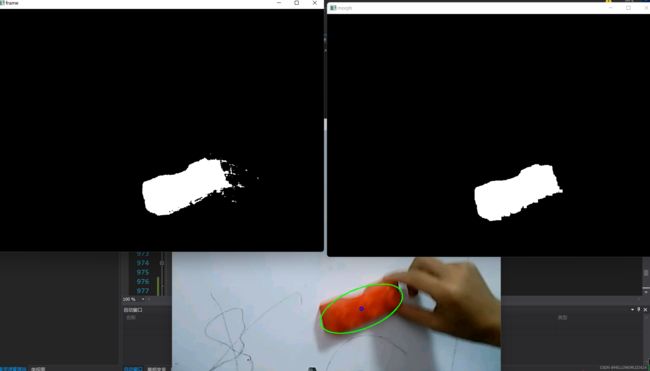

49. Color-based object tracking

Key point of this section: to extract a color, first convert the image to the HSV color space.

void Advance::color_trace() {

VideoCapture capture("D:/images/blackboard.mp4");

if (!capture.isOpened()) {

return;

}

while (true)

{

int key = waitKey(50);

if (key == 27) {

break;

}

Mat frame;

bool ret = capture.read(frame);

if (!ret) {

break;

}

Mat hsv, dst;

cvtColor(frame, hsv, COLOR_BGR2HSV);

// Red range in HSV: H 0-10, S 43-255, V 46-255

inRange(hsv, Scalar(0, 43, 46), Scalar(10, 255, 255), dst);

imshow("frame", dst);

// Morphological opening to remove small specks

Mat morph;

Mat se = getStructuringElement(MORPH_RECT, Size(8, 8));

morphologyEx(dst, morph, MORPH_OPEN, se);

imshow("morph", morph);

// Find contours

vector<vector<Point>> contours;

vector<Vec4i> hirearchy;

findContours(morph, contours, hirearchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

double max_area = 0;

// Signed, so that -1 can mean "no contour found" and the >= 0 check below is meaningful

int contour_index = -1;

for (size_t t = 0; t < contours.size(); ++t) {

double area = contourArea(contours[t]);

if (area > max_area) {

contour_index = t;

max_area = area;

}

}

printf("max area: %.1f\n", max_area);

// Fit a rotated rectangle and draw its inscribed ellipse

if (contour_index >= 0) {

RotatedRect rrt = minAreaRect(contours[contour_index]);

ellipse(frame, rrt, Scalar(0, 255, 0), 2);

circle(frame, rrt.center, 4, Scalar(255, 0, 0), 2);

}

imshow("color_trace", frame);

}

}

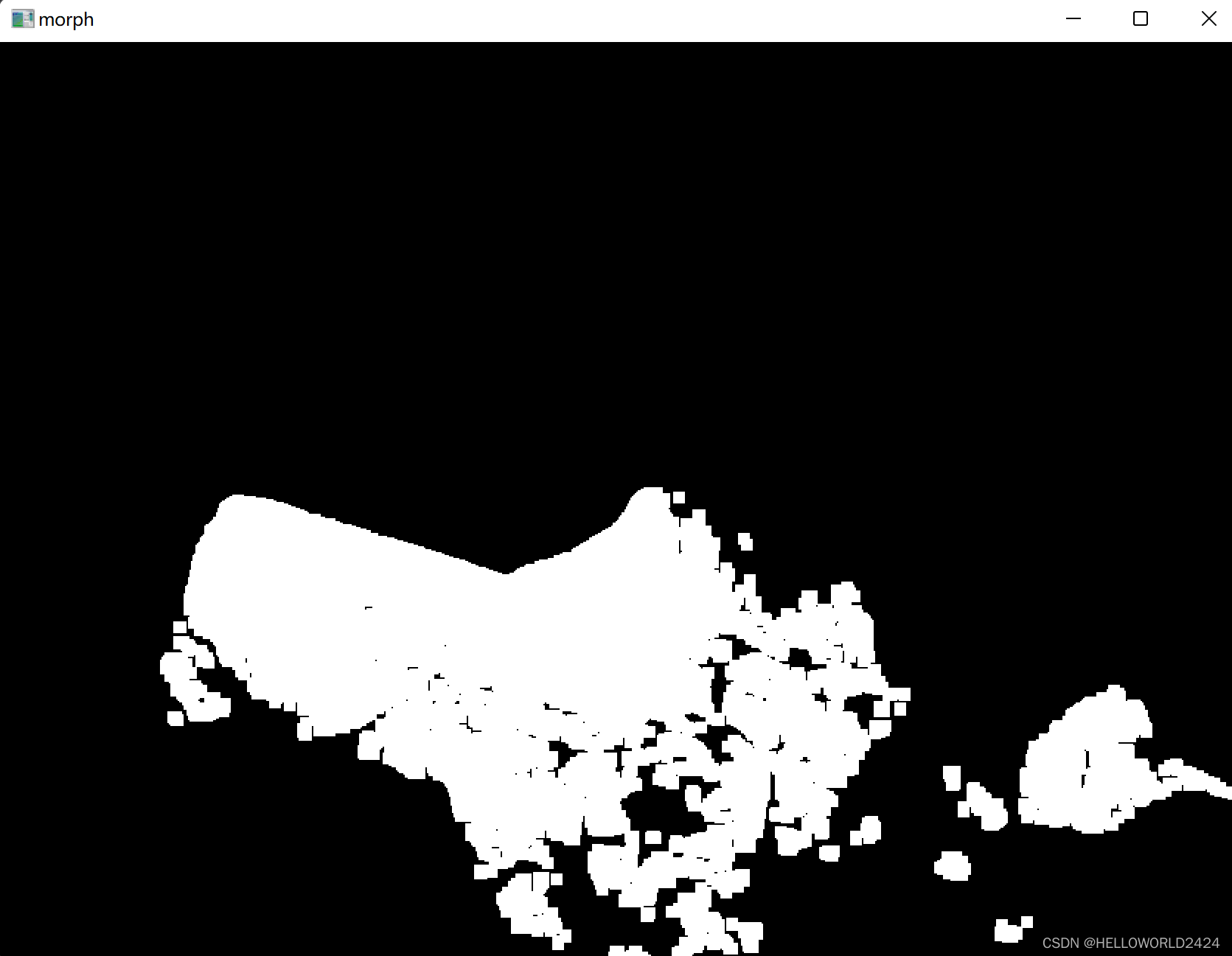

50. Background analysis

void Advance::bg_extraction() {

VideoCapture capture("D:/images/blackboard.mp4");

if (!capture.isOpened()) {

return;

}

auto pMOG2 = createBackgroundSubtractorMOG2(500, 1000, false);

while (true)

{

int key = waitKey(50);

if (key == 27) {

break;

}

Mat frame;

bool ret = capture.read(frame);

if (!ret) {

break;

}

Mat mask, bg_image;

pMOG2->apply(frame, mask);

pMOG2->getBackgroundImage(bg_image);

Mat morph;

Mat kernel = getStructuringElement(MORPH_RECT, Size(8, 8));

morphologyEx(mask, morph, MORPH_OPEN, kernel);

imshow("morph", morph);

imshow("background", bg_image);

}

}

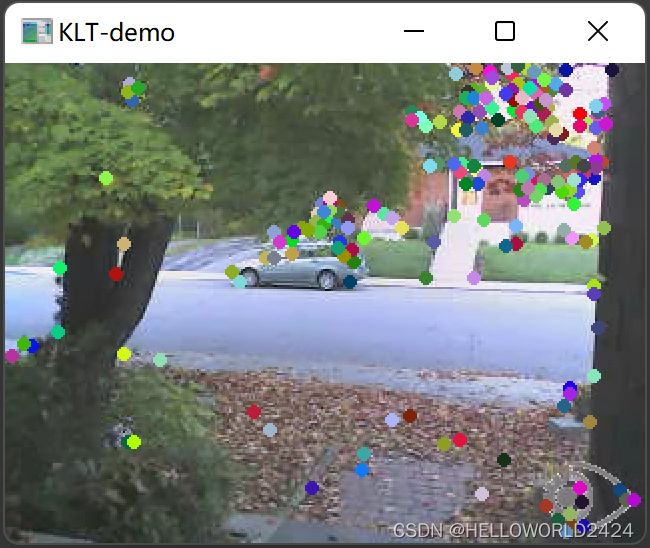

51. Optical flow

void Advance::optical_analysis() {

VideoCapture capture("D:/images/bike.avi");

if (!capture.isOpened()) {

return;

}

namedWindow("frame", WINDOW_AUTOSIZE);

Mat old_frame, old_gray;

capture.read(old_frame);

cvtColor(old_frame, old_gray, COLOR_BGR2GRAY);

vector<Point2f> feature_pts;

double quality_level = 0.01;

// Shi-Tomasi corner detection

goodFeaturesToTrack(old_gray, feature_pts, 200, quality_level, 3, Mat(), 3, false);

vector<uchar> status;

vector<float> err;

Mat frame, gray;

vector<Point2f> pts[2];

pts[0].insert(pts[0].end(), feature_pts.begin(), feature_pts.end());

TermCriteria criteria(TermCriteria::COUNT + TermCriteria::EPS, 10, 0.01);

RNG rng(123);

while (true)

{

int key = waitKey(50);

if (key == 27) {

break;

}

bool ret = capture.read(frame);

if (!ret) {

break;

}


cvtColor(frame, gray, COLOR_BGR2GRAY);

// Optical flow analysis

calcOpticalFlowPyrLK(old_gray, gray, pts[0], pts[1], status, err, Size(21, 21), 3, criteria, 0);

size_t i = 0, k = 0;

for (i = 0; i < pts[1].size(); ++i) {

if (status[i]) {

pts[0][k] = pts[0][i];

pts[1][k++] = pts[1][i];

int b = rng.uniform(0, 255);

int g = rng.uniform(0, 255);

int r = rng.uniform(0, 255);

circle(frame, pts[1][i], 2, Scalar(b, g, r), 2, 8, 0);

line(frame, pts[0][i], pts[1][i], Scalar(b, g, r), 2, 8, 0);

}

}

pts[0].resize(k);

pts[1].resize(k);

imshow("KLT-demo", frame);

std::swap(pts[0], pts[1]);

cv::swap(old_gray, gray);

}

}

55. Video analysis based on mean shift

void Advance::mean_analysis() {

VideoCapture capture("D:/images/blackboard.mp4");

if (!capture.isOpened()) {

printf("could not open the video file...\n");

return;

}

namedWindow("frame", WINDOW_AUTOSIZE);

Mat frame, hsv, hue, mask, hist, backproj;

capture.read(frame);

// true, so the histogram is built from the selected ROI on the first frame

bool init = true;

Rect trackWindow;

int hsize = 16;

float hranges[] = { 0,180 };

const float* ranges = hranges;

Rect selection = selectROI("MeanShift Demo", frame, true, false);

while (true) {

bool ret = capture.read(frame);

if (!ret) {

break;

}

cvtColor(frame, hsv, COLOR_BGR2HSV);

inRange(hsv, Scalar(156, 43, 46), Scalar(180, 255, 255), mask);

int ch[] = { 0, 0 };

hue.create(hsv.size(), hsv.depth());

mixChannels(&hsv, 1, &hue, 1, ch, 1);

if (init) {

Mat roi(hue, selection), maskroi(mask, selection);

calcHist(&roi, 1, 0, maskroi, hist, 1, &hsize, &ranges);

normalize(hist, hist, 0, 255, NORM_MINMAX);

trackWindow = selection;

init = false;

}

// Mean shift

calcBackProject(&hue, 1, 0, hist, backproj, &ranges);

backproj &= mask;

meanShift(backproj, trackWindow, TermCriteria(TermCriteria::COUNT | TermCriteria::EPS, 10, 1));

rectangle(frame, trackWindow, Scalar(0, 0, 255), 3, LINE_AA);

imshow("MeanShift Demo", frame);

char c = waitKey(1);

if (c == 27) {

break;

}

}

}
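The meanShift call above repeatedly moves the tracking window toward the centre of mass of the back-projection values inside it. A minimal sketch of that iteration on a plain weight grid follows; `Window` and `mean_shift` are hypothetical names, and the stopping rule (an iteration limit, or a shift smaller than `eps`) is assumed to mirror the TermCriteria used above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Window {
    int x;
    int y;
    int width;
    int height;
};

// Move win until its centre sits on the weighted centroid of the values it covers.
Window mean_shift(const std::vector<std::vector<double>>& weights,
                  Window win, int max_iter, double eps) {
    const int rows = static_cast<int>(weights.size());
    const int cols = rows > 0 ? static_cast<int>(weights[0].size()) : 0;
    assert(win.width > 0 && win.height > 0);
    assert(win.width <= cols && win.height <= rows);
    for (int it = 0; it < max_iter; ++it) {
        // Keep the window inside the grid before sampling it.
        win.x = std::min(std::max(win.x, 0), cols - win.width);
        win.y = std::min(std::max(win.y, 0), rows - win.height);
        double m00 = 0.0, m10 = 0.0, m01 = 0.0;
        for (int y = win.y; y < win.y + win.height; ++y) {
            for (int x = win.x; x < win.x + win.width; ++x) {
                const double w = weights[y][x];
                m00 += w;
                m10 += w * x;
                m01 += w * y;
            }
        }
        if (m00 <= 0.0) {
            break;  // no evidence inside the window
        }
        const double centre_x = win.x + (win.width - 1) / 2.0;
        const double centre_y = win.y + (win.height - 1) / 2.0;
        const int dx = static_cast<int>(std::lround(m10 / m00 - centre_x));
        const int dy = static_cast<int>(std::lround(m01 / m00 - centre_y));
        win.x += dx;
        win.y += dy;
        if (dx * dx + dy * dy < eps * eps) {
            break;  // converged
        }
    }
    win.x = std::min(std::max(win.x, 0), cols - win.width);
    win.y = std::min(std::max(win.y, 0), rows - win.height);
    return win;
}
```

This also shows why the histogram must be initialized before the first call: with an all-zero back projection the window has nothing to move toward.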