NNDL Experiment 6

Convolutional Neural Network (CNN)

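- Proposed under inspiration from the receptive-field mechanism in biology.

- Generally a feedforward neural network built by interleaving convolutional layers, pooling layers, and fully connected layers.

- Has three structural properties: local connectivity, weight sharing, and pooling.

- Has a certain degree of invariance to translation, scaling, and rotation.

- Has fewer parameters than a fully connected feedforward network.

- Mainly applied to image and video analysis tasks, where its accuracy generally far exceeds that of other neural network models.

- In recent years, convolutional neural networks have also been widely applied to natural language processing, recommender systems, and other fields.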

5.1 Convolution

5.1.1 Two-Dimensional Convolution Operation

5.1.2 Two-Dimensional Convolution Operator

import torch
import torch.nn as nn


class Conv2D(nn.Module):
    def __init__(self, weight_attr=torch.tensor([[0., 1.], [2., 3.]])):
        super(Conv2D, self).__init__()
        # Register the kernel as a learnable parameter via torch.nn.Parameter
        self.weight = torch.nn.Parameter(weight_attr)

    def forward(self, X):
        """
        Input:
            - X: input matrices, shape=[B, M, N], where B is the number of samples
        Output:
            - output: output matrices, shape=[B, M - U + 1, N - V + 1]
        """
        u, v = self.weight.shape
        output = torch.zeros([X.shape[0], X.shape[1] - u + 1, X.shape[2] - v + 1])
        # Slide the kernel over every valid position (no kernel flipping, i.e. cross-correlation)
        for i in range(output.shape[1]):
            for j in range(output.shape[2]):
                output[:, i, j] = torch.sum(X[:, i:i + u, j:j + v] * self.weight, dim=[1, 2])
        return output


# Build a fixed 3x3 input matrix (the seed has no effect here because nothing is sampled)
torch.random.manual_seed(100)
inputs = torch.tensor([[[1., 2., 3.], [4., 5., 6.], [7., 8., 9.]]])
conv2d = Conv2D()
outputs = conv2d(inputs)
print("input: {}, \noutput: {}".format(inputs, outputs))

input: tensor([[[1., 2., 3.],

[4., 5., 6.],

[7., 8., 9.]]]),

output: tensor([[[25., 31.],

[43., 49.]]], grad_fn=<CopySlices>)
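As a sanity check, the same numbers can be reproduced with PyTorch's built-in `torch.nn.functional.conv2d`. This is a minimal sketch, assuming a single input channel and a single output channel; the variable names `x`, `w`, and `out` are chosen here for illustration and are not part of the original code.

```python
import torch
import torch.nn.functional as F

# Same input and kernel as the hand-written operator
x = torch.tensor([[[1., 2., 3.], [4., 5., 6.], [7., 8., 9.]]])  # shape [B, M, N]
w = torch.tensor([[0., 1.], [2., 3.]])                          # shape [U, V]

# F.conv2d expects input [B, C_in, H, W] and weight [C_out, C_in, kH, kW],
# so a channel axis is added to both and removed from the result.
out = F.conv2d(x.unsqueeze(1), w.view(1, 1, 2, 2)).squeeze(1)
print(out)
```

Like the loop-based operator, `F.conv2d` does not flip the kernel, so both compute cross-correlation and the values should agree.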

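5.1.3 Parameter Count and Computational Cost of Two-Dimensional Convolution

As the number of hidden-layer neurons grows and the network gets deeper, the parameter count rises sharply when a fully connected feedforward network is used to process image data.

When convolution is used for image processing instead, the parameter count is far smaller than that of a fully connected feedforward network.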
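To make the gap concrete, the following sketch counts parameters and multiplications for one layer. It assumes a single channel, stride 1, no padding, and no bias terms; the helper name `conv_vs_fc` and the 32x32 example size are illustrative choices, not taken from the original.

```python
def conv_vs_fc(m, n, u, v):
    """Compare a U x V convolution with a fully connected layer on an M x N input.

    Assumes one channel, stride 1, no padding, and no bias.
    """
    out_h, out_w = m - u + 1, n - v + 1        # output size of the convolution
    return {
        "output_shape": (out_h, out_w),
        "conv_params": u * v,                   # one kernel shared by all positions
        "fc_params": (m * n) * (out_h * out_w), # one weight per input-output pair
        "conv_mults": out_h * out_w * u * v,    # multiplications done by the convolution
    }


stats = conv_vs_fc(32, 32, 3, 3)
print(stats)
```

For a 32x32 input and a 3x3 kernel, the convolution needs 9 parameters, while a fully connected layer producing the same 30x30 output would need 921,600.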

5.1.4 Receptive Field

5.1.5 Variants of Convolution

5.1.5.1 Stride

5.1.5.2 Zero Padding

A note on these variants
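Stride and zero padding together determine the output size. Along one axis, an input of size M with kernel size U, stride S, and padding P on each side gives an output of size floor((M + 2P - U) / S) + 1. The sketch below evaluates that formula; the function name `conv_output_size` is a hypothetical helper introduced for illustration.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Output length along one axis for a strided, zero-padded convolution."""
    return (size + 2 * padding - kernel) // stride + 1


# An 8x8 input with a 3x3 kernel and padding 1
print(conv_output_size(8, 3, stride=1, padding=1))  # 8: the size is preserved
print(conv_output_size(8, 3, stride=2, padding=1))  # 4: the size is halved
```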

5.1.6 Two-Dimensional Convolution Operator with Stride and Zero Padding

import torch
import torch.nn as nn


class Conv2D(nn.Module):
    def __init__(self, kernel_size, stride=1, padding=0, weight_attr=False):
        super(Conv2D, self).__init__()
        # Fall back to an all-ones kernel when no initial weight tensor is supplied
        if isinstance(weight_attr, bool):
            weight_attr = torch.ones(size=(kernel_size, kernel_size))
        self.weight = torch.nn.Parameter(weight_attr)
        # Stride
        self.stride = stride
        # Zero padding
        self.padding = padding

    def forward(self, X):
        # Zero padding: copy X into the center of a larger all-zero tensor
        new_X = torch.zeros([X.shape[0], X.shape[1] + 2 * self.padding, X.shape[2] + 2 * self.padding])
        new_X[:, self.padding:X.shape[1] + self.padding, self.padding:X.shape[2] + self.padding] = X
        u, v = self.weight.shape
        # Output size along each axis: (padded size - kernel size) // stride + 1
        output_h = (new_X.shape[1] - u) // self.stride + 1
        output_w = (new_X.shape[2] - v) // self.stride + 1
        output = torch.zeros([X.shape[0], output_h, output_w])
        for i in range(0, output.shape[1]):
            for j in range(0, output.shape[2]):
                output[:, i, j] = torch.sum(
                    new_X[:, self.stride * i:self.stride * i + u, self.stride * j:self.stride * j + v] * self.weight,
                    dim=[1, 2])
        return output


inputs = torch.randn(size=[2, 8, 8])
conv2d_padding = Conv2D(kernel_size=3, padding=1, weight_attr=torch.zeros((3, 3)))
outputs = conv2d_padding(inputs)
print(
    "When kernel_size=3, padding=1 stride=1, input's shape: {}, output's shape: {}".format(inputs.shape, outputs.shape))
conv2d_stride = Conv2D(kernel_size=3, stride=2, padding=1)
outputs = conv2d_stride(inputs)
print(
    "When kernel_size=3, padding=1 stride=2, input's shape: {}, output's shape: {}".format(inputs.shape, outputs.shape))

When kernel_size=3, padding=1 stride=1, input's shape: torch.Size([2, 8, 8]), output's shape: torch.Size([2, 8, 8])

When kernel_size=3, padding=1 stride=2, input's shape: torch.Size([2, 8, 8]), output's shape: torch.Size([2, 4, 4])

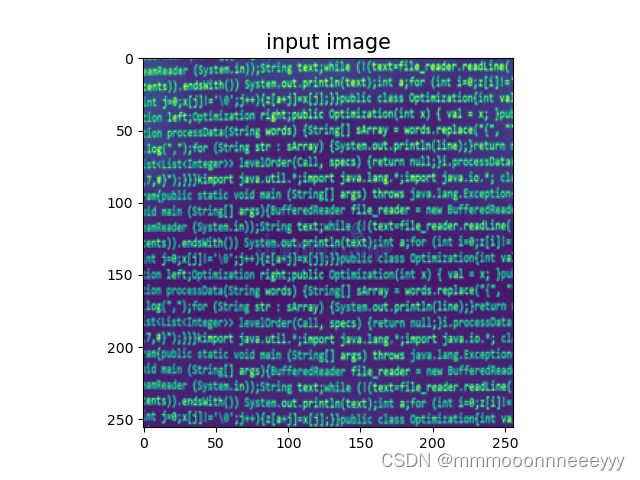

5.1.7 Image Edge Detection with the Convolution Operation

[Image edge detection implemented in PyTorch]

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn
from PIL import Image


class Conv2D(nn.Module):
    def __init__(self, kernel_size, stride=1, padding=0, weight_attr=False):
        super(Conv2D, self).__init__()
        # Fall back to an all-ones kernel when no initial weight tensor is supplied
        if isinstance(weight_attr, bool):
            weight_attr = torch.ones(size=(kernel_size, kernel_size))
        self.weight = torch.nn.Parameter(weight_attr)
        # Stride
        self.stride = stride
        # Zero padding
        self.padding = padding

    def forward(self, X):
        # Zero padding on the last two dimensions, using the configured width
        new_X = nn.ZeroPad2d(self.padding)(X)
        u, v = self.weight.shape
        output_h = (new_X.shape[1] - u) // self.stride + 1
        output_w = (new_X.shape[2] - v) // self.stride + 1
        output = torch.zeros([X.shape[0], output_h, output_w])
        for i in range(0, output.shape[1]):
            for j in range(0, output.shape[2]):
                output[:, i, j] = torch.sum(
                    new_X[:, self.stride * i:self.stride * i + u, self.stride * j:self.stride * j + v] * self.weight,
                    dim=[1, 2])
        return output


def main():
    # Read the image, convert it to grayscale, and resize it to 256x256
    img = Image.open('number.jpg').convert('L').resize((256, 256))
    # Kernel weights: 8 at the center and -1 elsewhere, so they sum to zero
    w = np.array([[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]], dtype='float32')
    # Create a 3x3 convolution operator initialized with the weights above.
    # padding=1 matches the one-pixel zero padding that the original forward() hard-coded.
    conv = Conv2D(kernel_size=3, stride=1, padding=1, weight_attr=torch.tensor(w))
    # Convert the image to a float32 numpy.ndarray
    inputs = np.array(img).astype('float32')
    print("bf to_tensor, inputs:", inputs)
    # Convert the array to a Tensor
    inputs = torch.tensor(inputs)
    print("bf unsqueeze, inputs:", inputs)
    # Add the batch dimension: [M, N] -> [1, M, N]
    inputs = torch.unsqueeze(inputs, dim=0)
    print("af unsqueeze, inputs:", inputs)
    outputs = conv(inputs)
    print(outputs)
    # Drop the batch dimension and detach from the autograd graph before plotting
    outputs = outputs.squeeze()
    outputs = outputs.detach().numpy()
    # Visualize the results
    plt.imshow(img, cmap='gray')
    plt.title('input image', fontsize=15)
    plt.show()
    plt.imshow(outputs, cmap='gray')
    plt.title('output feature map', fontsize=15)
    plt.show()


if __name__ == "__main__":
    main()

bf to_tensor, inputs: [[159. 145. 175. ... 46. 47. 49.]

[210. 217. 119. ... 47. 45. 48.]

[ 90. 95. 68. ... 62. 43. 47.]

...

[ 28. 28. 26. ... 63. 50. 40.]

[ 27. 27. 27. ... 28. 28. 31.]

[ 27. 27. 24. ... 32. 31. 32.]]

bf unsqueeze, inputs: tensor([[159., 145., 175., ..., 46., 47., 49.],

[210., 217., 119., ..., 47., 45., 48.],

[ 90., 95., 68., ..., 62., 43., 47.],

...,

[ 28., 28., 26., ..., 63., 50., 40.],

[ 27., 27., 27., ..., 28., 28., 31.],

[ 27., 27., 24., ..., 32., 31., 32.]])

af unsqueeze, inputs: tensor([[[159., 145., 175., ..., 46., 47., 49.],

[210., 217., 119., ..., 47., 45., 48.],

[ 90., 95., 68., ..., 62., 43., 47.],

...,

[ 28., 28., 26., ..., 63., 50., 40.],

[ 27., 27., 27., ..., 28., 28., 31.],

[ 27., 27., 24., ..., 32., 31., 32.]]])

tensor([[[ 700., 280., 665., ..., 124., 141., 252.],

[ 974., 675., -83., ..., -122., -29., 153.],

[ 68., -142., -329., ..., -128., -55., 148.],

...,

[ 96., 18., 0., ..., -297., -312., -86.],

[ 79., 2., -73., ..., -93., -83., 67.],

[ 135., 84., -20., ..., 109., 97., 166.]]],

grad_fn=<CopySlices>)
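Why this kernel highlights edges can be seen on a tiny synthetic image. Its weights sum to zero, so flat regions produce a response of 0 and only positions where the intensity changes give non-zero values. The sketch below uses `torch.nn.functional.conv2d` without padding on a made-up 6x6 step image; the names `image`, `kernel`, and `response` are illustrative and not part of the original code.

```python
import torch
import torch.nn.functional as F

# Synthetic 6x6 image: left half dark (0), right half bright (1)
image = torch.zeros(6, 6)
image[:, 3:] = 1.0

# Same edge-detection kernel as above: 8 at the center, -1 elsewhere
kernel = torch.tensor([[-1., -1., -1.], [-1., 8., -1.], [-1., -1., -1.]])

# F.conv2d expects input [B, C_in, H, W] and weight [C_out, C_in, kH, kW]
response = F.conv2d(image.view(1, 1, 6, 6), kernel.view(1, 1, 3, 3)).view(4, 4)
print(response)
```

Each row of `response` is `[0, -3, 3, 0]`: zero inside the flat regions and non-zero on either side of the vertical step.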

P.S. The above comes from the blog of 不是蒋承翰.

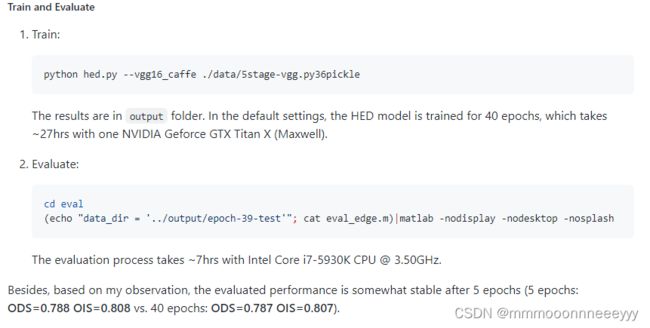

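Reproducing the paper Holistically-Nested Edge Detection, published at ICCV 2015

It is an end-to-end edge detection model based on deep learning.

Although the dataset loaded successfully, my meager GPU memory tells me this is a model to look at rather than train.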

RuntimeError: CUDA out of memory. Tried to allocate 14.00 MiB (GPU 0; 1024.00 MiB total capacity; 459.71 MiB already allocated; 4.11 MiB free; 26.29 MiB cached)

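The training time is absurd even with a good GPU, so I will skip training it myself. I just saw that someone has released a pretrained model, so next I will see whether I can use it.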

https://github.com/meteorshowers/hed