[Building Your Own Object Detection Environment with Windows + TensorFlow 1.15.0]

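1. Goal

I have been working on automated testing lately. Functional-test assertions cannot cover everything, API tests sometimes depend on lower layers that need the UI and cannot be fully simulated, and some features still have to be judged by a person looking at a screenshot. So I wanted to introduce image detection to check whether a screenshot matches the expected behavior, covering the cases that assertions cannot handle.

After reading a lot of reviews online, I chose Google's TensorFlow. It was released in 2015 and has gone through many iterations since; the latest releases are all 2.x. The new versions support many new features but also came with more bugs, so I went with the relatively stable 1.15.0.

My test hardware is limited. Normally one would pick tensorflow-gpu, since on a machine with a discrete GPU it can run tens of times faster than the CPU build, but you cannot cook without rice. Our detection volume is small anyway; the heavy part is training our own model, which will simply have to run for much longer.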

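2. Environment

Compute workloads like this are normally set up on Linux, which tends to perform better because Windows itself uses more system resources. But most of our tests run on Windows and the screenshots are taken there too, so for better compatibility I set everything up on Windows.

First check the supported versions. The OS, Python, and TensorFlow versions have to match strictly; see:

https://tensorflow.google.cn/install/source

So we pick the latest 1.x release, with the following environment: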

| Component | Version |
|---|---|
| OS | Windows 10/11 |
| Python | 3.7.9 |
| TensorFlow | 1.15.0 |
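A quick check of the interpreter before installing anything can save a failed pip run. The sketch below assumes, based on the compatibility table linked above, that the Windows wheels for TensorFlow 1.15.0 target 64-bit Python 3.5 through 3.7. The helper name is_supported_python is made up for illustration.

```python
import struct
import sys

# Assumption taken from the TensorFlow compatibility table:
# the 1.15.0 Windows wheels are built for 64-bit Python 3.5 through 3.7.
MIN_PY = (3, 5)
MAX_PY = (3, 7)


def is_supported_python(version_info, pointer_bits):
    """Return True if (major, minor) is in range and the interpreter is 64-bit."""
    major_minor = tuple(version_info[:2])
    return MIN_PY <= major_minor <= MAX_PY and pointer_bits == 64


bits = struct.calcsize("P") * 8  # pointer size in bits: 32 or 64
supported = is_supported_python(sys.version_info, bits)
print("Python {} ({}-bit): {}".format(
    sys.version.split()[0], bits,
    "OK for TensorFlow 1.15.0" if supported else "not covered by TensorFlow 1.15.0"))
```

Running it with the interpreter that PyCharm uses shows right away whether the wrong Python version was picked up.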

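3. Configuring the Python environment

Step 1: Download Python 3.7.9

Download version 3.7.9 from the official Python site: https://www.python.org/downloads/release/python-379/ and choose Windows x86-64 executable installer.

Run the installer as usual and remember to tick the option that adds Python to the PATH environment variable, which makes installing dependencies later much easier. Keep in mind that if several Python versions are installed on the same Windows machine, you need to switch to the right one.

Step 2: Configure PyCharm

Install the latest version from the official site. Anyone who has used PyCharm knows that a Python project normally gets its own virtual environment, created with conda, pipenv, or similar. Python has long since moved on to 3.10+, and I only use 3.7 here to practice TensorFlow, so I took the laziest route and used the base Python installation directly.

Step 3: Install TensorFlow

Open a terminal in PyCharm and make sure the Python 3.7.9 installation directory and its Scripts directory are in the system PATH; otherwise the pip command will not be recognized.

Then enter the following command: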

pip install tensorflow==1.15.0

If the download is very slow, use the Tsinghua mirror:

pip install tensorflow==1.15.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

Once the installation finishes, check that TensorFlow runs properly:

import tensorflow as tf

# Print the TensorFlow version

print(tf.__version__)

# Output:

2022-11-17 10:11:35.020549: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'cudart64_100.dll'; dlerror: cudart64_100.dll not found

2022-11-17 10:11:35.020886: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

1.15.0

The two messages appear before the version number because TensorFlow first tries to load the GPU (CUDA) libraries. The test machine has no GPU, so TensorFlow skips them and falls back to the CPU. They are a warning (W) and an info message (I) rather than errors, and can be ignored.
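If these log lines are distracting on a CPU-only machine, TensorFlow's native logging can be turned down with the TF_CPP_MIN_LOG_LEVEL environment variable, as long as it is set before the import. A minimal sketch; the try/except is only there so the snippet also runs where TensorFlow is not installed.

```python
import os

# "2" hides INFO and WARNING messages from TensorFlow's native (C++) logging.
# It has to be set before tensorflow is imported.
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

try:
    import tensorflow as tf
    print("TensorFlow", tf.__version__)
except ImportError:
    print("TensorFlow is not installed in this interpreter")
```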

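Step 4: Download the Object Detection API

Clone it directly from GitHub:

git clone https://github.com/tensorflow/models.git

If git is not installed, open the repository page and download the zip archive:

https://github.com/tensorflow/models

After downloading, unzip it and copy it into the PyCharm project created earlier.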

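Step 5: Download and configure Protobuf

Protobuf, short for Google Protocol Buffers, is Google's language-neutral data interchange format. It provides lightweight, efficient storage of structured data.

First install the Python protobuf library:

pip install protobuf==3.20.0

Then download a Windows build from GitHub: https://github.com/google/protobuf/releases and choose the win32 or win64 package.

After downloading, copy protoc.exe from the bin directory into the research directory of the Object Detection API (models) checkout.

Next, convert all .proto files under models\research\object_detection\protos into Python files.

Open a terminal, change to the research directory, and run:

protoc.exe object_detection\protos\*.proto --python_out=.

If the command finishes without errors, many Python files are generated in the protos directory and the conversion succeeded.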

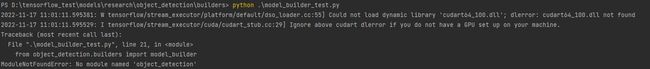
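With some protoc releases on Windows the *.proto wildcard is not expanded and the command fails with a "No such file or directory" message. In that case the files can be compiled one at a time. The following is a sketch of that workaround, assuming protoc.exe sits in the research directory as described above; the function names are illustrative and not part of any library.

```python
import glob
import os
import subprocess


def build_protoc_commands(research_dir, protoc=None):
    """Return one protoc command (as an argument list) per .proto file."""
    if protoc is None:
        # Assumption: protoc.exe was copied into the research directory.
        protoc = os.path.join(os.path.abspath(research_dir), "protoc.exe")
    pattern = os.path.join(research_dir, "object_detection", "protos", "*.proto")
    commands = []
    for proto_path in sorted(glob.glob(pattern)):
        # Paths are given relative to research_dir, where protoc is run.
        relative = os.path.relpath(proto_path, research_dir)
        commands.append([protoc, relative, "--python_out=."])
    return commands


def compile_protos(research_dir, protoc=None):
    """Run protoc for every .proto file, stopping at the first failure."""
    for command in build_protoc_commands(research_dir, protoc):
        subprocess.run(command, cwd=research_dir, check=True)


# Example call, using the checkout location from this post:
# compile_protos(r"D:\tensorflow_test\models\research")
```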

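Step 6: Test the TensorFlow Object Detection API

Open a terminal and change to the research\object_detection\builders directory.

Running python model_builder_test.py fails at first with the error No module named 'object_detection'.

This happens because the object_detection package is not on the Python search path, so the interpreter cannot find the module. It has to be made visible through the site-packages directory of the Python installation, either by copying it there or by adding a tensorflow_model.pth file. Here we create tensorflow_model.pth in site-packages with the following content:

D:\tensorflow_test\models\research

D:\tensorflow_test\models\research\slim

Then run the test again; if it prints OK, the Object Detection API environment is working.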
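The .pth file can also be created from Python instead of by hand. The sketch below writes one directory per line, which is the format the interpreter reads from site-packages at startup. The helper name write_pth_file is hypothetical, and the paths in the example call are the ones used in this post, so adjust them to the local checkout.

```python
import os


def write_pth_file(site_packages_dir, extra_dirs, name="tensorflow_model.pth"):
    """Write a .pth file listing one directory per line and return its path."""
    pth_path = os.path.join(site_packages_dir, name)
    with open(pth_path, "w") as handle:
        handle.write("\n".join(extra_dirs) + "\n")
    return pth_path


# Example call (commented out because it writes into a real site-packages
# directory; pass the one belonging to the Python 3.7.9 installation):
# write_pth_file(
#     site_packages_dir,
#     [r"D:\tensorflow_test\models\research",
#      r"D:\tensorflow_test\models\research\slim"],
# )
```

After restarting the interpreter, import object_detection should succeed from any working directory.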

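Step 7: Download the SSD_Mobilenet_V1 network

Open a terminal and download SSD MobileNet with curl:

curl -O http://download.tensorflow.org/models/object_detection/ssd_mobilenet_v1_coco_2018_01_28.tar.gz

![Windows + TensorFlow 1.15.0 object detection environment, image 8](http://img.e-com-net.com/image/info8/b60f8924609f4f4293fb4a582d03a006.jpg)

After the download, extract the archive with 7z to get frozen_inference_graph.pb and copy that file into the object_detection directory (I have two of them here). Note that the script in Method 2 below loads it from ssd_mobilenet_v1_coco_2018_01_28/frozen_inference_graph.pb, relative to object_detection, so keep the extracted folder there as well.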
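If 7-Zip is not at hand, the archive can also be unpacked with Python's standard tarfile module. This is a sketch that mirrors the commented-out download code in the tutorial script; extract_frozen_graph is an illustrative helper, not part of the Object Detection API.

```python
import os
import tarfile


def extract_frozen_graph(archive_path, dest_dir):
    """Extract frozen_inference_graph.pb from a model .tar.gz into dest_dir.

    The folder structure inside the archive is kept, so the file ends up at
    dest_dir/<model name>/frozen_inference_graph.pb. Returns that path, or
    None if the archive contains no such file.
    """
    with tarfile.open(archive_path, "r:gz") as archive:
        for member in archive.getmembers():
            if os.path.basename(member.name) == "frozen_inference_graph.pb":
                archive.extract(member, dest_dir)
                return os.path.join(dest_dir, *member.name.split("/"))
    return None


# Example call from inside the object_detection directory:
# extract_frozen_graph("ssd_mobilenet_v1_coco_2018_01_28.tar.gz", ".")
```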

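Step 8: Test the model that ships with TensorFlow

Testing the bundled model means running object_detection_tutorial.ipynb; you can swap in your own images.

Method 1: Use Jupyter

Jupyter is a web application for creating and sharing documents that combine live code, visualizations, and more.

First install some dependencies:

jupyter
numpy
lxml
matplotlib
opencv-python
Pillow

Put these package names into a requirements.txt, one per line, then run pip install -r requirements.txt and wait for the installation to finish.

Open a terminal, change to the research\object_detection directory, and run jupyter notebook.

The browser opens automatically; check that the default path is object_detection.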

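Double-click object_detection_tutorial.ipynb and make the following changes, otherwise it will not run:

Step 1: Comment out the TensorFlow version check, since we are using 1.15.0

Step 2: Comment out the code that downloads the model, since we already downloaded it manually

Step 3: Comment out the entire Download Model section

Step 4: Use your own images

Images live in the test_images directory and are named image*.jpg. By default the notebook loops over 2 images; you can add more and adjust the range accordingly.

Step 5: Run it by clicking Cell --> Run All

Step 6: Check the results

If the run above does not display any images, first run the following cell, then re-run the last cell:

# This is needed to display the images.

%matplotlib inline

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

Step 7: Replace the images with your own and run again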
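Instead of editing the range every time images are added, the list of test images can be built from whatever is in the folder. A small sketch, assuming the images keep the image*.jpg naming used by the notebook; collect_test_images is a hypothetical helper.

```python
import glob
import os
import re


def collect_test_images(images_dir, pattern="image*.jpg"):
    """Return matching image paths sorted by the number in the file name."""
    def numeric_key(path):
        name = os.path.basename(path)
        digits = re.findall(r"\d+", name)
        # Files without a number sort first; ties fall back to the name.
        return (int(digits[0]) if digits else -1, name)

    return sorted(glob.glob(os.path.join(images_dir, pattern)), key=numeric_key)


# In the notebook this would replace the range-based list:
# TEST_IMAGE_PATHS = collect_test_images(PATH_TO_TEST_IMAGES_DIR)
```

Sorting by the number rather than by the raw string keeps image10.jpg after image2.jpg.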

Method 2: Convert object_detection_tutorial.ipynb into a Python script yourself

import numpy as np
import os, time
import six.moves.urllib as urllib
import sys
import tarfile
import tensorflow as tf
import zipfile

from distutils.version import StrictVersion
from collections import defaultdict
from io import StringIO

import matplotlib
# matplotlib.use('nbAgg')
import matplotlib.pyplot as plt
from PIL import Image

# All three helpers are imported through the object_detection package,
# which is importable thanks to the .pth file created in step 6.
from object_detection.utils import ops as utils_ops
from object_detection.utils import label_map_util
from object_detection.utils import visualization_utils as vis_util

# Raise an error if the installed TensorFlow is older than 1.14
if StrictVersion(tf.__version__) < StrictVersion('1.14.0'):
    raise ImportError('Please upgrade your TensorFlow installation to v1.14.* or later!')

"""
=================
Env setup
=================
"""

# Directory where the annotated images are written
base_path = os.path.abspath(os.path.dirname(__file__))
output_img_dic = os.path.join(base_path, 'output_images')

# What model to download.
MODEL_NAME = 'ssd_mobilenet_v1_coco_2018_01_28'
# MODEL_FILE = MODEL_NAME + '.tar.gz'
# DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

# Path to frozen detection graph. This is the actual model that is used for the object detection.
PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.
PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')

NUM_CLASSES = 90

"""
===================
Download Model
===================
"""
# opener = urllib.request.URLopener()
# opener.retrieve(DOWNLOAD_BASE + MODEL_FILE, MODEL_FILE)
# tar_file = tarfile.open(MODEL_FILE)
# for file in tar_file.getmembers():
#     file_name = os.path.basename(file.name)
#     if 'frozen_inference_graph.pb' in file_name:
#         tar_file.extract(file, os.getcwd())

"""
Load a (frozen) Tensorflow model into memory.
"""

detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

# Map the numeric class ids predicted by the model to readable names
label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
categories = label_map_util.convert_label_map_to_categories(label_map, max_num_classes=NUM_CLASSES, use_display_name=True)
category_index = label_map_util.create_category_index(categories)


def load_image_into_numpy_array(image):
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)


# For the sake of simplicity we will use only 2 images:
# image1.jpg
# image2.jpg
# If you want to test the code with your images, just add path to the images to the TEST_IMAGE_PATHS.
PATH_TO_TEST_IMAGES_DIR = 'test_images'
TEST_IMAGE_PATHS = [os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(1, 3)]

# Size, in inches, of the output images.
IMAGE_SIZE = (12, 8)

def run_inference_for_single_image(image, graph):
    with graph.as_default():
        with tf.Session() as sess:
            # Get handles to input and output tensors
            ops = tf.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in [
                'num_detections', 'detection_boxes', 'detection_scores',
                'detection_classes', 'detection_masks'
            ]:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(
                        tensor_name)
            if 'detection_masks' in tensor_dict:
                # The following processing is only for single image
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
                detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.5), tf.uint8)
                # Follow the convention by adding back the batch dimension
                tensor_dict['detection_masks'] = tf.expand_dims(
                    detection_masks_reframed, 0)
            image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

            # Run inference
            output_dict = sess.run(tensor_dict,
                                   feed_dict={image_tensor: np.expand_dims(image, 0)})

            # all outputs are float32 numpy arrays, so convert types as appropriate
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict[
                'detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict

for image_path in TEST_IMAGE_PATHS:
    image = Image.open(image_path)
    # the array based representation of the image will be used later in order to prepare the
    # result image with boxes and labels on it.
    image_np = load_image_into_numpy_array(image)
    # Expand dimensions since the model expects images to have shape: [1, None, None, 3]
    image_np_expanded = np.expand_dims(image_np, axis=0)
    # Actual detection.
    output_dict = run_inference_for_single_image(image_np, detection_graph)
    # Visualization of the results of a detection.
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_np,
        output_dict['detection_boxes'],
        output_dict['detection_classes'],
        output_dict['detection_scores'],
        category_index,
        instance_masks=output_dict.get('detection_masks'),
        use_normalized_coordinates=True,
        line_thickness=8)
    plt.figure(figsize=IMAGE_SIZE)
    # print(1,image_np)
    plt.imshow(image_np)
    # plt.show()  # had no effect here even with matplotlib installed, so the figure is saved instead

    # Create the output directory if it does not exist
    if not os.path.exists(output_img_dic):
        os.mkdir(output_img_dic)
    now_time = time.strftime('%Y-%m-%d %H-%M-%S', time.localtime())
    output_img_path = os.path.join(output_img_dic, str(now_time) + ".png")
    plt.savefig(output_img_path)

After the run, an output_images folder is created under object_detection.
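One caveat about the file names: the timestamp only has one-second resolution, so two images that finish within the same second would overwrite each other. A possible variation, sketched here with a hypothetical helper make_output_path, is to put the source image name into the output name as well.

```python
import os
import time


def make_output_path(output_dir, image_path, now=None):
    """Build an output file name from the source image name and a timestamp."""
    stamp = time.strftime("%Y-%m-%d %H-%M-%S", now or time.localtime())
    stem = os.path.splitext(os.path.basename(image_path))[0]
    return os.path.join(output_dir, "{}_{}.png".format(stem, stamp))


# In the script this would replace the two lines that build output_img_path:
# output_img_path = make_output_path(output_img_dic, image_path)
```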

With that, the local model setup is complete.
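Coming back to the goal from section 1, the detection result can be turned into an assertion for an automated test. The sketch below assumes the output_dict and category_index structures produced by the script above; detected_labels and the 0.5 score threshold are illustrative choices, not part of the Object Detection API, and the sample data is hand-written rather than real model output.

```python
def detected_labels(output_dict, category_index, min_score=0.5):
    """Return the set of class names detected with at least min_score."""
    labels = set()
    pairs = zip(output_dict["detection_classes"], output_dict["detection_scores"])
    for class_id, score in pairs:
        if score >= min_score:
            # Unknown ids stay visible instead of being dropped silently.
            entry = category_index.get(int(class_id), {})
            labels.add(entry.get("name", "id_{}".format(int(class_id))))
    return labels


# Hand-written stand-in data in the same shape as the script's results.
sample_output = {
    "detection_classes": [18, 1, 3],
    "detection_scores": [0.94, 0.61, 0.12],
}
sample_index = {
    1: {"id": 1, "name": "person"},
    3: {"id": 3, "name": "car"},
    18: {"id": 18, "name": "dog"},
}

# The kind of check a screenshot test could make:
assert "dog" in detected_labels(sample_output, sample_index)
assert "car" not in detected_labels(sample_output, sample_index)
```

For real screenshots the bundled COCO classes will rarely match UI elements, which is why training a custom model is the next step.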

For reference, here are the packages installed in the Python environment:

| Package | Version |
|---|---|
| Jinja2 | 3.1.2 |
| Keras-Applications | 1.0.8 |
| Keras-Preprocessing | 1.1.2 |
| Markdown | 3.4.1 |
| MarkupSafe | 2.1.1 |
| Pillow | 9.3.0 |
| PyQt5 | 5.8.1 |
| Pygments | 2.13.0 |
| QtPy | 2.3.0 |
| Send2Trash | 1.8.0 |
| Werkzeug | 2.2.2 |
| absl-py | 1.3.0 |
| anyio | 3.6.2 |
| argon2-cffi | 21.3.0 |
| argon2-cffi-bindings | 21.2.0 |
| astor | 0.8.1 |
| attrs | 22.1.0 |
| backcall | 0.2.0 |
| beautifulsoup4 | 4.11.1 |
| bleach | 5.0.1 |
| cffi | 1.15.1 |
| colorama | 0.4.6 |
| cycler | 0.11.0 |
| debugpy | 1.6.3 |
| decorator | 5.1.1 |
| defusedxml | 0.7.1 |
| entrypoints | 0.4 |
| fastjsonschema | 2.16.2 |
| fonttools | 4.38.0 |
| gast | 0.2.2 |
| google-pasta | 0.2.0 |
| grpcio | 1.50.0 |
| h5py | 3.7.0 |
| idna | 3.4 |
| importlib-metadata | 5.0.0 |
| importlib-resources | 5.10.0 |
| ipykernel | 6.16.2 |
| ipython | 7.34.0 |
| ipython-genutils | 0.2.0 |
| ipywidgets | 8.0.2 |
| jedi | 0.18.1 |
| joblib | 1.2.0 |
| jsonschema | 4.17.0 |
| jupyter | 1.0.0 |
| jupyter-client | 7.4.6 |
| jupyter-console | 6.4.4 |
| jupyter-core | 4.11.2 |
| jupyter-server | 1.23.2 |
| jupyterlab-pygments | 0.2.2 |
| jupyterlab-widgets | 3.0.3 |
| kiwisolver | 1.4.4 |
| lxml | 4.9.1 |
| matplotlib | 3.5.3 |
| matplotlib-inline | 0.1.6 |
| mistune | 2.0.4 |
| nbclassic | 0.4.8 |
| nbclient | 0.7.0 |
| nbconvert | 7.2.5 |
| nbformat | 5.7.0 |
| nest-asyncio | 1.5.6 |
| newpy | 1.1.16 |
| notebook | 6.5.2 |
| notebook-shim | 0.2.2 |
| numpy | 1.21.6 |
| opencv-python | 4.6.0.66 |
| opt-einsum | 3.3.0 |
| packaging | 21.3 |
| pandas | 1.3.5 |
| pandocfilters | 1.5.0 |
| parso | 0.8.3 |
| pickleshare | 0.7.5 |
| pip | 22.3.1 |
| pkgutil-resolve-name | 1.3.10 |
| prometheus-client | 0.15.0 |
| prompt-toolkit | 3.0.32 |
| protobuf | 3.20.0 |
| psutil | 5.9.4 |
| pycparser | 2.21 |
| pyparsing | 3.0.9 |
| pyrsistent | 0.19.2 |
| python-dateutil | 2.8.2 |
| pytz | 2022.6 |
| pywin32 | 305 |
| pywinpty | 2.0.9 |
| pyzmq | 24.0.1 |
| qtconsole | 5.4.0 |
| resources | 0.0.1 |
| scikit-learn | 1.0.2 |
| scipy | 1.7.3 |
| setuptools | 47.1.0 |
| sip | 4.19.8 |
| six | 1.16.0 |
| sniffio | 1.3.0 |
| soupsieve | 2.3.2.post1 |
| tensorboard | 1.15.0 |
| tensorflow | 1.15.0 |
| tensorflow-estimator | 1.15.1 |
| termcolor | 2.1.0 |
| terminado | 0.17.0 |
| threadpoolctl | 3.1.0 |
| tinycss2 | 1.2.1 |
| tornado | 6.2 |
| traitlets | 5.5.0 |
| typing-extensions | 4.4.0 |
| wcwidth | 0.2.5 |
| webencodings | 0.5.1 |
| websocket-client | 1.4.2 |
| wheel | 0.38.4 |
| widgetsnbextension | 4.0.3 |
| wrapt | 1.14.1 |
| zipp | 3.10.0 |
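To compare another machine against this list, the installed versions can be read from package metadata. This is a sketch only: the function names are made up, the three pins are just the ones that matter most for this setup, and on Python 3.7 it relies on the importlib-metadata backport that appears in the list above.

```python
try:
    from importlib import metadata as importlib_metadata  # Python 3.8+
except ImportError:
    import importlib_metadata  # backport from the list above, for Python 3.7


def installed_version(name):
    """Return the installed version of a distribution, or None if missing."""
    try:
        return importlib_metadata.version(name)
    except importlib_metadata.PackageNotFoundError:
        return None


def find_mismatches(pins, get_version=installed_version):
    """Compare pinned versions with installed ones.

    Returns {name: (wanted, found)} for every package that differs.
    """
    mismatches = {}
    for name, wanted in pins.items():
        found = get_version(name)
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


# A few pins taken from the package list.
PINS = {"tensorflow": "1.15.0", "protobuf": "3.20.0", "numpy": "1.21.6"}

for package, (wanted, found) in sorted(find_mismatches(PINS).items()):
    print("{}: expected {}, found {}".format(package, wanted, found))
```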

![Windows + TensorFlow 1.15.0 object detection environment, image 1](http://img.e-com-net.com/image/info8/72abef289c8b4aeebb8198115f394525.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 2](http://img.e-com-net.com/image/info8/5efbc6d1104544a9a5bd504832e0ad6e.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 3](http://img.e-com-net.com/image/info8/4bd03364bc3241ae822cbaaac879a4f4.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 4](http://img.e-com-net.com/image/info8/9796c949bbaf408e9d6b1718deb3ac48.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 5](http://img.e-com-net.com/image/info8/2c859a81956a40419b1973abda306868.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 6](http://img.e-com-net.com/image/info8/e72474ab6b1641acaeee3db66bc763ea.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 7](http://img.e-com-net.com/image/info8/7aaf0844068643fba8a73000d0086a5b.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 9](http://img.e-com-net.com/image/info8/4b61d4457bcd48348ae450ffa262002f.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 10](http://img.e-com-net.com/image/info8/d98762bc810d4015938274283839ff8f.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 11](http://img.e-com-net.com/image/info8/1dbe59be7bcb48ee815f81d6e15ef7e1.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 12](http://img.e-com-net.com/image/info8/b00e7d8a00a34ff3a36012a842d40198.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 13](http://img.e-com-net.com/image/info8/51b32538274e482f9b2cb647eded63e3.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 14](http://img.e-com-net.com/image/info8/ec406d3953dd4b4cad3f2f7470a8e9ed.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 15](http://img.e-com-net.com/image/info8/05977fda03d34cff8dc78b16dff8e75f.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 16](http://img.e-com-net.com/image/info8/bb13655cb6e246a0a563d771c6589bf2.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 17](http://img.e-com-net.com/image/info8/1d2d50fb7b774b9f8eef086049ff9861.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 18](http://img.e-com-net.com/image/info8/7c2fb9d529e44cbaa5769ef8f278f569.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 19](http://img.e-com-net.com/image/info8/5e5fa74736cc4d31a924d03bc9245dba.jpg)

![Windows + TensorFlow 1.15.0 object detection environment, image 20](http://img.e-com-net.com/image/info8/3281cf5276344e6ba15022c9ee761dcd.jpg)