DeepLab v3 Model

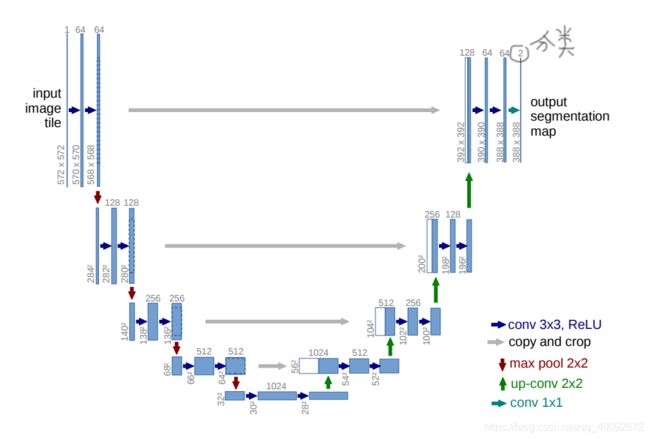

I. U-Net Model

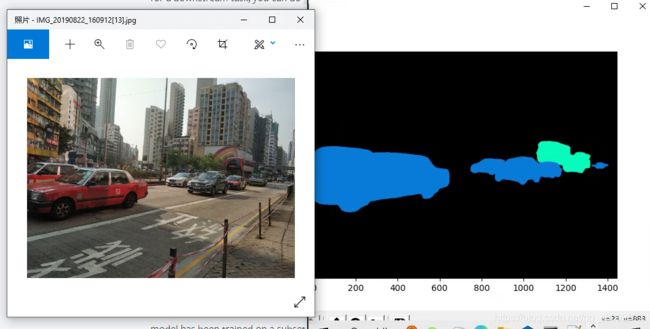

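U-Net architecture diagram

This is the network architecture diagram from the paper. The output in the diagram has 2 channels, which shows that the model is mainly intended for image segmentation. The code below implements the structure in the diagram: the input is a 512x512 image, and each output pixel is predicted as one of two classes (edge, non-edge).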

from tensorflow import keras

# Input: a 512x512 image with 3 color channels

inputs = keras.layers.Input((512, 512, 3))

conv1 = keras.layers.Conv2D(128, (3, 3), activation='relu', padding='same')(inputs)

conv1 = keras.layers.Conv2D(128, (3, 3), activation='relu', padding='same')(conv1)

pool1 = keras.layers.MaxPool2D((2, 2))(conv1)

conv2 = keras.layers.Conv2D(128, (3, 3), activation='relu', padding='same')(pool1)

conv2 = keras.layers.Conv2D(128, (3, 3), activation='relu', padding='same')(conv2)

pool2 = keras.layers.MaxPool2D((2, 2))(conv2)

conv3 = keras.layers.Conv2D(256, (3, 3), activation='relu', padding='same')(pool2)

conv3 = keras.layers.Conv2D(256, (3, 3), activation='relu', padding='same')(conv3)

pool3 = keras.layers.MaxPool2D((2, 2))(conv3)

conv4 = keras.layers.Conv2D(512, (3, 3), activation='relu', padding='same')(pool3)

conv4 = keras.layers.Conv2D(512, (3, 3), activation='relu', padding='same')(conv4)

pool4 = keras.layers.MaxPool2D((2, 2))(conv4)

conv5 = keras.layers.Conv2D(1024, (3, 3), activation='relu', padding='same')(pool4)

conv5 = keras.layers.Conv2D(1024, (3, 3), activation='relu', padding='same')(conv5)

deconv1 = keras.layers.Conv2D(512, (2, 2), activation='relu', padding='same')(conv5)

upsa1 = keras.layers.UpSampling2D((2, 2))(deconv1)

upsa1 = keras.layers.concatenate([conv4, upsa1], axis=3)

deconv1 = keras.layers.Conv2D(512, (3, 3), activation='relu', padding='same')(upsa1)

deconv1 = keras.layers.Conv2D(512, (3, 3), activation='relu', padding='same')(deconv1)

deconv2 = keras.layers.Conv2D(256, (2, 2), activation='relu', padding='same')(deconv1)

upsa2 = keras.layers.UpSampling2D((2, 2))(deconv2)

upsa2 = keras.layers.concatenate([conv3, upsa2], axis=3)

deconv2 = keras.layers.Conv2D(256, (3, 3), activation='relu', padding='same')(upsa2)

deconv2 = keras.layers.Conv2D(256, (3, 3), activation='relu', padding='same')(deconv2)

deconv3 = keras.layers.Conv2D(128, (2, 2), activation='relu', padding='same')(deconv2)

upsa3 = keras.layers.UpSampling2D((2, 2))(deconv3)

upsa3 = keras.layers.concatenate([conv2, upsa3], axis=3)

deconv3 = keras.layers.Conv2D(128, (3, 3), activation='relu', padding='same')(upsa3)

deconv3 = keras.layers.Conv2D(128, (3, 3), activation='relu', padding='same')(deconv3)

deconv4 = keras.layers.Conv2D(64, (2, 2), activation='relu', padding='same')(deconv3)

upsa4 = keras.layers.UpSampling2D((2, 2))(deconv4)

upsa4 = keras.layers.concatenate([conv1, upsa4], axis=3)

deconv4 = keras.layers.Conv2D(64, (3, 3), activation='relu', padding='same')(upsa4)

deconv4 = keras.layers.Conv2D(64, (3, 3), activation='relu', padding='same')(deconv4)

output = keras.layers.Conv2D(2, (1, 1), activation='softmax', padding='same')(deconv4)

model = keras.models.Model(inputs=inputs, outputs=output)

model.summary()

model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4), loss='binary_crossentropy', metrics=['accuracy'])

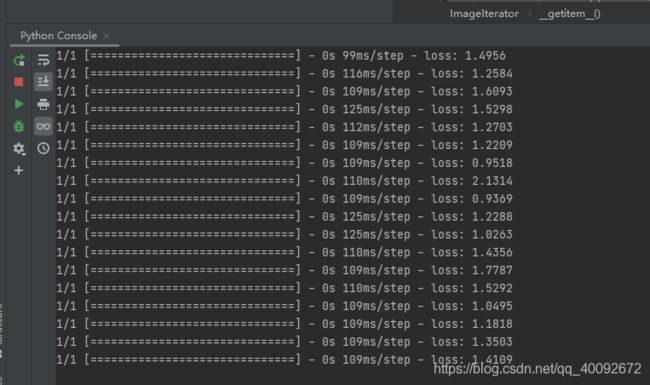
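The spatial sizes in the U-Net code above can be checked without TensorFlow. The following is a minimal sketch, assuming 'same' padding, 2x2 max pooling and 2x upsampling as in that code; the helper name `unet_feature_sizes` is made up for this illustration.

```python
def unet_feature_sizes(input_size=512, depth=4):
    """Trace the spatial size through the encoder and decoder.

    Assumes 'same' padding (convolutions keep the size), 2x2 max pooling
    on the way down and 2x upsampling on the way up.
    """
    encoder = [input_size]
    for _ in range(depth):
        encoder.append(encoder[-1] // 2)  # MaxPool2D((2, 2)) halves the size
    decoder = []
    size = encoder[-1]
    for skip in reversed(encoder[:-1]):
        size *= 2  # UpSampling2D((2, 2)) doubles the size
        # concatenate() needs the skip connection to have the same size
        assert size == skip, "skip connection sizes must match"
        decoder.append(size)
    return encoder, decoder


encoder, decoder = unet_feature_sizes()
print(encoder)  # [512, 256, 128, 64, 32]
print(decoder)  # [64, 128, 256, 512]
```

With four pooling steps, the input side length needs to be divisible by 16 for every skip connection to line up; 512 satisfies this.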

Reading the data asynchronously through an iterator can speed up execution.
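The text does not say which mechanism is used for asynchronous reading, so the following is only a framework-independent sketch of the idea: a background thread fills a bounded queue while the consumer (the training loop) iterates. The names `prefetch` and `load_batches` are hypothetical. In TensorFlow, `tf.data.Dataset.prefetch` serves a similar purpose.

```python
import queue
import threading


def prefetch(iterable, buffer_size=4):
    """Yield items from `iterable`, loading them in a background thread."""
    buffer = queue.Queue(maxsize=buffer_size)
    sentinel = object()  # marks the end of the stream

    def producer():
        try:
            for item in iterable:
                buffer.put(item)  # blocks while the buffer is full
        finally:
            buffer.put(sentinel)  # always signal the end, even on error

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buffer.get()
        if item is sentinel:
            return
        yield item


def load_batches(num_batches):
    """Hypothetical loader; a real one would read and decode image files."""
    for index in range(num_batches):
        yield [index] * 2  # stand-in for a batch of images


for batch in prefetch(load_batches(3)):
    print(batch)
```

While the consumer works on one batch, the producer thread can already load the next ones, up to `buffer_size` items ahead.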

On an NVIDIA 1660 GPU, allocate GPU memory dynamically:

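from tensorflow import config

physical_devices = config.list_physical_devices('GPU')

# Enable memory growth only when a GPU is actually present

if physical_devices:

    config.experimental.set_memory_growth(physical_devices[0], True)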

II. DeepLab V3 Model

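DeepLab V3 is mainly used for semantic segmentation of images. Its output is 16 times smaller than the input image in each spatial dimension.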
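The factor of 16 can be verified with a little arithmetic. This is a minimal sketch, assuming 'same' padding (each strided layer produces the ceiling of size / stride) and the four stride-2 layers used in the Keras implementation later in this article; `output_size` is a helper name invented for the example.

```python
from math import prod


def output_size(input_size, strides):
    """Spatial size after a chain of strided layers with 'same' padding."""
    size = input_size
    for stride in strides:
        size = -(-size // stride)  # ceiling division
    return size


# Stride-2 layers: 7x7 convolution, max pooling,
# last unit of Block 1, last unit of Block 2.
strides = [2, 2, 2, 2]

print(prod(strides))              # 16
print(output_size(512, strides))  # 32
```

For a 512x512 input the network therefore predicts on a 32x32 grid.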

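DeepLab v3 is built on the ResNet-50/101 models. The figure below shows the ResNet structure. The output stride is the ratio of the input image size to the output size. Up to and including Block3, DeepLab v3 is identical to ResNet.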

Bottleneck structure

ResNet has two kinds of block: the building block and the bottleneck block. DeepLab V3 uses the bottleneck block, with some adjustments to its structure.
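To see why the bottleneck block is attractive for deep networks, it helps to count convolution weights. The sketch below ignores biases and batch normalization, and the helper names are invented for this illustration; the 256/64/256 depths match the first bottleneck stage of ResNet-50/101.

```python
def conv_weights(kernel, in_channels, out_channels):
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_channels * out_channels


def building_block_weights(channels):
    # Two 3x3 convolutions at full width.
    return 2 * conv_weights(3, channels, channels)


def bottleneck_weights(depth_input, depth_bottleneck, depth_output):
    # 1x1 reduce, 3x3 at the reduced width, 1x1 expand.
    return (conv_weights(1, depth_input, depth_bottleneck)
            + conv_weights(3, depth_bottleneck, depth_bottleneck)
            + conv_weights(1, depth_bottleneck, depth_output))


print(bottleneck_weights(256, 64, 256))  # 69632
print(building_block_weights(256))       # 1179648
print(building_block_weights(64))        # 73728
```

A 256-channel bottleneck costs about as much as a 64-channel building block, and roughly 17 times less than a building block applied directly at 256 channels.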

Downloading a pretrained model with PyTorch

Implementing DeepLab v3 in Keras

This implementation differs slightly from the model in the paper.

from tensorflow import keras

from tensorflow import config

# Let TensorFlow grow GPU memory on demand (only when a GPU is present)

physical_devices = config.list_physical_devices('GPU')

if physical_devices:

    config.experimental.set_memory_growth(physical_devices[0], True)

def deeplab3(input_size=(512, 512), num_classes=21):

    inputs = keras.Input(shape=input_size + (3,))

    # Stem: 7x7 convolution and max pooling, each with stride 2 (output stride 4)

    net = keras.layers.Conv2D(64, (7, 7), strides=(2, 2), padding='same')(inputs)

    net = keras.layers.MaxPool2D((3, 3), strides=(2, 2), padding='same')(net)

    # Block 1 (the last unit downsamples: output stride 8)

    net = bottleneck(64, 64, 256, strides=(1, 1))(net)

    net = bottleneck(256, 64, 256, strides=(1, 1))(net)

    net = bottleneck(256, 64, 256, strides=(2, 2))(net)

    # Block 2 (the last unit downsamples: output stride 16)

    net = bottleneck(256, 128, 512, strides=(1, 1))(net)

    net = bottleneck(512, 128, 512, strides=(1, 1))(net)

    net = bottleneck(512, 128, 512, strides=(1, 1))(net)

    net = bottleneck(512, 128, 512, strides=(2, 2))(net)

    # Block 3 (no further downsampling)

    net = bottleneck(512, 256, 1024, strides=(1, 1))(net)

    for _ in range(22):

        net = bottleneck(1024, 256, 1024, strides=(1, 1))(net)

    net = bottleneck(1024, 256, 1024, strides=(1, 1))(net)

    # Block 4 (atrous convolutions with increasing rates instead of striding)

    net = bottleneck(1024, 512, 2048, strides=(1, 1), rate=1)(net)

    net = bottleneck(2048, 512, 2048, strides=(1, 1), rate=2)(net)

    net = bottleneck(2048, 512, 2048, strides=(1, 1), rate=4)(net)

    # Atrous Spatial Pyramid Pooling

    # part a: one 1x1 convolution and three 3x3 atrous convolutions

    net_p1 = keras.layers.Conv2D(256, (1, 1), padding='same')(net)

    net_p2 = keras.layers.Conv2D(256, (3, 3), padding='same', dilation_rate=(6, 6))(net)

    net_p3 = keras.layers.Conv2D(256, (3, 3), padding='same', dilation_rate=(12, 12))(net)

    net_p4 = keras.layers.Conv2D(256, (3, 3), padding='same', dilation_rate=(18, 18))(net)

    # part b: image-level features (global average pooling, then upsampling back)

    shape_in = tuple(net.shape[1:3])

    pooled = keras.layers.GlobalAvgPool2D()(net)

    pooled = keras.layers.Reshape(target_shape=(1, 1, 2048))(pooled)

    pooled = keras.layers.Conv2D(256, (1, 1))(pooled)

    pooled = keras.layers.BatchNormalization()(pooled)

    pooled = keras.layers.UpSampling2D(shape_in)(pooled)

    net = keras.layers.concatenate([net_p1, net_p2, net_p3, net_p4, pooled])

    net = keras.layers.Conv2D(256, (3, 3), strides=(1, 1), padding='same')(net)

    # Per-pixel class probabilities at 1/16 of the input resolution

    outputs = keras.layers.Conv2D(num_classes, (1, 1), strides=(1, 1), padding='same', activation='softmax')(net)

    model = keras.Model(inputs, outputs)

    return model

def bottleneck(depth_input, depth_bottleneck, depth_output, strides=(1, 1), rate=1):

    def fun(inputs):

        # Shortcut branch: identity, subsampling, or a 1x1 projection when the depth changes

        if depth_input == depth_output:

            if strides == (1, 1):

                shortcut = inputs

            else:

                shortcut = keras.layers.MaxPool2D((1, 1), strides=strides, padding='same')(inputs)

        else:

            shortcut = keras.layers.Conv2D(depth_output, (1, 1), strides=strides, padding='same')(inputs)

        # Residual branch: 1x1 reduce, 3x3 (strided or dilated), 1x1 expand.

        # Unlike the standard ResNet bottleneck, no ReLU follows the first two BatchNormalization layers here.

        residual = keras.layers.Conv2D(depth_bottleneck, (1, 1), padding='same')(inputs)

        residual = keras.layers.BatchNormalization()(residual)

        residual = keras.layers.Conv2D(depth_bottleneck, (3, 3), strides=strides, padding='same', dilation_rate=rate)(residual)

        residual = keras.layers.BatchNormalization()(residual)

        residual = keras.layers.Conv2D(depth_output, (1, 1), padding='same')(residual)

        residual = keras.layers.BatchNormalization()(residual)

        # Merge both branches, then apply ReLU and batch normalization

        outputs = keras.layers.ReLU()(keras.layers.add([shortcut, residual]))

        outputs = keras.layers.BatchNormalization()(outputs)

        return outputs

    return fun
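The ASPP branches in `deeplab3` use 3x3 convolutions with dilation rates 6, 12 and 18. A rough way to compare them is the extent each dilated kernel covers, k + (k - 1)(rate - 1). The sketch below assumes the default 512x512 input, which gives a 32x32 feature map at output stride 16; `effective_kernel_size` is a name chosen for this example.

```python
def effective_kernel_size(kernel_size, rate):
    """Extent covered by a dilated kernel: k + (k - 1) * (rate - 1)."""
    return kernel_size + (kernel_size - 1) * (rate - 1)


feature_size = 512 // 16  # 32x32 feature map at output stride 16

for rate in (1, 6, 12, 18):
    extent = effective_kernel_size(3, rate)
    # Print the rate, the covered extent, and whether it exceeds the map
    print(rate, extent, extent > feature_size)
```

Under these assumptions the rate-18 kernel spans 37 positions, which is wider than the 32x32 map, so no position sees all nine taps inside the feature map and some of them always fall into the zero padding. Larger inputs give that branch more valid context.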