[Deep Learning Basics] Implementing GoogLeNet in PyTorch: A Hands-On Walkthrough

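- 1. Key points from the papers
  - 1.1 The Inception structure
  - 1.2 Inception-v1 and Inception-v2 network architecture
  - 1.3 Inception-v3 network architecture
  - 1.4 Approach to the reimplementation
  - 1.5 Detailed structure of Inception-v3
- 2. PyTorch reimplementation
  - 2.1 Writing the BN_Conv2d_ReLU structure
  - 2.1 Reimplementing Inception-v1 and v2
  - 2.2 Reimplementing Inception-v3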

1. Key points from the papers

1.1 The Inception structure

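1. GoogLeNet's contribution is a family of network structures that the authors call Inception. The papers put forward several principles for designing CNNs:

(1) Avoid representational bottlenecks, especially in the early part of the network. Before the final representation is reached, the feature size should decrease gradually from the input size. The information content of a representation cannot be judged by its dimensionality alone; the structure of the network that extracts the features also matters.

(2) Higher-dimensional representations are easier to process locally within the network. Adding activations after each layer yields more separable features, and the network trains faster.

(3) Spatial aggregation (which I take to mean the Inception structure) can be performed on lower-dimensional embeddings: reducing the dimension with small convolutions before aggregating costs very little representational power. Within a spatial aggregation structure, the strong correlation between adjacent units limits this loss, and the reduction also helps the network train.

(4) Balance the width and depth of the network, that is, the number of convolution layers in each structure against the total depth of the network. Increasing both depth and width helps build a better network, but the two must be balanced within the available computational budget.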

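2. The Inception-v3 paper summarizes the following block structures.

Notes:

1. To make the blocks easier to refer to later and in the code, I gave each block structure a name.

2. Each block structure has 4 branches whose outputs are concatenated. From left to right, we call these branches branch1, branch2, branch3 and branch4.

(1) The original structure, used by the Inception-v1 network: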

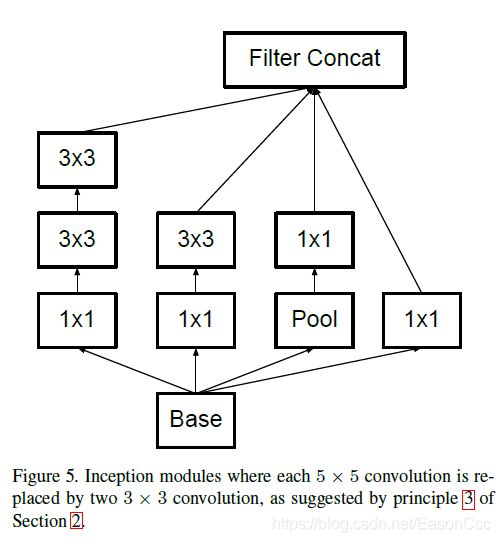

(2) Type1 (a name I chose for convenience in the code and in later references). Inception-v2 uses this structure throughout, and Inception-v3 uses it as well.

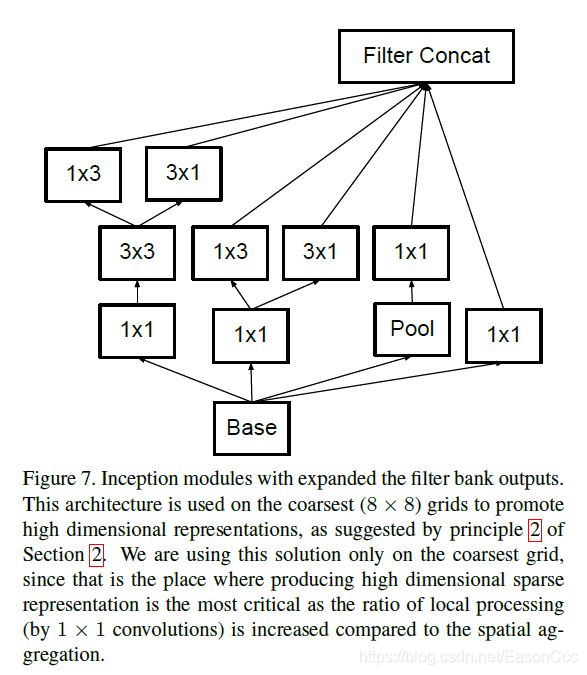

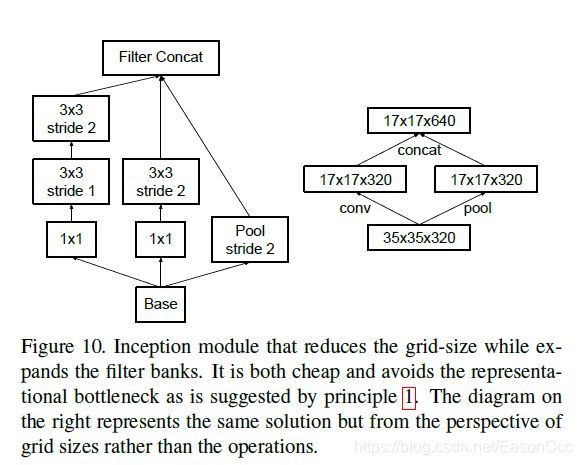

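(3) Type2, the structure on the left of the figure below. It is used in Inception-v3, although the actual implementation differs slightly from the figure.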

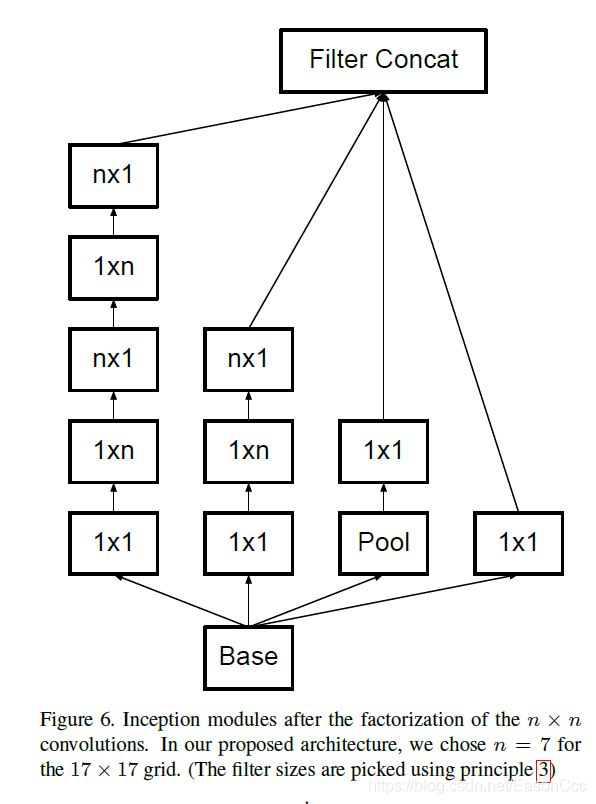

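(4) Type3, used in v3, where the implementation takes n = 7. The idea is to replace an nxn convolution with a 1xn convolution followed by an nx1 convolution, which further speeds up training.

(5) Type4, used in v3. The paper gives no figure for this structure. (The Inception-v3 implementation differs from the network architecture given in the paper; I explain this later.)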
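
To make the Type3 idea concrete, here is a minimal sketch that counts the weights of a full nxn convolution against a 1xn convolution followed by an nx1 convolution. The helper names and the channel width of 192 are assumptions made for this illustration, and both layers are assumed to keep the same number of channels; biases are ignored.

```python
def conv_weights(in_ch, out_ch, kernel_h, kernel_w):
    """Weight count of a bias-free 2-D convolution."""
    return in_ch * out_ch * kernel_h * kernel_w


def factorized_weights(channels, n):
    """Weight count of a 1 x n convolution followed by an n x 1 convolution."""
    return conv_weights(channels, channels, 1, n) + conv_weights(channels, channels, n, 1)


channels, n = 192, 7  # illustrative values chosen for this sketch
full = conv_weights(channels, channels, n, n)
split = factorized_weights(channels, n)
print(f"{n}x{n}: {full} weights, 1x{n} + {n}x1: {split} weights, ratio {split / full:.3f}")
```

Under these assumptions the factorized pair needs 2/n of the weights of the full kernel, so the saving grows with n.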

1.2 Inception-v1 and Inception-v2 network architecture

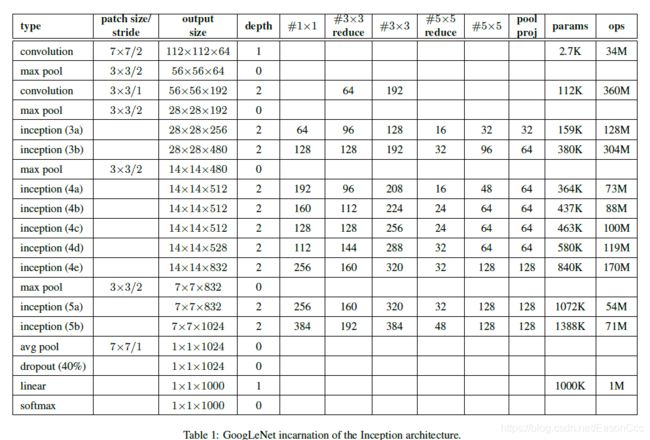

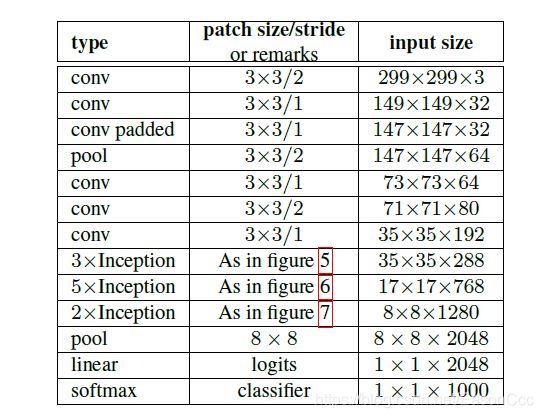

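- When implementing the v1 network from the table above, every Inception block uses the original structure.

- #1x1 is the number of 1x1 kernels in branch4. #5x5 reduce is the number of 1x1 kernels in branch1, and #5x5 is the number of 5x5 kernels in branch1. #3x3 reduce and #3x3 are the corresponding counts for branch2. pool proj is the number of 1x1 kernels after the pooling layer in branch3. (This branch numbering follows the code in section 2.)

- The pooling layers in the original block structure all use max pooling.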
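
Because the four branch outputs are concatenated along the channel axis, the width of each block's output is the sum #5x5 + #3x3 + pool proj + #1x1, and it has to match the input width of the next block. The sketch below checks this for the values passed to the Inception blocks of the v1/v2 network in section 2; the helper names are made up for this illustration.

```python
# (name, in_channels, #5x5, #3x3, pool proj, #1x1), copied from the
# Inception block arguments of the v1/v2 network in section 2.
BLOCKS = [
    ("3a", 192, 32, 128, 32, 64),
    ("3b", 256, 96, 192, 64, 128),
    ("4a", 480, 48, 208, 64, 192),
    ("4b", 512, 64, 224, 64, 160),
    ("4c", 512, 64, 256, 64, 128),
    ("4d", 512, 64, 288, 64, 112),
    ("4e", 528, 128, 320, 128, 256),
    ("5a", 832, 128, 320, 128, 256),
    ("5b", 832, 128, 384, 128, 384),
]


def output_channels(n5x5, n3x3, pool_proj, n1x1):
    """Channels after concatenating the four branch outputs."""
    return n5x5 + n3x3 + pool_proj + n1x1


def check_chain(blocks):
    """Return each block's output width, checking it feeds the next block."""
    widths = []
    for i, (name, in_ch, *branches) in enumerate(blocks):
        out_ch = output_channels(*branches)
        widths.append(out_ch)
        if i + 1 < len(blocks):
            next_name, next_in = blocks[i + 1][0], blocks[i + 1][1]
            assert out_ch == next_in, f"{name} -> {next_name}: {out_ch} != {next_in}"
    return widths


widths = check_chain(BLOCKS)
print(widths)
```

The reduce layers do not appear in the sum because they only narrow the input of the 3x3 and 5x5 convolutions; the max-pooling layers between stages change the spatial size but not the channel count. The last width is the input size of the final fully connected layer.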

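(2) Inception-v2 has the same architecture as v1, but its blocks use the Type1 structure (an idea from VGG: replace a large kernel with a stack of small kernels to reduce the parameter count). Inception-v2 also introduced BN layers. (As when I reimplemented VGG, I added BN layers to my Inception-v1 as well. The original paper did not use them because BN had not been proposed at the time; since BN helps, there is no reason not to add it.)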
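
The substitution behind Type1 can be checked with a little arithmetic. This sketch assumes stride-1 convolutions and the same channel width in every layer; the function names are invented for the illustration.

```python
def receptive_field(kernel_sizes):
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field


def weights_per_channel_pair(kernel_sizes):
    """Weights per (input channel, output channel) pair, summed over the stack."""
    return sum(k * k for k in kernel_sizes)


single_5x5 = [5]
stacked_3x3 = [3, 3]
print(receptive_field(single_5x5), weights_per_channel_pair(single_5x5))
print(receptive_field(stacked_3x3), weights_per_channel_pair(stacked_3x3))
```

Two stacked 3x3 convolutions see the same 5x5 input window as a single 5x5 convolution while using 18 weights per channel pair instead of 25, and they add one more non-linearity in between.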

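1.3 Inception-v3 network architecture

- Inception-v3 drops the original block structure and uses the blocks type1, type2, …, type5.

- The pooling branch that is followed by a 1x1 convolution in v3 blocks uses average pooling. (The stride-2 blocks, type2 and type4 in the code in section 2, use a max-pooling branch instead.)

- The v3 input size is changed to 299x299.

- The structure proposed in the paper is shown in the table below (the actual structure differs from the paper; see section 1.5):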

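1.4 Approach to the reimplementation

Looking at the structures, we can see that:

1. Each type of block has 4 branches (some have 3), which we call branch1, branch2, branch3 and branch4. Some branches are shared between block types and some differ.

2. The number of kernels in each branch can be controlled by parameters: branch1 has two parameters, the #nxn reduce and #nxn counts; branch2 likewise; branch3 has one parameter, the number of 1x1 kernels after the pooling layer; branch4 also has one parameter, the number of 1x1 kernels.

So we can create a bank class that provides all of these branches, and pass a block_type argument to select the combination of branches that forms each type of Inception block.

Because Inception-v1 and v2 share the same network architecture, I implement them in the same file. Inception-v3 differs considerably from the other two in its network structure, its branch types and the pooling used inside its blocks, so I implement it in a separate file.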

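1.5 Detailed structure of Inception-v3

The Inception-v3 paper contains this passage:

The detailed structure of the network, including the sizes of filter banks inside the Inception modules, is given in the supplementary material, given in the model.txt that is in the tar-file of this submission.

I searched in many places without finding this model.txt file, and eventually found the archive uploaded by the authors on arXiv, from which I extracted model.txt. A few points about its contents are worth noting:

1. The detailed network structure described in the file differs from the one described in the paper, mainly in the Inception block structures it uses.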

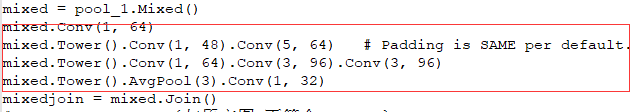

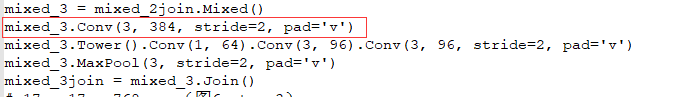

(1) For example, in the Type1 block used there, the branch2 kernel size is 5x5 rather than 3x3:

Likewise, branch2 of Type2 has no #3x3 reduce and goes straight to #3x3:

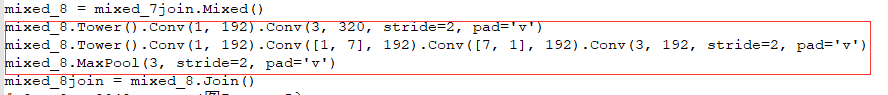

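(2) Some block structures are not given in the paper at all, such as type4:

2. The comments in model.txt are inconsistent: in some places a comment gives the input size of the next layer, and later on it switches to giving the output size of the next layer.

Broadly speaking, what the paper offers is the idea of the Inception block, and there is nothing wrong with that. Still, I think that after running new experiments the authors should have brought the paper in line with the experimental setup, which would be more rigorous. My reimplementation follows the structure given in model.txt.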

2. PyTorch reimplementation

2.1 Writing the BN_Conv2d_ReLU structure

    # BN_CONV_RELU: convolution -> batch norm -> ReLU
    import torch.nn as nn
    import torch.nn.functional as F


    class BN_Conv2d(nn.Module):
        """Conv2d followed by BatchNorm2d; ReLU is applied in forward."""

        def __init__(self, in_channels, out_channels, kernel_size, stride, padding, dilation=1, bias=True):
            super(BN_Conv2d, self).__init__()
            self.seq = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size, stride=stride,
                          padding=padding, dilation=dilation, bias=bias),
                nn.BatchNorm2d(out_channels)
            )

        def forward(self, x):
            return F.relu(self.seq(x))
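
Every call to BN_Conv2d later in this post passes bias=False. A likely reason (my reading, not something stated in the papers quoted here) is that batch normalization subtracts the per-channel batch mean, so a constant bias added by the convolution is cancelled anyway. The sketch below demonstrates this on made-up numbers for a single channel, using only the standard library; the normalization omits BN's learnable scale and shift.

```python
from statistics import fmean, pvariance


def batch_norm(values, eps=1e-5):
    """Normalize one channel over the batch: (x - mean) / sqrt(var + eps)."""
    mean = fmean(values)
    var = pvariance(values, mu=mean)  # biased variance, as used for normalization
    return [(v - mean) / (var + eps) ** 0.5 for v in values]


conv_out = [0.5, -1.2, 3.0, 0.7]  # made-up convolution outputs for one channel
bias = 2.5                        # made-up constant bias

without_bias = batch_norm(conv_out)
with_bias = batch_norm([v + bias for v in conv_out])
max_diff = max(abs(a - b) for a, b in zip(without_bias, with_bias))
print(max_diff)  # differs from zero only by floating-point rounding
```

Since BatchNorm2d has its own learnable shift, dropping the convolution bias removes parameters without reducing what the layer can represent.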

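2.1 Reimplementing Inception-v1 and v2

Following the approach above, here is the code:

1. The block bank class

Here type1 corresponds to the original structure described in section 1.1, and type2 corresponds to Type1 in section 1.1.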

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torchsummary import summary


    class Inception_builder(nn.Module):
        """
        types of Inception block
        """

        def __init__(self, block_type, in_channels, b1_reduce, b1, b2_reduce, b2, b3, b4):
            super(Inception_builder, self).__init__()
            self.block_type = block_type  # controlled by strings "type1", "type2"

            # 5x5 reduce, 5x5
            self.branch1_type1 = nn.Sequential(
                BN_Conv2d(in_channels, b1_reduce, 1, stride=1, padding=0, bias=False),
                BN_Conv2d(b1_reduce, b1, 5, stride=1, padding=2, bias=False)  # same padding
            )

            # 5x5 reduce, 2x3x3
            self.branch1_type2 = nn.Sequential(
                BN_Conv2d(in_channels, b1_reduce, 1, stride=1, padding=0, bias=False),
                BN_Conv2d(b1_reduce, b1, 3, stride=1, padding=1, bias=False),  # same padding
                BN_Conv2d(b1, b1, 3, stride=1, padding=1, bias=False)
            )

            # 3x3 reduce, 3x3
            self.branch2 = nn.Sequential(
                BN_Conv2d(in_channels, b2_reduce, 1, stride=1, padding=0, bias=False),
                BN_Conv2d(b2_reduce, b2, 3, stride=1, padding=1, bias=False)
            )

            # max pool, pool proj
            self.branch3 = nn.Sequential(
                nn.MaxPool2d(3, stride=1, padding=1),  # to keep size, also use same padding
                BN_Conv2d(in_channels, b3, 1, stride=1, padding=0, bias=False)
            )

            # 1x1
            self.branch4 = BN_Conv2d(in_channels, b4, 1, stride=1, padding=0, bias=False)

        def forward(self, x):
            if self.block_type == "type1":
                out1 = self.branch1_type1(x)
                out2 = self.branch2(x)
            elif self.block_type == "type2":
                out1 = self.branch1_type2(x)
                out2 = self.branch2(x)
            out3 = self.branch3(x)
            out4 = self.branch4(x)
            out = torch.cat((out1, out2, out3, out4), 1)
            return out

2. The Inception network class

    class GoogleNet(nn.Module):
        """
        Inception-v1, Inception-v2
        """

        def __init__(self, str_version, num_classes):
            super(GoogleNet, self).__init__()
            self.block_type = "type1" if str_version == "v1" else "type2"
            self.version = str_version  # "v1", "v2"
            self.conv1 = BN_Conv2d(3, 64, 7, stride=2, padding=3, bias=False)
            self.conv2 = BN_Conv2d(64, 192, 3, stride=1, padding=1, bias=False)
            # arguments: block_type, in_channels, b1_reduce, b1, b2_reduce, b2, b3, b4
            self.inception3_a = Inception_builder(self.block_type, 192, 16, 32, 96, 128, 32, 64)
            self.inception3_b = Inception_builder(self.block_type, 256, 32, 96, 128, 192, 64, 128)
            self.inception4_a = Inception_builder(self.block_type, 480, 16, 48, 96, 208, 64, 192)
            self.inception4_b = Inception_builder(self.block_type, 512, 24, 64, 112, 224, 64, 160)
            self.inception4_c = Inception_builder(self.block_type, 512, 24, 64, 128, 256, 64, 128)
            self.inception4_d = Inception_builder(self.block_type, 512, 32, 64, 144, 288, 64, 112)
            self.inception4_e = Inception_builder(self.block_type, 528, 32, 128, 160, 320, 128, 256)
            self.inception5_a = Inception_builder(self.block_type, 832, 32, 128, 160, 320, 128, 256)
            self.inception5_b = Inception_builder(self.block_type, 832, 48, 128, 192, 384, 128, 384)
            self.fc = nn.Linear(1024, num_classes)

        def forward(self, x):
            out = self.conv1(x)
            out = F.max_pool2d(out, 3, 2, 1)
            out = self.conv2(out)
            out = F.max_pool2d(out, 3, 2, 1)
            out = self.inception3_a(out)
            out = self.inception3_b(out)
            out = F.max_pool2d(out, 3, 2, 1)
            out = self.inception4_a(out)
            out = self.inception4_b(out)
            out = self.inception4_c(out)
            out = self.inception4_d(out)
            out = self.inception4_e(out)
            out = F.max_pool2d(out, 3, 2, 1)
            out = self.inception5_a(out)
            out = self.inception5_b(out)
            out = F.avg_pool2d(out, 7)  # 7x7 feature map for a 224x224 input
            out = F.dropout(out, 0.4, training=self.training)
            out = out.view(out.size(0), -1)
            out = self.fc(out)
            # softmax over the class dimension; dim must be given explicitly
            return F.softmax(out, dim=1)

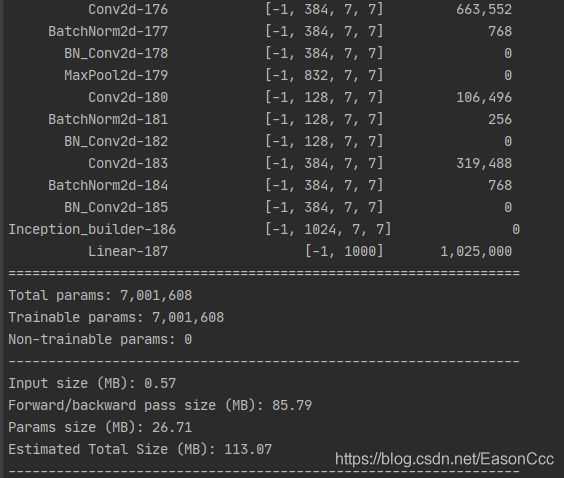

3. Building and testing the network

    def inception_v1():
        return GoogleNet("v1", num_classes=1000)


    def inception_v2():
        return GoogleNet("v2", num_classes=1000)


    def test():
        net = inception_v1()
        # net = inception_v2()
        # torchsummary's summary defaults to device="cuda";
        # on a CPU-only machine pass device="cpu"
        summary(net, (3, 224, 224))


    test()

2.2 Reimplementing Inception-v3

1. The block bank class

Here type1, type2, type3, type4 and type5 correspond to section 1.1.

    # Separate file: the imports are repeated, and BN_Conv2d from section 2.1
    # is assumed to be available here as well.
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torchsummary import summary


    class Block_bank(nn.Module):
        """
        inception structures
        """

        def __init__(self, block_type, in_channels, b1_reduce, b1, b2_reduce, b2, b3, b4):
            super(Block_bank, self).__init__()
            self.block_type = block_type  # controlled by strings "type1", "type2", "type3", "type4", "type5"

            # ---------- branch 1 ----------

            # reduce, 3x3, 3x3
            self.branch1_type1 = nn.Sequential(
                BN_Conv2d(in_channels, b1_reduce, 1, 1, 0, bias=False),
                BN_Conv2d(b1_reduce, b1, 3, 1, 1, bias=False),
                BN_Conv2d(b1, b1, 3, 1, 1, bias=False)
            )

            # reduce, 3x3, 3x3_s2
            self.branch1_type2 = nn.Sequential(
                BN_Conv2d(in_channels, b1_reduce, 1, 1, 0, bias=False),
                BN_Conv2d(b1_reduce, b1, 3, 1, 1, bias=False),
                BN_Conv2d(b1, b1, 3, 2, 0, bias=False)
            )

            # reduce, 7x1, 1x7, 7x1, 1x7 (kernel sizes given as (height, width))
            self.branch1_type3 = nn.Sequential(
                BN_Conv2d(in_channels, b1_reduce, 1, 1, 0, bias=False),
                BN_Conv2d(b1_reduce, b1_reduce, (7, 1), (1, 1), (3, 0), bias=False),
                BN_Conv2d(b1_reduce, b1_reduce, (1, 7), (1, 1), (0, 3), bias=False),  # same padding
                BN_Conv2d(b1_reduce, b1_reduce, (7, 1), (1, 1), (3, 0), bias=False),
                BN_Conv2d(b1_reduce, b1, (1, 7), (1, 1), (0, 3), bias=False)
            )

            # reduce, 1x7, 7x1, 3x3_s2
            self.branch1_type4 = nn.Sequential(
                BN_Conv2d(in_channels, b1_reduce, 1, 1, 0, bias=False),
                BN_Conv2d(b1_reduce, b1, (1, 7), (1, 1), (0, 3), bias=False),
                BN_Conv2d(b1, b1, (7, 1), (1, 1), (3, 0), bias=False),
                BN_Conv2d(b1, b1, 3, 2, 0, bias=False)
            )

            # reduce, 3x3, 2 sub-branch of 1x3, 3x1
            self.branch1_type5_head = nn.Sequential(
                BN_Conv2d(in_channels, b1_reduce, 1, 1, 0, bias=False),
                BN_Conv2d(b1_reduce, b1, 3, 1, 1, bias=False)
            )
            self.branch1_type5_body1 = BN_Conv2d(b1, b1, (1, 3), (1, 1), (0, 1), bias=False)
            self.branch1_type5_body2 = BN_Conv2d(b1, b1, (3, 1), (1, 1), (1, 0), bias=False)

            # ---------- branch 2 ----------

            # reduce, 5x5
            self.branch2_type1 = nn.Sequential(
                BN_Conv2d(in_channels, b2_reduce, 1, 1, 0, bias=False),
                BN_Conv2d(b2_reduce, b2, 5, 1, 2, bias=False)
            )

            # 3x3_s2
            self.branch2_type2 = BN_Conv2d(in_channels, b2, 3, 2, 0, bias=False)

            # reduce, 1x7, 7x1
            self.branch2_type3 = nn.Sequential(
                BN_Conv2d(in_channels, b2_reduce, 1, 1, 0, bias=False),
                BN_Conv2d(b2_reduce, b2_reduce, (1, 7), (1, 1), (0, 3), bias=False),
                BN_Conv2d(b2_reduce, b2, (7, 1), (1, 1), (3, 0), bias=False)
            )

            # reduce, 3x3_s2
            self.branch2_type4 = nn.Sequential(
                BN_Conv2d(in_channels, b2_reduce, 1, 1, 0, bias=False),
                BN_Conv2d(b2_reduce, b2, 3, 2, 0, bias=False)
            )

            # reduce, 2 sub-branch of 1x3, 3x1
            self.branch2_type5_head = BN_Conv2d(in_channels, b2_reduce, 1, 1, 0, bias=False)
            self.branch2_type5_body1 = BN_Conv2d(b2_reduce, b2, (1, 3), (1, 1), (0, 1), bias=False)
            self.branch2_type5_body2 = BN_Conv2d(b2_reduce, b2, (3, 1), (1, 1), (1, 0), bias=False)

            # ---------- branch 3 ----------

            # avg pool, 1x1
            self.branch3 = nn.Sequential(
                nn.AvgPool2d(3, 1, 1),
                BN_Conv2d(in_channels, b3, 1, 1, 0, bias=False)
            )

            # ---------- branch 4 ----------

            # 1x1
            self.branch4 = BN_Conv2d(in_channels, b4, 1, 1, 0, bias=False)

        def forward(self, x):
            if self.block_type == "type1":
                out1 = self.branch1_type1(x)
                out2 = self.branch2_type1(x)
                out3 = self.branch3(x)
                out4 = self.branch4(x)
                out = torch.cat((out1, out2, out3, out4), 1)
            elif self.block_type == "type2":
                out1 = self.branch1_type2(x)
                out2 = self.branch2_type2(x)
                out3 = F.max_pool2d(x, 3, 2, 0)  # stride-2 max pool instead of branch3/branch4
                out = torch.cat((out1, out2, out3), 1)
            elif self.block_type == "type3":
                out1 = self.branch1_type3(x)
                out2 = self.branch2_type3(x)
                out3 = self.branch3(x)
                out4 = self.branch4(x)
                out = torch.cat((out1, out2, out3, out4), 1)
            elif self.block_type == "type4":
                out1 = self.branch1_type4(x)
                out2 = self.branch2_type4(x)
                out3 = F.max_pool2d(x, 3, 2, 0)  # stride-2 max pool instead of branch3/branch4
                out = torch.cat((out1, out2, out3), 1)
            else:  # type5
                tmp = self.branch1_type5_head(x)
                out1_1 = self.branch1_type5_body1(tmp)
                out1_2 = self.branch1_type5_body2(tmp)
                tmp = self.branch2_type5_head(x)
                out2_1 = self.branch2_type5_body1(tmp)
                out2_2 = self.branch2_type5_body2(tmp)
                out3 = self.branch3(x)
                out4 = self.branch4(x)
                out = torch.cat((out1_1, out1_2, out2_1, out2_2, out3, out4), 1)
            return out
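
The forward pass above concatenates a different set of branches for each block type, so the output width depends on the type. As a sanity check, this sketch derives the width of every block from the arguments passed in the Inception_v3 constructor in this section. The function and list names are hypothetical helpers for the illustration, and the reduce arguments are left out because they do not affect the output width.

```python
def block_out_channels(block_type, in_channels, b1, b2, b3, b4):
    """Output channels of a Block_bank block, following its forward pass."""
    if block_type in ("type1", "type3"):
        return b1 + b2 + b3 + b4
    if block_type in ("type2", "type4"):
        # The third branch is a plain max pool, which keeps in_channels;
        # b3 and b4 are not used by these block types.
        return b1 + b2 + in_channels
    if block_type == "type5":
        # branch1 and branch2 each split into two sub-branches
        return 2 * b1 + 2 * b2 + b3 + b4
    raise ValueError(f"unknown block type: {block_type}")


# (block_type, in_channels, b1, b2, b3, b4) from the Inception_v3 constructor
V3_BLOCKS = [
    ("type1", 192, 96, 64, 32, 64),
    ("type1", 256, 96, 64, 64, 64),
    ("type1", 288, 96, 64, 64, 64),
    ("type2", 288, 96, 384, 288, 288),
    ("type3", 768, 192, 192, 192, 192),
    ("type3", 768, 192, 192, 192, 192),
    ("type3", 768, 192, 192, 192, 192),
    ("type3", 768, 192, 192, 192, 192),
    ("type4", 768, 192, 320, 288, 288),
    ("type5", 1280, 384, 384, 192, 320),
    ("type5", 2048, 384, 384, 192, 320),
]

v3_widths = [block_out_channels(*block) for block in V3_BLOCKS]
# each block's output width must equal the next block's in_channels
for width, following in zip(v3_widths, V3_BLOCKS[1:]):
    assert width == following[1]
print(v3_widths)
```

The final width matches the input size of the fully connected layer, nn.Linear(2048, num_classes).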

2. The Inception-v3 network class

    class Inception_v3(nn.Module):
        """Inception-v3, following the structure in model.txt."""

        def __init__(self, num_classes):
            super(Inception_v3, self).__init__()
            self.conv = BN_Conv2d(3, 32, 3, 2, 0, bias=False)
            self.conv1 = BN_Conv2d(32, 32, 3, 1, 0, bias=False)
            self.conv2 = BN_Conv2d(32, 64, 3, 1, 1, bias=False)
            self.conv3 = BN_Conv2d(64, 80, 1, 1, 0, bias=False)
            self.conv4 = BN_Conv2d(80, 192, 3, 1, 0, bias=False)
            # arguments: block_type, in_channels, b1_reduce, b1, b2_reduce, b2, b3, b4
            self.inception1_1 = Block_bank("type1", 192, 64, 96, 48, 64, 32, 64)
            self.inception1_2 = Block_bank("type1", 256, 64, 96, 48, 64, 64, 64)
            self.inception1_3 = Block_bank("type1", 288, 64, 96, 48, 64, 64, 64)
            self.inception2 = Block_bank("type2", 288, 64, 96, 288, 384, 288, 288)
            self.inception3_1 = Block_bank("type3", 768, 128, 192, 128, 192, 192, 192)
            self.inception3_2 = Block_bank("type3", 768, 160, 192, 160, 192, 192, 192)
            self.inception3_3 = Block_bank("type3", 768, 160, 192, 160, 192, 192, 192)
            self.inception3_4 = Block_bank("type3", 768, 192, 192, 192, 192, 192, 192)
            self.inception4 = Block_bank("type4", 768, 192, 192, 192, 320, 288, 288)
            self.inception5_1 = Block_bank("type5", 1280, 448, 384, 384, 384, 192, 320)
            self.inception5_2 = Block_bank("type5", 2048, 448, 384, 384, 384, 192, 320)
            self.fc = nn.Linear(2048, num_classes)

        def forward(self, x):
            # shape comments are channels x height x width for a 3 x 299 x 299 input
            out = self.conv(x)                 # 32 x 149 x 149
            out = self.conv1(out)              # 32 x 147 x 147
            out = self.conv2(out)              # 64 x 147 x 147
            out = F.max_pool2d(out, 3, 2, 0)   # 64 x 73 x 73
            out = self.conv3(out)              # 80 x 73 x 73
            out = self.conv4(out)              # 192 x 71 x 71
            out = F.max_pool2d(out, 3, 2, 0)   # 192 x 35 x 35
            out = self.inception1_1(out)       # 256 x 35 x 35
            out = self.inception1_2(out)       # 288 x 35 x 35
            out = self.inception1_3(out)       # 288 x 35 x 35
            out = self.inception2(out)         # 768 x 17 x 17
            out = self.inception3_1(out)       # 768 x 17 x 17
            out = self.inception3_2(out)
            out = self.inception3_3(out)
            out = self.inception3_4(out)
            out = self.inception4(out)         # 1280 x 8 x 8
            out = self.inception5_1(out)       # 2048 x 8 x 8
            out = self.inception5_2(out)       # 2048 x 8 x 8
            out = F.avg_pool2d(out, 8)         # 2048 x 1 x 1
            out = F.dropout(out, 0.2, training=self.training)
            out = out.view(out.size(0), -1)    # flatten to (batch, 2048)
            out = self.fc(out)
            # softmax over the class dimension; dim must be given explicitly
            return F.softmax(out, dim=1)

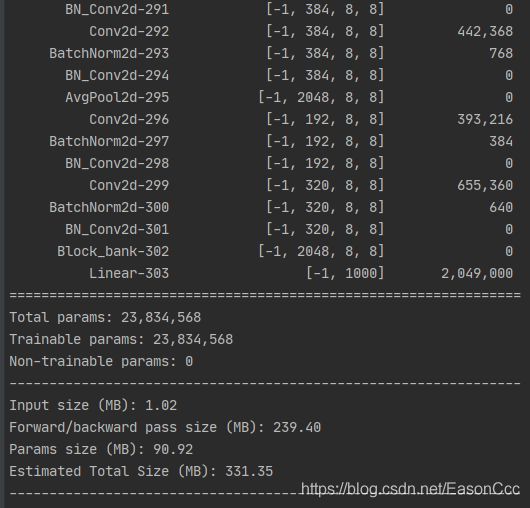
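
The spatial sizes in the forward pass above can be traced by hand with the usual output-size formula, floor((n + 2p - k) / s) + 1. The sketch below lists only the layers that can change the spatial size (the stem and the two stride-2 blocks) with the kernel, stride and padding values used in the code above; the helper names are made up for this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


# (layer, kernel, stride, padding), in the order of Inception_v3.forward;
# type1, type3 and type5 blocks keep the spatial size and are omitted.
SIZE_CHANGING_LAYERS = [
    ("conv", 3, 2, 0),
    ("conv1", 3, 1, 0),
    ("conv2", 3, 1, 1),
    ("max_pool", 3, 2, 0),
    ("conv3", 1, 1, 0),
    ("conv4", 3, 1, 0),
    ("max_pool", 3, 2, 0),
    ("inception2 (type2)", 3, 2, 0),
    ("inception4 (type4)", 3, 2, 0),
]


def trace(size, layers):
    """Return the side length after each layer, starting from `size`."""
    sizes = []
    for _name, kernel, stride, padding in layers:
        size = conv_out(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


sizes_299 = trace(299, SIZE_CHANGING_LAYERS)
sizes_224 = trace(224, SIZE_CHANGING_LAYERS)
print(sizes_299)
print(sizes_224)
```

With a 299x299 input the last feature map is 8x8, which is what F.avg_pool2d(out, 8) expects. With a 224x224 input it is only 5x5, so this network cannot be fed 224x224 images as written.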

3. Building and testing the network

    def inception_v3():
        return Inception_v3(num_classes=1000)


    def test():
        net = inception_v3()
        summary(net, (3, 299, 299))


    test()