Processing the UNSW-NB15 dataset with a decision tree

```python
# Decision tree classifier on UNSW-NB15 (binary target: label, 0 = normal, 1 = attack)
import numpy as np
import pandas as pd
from sklearn import metrics
from sklearn.tree import DecisionTreeClassifier
from sklearn.preprocessing import LabelEncoder, Normalizer
from sklearn.metrics import precision_score, recall_score, f1_score, accuracy_score

# Column names of UNSW_NB15_training-set.csv / UNSW_NB15_testing-set.csv
COLUMNS = ['id', 'dur', 'proto', 'service', 'state', 'spkts', 'dpkts', 'sbytes', 'dbytes',
           'rate', 'sttl', 'dttl', 'sload', 'dload', 'sloss', 'dloss', 'sinpkt', 'dinpkt',
           'sjit', 'djit', 'swin', 'stcpb', 'dtcpb', 'dwin', 'tcprtt', 'synack', 'ackdat',
           'smean', 'dmean', 'trans_depth', 'response_body_len', 'ct_srv_src',
           'ct_state_ttl', 'ct_dst_ltm', 'ct_src_dport_ltm', 'ct_dst_sport_ltm',
           'ct_dst_src_ltm', 'is_ftp_login', 'ct_ftp_cmd', 'ct_flw_http_mthd', 'ct_src_ltm',
           'ct_srv_dst', 'is_sm_ips_ports', 'attack_cat', 'label']

# Note: the testing-set file is used for training and the training-set file for
# testing; swap the two file names to train on the split as labelled.
traindata = pd.read_csv('UNSW_NB15_testing-set.csv', skiprows=1, names=COLUMNS)
testdata = pd.read_csv('UNSW_NB15_training-set.csv', skiprows=1, names=COLUMNS)

# Label encoding: map each distinct text value (proto, service, state, attack_cat)
# to an integer. One encoder per column is fitted on train and test together so
# both sets share the same codes (separate fits can assign different codes).
for column in traindata.columns:
    if not pd.api.types.is_numeric_dtype(traindata[column]):
        le = LabelEncoder()
        le.fit(pd.concat([traindata[column], testdata[column]]))
        traindata[column] = le.transform(traindata[column])
        testdata[column] = le.transform(testdata[column])

# Features: all columns except id, attack_cat and label. attack_cat is excluded
# because it determines label (slicing with iloc[:, 1:44] would include it).
feature_cols = [c for c in COLUMNS if c not in ('id', 'attack_cat', 'label')]
X1, Y1 = traindata[feature_cols], traindata['label']
X2, Y2 = testdata[feature_cols], testdata['label']

# Normalizer rescales each row to unit L2 norm; it keeps no per-dataset state,
# so a single instance can transform both sets.
scaler = Normalizer().fit(X1)
trainX = scaler.transform(X1)
testT = scaler.transform(X2)
trainlabel = np.array(Y1)
testlabel = np.array(Y2)

model = DecisionTreeClassifier()  # decision tree
model.fit(trainX, trainlabel)

expected = testlabel
predicted = model.predict(testT)

accuracy = accuracy_score(expected, predicted)
recall = recall_score(expected, predicted, average="binary")
precision = precision_score(expected, predicted, average="binary")
f1 = f1_score(expected, predicted, average="binary")

# Confusion matrix: rows = true class (0, 1), columns = predicted class (0, 1)
cm = metrics.confusion_matrix(expected, predicted, labels=[0, 1])
print(cm)
tn, fp, fn, tp = cm.ravel()
tpr = tp / (tp + fn)  # true positive rate (same as recall)
fpr = fp / (fp + tn)  # false positive rate: normal records flagged as attacks

print("Accuracy", "%.3f" % accuracy)
print("precision", "%.3f" % precision)
print("recall", "%.3f" % recall)
print("f-score", "%.3f" % f1)
print("fpr", "%.3f" % fpr)
print("tpr", "%.3f" % tpr)
```

————————————————

Decision tree intrusion detection on the UNSW-NB15 dataset: results

accuracy 0.802

precision 0.774

recall 0.906

f-score 0.835
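Since the F-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R), the value above can be cross-checked from the other two. A small sketch (the helper name `f1_from_pr` is our own, not part of the original code):

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (the F1 score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Precision and recall as reported above (rounded to three decimals)
print(round(f1_from_pr(0.774, 0.906), 3))  # → 0.835
```

The recomputed value matches the reported f-score, so the four numbers are mutually consistent.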

Introduction

This post introduces UNSW-NB15, another dataset for intrusion detection, and focuses on describing the dataset itself. An earlier post introduced a different intrusion detection dataset: Introduction to the KDD99 and NSL-KDD datasets

UNSW-NB15 overview

Official website: The UNSW-NB15 Dataset Description

Download link: UNSW-NB15 Download

The dataset contains nine types of attacks, namely Fuzzers, Analysis, Backdoors, DoS, Exploits, Generic, Reconnaissance, Shellcode and Worms.

The dataset has 49 features, each of which is described below.

The total number of records is 2,540,044, stored in four CSV files.

The number of records of each attack type is given in UNSW-NB15_LIST_EVENTS.csv; a brief analysis follows later.

The dataset already comes split into a training set and a testing set, in the files UNSW_NB15_training-set.csv and UNSW_NB15_testing-set.csv.

The training set contains 175,341 records and the testing set 82,332 records, covering both attack and normal traffic. Figures 1 and 2 show the testbed configuration and the feature-creation method of UNSW-NB15, respectively.

UNSW-NB15 features

The dataset has 49 features, each described below. The descriptions are taken from:

- Moustafa, Nour, and Jill Slay. "UNSW-NB15: a comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set)." In 2015 military communications and information systems conference (MilCIS), pp. 1-6. IEEE, 2015.

In the feature descriptions below, the Type abbreviations are:

- N: nominal

- I: integer

- F: float

- T: timestamp

- B: binary
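One way to use these type codes when loading raw records is to map each code to a pandas dtype. The mapping below is our own choice, not part of the dataset; in particular it assumes `T` columns (stime, ltime) hold Unix timestamps in seconds, and columns with missing values would need cleaning before an integer cast. `TYPE_TO_DTYPE` and `apply_types` are hypothetical names:

```python
import pandas as pd

# Hypothetical mapping from the type codes above to pandas dtypes
TYPE_TO_DTYPE = {
    "N": "category",  # nominal
    "I": "int64",     # integer
    "F": "float64",   # float
    "B": "int8",      # binary (0/1)
}

def apply_types(df: pd.DataFrame, types: dict) -> pd.DataFrame:
    """Cast each listed column according to its type code.

    'T' (timestamp) columns are assumed to be Unix times in seconds.
    """
    out = df.copy()
    for col, code in types.items():
        if code == "T":
            out[col] = pd.to_datetime(out[col], unit="s")
        else:
            out[col] = out[col].astype(TYPE_TO_DTYPE[code])
    return out

# Toy rows (not real data) to show the effect
toy = pd.DataFrame({"proto": ["tcp", "udp"], "sbytes": [100, 200],
                    "dur": [0.0, 1.5], "stime": [0, 60], "is_ftp_login": [0, 1]})
typed = apply_types(toy, {"proto": "N", "sbytes": "I", "dur": "F",
                          "stime": "T", "is_ftp_login": "B"})
print(typed.dtypes)
```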

Flow Features

Each entry is listed as: No., Name, Type, Description.

- 1, srcip, N, Source IP address

- 2, sport, I, Source port number

- 3, dstip, N, Destination IP address

- 4, dsport, I, Destination port number

- 5, proto, N, Transaction protocol

Base Features

- 6, state, N, The state and its dependent protocol, e.g. ACC, CLO, else (-)

- 7, dur, F, Record total duration

- 8, sbytes, I, Source to destination bytes

- 9, dbytes, I, Destination to source bytes

- 10, sttl, I, Source to destination time to live

- 11, dttl, I, Destination to source time to live

- 12, sloss, I, Source packets retransmitted or dropped

- 13, dloss, I, Destination packets retransmitted or dropped

- 14, service, N, http, ftp, ssh, dns, ..., else (-)

- 15, sload, F, Source bits per second

- 16, dload, F, Destination bits per second

- 17, spkts, I, Source to destination packet count

- 18, dpkts, I, Destination to source packet count

Content Features

- 19, swin, I, Source TCP window advertisement

- 20, dwin, I, Destination TCP window advertisement

- 21, stcpb, I, Source TCP sequence number

- 22, dtcpb, I, Destination TCP sequence number

- 23, smeansz, I, Mean of the flow packet size transmitted by the src

- 24, dmeansz, I, Mean of the flow packet size transmitted by the dst

- 25, trans_depth, I, The depth into the connection of the http request/response transaction

- 26, res_bdy_len, I, The content size of the data transferred from the server's http service.

Time Features

- 27, sjit, F, Source jitter (mSec)

- 28, djit, F, Destination jitter (mSec)

- 29, stime, T, Record start time

- 30, ltime, T, Record last time

- 31, sintpkt, F, Source inter-packet arrival time (mSec)

- 32, dintpkt, F, Destination inter-packet arrival time (mSec)

- 33, tcprtt, F, The sum of 'synack' and 'ackdat' of the TCP.

- 34, synack, F, The time between the SYN and the SYN_ACK packets of the TCP.

- 35, ackdat, F, The time between the SYN_ACK and the ACK packets of the TCP.
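Feature 33 is defined as the sum of features 34 and 35, which makes for a quick consistency check on loaded data. A minimal pandas sketch; the function name is hypothetical, and the tolerance is there on the assumption that stored values may be rounded:

```python
import numpy as np
import pandas as pd

def tcprtt_mismatches(df: pd.DataFrame, atol: float = 1e-6) -> pd.Index:
    """Return the index labels of rows where tcprtt != synack + ackdat."""
    ok = np.isclose(df["tcprtt"], df["synack"] + df["ackdat"], atol=atol)
    return df.index[~ok]

# Toy rows (not real data): the second row is inconsistent on purpose
toy = pd.DataFrame({"synack": [0.1, 0.2, 0.0],
                    "ackdat": [0.2, 0.3, 0.0],
                    "tcprtt": [0.3, 0.9, 0.0]})
print(list(tcprtt_mismatches(toy)))  # → [1]
```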

Features 1-35 represent the integrated information gathered from the data packets. As reflected above, most of them are generated from packet headers.

Additional Generated Features--General purpose features

Each general-purpose feature serves its own purpose from the defence point of view.

- 36, is_sm_ips_ports, B, If the source (1) and destination (3) IP addresses are equal and the port numbers (2) (4) are equal, this variable takes the value 1, else 0.

- 37, ct_state_ttl, I, No. for each state (6) according to a specific range of values of the source/destination time to live (10) (11).

- 38, ct_flw_http_mthd, I, No. of flows that have methods such as GET and POST in the http service.

- 39, is_ftp_login, B, If the ftp session is accessed by user and password then 1, else 0.

- 40, ct_ftp_cmd, I, No. of flows that have a command in the ftp session.
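Feature 36 can be computed directly from features 1-4. A minimal pandas sketch, assuming a DataFrame with columns srcip, sport, dstip and dsport named as in the list above; the function name is hypothetical and the addresses are documentation-range examples, not dataset records:

```python
import pandas as pd

def add_is_sm_ips_ports(df: pd.DataFrame) -> pd.DataFrame:
    """Add feature 36: 1 if srcip == dstip and sport == dsport, else 0."""
    out = df.copy()
    same = (out["srcip"] == out["dstip"]) & (out["sport"] == out["dsport"])
    out["is_sm_ips_ports"] = same.astype(int)
    return out

toy = pd.DataFrame({
    "srcip": ["192.0.2.1", "192.0.2.1", "192.0.2.1"],
    "sport": [1043, 1043, 1043],
    "dstip": ["192.0.2.1", "192.0.2.1", "198.51.100.7"],
    "dsport": [1043, 80, 1043],
})
print(add_is_sm_ips_ports(toy)["is_sm_ips_ports"].tolist())  # → [1, 0, 0]
```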

Additional Generated Features--Connection features

Connection features are created solely to provide defence in connection-attempt scenarios.

Attackers might scan hosts irregularly, for example once per minute or once per hour. To identify such attackers, features 36-47 are computed on records sorted by the last time feature, capturing similar characteristics of the connection records within each 100 sequentially ordered connections.

- 41, ct_srv_src, I, No. of connections that contain the same service (14) and source address (1) in 100 connections according to the last time (30).

- 42, ct_srv_dst, I, No. of connections that contain the same service (14) and destination address (3) in 100 connections according to the last time (30).

- 43, ct_dst_ltm, I, No. of connections of the same destination address (3) in 100 connections according to the last time (30).

- 44, ct_src_ltm, I, No. of connections of the same source address (1) in 100 connections according to the last time (30).

- 45, ct_src_dport_ltm, I, No. of connections of the same source address (1) and the destination port (4) in 100 connections according to the last time (30).

- 46, ct_dst_sport_ltm, I, No. of connections of the same destination address (3) and the source port (2) in 100 connections according to the last time (30).

- 47, ct_dst_src_ltm, I, No. of connections of the same source (1) and destination (3) address in 100 connections according to the last time (30).
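The connection features count matching records within a window of 100 connections ordered by the last time. The description leaves some details open (whether the window slides or is split into fixed blocks, whether it includes the current record, how ties in last time are broken), so the sketch below assumes a sliding window made of the current record and the 99 records before it. `window_counts` is a hypothetical helper; its key function selects which fields must match, e.g. (service, srcip) for ct_srv_src or dstip for ct_dst_ltm:

```python
from collections import Counter, deque

def window_counts(records, key, window=100):
    """For each record, count how many of the last `window` records
    (current one included) share the same key. Records must already be
    sorted by last time (ltime)."""
    recent = deque()      # keys of the records currently in the window
    counts = Counter()    # key -> occurrences inside the window
    result = []
    for rec in records:
        k = key(rec)
        recent.append(k)
        counts[k] += 1
        if len(recent) > window:      # drop the oldest record
            counts[recent.popleft()] -= 1
        result.append(counts[k])
    return result

# Toy example with a window of 3 instead of 100
toy = [{"ltime": t, "dstip": ip} for t, ip in
       enumerate(["a", "a", "b", "a", "a"])]
toy.sort(key=lambda r: r["ltime"])
print(window_counts(toy, key=lambda r: r["dstip"], window=3))  # → [1, 2, 1, 2, 2]
```

A deque plus a Counter keeps each step O(1), which matters over 2.5 million records; a fixed-block interpretation would instead reset both structures every 100 records.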

Labelled Features

- 48, attack_cat, N, The name of each attack category. This data set has nine attack categories (Fuzzers, Analysis, Backdoors, DoS, Exploits, Generic, Reconnaissance, Shellcode and Worms); together with Normal, there are 10 classes in total.

- 49, Label, B, 0 for normal and 1 for attack records
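Because Label is fully determined by attack_cat (Normal gives 0, any of the nine attack categories gives 1), attack_cat should not be used as an input feature when the target is Label; otherwise the answer leaks into the features. A minimal sketch of the relationship; the helper name is hypothetical, and it assumes normal records carry either the string "Normal" or an empty/missing attack_cat:

```python
import pandas as pd

def label_from_attack_cat(attack_cat: pd.Series) -> pd.Series:
    """Derive label (0 = normal, 1 = attack) from attack_cat."""
    cat = attack_cat.fillna("").astype(str).str.strip()
    is_normal = cat.eq("") | cat.str.lower().eq("normal")
    return (~is_normal).astype(int)

cats = pd.Series(["Normal", "Exploits", None, " Fuzzers ", "DoS"])
print(label_from_attack_cat(cats).tolist())  # → [0, 1, 0, 1, 1]
```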

UNSW-NB15 data

Record distribution

The list below gives the distribution of all records in the UNSW-NB15 data set. Records fall into two major categories, normal and attack, and the attack records are further classified into nine families according to the nature of the attack.

- (1)Normal: 2,218,761; Natural transaction data.

- (2)Fuzzers: 24,246; Attempting to suspend a program or network by feeding it randomly generated data (fuzzing attacks).

- (3)Analysis: 2,677; Includes various attacks such as port scans, spam and HTML file penetrations.

- (4)Backdoors: 2,329; A technique in which a system security mechanism is bypassed stealthily to access a computer or its data.

- (5)DoS: 16,353; A malicious attempt to make a server or a network resource unavailable to users, usually by temporarily interrupting or suspending the services of a host connected to the Internet.

- (6)Exploits: 44,525; The attacker knows of a security problem within an operating system or a piece of software and leverages that knowledge by exploiting the vulnerability.

- (7)Generic: 215,481; A technique that works against all block ciphers (with a given block and key size), without regard to the structure of the block cipher.

- (8)Reconnaissance: 13,987; Contains all Strikes that can simulate attacks that gather information.

- (9)Shellcode: 1,511; A small piece of code used as the payload in the exploitation of software vulnerability.

- (10)Worms: 174; An attack that replicates itself in order to spread to other computers. It often uses a computer network to spread, relying on security failures on the target computer to access it.
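As a quick check, the per-class counts above add up to the 2,540,044 records stated earlier, and they show how imbalanced the data is: normal traffic makes up roughly 87% of all records, while Worms accounts for less than 0.01%. The counts below are copied from the list; the rest is plain arithmetic:

```python
# Record counts per class, copied from the list above
counts = {
    "Normal": 2_218_761, "Fuzzers": 24_246, "Analysis": 2_677,
    "Backdoors": 2_329, "DoS": 16_353, "Exploits": 44_525,
    "Generic": 215_481, "Reconnaissance": 13_987, "Shellcode": 1_511,
    "Worms": 174,
}
total = sum(counts.values())
attacks = total - counts["Normal"]
print(total, attacks)  # → 2540044 321283

# Share of each class, largest first
for name, n in sorted(counts.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<15}{n:>10,}{100 * n / total:>8.3f}%")
```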

UNSW-NB15 files

Four CSV files of data records are provided, and each contains both attack and normal records. The files are named UNSW-NB15_1.csv, UNSW-NB15_2.csv, UNSW-NB15_3.csv and UNSW-NB15_4.csv.

In each CSV file, the records are ordered by the last time attribute. The first three files each contain 700,000 records and the fourth contains 440,044 records.
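Since the four parts hold 3 × 700,000 + 440,044 = 2,540,044 records in total, they can be concatenated into one table. A minimal pandas sketch, assuming the raw part files have no header row and that you supply the 49 column names yourself (for example from the feature list above); `load_raw_parts` and `FEATURE_NAMES` are hypothetical, and the toy run uses in-memory CSV text instead of the real files:

```python
import io
import pandas as pd

def load_raw_parts(sources, column_names):
    """Read headerless CSV parts (paths or file-like objects) and stack them."""
    parts = [pd.read_csv(src, header=None, names=column_names, low_memory=False)
             for src in sources]
    return pd.concat(parts, ignore_index=True)

# Real use (FEATURE_NAMES: a hypothetical list of the 49 names):
# df = load_raw_parts([f"UNSW-NB15_{i}.csv" for i in range(1, 5)], FEATURE_NAMES)
# assert len(df) == 3 * 700_000 + 440_044  # 2,540,044

# Toy run with in-memory CSV text
demo = load_raw_parts([io.StringIO("1,tcp\n2,udp\n"), io.StringIO("3,tcp\n")],
                      ["sport", "proto"])
print(demo)
```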

The list-of-events file, UNSW-NB15_LIST_EVENTS.csv, contains the attack categories and subcategories.

UNSW-NB15 accuracy analysis

Here we look at the accuracy of various algorithms on the UNSW-NB15 dataset. The results come from the following paper:

- Moustafa, Nour, and Jill Slay. "The evaluation of Network Anomaly Detection Systems: Statistical analysis of the UNSW-NB15 data set and the comparison with the KDD99 data set." Information Security Journal: A Global Perspective 25, no. 1-3 (2016): 18-31. Taylor & Francis.

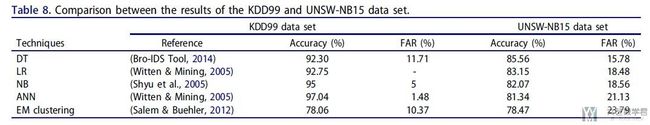

Five techniques are used in the evaluation: Naive Bayes (NB) (Panda & Patra, 2007), Decision Tree (DT) (Bouzida & Cuppens, 2006), Artificial Neural Network (ANN) (Bouzida & Cuppens, 2006; Mukkamala et al., 2005), Logistic Regression (LR) (Mukkamala et al., 2005), and Expectation-Maximization (EM) Clustering (Sharif et al., 2012).

The models are evaluated by accuracy and false alarm rate (FAR). For more on evaluation metrics, see: Model evaluation metrics in theory and practice: the confusion matrix
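Both criteria come straight from the confusion matrix. The paper's exact FAR formula is not reproduced here, and some work defines FAR differently, so this sketch uses the common definition FAR = FP / (FP + TN), the fraction of normal records flagged as attacks. Labels are assumed to be 0 = normal and 1 = attack, and `accuracy_and_far` is a hypothetical helper:

```python
from sklearn.metrics import confusion_matrix

def accuracy_and_far(y_true, y_pred):
    """Accuracy and false alarm rate for binary labels (0 = normal, 1 = attack)."""
    # With labels=[0, 1], ravel() yields tn, fp, fn, tp in that order
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    far = fp / (fp + tn) if (fp + tn) > 0 else 0.0
    return accuracy, far

acc, far = accuracy_and_far([0, 0, 0, 1, 1], [0, 1, 0, 1, 0])
print(round(acc, 3), round(far, 3))  # → 0.6 0.333
```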

The paper's final test results are shown in the figure; accuracy comes out a little under 85%.

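UNSW-NB15 experiments

Below are some experiments run on the UNSW-NB15 dataset; their code can serve as a reference for your own experiments.

A GitHub roundup for this dataset: GitHub roundup: UNSW-NB15 dataset

Preprocessed data (already feature-encoded): Feature coded UNSW_NB15 intrusion detection data.

Processing UNSW-NB15 with SVM and Naive Bayes: UNSW-Network_Packet_Classification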