Repost: A GAN Example, Turning Horses into Zebras [Tested and Working]

This is only a personal record; experts can skip it.

Contents

- References

- Part 1: Source code for dataset preparation

- Part 2: Source code for training and results

- Results

- Notes

References

Thanks to the original author; link to their article.

(This post follows the original author's article above in full.)

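Part 1: Source code for dataset preparation

Folder layout:

The downloaded data can be extracted to any path (that path is set inside c1gan_calcu.py);

c1gan_calcu.py can likewise be created in any location.

1. Download the data.

2. Create the file c1gan_calcu.py.

3. Put the Part 1 source code into c1gan_calcu.py, and write the path of the extracted dataset folder into the file.

4. Run the .py file directly. (This produces the prepared dataset file horse2zebra_256.npz.)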
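Before running the script, it may help to confirm that the extracted folder has the layout the loader expects. The sketch below assumes the four subfolders trainA, testA, trainB and testB that c1gan_calcu.py reads; the helper name `missing_subfolders` is hypothetical and not part of the original code.

```python
from pathlib import Path

# Subfolders read by c1gan_calcu.py (A = horses, B = zebras).
EXPECTED_SUBFOLDERS = ("trainA", "testA", "trainB", "testB")


def missing_subfolders(dataset_root):
    """Return the expected subfolders that are absent under dataset_root."""
    root = Path(dataset_root)
    return [name for name in EXPECTED_SUBFOLDERS if not (root / name).is_dir()]


if __name__ == "__main__":
    # Same example path as in the script; adjust it to your own location.
    missing = missing_subfolders("F:/cycleganhorse/horse2zebra/")
    print("Missing subfolders:", missing or "none")
```

An empty result means all four folders were found, so the loader should not fail on a missing directory.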

Source code of c1gan_calcu.py:

from os import listdir

import numpy as np
from numpy import asarray
from keras.preprocessing.image import img_to_array
from keras.preprocessing.image import load_img

# load all images in a directory into memory
def load_images(path, size=(256, 256)):
    data_list = list()
    # enumerate filenames in directory, assume all are images
    for filename in listdir(path):
        # load and resize the image
        pixels = load_img(path + filename, target_size=size)
        # convert to numpy array
        pixels = img_to_array(pixels)
        # store
        data_list.append(pixels)
    return asarray(data_list)

# dataset path (must end with a slash, because filenames are appended directly)
path = 'F:/cycleganhorse/horse2zebra/'

# load dataset A (train and test images are merged into one array)
dataA1 = load_images(path + 'trainA/')
dataA2 = load_images(path + 'testA/')
dataA = np.vstack((dataA1, dataA2))
print('Loaded dataA: ', dataA.shape)

# load dataset B
dataB1 = load_images(path + 'trainB/')
dataB2 = load_images(path + 'testB/')
dataB = np.vstack((dataB1, dataB2))
print('Loaded dataB: ', dataB.shape)

# save as compressed numpy array (written to the current working directory)
filename = 'horse2zebra_256.npz'
np.savez_compressed(filename, dataA, dataB)
print('Saved dataset: ', filename)

# view the images stored in horse2zebra_256.npz
# load and plot the prepared dataset
from numpy import load
from matplotlib import pyplot

# load the dataset (adjust the path if the .npz file was saved elsewhere)
data = load(r'F:\cycleganhorse\horse2zebra_256.npz')
dataA, dataB = data['arr_0'], data['arr_1']
print('Loaded: ', dataA.shape, dataB.shape)

# plot source images
n_samples = 3
for i in range(n_samples):
    pyplot.subplot(2, n_samples, 1 + i)
    pyplot.axis('off')
    pyplot.imshow(dataA[i].astype('uint8'))

# plot target images
for i in range(n_samples):
    pyplot.subplot(2, n_samples, 1 + n_samples + i)
    pyplot.axis('off')
    pyplot.imshow(dataB[i].astype('uint8'))

pyplot.show()

After the script runs, it also displays a few of the images stored in horse2zebra_256.npz.
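The viewing code reads the arrays back under the keys `arr_0` and `arr_1`. That is how `np.savez_compressed` names arrays that are passed positionally. A minimal sketch with tiny stand-in arrays (not the real images) and a temporary file:

```python
import os
import tempfile

import numpy as np

# Tiny stand-ins for the horse (A) and zebra (B) image arrays.
data_a = np.zeros((2, 4, 4, 3), dtype="float32")
data_b = np.ones((3, 4, 4, 3), dtype="float32")

with tempfile.TemporaryDirectory() as tmp_dir:
    npz_path = os.path.join(tmp_dir, "demo.npz")
    # Arrays passed positionally are stored as arr_0, arr_1, ...
    np.savez_compressed(npz_path, data_a, data_b)
    with np.load(npz_path) as data:
        keys = sorted(data.files)
        shape_a, shape_b = data["arr_0"].shape, data["arr_1"].shape

print(keys, shape_a, shape_b)
```

Passing keyword arguments instead, for example `np.savez_compressed(npz_path, horses=data_a, zebras=data_b)`, would store the arrays under those names, but the loading code in this post relies on the default keys.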

Output:

Part 2: Source code for training and results

1. Put the dataset file horse2zebra_256.npz generated in Part 1 in the same folder as c1gan.py.

2. Then run c1gan.py.

Source code of c1gan.py:

import tensorflow as tf
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.initializers import RandomNormal
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import Conv2DTranspose
from tensorflow.keras.layers import Activation
from tensorflow.keras.layers import Concatenate
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import LeakyReLU
from matplotlib import pyplot
import tensorflow_addons as tfa
import numpy as np
from random import random

# define the discriminator model
def define_discriminator(image_shape):
    # weight initialization
    init = RandomNormal(stddev=0.02)
    # source image input
    in_image = Input(shape=image_shape)
    # C64
    d = Conv2D(64, (4,4), strides=(2,2), padding='same', kernel_initializer=init)(in_image)
    d = LeakyReLU(alpha=0.2)(d)
    # C128
    d = Conv2D(128, (4,4), strides=(2,2), padding='same', kernel_initializer=init)(d)
    d = tfa.layers.InstanceNormalization(axis=-1)(d)
    d = LeakyReLU(alpha=0.2)(d)
    # C256
    d = Conv2D(256, (4,4), strides=(2,2), padding='same', kernel_initializer=init)(d)
    d = tfa.layers.InstanceNormalization(axis=-1)(d)
    d = LeakyReLU(alpha=0.2)(d)
    # C512
    d = Conv2D(512, (4,4), strides=(2,2), padding='same', kernel_initializer=init)(d)
    d = tfa.layers.InstanceNormalization(axis=-1)(d)
    d = LeakyReLU(alpha=0.2)(d)
    # second last output layer
    d = Conv2D(512, (4,4), padding='same', kernel_initializer=init)(d)
    d = tfa.layers.InstanceNormalization(axis=-1)(d)
    d = LeakyReLU(alpha=0.2)(d)
    # patch output
    patch_out = Conv2D(1, (4,4), padding='same', kernel_initializer=init)(d)
    # define model
    model = Model(in_image, patch_out)
    # compile model ('learning_rate' replaces the deprecated 'lr' argument)
    model.compile(loss='mse', optimizer=Adam(learning_rate=0.0002, beta_1=0.5), loss_weights=[0.5])
    return model

# generate a resnet block
def resnet_block(n_filters, input_layer):
    # weight initialization
    init = RandomNormal(stddev=0.02)
    # first convolutional layer
    g = Conv2D(n_filters, (3,3), padding='same', kernel_initializer=init)(input_layer)
    g = tfa.layers.InstanceNormalization(axis=-1)(g)
    g = Activation('relu')(g)
    # second convolutional layer
    g = Conv2D(n_filters, (3,3), padding='same', kernel_initializer=init)(g)
    g = tfa.layers.InstanceNormalization(axis=-1)(g)
    # concatenate merge channel-wise with input layer
    g = Concatenate()([g, input_layer])
    return g

# define the standalone generator model
def define_generator(image_shape, n_resnet=9):
    # weight initialization
    init = RandomNormal(stddev=0.02)
    # image input
    in_image = Input(shape=image_shape)
    # c7s1-64
    g = Conv2D(64, (7,7), padding='same', kernel_initializer=init)(in_image)
    g = tfa.layers.InstanceNormalization(axis=-1)(g)
    g = Activation('relu')(g)
    # d128
    g = Conv2D(128, (3,3), strides=(2,2), padding='same', kernel_initializer=init)(g)
    g = tfa.layers.InstanceNormalization(axis=-1)(g)
    g = Activation('relu')(g)
    # d256
    g = Conv2D(256, (3,3), strides=(2,2), padding='same', kernel_initializer=init)(g)
    g = tfa.layers.InstanceNormalization(axis=-1)(g)
    g = Activation('relu')(g)
    # R256
    for _ in range(n_resnet):
        g = resnet_block(256, g)
    # u128
    g = Conv2DTranspose(128, (3,3), strides=(2,2), padding='same', kernel_initializer=init)(g)
    g = tfa.layers.InstanceNormalization(axis=-1)(g)
    g = Activation('relu')(g)
    # u64
    g = Conv2DTranspose(64, (3,3), strides=(2,2), padding='same', kernel_initializer=init)(g)
    g = tfa.layers.InstanceNormalization(axis=-1)(g)
    g = Activation('relu')(g)
    # c7s1-3
    g = Conv2D(3, (7,7), padding='same', kernel_initializer=init)(g)
    g = tfa.layers.InstanceNormalization(axis=-1)(g)
    out_image = Activation('tanh')(g)
    # define model
    model = Model(in_image, out_image)
    return model

# define a composite model for updating generators by adversarial and cycle loss
# (the inline comments assume g_model_1 is the A->B generator)
def define_composite_model(g_model_1, d_model, g_model_2, image_shape):
    # ensure the model we're updating is trainable
    g_model_1.trainable = True
    # mark discriminator as not trainable
    d_model.trainable = False
    # mark other generator model as not trainable
    g_model_2.trainable = False
    # discriminator element
    input_gen = Input(shape=image_shape)
    gen1_out = g_model_1(input_gen)  # A -> B
    output_d = d_model(gen1_out)  # discriminator judges the generated B
    # identity element
    input_id = Input(shape=image_shape)
    output_id = g_model_1(input_id)  # B -> B (identity mapping)
    # forward cycle
    output_f = g_model_2(gen1_out)  # A -> B -> A
    # backward cycle
    gen2_out = g_model_2(input_id)  # B -> A
    output_b = g_model_1(gen2_out)  # B -> A -> B
    # define model graph
    model = Model([input_gen, input_id], [output_d, output_id, output_f, output_b])
    # define optimization algorithm configuration
    opt = Adam(learning_rate=0.0002, beta_1=0.5)
    # compile model with weighting of least squares loss and L1 loss
    model.compile(loss=['mse', 'mae', 'mae', 'mae'], loss_weights=[1, 5, 10, 10], optimizer=opt)
    return model

# load and prepare training images
def load_real_samples(filename):
    # load the dataset
    data = np.load(filename)
    # unpack arrays
    X1, X2 = data['arr_0'], data['arr_1']
    # scale from [0,255] to [-1,1]
    X1 = (X1 - 127.5) / 127.5
    X2 = (X2 - 127.5) / 127.5
    return [X1, X2]

# select a batch of random samples, returns images and target
def generate_real_samples(dataset, n_samples, patch_shape):
    # choose random instances
    ix = np.random.randint(0, dataset.shape[0], n_samples)
    # retrieve selected images
    X = dataset[ix]
    # generate 'real' class labels (1)
    y = np.ones((n_samples, patch_shape, patch_shape, 1))  # (1, 16, 16, 1)
    return X, y

# generate a batch of images, returns images and targets
def generate_fake_samples(g_model, dataset, patch_shape):
    # generate fake instance
    X = g_model.predict(dataset)
    # create 'fake' class labels (0)
    y = np.zeros((len(X), patch_shape, patch_shape, 1))  # (1, 16, 16, 1)
    return X, y

# save the generator models to file
def save_models(step, g_model_AtoB, g_model_BtoA):
    # save the first generator model
    filename1 = 'g_model_AtoB_%06d.h5' % (step+1)
    g_model_AtoB.save(filename1)
    # save the second generator model
    filename2 = 'g_model_BtoA_%06d.h5' % (step+1)
    g_model_BtoA.save(filename2)
    print('>Saved: %s and %s' % (filename1, filename2))

# generate samples and save them as a plot
def summarize_performance(step, g_model, trainX, name, n_samples=5):
    # select a sample of input images (labels are unused, so patch_shape is 0)
    X_in, _ = generate_real_samples(trainX, n_samples, 0)
    # generate translated images
    X_out, _ = generate_fake_samples(g_model, X_in, 0)
    # scale all pixels from [-1,1] to [0,1]
    X_in = (X_in + 1) / 2.0
    X_out = (X_out + 1) / 2.0
    # plot real images
    for i in range(n_samples):
        pyplot.subplot(2, n_samples, 1 + i)
        pyplot.axis('off')
        pyplot.imshow(X_in[i])
    # plot translated images
    for i in range(n_samples):
        pyplot.subplot(2, n_samples, 1 + n_samples + i)
        pyplot.axis('off')
        pyplot.imshow(X_out[i])
    # save plot to file
    filename1 = '%s_generated_plot_%06d.png' % (name, (step+1))
    pyplot.savefig(filename1)
    pyplot.close()

# update image pool for fake images
def update_image_pool(pool, images, max_size=50):
    selected = list()
    for image in images:
        if len(pool) < max_size:
            # stock the pool
            pool.append(image)
            selected.append(image)
        elif random() < 0.5:
            # use image, but don't add it to the pool
            selected.append(image)
        else:
            # replace an existing image and use replaced image
            ix = np.random.randint(0, len(pool))
            selected.append(pool[ix])
            pool[ix] = image
    return np.asarray(selected)

# train cyclegan models
def train(d_model_A, d_model_B, g_model_AtoB, g_model_BtoA, c_model_AtoB, c_model_BtoA, dataset):
    # define properties of the training run
    n_epochs, n_batch = 100, 1
    # determine the output square shape of the discriminator
    n_patch = d_model_A.output_shape[1]  # 16
    # unpack dataset
    trainA, trainB = dataset
    # prepare image pool for fakes
    poolA, poolB = list(), list()
    # calculate the number of batches per training epoch
    bat_per_epo = int(len(trainA) / n_batch)  # 1187
    # calculate the number of training iterations
    n_steps = bat_per_epo * n_epochs  # 1187 * 100
    # manually enumerate training steps
    for i in range(n_steps):
        # select a batch of real samples (here n_batch = 1, n_patch = 16)
        X_realA, y_realA = generate_real_samples(trainA, n_batch, n_patch)
        X_realB, y_realB = generate_real_samples(trainB, n_batch, n_patch)
        # generate a batch of fake samples
        X_fakeA, y_fakeA = generate_fake_samples(g_model_BtoA, X_realB, n_patch)  # B -> A
        X_fakeB, y_fakeB = generate_fake_samples(g_model_AtoB, X_realA, n_patch)  # A -> B
        # update fakes from pool
        X_fakeA = update_image_pool(poolA, X_fakeA)
        X_fakeB = update_image_pool(poolB, X_fakeB)
        # update generator B->A via adversarial and cycle loss
        g_loss2, _, _, _, _ = c_model_BtoA.train_on_batch([X_realB, X_realA], [y_realA, X_realA, X_realB, X_realA])
        # update discriminator for A -> [real/fake]
        dA_loss1 = d_model_A.train_on_batch(X_realA, y_realA)
        dA_loss2 = d_model_A.train_on_batch(X_fakeA, y_fakeA)
        # update generator A->B via adversarial and cycle loss
        g_loss1, _, _, _, _ = c_model_AtoB.train_on_batch([X_realA, X_realB], [y_realB, X_realB, X_realA, X_realB])
        # update discriminator for B -> [real/fake]
        dB_loss1 = d_model_B.train_on_batch(X_realB, y_realB)
        dB_loss2 = d_model_B.train_on_batch(X_fakeB, y_fakeB)
        # summarize performance
        print('>%d, dA[%.3f,%.3f] dB[%.3f,%.3f] g[%.3f,%.3f]' % (i+1, dA_loss1,dA_loss2, dB_loss1,dB_loss2, g_loss1,g_loss2))
        # evaluate the model performance once per epoch
        if (i+1) % (bat_per_epo * 1) == 0:
            # plot A->B translation
            summarize_performance(i, g_model_AtoB, trainA, 'AtoB')
            # plot B->A translation
            summarize_performance(i, g_model_BtoA, trainB, 'BtoA')
        # save the models every 5 epochs
        if (i+1) % (bat_per_epo * 5) == 0:
            save_models(i, g_model_AtoB, g_model_BtoA)

if __name__ == '__main__':
    # load image data
    dataset = load_real_samples('horse2zebra_256.npz')
    print('Loaded', dataset[0].shape, dataset[1].shape)
    # define input shape based on the loaded dataset
    image_shape = dataset[0].shape[1:]
    # generator: A -> B
    g_model_AtoB = define_generator(image_shape)
    # generator: B -> A
    g_model_BtoA = define_generator(image_shape)
    # discriminator: A -> [real/fake]
    d_model_A = define_discriminator(image_shape)
    # discriminator: B -> [real/fake]
    d_model_B = define_discriminator(image_shape)
    # composite: A -> B -> [real/fake, A]
    c_model_AtoB = define_composite_model(g_model_AtoB, d_model_B, g_model_BtoA, image_shape)
    # composite: B -> A -> [real/fake, B]
    c_model_BtoA = define_composite_model(g_model_BtoA, d_model_A, g_model_AtoB, image_shape)
    # train models
    train(d_model_A, d_model_B, g_model_AtoB, g_model_BtoA, c_model_AtoB, c_model_BtoA, dataset)
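The comments in train() mention a 16x16 patch and 1187 batches per epoch. Both numbers can be reproduced with plain arithmetic, assuming 256x256 inputs and the 1187 domain-A images noted in the code comments. With padding='same', each stride-2 convolution produces ceil(n / 2) outputs along a spatial dimension, and the two stride-1 convolutions at the end of the discriminator keep the size unchanged. The function names below are hypothetical.

```python
import math


def patch_size(image_size=256, n_strided_convs=4):
    """Spatial size of the discriminator output with padding='same'."""
    size = image_size
    for _ in range(n_strided_convs):
        # A stride-2 'same' convolution yields ceil(size / 2) outputs.
        size = math.ceil(size / 2)
    return size


def total_steps(n_images=1187, n_batch=1, n_epochs=100):
    """Number of training iterations, computed the same way as in train()."""
    bat_per_epo = int(n_images / n_batch)
    return bat_per_epo * n_epochs


print(patch_size())   # 16
print(total_steps())  # 118700
```

This is why the real and fake labels have shape (1, 16, 16, 1): the discriminator scores one 16x16 grid of patches per image rather than a single value.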

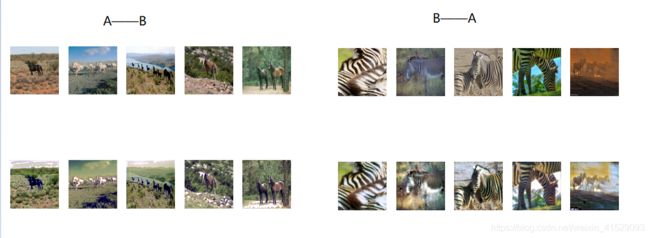

Results

Fake images generated after running the Part 2 code: horse to zebra, and zebra to horse.
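The saved plots are drawn after rescaling images from the [-1, 1] range used during training to [0, 1] for display. A small sketch of the two mappings used in the Part 2 code, applied to a few sample pixel values rather than real images:

```python
import numpy as np

pixels = np.array([0.0, 127.5, 255.0])

# Training input: [0, 255] -> [-1, 1], as in load_real_samples().
scaled = (pixels - 127.5) / 127.5

# Plotting: [-1, 1] -> [0, 1], as in summarize_performance().
display = (scaled + 1) / 2.0

print(scaled.tolist())   # [-1.0, 0.0, 1.0]
print(display.tolist())  # [0.0, 0.5, 1.0]
```

The [-1, 1] range matches the tanh activation at the generator output, so real and generated images share the same scale.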

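Notes

I ran the Part 1 code in PyCharm on my own Hasee (神舟) laptop, which produced the dataset file horse2zebra_256.npz;

the Part 2 code was run on a Linux server.

The Part 2 code ran on the CPU, and one epoch took about 10 hours. With 100 epochs in total, a full training run would need roughly 1,000 hours, which is more than a month. I do not know whether this is because the TensorFlow installed on the Linux server is the CPU build, or whether TensorFlow needs to be reinstalled as the GPU build and CUDA configured afterwards.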
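One way to tell whether the installed TensorFlow can see a GPU is to list the physical devices. This is a minimal sketch assuming TensorFlow 2.x, where `tf.config.list_physical_devices` is available; the helper name `visible_gpus` is hypothetical. An empty list suggests that training will run on the CPU, although it does not say whether the cause is a CPU-only build or a missing CUDA setup.

```python
def visible_gpus():
    """Return the names of GPUs TensorFlow can see (empty list if none)."""
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed in this environment.
        return []
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = visible_gpus()
    print("GPUs visible to TensorFlow:", gpus if gpus else "none")
```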