Image Processing 004: Filtering


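Just like one-dimensional signals, two-dimensional images can be filtered with low-pass filters (LPF) and high-pass filters (HPF). A low-pass filter suppresses rapid intensity changes, which removes noise and blurs the image. A high-pass filter keeps those rapid changes, which makes edges stand out.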
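To make the low-pass/high-pass distinction concrete, here is a minimal NumPy sketch (not OpenCV code) that slides a 3×3 box kernel (low-pass) and a 3×3 Laplacian-style kernel (high-pass) over a small synthetic image containing a vertical step edge. The helper `correlate_valid` and the test image are illustrative assumptions; border pixels are simply skipped here.

```python
import numpy as np


def correlate_valid(img, kernel):
    """Slide `kernel` over `img` and return the weighted sum at every position
    where the kernel fits entirely inside the image (no border handling)."""
    kh, kw = kernel.shape
    out_h = img.shape[0] - kh + 1
    out_w = img.shape[1] - kw + 1
    out = np.empty((out_h, out_w), dtype=np.float64)
    for y in range(out_h):
        for x in range(out_w):
            out[y, x] = np.sum(img[y:y + kh, x:x + kw] * kernel)
    return out


# 5x6 test image with a vertical step edge: left half 0, right half 100
img = np.zeros((5, 6))
img[:, 3:] = 100

# Low-pass: 3x3 box (mean) kernel, weights sum to 1
lpf = np.ones((3, 3)) / 9.0
# High-pass: 3x3 Laplacian-style kernel, weights sum to 0
hpf = np.array([[0, -1, 0],
                [-1, 4, -1],
                [0, -1, 0]], dtype=np.float64)

smoothed = correlate_valid(img, lpf)
edges = correlate_valid(img, hpf)
print(smoothed[0])  # approximately 0, 33.3, 66.7, 100: the sharp jump becomes a ramp
print(edges[0])     # 0, -100, 100, 0: nonzero only next to the edge
```

The box kernel spreads the 0→100 jump over several pixels (blurring), while the high-pass kernel outputs zero in flat regions and responds only on either side of the edge.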

Theoretical Background

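Correlation: loosely speaking, the operation performed between each region of the image and a kernel. The kernel is slid over the image, and at each position the overlapping values are multiplied and summed.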

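Kernel: essentially a fixed-size numeric array with an anchor point, normally at its center (see figure). In image processing, the kernel defines which neighboring pixels, and with what weights, are used to compute the new value of the current pixel. Kernels are usually square with an odd side length, such as 3×3, 5×5, or 7×7, so that a single center element exists.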
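A minimal sketch of such a kernel, assuming NumPy: `box_kernel` is a hypothetical helper that builds the normalized 7×7 mean kernel used by the code in this post and locates its center anchor. The odd-size check reflects the convention that a center element must exist.

```python
import numpy as np


def box_kernel(ksize):
    """Hypothetical helper: normalized ksize x ksize mean kernel (all weights 1/ksize^2)."""
    if ksize % 2 == 0:
        raise ValueError("use an odd kernel size so the anchor can sit at the center")
    return np.ones((ksize, ksize), np.float32) / (ksize * ksize)


kernel = box_kernel(7)
# (x, y) position of the center element, i.e. what anchor = (-1, -1) resolves to
anchor = (kernel.shape[1] // 2, kernel.shape[0] // 2)
print(kernel.shape, anchor)   # (7, 7) (3, 3)
print(float(kernel.sum()))    # about 1.0: weights sum to one, so flat regions keep their brightness
```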

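The image convolution (strictly speaking, correlation) is computed as follows:

1. Place the kernel's anchor on the first pixel of the image (the top-left pixel at (0,0)); the other kernel elements cover the corresponding neighboring pixels. Kernel elements that fall outside the image are filled in according to a border rule (see `borderType` below).
2. Multiply each kernel element by the image pixel it covers, and take the sum of these products as the new value of the pixel under the anchor.
3. Move the anchor to the next pixel and repeat step 2 until every pixel in the image has been processed.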
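The three steps above can be sketched directly as nested loops. This is an illustration, assuming NumPy, with a hypothetical `correlate2d` helper; pixels outside the image are treated as 0 for simplicity, which differs from OpenCV's default border handling. Feeding in a single bright pixel (an impulse) shows two things: the output around it is the kernel rotated by 180° (a hallmark of correlation rather than convolution), and moving the anchor shifts where the result lands.

```python
import numpy as np


def correlate2d(img, kernel, anchor=None):
    """Hypothetical helper implementing the steps literally.
    anchor is (x, y) inside the kernel, like OpenCV's Point; None means the center.
    Pixels outside the image are treated as 0 (zero padding)."""
    kh, kw = kernel.shape
    ax, ay = anchor if anchor is not None else (kw // 2, kh // 2)
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for y in range(h):                      # step 3: visit every pixel
        for x in range(w):
            acc = 0.0
            for j in range(kh):             # step 2: multiply covered pixels and sum
                for i in range(kw):
                    yy, xx = y + j - ay, x + i - ax
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += img[yy, xx] * kernel[j, i]
            out[y, x] = acc                 # result stored at the anchor position
    return out


# Usage: an impulse (single bright pixel) at the center of a 5x5 image
img = np.zeros((5, 5))
img[2, 2] = 1.0
k = np.arange(1, 10, dtype=np.float64).reshape(3, 3)   # asymmetric kernel 1..9

centered = correlate2d(img, k)               # anchor at the kernel center
corner = correlate2d(img, k, anchor=(0, 0))  # anchor at the kernel's top-left element
print(centered[1:4, 1:4])  # the kernel rotated by 180 degrees, centered on (2, 2)
print(corner[0:3, 0:3])    # the same pattern, shifted one pixel up and to the left
```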

These steps can be written as the following formula:

$$H(x,y) = \sum_{i=0}^{M_i-1} \sum_{j=0}^{M_j-1} I(x+i-a_i,\ y+j-a_j)\,K(i,j)$$

where $I$ is the input image, $H$ the output image, $K$ the $M_i \times M_j$ kernel ($i$ runs along $x$, $j$ along $y$), and $(a_i, a_j)$ the position of the anchor inside the kernel.
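A worked example with made-up numbers: take a 3×3 mean kernel $K(i,j) = 1/9$, so $M_i = M_j = 3$ and the anchor is at the center, $a_i = a_j = 1$. If the 3×3 neighborhood of $(x, y)$ holds the values 10, 20, 30, 40, 50, 60, 70, 80, 90 (with 50 at $(x, y)$ itself), the formula gives:

$$H(x,y) = \sum_{i=0}^{2} \sum_{j=0}^{2} \frac{1}{9}\, I(x+i-1,\ y+j-1) = \frac{10+20+30+40+50+60+70+80+90}{9} = \frac{450}{9} = 50$$

So a normalized box kernel replaces each pixel with the mean of its neighborhood.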

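OpenCV provides the `cv::filter2D()` function (`cv.filter2D()` in Python) for filtering. Its C++ signature is:

```cpp
void cv::filter2D(InputArray src,                 // source image (2-D array)
                  OutputArray dst,                // destination image: same size and number of channels as src
                                                  // (same type when ddepth = -1)
                  int ddepth,                     // desired depth of dst; -1 means the same depth as src
                  InputArray kernel,              // kernel: a single-channel floating-point matrix; to use a
                                                  // different kernel per channel, split() the image first and
                                                  // filter each channel separately
                  Point anchor = Point(-1, -1),   // anchor inside the kernel; (-1, -1) means the kernel center
                  double delta = 0,               // optional value added to the filtered pixels before they are stored in dst
                  int borderType = BORDER_DEFAULT // pixel extrapolation method (BORDER_WRAP is not supported)
                  );
```

As the OpenCV documentation points out, `filter2D` actually computes correlation, not convolution: the kernel is not mirrored around the anchor. For symmetric kernels such as the box kernel used below this makes no difference; for a true convolution, flip the kernel with `flip()` and set the anchor to `(kernel.cols - anchor.x - 1, kernel.rows - anchor.y - 1)`.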
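`borderType` decides what the kernel "sees" beyond the image edge, for example at the top-left pixel in step 1. The sketch below, assuming NumPy, shows how several OpenCV border modes extend a short row; the `np.pad` modes are an assumed mapping onto the patterns OpenCV documents (`BORDER_DEFAULT` is `BORDER_REFLECT_101`), not an OpenCV API. `BORDER_WRAP` is left out because `filter2D` does not support it.

```python
import numpy as np

row = np.array([1, 2, 3, 4])
pad = 2  # extend the row by 2 pixels on each side

# OpenCV border mode -> closest np.pad equivalent (assumed mapping, not an OpenCV API)
padded = {
    "BORDER_REFLECT_101 (= BORDER_DEFAULT)": np.pad(row, pad, mode="reflect"),  # gfedcb|abcdefgh|gfedcba
    "BORDER_REFLECT": np.pad(row, pad, mode="symmetric"),                       # fedcba|abcdefgh|hgfedcb
    "BORDER_REPLICATE": np.pad(row, pad, mode="edge"),                          # aaaaaa|abcdefgh|hhhhhhh
    "BORDER_CONSTANT (value 0)": np.pad(row, pad, mode="constant"),             # iiiiii|abcdefgh|iiiiiii
}

for name, values in padded.items():
    print(f"{name:40s} {values.tolist()}")
# Resulting rows, in the same order:
#   [3, 2, 1, 2, 3, 4, 3, 2]
#   [2, 1, 1, 2, 3, 4, 4, 3]
#   [1, 1, 1, 2, 3, 4, 4, 4]
#   [0, 0, 1, 2, 3, 4, 0, 0]
```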

Implementation (C++)

```cpp
#include <opencv2/opencv.hpp>

using namespace cv;

// LinerFilter and logger_info() come from the author's project and are not shown here.

void LinerFilter::filter(const Mat &img) {
    logger_info("======filter========%d===", img.channels());
    Mat img_gray;
    // Convert non-grayscale input to grayscale (OpenCV loads color images as BGR)
    if (img.channels() == 3) {
        cvtColor(img, img_gray, COLOR_BGR2GRAY);
    } else {
        img_gray = img;
    }
    logger_info("======filter========%d===", img_gray.channels());
    // Default anchor, delta = 0
    filter_default_anchor(img_gray);
    // Default anchor, delta = 50
    filter_default_anchor_delta_50(img_gray);
}

void LinerFilter::filter_default_anchor(const Mat &gray_image) {
    logger_info("======filter_default_anchor===========");
    Point anchor = Point(-1, -1);  // (-1, -1): anchor at the kernel center
    double delta = 0;              // nothing is added after filtering
    int ddepth = -1;               // dst keeps the depth of the input
    Mat kernel, dst;
    int kernel_size = 7;
    // 7x7 normalized box (mean) kernel: every weight is 1/49
    kernel = Mat::ones(kernel_size, kernel_size, CV_32F) / (float) (kernel_size * kernel_size);
    // Apply filter
    filter2D(gray_image, dst, ddepth, kernel, anchor, delta, BORDER_DEFAULT);
    imshow("filter_default_anchor", dst);
}

void LinerFilter::filter_default_anchor_delta_50(const Mat &gray_image) {
    logger_info("======filter_default_anchor_delta_50===========");
    Point anchor = Point(-1, -1);  // (-1, -1): anchor at the kernel center
    double delta = 50;             // 50 is added to every filtered pixel (saturated at 255)
    int ddepth = -1;               // dst keeps the depth of the input
    Mat kernel, dst;
    int kernel_size = 7;
    // Same 7x7 normalized box kernel as above
    kernel = Mat::ones(kernel_size, kernel_size, CV_32F) / (float) (kernel_size * kernel_size);
    // Apply filter
    filter2D(gray_image, dst, ddepth, kernel, anchor, delta, BORDER_DEFAULT);
    imshow("filter_default_anchor_delta_50", dst);
}
```
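Why does the `delta = 50` result look brighter, and why do bright areas wash out? A rough NumPy emulation of the call above makes this visible. It assumes a single-channel 8-bit input, the default anchor, and reflect-101 padding for `BORDER_DEFAULT`; the helper `filter2d_u8_sketch` is hypothetical and only approximates OpenCV's behavior. `delta` is added after the weighted sum, and because `ddepth = -1` keeps the 8-bit depth, the result is rounded and saturated to 0..255.

```python
import numpy as np


def filter2d_u8_sketch(gray, kernel, delta=0.0):
    """Hypothetical, approximate emulation of
    filter2D(src, dst, -1, kernel, Point(-1, -1), delta, BORDER_DEFAULT)
    for a single-channel uint8 image. Not OpenCV's implementation."""
    kh, kw = kernel.shape
    ay, ax = kh // 2, kw // 2                        # default anchor: kernel center
    padded = np.pad(gray.astype(np.float64),
                    ((ay, kh - 1 - ay), (ax, kw - 1 - ax)),
                    mode="reflect")                  # reflect-101, like BORDER_DEFAULT
    h, w = gray.shape
    out = np.zeros((h, w), dtype=np.float64)
    for j in range(kh):                              # accumulate shifted, weighted copies
        for i in range(kw):
            out += kernel[j, i] * padded[j:j + h, i:i + w]
    out += delta                                     # delta is added after filtering
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)  # round and saturate to 8 bits


kernel = np.ones((7, 7), np.float32) / 49            # same box kernel as the code above
mid_gray = np.full((10, 10), 100, np.uint8)
bright = np.full((10, 10), 230, np.uint8)
print(filter2d_u8_sketch(mid_gray, kernel, delta=50)[0, 0])  # 150
print(filter2d_u8_sketch(bright, kernel, delta=50)[0, 0])    # 255 (saturated, not 280)
```

In mid-tone regions `delta` acts as a uniform brightness offset, but pixels already near white are clipped at 255, so detail there is lost.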

Implementation (Python)

import cv2 as cv

import matplotlib.pyplot as plt

import numpy as np

from logger import logger

def filter(origin_img):

logger.log.info("====filter=======")

# 非灰度图像转化维灰度图像

if origin_img.shape[2] == 3:

gray_image = cv.cvtColor(origin_img, cv.COLOR_BGR2GRAY)

else:

gray_image = origin_img

default_anchor = filter_default_anchor(gray_image)

default_anchor_delta_20 = filter_default_anchor_delta_50(gray_image);

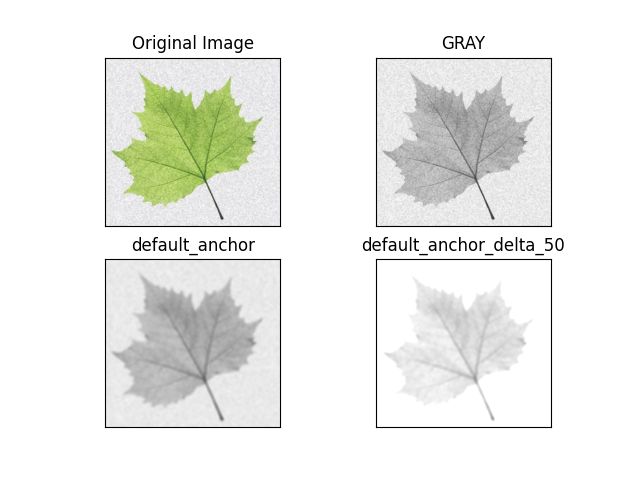

titles = ['Original Image', "GRAY", 'default_anchor', 'default_anchor_delta_50']

images = [cv.cvtColor(origin_img, cv.COLOR_BGR2RGB), gray_image, default_anchor, default_anchor_delta_20]

for i in range(4):

plt.subplot(2, 2, i + 1), plt.imshow(images[i], 'gray', vmin=0, vmax=255)

plt.title(titles[i])

plt.xticks([]), plt.yticks([])

plt.show()

key = cv.waitKey(0)

while key != ord('q'):

key = cv.waitKey(0)

cv.destroyAllWindows()

def filter_default_anchor(gray_image):

kernel = np.ones((7, 7), np.float32) / (7 * 7)

anchor = None

delta = 0

dst = cv.filter2D(gray_image, -1, kernel, None, anchor, delta)

cv.imshow("filter_default_anchor", dst)

return dst

def filter_default_anchor_delta_50(gray_image):

kernel = np.ones((7, 7), np.float32) / (7 * 7)

anchor = None

delta = 50

dst = cv.filter2D(gray_image, -1, kernel, None, anchor, delta)

cv.imshow("filter_default_anchor_delta_50", dst)

return dst

References:

- https://docs.opencv.org/4.6.0/d4/dbd/tutorial_filter_2d.html