Bilinear CNN PyTorch版代码解读

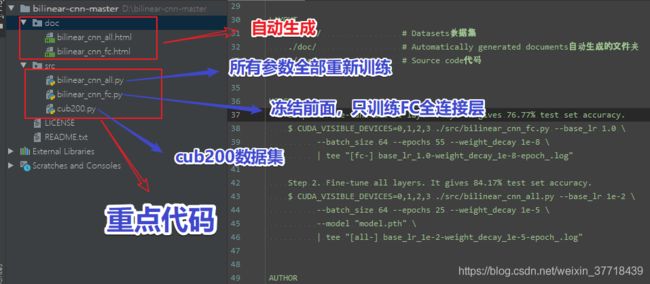

本文是个人对Bilinear CNN的代码的理解,代码来自于Hao Zhang,适用PyTorch 0.3.0,骨干网选择的是vgg16-pool5,应用于CUB200-2011数据集。

1.文件结构

2 bilinear_cnn_all.py

所有的权重参数都要重新微调

#!/usr/bin/env python

# -*- coding: utf-8 -*-

"""Fine-tune all layers for bilinear CNN.

Usage:

CUDA_VISIBLE_DEVICES=0,1,2,3 ./src/bilinear_cnn_all.py --base_lr 0.05 \

--batch_size 64 --epochs 100 --weight_decay 5e-4

"""

import os

import torch

import torchvision

import cub200

torch.manual_seed(0)#为CPU设置种子用于生成随机数,

torch.cuda.manual_seed_all(0))#为当前GPU设置随机种子

__all__ = ['BCNN', 'BCNNManager']

__author__ = 'Hao Zhang'

__copyright__ = '2018 LAMDA'

__date__ = '2018-01-09'

__email__ = '[email protected]'

__license__ = 'CC BY-SA 3.0'

__status__ = 'Development'

__updated__ = '2018-01-13'

__version__ = '1.2'

#在BCNNManager中调用

class BCNN(torch.nn.Module):

"""B-CNN for CUB200.

使用VGG-16,VGG-16的结构如https://blog.csdn.net/weixin_37718439/article/details/104440048

The B-CNN model is illustrated as follows.

conv1^2 (64) -> pool1 -> conv2^2 (128) -> pool2 -> conv3^3 (256) -> pool3

-> conv4^3 (512) -> pool4 -> conv5^3 (512) -> bilinear pooling

-> sqrt-normalize -> L2-normalize -> fc (200).

The network accepts a 3*448*448 input, and the pool5 activation has shape

512*28*28 since we down-sample 5 times.

Attributes:

features, torch.nn.Module: Convolution and pooling layers.

fc, torch.nn.Module: 200.

"""

def __init__(self):

"""Declare all needed layers."""

torch.nn.Module.__init__(self)

# Convolution and pooling layers of VGG-16.

self.features = torchvision.models.vgg16(pretrained=False).features#只导入网络结构,不导入参数:

self.features = torch.nn.Sequential(*list(self.features.children())

[:-1]) # Remove pool5.#https://www.cnblogs.com/lfri/p/10493408.html

# Linear classifier.

self.fc = torch.nn.Linear(512**2, 200)#线性FC层,进行分类

def forward(self, X):

"""Forward pass of the network.

Args:

X, torch.autograd.Variable of shape N*3*448*448.

Returns:

Score, torch.autograd.Variable of shape N*200.

"""

N = X.size()[0]#N是batch size

assert X.size() == (N, 3, 448, 448)#x是(batchsize,channel,448,448)

X = self.features(X)#x经过vgg-pool5提取得到features

assert X.size() == (N, 512, 28, 28)#提取后为(batchsize,512,28,28)

X = X.view(N, 512, 28**2)#整形为(batchsize,512,28X28)

X = torch.bmm(X, torch.transpose(X, 1, 2)) / (28**2) # Bilinear

#开始X为 (N,512,28**2)

#torch.transpose(X, 1, 2)把X转置为 (N,28**2,512)

# torch.bmm(X, torch.transpose(X, 1, 2))乘的结果为 (N,512,512)

assert X.size() == (N, 512, 512)#是否为 (N, 512, 512)

X = X.view(N, 512**2)#调整为(N, 512**2)

X = torch.sqrt(X + 1e-5)

X = torch.nn.functional.normalize(X)#normalize

X = self.fc(X)#全连接,输出为(N, 200)

assert X.size() == (N, 200)#验证时候为(N, 200)

return X#输出全连接处理后的结果

#在main()调用,加载数据

class BCNNManager(object):

"""Manager class to train bilinear CNN.

Attributes:

_options: Hyperparameters.

_path: Useful paths.

_net: Bilinear CNN.

_criterion: Cross-entropy loss.

_solver: SGD with momentum.

_scheduler: Reduce learning rate by a fator of 0.1 when plateau.

_train_loader: Training data.

_test_loader: Testing data.

"""

def __init__(self, options, path):

"""Prepare the network, criterion, solver, and data.

Args:

options, dict: Hyperparameters.

"""

print('Prepare the network and data.')

self._options = options

self._path = path#模型地址

# Network.

self._net = torch.nn.DataParallel(BCNN()).cuda()#调用上面的BCNN,多GPU训练

#加载权重

# Load the model from disk.

self._net.load_state_dict(torch.load(self._path['model']))#加载网络模型参数

print(self._net)

# Criterion.选用损失函数

self._criterion = torch.nn.CrossEntropyLoss().cuda()#使用交叉熵损失函数

# Solver.优化器

self._solver = torch.optim.SGD(

self._net.parameters(), lr=self._options['base_lr'],#基础学习率

momentum=0.9, weight_decay=self._options['weight_decay'])#动量 ,权重衰减

#学习率调度

self._scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(#网络的评价指标不在提升的时候,可以通过降低网络的学习率来提高网络性能

self._solver, mode='max', factor=0.1, patience=3, verbose=True,

threshold=1e-4)

#max表示当监控量停止上升的时候,学习率将减小,默认为min

#factor学习率每次降低多少,new_lr = old_lr * factor

#patience=3,容忍网路的性能不提升的次数,高于这个次数就降低学习率

#verbose(bool) - 如果为True,则为每次更新向stdout输出一条消息。 默认值:False

#threshold(float) - 测量新最佳值的阈值,仅关注重大变化。 默认值:1e-4

#训练图像增广操作

train_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(size=448), # Let smaller edge match调整大小

torchvision.transforms.RandomHorizontalFlip(),#依概率p垂直翻

torchvision.transforms.RandomCrop(size=448),#随机裁剪

torchvision.transforms.ToTensor(),#

torchvision.transforms.Normalize(mean=(0.485, 0.456, 0.406),

std=(0.229, 0.224, 0.225))

])

#测试图像增广操作

test_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(size=448),

torchvision.transforms.CenterCrop(size=448),

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize(mean=(0.485, 0.456, 0.406),

std=(0.229, 0.224, 0.225))

])

#训练数据

train_data = cub200.CUB200(

root=self._path['cub200'], train=True, download=True,

transform=train_transforms)

#test数据

test_data = cub200.CUB200(

root=self._path['cub200'], train=False, download=True,

transform=test_transforms)

#加载train数据

self._train_loader = torch.utils.data.DataLoader(

train_data, batch_size=self._options['batch_size'],

shuffle=True, num_workers=4, pin_memory=True)

#加载test数据

self._test_loader = torch.utils.data.DataLoader(

test_data, batch_size=16,

shuffle=False, num_workers=4, pin_memory=True)

#在main()中被调用

def train(self):

"""Train the network."""

print('Training.')

best_acc = 0.0

best_epoch = None

print('Epoch\tTrain loss\tTrain acc\tTest acc')

for t in range(self._options['epochs']):

epoch_loss = []

num_correct = 0#记录检测正确共多少张图片

num_total = 0#记录检测过多少张图片

for X, y in self._train_loader:

# Data.

X = torch.autograd.Variable(X.cuda())

y = torch.autograd.Variable(y.cuda(async=True))

# Clear the existing gradients.

self._solver.zero_grad()#梯度置0

# Forward pass.

score = self._net(X)#经过改进后的vgg16输出

loss = self._criterion(score, y)#交叉熵损失

epoch_loss.append(loss.data[0])

# Prediction.

_, prediction = torch.max(score.data, 1)#按维度dim 返回最大值

num_total += y.size(0)#记录检测过多少张图片

num_correct += torch.sum(prediction == y.data)#预测和真实数据y相等,则为正确,计算预测正确的数量

# Backward pass.

loss.backward()

self._solver.step()

train_acc = 100 * num_correct / num_total#train正确百分比

test_acc = self._accuracy(self._test_loader)#调用下面的_accuracy函数,计算出当前的test的精度acc

self._scheduler.step(test_acc)#scheduler.step()是对lr进行调整,依据当前的acc来调整学习率

if test_acc > best_acc:#保留最佳的acc

best_acc = test_acc

best_epoch = t + 1

print('*', end='')

print('%d\t%4.3f\t\t%4.2f%%\t\t%4.2f%%' %

(t+1, sum(epoch_loss) / len(epoch_loss), train_acc, test_acc))

print('Best at epoch %d, test accuaray %f' % (best_epoch, best_acc))

#计算当前的精度

def _accuracy(self, data_loader):

"""Compute the train/test accuracy.

Args:

data_loader: Train/Test DataLoader.

Returns:

Train/Test accuracy in percentage.

"""

self._net.train(False)#网络置为测试模式

num_correct = 0#记录测试正确的图像的数目

num_total = 0#记录测试过的图像的数目

for X, y in data_loader:

# Data.加载数据

X = torch.autograd.Variable(X.cuda())

y = torch.autograd.Variable(y.cuda(async=True))

# Prediction.

score = self._net(X)

_, prediction = torch.max(score.data, 1)

num_total += y.size(0)#测试过的图像+1

num_correct += torch.sum(prediction == y.data).item()#预测正确的图像+1

self._net.train(True) # Set the model to training phase 置为训练模式

return 100 * num_correct / num_total#返回测试结果,测试正确率

#没有调用,注释掉了,平均数,和方差计算Compute mean and variance for training data.

#求数据集每个通道的mean和std

def getStat(self):

"""Get the mean and std value for a certain dataset."""

print('Compute mean and variance for training data.')

#加载数据

train_data = cub200.CUB200(

root=self._path['cub200'], train=True,

transform=torchvision.transforms.ToTensor(), download=True)

train_loader = torch.utils.data.DataLoader(

train_data, batch_size=1, shuffle=False, num_workers=4,

pin_memory=True)

mean = torch.zeros(3)#初始化为3维全0的tensor

std = torch.zeros(3)#初始化为3维全0的tensor

#所有图像按照通道求其mean和std

for X, _ in train_loader:

for d in range(3):

mean[d] += X[:, d, :, :].mean()

std[d] += X[:, d, :, :].std()

mean.div_(len(train_data))

std.div_(len(train_data))

print(mean)

print(std)

def main():

"""The main function."""

import argparse

#输入参数

parser = argparse.ArgumentParser(

description='Train bilinear CNN on CUB200.')

parser.add_argument('--base_lr', dest='base_lr', type=float, required=True,

help='Base learning rate for training.')

parser.add_argument('--batch_size', dest='batch_size', type=int,

required=True, help='Batch size.')

parser.add_argument('--epochs', dest='epochs', type=int, required=True,

help='Epochs for training.')

parser.add_argument('--weight_decay', dest='weight_decay', type=float,

required=True, help='Weight decay.')

parser.add_argument('--model', dest='model', type=str, required=True,

help='Model for fine-tuning.')

# 解析输入的参数

args = parser.parse_args()

#判断输入的参数是否合适

if args.base_lr <= 0:

raise AttributeError('--base_lr parameter must >0.')

if args.batch_size <= 0:

raise AttributeError('--batch_size parameter must >0.')

if args.epochs < 0:

raise AttributeError('--epochs parameter must >=0.')

if args.weight_decay <= 0:

raise AttributeError('--weight_decay parameter must >0.')

#合适的参数加入options

options = {

'base_lr': args.base_lr,

'batch_size': args.batch_size,

'epochs': args.epochs,

'weight_decay': args.weight_decay,

}

project_root = os.popen('pwd').read().strip()

path = {

'cub200': os.path.join(project_root, 'data/cub200'),

'model': os.path.join(project_root, 'model', args.model),

}

for d in path:

if d == 'model':

assert os.path.isfile(path[d])#用于判断对象是否为一个文件

else:

assert os.path.isdir(path[d])#用于判断对象是否为一个目录

#加载数据

manager = BCNNManager(options, path)

# manager.getStat()

#进行训练

manager.train()

if __name__ == '__main__':

main()

3.bilinear_cnn_fc.py

只需要微调FC部分的参数,前面的参数都冻结

#!/usr/bin/env python

# -*- coding: utf-8 -*-

"""Fine-tune the fc layer only for bilinear CNN.

Usage:

CUDA_VISIBLE_DEVICES=0,1,2,3 ./src/bilinear_cnn_fc.py --base_lr 0.05 \

--batch_size 64 --epochs 100 --weight_decay 5e-4

"""

import os

import torch

import torchvision

import cub200

torch.manual_seed(0)

torch.cuda.manual_seed_all(0)

__all__ = ['BCNN', 'BCNNManager']

__author__ = 'Hao Zhang'

__copyright__ = '2018 LAMDA'

__date__ = '2018-01-09'

__email__ = '[email protected]'

__license__ = 'CC BY-SA 3.0'

__status__ = 'Development'

__updated__ = '2018-01-13'

__version__ = '1.2'

class BCNN(torch.nn.Module):

"""B-CNN for CUB200.

The B-CNN model is illustrated as follows.

conv1^2 (64) -> pool1 -> conv2^2 (128) -> pool2 -> conv3^3 (256) -> pool3

-> conv4^3 (512) -> pool4 -> conv5^3 (512) -> bilinear pooling

-> sqrt-normalize -> L2-normalize -> fc (200).

The network accepts a 3*448*448 input, and the pool5 activation has shape

512*28*28 since we down-sample 5 times.

Attributes:

features, torch.nn.Module: Convolution and pooling layers.

fc, torch.nn.Module: 200.

"""

def __init__(self):

"""Declare all needed layers."""

torch.nn.Module.__init__(self)

# Convolution and pooling layers of VGG-16.

self.features = torchvision.models.vgg16(pretrained=True).features

self.features = torch.nn.Sequential(*list(self.features.children())

[:-1]) # Remove pool5.

# Linear classifier.

self.fc = torch.nn.Linear(512**2, 200)

#冻结freeze FC层之前的所有层,只训练FC层

# Freeze all previous layers.

for param in self.features.parameters():

param.requires_grad = False

# Initialize the fc layers.

torch.nn.init.kaiming_normal(self.fc.weight.data)#何凯明初始化

if self.fc.bias is not None:

torch.nn.init.constant(self.fc.bias.data, val=0)#fc层的bias进行constant初始化

def forward(self, X):

"""Forward pass of the network.

Args:

X, torch.autograd.Variable of shape N*3*448*448.

Returns:

Score, torch.autograd.Variable of shape N*200.

"""

N = X.size()[0]

assert X.size() == (N, 3, 448, 448)

X = self.features(X)

assert X.size() == (N, 512, 28, 28)

X = X.view(N, 512, 28**2)

X = torch.bmm(X, torch.transpose(X, 1, 2)) / (28**2) # Bilinear 计算

assert X.size() == (N, 512, 512)

X = X.view(N, 512**2)

X = torch.sqrt(X + 1e-5)

X = torch.nn.functional.normalize(X)

X = self.fc(X)

assert X.size() == (N, 200)

return X

class BCNNManager(object):

"""Manager class to train bilinear CNN.

Attributes:

_options: Hyperparameters.

_path: Useful paths.

_net: Bilinear CNN.

_criterion: Cross-entropy loss.

_solver: SGD with momentum.

_scheduler: Reduce learning rate by a fator of 0.1 when plateau.

_train_loader: Training data.

_test_loader: Testing data.

"""

def __init__(self, options, path):

"""Prepare the network, criterion, solver, and data.

Args:

options, dict: Hyperparameters.

"""

print('Prepare the network and data.')

self._options = options

self._path = path

# Network.

self._net = torch.nn.DataParallel(BCNN()).cuda()

print(self._net)

# Criterion.

self._criterion = torch.nn.CrossEntropyLoss().cuda()#选用交叉熵损失函数计算loss

# Solver.

self._solver = torch.optim.SGD( #选择SGD优化器

self._net.module.fc.parameters(), lr=self._options['base_lr'],

momentum=0.9, weight_decay=self._options['weight_decay'])

self._scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau( #动态优学习率

self._solver, mode='max', factor=0.1, patience=3, verbose=True,

threshold=1e-4)

#train数据增广

train_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(size=448), # Let smaller edge match

torchvision.transforms.RandomHorizontalFlip(),

torchvision.transforms.RandomCrop(size=448),

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize(mean=(0.485, 0.456, 0.406),

std=(0.229, 0.224, 0.225))

])

#test数据增广

test_transforms = torchvision.transforms.Compose([

torchvision.transforms.Resize(size=448),

torchvision.transforms.CenterCrop(size=448),

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize(mean=(0.485, 0.456, 0.406),

std=(0.229, 0.224, 0.225))

])

#加载train和test数据集

train_data = cub200.CUB200(

root=self._path['cub200'], train=True, download=True,

transform=train_transforms)

test_data = cub200.CUB200(

root=self._path['cub200'], train=False, download=True,

transform=test_transforms)

self._train_loader = torch.utils.data.DataLoader(

train_data, batch_size=self._options['batch_size'],

shuffle=True, num_workers=4, pin_memory=True)

self._test_loader = torch.utils.data.DataLoader(

test_data, batch_size=16,

shuffle=False, num_workers=4, pin_memory=True)

def train(self):

"""Train the network."""

print('Training.')

best_acc = 0.0

best_epoch = None

print('Epoch\tTrain loss\tTrain acc\tTest acc')

for t in range(self._options['epochs']):

epoch_loss = []

num_correct = 0

num_total = 0

for X, y in self._train_loader:

# Data.

X = torch.autograd.Variable(X.cuda())

y = torch.autograd.Variable(y.cuda(async=True))

# Clear the existing gradients.

self._solver.zero_grad()

# Forward pass.

score = self._net(X)

loss = self._criterion(score, y)

epoch_loss.append(loss.data[0])

# Prediction.

_, prediction = torch.max(score.data, 1)

num_total += y.size(0)

num_correct += torch.sum(prediction == y.data)

# Backward pass.

loss.backward()

self._solver.step()

train_acc = 100 * num_correct / num_total

test_acc = self._accuracy(self._test_loader)#调用下面的_accuracy函数,计算出当前的test的精度acc

self._scheduler.step(test_acc)#scheduler.step()是对lr进行调整,依据当前的acc来调整学习率

if test_acc > best_acc:

best_acc = test_acc

best_epoch = t + 1

print('*', end='')

# Save model onto disk.

torch.save(self._net.state_dict(),

os.path.join(self._path['model'],

'vgg_16_epoch_%d.pth' % (t + 1)))

print('%d\t%4.3f\t\t%4.2f%%\t\t%4.2f%%' %

(t+1, sum(epoch_loss) / len(epoch_loss), train_acc, test_acc))

print('Best at epoch %d, test accuaray %f' % (best_epoch, best_acc))

def _accuracy(self, data_loader):

"""Compute the train/test accuracy.

Args:

data_loader: Train/Test DataLoader.

Returns:

Train/Test accuracy in percentage.

"""

self._net.train(False)

num_correct = 0

num_total = 0

for X, y in data_loader:

# Data.

X = torch.autograd.Variable(X.cuda())

y = torch.autograd.Variable(y.cuda(async=True))

# Prediction.

score = self._net(X)

_, prediction = torch.max(score.data, 1)

num_total += y.size(0)

num_correct += torch.sum(prediction == y.data).item()

self._net.train(True) # Set the model to training phase

return 100 * num_correct / num_total

def getStat(self):

"""Get the mean and std value for a certain dataset."""

print('Compute mean and variance for training data.')

train_data = cub200.CUB200(

root=self._path['cub200'], train=True,

transform=torchvision.transforms.ToTensor(), download=True)

train_loader = torch.utils.data.DataLoader(

train_data, batch_size=1, shuffle=False, num_workers=4,

pin_memory=True)

mean = torch.zeros(3)

std = torch.zeros(3)

for X, _ in train_loader:

for d in range(3):

mean[d] += X[:, d, :, :].mean()

std[d] += X[:, d, :, :].std()

mean.div_(len(train_data))

std.div_(len(train_data))

print(mean)

print(std)

def main():

"""The main function."""

import argparse

parser = argparse.ArgumentParser(

description='Train bilinear CNN on CUB200.')

parser.add_argument('--base_lr', dest='base_lr', type=float, required=True,

help='Base learning rate for training.')

parser.add_argument('--batch_size', dest='batch_size', type=int,

required=True, help='Batch size.')

parser.add_argument('--epochs', dest='epochs', type=int,

required=True, help='Epochs for training.')

parser.add_argument('--weight_decay', dest='weight_decay', type=float,

required=True, help='Weight decay.')

args = parser.parse_args()

if args.base_lr <= 0:

raise AttributeError('--base_lr parameter must >0.')

if args.batch_size <= 0:

raise AttributeError('--batch_size parameter must >0.')

if args.epochs < 0:

raise AttributeError('--epochs parameter must >=0.')

if args.weight_decay <= 0:

raise AttributeError('--weight_decay parameter must >0.')

options = {

'base_lr': args.base_lr,

'batch_size': args.batch_size,

'epochs': args.epochs,

'weight_decay': args.weight_decay,

}

project_root = os.popen('pwd').read().strip()

path = {

'cub200': os.path.join(project_root, 'data/cub200'),

'model': os.path.join(project_root, 'model'),

}

for d in path:

assert os.path.isdir(path[d])

manager = BCNNManager(options, path)

# manager.getStat()

manager.train()

if __name__ == '__main__':

main()

4.cub200.py

# -*- coding: utf-8 -*

"""This module is served as torchvision.datasets to load CUB200-2011.

CUB200-2011 dataset has 11,788 images of 200 bird species. The project page

is as follows.

http://www.vision.caltech.edu/visipedia/CUB-200-2011.html

- Images are contained in the directory data/cub200/raw/images/,

with 200 subdirectories.

- Format of images.txt:

- Format of train_test_split.txt:

- Format of classes.txt:

- Format of iamge_class_labels.txt:

This file is modified from:

https://github.com/vishwakftw/vision.

"""

import os

import pickle

import numpy as np

import PIL.Image

import torch

__all__ = ['CUB200']

__author__ = 'Hao Zhang'

__copyright__ = '2018 LAMDA'

__date__ = '2018-01-09'

__email__ = '[email protected]'

__license__ = 'CC BY-SA 3.0'

__status__ = 'Development'

__updated__ = '2018-01-10'

__version__ = '1.0'

class CUB200(torch.utils.data.Dataset):

"""CUB200 dataset.

Args:

_root, str: Root directory of the dataset.

_train, bool: Load train/test data.

_transform, callable: A function/transform that takes in a PIL.Image

and transforms it.

_target_transform, callable: A function/transform that takes in the

target and transforms it.

_train_data, list of np.ndarray.

_train_labels, list of int.

_test_data, list of np.ndarray.

_test_labels, list of int.

"""

def __init__(self, root, train=True, transform=None, target_transform=None,

download=False):

"""Load the dataset.

Args

root, str: Root directory of the dataset.

train, bool [True]: Load train/test data.

transform, callable [None]: A function/transform that takes in a

PIL.Image and transforms it.

target_transform, callable [None]: A function/transform that takes

in the target and transforms it.

download, bool [False]: If true, downloads the dataset from the

internet and puts it in root directory. If dataset is already

downloaded, it is not downloaded again.

"""

self._root = os.path.expanduser(root) # Replace ~ by the complete dir

self._train = train

self._transform = transform

self._target_transform = target_transform

if self._checkIntegrity():#时候存在文件,是否完整的检验

print('Files already downloaded and verified.')

elif download:#没有下,就去网上下载

url = ('http://www.vision.caltech.edu/visipedia-data/CUB-200-2011/'

'CUB_200_2011.tgz')

self._download(url) #调用下面的_download

self._extract()#调用下面的_extract

else:

raise RuntimeError(

'Dataset not found. You can use download=True to download it.')

# Now load the picked data

# load指定类型的data和labels

if self._train:

self._train_data, self._train_labels = pickle.load(open(

os.path.join(self._root, 'processed/train.pkl'), 'rb'))

assert (len(self._train_data) == 5994 #数据时候完整5994,不够就提示错误

and len(self._train_labels) == 5994)

else:

self._test_data, self._test_labels = pickle.load(open(

os.path.join(self._root, 'processed/test.pkl'), 'rb'))

assert (len(self._test_data) == 5794

and len(self._test_labels) == 5794)

#提取指定index的图像image和对应的标签targets

def __getitem__(self, index):

"""

Args:

index, int: Index.

Returns:

image, PIL.Image: Image of the given index.

target, str: target of the given index.

"""

if self._train:

image, target = self._train_data[index], self._train_labels[index]

else:

image, target = self._test_data[index], self._test_labels[index]

# Doing this so that it is consistent with all other datasets.

image = PIL.Image.fromarray(image)

if self._transform is not None:#图像进行transform处理

image = self._transform(image)

if self._target_transform is not None:#标签进行transform处理

target = self._target_transform(target)

return image, target

def __len__(self):

"""Length of the dataset.

Returns:

length, int: Length of the dataset.

"""

if self._train:

return len(self._train_data)

return len(self._test_data)

def _checkIntegrity(self):

"""Check whether we have already processed the data.

Returns:

flag, bool: True if we have already processed the data.

"""

return (

os.path.isfile(os.path.join(self._root, 'processed/train.pkl'))

and os.path.isfile(os.path.join(self._root, 'processed/test.pkl')))

def _download(self, url):

"""Download and uncompress the tar.gz file from a given URL.

Args:

url, str: URL to be downloaded.

"""

import six.moves

import tarfile

raw_path = os.path.join(self._root, 'raw')

processed_path = os.path.join(self._root, 'processed')

if not os.path.isdir(raw_path):

os.mkdir(raw_path, mode=0o775)

if not os.path.isdir(processed_path):

os.mkdir(processed_path, mode=0x775)

# Downloads file.

fpath = os.path.join(self._root, 'raw/CUB_200_2011.tgz')

try:

print('Downloading ' + url + ' to ' + fpath)

six.moves.urllib.request.urlretrieve(url, fpath)

except six.moves.urllib.error.URLError:

if url[:5] == 'https:':

self._url = self._url.replace('https:', 'http:')

print('Failed download. Trying https -> http instead.')

print('Downloading ' + url + ' to ' + fpath)

six.moves.urllib.request.urlretrieve(url, fpath)

# Extract file.

cwd = os.getcwd()

tar = tarfile.open(fpath, 'r:gz')

os.chdir(os.path.join(self._root, 'raw'))

tar.extractall()

tar.close()

os.chdir(cwd)

def _extract(self):

"""Prepare the data for train/test split and save onto disk."""

image_path = os.path.join(self._root, 'raw/CUB_200_2011/images/')

# Format of images.txt:

id2name = np.genfromtxt(os.path.join(

self._root, 'raw/CUB_200_2011/images.txt'), dtype=str)

# Format of train_test_split.txt:

id2train = np.genfromtxt(os.path.join(

self._root, 'raw/CUB_200_2011/train_test_split.txt'), dtype=int)

train_data = []

train_labels = []

test_data = []

test_labels = []

for id_ in range(id2name.shape[0]):

image = PIL.Image.open(os.path.join(image_path, id2name[id_, 1]))

label = int(id2name[id_, 1][:3]) - 1 # Label starts with 0

# Convert gray scale image to RGB image.

if image.getbands()[0] == 'L':

image = image.convert('RGB')

image_np = np.array(image)

image.close()

if id2train[id_, 1] == 1:

train_data.append(image_np)

train_labels.append(label)

else:

test_data.append(image_np)

test_labels.append(label)

pickle.dump((train_data, train_labels),#反序列化

open(os.path.join(self._root, 'processed/train.pkl'), 'wb'))

pickle.dump((test_data, test_labels),

open(os.path.join(self._root, 'processed/test.pkl'), 'wb'))