Implementing IrisTracking with MediaPipe on Windows

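Google recently released MediaPipe Iris, a new machine learning model for accurate iris estimation. Building on the MediaPipe Face Mesh research, the model tracks landmarks for the iris, pupil, and eye contours in real time from a single RGB camera, with no specialized hardware. Using the iris landmarks, it can also determine the metric distance between the subject and the camera with a relative error below 10%, without a depth sensor. Note that iris tracking does not infer where a person is looking, nor does it provide any form of identity recognition. MediaPipe is an open-source, cross-platform framework that helps researchers and developers build world-class machine learning solutions and applications, so a system implemented in MediaPipe can run on most modern smartphones, PCs, laptops, and even the Web.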
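To make the distance claim concrete, here is a minimal sketch of the pinhole-camera relation that this kind of estimate can rest on. It is an illustration, not MediaPipe's own code: the helper name `EstimateDistanceMm` is hypothetical, and the 11.7 mm constant is an assumption taken from the average horizontal iris diameter cited in Google's MediaPipe Iris announcement. The focal length in pixels would have to come from the camera (for example from image EXIF data).

```cpp
#include <cassert>
#include <cmath>

// Assumption: average horizontal iris diameter of the human eye, in mm,
// as cited in Google's MediaPipe Iris announcement (roughly 11.7 mm).
constexpr double kIrisDiameterMm = 11.7;

// Hypothetical helper (not part of MediaPipe).
// Pinhole-camera similar triangles:
//   iris_diameter_px / focal_length_px = kIrisDiameterMm / distance_mm
// Solving for distance_mm gives the expression below.
inline double EstimateDistanceMm(double focal_length_px,
                                 double iris_diameter_px) {
  assert(focal_length_px > 0.0 && iris_diameter_px > 0.0);
  return focal_length_px * kIrisDiameterMm / iris_diameter_px;
}
```

For instance, with a focal length of 1000 px and an iris that spans 23.4 px in the image, the sketch yields a distance of 500 mm.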

Implementation


Environment setup references

- https://blog.csdn.net/sunnyblogs/article/details/118891249

- https://blog.csdn.net/haiyangyunbao813/article/details/119192951


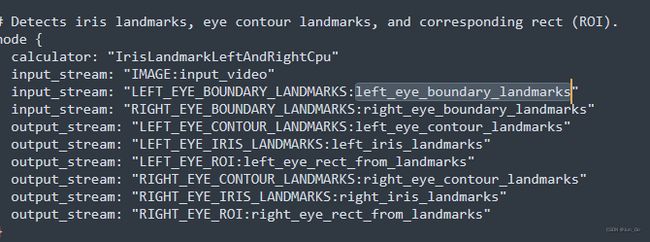

Building Iris

- iris_tracking_cpu (CPU-based eye tracking; a successful run opens the camera)

    bazel build -c opt --define MEDIAPIPE_DISABLE_GPU=1 --action_env PYTHON_BIN_PATH="xxx//python.exe" mediapipe/examples/desktop/iris_tracking:iris_tracking_cpu --verbose_failures

    bazel-bin\mediapipe\examples\desktop\iris_tracking\iris_tracking_cpu --calculator_graph_config_file=mediapipe\graphs\iris_tracking\iris_tracking_cpu.pbtxt

- iris_tracking_cpu_video_input

    bazel build -c opt --define MEDIAPIPE_DISABLE_GPU=1 --action_env PYTHON_BIN_PATH="xxx//python.exe" mediapipe/examples/desktop/iris_tracking:iris_tracking_cpu_video_input

    bazel-bin\mediapipe\examples\desktop\iris_tracking\iris_tracking_cpu_video_input --calculator_graph_config_file=mediapipe\graphs\iris_tracking\iris_tracking_cpu_video_input.pbtxt --input_side_packets=input_video_path=,output_video_path=

- iris_depth_from_image_desktop (the input image must include EXIF information)

    bazel build -c opt --define MEDIAPIPE_DISABLE_GPU=1 --action_env PYTHON_BIN_PATH="xxx//python.exe" mediapipe/examples/desktop/iris_tracking:iris_depth_from_image_desktop

    bazel-bin\mediapipe\examples\desktop\iris_tracking\iris_depth_from_image_desktop --input_image_path="image/1.jpg" --output_image_path="image/out.jpg"


Demo

- Create an iris_tracking_sample directory under mediapipe\examples\desktop\

- Copy the BUILD file from iris_tracking into it

- iris_detect.h

    #ifndef _IRIS_DETECT_H_
    #define _IRIS_DETECT_H_

    // Standard headers used by this class and its implementation.
    #include <functional>
    #include <iostream>
    #include <memory>
    #include <string>

    #include "absl/flags/flag.h"
    #include "absl/flags/parse.h"
    #include "mediapipe/framework/calculator_framework.h"
    #include "mediapipe/framework/formats/image_frame.h"
    #include "mediapipe/framework/formats/image_frame_opencv.h"
    #include "mediapipe/framework/port/file_helpers.h"
    #include "mediapipe/framework/port/opencv_highgui_inc.h"
    #include "mediapipe/framework/port/opencv_imgproc_inc.h"
    #include "mediapipe/framework/port/opencv_video_inc.h"
    #include "mediapipe/framework/port/parse_text_proto.h"
    #include "mediapipe/framework/port/status.h"
    #include "mediapipe/framework/formats/detection.pb.h"
    #include "mediapipe/framework/formats/landmark.pb.h"
    #include "mediapipe/framework/formats/rect.pb.h"

    namespace IrisSample {

    using iris_callback_t = std::function<void()>;

    class IrisDectect {
     public:
      int initModel(const char* model_path) noexcept;
      int detectVideoCamera(iris_callback_t call);
      int detectVideo(const char* video_path, int show_image, iris_callback_t call);
      int release();

     private:
      absl::Status initGraph(const char* model_path);
      absl::Status runMPPGraphVideo(const char* video_path, int show_image, iris_callback_t call);
      absl::Status releaseGraph();

      void drawEyeLandmarks(cv::Mat& image);
      void drawEyeContourLandmarks(cv::Mat& image);
      void drawEyeRectLandmarks(cv::Mat& image);
      void drawEyeBoundaryLandmarks(cv::Mat& image);

      const char* kInputStream = "input_video";
      const char* kOutputStream = "output_video";
      const char* kWindowName = "MediaPipe";
      const char* kOutputLandmarks = "iris_landmarks";  // iris (5 points per eye)
      const char* kOutputEyeContourLandmarks = "left_eye_contour_landmarks";  // eye contour (71 points per eye)
      const char* kOutputEyeRectLandmarks = "left_eye_rect_from_landmarks";  // eye bounding rectangle
      const char* kOutputEyeBoundaryLandmarks = "left_eye_boundary_landmarks";  // eye corners (2 points per eye)

      bool init_ = false;
      mediapipe::CalculatorGraph graph_;
      std::unique_ptr<mediapipe::OutputStreamPoller> pPoller_;
      std::unique_ptr<mediapipe::OutputStreamPoller> pPollerLandmarks_;  // eye iris
      std::unique_ptr<mediapipe::OutputStreamPoller> pPollerEyeContourLandmarks_;
      std::unique_ptr<mediapipe::OutputStreamPoller> pPollerEyeRectLandmarks_;
      std::unique_ptr<mediapipe::OutputStreamPoller> pPollerEyeBoundaryLandmarks_;
    };

    }  // namespace IrisSample

    #endif

- iris_detect.cpp

    #include "iris_detect.h"

    using namespace IrisSample;

    int IrisDectect::initModel(const char* model_path) noexcept {
      absl::Status run_status = initGraph(model_path);
      if (!run_status.ok()) return -1;
      init_ = true;
      return 1;
    }

    int IrisDectect::detectVideoCamera(iris_callback_t call) {
      if (!init_) return -1;
      // An empty path selects the default camera.
      absl::Status run_status = runMPPGraphVideo("", true, call);
      return run_status.ok() ? 1 : -1;
    }

    int IrisDectect::detectVideo(const char* video_path, int show_image, iris_callback_t call) {
      if (!init_) return -1;
      absl::Status run_status = runMPPGraphVideo(video_path, show_image, call);
      return run_status.ok() ? 1 : -1;
    }

    int IrisDectect::release() {
      absl::Status run_status = releaseGraph();
      return run_status.ok() ? 1 : -1;
    }

    absl::Status IrisDectect::initGraph(const char* model_path) {
      std::string calculator_graph_config_contents;
      MP_RETURN_IF_ERROR(mediapipe::file::GetContents(model_path, &calculator_graph_config_contents));
      mediapipe::CalculatorGraphConfig config =
          mediapipe::ParseTextProtoOrDie<mediapipe::CalculatorGraphConfig>(
              calculator_graph_config_contents);
      MP_RETURN_IF_ERROR(graph_.Initialize(config));

      // Poller creation is checked explicitly instead of with assert(),
      // because assert() is a no-op when NDEBUG is defined, as is typical
      // for optimized builds.
      auto sop = graph_.AddOutputStreamPoller(kOutputStream);
      if (!sop.ok()) return sop.status();
      pPoller_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(sop.value()));

      mediapipe::StatusOrPoller irisLandmark = graph_.AddOutputStreamPoller(kOutputLandmarks);
      if (!irisLandmark.ok()) return irisLandmark.status();
      pPollerLandmarks_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(irisLandmark.value()));

      mediapipe::StatusOrPoller eyeContourlandmark = graph_.AddOutputStreamPoller(kOutputEyeContourLandmarks);
      if (!eyeContourlandmark.ok()) return eyeContourlandmark.status();
      pPollerEyeContourLandmarks_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(eyeContourlandmark.value()));

      mediapipe::StatusOrPoller eyeRectlandmark = graph_.AddOutputStreamPoller(kOutputEyeRectLandmarks);
      if (!eyeRectlandmark.ok()) return eyeRectlandmark.status();
      pPollerEyeRectLandmarks_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(eyeRectlandmark.value()));

      mediapipe::StatusOrPoller eyeBoundarylandmark = graph_.AddOutputStreamPoller(kOutputEyeBoundaryLandmarks);
      if (!eyeBoundarylandmark.ok()) return eyeBoundarylandmark.status();
      pPollerEyeBoundaryLandmarks_ = std::make_unique<mediapipe::OutputStreamPoller>(std::move(eyeBoundarylandmark.value()));

      MP_RETURN_IF_ERROR(graph_.StartRun({}));
      std::cout << "======= graph_ StartRun success ============" << std::endl;
      return absl::OkStatus();
    }

    // Draws the iris landmarks (normalized coordinates scaled to pixels).
    void IrisDectect::drawEyeLandmarks(cv::Mat& image) {
      mediapipe::Packet packet_landmarks;
      if (pPollerLandmarks_->QueueSize() == 0 || !pPollerLandmarks_->Next(&packet_landmarks)) return;
      auto& output_landmarks = packet_landmarks.Get<mediapipe::NormalizedLandmarkList>();
      for (int i = 0; i < output_landmarks.landmark_size(); ++i) {
        const mediapipe::NormalizedLandmark landmark = output_landmarks.landmark(i);
        float x = landmark.x() * image.cols;
        float y = landmark.y() * image.rows;
        cv::circle(image, cv::Point(x, y), 2, cv::Scalar(0, 255, 0));
      }
    }

    // Draws the eye contour landmarks.
    void IrisDectect::drawEyeContourLandmarks(cv::Mat& image) {
      mediapipe::Packet packet_landmarks;
      if (pPollerEyeContourLandmarks_->QueueSize() == 0 || !pPollerEyeContourLandmarks_->Next(&packet_landmarks)) return;
      auto& output_landmarks = packet_landmarks.Get<mediapipe::NormalizedLandmarkList>();
      for (int i = 0; i < output_landmarks.landmark_size(); ++i) {
        const mediapipe::NormalizedLandmark landmark = output_landmarks.landmark(i);
        float x = landmark.x() * image.cols;
        float y = landmark.y() * image.rows;
        cv::circle(image, cv::Point(x, y), 2, cv::Scalar(255, 0, 0));
      }
    }

    // Draws the eye bounding rectangle from its normalized center and size.
    void IrisDectect::drawEyeRectLandmarks(cv::Mat& image) {
      mediapipe::Packet packet_landmarks;
      if (pPollerEyeRectLandmarks_->QueueSize() == 0 || !pPollerEyeRectLandmarks_->Next(&packet_landmarks)) return;
      auto& output_landmarks = packet_landmarks.Get<mediapipe::NormalizedRect>();
      float x = output_landmarks.x_center() * image.cols;
      float y = output_landmarks.y_center() * image.rows;
      int w = output_landmarks.width() * image.cols;
      int h = output_landmarks.height() * image.rows;
      cv::rectangle(image, cv::Rect((x - w / 2), (y - h / 2), w, h), cv::Scalar(255, 0, 0), 1, cv::LINE_8, 0);
    }

    // Draws the eye corner landmarks.
    void IrisDectect::drawEyeBoundaryLandmarks(cv::Mat& image) {
      mediapipe::Packet packet_landmarks;
      if (pPollerEyeBoundaryLandmarks_->QueueSize() == 0 || !pPollerEyeBoundaryLandmarks_->Next(&packet_landmarks)) return;
      auto& output_landmarks = packet_landmarks.Get<mediapipe::NormalizedLandmarkList>();
      for (int i = 0; i < output_landmarks.landmark_size(); ++i) {
        const mediapipe::NormalizedLandmark landmark = output_landmarks.landmark(i);
        float x = landmark.x() * image.cols;
        float y = landmark.y() * image.rows;
        cv::circle(image, cv::Point(x, y), 2, cv::Scalar(255, 0, 0));
      }
    }

    absl::Status IrisDectect::runMPPGraphVideo(const char* video_path, int show_image, iris_callback_t call) {
      // Open the video file when a path is given; otherwise use camera 0.
      const bool load_video = video_path != nullptr && video_path[0] != '\0';
      cv::VideoCapture capture;
      if (load_video) {
        capture.open(video_path);
      } else {
        capture.open(0);
      }
      RET_CHECK(capture.isOpened());
      if (!load_video) {
    #if (CV_MAJOR_VERSION >= 3) && (CV_MINOR_VERSION >= 2)
        capture.set(cv::CAP_PROP_FRAME_WIDTH, 640);
        capture.set(cv::CAP_PROP_FRAME_HEIGHT, 480);
        capture.set(cv::CAP_PROP_FPS, 30);
    #endif
      }

      bool grab_frames = true;
      while (grab_frames) {
        // Capture opencv camera or video frame.
        cv::Mat camera_frame_raw;
        capture >> camera_frame_raw;
        if (camera_frame_raw.empty()) break;  // End of video or camera failure.
        cv::Mat camera_frame;
        cv::cvtColor(camera_frame_raw, camera_frame, cv::COLOR_BGR2RGB);
        // Mirror only the live camera image, not a video file.
        if (!load_video) cv::flip(camera_frame, camera_frame, /*flipcode=HORIZONTAL*/ 1);

        // Wrap Mat into an ImageFrame.
        auto input_frame = absl::make_unique<mediapipe::ImageFrame>(
            mediapipe::ImageFormat::SRGB, camera_frame.cols, camera_frame.rows,
            mediapipe::ImageFrame::kDefaultAlignmentBoundary);
        cv::Mat input_frame_mat = mediapipe::formats::MatView(input_frame.get());
        camera_frame.copyTo(input_frame_mat);

        // Send image packet into the graph.
        size_t frame_timestamp_us = (double)cv::getTickCount() / (double)cv::getTickFrequency() * 1e6;
        MP_RETURN_IF_ERROR(graph_.AddPacketToInputStream(
            kInputStream, mediapipe::Adopt(input_frame.release())
                              .At(mediapipe::Timestamp(frame_timestamp_us))));

        // Get the graph result packet, or stop if that fails.
        mediapipe::Packet packet;
        if (!pPoller_->Next(&packet)) break;

        //drawEyeLandmarks(camera_frame);          // iris
        //drawEyeContourLandmarks(camera_frame);   // eye contour
        //drawEyeRectLandmarks(camera_frame);      // eye bounding rectangle
        //drawEyeBoundaryLandmarks(camera_frame);  // eye corners
        //cv::imwrite("res.jpg", camera_frame);

        if (show_image) {
          auto& output_frame = packet.Get<mediapipe::ImageFrame>();
          // Convert back to opencv for display or saving.
          cv::Mat output_frame_mat = mediapipe::formats::MatView(&output_frame);
          cv::cvtColor(output_frame_mat, output_frame_mat, cv::COLOR_RGB2BGR);
          cv::Mat dst;
          cv::resize(output_frame_mat, dst, cv::Size(output_frame_mat.cols / 2, output_frame_mat.rows / 2));
          cv::imshow(kWindowName, dst);
          cv::waitKey(1);
        }
      }
      if (show_image) cv::destroyWindow(kWindowName);
      return absl::OkStatus();
    }

    absl::Status IrisDectect::releaseGraph() {
      // Only the input stream is closed here; kOutputLandmarks names an
      // output stream, so it must not be passed to CloseInputStream.
      MP_RETURN_IF_ERROR(graph_.CloseInputStream(kInputStream));
      return graph_.WaitUntilDone();
    }

- main.cpp

    #include "iris_detect.h"

    using namespace IrisSample;

    IrisDectect irisDetect_;

    void call() {}

    int main(int argc, char* argv[]) {
      std::cout << "======= iris_detect ============" << std::endl;
      if (argc < 2) {
        std::cout << "usage: iris_sample <graph.pbtxt> [video_path]" << std::endl;
        return -1;
      }
      const char* model = argv[1];
      // The video path is optional; use an empty string when it is omitted.
      const char* video = argc > 2 ? argv[2] : "";

      int res = irisDetect_.initModel(model);
      if (res < 0) {
        std::cout << "======= initModel error ============" << std::endl;
        return -1;
      }
      res = irisDetect_.detectVideo(video, true, call);
      //res = irisDetect_.detectVideoCamera(call);  // use the camera
      if (res < 0) return -1;
      irisDetect_.release();
      return 0;
    }

- BUILD (add this target)

    cc_binary(
        name = "iris_sample",
        srcs = ["main.cpp", "iris_detect.h", "iris_detect.cpp"],
        # linkshared = True,  # would build a shared library; here we build an exe
        deps = [
            "//mediapipe/graphs/iris_tracking:iris_tracking_cpu_deps",
        ],
    )

- Build

    bazel build -c opt --define MEDIAPIPE_DISABLE_GPU=1 --action_env PYTHON_BIN_PATH="xxx//python.exe" mediapipe/examples/desktop/iris_tracking_sample:iris_sample --verbose_failures

- After a successful build, run

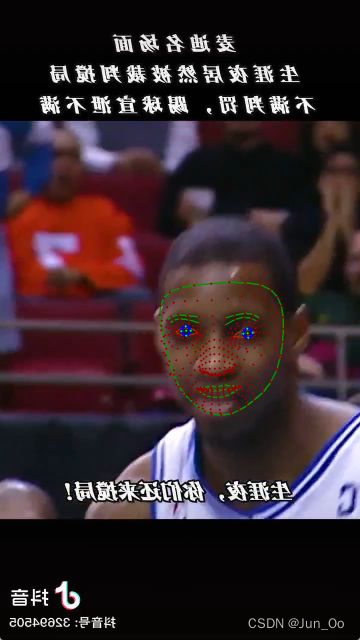

    bazel-bin\mediapipe\examples\desktop\iris_tracking_sample\iris_sample "mediapipe\graphs\iris_tracking\iris_tracking_cpu.pbtxt"

- Test result (using a video of 我麦 downloaded from Douyin)
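The drawing helpers in iris_detect.cpp all rely on the same step: MediaPipe reports landmark and rectangle coordinates normalized to the image size, and they must be scaled by the image width and height before drawing. The sketch below isolates that conversion for the rectangle case. The `NormRect` and `PixelRect` structs and the `ToPixelRect` function are hypothetical stand-ins for `mediapipe::NormalizedRect` and `cv::Rect`, so the example compiles without either library.

```cpp
#include <cassert>

// Hypothetical stand-in for mediapipe::NormalizedRect: center and size
// are expressed as fractions of the image width and height.
struct NormRect {
  float x_center;
  float y_center;
  float width;
  float height;
};

// Hypothetical stand-in for cv::Rect: top-left corner and size in pixels.
struct PixelRect {
  int x;
  int y;
  int width;
  int height;
};

// Scales a normalized, center-based rectangle to a pixel rectangle
// anchored at its top-left corner.
inline PixelRect ToPixelRect(const NormRect& r, int image_cols, int image_rows) {
  assert(image_cols > 0 && image_rows > 0);
  const float cx = r.x_center * image_cols;
  const float cy = r.y_center * image_rows;
  const int w = static_cast<int>(r.width * image_cols);
  const int h = static_cast<int>(r.height * image_rows);
  return PixelRect{static_cast<int>(cx - w / 2.0f),
                   static_cast<int>(cy - h / 2.0f), w, h};
}
```

For a 640x480 frame, a normalized rectangle centered at (0.5, 0.5) with width 0.25 and height 0.5 maps to a 160x240 pixel rectangle whose top-left corner is at (240, 120).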

References

- https://github.com/google/mediapipe

- https://blog.csdn.net/sunnyblogs/article/details/118891249

- https://google.github.io/mediapipe/

Other implementations

- HandTracking

- PoseTracking

- More to come…

Demo downloads

Iris: https://download.csdn.net/download/haiyangyunbao813/72210048

Pose: https://download.csdn.net/download/haiyangyunbao813/20625281