LIO-SAM_based_relocalization运行kitti回环序列并保存轨迹评估(一)——————源码的分析

LIO-SAM_based_relocalization是由哈尔滨工业大学GaoChao开发的基于lio-sam改版的机器人重定位系统,依赖于先验地图信息。github链接如下:

https://github.com/Gaochao-hit/LIO-SAM_based_relocalization

LIO-SAM源码包含imageProjection、imuPreintegration、featureExtraction、mapOptmization四个cpp文件。GaoChao在此基础上增加了globalLocalize代码,并修改简化了源代码。

LIO-SAM源码的分析网络上比比皆是,但对于LIO-SAM_based_relocalization的分析确没有。笔者花费大半个月的时间分析并且测试了LIO-SAM_based_relocalization,从网上下载kitti原始数据并制作成bag包,整个过程较为曲折,踩了不少坑,特此记录。

该算法中imageProjection主要进行数据预处理,移动机器人小车在一帧lidar数据获取过程中也在不断的运动,导致lidar获取的数据不在同一个坐标系下,所以需要除去雷达帧的平移和旋转畸变,即这部分代码的作用就是把雷达旋转一周时,所有的点云都转换到同一个位姿下,也就是该帧初始时刻的lidar坐标系下;featureExtraction提取特征点云;imuPreintegration用来imu预积分。

最为核心的部分就是mapOptmization模块,代码量也是其余之和。Gao对其进行了修改和简化,貌似是去掉了回环检测的功能(笔者的bag包测试也证实了这一点)。下文详细注释了代码的过程,并增加了tum格式的轨迹输出(此处踩坑较多:

#include "utility.h"

#include "lio_sam/cloud_info.h"

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

using namespace gtsam;

using symbol_shorthand::X; // Pose3 (x,y,z,r,p,y)

using symbol_shorthand::V; // Vel (xdot,ydot,zdot)

using symbol_shorthand::B; // Bias (ax,ay,az,gx,gy,gz)

using symbol_shorthand::G; // GPS pose

/*

* A point cloud type that has 6D pose info ([x,y,z,roll,pitch,yaw] intensity is time stamp)

*/

struct PointXYZIRPYT //6D位姿点云结构体定义

{

PCL_ADD_POINT4D

PCL_ADD_INTENSITY; // preferred way of adding a XYZ+padding

float roll;

float pitch;

float yaw;

double time;

EIGEN_MAKE_ALIGNED_OPERATOR_NEW // make sure our new allocators are aligned

} EIGEN_ALIGN16; // enforce SSE padding for correct memory alignment

POINT_CLOUD_REGISTER_POINT_STRUCT (PointXYZIRPYT,

(float, x, x) (float, y, y)

(float, z, z) (float, intensity, intensity)

(float, roll, roll) (float, pitch, pitch) (float, yaw, yaw)

(double, time, time))

typedef PointXYZIRPYT PointTypePose;

class mapOptimization : public ParamServer

{

public:

// gtsam

NonlinearFactorGraph gtSAMgraph;

Values initialEstimate;

Values optimizedEstimate;

ISAM2 *isam;

Values isamCurrentEstimate;

Eigen::MatrixXd poseCovariance;

ros::Publisher pubLaserCloudSurround;

ros::Publisher pubOdomAftMappedROS;

ros::Publisher pubKeyPoses;

ros::Publisher pubPath;

ros::Publisher pubHistoryKeyFrames;

ros::Publisher pubIcpKeyFrames;

ros::Publisher pubRecentKeyFrames;

ros::Publisher pubRecentKeyFrame;

ros::Publisher pubCloudRegisteredRaw;

ros::Subscriber subLaserCloudInfo;

ros::Subscriber subGPS;

std::deque gpsQueue;

lio_sam::cloud_info cloudInfo;

//历史所有关键帧的角点集合(降采样)

vector::Ptr> cornerCloudKeyFrames;

//历史所有关键帧的平面点集合(降采样)

vector::Ptr> surfCloudKeyFrames;

//历史关键帧位姿(位置)

pcl::PointCloud::Ptr cloudKeyPoses3D;

//历史关键帧位姿

pcl::PointCloud::Ptr cloudKeyPoses6D;

// * pcl::PointCloud::Ptr copy_cloudKeyPoses3D;

// * pcl::PointCloud::Ptr copy_cloudKeyPoses6D;

//当前激光帧角点集合

pcl::PointCloud::Ptr laserCloudCornerLast; // corner feature set from odoOptimization

//当前激光帧平面点集合

pcl::PointCloud::Ptr laserCloudSurfLast; // surf feature set from odoOptimization

//当前激光帧角点集合,降采样 DS:down sample

pcl::PointCloud::Ptr laserCloudCornerLastDS; // downsampled corner featuer set from odoOptimization

//当前降采样平面点集合

pcl::PointCloud::Ptr laserCloudSurfLastDS; // downsampled surf featuer set from odoOptimization

// 当前帧与局部map匹配上的角点,平面点加入同一集合;后面是对应的参数

pcl::PointCloud::Ptr laserCloudOri;

pcl::PointCloud::Ptr coeffSel;

// 当前帧与局部map匹配上的角点、参数、标记;

std::vector laserCloudOriCornerVec; // corner point holder for parallel computation

std::vector coeffSelCornerVec;

std::vector laserCloudOriCornerFlag;

// 当前帧与局部map匹配上的平面点、参数、标记

std::vector laserCloudOriSurfVec; // surfstampMapDS;

std::vector coeffSelSurfVec;

std::vector laserCloudOriSurfFlag;

// 局部角点集合

pcl::PointCloud::Ptr laserCloudCornerFromMap;

// 局部平面点集合

pcl::PointCloud::Ptr laserCloudSurfFromMap;

// 局部map角点集合,降采样

pcl::PointCloud::Ptr laserCloudCornerFromMapDS;

// 局部map平面点集合,降采样

pcl::PointCloud::Ptr laserCloudSurfFromMapDS;

// 局部关键帧构建的map点云,对应kd-tree,用于scan-to-map找相邻点

pcl::KdTreeFLANN::Ptr kdtreeCornerFromMap;

pcl::KdTreeFLANN::Ptr kdtreeSurfFromMap;

pcl::KdTreeFLANN::Ptr kdtreeSurroundingKeyPoses;

pcl::KdTreeFLANN::Ptr kdtreeHistoryKeyPoses;

pcl::PointCloud::Ptr latestKeyFrameCloud;

pcl::PointCloud::Ptr nearHistoryKeyFrameCloud;

// 降采样

pcl::VoxelGrid downSizeFilterCorner;

pcl::VoxelGrid downSizeFilterSurf;

pcl::VoxelGrid downSizeFilterICP;

pcl::VoxelGrid downSizeFilterSurroundingKeyPoses; // for surrounding key poses of scan-to-map optimization

ros::Time timeLaserInfoStamp;

double timeLaserCloudInfoLast;

float transformTobeMapped[6];

std::mutex mtx;

double timeLastProcessing = -1;

// * std::mutex mtxLoopInfo

bool isDegenerate = false; //退化

Eigen::Matrix matP;

// 局部map降采样角点数量

int laserCloudCornerFromMapDSNum = 0;

// 局部map降采样平面点数量

int laserCloudSurfFromMapDSNum = 0;

// 当前激光帧降采样角点数量

int laserCloudCornerLastDSNum = 0;

// 当前激光帧降采样平面点数量

int laserCloudSurfLastDSNum = 0;

bool aLoopIsClosed = false;

int imuPreintegrationResetId = 0;

// * vector> loopIndexQueue;

// * vector loopPoseQueue;

// * vector loopNoiseQueue;

// * deque loopInfoVec;

nav_msgs::Path globalPath;

// 当前帧位姿

Eigen::Affine3f transPointAssociateToMap;

// 前一帧位姿

// * Eigen::Affine3f incrementalOdometryAffineFront;

// 当前帧位姿

// * Eigen::Affine3f incrementalOdometryAffineBack;

mapOptimization()

{

// ISM2参数

ISAM2Params parameters;

parameters.relinearizeThreshold = 0.1;

parameters.relinearizeSkip = 1;

isam = new ISAM2(parameters);

// 发布历史关键帧里程计

pubKeyPoses = nh.advertise("lio_sam/mapping/trajectory", 1);

// 发布局部关键帧map的特征点云

pubLaserCloudSurround = nh.advertise("lio_sam/mapping/map_global", 1);

// 发布激光里程计,rviz中表现为坐标轴

// pubLaserOdometryGlobal = nh.advertise ("lio_sam/mapping/odometry", 1);

// 发布激光里程计,它与上面的激光里程计基本一样,只是roll、pitch用imu数据加权平均了一下,z做了限制

// pubLaserOdometryIncremental = nh.advertise ("lio_sam/mapping/odometry_incremental", 1);

pubOdomAftMappedROS = nh.advertise ("lio_sam/mapping/odometry", 1);

// 发布激光里程及路径,rviz中表现为小车的运行轨迹

pubPath = nh.advertise("lio_sam/mapping/path", 1);

subLaserCloudInfo = nh.subscribe("lio_sam/feature/cloud_info", 10, &mapOptimization::laserCloudInfoHandler, this, ros::TransportHints().tcpNoDelay());

// 订阅gps里程计

subGPS = nh.subscribe (gpsTopic, 200, &mapOptimization::gpsHandler, this, ros::TransportHints().tcpNoDelay());

// 订阅来自外部闭环检测程序提供的闭环数据,本程序没有提供,这里实际没用上

// subLoop = nh.subscribe("lio_loop/loop_closure_detection", 1, &mapOptimization::loopInfoHandler, this, ros::TransportHints().tcpNoDelay());

// 发布地图保存服务

// srvSaveMap = nh.advertiseService("lio_sam/save_map", &mapOptimization::saveMapService, this);

pubHistoryKeyFrames = nh.advertise("lio_sam/mapping/icp_loop_closure_history_cloud", 1);

pubIcpKeyFrames = nh.advertise("lio_sam/mapping/icp_loop_closure_corrected_cloud", 1);

// 发布局部map的降采样平面点集合

pubRecentKeyFrames = nh.advertise("lio_sam/mapping/map_local", 1);

// 发布历史帧(累加的)的角点、平面点降采样集合

pubRecentKeyFrame = nh.advertise("lio_sam/mapping/cloud_registered", 1);

// 发布当前帧原始点云配准之后的点云

pubCloudRegisteredRaw = nh.advertise("lio_sam/mapping/cloud_registered_raw", 1);

downSizeFilterCorner.setLeafSize(mappingCornerLeafSize, mappingCornerLeafSize, mappingCornerLeafSize);

downSizeFilterSurf.setLeafSize(mappingSurfLeafSize, mappingSurfLeafSize, mappingSurfLeafSize);

downSizeFilterICP.setLeafSize(mappingSurfLeafSize, mappingSurfLeafSize, mappingSurfLeafSize);

downSizeFilterSurroundingKeyPoses.setLeafSize(surroundingKeyframeDensity, surroundingKeyframeDensity, surroundingKeyframeDensity); // for surrounding key poses of scan-to-map optimization

allocateMemory();

}

// 内存分配

void allocateMemory()

{

cloudKeyPoses3D.reset(new pcl::PointCloud());

cloudKeyPoses6D.reset(new pcl::PointCloud());

kdtreeSurroundingKeyPoses.reset(new pcl::KdTreeFLANN());

kdtreeHistoryKeyPoses.reset(new pcl::KdTreeFLANN());

laserCloudCornerLast.reset(new pcl::PointCloud()); // corner feature set from odoOptimization

laserCloudSurfLast.reset(new pcl::PointCloud()); // surf feature set from odoOptimization

laserCloudCornerLastDS.reset(new pcl::PointCloud()); // downsampled corner featuer set from odoOptimization

laserCloudSurfLastDS.reset(new pcl::PointCloud()); // downsampled surf featuer set from odoOptimization

laserCloudOri.reset(new pcl::PointCloud());

coeffSel.reset(new pcl::PointCloud());

laserCloudOriCornerVec.resize(N_SCAN * Horizon_SCAN);

coeffSelCornerVec.resize(N_SCAN * Horizon_SCAN);

laserCloudOriCornerFlag.resize(N_SCAN * Horizon_SCAN);

laserCloudOriSurfVec.resize(N_SCAN * Horizon_SCAN);

coeffSelSurfVec.resize(N_SCAN * Horizon_SCAN);

laserCloudOriSurfFlag.resize(N_SCAN * Horizon_SCAN);

std::fill(laserCloudOriCornerFlag.begin(), laserCloudOriCornerFlag.end(), false);

std::fill(laserCloudOriSurfFlag.begin(), laserCloudOriSurfFlag.end(), false);

laserCloudCornerFromMap.reset(new pcl::PointCloud());

laserCloudSurfFromMap.reset(new pcl::PointCloud());

laserCloudCornerFromMapDS.reset(new pcl::PointCloud());

laserCloudSurfFromMapDS.reset(new pcl::PointCloud());

kdtreeCornerFromMap.reset(new pcl::KdTreeFLANN());

kdtreeSurfFromMap.reset(new pcl::KdTreeFLANN());

latestKeyFrameCloud.reset(new pcl::PointCloud());

nearHistoryKeyFrameCloud.reset(new pcl::PointCloud());

for (int i = 0; i < 6; ++i){

transformTobeMapped[i] = 0;

}

matP.setZero();

}

void laserCloudInfoHandler(const lio_sam::cloud_infoConstPtr& msgIn)

{

// extract time stamp

//获取当前激光帧时间戳

timeLaserInfoStamp = msgIn->header.stamp;

timeLaserCloudInfoLast = msgIn->header.stamp.toSec();

// extract info and feature cloud

// 提取来自featureExtraction的当前激光帧角点、平面点集合

cloudInfo = *msgIn;

pcl::fromROSMsg(msgIn->cloud_corner, *laserCloudCornerLast); //将ros的角点点云消息数据转换成pcl数据

pcl::fromROSMsg(msgIn->cloud_surface, *laserCloudSurfLast); //将ros的平面点点云消息转换成pcl数据

std::lock_guard lock(mtx);

// mapping执行频率控制

// static double timeLastProcessing = -1;

if (timeLaserCloudInfoLast - timeLastProcessing >= mappingProcessInterval) {//gc:control the rate of mapping process

timeLastProcessing = timeLaserCloudInfoLast;

// 当前帧位姿初始化

// 1、如果是第一帧,用原始imu数据的RPY初始化当前帧位姿(旋转部分)

// 2、后续帧,用imu里程计计算两帧之间的增量位姿变换,作用于前一帧的激光位姿,得到当前帧激光初始位姿

updateInitialGuess();//gc: update initial value for states

// 获得当前帧的点云,降采样角点和平面点

downsampleCurrentScan();//gc:down sample the current corner points and surface points

// 然后和全局地图去匹配(scan to map),得到当前帧的一个更好的位姿。

scan2MapOptimization();//gc: calculate the tranformtion using lidar measurement with the Imu preintegration as initial values

//and then interpolate roll and pitch angle using IMU measurement and above measurement

//但不可能拿全局地图来匹配,所以选取和当前帧比较近的点云,获取局部点云图

extractSurroundingKeyFrames();//gc:

//但上述匹配仍不够完美,需要一个后端融合所有信息获取真正准确的位姿。设置当前帧为关键帧,并执行因子图优化

saveKeyFramesAndFactor();//gc: save corner cloud and surface cloud of this scan, and add odom and GPS factors

//随后更新所有的状态量,即更新因子图中所有变量节点的位姿,也就是所有历史关键帧的位姿,更新里程计轨迹

correctPoses();

//最后把结果给下游模块发布

publishOdometry();

// 发布里程计、点云、轨迹

// 1、发布历史关键帧位姿集合

// 2、发布局部map的降采样平面点集合

// 3、发布历史帧(累加的)的角点、平面点降采样集合

// 4、发布里程计轨迹

publishFrames();

}

}

void gpsHandler(const nav_msgs::Odometry::ConstPtr& gpsMsg)

{

gpsQueue.push_back(*gpsMsg);

}

// 根据当前帧位姿,变换到世界坐标系(map)下

void pointAssociateToMap(PointType const * const pi, PointType * const po)

{

po->x = transPointAssociateToMap(0,0) * pi->x + transPointAssociateToMap(0,1) * pi->y + transPointAssociateToMap(0,2) * pi->z + transPointAssociateToMap(0,3);

po->y = transPointAssociateToMap(1,0) * pi->x + transPointAssociateToMap(1,1) * pi->y + transPointAssociateToMap(1,2) * pi->z + transPointAssociateToMap(1,3);

po->z = transPointAssociateToMap(2,0) * pi->x + transPointAssociateToMap(2,1) * pi->y + transPointAssociateToMap(2,2) * pi->z + transPointAssociateToMap(2,3);

po->intensity = pi->intensity;

}

pcl::PointCloud::Ptr transformPointCloud(pcl::PointCloud::Ptr cloudIn, PointTypePose* transformIn) //输入两个形参,分别为点云和变换,返回变换到世界坐标系下的点

{

pcl::PointCloud::Ptr cloudOut(new pcl::PointCloud());

PointType *pointFrom;

int cloudSize = cloudIn->size();

cloudOut->resize(cloudSize);

Eigen::Affine3f transCur = pcl::getTransformation(transformIn->x, transformIn->y, transformIn->z, transformIn->roll, transformIn->pitch, transformIn->yaw);

for (int i = 0; i < cloudSize; ++i){

pointFrom = &cloudIn->points[i];

cloudOut->points[i].x = transCur(0,0) * pointFrom->x + transCur(0,1) * pointFrom->y + transCur(0,2) * pointFrom->z + transCur(0,3);

cloudOut->points[i].y = transCur(1,0) * pointFrom->x + transCur(1,1) * pointFrom->y + transCur(1,2) * pointFrom->z + transCur(1,3);

cloudOut->points[i].z = transCur(2,0) * pointFrom->x + transCur(2,1) * pointFrom->y + transCur(2,2) * pointFrom->z + transCur(2,3);

cloudOut->points[i].intensity = pointFrom->intensity;

}

return cloudOut;

}

gtsam::Pose3 pclPointTogtsamPose3(PointTypePose thisPoint)

{

return gtsam::Pose3(gtsam::Rot3::RzRyRx(double(thisPoint.roll), double(thisPoint.pitch), double(thisPoint.yaw)),

gtsam::Point3(double(thisPoint.x), double(thisPoint.y), double(thisPoint.z)));

}

gtsam::Pose3 trans2gtsamPose(float transformIn[])

{

return gtsam::Pose3(gtsam::Rot3::RzRyRx(transformIn[0], transformIn[1], transformIn[2]),

gtsam::Point3(transformIn[3], transformIn[4], transformIn[5]));

}

Eigen::Affine3f pclPointToAffine3f(PointTypePose thisPoint)

{

return pcl::getTransformation(thisPoint.x, thisPoint.y, thisPoint.z, thisPoint.roll, thisPoint.pitch, thisPoint.yaw);

}

// Eigen格式的位姿变换

Eigen::Affine3f trans2Affine3f(float transformIn[])

{

return pcl::getTransformation(transformIn[3], transformIn[4], transformIn[5], transformIn[0], transformIn[1], transformIn[2]);

}

PointTypePose trans2PointTypePose(float transformIn[])

{

PointTypePose thisPose6D;

thisPose6D.x = transformIn[3];

thisPose6D.y = transformIn[4];

thisPose6D.z = transformIn[5];

thisPose6D.roll = transformIn[0];

thisPose6D.pitch = transformIn[1];

thisPose6D.yaw = transformIn[2];

return thisPose6D;

}

void visualizeGlobalMapThread()

{

ros::Rate rate(0.2);

while (ros::ok()){

rate.sleep();

publishGlobalMap();

}

if (savePCD == false)

return;

cout << "****************************************************" << endl;

cout << "Saving map to pcd files ..." << endl;

// create directory and remove old files;

savePCDDirectory = std::getenv("HOME") + savePCDDirectory;

int unused = system((std::string("exec rm -r ") + savePCDDirectory).c_str());

unused = system((std::string("mkdir ") + savePCDDirectory).c_str());

// save key frame transformations

pcl::io::savePCDFileASCII(savePCDDirectory + "trajectory.pcd", *cloudKeyPoses3D);

pcl::io::savePCDFileASCII(savePCDDirectory + "transformations.pcd", *cloudKeyPoses6D);

// extract global point cloud map

pcl::PointCloud::Ptr globalCornerCloud(new pcl::PointCloud());

pcl::PointCloud::Ptr globalCornerCloudDS(new pcl::PointCloud());

pcl::PointCloud::Ptr globalSurfCloud(new pcl::PointCloud());

pcl::PointCloud::Ptr globalSurfCloudDS(new pcl::PointCloud());

pcl::PointCloud::Ptr globalMapCloud(new pcl::PointCloud());

for (int i = 0; i < (int)cloudKeyPoses3D->size(); i++) {

*globalCornerCloud += *transformPointCloud(cornerCloudKeyFrames[i], &cloudKeyPoses6D->points[i]);

*globalSurfCloud += *transformPointCloud(surfCloudKeyFrames[i], &cloudKeyPoses6D->points[i]);

cout << "\r" << std::flush << "Processing feature cloud " << i << " of " << cloudKeyPoses6D->size() << " ...";

}

// down-sample and save corner cloud

downSizeFilterCorner.setInputCloud(globalCornerCloud);

downSizeFilterCorner.filter(*globalCornerCloudDS);

pcl::io::savePCDFileASCII(savePCDDirectory + "cloudCorner.pcd", *globalCornerCloudDS);

// down-sample and save surf cloud

downSizeFilterSurf.setInputCloud(globalSurfCloud);

downSizeFilterSurf.filter(*globalSurfCloudDS);

pcl::io::savePCDFileASCII(savePCDDirectory + "cloudSurf.pcd", *globalSurfCloudDS);

// down-sample and save global point cloud map

*globalMapCloud += *globalCornerCloud;

*globalMapCloud += *globalSurfCloud;

pcl::io::savePCDFileASCII(savePCDDirectory + "cloudGlobal.pcd", *globalMapCloud);

cout << "****************************************************" << endl;

cout << "Saving map to pcd files completed" << endl;

}

void publishGlobalMap()

{

if (pubLaserCloudSurround.getNumSubscribers() == 0)

return;

if (cloudKeyPoses3D->points.empty() == true)

return;

pcl::KdTreeFLANN::Ptr kdtreeGlobalMap(new pcl::KdTreeFLANN());;

pcl::PointCloud::Ptr globalMapKeyPoses(new pcl::PointCloud());

pcl::PointCloud::Ptr globalMapKeyPosesDS(new pcl::PointCloud());

pcl::PointCloud::Ptr globalMapKeyFrames(new pcl::PointCloud());

pcl::PointCloud::Ptr globalMapKeyFramesDS(new pcl::PointCloud());

// kd-tree to find near key frames to visualize

std::vector pointSearchIndGlobalMap;

std::vector pointSearchSqDisGlobalMap;

// search near key frames to visualize

mtx.lock();

kdtreeGlobalMap->setInputCloud(cloudKeyPoses3D);

kdtreeGlobalMap->radiusSearch(cloudKeyPoses3D->back(), globalMapVisualizationSearchRadius, pointSearchIndGlobalMap, pointSearchSqDisGlobalMap, 0);

mtx.unlock();

for (int i = 0; i < (int)pointSearchIndGlobalMap.size(); ++i)

globalMapKeyPoses->push_back(cloudKeyPoses3D->points[pointSearchIndGlobalMap[i]]);

// downsample near selected key frames

pcl::VoxelGrid downSizeFilterGlobalMapKeyPoses; // for global map visualization

downSizeFilterGlobalMapKeyPoses.setLeafSize(globalMapVisualizationPoseDensity, globalMapVisualizationPoseDensity, globalMapVisualizationPoseDensity); // for global map visualization

downSizeFilterGlobalMapKeyPoses.setInputCloud(globalMapKeyPoses);

downSizeFilterGlobalMapKeyPoses.filter(*globalMapKeyPosesDS);

// extract visualized and downsampled key frames

for (int i = 0; i < (int)globalMapKeyPosesDS->size(); ++i){

if (pointDistance(globalMapKeyPosesDS->points[i], cloudKeyPoses3D->back()) > globalMapVisualizationSearchRadius)

continue;

int thisKeyInd = (int)globalMapKeyPosesDS->points[i].intensity;

*globalMapKeyFrames += *transformPointCloud(cornerCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

*globalMapKeyFrames += *transformPointCloud(surfCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

}

// downsample visualized points

pcl::VoxelGrid downSizeFilterGlobalMapKeyFrames; // for global map visualization

downSizeFilterGlobalMapKeyFrames.setLeafSize(globalMapVisualizationLeafSize, globalMapVisualizationLeafSize, globalMapVisualizationLeafSize); // for global map visualization

downSizeFilterGlobalMapKeyFrames.setInputCloud(globalMapKeyFrames);

downSizeFilterGlobalMapKeyFrames.filter(*globalMapKeyFramesDS);

publishCloud(&pubLaserCloudSurround, globalMapKeyFramesDS, timeLaserInfoStamp, "odom");

}

void loopClosureThread()

{

if (loopClosureEnableFlag == false)

return;

ros::Rate rate(0.1);

while (ros::ok())

{

rate.sleep();

performLoopClosure();

}

}

bool detectLoopClosure(int *latestID, int *closestID)

{

int latestFrameIDLoopCloure;

int closestHistoryFrameID;

latestKeyFrameCloud->clear();

nearHistoryKeyFrameCloud->clear();

std::lock_guard lock(mtx);

// find the closest history key frame

std::vector pointSearchIndLoop;

std::vector pointSearchSqDisLoop;

kdtreeHistoryKeyPoses->setInputCloud(cloudKeyPoses3D);

//gc:search the last keyframe's nearest keyframes

kdtreeHistoryKeyPoses->radiusSearch(cloudKeyPoses3D->back(), historyKeyframeSearchRadius, pointSearchIndLoop, pointSearchSqDisLoop, 0);

closestHistoryFrameID = -1;

for (int i = 0; i < (int)pointSearchIndLoop.size(); ++i)

{

int id = pointSearchIndLoop[i];

//gc: find the nearest keyframe whose time is not close

if (abs(cloudKeyPoses6D->points[id].time - timeLaserCloudInfoLast) > historyKeyframeSearchTimeDiff)

{

closestHistoryFrameID = id;

break;

}

}

if (closestHistoryFrameID == -1)

return false;

//gc: if the closest keyframe is

if ((int)cloudKeyPoses3D->size() - 1 == closestHistoryFrameID)

return false;

// save latest key frames

latestFrameIDLoopCloure = cloudKeyPoses3D->size() - 1;

*latestKeyFrameCloud += *transformPointCloud(cornerCloudKeyFrames[latestFrameIDLoopCloure], &cloudKeyPoses6D->points[latestFrameIDLoopCloure]);

*latestKeyFrameCloud += *transformPointCloud(surfCloudKeyFrames[latestFrameIDLoopCloure], &cloudKeyPoses6D->points[latestFrameIDLoopCloure]);

// save history near key frames

//gc: combine the time_close frame of the nearset frame

bool nearFrameAvailable = false;

for (int j = -historyKeyframeSearchNum; j <= historyKeyframeSearchNum; ++j)

{

if (closestHistoryFrameID + j < 0 || closestHistoryFrameID + j > latestFrameIDLoopCloure)

continue;

*nearHistoryKeyFrameCloud += *transformPointCloud(cornerCloudKeyFrames[closestHistoryFrameID+j], &cloudKeyPoses6D->points[closestHistoryFrameID+j]);

*nearHistoryKeyFrameCloud += *transformPointCloud(surfCloudKeyFrames[closestHistoryFrameID+j], &cloudKeyPoses6D->points[closestHistoryFrameID+j]);

nearFrameAvailable = true;

}

if (nearFrameAvailable == false)

return false;

*latestID = latestFrameIDLoopCloure;

*closestID = closestHistoryFrameID;

return true;

}

void performLoopClosure()

{

if (cloudKeyPoses3D->points.empty() == true)

return;

int latestFrameIDLoopCloure;

int closestHistoryFrameID;

if (detectLoopClosure(&latestFrameIDLoopCloure, &closestHistoryFrameID) == false)

return;

// ICP Settings

pcl::IterativeClosestPoint icp;

icp.setMaxCorrespondenceDistance(100);

icp.setMaximumIterations(100);

icp.setTransformationEpsilon(1e-6);

icp.setEuclideanFitnessEpsilon(1e-6);

icp.setRANSACIterations(0);

// Downsample map cloud

pcl::PointCloud::Ptr cloud_temp(new pcl::PointCloud());

downSizeFilterICP.setInputCloud(nearHistoryKeyFrameCloud);

downSizeFilterICP.filter(*cloud_temp);

*nearHistoryKeyFrameCloud = *cloud_temp;

// publish history near key frames

publishCloud(&pubHistoryKeyFrames, nearHistoryKeyFrameCloud, timeLaserInfoStamp, "odom");

// Align clouds

// icp.setin

icp.setInputSource(latestKeyFrameCloud);

icp.setInputTarget(nearHistoryKeyFrameCloud);

pcl::PointCloud::Ptr unused_result(new pcl::PointCloud());

icp.align(*unused_result);

// std::cout << "ICP converg flag:" << icp.hasConverged() << ". Fitness score: " << icp.getFitnessScore() << std::endl;

if (icp.hasConverged() == false || icp.getFitnessScore() > historyKeyframeFitnessScore)

return;

// publish corrected cloud

if (pubIcpKeyFrames.getNumSubscribers() != 0){

pcl::PointCloud::Ptr closed_cloud(new pcl::PointCloud());

pcl::transformPointCloud(*latestKeyFrameCloud, *closed_cloud, icp.getFinalTransformation());

publishCloud(&pubIcpKeyFrames, closed_cloud, timeLaserInfoStamp, "odom");

}

// Get pose transformation

float x, y, z, roll, pitch, yaw;

Eigen::Affine3f correctionLidarFrame;

//gc: realtive transformation

correctionLidarFrame = icp.getFinalTransformation();

// transform from world origin to wrong pose

Eigen::Affine3f tWrong = pclPointToAffine3f(cloudKeyPoses6D->points[latestFrameIDLoopCloure]);

// transform from world origin to corrected pose

Eigen::Affine3f tCorrect = correctionLidarFrame * tWrong;// pre-multiplying -> successive rotation about a fixed frame

pcl::getTranslationAndEulerAngles (tCorrect, x, y, z, roll, pitch, yaw);

gtsam::Pose3 poseFrom = Pose3(Rot3::RzRyRx(roll, pitch, yaw), Point3(x, y, z));

gtsam::Pose3 poseTo = pclPointTogtsamPose3(cloudKeyPoses6D->points[closestHistoryFrameID]);

gtsam::Vector Vector6(6);

float noiseScore = icp.getFitnessScore();//gc: noise right??

Vector6 << noiseScore, noiseScore, noiseScore, noiseScore, noiseScore, noiseScore;

noiseModel::Diagonal::shared_ptr constraintNoise = noiseModel::Diagonal::Variances(Vector6);

// Add pose constraint

std::lock_guard lock(mtx);

gtSAMgraph.add(BetweenFactor(latestFrameIDLoopCloure, closestHistoryFrameID, poseFrom.between(poseTo), constraintNoise));

isam->update(gtSAMgraph);

isam->update();

gtSAMgraph.resize(0);

isamCurrentEstimate = isam->calculateEstimate();

Pose3 latestEstimate = isamCurrentEstimate.at(isamCurrentEstimate.size()-1);

transformTobeMapped[0] = latestEstimate.rotation().roll();

transformTobeMapped[1] = latestEstimate.rotation().pitch();

transformTobeMapped[2] = latestEstimate.rotation().yaw();

transformTobeMapped[3] = latestEstimate.translation().x();

transformTobeMapped[4] = latestEstimate.translation().y();

transformTobeMapped[5] = latestEstimate.translation().z();

correctPoses();

aLoopIsClosed = true;

}

// 当前帧位姿初始化

// 2、后续帧,用imu里程计计算两帧之间的增量位姿变换,作用于前一帧的激光位姿,得到当前帧激光初始位姿

void updateInitialGuess()

{

// save current transformation before any processing

// 前一帧的位姿,注:这里指lidar的位姿,后面都简写成位姿

// incrementalOdometryAffineFront = trans2Affine3f(transformTobeMapped);

// 前一帧的初始化姿态角(来自cloudInfo的IMU数据)

static Eigen::Affine3f lastImuTransformation;//gc: note that this is static type

// initialization

// 如果是第一帧,用原始imu数据的RPY初始化当前帧位姿(旋转部分)

if (cloudKeyPoses3D->points.empty())//gc: there is no key pose 初始化

{

transformTobeMapped[0] = cloudInfo.imuRollInit;

transformTobeMapped[1] = cloudInfo.imuPitchInit;

transformTobeMapped[2] = cloudInfo.imuYawInit;

if (!useImuHeadingInitialization)//gc: if not use the heading of init_IMU as Initialization //GPS

transformTobeMapped[2] = 0;

lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;

return;

}

// 用当前帧和前一帧对应的imu里程计计算相对位姿变换,再用前一帧的位姿与相对变换,计算当前帧的位姿,存transformTobeMapped

// * static bool lastImuPreTransAvailable = false;

// * static Eigen::Affine3f lastImuPreTransformation;

//odomAvailable和imuAvailable均来源于imageProjection.cpp中赋值,

//imuAvailable是遍历激光帧前后起止时刻0.01s之内的imu数据,

//如果都没有那就是false,因为imu频率一般比激光帧快,因此这里应该是都有的。

//odomAvailable同理,是监听imu里程计的位姿,如果没有紧挨着激光帧的imu里程计数据,那么就是false;

//这俩应该一般都有

// use imu pre-integration estimation for pose guess

//

if (cloudInfo.odomAvailable == true && cloudInfo.imuPreintegrationResetId == imuPreintegrationResetId) //该段代码与原文相差较大

{

// cloudInfo来自featureExtraction.cpp发布的liox-sam/feature/cloud_info

// 直接使用imu里程计数据

transformTobeMapped[0] = cloudInfo.initialGuessRoll;

transformTobeMapped[1] = cloudInfo.initialGuessPitch;

transformTobeMapped[2] = cloudInfo.initialGuessYaw;

transformTobeMapped[3] = cloudInfo.initialGuessX;

transformTobeMapped[4] = cloudInfo.initialGuessY;

transformTobeMapped[5] = cloudInfo.initialGuessZ;

lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;

return;

}

// use previous pose for pose guess

// if (cloudKeyPoses6D->points.size() >= 2)

// {

// int oldId = cloudKeyPoses6D->points.size() - 2;

// int preId = cloudKeyPoses6D->points.size() - 1;

// Eigen::Affine3f transOld = pclPointToAffine3f(cloudKeyPoses6D->points[oldId]);

// Eigen::Affine3f transPre = pclPointToAffine3f(cloudKeyPoses6D->points[preId]);

// double deltaTimePre = cloudKeyPoses6D->points[preId].time - cloudKeyPoses6D->points[oldId].time;

// double deltaTimeNow = timeLaserCloudInfoLast - cloudKeyPoses6D->points[preId].time;

// double alpha = deltaTimeNow / deltaTimePre;

// Eigen::Affine3f transIncPre = transOld.inverse() * transPre;

// float x, y, z, roll, pitch, yaw;

// pcl::getTranslationAndEulerAngles (transIncPre, x, y, z, roll, pitch, yaw);

// Eigen::Affine3f transIncNow = pcl::getTransformation(alpha*x, alpha*y, alpha*z, alpha*roll, alpha*pitch, alpha*yaw);

// Eigen::Affine3f transTobe = trans2Affine3f(transformTobeMapped);

// Eigen::Affine3f transFinal = transTobe * transIncNow;

// pcl::getTranslationAndEulerAngles(transFinal, transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5],

// transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);

// lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;

// return;

// }

// use imu incremental estimation for pose guess (only rotation)

// 如果是第二帧

if (cloudInfo.imuAvailable == true)

{

// transBack是这一帧时刻的imu位姿

Eigen::Affine3f transBack = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit);

// lastImuTransFormation是最新的上一刻数据,求逆后与transback相乘才是增量

Eigen::Affine3f transIncre = lastImuTransformation.inverse() * transBack;//gc: the transform of IMU between two scans

//前一帧的位姿

Eigen::Affine3f transTobe = trans2Affine3f(transformTobeMapped);

// 当前帧姿态

Eigen::Affine3f transFinal = transTobe * transIncre;

//将transFinal传入,结果输出至transformTobeMapped

pcl::getTranslationAndEulerAngles(transFinal, transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5],

transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);

lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;

return;

}

}

void extractForLoopClosure()

{

pcl::PointCloud::Ptr cloudToExtract(new pcl::PointCloud());

int numPoses = cloudKeyPoses3D->size();

for (int i = numPoses-1; i >= 0; --i)

{

if ((int)cloudToExtract->size() <= surroundingKeyframeSize)

cloudToExtract->push_back(cloudKeyPoses3D->points[i]);

else

break;

}

extractCloud(cloudToExtract);

}

//gc:search nearby key poses and downsample them and then extract the local map points

void extractNearby()

{

pcl::PointCloud::Ptr surroundingKeyPoses(new pcl::PointCloud());

// 降采样后的关键帧

pcl::PointCloud::Ptr surroundingKeyPosesDS(new pcl::PointCloud());//gc: the key poses after downsample

std::vector pointSearchInd;

std::vector pointSearchSqDis;

// extract all the nearby key poses and downsample them

// kd-tree的输入,全局关键帧位姿集合(历史所有关键帧)

kdtreeSurroundingKeyPoses->setInputCloud(cloudKeyPoses3D); // create kd-tree

//gc: kd-tree is used for nearby key poses

// 创建kd-tree。固定半径近邻搜索,pointSearchInd返回index,pointSearchSqDis依次距离中心点距离

kdtreeSurroundingKeyPoses->radiusSearch(cloudKeyPoses3D->back(), (double)surroundingKeyframeSearchRadius, pointSearchInd, pointSearchSqDis);

for (int i = 0; i < (int)pointSearchInd.size(); ++i)

{

int id = pointSearchInd[i];

surroundingKeyPoses->push_back(cloudKeyPoses3D->points[id]);

}

// 降采样,再滤波,存入surroundingKeyPosesDS

downSizeFilterSurroundingKeyPoses.setInputCloud(surroundingKeyPoses);

downSizeFilterSurroundingKeyPoses.filter(*surroundingKeyPosesDS);

// also extract some latest key frames in case the robot rotates in one position

// 提取一些最新的关键帧以防机器人原地旋转,即和当前帧时间近的帧

int numPoses = cloudKeyPoses3D->size();

// 把10s内的关键帧也加到surroundingKeyPosesDS中,注意是“也”,原先已经装了下采样的位姿(位置)

for (int i = numPoses-1; i >= 0; --i)

{

if (timeLaserCloudInfoLast - cloudKeyPoses6D->points[i].time < 10.0)

surroundingKeyPosesDS->push_back(cloudKeyPoses3D->points[i]);

else

break;

}

//对降采样后的点云提取出角点和平面点,加入局部map中,作为scan-to-map的局部点云地图

extractCloud(surroundingKeyPosesDS);

}

//gc: extract the nearby Map points

/*

* 将相邻关键帧集合对应的角点、平面点,加入到局部map中,作为scan-to-map匹配的局部点云地图

*/

void extractCloud(pcl::PointCloud::Ptr cloudToExtract)

{

std::vector> laserCloudCornerSurroundingVec; //新加变量,降采样后附近角点

std::vector> laserCloudSurfSurroundingVec;

laserCloudCornerSurroundingVec.resize(cloudToExtract->size());

laserCloudSurfSurroundingVec.resize(cloudToExtract->size());

// extract surrounding map

#pragma omp parallel for num_threads(numberOfCores) //4个线程共同执行循环

for (int i = 0; i < (int)cloudToExtract->size(); ++i)

{

// 距离超过50m,丢弃

if (pointDistance(cloudToExtract->points[i], cloudKeyPoses3D->back()) > surroundingKeyframeSearchRadius)

continue;

//相邻关键帧索引

int thisKeyInd = (int)cloudToExtract->points[i].intensity;//gc: the index of this key frame

//gc: tranform the corner points and surfpoints of the nearby keyFrames into the world frame

//相邻关键帧对应的角点、平面点云,通过6D位姿变换到世界坐标系下

//transformPointCloud输入的两个形参,分别为点云和变换,返回变换位姿后的点

laserCloudCornerSurroundingVec[i] = *transformPointCloud(cornerCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

laserCloudSurfSurroundingVec[i] = *transformPointCloud(surfCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

}

// fuse the map,角点面点清空

laserCloudCornerFromMap->clear();

laserCloudSurfFromMap->clear();

// 遍历当前帧(实际是取最近的一个关键帧来找它相邻的关键帧集合)时空维度上相邻的关键帧集合

for (int i = 0; i < (int)cloudToExtract->size(); ++i)

{

// 加入局部map

*laserCloudCornerFromMap += laserCloudCornerSurroundingVec[i];

*laserCloudSurfFromMap += laserCloudSurfSurroundingVec[i];

}

// Downsample the surrounding corner key frames (or map)

// 降采样局部角点map

downSizeFilterCorner.setInputCloud(laserCloudCornerFromMap);

downSizeFilterCorner.filter(*laserCloudCornerFromMapDS);

laserCloudCornerFromMapDSNum = laserCloudCornerFromMapDS->size();

// Downsample the surrounding surf key frames (or map)

// 降采样局部平面点map

downSizeFilterSurf.setInputCloud(laserCloudSurfFromMap);

downSizeFilterSurf.filter(*laserCloudSurfFromMapDS);

laserCloudSurfFromMapDSNum = laserCloudSurfFromMapDS->size();

// 太大了,清空一下内存

// if (laserCloudMapContainer.size() > 1000)

// laserCloudMapContainer.clear();

}

/*

* 提取局部角点、平面点云集合,加入局部map

* 1、对最近的一帧关键帧,搜索时空维度上相邻的关键帧集合,降采样一下

* 2、对关键帧集合中的每一帧,提取对应的角点、平面点,加入局部map中

*/

void extractSurroundingKeyFrames()

{

if (cloudKeyPoses3D->points.empty() == true)

return;

if (loopClosureEnableFlag == true)//gc:TODO: a little weired: Loop closure should search the whole map while

{

extractForLoopClosure(); //gc: the name is misleading

} else {

extractNearby();

}

}

void downsampleCurrentScan()

{

// Downsample cloud from current scan

//对当前帧点云降采样 刚刚完成了周围关键帧的降采样

//大量的降采样工作无非是为了使点云稀疏化 加快匹配以及实时性要求

laserCloudCornerLastDS->clear();

downSizeFilterCorner.setInputCloud(laserCloudCornerLast);

downSizeFilterCorner.filter(*laserCloudCornerLastDS);

// 降采样角点数量

laserCloudCornerLastDSNum = laserCloudCornerLastDS->size();

// 降采样面点数量

laserCloudSurfLastDS->clear();

downSizeFilterSurf.setInputCloud(laserCloudSurfLast);

downSizeFilterSurf.filter(*laserCloudSurfLastDS);

laserCloudSurfLastDSNum = laserCloudSurfLastDS->size();

}

/*

* 当前激光帧角点寻找局部map匹配点

* 1、更新当前帧位姿,将当前帧角点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成直线(用距离中心点的协方差矩阵,特征值进行判断),则认为匹配上了

* 2、计算当前帧角点到直线的距离、垂线的单位向量,存储为角点参数

*/

void cornerOptimization()

{

//实现transformTobeMapped的矩阵形式转换

// 把结果存入transPointAssociateToMap中

updatePointAssociateToMap();

#pragma omp parallel for num_threads(numberOfCores)

//gc: for every corner point

for (int i = 0; i < laserCloudCornerLastDSNum; i++)

{

PointType pointOri, pointSel, coeff;

std::vector pointSearchInd;

std::vector pointSearchSqDis;

pointOri = laserCloudCornerLastDS->points[i];

//gc: calculate its location in the map using the prediction pose

//第i帧的点转换到第一帧坐标系下

//这里就调用了第一步中updatePointAssociateToMap中实现的transPointAssociateToMap,

//然后利用这个函数,把pointOri的点转换到pointSel下,pointSel作为输出

pointAssociateToMap(&pointOri, &pointSel);

// kd-tree最近搜索

kdtreeCornerFromMap->nearestKSearch(pointSel, 5, pointSearchInd, pointSearchSqDis);

cv::Mat matA1(3, 3, CV_32F, cv::Scalar::all(0)); //mat(int rows行数, int cols列数, int type类型);

cv::Mat matD1(1, 3, CV_32F, cv::Scalar::all(0));

cv::Mat matV1(3, 3, CV_32F, cv::Scalar::all(0));

if (pointSearchSqDis[4] < 1.0) {

float cx = 0, cy = 0, cz = 0;

// 5个样本的均值

for (int j = 0; j < 5; j++) {

cx += laserCloudCornerFromMapDS->points[pointSearchInd[j]].x;

cy += laserCloudCornerFromMapDS->points[pointSearchInd[j]].y;

cz += laserCloudCornerFromMapDS->points[pointSearchInd[j]].z;

}

//gc: the average coordinate of the most nearest points

cx /= 5; cy /= 5; cz /= 5;

// 下面求矩阵matA1=[ax,ay,az]^t*[ax,ay,az]

// 更准确地说应该是在求协方差matA1

float a11 = 0, a12 = 0, a13 = 0, a22 = 0, a23 = 0, a33 = 0;

for (int j = 0; j < 5; j++) {

float ax = laserCloudCornerFromMapDS->points[pointSearchInd[j]].x - cx;

float ay = laserCloudCornerFromMapDS->points[pointSearchInd[j]].y - cy;

float az = laserCloudCornerFromMapDS->points[pointSearchInd[j]].z - cz;

a11 += ax * ax; a12 += ax * ay; a13 += ax * az;

a22 += ay * ay; a23 += ay * az;

a33 += az * az;

}

a11 /= 5; a12 /= 5; a13 /= 5; a22 /= 5; a23 /= 5; a33 /= 5;

matA1.at(0, 0) = a11; matA1.at(0, 1) = a12; matA1.at(0, 2) = a13;

matA1.at(1, 0) = a12; matA1.at(1, 1) = a22; matA1.at(1, 2) = a23;

matA1.at(2, 0) = a13; matA1.at(2, 1) = a23; matA1.at(2, 2) = a33;

// 求正交阵的特征值和特征向量

// 特征值:matD1,特征向量:matV1中 对应于LOAM论文里雷达建图 特征值与特征向量那块

cv::eigen(matA1, matD1, matV1);

// 边缘:与较大特征值相对应的特征向量代表边缘线的方向(一大两小,大方向)

// 以下这一大块是在计算点到边缘的距离,最后通过系数s来判断是否距离很近

// 如果距离很近就认为这个点在边缘上,需要放到laserCloudOri中

// 如果最大的特征值相比次大特征值,大很多,认为构成了线,角点是合格的

if (matD1.at(0, 0) > 3 * matD1.at(0, 1)) {

// 当前帧角点坐标(map系下)

float x0 = pointSel.x;

float y0 = pointSel.y;

float z0 = pointSel.z;

// 局部map对应中心角点,沿着特征向量(直线方向)方向,前后各取一个点

float x1 = cx + 0.1 * matV1.at(0, 0);

float y1 = cy + 0.1 * matV1.at(0, 1);

float z1 = cz + 0.1 * matV1.at(0, 2);

float x2 = cx - 0.1 * matV1.at(0, 0);

float y2 = cy - 0.1 * matV1.at(0, 1);

float z2 = cz - 0.1 * matV1.at(0, 2);

// 这边是在求[(x0-x1),(y0-y1),(z0-z1)]与[(x0-x2),(y0-y2),(z0-z2)]叉乘得到的向量的模长

// 这个模长是由0.2*V1[0]和点[x0,y0,z0]构成的平行四边形的面积

// 因为[(x0-x1),(y0-y1),(z0-z1)]x[(x0-x2),(y0-y2),(z0-z2)]=[XXX,YYY,ZZZ],

// [XXX,YYY,ZZZ]=[(y0-y1)(z0-z2)-(y0-y2)(z0-z1),-(x0-x1)(z0-z2)+(x0-x2)(z0-z1),(x0-x1)(y0-y2)-(x0-x2)(y0-y1)]

// area_012,也就是三个点组成的三角形面积*2,叉积的模|axb|=a*b*sin(theta)

float a012 = sqrt(((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1)) * ((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1))

+ ((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1)) * ((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1))

+ ((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1)) * ((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1)));

// line_12,底边边长

float l12 = sqrt((x1 - x2)*(x1 - x2) + (y1 - y2)*(y1 - y2) + (z1 - z2)*(z1 - z2));

// 两次叉积,得到点到直线的垂线段单位向量,x分量,下面同理

// 求叉乘结果[la',lb',lc']=[(x1-x2),(y1-y2),(z1-z2)]x[XXX,YYY,ZZZ]

// [la,lb,lc]=[la',lb',lc']/a012/l12

// 得到底边上的高的方向向量[la,lb,lc]

// LLL=[la,lb,lc]是V1[0]这条高上的单位法向量。||LLL||=1;

//如不理解则看图:

// A

// B C

// 这里ABxAC,代表垂直于ABC面的法向量,其模长为平行四边形面积

//因此BCx(ABxAC),代表了BC和(ABC平面的法向量)的叉乘,那么其实这个向量就是A到BC的垂线的方向向量

//那么(ABxAC)/|ABxAC|,代表着ABC平面的单位法向量

//BCxABC平面单位法向量,即为一个长度为|BC|的(A到BC垂线的方向向量),因此再除以|BC|,得到A到BC垂线的单位方向向量

float la = ((y1 - y2)*((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1))

+ (z1 - z2)*((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1))) / a012 / l12;

float lb = -((x1 - x2)*((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1))

- (z1 - z2)*((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1))) / a012 / l12;

float lc = -((x1 - x2)*((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1))

+ (y1 - y2)*((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1))) / a012 / l12;

// 三角形的高,也就是点到直线距离

// 计算点pointSel到直线的距离

// 这里需要特别说明的是ld2代表的是点pointSel到过点[cx,cy,cz]的方向向量直线的距离

float ld2 = a012 / l12;

// 下面涉及到一个鲁棒核函数,作者简单地设计了这个核函数。

// 距离越大,s越小,是个距离惩罚因子(权重)

float s = 1 - 0.9 * fabs(ld2);

// coeff代表系数的意思

// coff用于保存距离的方向向量

coeff.x = s * la;

coeff.y = s * lb;

coeff.z = s * lc;

// intensity本质上构成了一个核函数,ld2越接近于1,增长越慢

// intensity=(1-0.9*ld2)*ld2=ld2-0.9*ld2*ld2

coeff.intensity = s * ld2;

// 程序末尾根据s的值来判断是否将点云点放入点云集合laserCloudOri以及coeffSel中。

// 所以就应该认为这个点是边缘点

// s>0.1 也就是要求点到直线的距离ld2要小于1m

// s越大说明ld2越小(离边缘线越近),这样就说明点pointOri在直线上

if (s > 0.1) {

laserCloudOriCornerVec[i] = pointOri;

coeffSelCornerVec[i] = coeff;

laserCloudOriCornerFlag[i] = true;

}

}

}

}

}

/*

* 当前激光帧平面点寻找局部map匹配点

* 1、更新当前帧位姿,将当前帧平面点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成平面(最小二乘拟合平面),则认为匹配上了

* 2、计算当前帧平面点到平面的距离、垂线的单位向量,存储为平面点参数

*/

void surfOptimization()

{

updatePointAssociateToMap();

// 遍历当前帧平面点集合

#pragma omp parallel for num_threads(numberOfCores)

for (int i = 0; i < laserCloudSurfLastDSNum; i++)

{

PointType pointOri, pointSel, coeff;

std::vector pointSearchInd;

std::vector pointSearchSqDis;

// 寻找5个紧邻点, 计算其特征值和特征向量

// 平面点(坐标还是lidar系)

pointOri = laserCloudSurfLastDS->points[i];

// 根据当前帧位姿,变换到世界坐标系(map系)下

pointAssociateToMap(&pointOri, &pointSel);

// 在局部平面点map中查找当前平面点相邻的5个平面点

kdtreeSurfFromMap->nearestKSearch(pointSel, 5, pointSearchInd, pointSearchSqDis);

Eigen::Matrix matA0;

Eigen::Matrix matB0;

Eigen::Vector3f matX0;

matA0.setZero(); // 5*3 存储5个紧邻点

matB0.fill(-1);

matX0.setZero();

// 只考虑附近1.0m内

if (pointSearchSqDis[4] < 1.0) {

for (int j = 0; j < 5; j++) {

matA0(j, 0) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].x;

matA0(j, 1) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].y;

matA0(j, 2) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].z;

}

// 求maxA0中点构成的平面法向量

//matB0是-1,这个函数用来求解AX=B的X,

//也就是AX+BY+CZ+1=0

matX0 = matA0.colPivHouseholderQr().solve(matB0);

// 假设平面方程为ax+by+cz+1=0,这里就是求方程的系数abc,d=1

float pa = matX0(0, 0);

float pb = matX0(1, 0);

float pc = matX0(2, 0);

float pd = 1;

// 单位法向量

// 对[pa,pb,pc,pd]进行单位化

float ps = sqrt(pa * pa + pb * pb + pc * pc);

pa /= ps; pb /= ps; pc /= ps; pd /= ps;

// 检查平面是否合格,如果5个点中有点到平面的距离超过0.2m,那么认为这些点太分散了,不构成平面

bool planeValid = true;

for (int j = 0; j < 5; j++) {

if (fabs(pa * laserCloudSurfFromMapDS->points[pointSearchInd[j]].x +

pb * laserCloudSurfFromMapDS->points[pointSearchInd[j]].y +

pc * laserCloudSurfFromMapDS->points[pointSearchInd[j]].z + pd) > 0.2) {

planeValid = false;

break;

}

}

if (planeValid) {

// 当前激光帧点到平面距离

//点(x0,y0,z0)到了平面Ax+By+Cz+D=0的距离为:d=|Ax0+By0+Cz0+D|/√(A^2+B^2+C^2)

//但是会发现下面的分母开了两次方,不知道为什么,分母多开一次方会更小,这因此求出的距离会更大

float pd2 = pa * pointSel.x + pb * pointSel.y + pc * pointSel.z + pd;

// 距离越大,s越小,是个距离惩罚因子(权重)

// 后面部分相除求的是[pa,pb,pc,pd]与pointSel的夹角余弦值(两个sqrt,其实并不是余弦值)

// 这个夹角余弦值越小越好,越小证明所求的[pa,pb,pc,pd]与平面越垂直

float s = 1 - 0.9 * fabs(pd2) / sqrt(sqrt(pointSel.x * pointSel.x

+ pointSel.y * pointSel.y + pointSel.z * pointSel.z));

// 点到平面垂线单位法向量(其实等价于平面法向量)

coeff.x = s * pa;

coeff.y = s * pb;

coeff.z = s * pc;

coeff.intensity = s * pd2;

if (s > 0.1) {

// 当前激光帧平面点,加入匹配集合中.

//如果s>0.1,代表fabs(pd2) / sqrt(sqrt(pointSel.x * pointSel.x+ pointSel.y * pointSel.y + pointSel.z * pointSel.z))这一项<1,即"伪距离"<1

laserCloudOriSurfVec[i] = pointOri;

coeffSelSurfVec[i] = coeff;

laserCloudOriSurfFlag[i] = true;

}

}

}

}

}

/*

* 提取当前帧中与局部map匹配上了的角点、平面点,加入同一集合

*/

void combineOptimizationCoeffs()

{

// combine corner coeffs

// 遍历当前帧角点集合,提取出与局部map匹配上了的角点

for (int i = 0; i < laserCloudCornerLastDSNum; ++i){

if (laserCloudOriCornerFlag[i] == true){

laserCloudOri->push_back(laserCloudOriCornerVec[i]);

coeffSel->push_back(coeffSelCornerVec[i]);

}

}

// combine surf coeffs

// 遍历当前帧平面点集合,提取出与局部map匹配上了的平面点

for (int i = 0; i < laserCloudSurfLastDSNum; ++i){

if (laserCloudOriSurfFlag[i] == true){

laserCloudOri->push_back(laserCloudOriSurfVec[i]);

coeffSel->push_back(coeffSelSurfVec[i]);

}

}

// reset flag for next iteration

// 清空标记

std::fill(laserCloudOriCornerFlag.begin(), laserCloudOriCornerFlag.end(), false);

std::fill(laserCloudOriSurfFlag.begin(), laserCloudOriSurfFlag.end(), false);

}

void scan2MapOptimization()

{

//根据现有地图与最新点云数据进行配准从而更新机器人精确位姿与融合建图,

//它分为角点优化、平面点优化、配准与更新等部分。

//优化的过程与里程计的计算类似,是通过计算点到直线或平面的距离,构建优化公式再用LM法求解。

if (cloudKeyPoses3D->points.empty())

return;

//降采样后角点和平面点数量如果大于最小合理角点数量10和面点数量10

if (laserCloudCornerLastDSNum > edgeFeatureMinValidNum && laserCloudSurfLastDSNum > surfFeatureMinValidNum)

{

// 构建kd-tree

kdtreeCornerFromMap->setInputCloud(laserCloudCornerFromMapDS);

kdtreeSurfFromMap->setInputCloud(laserCloudSurfFromMapDS);

// 迭代30次

for (int iterCount = 0; iterCount < 30; iterCount++)

{

laserCloudOri->clear();

coeffSel->clear();

//gc: calculate some coeff and judge whether tho point is valid corner point

// 当前激光帧与局部地图之间的:角点-角点 特征匹配

cornerOptimization();

//gc: calculate some coeff and judge whether tho point is valid surface point

// 当前激光帧与局部地图之间的:面点-面点 特征匹配

surfOptimization();

// 提取当前帧中与局部map匹配上了的角点、平面点,加入同一集合

combineOptimizationCoeffs();

//gc: the true iteration steps, calculate the transform

// scan-to-map优化

if (LMOptimization(iterCount) == true)

break;

}

//gc: interpolate the roll and pitch angle using the IMU measurement and Lidar calculation

//使用了9轴imu的orientation与做transformTobeMapped插值,并且roll和pitch收到常量阈值约束(权重)

// 更新当前位姿

transformUpdate();

} else {

ROS_WARN("Not enough features! Only %d edge and %d planar features available.", laserCloudCornerLastDSNum, laserCloudSurfLastDSNum);

}

}

/*

* 用imu原始RPY数据与scan-to-map优化后的位姿进行加权融合,更新当前帧位姿的roll、pitch,约束z坐标

*/

//gc: interpolate the roll and pitch angle using the IMU measurement and Lidar calculation

void transformUpdate()

{

if (cloudInfo.imuAvailable == true)

{

// 俯仰角小于1.4

if (std::abs(cloudInfo.imuPitchInit) < 1.4)

{

double imuWeight = 0.01;

tf::Quaternion imuQuaternion;

tf::Quaternion transformQuaternion;

double rollMid, pitchMid, yawMid;

// slerp roll

transformQuaternion.setRPY(transformTobeMapped[0], 0, 0);

imuQuaternion.setRPY(cloudInfo.imuRollInit, 0, 0);

//gc: interpolate between Imu roll measurement and angle from lidar calculation

tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);

transformTobeMapped[0] = rollMid;

// slerp pitch

transformQuaternion.setRPY(0, transformTobeMapped[1], 0);

imuQuaternion.setRPY(0, cloudInfo.imuPitchInit, 0);

//gc: interpolate between Imu roll measurement and angle from lidar calculation

tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);

transformTobeMapped[1] = pitchMid;

}

}

// 更新当前帧位姿的roll, pitch, z坐标;因为是小车,roll、pitch是相对稳定的,

// 不会有很大变动,一定程度上可以信赖imu的数据,z是进行高度约束

transformTobeMapped[0] = constraintTransformation(transformTobeMapped[0], rotation_tollerance);

transformTobeMapped[1] = constraintTransformation(transformTobeMapped[1], rotation_tollerance);

transformTobeMapped[5] = constraintTransformation(transformTobeMapped[5], z_tollerance);

}

//相当于clib函数

float constraintTransformation(float value, float limit)

{

if (value < -limit)

value = -limit;

if (value > limit)

value = limit;

return value;

}

/*

* 计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧

*/

bool saveFrame()

{

if (cloudKeyPoses3D->points.empty())

return true;

// 前一帧位姿

//注:最开始没有的时候,在函数extractCloud里面有

Eigen::Affine3f transStart = pclPointToAffine3f(cloudKeyPoses6D->back());

// 当前帧位姿

Eigen::Affine3f transFinal = pcl::getTransformation(transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5],

transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);

// 位姿变换增量

Eigen::Affine3f transBetween = transStart.inverse() * transFinal;

float x, y, z, roll, pitch, yaw;

pcl::getTranslationAndEulerAngles(transBetween, x, y, z, roll, pitch, yaw);

//gc: judge whther should generate key pose

// 旋转和平移量都较小,当前帧不设为关键帧

if (abs(roll) < surroundingkeyframeAddingAngleThreshold &&

abs(pitch) < surroundingkeyframeAddingAngleThreshold &&

abs(yaw) < surroundingkeyframeAddingAngleThreshold &&

sqrt(x*x + y*y + z*z) < surroundingkeyframeAddingDistThreshold)

return false;

return true;

}

void addOdomFactor()

{

//gc: the first key pose

if (cloudKeyPoses3D->points.empty())

{

noiseModel::Diagonal::shared_ptr priorNoise = noiseModel::Diagonal::Variances((Vector(6) << 1e-2, 1e-2, M_PI*M_PI, 1e8, 1e8, 1e8).finished()); // rad*rad, meter*meter

gtSAMgraph.add(PriorFactor(0, trans2gtsamPose(transformTobeMapped), priorNoise));

initialEstimate.insert(0, trans2gtsamPose(transformTobeMapped));

}else{

noiseModel::Diagonal::shared_ptr odometryNoise = noiseModel::Diagonal::Variances((Vector(6) << 1e-6, 1e-6, 1e-6, 1e-4, 1e-4, 1e-4).finished());

gtsam::Pose3 poseFrom = pclPointTogtsamPose3(cloudKeyPoses6D->points.back());

gtsam::Pose3 poseTo = trans2gtsamPose(transformTobeMapped);

//gc: add constraint between current pose and previous pose

gtSAMgraph.add(BetweenFactor(cloudKeyPoses3D->size()-1, cloudKeyPoses3D->size(), poseFrom.between(poseTo), odometryNoise));

initialEstimate.insert(cloudKeyPoses3D->size(), poseTo);

}

}

void addGPSFactor()

{

if (gpsQueue.empty())

return;

// wait for system initialized and settles down

if (cloudKeyPoses3D->points.empty())

return;

else

{

//gc: the translation between the first and last pose is very small

if (pointDistance(cloudKeyPoses3D->front(), cloudKeyPoses3D->back()) < 5.0)

return;

}

// pose covariance small, no need to correct

//gc: the odom covariance is small TODO: although small maybe some interpolation

if (poseCovariance(3,3) < poseCovThreshold && poseCovariance(4,4) < poseCovThreshold)

return;

// last gps position

static PointType lastGPSPoint;

while (!gpsQueue.empty())

{

if (gpsQueue.front().header.stamp.toSec() < timeLaserCloudInfoLast - 0.2)

{

// message too old

gpsQueue.pop_front();

}

else if (gpsQueue.front().header.stamp.toSec() > timeLaserCloudInfoLast + 0.2)

{

// message too new

break;

}

else

{

nav_msgs::Odometry thisGPS = gpsQueue.front();

gpsQueue.pop_front();

// GPS too noisy, skip

float noise_x = thisGPS.pose.covariance[0];

float noise_y = thisGPS.pose.covariance[7];

float noise_z = thisGPS.pose.covariance[14];

if (noise_x > gpsCovThreshold || noise_y > gpsCovThreshold)

continue;

float gps_x = thisGPS.pose.pose.position.x;

float gps_y = thisGPS.pose.pose.position.y;

float gps_z = thisGPS.pose.pose.position.z;

if (!useGpsElevation)

{

gps_z = transformTobeMapped[5];

noise_z = 0.01;

}

// GPS not properly initialized (0,0,0)

if (abs(gps_x) < 1e-6 && abs(gps_y) < 1e-6)

continue;

// Add GPS every a few meters

PointType curGPSPoint;

curGPSPoint.x = gps_x;

curGPSPoint.y = gps_y;

curGPSPoint.z = gps_z;

if (pointDistance(curGPSPoint, lastGPSPoint) < 5.0)

continue;

else

lastGPSPoint = curGPSPoint;

gtsam::Vector Vector3(3);

Vector3 << max(noise_x, 1.0f), max(noise_y, 1.0f), max(noise_z, 1.0f);

noiseModel::Diagonal::shared_ptr gps_noise = noiseModel::Diagonal::Variances(Vector3);

gtsam::GPSFactor gps_factor(cloudKeyPoses3D->size(), gtsam::Point3(gps_x, gps_y, gps_z), gps_noise);

gtSAMgraph.add(gps_factor);

aLoopIsClosed = true;

break;

}

}

}

/*

* 设置当前帧为关键帧并执行因子图优化

* 1、计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧

* 2、添加激光里程计因子、GPS因子、闭环因子

* 3、执行因子图优化

* 4、得到当前帧优化后位姿,位姿协方差

* 5、添加cloudKeyPoses3D,cloudKeyPoses6D,更新transformTobeMapped,添加当前关键帧的角点、平面点集合

*/

void saveKeyFramesAndFactor()

{

//gc: judge whther should generate key pose

// 计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧

if (saveFrame() == false)

return;

// 往GTSAM里添加因子都是添加的是序号和相对间的位姿变换和noise(一般设置为固定值),

// odom factor

//gc: add odom factor in the graph

addOdomFactor();

// gps factor

addGPSFactor();

// cout << "****************************************************" << endl;

// gtSAMgraph.print("GTSAM Graph:\n");

// update iSAM

// 执行优化

isam->update(gtSAMgraph, initialEstimate);

isam->update();

// update之后要清空一下保存的因子图,注:历史数据不会清掉,ISAM保存起来了

gtSAMgraph.resize(0);

initialEstimate.clear();

//save key poses

PointType thisPose3D;

PointTypePose thisPose6D;

Pose3 latestEstimate;

// 优化结果

isamCurrentEstimate = isam->calculateEstimate();

// 当前位姿结果

latestEstimate = isamCurrentEstimate.at(isamCurrentEstimate.size()-1);

// cout << "****************************************************" << endl;

// isamCurrentEstimate.print("Current estimate: ");

//gc:cloudKeyPoses3D can be used to calculate the nearest key frames

// cloudKeyPoses3D加入当前帧位姿

thisPose3D.x = latestEstimate.translation().x();

thisPose3D.y = latestEstimate.translation().y();

thisPose3D.z = latestEstimate.translation().z();

// 索引

thisPose3D.intensity = cloudKeyPoses3D->size(); // this can be used as index

cloudKeyPoses3D->push_back(thisPose3D);

// cloudKeyPoses6D加入当前帧位姿

thisPose6D.x = thisPose3D.x;

thisPose6D.y = thisPose3D.y;

thisPose6D.z = thisPose3D.z;

thisPose6D.intensity = thisPose3D.intensity ; // this can be used as index

thisPose6D.roll = latestEstimate.rotation().roll();

thisPose6D.pitch = latestEstimate.rotation().pitch();

thisPose6D.yaw = latestEstimate.rotation().yaw();

thisPose6D.time = timeLaserCloudInfoLast;

cloudKeyPoses6D->push_back(thisPose6D);

// cout << "****************************************************" << endl;

// cout << "Pose covariance:" << endl;

// cout << isam->marginalCovariance(isamCurrentEstimate.size()-1) << endl << endl;

// 位姿协方差

poseCovariance = isam->marginalCovariance(isamCurrentEstimate.size()-1);

// save updated transform

// transformTobeMapped更新当前帧位姿

transformTobeMapped[0] = latestEstimate.rotation().roll();

transformTobeMapped[1] = latestEstimate.rotation().pitch();

transformTobeMapped[2] = latestEstimate.rotation().yaw();

transformTobeMapped[3] = latestEstimate.translation().x();

transformTobeMapped[4] = latestEstimate.translation().y();

transformTobeMapped[5] = latestEstimate.translation().z();

// save all the received edge and surf points

// 当前帧激光角点、面点,降采样集合

// 把当前帧点云放进全局地图里

pcl::PointCloud::Ptr thisCornerKeyFrame(new pcl::PointCloud());

pcl::PointCloud::Ptr thisSurfKeyFrame(new pcl::PointCloud());

//gc:

pcl::copyPointCloud(*laserCloudCornerLastDS, *thisCornerKeyFrame);

pcl::copyPointCloud(*laserCloudSurfLastDS, *thisSurfKeyFrame);

// save key frame cloud

// 保存特征点降采样集合

cornerCloudKeyFrames.push_back(thisCornerKeyFrame);

surfCloudKeyFrames.push_back(thisSurfKeyFrame);

// save path for visualization

// 更新里程计轨迹

updatePath(thisPose6D);

}

void updatePointAssociateToMap()

{

transPointAssociateToMap = trans2Affine3f(transformTobeMapped);

}

// 进行完一次图优化之后,改变的不单单是当前帧的位姿,其它节点的位姿也可能变化,所以都更新一下

// 更新因子图所有变量节点的位姿,也就是所有历史关键帧的位姿,更新里程计轨迹

void correctPoses()

{

if (cloudKeyPoses3D->points.empty())

return;

if (aLoopIsClosed == true)

{

// clear path

// 清空里程计轨迹

globalPath.poses.clear();

// update key poses

//更新因子图所有变量节点的位姿,也就是所有历史关键帧的位姿,

int numPoses = isamCurrentEstimate.size();

for (int i = 0; i < numPoses; ++i)

{

cloudKeyPoses3D->points[i].x = isamCurrentEstimate.at(i).translation().x();

cloudKeyPoses3D->points[i].y = isamCurrentEstimate.at(i).translation().y();

cloudKeyPoses3D->points[i].z = isamCurrentEstimate.at(i).translation().z();

cloudKeyPoses6D->points[i].x = cloudKeyPoses3D->points[i].x;

cloudKeyPoses6D->points[i].y = cloudKeyPoses3D->points[i].y;

cloudKeyPoses6D->points[i].z = cloudKeyPoses3D->points[i].z;

cloudKeyPoses6D->points[i].roll = isamCurrentEstimate.at(i).rotation().roll();

cloudKeyPoses6D->points[i].pitch = isamCurrentEstimate.at(i).rotation().pitch();

cloudKeyPoses6D->points[i].yaw = isamCurrentEstimate.at(i).rotation().yaw();

updatePath(cloudKeyPoses6D->points[i]);

}

aLoopIsClosed = false;

// ID for reseting IMU pre-integration

++imuPreintegrationResetId;

}

}

// 更新里程计轨迹。输入:thisPose6D

void updatePath(const PointTypePose& pose_in)

{

geometry_msgs::PoseStamped pose_stamped;

pose_stamped.header.stamp = ros::Time().fromSec(pose_in.time);

pose_stamped.header.frame_id = "odom";

pose_stamped.pose.position.x = pose_in.x;

pose_stamped.pose.position.y = pose_in.y;

pose_stamped.pose.position.z = pose_in.z;

tf::Quaternion q = tf::createQuaternionFromRPY(pose_in.roll, pose_in.pitch, pose_in.yaw);

pose_stamped.pose.orientation.x = q.x();

pose_stamped.pose.orientation.y = q.y();

pose_stamped.pose.orientation.z = q.z();

pose_stamped.pose.orientation.w = q.w();

globalPath.poses.push_back(pose_stamped);

}

// 较原码修改、删除较多

void publishOdometry()

{

// Publish odometry for ROS

nav_msgs::Odometry laserOdometryROS;

laserOdometryROS.header.stamp = timeLaserInfoStamp;

laserOdometryROS.header.frame_id = "odom";

laserOdometryROS.child_frame_id = "odom_mapping";

laserOdometryROS.pose.pose.position.x = transformTobeMapped[3];

laserOdometryROS.pose.pose.position.y = transformTobeMapped[4];

laserOdometryROS.pose.pose.position.z = transformTobeMapped[5];

laserOdometryROS.pose.pose.orientation = tf::createQuaternionMsgFromRollPitchYaw(transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);

laserOdometryROS.pose.covariance[0] = double(imuPreintegrationResetId);

// 没有经过IMU数据融合,单纯由mapOptimization经过GTSAM优化后的位姿是由pubOdomAftMappedROS发布的

// 它的话题是"lio_sam/mapping/odometry",这个是由transformFusion订阅的,用于与IMU里程计进行融合

pubOdomAftMappedROS.publish(laserOdometryROS);

// 在publishOdometry()里,删除了pubLaserOdometryIncremental,它的话题是"lio_sam/mapping/odometry_incremental"

// 它发布的laserOdomIncremental位姿是经过IMU数据加权融合的,这个是由IMUPreintegration订阅的

// 用于制作高频的IMU里程计;

// 代码段如下:

// Publish odometry for ROS (incremental)

// static bool lastIncreOdomPubFlag = false;

// static nav_msgs::Odometry laserOdomIncremental; // incremental odometry msg

// static Eigen::Affine3f increOdomAffine; // incremental odometry in affine

// //第一次数据直接用全局里程计初始化

// if (lastIncreOdomPubFlag == false)

// {

// lastIncreOdomPubFlag = true;

// laserOdomIncremental = laserOdometryROS;

// increOdomAffine = trans2Affine3f(transformTobeMapped);

// } else {

// // 当前帧与前一帧之间的位姿变换

// Eigen::Affine3f affineIncre = incrementalOdometryAffineFront.inverse() * incrementalOdometryAffineBack;

// increOdomAffine = increOdomAffine * affineIncre;

// float x, y, z, roll, pitch, yaw;

// pcl::getTranslationAndEulerAngles (increOdomAffine, x, y, z, roll, pitch, yaw);

// if (cloudInfo.imuAvailable == true)

// {

// if (std::abs(cloudInfo.imuPitchInit) < 1.4)

// {

// double imuWeight = 0.1;

// tf::Quaternion imuQuaternion;

// tf::Quaternion transformQuaternion;

// double rollMid, pitchMid, yawMid;

// // slerp roll

// // roll姿态角加权平均

// transformQuaternion.setRPY(roll, 0, 0);

// imuQuaternion.setRPY(cloudInfo.imuRollInit, 0, 0);

// tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);

// roll = rollMid;

// // slerp pitch

// // pitch姿态角加权平均

// transformQuaternion.setRPY(0, pitch, 0);

// imuQuaternion.setRPY(0, cloudInfo.imuPitchInit, 0);

// tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);

// pitch = pitchMid;

// }

// }

// laserOdomIncremental.header.stamp = timeLaserInfoStamp;

// laserOdomIncremental.header.frame_id = odometryFrame;

// laserOdomIncremental.child_frame_id = "odom_mapping";

// laserOdomIncremental.pose.pose.position.x = x;

// laserOdomIncremental.pose.pose.position.y = y;

// laserOdomIncremental.pose.pose.position.z = z;

// laserOdomIncremental.pose.pose.orientation = tf::createQuaternionMsgFromRollPitchYaw(roll, pitch, yaw);

// if (isDegenerate)

// laserOdomIncremental.pose.covariance[0] = 1;

// else

// laserOdomIncremental.pose.covariance[0] = 0;

// }

// pubLaserOdometryIncremental.publish(laserOdomIncremental);

// }

// 较原码还删除了isDegenerate

// 这个判断是在scan2map的LMOptimization那里计算匹配的J矩阵时获得的

// 如果发生退化,那么标志位covariance[0] = 1,在IMUPreintegration那里的图优化会给当前位姿的计算加一个大的方差。

// if (isDegenerate)

// laserOdomIncremental.pose.covariance[0] = 1;

// else

// laserOdomIncremental.pose.covariance[0] = 0;

// tum位姿输出到txt文档

std::ofstream pose1("/home/clf/trajectory/lio-sam-tum/test.txt", std::ios::app);

std::ofstream pose2("/home/clf/trajectory/lio-sam-tum/test_time.txt", std::ios::app);

pose1.setf(std::ios::scientific, std::ios::floatfield);

pose2.setf(std::ios::scientific, std::ios::floatfield);

// pose1.precision(15);

//save final trajectory in the left camera coordinate system.

Eigen::Matrix3d rotation_matrix;

// rotation_matrix = q_w_curr.toRotationMatrix();

rotation_matrix = Eigen::AngleAxisd(transformTobeMapped[2], Eigen::Vector3d::UnitZ()) *

Eigen::AngleAxisd(transformTobeMapped[1], Eigen::Vector3d::UnitY()) *

Eigen::AngleAxisd(transformTobeMapped[0], Eigen::Vector3d::UnitX());

Eigen::Matrix myaloam_pose;

myaloam_pose.topLeftCorner(3,3) = rotation_matrix;

myaloam_pose(0,3) = laserOdometryROS.pose.pose.position.x;

myaloam_pose(1,3) = laserOdometryROS.pose.pose.position.y;

myaloam_pose(2,3) = laserOdometryROS.pose.pose.position.z;

Eigen::Matrix cali_paremeter;

// cali_paremeter << 4.276802385584e-04, -9.999672484946e-01, -8.084491683471e-03, -1.198459927713e-02, //00-02

// -7.210626507497e-03, 8.081198471645e-03, -9.999413164504e-01, -5.403984729748e-02,

// 9.999738645903e-01, 4.859485810390e-04, -7.206933692422e-03, -2.921968648686e-01,

// 0, 0, 0, 1;

cali_paremeter << -1.857739385241e-03,-9.999659513510e-01, -8.039975204516e-03, -4.784029760483e-03, //04-10

-6.481465826011e-03, 8.051860151134e-03, -9.999466081774e-01, -7.337429464231e-02,

9.999773098287e-01, -1.805528627661e-03, -6.496203536139e-03, -3.339968064433e-01,

0, 0, 0, 1;

/*cali_paremeter << 2.347736981471e-04, -9.999441545438e-01, -1.056347781105e-02, -2.796816941295e-03, // 03

1.044940741659e-02, 1.056535364138e-02, -9.998895741176e-01, -7.510879138296e-02,

9.999453885620e-01, 1.243653783865e-04, 1.045130299567e-02, -2.721327964059e-01,

0, 0, 0, 1;*/

Eigen::Matrix myloam_pose_f;

myloam_pose_f = cali_paremeter * myaloam_pose * cali_paremeter.inverse();

pose1 << myloam_pose_f(0,0) << " " << myloam_pose_f(0,1) << " " << myloam_pose_f(0,2) << " " << myloam_pose_f(0,3) << " "

<< myloam_pose_f(1,0) << " " << myloam_pose_f(1,1) << " " << myloam_pose_f(1,2) << " " << myloam_pose_f(1,3) << " "

<< myloam_pose_f(2,0) << " " << myloam_pose_f(2,1) << " " << myloam_pose_f(2,2) << " " << myloam_pose_f(2,3) << std::endl;

// 获取当前更新的时间 这样与ground turth对比才更准确

// 相当于是第一帧的时间

static auto T1 = timeLaserInfoStamp;

pose2 << laserOdometryROS.header.stamp - T1 << std::endl;

pose1.close();

pose2.close();

}

void publishFrames()

{

if (cloudKeyPoses3D->points.empty())

return;

// publish key poses

publishCloud(&pubKeyPoses, cloudKeyPoses3D, timeLaserInfoStamp, "odom");

// Publish surrounding key frames

publishCloud(&pubRecentKeyFrames, laserCloudSurfFromMapDS, timeLaserInfoStamp, "odom");

// publish registered key frame

//gc: feature points

if (pubRecentKeyFrame.getNumSubscribers() != 0)

{

pcl::PointCloud::Ptr cloudOut(new pcl::PointCloud());

PointTypePose thisPose6D = trans2PointTypePose(transformTobeMapped);

*cloudOut += *transformPointCloud(laserCloudCornerLastDS, &thisPose6D);

*cloudOut += *transformPointCloud(laserCloudSurfLastDS, &thisPose6D);

publishCloud(&pubRecentKeyFrame, cloudOut, timeLaserInfoStamp, "odom");

}

// publish registered high-res raw cloud

//gc: whole point_cloud of the scan

if (pubCloudRegisteredRaw.getNumSubscribers() != 0)

{

pcl::PointCloud::Ptr cloudOut(new pcl::PointCloud());

pcl::fromROSMsg(cloudInfo.cloud_deskewed, *cloudOut);

PointTypePose thisPose6D = trans2PointTypePose(transformTobeMapped);

*cloudOut = *transformPointCloud(cloudOut, &thisPose6D);

publishCloud(&pubCloudRegisteredRaw, cloudOut, timeLaserInfoStamp, "odom");

}

// publish path

if (pubPath.getNumSubscribers() != 0)

{

globalPath.header.stamp = timeLaserInfoStamp;

globalPath.header.frame_id = "odom";

pubPath.publish(globalPath);

}

}

};

int main(int argc, char** argv)

{

ros::init(argc, argv, "lio_sam");

mapOptimization MO;

ROS_INFO("\033[1;32m----> Map Optimization Started.\033[0m");

std::thread loopthread(&mapOptimization::loopClosureThread, &MO);

std::thread visualizeMapThread(&mapOptimization::visualizeGlobalMapThread, &MO);

ros::spin();

loopthread.join();

visualizeMapThread.join();

return 0;

} 之后会抽空详细写一下kitti数据集的制作、轨迹代码的选择和修改、evo工具的使用等等内容。

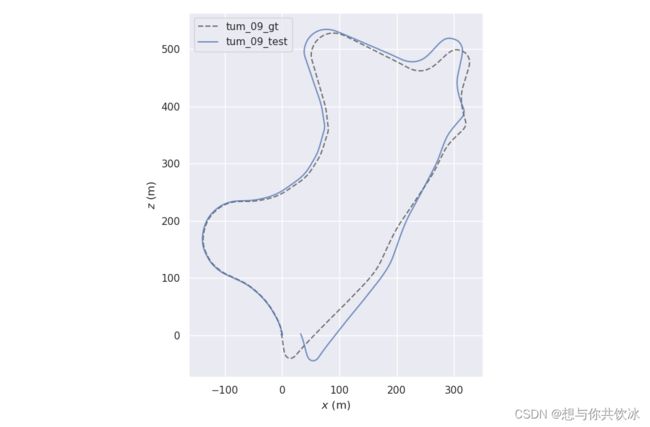

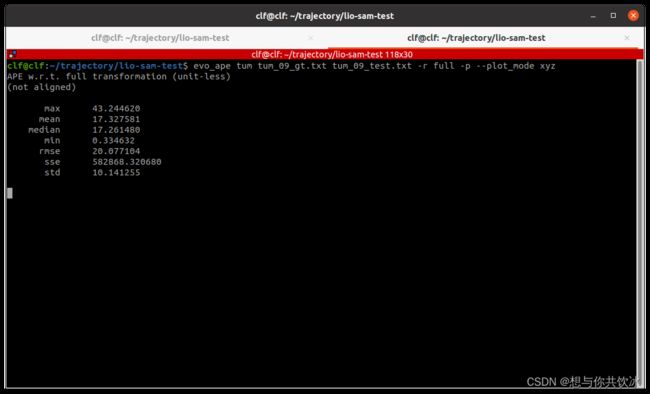

最后放几张笔者的测试结果图: