Halcon Deep Learning Object Detection and Calling the Model for Recognition from a C# Client

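Contents

- Object detection sample program
- Tools
- Labeling images
- Sample images
- Import and label images
- Sample project location
- HALCON code
- Exporting to a language file
- Running detection with the model from a C# client
- Create the project
- Code
- Summary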

Object detection sample program

Tools

Labeling images

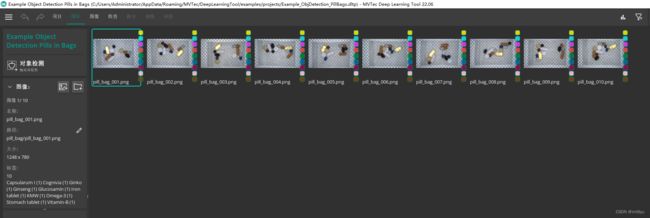

Sample images

The images come from the HALCON demo examples:

C:\Users\Public\Documents\MVTec\HALCON-20.11-Steady\examples\images\food

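Import and label images

In the MVTec Deep Learning Tool (HALCON's labeling tool), create a new object detection project, then import the images and label them as shown in the figure.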

Sample project location

C:\Users\Administrator\AppData\Roaming\MVTec\DeepLearningTool\examples\projects\Example_ObjDetection_PillBags.dltp

HALCON code

```
*
* Deep learning object detection workflow:
*
* This example demonstrates the overall workflow for
* object detection based on deep learning, using axis-aligned bounding boxes.
* (instance_type = 'rectangle1')
*
* PLEASE NOTE:
* - This is a bare-bones example.
* - For this, default parameters are used as much as possible.
*   Hence, the results may differ from those obtained in other examples.
* - For more detailed steps, please refer to the respective examples from the series,
*   e.g. detect_pills_deep_learning_1_prepare.hdev etc.
*
dev_close_window ()
dev_update_off ()
set_system ('seed_rand', 42)
*
* *** 0) SET INPUT/OUTPUT PATHS ***
*
* get_system ('example_dir', HalconExampleDir)
* PillBagJsonFile := HalconExampleDir + '/hdevelop/Deep-Learning/Detection/pill_bag.json'
* InputImageDir := HalconExampleDir + '/images/'
*
OutputDir := 'detect_pills_data'
* Set to true, if the results should be deleted after running this program.
RemoveResults := false
*
* *** 1.) PREPARE ***
*
* Read in a DLDataset.
* The original example reads the data from a COCO file (commented out below).
* Here, instead, the DLDataset dictionary exported from the
* MVTec Deep Learning Tool is read with read_dict ().
* read_dl_dataset_from_coco (PillBagJsonFile, InputImageDir, [], DLDataset)
read_dict ('D:/test/halcon/images/pill_bag/药片检测.hdict', [], [], DLDataset)
*
* Create the detection model with parameters suitable for
* the dataset.
create_dict (DLModelDetectionParam)
set_dict_tuple (DLModelDetectionParam, 'image_dimensions', [512,320,3])
set_dict_tuple (DLModelDetectionParam, 'max_level', 4)
get_dict_tuple (DLDataset, 'class_ids', ClassIDs)
set_dict_tuple (DLModelDetectionParam, 'class_ids', ClassIDs)
create_dl_model_detection ('pretrained_dl_classifier_compact.hdl', |ClassIDs|, DLModelDetectionParam, DLModelHandle)
*
* Preprocess the data in DLDataset.
* 60% of the samples are used for training, 20% for validation, the rest for testing.
split_dl_dataset (DLDataset, 60, 20, [])
create_dict (PreprocessSettings)
* Here, existing preprocessed data will be overwritten.
set_dict_tuple (PreprocessSettings, 'overwrite_files', true)
create_dl_preprocess_param_from_model (DLModelHandle, 'none', 'full_domain', [], [], [], DLPreprocessParam)
preprocess_dl_dataset (DLDataset, OutputDir, DLPreprocessParam, PreprocessSettings, DLDatasetFileName)
* Save the preprocessing parameters; the C# client reads this file for inference.
write_dict (DLPreprocessParam, 'D:/test/halcon/目标检测-药片/detect_pills_data/dl_preprocess_param.hdict', [], [])
*
* Inspect 10 randomly selected preprocessed DLSamples visually.
* create_dict (WindowDict)
* get_dict_tuple (DLDataset, 'samples', DatasetSamples)
* for Index := 0 to 9 by 1
*     SampleIndex := round(rand(1) * (|DatasetSamples| - 1))
*     read_dl_samples (DLDataset, SampleIndex, DLSample)
*     dev_display_dl_data (DLSample, [], DLDataset, 'bbox_ground_truth', [], WindowDict)
*     dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])
*     stop ()
* endfor
* dev_close_window_dict (WindowDict)
stop ()
*
* *** 2.) TRAIN ***
*
* Training can be performed on a GPU or CPU.
* See the respective system requirements in the Installation Guide.
* If possible a GPU is used in this example.
* In case you explicitly wish to run this example on the CPU,
* choose the CPU device instead.
query_available_dl_devices (['runtime','runtime'], ['gpu','cpu'], DLDeviceHandles)
if (|DLDeviceHandles| == 0)
    throw ('No supported device found to continue this example.')
endif
* Due to the filter used in query_available_dl_devices, the first device is a GPU, if available.
* (Index 0 selects that first device; index 1 would fail if only one device is found.)
DLDevice := DLDeviceHandles[0]
get_dl_device_param (DLDevice, 'type', DLDeviceType)
if (DLDeviceType == 'cpu')
    * The number of used threads may have an impact
    * on the training duration.
    NumThreadsTraining := 4
    set_system ('thread_num', NumThreadsTraining)
endif
*
* For details see the documentation of set_dl_model_param () and get_dl_model_param ().
set_dl_model_param (DLModelHandle, 'batch_size', 1)
set_dl_model_param (DLModelHandle, 'learning_rate', 0.001)
set_dl_model_param (DLModelHandle, 'device', DLDevice)
*
* Here, we run a short training of 10 epochs.
* For better model performance increase the number of epochs,
* from 10 to e.g. 60.
create_dl_train_param (DLModelHandle, 10, 1, 'true', 42, [], [], TrainParam)
* The training and thus the call of train_dl_model_batch ()
* is done using the following procedure.
train_dl_model (DLDataset, DLModelHandle, TrainParam, 0, TrainResults, TrainInfos, EvaluationInfos)
*
* Read the best model, which is written to file by train_dl_model.
read_dl_model ('model_best.hdl', DLModelHandle)
dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'left', 'black', [], [])
stop ()
*
dev_close_window ()
dev_close_window ()
stop ()
*
* *** 3.) EVALUATE ***
*
create_dict (GenParamEval)
set_dict_tuple (GenParamEval, 'detailed_evaluation', true)
set_dict_tuple (GenParamEval, 'show_progress', true)
*
* Run the evaluation on the CPU, because the MX320 GPU
* in this laptop is not sufficient for it.
*
set_dl_model_param (DLModelHandle, 'runtime', 'cpu')
evaluate_dl_model (DLDataset, DLModelHandle, 'split', 'test', GenParamEval, EvaluationResult, EvalParams)
*
create_dict (DisplayMode)
set_dict_tuple (DisplayMode, 'display_mode', ['pie_charts_precision','pie_charts_recall'])
* WindowDict must exist before it is passed to the display procedure
* (its creation in step 1 is commented out).
create_dict (WindowDict)
dev_display_detection_detailed_evaluation (EvaluationResult, EvalParams, DisplayMode, WindowDict)
dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])
stop ()
dev_close_window_dict (WindowDict)
*
* Optimize the model for inference,
* meaning, reduce its memory consumption.
set_dl_model_param (DLModelHandle, 'optimize_for_inference', 'true')
set_dl_model_param (DLModelHandle, 'batch_size', 1)
* Save the model in this optimized state.
write_dl_model (DLModelHandle, 'model_best.hdl')
stop ()
*
* *** 4.) INFER ***
*
* To demonstrate the inference steps, we apply
* the trained model to some randomly chosen example images.
list_image_files ('C:/Users/Administrator/AppData/Roaming/MVTec/DeepLearningTool/examples/images/pill_bag', 'default', 'recursive', ImageFiles)
tuple_shuffle (ImageFiles, ImageFilesShuffled)
*
create_dict (WindowDict)
for IndexInference := 0 to 9 by 1
    read_image (Image, ImageFilesShuffled[IndexInference])
    gen_dl_samples_from_images (Image, DLSampleInference)
    preprocess_dl_samples (DLSampleInference, DLPreprocessParam)
    apply_dl_model (DLModelHandle, DLSampleInference, [], DLResult)
    *
    dev_display_dl_data (DLSampleInference, DLResult, DLDataset, 'bbox_result', [], WindowDict)
    dev_disp_text ('Press F5 to continue', 'window', 'bottom', 'right', 'black', [], [])
    stop ()
endfor
dev_close_window_dict (WindowDict)
```
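In the script, split_dl_dataset (DLDataset, 60, 20, []) assigns 60% of the samples to training, 20% to validation and the remaining 20% to the test split that evaluate_dl_model uses later. The following is a minimal, HALCON-free sketch of that percentage arithmetic in C#. The class DatasetSplitSketch and its Split method are hypothetical illustrations, not part of HALCON, and HALCON's own procedure may shuffle, round or balance classes differently.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper (not part of HALCON): a percentage-based
// train/validation/test split in the spirit of split_dl_dataset (DLDataset, 60, 20, []).
public static class DatasetSplitSketch
{
    public static (List<int> Train, List<int> Validation, List<int> Test) Split(
        int sampleCount, int trainPercent, int validationPercent, int seed)
    {
        if (sampleCount < 0 || trainPercent < 0 || validationPercent < 0 ||
            trainPercent + validationPercent > 100)
            throw new ArgumentException("Invalid sample count or percentages.");

        // Fisher-Yates shuffle with a fixed seed, so the split is reproducible
        // (analogous to set_system ('seed_rand', 42) in the HDevelop script).
        var rng = new Random(seed);
        int[] indices = Enumerable.Range(0, sampleCount).ToArray();
        for (int i = indices.Length - 1; i > 0; i--)
        {
            int j = rng.Next(i + 1);
            (indices[i], indices[j]) = (indices[j], indices[i]);
        }

        // Integer division rounds down; the test split takes whatever is left.
        int trainCount = sampleCount * trainPercent / 100;
        int validationCount = sampleCount * validationPercent / 100;

        var train = indices.Take(trainCount).ToList();
        var validation = indices.Skip(trainCount).Take(validationCount).ToList();
        var test = indices.Skip(trainCount + validationCount).ToList();
        return (train, validation, test);
    }
}
```

For example, DatasetSplitSketch.Split(100, 60, 20, 42) yields 60, 20 and 20 sample indices; with 7 samples the rounding gives 4, 1 and 2.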

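Exporting to a language file

Running detection with the model from a C# client

Create the project

- Create a new desktop (Windows Forms) project
- Add a reference to the HALCON .NET assembly (halcondotnet.dll)
- Add the exported .cs file to the project
- Create the form

The form contains a picture box (pictureBox1) that shows the selected image, a rich text box (richTextBox1) for the detection results, two buttons (button1 opens an image, button2 runs the detection) and an open-file dialog (openFileDialog1).

Code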

```csharp
using HalconDotNet;
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Data;
using System.Drawing;
using System.IO;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using System.Windows.Forms;

namespace 目标检测
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
            this.Load += Form1_Load;
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            openFileDialog1.FileOk += OpenFileDialog1_FileOk;
        }

        // Show the selected image in the picture box.
        private void OpenFileDialog1_FileOk(object sender, CancelEventArgs e)
        {
            pictureBox1.Image = new Bitmap(openFileDialog1.FileName);
        }

        // "Open" button: let the user pick an image.
        private void button1_Click(object sender, EventArgs e)
        {
            openFileDialog1.ShowDialog();
        }

        // "Detect" button: run the model on the selected image.
        private void button2_Click(object sender, EventArgs e)
        {
            // The designer initializes FileName to "openFileDialog1", so a
            // non-empty check is not enough; make sure the file really exists.
            if (File.Exists(openFileDialog1.FileName))
                Action(openFileDialog1.FileName);
        }

        private void Action(string filename)
        {
            // Class generated by HDevelop's language export; it provides the
            // gen_dl_samples_from_images and preprocess_dl_samples procedures.
            HDevelopExport developExport = new HDevelopExport();

            // Local iconic variables
            HObject ho_ImageBatch;

            // Local control variables
            HTuple hv_DLDeviceHandles = new HTuple(), hv_DLDevice = new HTuple();
            HTuple hv_ClassNames = new HTuple(), hv_ClassIDs = new HTuple();
            HTuple hv_DLModelHandle = new HTuple(), hv_DLPreprocessParam = new HTuple();
            HTuple hv_WindowHandleDict = new HTuple(), hv_DLDataInfo = new HTuple();
            HTuple hv_DLSample = new HTuple(), hv_DLResult = new HTuple();
            HTuple hv_txt = new HTuple(), hv_DetectedClassIDs = new HTuple();
            HTuple hv_DetectedConfidences = new HTuple(), hv_Rows1 = new HTuple();
            HTuple hv_Rows2 = new HTuple(), hv_Cols1 = new HTuple();
            HTuple hv_Cols2 = new HTuple(), hv_Index = new HTuple();

            // Initialize local and output iconic variables
            HOperatorSet.GenEmptyObj(out ho_ImageBatch);
            try
            {
                //
                // This code is based on part 4 ('Inference on new images') of the MVTec
                // DL object detection example series, which uses the pill bag dataset.
                // The four parts of the workflow are:
                // 1. Creation of the model and dataset preprocessing.
                // 2. Training of the model.
                // 3. Evaluation of the trained model.
                // 4. Inference on new images.
                //
                // Instead of a pretrained model, it reads the model (model_best.hdl) and the
                // preprocessing parameters written by the HDevelop script above.
                //
                // Inference can be done on a GPU or CPU.
                // See the respective system requirements in the Installation Guide.
                // If possible a GPU is used here.
                // In case you explicitly wish to run on the CPU,
                // choose the CPU device instead.
                hv_DLDeviceHandles.Dispose();
                HOperatorSet.QueryAvailableDlDevices((new HTuple("runtime")).TupleConcat("runtime"),
                    (new HTuple("gpu")).TupleConcat("cpu"), out hv_DLDeviceHandles);
                if ((int)(new HTuple((new HTuple(hv_DLDeviceHandles.TupleLength())).TupleEqual(0))) != 0)
                {
                    throw new HalconException("No supported device found to continue this example.");
                }
                // Due to the filter used in query_available_dl_devices, the first device is a GPU, if available.
                hv_DLDevice.Dispose();
                using (HDevDisposeHelper dh = new HDevDisposeHelper())
                {
                    hv_DLDevice = hv_DLDeviceHandles.TupleSelect(0);
                }
                // Provide the class names and IDs.
                // Class names.
                // ClassNames := ['Omega-3','KMW','Stomach tablet','Ginko','Ginseng','Glucosamine','Cognivia','Capsularum I','Iron tablet','Vitamin-B']
                // Respective class ids.
                // ClassIDs := [1,2,3,4,5,6,7,8,9,10]
                //
                // Batch Size used during inference.
                // BatchSizeInference := 1
                //
                // Postprocessing parameters for the detection model.
                // MinConfidence := 0.6
                // MaxOverlap := 0.2
                // MaxOverlapClassAgnostic := 0.7
                //
                // ********************
                // **   Inference    **
                // ********************
                // Read in the retrained model.
                hv_DLModelHandle.Dispose();
                HOperatorSet.ReadDlModel("D:/test/halcon/目标检测-药片/model_best.hdl", out hv_DLModelHandle);
                hv_ClassIDs.Dispose();
                HOperatorSet.GetDlModelParam(hv_DLModelHandle, "class_ids", out hv_ClassIDs);
                // get_dl_model_param (DLModelHandle, 'class_names', ClassNames)
                // The names must be listed in the same order as the IDs in ClassIDs.
                hv_ClassNames.Dispose();
                hv_ClassNames = new HTuple();
                hv_ClassNames[0] = "Omega-3";
                hv_ClassNames[1] = "KMW";
                hv_ClassNames[2] = "Stomach tablet";
                hv_ClassNames[3] = "Ginko";
                hv_ClassNames[4] = "Ginseng";
                hv_ClassNames[5] = "Glucosamine";
                hv_ClassNames[6] = "Cognivia";
                hv_ClassNames[7] = "Capsularum I";
                hv_ClassNames[8] = "Iron tablet";
                hv_ClassNames[9] = "Vitamin-B";
                //
                // Set the batch size.
                HOperatorSet.SetDlModelParam(hv_DLModelHandle, "batch_size", 1);
                //
                // Initialize the model for inference.
                HOperatorSet.SetDlModelParam(hv_DLModelHandle, "device", hv_DLDevice);
                //
                // Set postprocessing parameters for model.
                // set_dl_model_param (DLModelHandle, 'min_confidence', MinConfidence)
                // set_dl_model_param (DLModelHandle, 'max_overlap', MaxOverlap)
                // set_dl_model_param (DLModelHandle, 'max_overlap_class_agnostic', MaxOverlapClassAgnostic)
                //
                // Get the parameters used for preprocessing.
                hv_DLPreprocessParam.Dispose();
                HOperatorSet.ReadDict("D:/test/halcon/目标检测-药片/detect_pills_data/dl_preprocess_param.hdict",
                    new HTuple(), new HTuple(), out hv_DLPreprocessParam);
                //
                // Create window dictionary for displaying results.
                hv_WindowHandleDict.Dispose();
                HOperatorSet.CreateDict(out hv_WindowHandleDict);
                // Create dictionary with dataset parameters necessary for displaying.
                hv_DLDataInfo.Dispose();
                HOperatorSet.CreateDict(out hv_DLDataInfo);
                HOperatorSet.SetDictTuple(hv_DLDataInfo, "class_names", hv_ClassNames);
                HOperatorSet.SetDictTuple(hv_DLDataInfo, "class_ids", hv_ClassIDs);
                // Read the images of the batch.
                ho_ImageBatch.Dispose();
                HOperatorSet.ReadImage(out ho_ImageBatch, filename);
                //
                // Generate the DLSampleBatch.
                hv_DLSample.Dispose();
                developExport.gen_dl_samples_from_images(ho_ImageBatch, out hv_DLSample);
                //
                // Preprocess the DLSampleBatch.
                developExport.preprocess_dl_samples(hv_DLSample, hv_DLPreprocessParam);
                //
                // Apply the DL model on the DLSampleBatch.
                hv_DLResult.Dispose();
                HOperatorSet.ApplyDlModel(hv_DLModelHandle, hv_DLSample, new HTuple(), out hv_DLResult);
                // Read the detected boxes (class, score, corner coordinates) from the result dict.
                hv_txt.Dispose();
                hv_txt = "";
                hv_DetectedClassIDs.Dispose();
                HOperatorSet.GetDictTuple(hv_DLResult, "bbox_class_id", out hv_DetectedClassIDs);
                hv_DetectedConfidences.Dispose();
                HOperatorSet.GetDictTuple(hv_DLResult, "bbox_confidence", out hv_DetectedConfidences);
                hv_Rows1.Dispose();
                HOperatorSet.GetDictTuple(hv_DLResult, "bbox_row1", out hv_Rows1);
                hv_Rows2.Dispose();
                HOperatorSet.GetDictTuple(hv_DLResult, "bbox_row2", out hv_Rows2);
                hv_Cols1.Dispose();
                HOperatorSet.GetDictTuple(hv_DLResult, "bbox_col1", out hv_Cols1);
                hv_Cols2.Dispose();
                HOperatorSet.GetDictTuple(hv_DLResult, "bbox_col2", out hv_Cols2);
                for (hv_Index = 0; (int)hv_Index <= (int)((new HTuple(hv_DetectedClassIDs.TupleLength())) - 1); hv_Index = (int)hv_Index + 1)
                {
                    using (HDevDisposeHelper dh = new HDevDisposeHelper())
                    {
                        // Look up the position of the detected ID in ClassIDs. The ID itself
                        // is not a valid index into ClassNames (IDs may start at 1).
                        HTuple hv_ClassIndex = hv_ClassIDs.TupleFind(hv_DetectedClassIDs.TupleSelect(hv_Index));
                        HTuple ExpTmpLocalVar_txt = hv_txt + "Result: " + hv_ClassNames.TupleSelect(hv_ClassIndex)
                            + ", score: " + hv_DetectedConfidences.TupleSelect(hv_Index)
                            + ", coords: ([" + hv_Rows1.TupleSelect(hv_Index) + "," + hv_Cols1.TupleSelect(hv_Index)
                            + "],[" + hv_Rows2.TupleSelect(hv_Index) + "," + hv_Cols2.TupleSelect(hv_Index) + "]);\n";
                        hv_txt.Dispose();
                        hv_txt = ExpTmpLocalVar_txt;
                    }
                }
                richTextBox1.AppendText(hv_txt.S);
            }
            finally
            {
                // Release all HALCON objects and tuples, whether or not an exception occurred.
                ho_ImageBatch.Dispose();
                hv_DLDeviceHandles.Dispose();
                hv_DLDevice.Dispose();
                hv_ClassNames.Dispose();
                hv_ClassIDs.Dispose();
                hv_DLModelHandle.Dispose();
                hv_DLPreprocessParam.Dispose();
                hv_WindowHandleDict.Dispose();
                hv_DLDataInfo.Dispose();
                hv_DLSample.Dispose();
                hv_DLResult.Dispose();
                hv_txt.Dispose();
                hv_DetectedClassIDs.Dispose();
                hv_DetectedConfidences.Dispose();
                hv_Rows1.Dispose();
                hv_Rows2.Dispose();
                hv_Cols1.Dispose();
                hv_Cols2.Dispose();
                hv_Index.Dispose();
            }
        }
    }
}
```
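The result loop in Action() combines parallel tuples (bbox_class_id, bbox_confidence, bbox_row1 and so on) into one text line per box. One pitfall is that a detected class ID is not necessarily a valid index into the class-name list, since the IDs in the commented list run from 1 to 10. The sketch below shows the same formatting step in plain C#, without HALCON, using a dictionary lookup from ID to name. DetectionTextSketch and its Format method are hypothetical names, and it assumes classNames[i] belongs to classIds[i].

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text;

// Hypothetical, HALCON-free sketch of the result-formatting step:
// detected IDs are mapped to names via the ClassIDs order, not used as indices.
public static class DetectionTextSketch
{
    public static string Format(
        IReadOnlyList<int> classIds, IReadOnlyList<string> classNames,
        IReadOnlyList<int> detectedIds, IReadOnlyList<double> confidences,
        IReadOnlyList<double> rows1, IReadOnlyList<double> cols1,
        IReadOnlyList<double> rows2, IReadOnlyList<double> cols2)
    {
        if (classIds.Count != classNames.Count)
            throw new ArgumentException("classIds and classNames must have the same length.");

        // Build the ID -> name lookup once.
        var nameById = new Dictionary<int, string>();
        for (int i = 0; i < classIds.Count; i++)
            nameById[classIds[i]] = classNames[i];

        var sb = new StringBuilder();
        for (int i = 0; i < detectedIds.Count; i++)
        {
            // Unknown IDs are reported instead of causing an index error.
            string name = nameById.TryGetValue(detectedIds[i], out var n)
                ? n
                : "<unknown id " + detectedIds[i] + ">";
            sb.AppendFormat(CultureInfo.InvariantCulture,
                "Result: {0}, score: {1:F2}, coords: ([{2:F1},{3:F1}],[{4:F1},{5:F1}]);\n",
                name, confidences[i], rows1[i], cols1[i], rows2[i], cols2[i]);
        }
        return sb.ToString();
    }
}
```

With class IDs {1, 2} and names {"Omega-3", "KMW"}, a box with ID 2, score 0.9 and corners (10, 20) and (30, 40) is printed as "Result: KMW, score: 0.90, coords: ([10.0,20.0],[30.0,40.0]);".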

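Summary

The above is only a minimal implementation of calling the model. A real application needs many more features, and in actual inspection more of the algorithm's parameters will have to be tuned.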
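Among the parameters worth tuning are the postprocessing values that the C# code above leaves commented out: min_confidence (0.6), max_overlap (0.2) and max_overlap_class_agnostic (0.7). Roughly, boxes below the confidence threshold are discarded, and overlapping boxes of the same class are suppressed when their overlap exceeds max_overlap. The sketch below illustrates that idea with a confidence filter followed by greedy per-class non-maximum suppression using intersection over union (IoU). It is an assumption-based illustration, not HALCON's implementation. The names Box, PostprocessSketch.IoU and PostprocessSketch.Filter are hypothetical, and the class-agnostic overlap is not modeled.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical box type using the same corner convention as the
// bbox_row1/bbox_col1/bbox_row2/bbox_col2 entries of DLResult.
public sealed class Box
{
    public Box(int classId, double confidence, double row1, double col1, double row2, double col2)
    {
        ClassId = classId; Confidence = confidence;
        Row1 = row1; Col1 = col1; Row2 = row2; Col2 = col2;
    }

    public int ClassId { get; }
    public double Confidence { get; }
    public double Row1 { get; }
    public double Col1 { get; }
    public double Row2 { get; }
    public double Col2 { get; }

    public double Area => Math.Max(0.0, Row2 - Row1) * Math.Max(0.0, Col2 - Col1);
}

public static class PostprocessSketch
{
    // Intersection over union of two axis-aligned boxes.
    public static double IoU(Box a, Box b)
    {
        double h = Math.Min(a.Row2, b.Row2) - Math.Max(a.Row1, b.Row1);
        double w = Math.Min(a.Col2, b.Col2) - Math.Max(a.Col1, b.Col1);
        if (h <= 0 || w <= 0) return 0.0;
        double intersection = h * w;
        return intersection / (a.Area + b.Area - intersection);
    }

    // 1) Drop boxes below minConfidence.
    // 2) Greedy per-class suppression: visit boxes by descending confidence and
    //    discard a box if it overlaps an already kept box of the same class by more than maxOverlap.
    public static List<Box> Filter(IEnumerable<Box> boxes, double minConfidence, double maxOverlap)
    {
        var kept = new List<Box>();
        foreach (Box box in boxes.Where(b => b.Confidence >= minConfidence)
                                 .OrderByDescending(b => b.Confidence))
        {
            bool suppressed = kept.Any(k => k.ClassId == box.ClassId && IoU(k, box) > maxOverlap);
            if (!suppressed)
                kept.Add(box);
        }
        return kept;
    }
}
```

Calling PostprocessSketch.Filter(boxes, 0.6, 0.2) mirrors the commented MinConfidence and MaxOverlap values. Raising maxOverlap keeps more neighbouring boxes of the same class, and lowering minConfidence keeps more uncertain detections.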