Hive3入门至精通(基础、部署、理论、SQL、函数、运算以及性能优化)1-14章

Hive3入门至精通(基础、部署、理论、SQL、函数、运算以及性能优化)1-14章

Hive3入门至精通(基础、部署、理论、SQL、函数、运算以及性能优化)15-28章

第1章:数据仓库基础理论

1-1.数据仓库概念

数据仓库(英语:Data Warehouse,简称数仓、DW),是一个用于存储、分析、报告的数据系统。

数据仓库的目的是构建面向分析的集成化数据环境,分析结果为企业提供决策支持(Decision Support)。

- 数据仓库本身并不“生产”任何数据,其数据来源于不同外部系统

- 同时数据仓库自身也不需要“消费”任何的数据,其结果开放给各个外部应用使用

- 这也是为什么叫“数据仓库”,而不叫“数据工厂”的原因

1-2.场景案例:数据仓库由来

数据仓库为了分析数据而来,分析结果给企业决策提供支撑。

企业中,信息数据总是用作两个目的:

(1)操作型记录的保存

(2)分析型决策的制定

操作型记录的保存

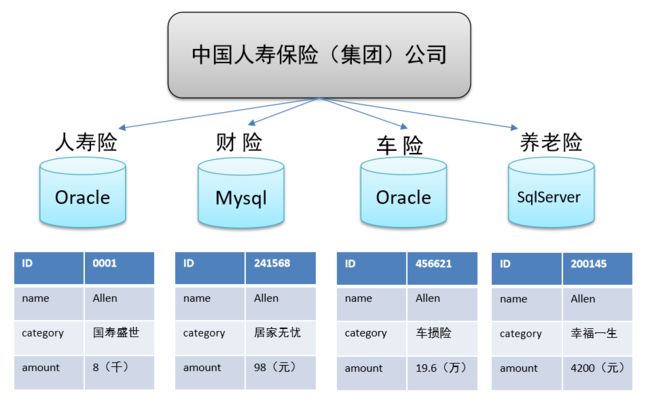

- 中国人寿保险(集团)公司下辖多条业务线,包括:人寿险、财险、车险,养老险等。各业务线的业务正常运营需要记录维护包括客户、保单、收付费、核保、理赔等信息。

- 联机事务处理系统(OLTP)正好可以满足上述业务需求开展, 其主要任务是执行联机事务处理。其基本特征是前台接收的用户数据可以立即传送到后台进行处理,并在很短的时间内给出处理结果。

- 关系型数据库(RDBMS)是OLTP典型应用,比如:Oracle、MySQL、SQL Server等

分析型决策的制定

随着集团业务的持续运营,业务数据将会越来越多。由此也产生出许多运营相关的困惑:

能够确定哪些险种正在恶化或已成为不良险种?

能够用有效的方式制定新增和续保的政策吗?

理赔过程有欺诈的可能吗?

现在得到的报表是否只是某条业务线的?集团整体层面数据如何?

…

为了能够正确认识这些问题,最稳妥办法就是:基于业务数据开展数据分析,基于分析的结果给决策提供支撑。也就是所谓的数据驱动决策的制定

OLTP环境开展数据分析可行性

-

OLTP系统的核心是面向业务,支持业务,支持事务。所有的业务操作可以分为读、写两种操作,一般来说读的压力明显大于写的压力。如果在OLTP环境直接开展各种分析,有以下问题需要考虑:

- 数据分析也是对数据进行读取操作,会让读取压力倍增;

- OLTP仅存储数周或数月的数据;

- 数据分散在不同系统不同表中,字段类型属性不统一;

-

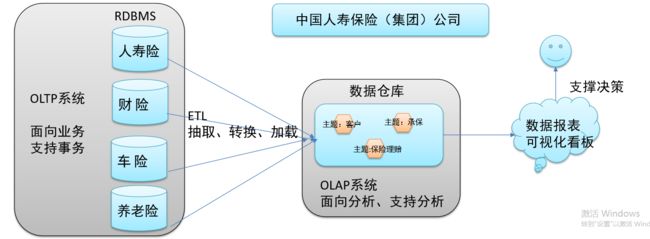

当分析所涉及数据规模较小的时候,在业务低峰期时可以在OLTP系统上开展直接分析。但是为了更好的进行各种规模的数据分析,同时也不影响OLTP系统运行,此时需要构建一个集成统一的数据分析平台。

-

该平台的目的很简单:面向分析,支持分析,并且和OLTP系统解耦合。

-

基于这种需求,数据仓库的雏形开始在企业中出现了

数据仓库的构建

- 如数仓定义所说,数仓是一个用于存储、分析、报告的数据系统,目的是构建面向分析的集成化数据环境。我们把这种面向分析、支持分析的系统称之为OLAP(联机分析处理)系统,数据仓库是OLAP一种。

- 中国人寿保险公司就可以基于分析决策需求,构建数仓平台。

1-3.数据仓库主要特征

- 数据仓库目的是构建面向分析的集成化数据环境,分析结果为企业提供决策支持(Decision Support)。

- 数据仓库本身并不“生产”任何数据,其数据来源于不同外部系统;

- 数据仓库自身也不需要“消费”任何的数据,其结果开放给各个外部应用使用;

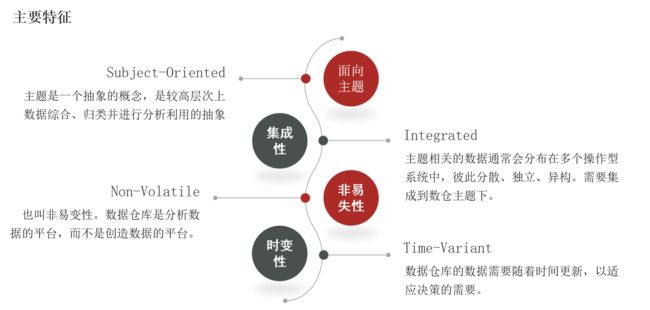

面向主题

- 数据库中,最大的特点是面向应用进行数据的组织,各个业务系统可能是相互分离的。

- 而数据仓库则是面向主题的。主题是一个抽象的概念,是较高层次上企业信息系统中的数据综合、归类并进行分析利用的抽象。在逻辑意义上,它是对应企业中某一宏观分析领域所涉及的分析对象。

- 操作型处理(传统数据)对数据的划分并不适用于决策分析。而基于主题组织的数据则不同,它们被划分为各自独立的领域,每个领域有各自的逻辑内涵但互不交叉,在抽象层次上对数据进行完整、一致和准确的描述。

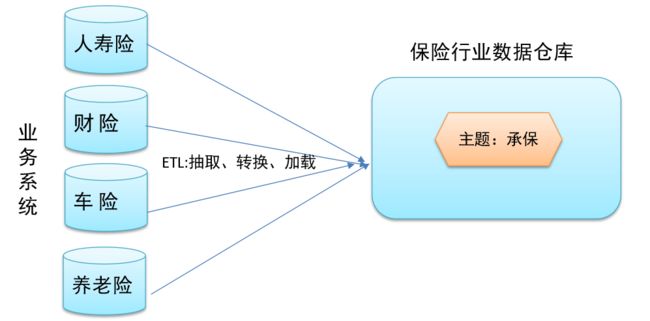

集成性

- 确定主题之后,就需要获取和主题相关的数据。当下企业中主题相关的数据通常会分布在多个操作型系统中,彼此分散、独立、异构。

- 在数据进入数据仓库之前,必然要经过统一与综合,对数据进行抽取、清理、转换和汇总,这一步是数据仓库建设中最关键、最复杂的一步,所要完成的工作有:

- 要统一源数据中所有矛盾之处,如字段的同名异义、异名同义、单位不统一、字长不一致,等等。

- 进行数据综合和计算。数据仓库中的数据综合工作可以在从原有数据库抽取数据时生成,但许多是在数据仓库内部生成的,即进入数据仓库以后进行综合生成的。

下图说明了保险公司综合数据的简单处理过程,其中数据仓库中与“承保”主题有关的数据来自于多个不同的操作型系统。这些系统内部数据的命名可能不同,数据格式也可能不同。把不同来源的数据存储到数据仓库之前,需要去除这些不一致

非易失性

- 数据仓库是分析数据的平台,而不是创造数据的平台。我们是通过数仓去分析数据中的规律,而不是去创造修改其中的规律。因此数据进入数据仓库后,它便稳定且不会改变。

- 操作型数据库主要服务于日常的业务操作,使得数据库需要不断地对数据实时更新,以便迅速获得当前最新数据,不至于影响正常的业务运作。在数据仓库中只要保存过去的业务数据,不需要每一笔业务都实时更新数据仓库,而是根据商业需要每隔一段时间把一批较新的数据导入数据仓库。

- 数据仓库的数据反映的是一段相当长的时间内历史数据的内容,是不同时点的数据库快照的集合,以及基于这些快照进行统计、综合和重组的导出数据。

- 数据仓库的用户对数据的操作大多是数据查询或比较复杂的挖掘,一旦数据进入数据仓库以后,一般情况下被较长时间保留。数据仓库中一般有大量的查询操作,但修改和删除操作很少。

时变性

- 数据仓库包含各种粒度的历史数据,数据可能与某个特定日期、星期、月份、季度或者年份有关。

- 数据仓库的用户不能修改数据,但并不是说数据仓库的数据是永远不变的。分析的结果只能反映过去的情况,当业务变化后,挖掘出的模式会失去时效性。因此数据仓库的数据需要随着时间更新,以适应决策的需要。从这个角度讲,数据仓库建设是一个项目,更是一个过程 。

- 数据仓库的数据随时间的变化表现在以下几个方面。

- 数据仓库的数据时限一般要远远长于操作型数据的数据时限。

- 操作型系统存储的是当前数据,而数据仓库中的数据是历史数据。

- 数据仓库中的数据是按照时间顺序追加的,它们都带有时间属性。

1-4.数据仓库、数据库、数据集市

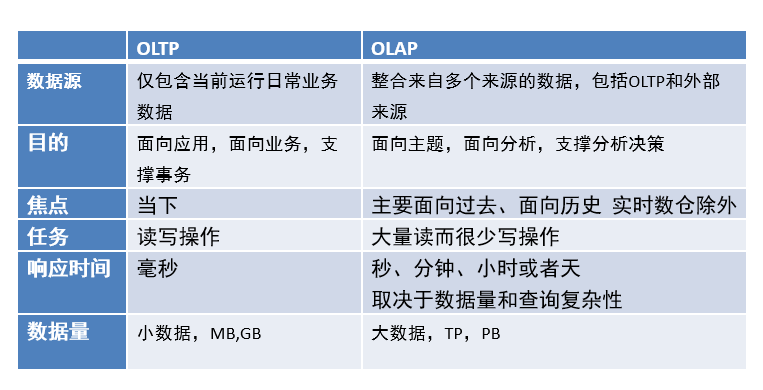

OLTP、OLAP

联机事务处理 OLTP(On-Line Transaction Processing)。

联机分析处理 OLAP(On-Line Analytical Processing)。

OLTP

- 操作型处理,叫联机事务处理OLTP(On-Line Transaction Processing),主要目标是做数据处理,它是针对具体业务在数据库联机的日常操作,通常对少数记录进行查询、修改。

- 用户较为关心操作的响应时间、数据的安全性、完整性和并发支持的用户数等问题。

- 传统的关系型数据库系统(RDBMS)作为数据管理的主要手段,主要用于操作型处理。

OLAP

- 分析型处理,叫联机分析处理OLAP(On-Line Analytical Processing),主要目标是做数据分析。

- 一般针对某些主题的历史数据进行复杂的多维分析,支持管理决策。

- 数据仓库是OLAP系统的一个典型示例,主要用于数据分析。

数据仓库、数据库区别

- 数据库与数据仓库的区别实际讲的是OLTP与OLAP的区别。

- OLTP系统的典型应用就是RDBMS,也就是我们俗称的数据库,当然这里要特别强调此数据库表示的是关系型数据库,Nosql数据库并不在讨论范围内。

- OLAP系统的典型应用就是DW,也就是我们俗称的数据仓库。

- 数据仓库不是大型的数据库,虽然数据仓库存储数据规模大。

- 数据仓库的出现,并不是要取代数据库。

- 数据库是面向事务的设计,数据仓库是面向主题设计的。

- 数据库一般存储业务数据,数据仓库存储的一般是历史数据。

- 数据库是为捕获数据而设计,数据仓库是为分析数据而设计。

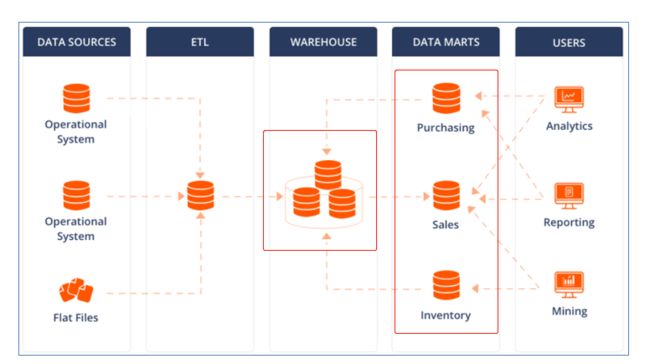

数据仓库、数据集市区别

- 数据仓库(Data Warehouse)是面向整个集团组织的数据,数据集市( Data Mart ) 是面向单个部门使用的。

- 可以认为数据集市是数据仓库的子集,也有把数据集市叫做小型数据仓库。数据集市通常只涉及一个主题领域,例如市场营销或销售。因为它们较小且更具体,所以它们通常更易于管理和维护,并具有更灵活的结构。

- 下图中,各种操作型系统数据和包括文件在内的等其他数据作为数据源,经过ETL(抽取转换加载)填充到数据仓库中;数据仓库中有不同主题数据,数据集市则根据部门特点面向指定主题,比如Purchasing(采购)、Sales(销售)、Inventory(库存);

- 用户可以基于主题数据开展各种应用:数据分析、数据报表、数据挖掘。

1-5.数据仓库分层架构

分层思想和标准

- 数据仓库的特点是本身不生产数据,也不最终消费数据。按照数据流入流出数仓的过程进行分层就显得水到渠成。

- 每个企业根据自己的业务需求可以分成不同的层次。但是最基础的分层思想,理论上分为三个层:操作型数据层(ODS)、数据仓库层(DW)和数据应用层(DA)。

- 企业在实际运用中可以基于这个基础分层之上添加新的层次,来满足不同的业务需求

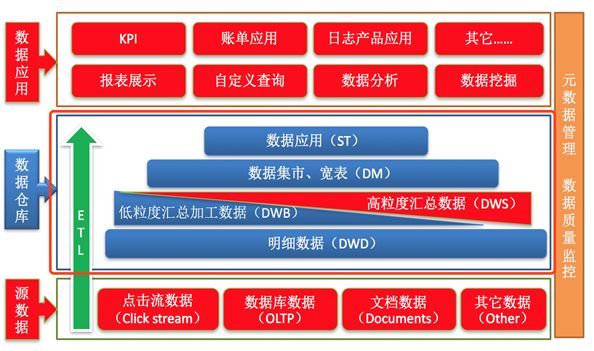

阿里巴巴数仓3层架构介绍

为了更好的理解数据仓库分层的思想以及每层的功能意义,下面结合阿里巴巴提供出的数仓分层架构图进行分析。

阿里数仓是非常经典的3层架构,从下往上依次是:ODS、DW、DA。

通过元数据管理和数据质量监控来把控整个数仓中数据的流转过程、血缘依赖关系和生命周期。

ODS层(Operation Data Store)

- 操作型数据层,也称之为源数据层、数据引入层、数据暂存层、临时缓存层。

- 此层存放未经过处理的原始数据至数据仓库系统,结构上与源系统保持一致,是数据仓库的数据准备区。

- 主要完成基础数据引入到数仓的职责,和数据源系统进行解耦合,同时记录基础数据的历史变化。

DW层(Data Warehouse)

- 数据仓库层,由ODS层数据加工而成。主要完成数据加工与整合,建立一致性的维度,构建可复用的面向分析和统计的明细事实表,以及汇总公共粒度的指标。内部具体划分如下:

- 公共维度层(DIM):基于维度建模理念思想,建立整个企业一致性维度。

- 公共汇总粒度事实层(DWS、DWB):以分析的主题对象作为建模驱动,基于上层的应用和产品的指标需求,构建公共粒度的汇总指标事实表,以宽表化手段物理化模型

- 明细粒度事实层(DWD): 将明细事实表的某些重要维度属性字段做适当冗余,即宽表化处理。

DA层(或ADS层)

数据应用层,面向最终用户,面向业务定制提供给产品和数据分析使用的数据。

包括前端报表、分析图表、KPI、仪表盘、OLAP专题、数据挖掘等分析。

数据仓库分层优点

- 清晰数据结构

- 每一个数据分层都有它的作用域,在使用表的时候能更方便地定位和理解。

- 数据血缘追踪

- 简单来说,我们最终给业务呈现的是一个能直接使用业务表,但是它的来源有很多,如果有一张来源表出问题了,我们希望能够快速准确地定位到问题,并清楚它的危害范围。

- 减少重复开发

- 规范数据分层,开发一些通用的中间层数据,能够减少极大的重复计算。

- 把复杂问题简单化

- 将一个复杂的任务分解成多个步骤来完成,每一层只处理单一的步骤,比较简单和容易理解。而且便于维护数据的准确性,当数据出现问题之后,可以不用修复所有的数据,只需要从有问题的步骤开始修复。

- 屏蔽原始数据的异常

- 屏蔽业务的影响,不必改一次业务就需要重新接入数据

ETL、ELT区别

数据仓库从各数据源获取数据及在数据仓库内的数据转换和流动都可以认为是ETL(抽取Extra, 转化Transfer, 装载Load)的过程。

但是在实际操作中将数据加载到仓库却产生了两种不同做法:ETL和ELT。

ETL

Extract,Transform,Load ETL

首先从数据源池中提取数据,这些数据源通常是事务性数据库。数据保存在临时暂存数据库中(ODS)。然后执行转换操作,将数据结构化并转换为适合目标数据仓库系统的形式。然后将结构化数据加载到仓库中,以备分析。

ELT

Extract,Load,Transform ELT

使用ELT,数据在从源数据池中提取后立即加载。没有专门的临时数据库(ODS),这意味着数据会立即加载到单一的集中存储库中。数据在数据仓库系统中进行转换,以便与商业智能工具(BI工具)一起使用。大数据时代的数仓这个特点很明显。

第2章:Apache Hive入门

2-1.Apache Hive概述

什么是Hive

- Apache Hive是一款建立在Hadoop之上的开源数据仓库系统,可以将存储在Hadoop文件中的结构化、半结构化数据文件映射为一张数据库表,基于表提供了一种类似SQL的查询模型,称为Hive查询语言(HQL),用于访问和分析存储在Hadoop文件中的大型数据集。

- Hive核心是将HQL转换为MapReduce程序,然后将程序提交到Hadoop群集执行。

- Hive由Facebook实现并开源。

为什么使用Hive

- 使用Hadoop MapReduce直接处理数据所面临的问题

- 人员学习成本太高,需要掌握java语言

- MapReduce实现复杂查询逻辑开发难度太大

- 使用Hive处理数据的好处

- 操作接口采用类SQL语法,提供快速开发的能力(简单、容易上手)

- 避免直接写MapReduce,减少开发人员的学习成本

- 支持自定义函数,功能扩展很方便

- 背靠Hadoop,擅长存储分析海量数据集

Hive和Hadoop关系

- 从功能来说,数据仓库软件,至少需要具备下述两种能力:

- 存储数据的能力

- 分析数据的能力

- Apache Hive作为一款大数据时代的数据仓库软件,当然也具备上述两种能力。只不过Hive并不是自己实现了上述两种能力,而是借助Hadoop。

- Hive利用Hadoop的HDFS存储数据,利用Hadoop的MapReduce查询分析数据。

- 这样突然发现Hive没啥用,不过是套壳Hadoop罢了。其实不然,Hive的最大的魅力在于用户专注于编写HQL,Hive帮您转换成为MapReduce程序完成对数据的分析。

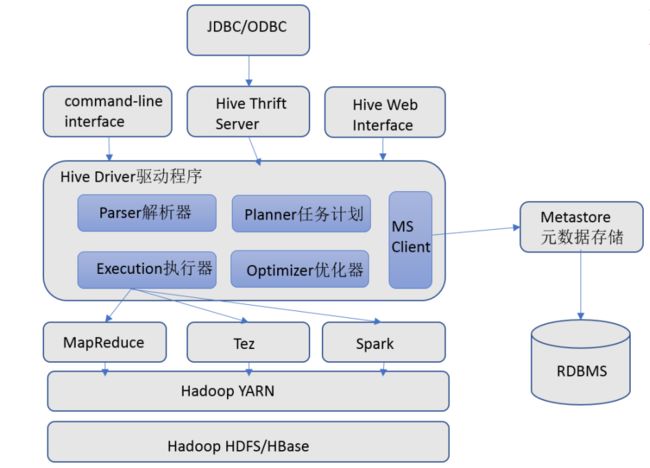

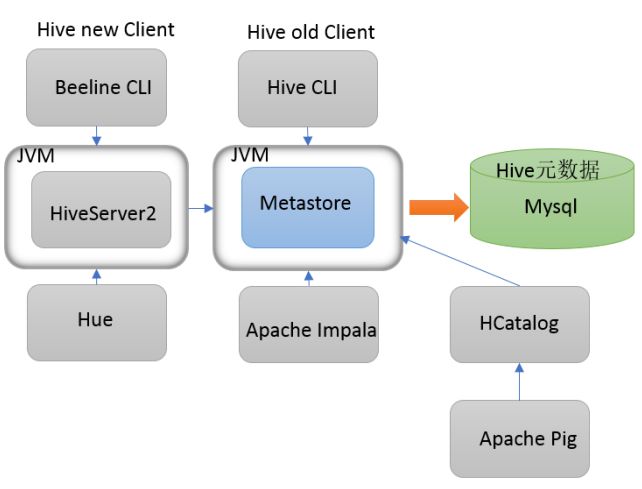

2-2.Apache Hive架构、组件

Hive架构图

Hive组件

用户接口

包括 CLI、JDBC/ODBC、WebGUI。其中,CLI(command line interface)为shell命令行;Hive中的Thrift服务器允许外部客户端通过网络与Hive进行交互,类似于JDBC或ODBC协议。WebGUI是通过浏览器访问Hive

元数据存储

通常是存储在关系数据库如 mysql/derby中。Hive 中的元数据包括表的名字,表的列和分区及其属性,表的属性(是否为外部表等),表的数据所在目录等

Driver驱动程序

包括语法解析器、计划编译器、优化器、执行器

完成 HQL 查询语句从词法分析、语法分析、编译、优化以及查询计划的生成。生成的查询计划存储在 HDFS 中,并在随后有执行引擎调用执行

执行引擎

Hive本身并不直接处理数据文件。而是通过执行引擎处理。当下Hive支持MapReduce、Tez、Spark3种执行引擎

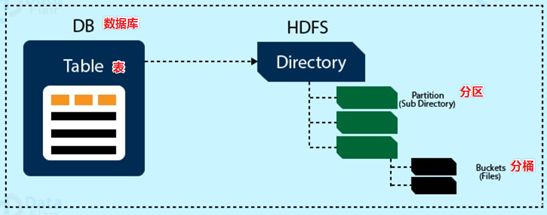

2-3.Apache Hive数据模型

Data Model概念

- 数据模型:用来描述数据、组织数据和对数据进行操作,是对现实世界数据特征的描述。、

- Hive的数据模型类似于RDBMS库表结构,此外还有自己特有模型。

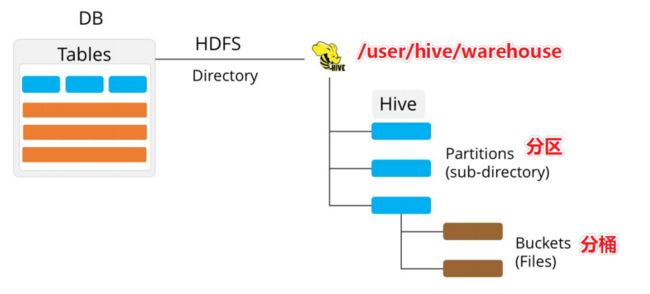

- Hive中的数据可以在粒度级别上分为三类:

- Table 表

- Partition 分区

- Bucket 分桶

Databases 数据库

- Hive作为一个数据仓库,在结构上积极向传统数据库看齐,也分数据库(Schema),每个数据库下面有各自的表组成。默认数据库default。

- Hive的数据都是存储在HDFS上的,默认有一个根目录,在hive-site.xml中,由参数hive.metastore.warehouse.dir指定。默认值为/user/hive/warehouse。

- 因此,如果没有设置特定路径,那么Hive中的数据库在HDFS上的存储路径为:/user/hive/warehouse/databasename.db

Tables 表

- Hive表与关系数据库中的表相同。Hive中的表所对应的数据通常是存储在HDFS中,而表相关的元数据是存储在RDBMS中。

- Hive中的表的数据在HDFS上的存储路径为:/user/hive/warehouse/databasename.db/tablename

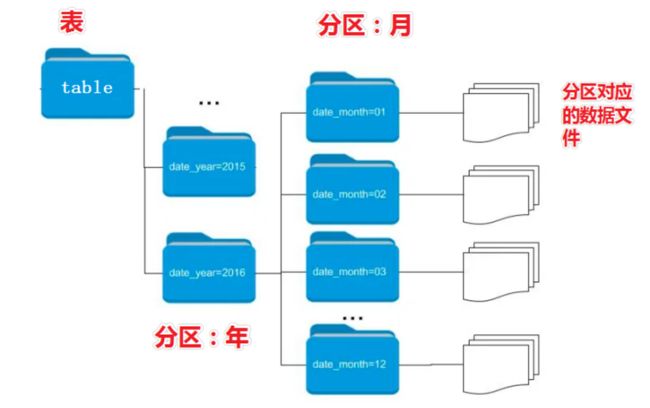

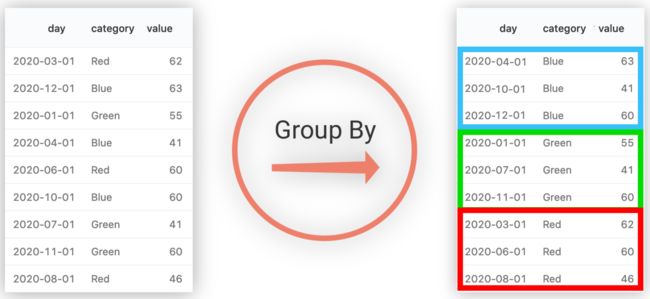

Partitions 分区

- Partition分区是hive的一种优化手段表。分区是指根据分区列(例如“日期day”)的值将表划分为不同分区。这样可以更快地对指定分区数据进行查询。

- 分区在存储层面上的表现是:table表目录下以子文件夹形式存在。

- 一个文件夹表示一个分区。子文件命名标准:分区列=分区值

- Hive还支持分区下继续创建分区,所谓的多重分区。关于分区表的使用和详细介绍,后面模块会单独展开。

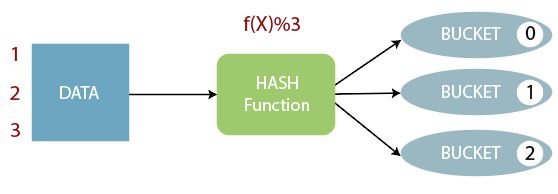

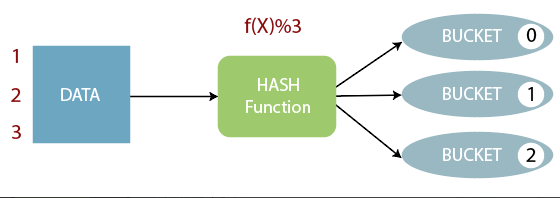

Buckets 分桶

- Bucket分桶表是hive的一种优化手段表。分桶是指根据表中字段(例如“编号ID”)的值,经过hash计算规则将数据文件划分成指定的若干个小文件。

- 分桶规则:hashfunc(字段) % 桶个数,余数相同的分到同一个文件。

- 分桶的好处是可以优化join查询和方便抽样查询。

- Bucket分桶表在HDFS中表现为同一个表目录下数据根据hash散列之后变成多个文件。

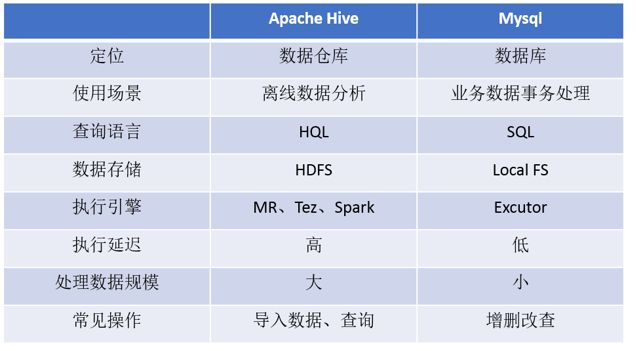

2-4.Apache Hive能否取代MySQL

- Hive虽然具有RDBMS数据库的外表,包括数据模型、SQL语法都十分相似,但hive应用场景和MySQL却完全不同。

- Hive只适合用来做海量数据的离线分析。Hive的定位是数据仓库,面向分析的OLAP系统。

- Hive不是大型数据库,也无法取代MySQL承担业务数据处理。

第3章:Apache Hive安装部署

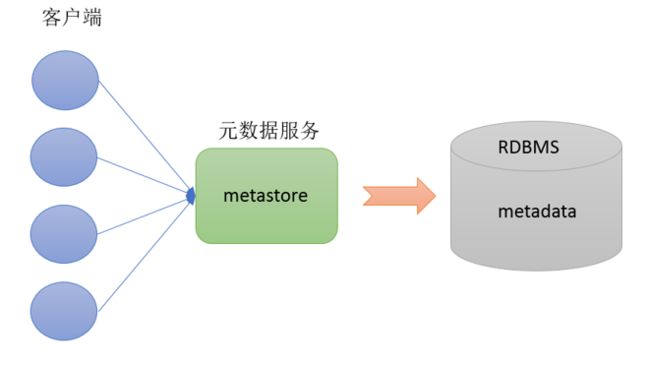

3-1.Apache Hive 元数据

什么是元数据

元数据(Metadata),又称中介数据、中继数据,为描述数据的数据(data about data),主要是描述数据属性(property)的信息,用来支持如指示存储位置、历史数据、资源查找、文件记录等功能。

Hive Metadata

- Hive Metadata即Hive的元数据。

- 包含用Hive创建的database、table、表的位置、类型、属性,字段顺序类型等元信息。

- 元数据存储在关系型数据库中。如hive内置的Derby、或者第三方如MySQL等。

Hive Metastore

- Metastore即元数据服务。Metastore服务的作用是管理metadata元数据,对外暴露服务地址,让各种客户端通过连接metastore服务,由metastore再去连接MySQL数据库来存取元数据。

- 有了metastore服务,就可以有多个客户端同时连接,而且这些客户端不需要知道MySQL数据库的用户名和密码,只需要连接metastore 服务即可。某种程度上也保证了hive元数据的安全。

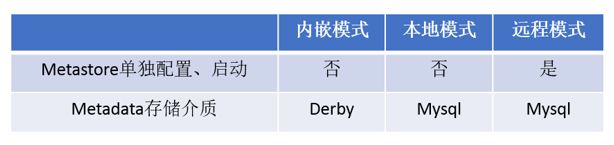

3-2.metastore三种配置方式

metastore服务配置有3种模式:内嵌模式、本地模式、远程模式。

区分3种配置方式的关键是弄清楚两个问题:

Metastore服务是否需要单独配置、单独启动?

Metadata是存储在内置的derby中,还是第三方RDBMS,比如MySQL。

内嵌模式

- 内嵌模式(Embedded Metastore)是metastore默认部署模式。

- 内嵌模式模式下,元数据存储在内置的Derby数据库,并且Derby数据库和metastore服务都嵌入在主HiveServer进程中,当启动HiveServer进程时,Derby和metastore都会启动。不需要额外起Metastore服务。

- 但是一次只能支持一个活动用户,仅仅适用于调试和测试体验,不适用于生产环境。

本地模式

- 本地模式(Local Metastore)下,Metastore服务与主HiveServer进程在同一进程中运行,但是存储元数据的数据库在单独的进程中运行,并且可以在单独的主机上。metastore服务将通过JDBC与metastore数据库进行通信。

- 本地模式采用外部数据库来存储元数据,推荐使用MySQL。

- hive根据hive.metastore.uris参数值来判断,如果为空,则为本地模式。

- 本地模式缺点是:每启动一次hive服务,都内置启动了一个metastore。

远程模式

- 远程模式(Remote Metastore)下,Metastore服务在其自己的单独JVM上运行,而不在HiveServer的JVM中运行。如果其他进程希望与Metastore服务器通信,则可以使用Thrift Network API进行通信。

- 远程模式下,需要配置hive.metastore.uris 参数来指定metastore服务运行的机器ip和端口,并且需要单独手动启动metastore服务。元数据也采用外部数据库来存储元数据,推荐使用MySQL。

- 在生产环境中,建议用远程模式来配置Hive Metastore。在这种情况下,其他依赖hive的软件都可以通过Metastore访问hive。由于还可以完全屏蔽数据库层,因此这也带来了更好的可管理性/安全性。

3-3.Apache Hive部署实战

安装前环境准备

- 由于Apache Hive是一款基于Hadoop的数据仓库软件,通常部署运行在Linux系统之上。因此不管使用何种方式配置Hive Metastore,必须要先保证服务器的基础环境正常,Hadoop集群健康可用。

- 服务器基础环境

集群时间同步、防火墙关闭、主机Host映射、免密登录、JDK安装 - Hadoop集群健康可用

启动Hive之前必须先启动Hadoop集群。特别要注意,需等待HDFS安全模式关闭之后再启动运行Hive。

Hive不是分布式安装运行的软件,其分布式的特性主要借由Hadoop完成。包括分布式存储、分布式计算。

如果还没有hadoop集群可以参考另一篇hadoop文章进行准备:

hadoop集群准备

搭建部署hadoop集群

https://blog.csdn.net/wt334502157/article/details/114916871

已有hadoop集群则可以直接进行hive的安装部署

hive3安装部署

hive安装部署

https://blog.csdn.net/wt334502157/article/details/115419462

由于篇幅原因,hive3.1.2的详细安装部署步骤可以参考hive安装部署文章

本篇幅会讲重要点标注

[注意] hive安装详解文档中附带了安装包的网盘分享:

Hive部署所有依赖包和安装包网盘链接

链接:https://pan.baidu.com/s/1kPr0uTEXqslxZ3v_r-uLQQ

提取码:bi8x

按照部署文档中步骤:

- 上传安装包

- MySQL安装

- MySQL配置

- hive安装

- 配置Metastore到MySql

- 修改hadoop环境变量

- 解决jar包冲突

- 启动hive(启动前处理guava的jar包冲突)

3-4.Apache Hive客户端使用

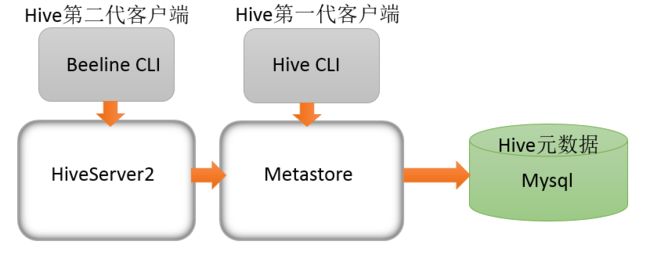

bin/hive和bin/beeline

Hive发展至今,总共历经了两代客户端工具。

- 第一代客户端(deprecated不推荐使用):$HIVE_HOME/bin/hive, 是一个 shellUtil。主要功能:一是可用于以交互或批处理模式运行Hive查询;二是用于Hive相关服务的启动,比如metastore服务。

- 第二代客户端(recommended 推荐使用):$HIVE_HOME/bin/beeline,是一个JDBC客户端,是官方强烈推荐使用的Hive命令行工具,和第一代客户端相比,性能加强安全性提高。

远程模式下beeline通过 Thrift 连接到单独的 HiveServer2服务上,这也是官方推荐在生产环境中使用的模式

bin/hive客户端

- 在hive安装包的bin目录下,有hive提供的第一代客户端 bin/hive。该客户端可以访问hive的metastore服务,从而达到操作hive的目的。

- 需要启动运行metastore服务。

- 可以直接在启动Hive metastore服务的机器上使用bin/hive客户端操作,此时不需要进行任何配置。

bin/beeline客户端

- hive经过发展,推出了第二代客户端beeline,但是beeline客户端不是直接访问metastore服务的,而是需要单独启动hiveserver2服务

- 在hive安装的服务器上,首先启动metastore服务,然后启动hiveserver2服务

- Beeline是JDBC的客户端,通过JDBC协议和Hiveserver2服务进行通信,协议的地址是:jdbc:hive2://ip:port

wangting@ops02:/home/wangting >beeline -u jdbc:hive2://ops01:10000 -n wangting

Connecting to jdbc:hive2://ops01:10000

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.2 by Apache Hive

0: jdbc:hive2://ops01:10000> show databases;

+--------------------+

| database_name |

+--------------------+

| db_hive |

| default |

| hvprd_ads |

| hvprd_base |

| hvprd_basedb |

| hvprd_cdm |

| hvprd_cln |

| hvprd_ods |

| hvprd_stg |

| hvprd_tmp |

| hvprd_udl |

| test |

+--------------------+

13 rows selected (0.166 seconds)

0: jdbc:hive2://ops01:10000>

HiveServer和HiveServer2服务

- HiveServer、HiveServer2都是Hive自带的两种服务,允许客户端在不启动CLI(命令行)的情况下对Hive中的数据进行操作,且两个都允许远程客户端使用多种编程语言如java,python等向hive提交请求,取回结果。

- HiveServer不能处理多于一个客户端的并发请求。因此在Hive-0.11.0版本中重写了HiveServer代码得到了HiveServer2,进而解决了该问题。HiveServer已经被废弃。

- HiveServer2支持多客户端的并发和身份认证,旨在为开放API客户端如JDBC、ODBC提供更好的支持。

Hive服务和客户端

- HiveServer2通过Metastore服务读写元数据。所以在远程模式下,启动HiveServer2之前必须先首先启动metastore服务

- 远程模式下,Beeline客户端只能通过HiveServer2服务访问Hive。而bin/hive是通过Metastore服务访问

第4章:场景案例:Apache Hive初体验

4-1.体验1:Hive使用和MySQL对比

按照MySQL的思维,在hive中创建、切换数据库,创建表并执行插入数据操作,最后查询是否插入成功

通过beeline登录hive

# 通过beeline登录hive

wangting@ops02:/home/wangting >beeline -u jdbc:hive2://ops01:10000 -n wangting

Connecting to jdbc:hive2://ops01:10000

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.2 by Apache Hive

创建一个库

# 创建hv_2022_10_13库

0: jdbc:hive2://ops01:10000> create database hv_2022_10_13;

INFO : Compiling command(queryId=wangting_20221013160018_4a1ad7d6-b66b-4e9d-b7e9-e6f602e24e5e): create database hv_2022_10_13

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=wangting_20221013160018_4a1ad7d6-b66b-4e9d-b7e9-e6f602e24e5e); Time taken: 0.043 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013160018_4a1ad7d6-b66b-4e9d-b7e9-e6f602e24e5e): create database hv_2022_10_13

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=wangting_20221013160018_4a1ad7d6-b66b-4e9d-b7e9-e6f602e24e5e); Time taken: 0.034 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (0.133 seconds)

查看数据库

# 查看数据库

0: jdbc:hive2://ops01:10000> show databases like '*2022*';

INFO : Compiling command(queryId=wangting_20221013160105_5dfea58b-e0df-4354-b7c1-33115306e2ea): show databases like '*2022*'

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=wangting_20221013160105_5dfea58b-e0df-4354-b7c1-33115306e2ea); Time taken: 0.014 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013160105_5dfea58b-e0df-4354-b7c1-33115306e2ea): show databases like '*2022*'

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=wangting_20221013160105_5dfea58b-e0df-4354-b7c1-33115306e2ea); Time taken: 0.005 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+----------------+

| database_name |

+----------------+

| hv_2022_10_13 |

+----------------+

1 row selected (0.031 seconds)

进入数据库

# 进入数据库

0: jdbc:hive2://ops01:10000> use hv_2022_10_13;

INFO : Compiling command(queryId=wangting_20221013160126_1e501f53-09ee-4baf-8ef5-8e63079ea4eb): use hv_2022_10_13

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=wangting_20221013160126_1e501f53-09ee-4baf-8ef5-8e63079ea4eb); Time taken: 0.016 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013160126_1e501f53-09ee-4baf-8ef5-8e63079ea4eb): use hv_2022_10_13

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=wangting_20221013160126_1e501f53-09ee-4baf-8ef5-8e63079ea4eb); Time taken: 0.004 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (0.031 seconds)

建表

# 建表

0: jdbc:hive2://ops01:10000> create table t_student(id int,name varchar(255));

INFO : Compiling command(queryId=wangting_20221013160614_888f8325-7559-4a1a-ae0b-a58f81d42b78): create table t_student(id int,name varchar(255))

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=wangting_20221013160614_888f8325-7559-4a1a-ae0b-a58f81d42b78); Time taken: 0.017 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013160614_888f8325-7559-4a1a-ae0b-a58f81d42b78): create table t_student(id int,name varchar(255))

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=wangting_20221013160614_888f8325-7559-4a1a-ae0b-a58f81d42b78); Time taken: 0.073 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (0.098 seconds)

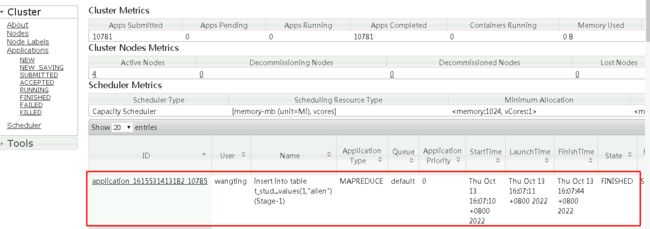

表中插入一条数据

# 插入一条数据

0: jdbc:hive2://ops01:10000> insert into table t_student values(1,"allen");

INFO : Compiling command(queryId=wangting_20221013160708_4f681b20-e521-4c4e-8fce-c1b7a68a8c9b): insert into table t_student values(1,"allen")

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:_col0, type:int, comment:null), FieldSchema(name:_col1, type:varchar(255), comment:null)], properties:null)

INFO : Completed compiling command(queryId=wangting_20221013160708_4f681b20-e521-4c4e-8fce-c1b7a68a8c9b); Time taken: 0.233 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013160708_4f681b20-e521-4c4e-8fce-c1b7a68a8c9b): insert into table t_student values(1,"allen")

WARN : Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

INFO : Query ID = wangting_20221013160708_4f681b20-e521-4c4e-8fce-c1b7a68a8c9b

INFO : Total jobs = 3

INFO : Launching Job 1 out of 3

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Number of reduce tasks determined at compile time: 1

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:1

INFO : Submitting tokens for job: job_1615531413182_10785

INFO : Executing with tokens: []

INFO : The url to track the job: http://ops02:8088/proxy/application_1615531413182_10785/

INFO : Starting Job = job_1615531413182_10785, Tracking URL = http://ops02:8088/proxy/application_1615531413182_10785/

INFO : Kill Command = /opt/module/hadoop-3.1.3/bin/mapred job -kill job_1615531413182_10785

INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

INFO : 2022-10-13 16:07:20,125 Stage-1 map = 0%, reduce = 0%

INFO : 2022-10-13 16:07:28,336 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.46 sec

INFO : 2022-10-13 16:07:44,683 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 7.37 sec

INFO : MapReduce Total cumulative CPU time: 7 seconds 370 msec

INFO : Ended Job = job_1615531413182_10785

INFO : Starting task [Stage-7:CONDITIONAL] in serial mode

INFO : Stage-4 is selected by condition resolver.

INFO : Stage-3 is filtered out by condition resolver.

INFO : Stage-5 is filtered out by condition resolver.

INFO : Starting task [Stage-4:MOVE] in serial mode

INFO : Moving data to directory hdfs://ops01:8020/user/hive/warehouse/hv_2022_10_13.db/t_student/.hive-staging_hive_2022-10-13_16-07-08_795_1543917524199913194-6/-ext-10000 from hdfs://ops01:8020/user/hive/warehouse/hv_2022_10_13.db/t_student/.hive-staging_hive_2022-10-13_16-07-08_795_1543917524199913194-6/-ext-10002

INFO : Starting task [Stage-0:MOVE] in serial mode

INFO : Loading data to table hv_2022_10_13.t_student from hdfs://ops01:8020/user/hive/warehouse/hv_2022_10_13.db/t_student/.hive-staging_hive_2022-10-13_16-07-08_795_1543917524199913194-6/-ext-10000

INFO : Starting task [Stage-2:STATS] in serial mode

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 7.37 sec HDFS Read: 15979 HDFS Write: 250 SUCCESS

INFO : Total MapReduce CPU Time Spent: 7 seconds 370 msec

INFO : Completed executing command(queryId=wangting_20221013160708_4f681b20-e521-4c4e-8fce-c1b7a68a8c9b); Time taken: 37.022 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (37.266 seconds)

0: jdbc:hive2://ops01:10000>

在执行插入数据的时候,发现插入速度极慢,sql执行时间很长

最终插入一条数据,历史37.266秒的时间。查询表数据,显示数据插入成功

查询表数据

# 查询表数据

0: jdbc:hive2://ops01:10000> select * from t_student;

INFO : Compiling command(queryId=wangting_20221013162317_8bdee041-dcb8-421f-b122-c0eaf65b7994): select * from t_student

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:t_student.id, type:int, comment:null), FieldSchema(name:t_student.name,type:varchar(255), comment:null)], properties:null)

INFO : Completed compiling command(queryId=wangting_20221013162317_8bdee041-dcb8-421f-b122-c0eaf65b7994); Time taken: 0.123 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013162317_8bdee041-dcb8-421f-b122-c0eaf65b7994): select * from t_student

INFO : Completed executing command(queryId=wangting_20221013162317_8bdee041-dcb8-421f-b122-c0eaf65b7994); Time taken: 0.001 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+---------------+-----------------+

| t_student.id | t_student.name |

+---------------+-----------------+

| 1 | allen |

+---------------+-----------------+

1 row selected (0.228 seconds)

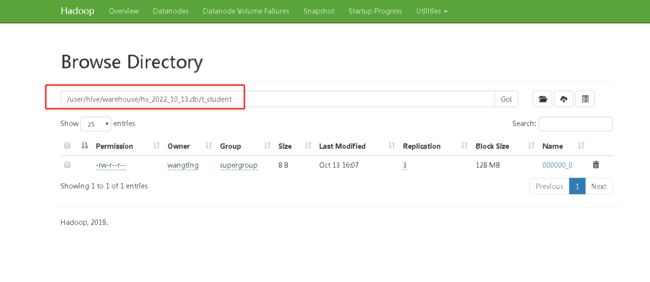

登陆Hadoop HDFS浏览文件系统,根据Hive的数据模型,表的数据最终是存储在HDFS和表对应的文件夹下的

总结

- Hive SQL语法和标准SQL很类似

- Hive底层是通过MapReduce执行的数据插入动作,所以速度慢。

- 如果大数据集这么一条一条插入的话是非常不现实的,时间成本极高。

- Hive应该具有自己特有的数据插入表方式,结构化文件映射成为表。

4-2.体验2:将结构化数据映射成为表初次体验

HDFS上传映射文件

在HDFS根目录下创建一个结构化数据文件user.txt,里面内容如下:

wangting@ops01:/home/wangting >mkdir 20221013

wangting@ops01:/home/wangting >cd 20221013

wangting@ops01:/home/wangting/20221013 >vim user.txt

1,zhangsan,18,beijing

2,lisi,25,shanghai

3,allen,30,shanghai

4,woon,15,nanjing

5,james,45,hangzhou

6,tony,26,beijing

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put user.txt /

2022-10-13 17:17:48,256 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

wangting@ops01:/home/wangting/20221013 >hdfs dfs -ls /user.txt

-rw-r--r-- 3 wangting supergroup 117 2022-10-13 17:17 /user.txt

wangting@ops01:/home/wangting/20221013 >hdfs dfs -cat /user.txt

2022-10-13 17:18:28,421 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

1,zhangsan,18,beijing

2,lisi,25,shanghai

3,allen,30,shanghai

4,woon,15,nanjing

5,james,45,hangzhou

6,tony,26,beijing

创建表t_user

在hive中创建一张表t_user。注意:字段的类型顺序要和文件中字段保持一致。

0: jdbc:hive2://ops01:10000> create table t_user(id int,name varchar(255),age int,city varchar(255));

INFO : Compiling command(queryId=wangting_20221013172003_f3c0cd33-b145-4768-9c78-d2f5d177c43d): create table t_user(id int,name varchar(255),age int,city varchar(255))

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=wangting_20221013172003_f3c0cd33-b145-4768-9c78-d2f5d177c43d); Time taken: 0.015 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013172003_f3c0cd33-b145-4768-9c78-d2f5d177c43d): create table t_user(id int,name varchar(255),age int,city varchar(255))

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=wangting_20221013172003_f3c0cd33-b145-4768-9c78-d2f5d177c43d); Time taken: 0.054 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (0.077 seconds)

0: jdbc:hive2://ops01:10000> select * from t_user;

INFO : Compiling command(queryId=wangting_20221013172211_e9eb3b6b-5ea6-4d5f-92d5-41c60c4e3d15): select * from t_user

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:t_user.id, type:int, comment:null), FieldSchema(name:t_user.name, type:varchar(255),comment:null), FieldSchema(name:t_user.age, type:int, comment:null), FieldSchema(name:t_user.city, type:varchar(255), comment:null)], properties:null)

INFO : Completed compiling command(queryId=wangting_20221013172211_e9eb3b6b-5ea6-4d5f-92d5-41c60c4e3d15); Time taken: 0.108 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013172211_e9eb3b6b-5ea6-4d5f-92d5-41c60c4e3d15): select * from t_user

INFO : Completed executing command(queryId=wangting_20221013172211_e9eb3b6b-5ea6-4d5f-92d5-41c60c4e3d15); Time taken: 0.0 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+------------+--------------+-------------+--------------+

| t_user.id | t_user.name | t_user.age | t_user.city |

+------------+--------------+-------------+--------------+

+------------+--------------+-------------+--------------+

No rows selected (0.12 seconds)

验证表t_user

执行数据查询操作,发现表中并没有数据,说明创建的t_user表和user.txt并没有形成映射关系

使用HDFS命令将数据移动到表对应的路径下

wangting@ops01:/home/wangting/20221013 >hdfs dfs -mv /user.txt /user/hive/warehouse/hv_2022_10_13.db/t_user

wangting@ops01:/home/wangting/20221013 >hdfs dfs -ls /user/hive/warehouse/hv_2022_10_13.db/t_user

Found 1 items

-rw-r--r-- 3 wangting supergroup 117 2022-10-13 17:17 /user/hive/warehouse/hv_2022_10_13.db/t_user/user.txt

再次查看表中内容

0: jdbc:hive2://ops01:10000> select * from t_user;

INFO : Compiling command(queryId=wangting_20221013172615_aff07cff-130a-4698-82e6-7e847f479d18): select * from t_user

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:t_user.id, type:int, comment:null), FieldSchema(name:t_user.name, type:varchar(255),comment:null), FieldSchema(name:t_user.age, type:int, comment:null), FieldSchema(name:t_user.city, type:varchar(255), comment:null)], properties:null)

INFO : Completed compiling command(queryId=wangting_20221013172615_aff07cff-130a-4698-82e6-7e847f479d18); Time taken: 0.111 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013172615_aff07cff-130a-4698-82e6-7e847f479d18): select * from t_user

INFO : Completed executing command(queryId=wangting_20221013172615_aff07cff-130a-4698-82e6-7e847f479d18); Time taken: 0.0 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+------------+--------------+-------------+--------------+

| t_user.id | t_user.name | t_user.age | t_user.city |

+------------+--------------+-------------+--------------+

| NULL | NULL | NULL | NULL |

| NULL | NULL | NULL | NULL |

| NULL | NULL | NULL | NULL |

| NULL | NULL | NULL | NULL |

| NULL | NULL | NULL | NULL |

| NULL | NULL | NULL | NULL |

+------------+--------------+-------------+--------------+

6 rows selected (0.127 seconds)

再次执行查询操作,值都是null,说明感知到文件,但是并没有把内容一一对应起来

建新表t_user_1指定分隔符

0: jdbc:hive2://ops01:10000> create table t_user_1(id int,name varchar(255),age int,city varchar(255)) row format delimited fields terminated by ',';

INFO : Compiling command(queryId=wangting_20221013173054_8ddcc8a6-d690-4eda-adb7-0788629ba15c): create table t_user_1(id int,name varchar(255),age int,city varchar(255)) row format delimited fields terminated by ','

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=wangting_20221013173054_8ddcc8a6-d690-4eda-adb7-0788629ba15c); Time taken: 0.015 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013173054_8ddcc8a6-d690-4eda-adb7-0788629ba15c): create table t_user_1(id int,name varchar(255),age int,city varchar(255)) row format delimited fields terminated by ','

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=wangting_20221013173054_8ddcc8a6-d690-4eda-adb7-0788629ba15c); Time taken: 0.071 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (0.094 seconds)

上传映射文件到对应路径

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put user.txt /user/hive/warehouse/hv_2022_10_13.db/t_user_1/

2022-10-13 17:32:18,236 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

wangting@ops01:/home/wangting/20221013 >hdfs dfs -cat /user/hive/warehouse/hv_2022_10_13.db/t_user_1/user.txt

2022-10-13 17:32:43,877 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

1,zhangsan,18,beijing

2,lisi,25,shanghai

3,allen,30,shanghai

4,woon,15,nanjing

5,james,45,hangzhou

6,tony,26,beijing

查询新表t_user_1内容

0: jdbc:hive2://ops01:10000> select * from t_user_1;

INFO : Compiling command(queryId=wangting_20221013173328_ee6745df-ca06-4433-9960-632250ccc5a6): select * from t_user_1

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:t_user_1.id, type:int, comment:null), FieldSchema(name:t_user_1.name, type:varchar(255), comment:null), FieldSchema(name:t_user_1.age, type:int, comment:null), FieldSchema(name:t_user_1.city, type:varchar(255), comment:null)], properties:null)

INFO : Completed compiling command(queryId=wangting_20221013173328_ee6745df-ca06-4433-9960-632250ccc5a6); Time taken: 0.108 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013173328_ee6745df-ca06-4433-9960-632250ccc5a6): select * from t_user_1

INFO : Completed executing command(queryId=wangting_20221013173328_ee6745df-ca06-4433-9960-632250ccc5a6); Time taken: 0.0 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+--------------+----------------+---------------+----------------+

| t_user_1.id | t_user_1.name | t_user_1.age | t_user_1.city |

+--------------+----------------+---------------+----------------+

| 1 | zhangsan | 18 | beijing |

| 2 | lisi | 25 | shanghai |

| 3 | allen | 30 | shanghai |

| 4 | woon | 15 | nanjing |

| 5 | james | 45 | hangzhou |

| 6 | tony | 26 | beijing |

+--------------+----------------+---------------+----------------+

6 rows selected (0.125 seconds)

创建表t_user_2

此时再创建一张表t_user_2,保存分隔符语法,但是故意使得字段类型和文件中不一致,测试一下字段约束类型不符会如何

0: jdbc:hive2://ops01:10000> create table t_user_2(id int,name int,age varchar(255),city varchar(255)) row format delimited fields terminated by ',';

INFO : Compiling command(queryId=wangting_20221013173540_c4d0759d-5120-4e77-bd1f-9cae820d5cad): create table t_user_2(id int,name int,age varchar(255),city varchar(255)) row format delimited fields terminated by ','

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=wangting_20221013173540_c4d0759d-5120-4e77-bd1f-9cae820d5cad); Time taken: 0.016 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013173540_c4d0759d-5120-4e77-bd1f-9cae820d5cad): create table t_user_2(id int,name int,age varchar(255),city varchar(255)) row format delimited fields terminated by ','

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=wangting_20221013173540_c4d0759d-5120-4e77-bd1f-9cae820d5cad); Time taken: 0.055 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (0.077 seconds)

上传映射文件

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put user.txt /user/hive/warehouse/hv_2022_10_13.db/t_user_2/

查询新表t_user_2内容

0: jdbc:hive2://ops01:10000> select * from t_user_2;

INFO : Compiling command(queryId=wangting_20221013173648_048fa03f-beb9-4387-bc09-e6062d79170f): select * from t_user_2

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:t_user_2.id, type:int, comment:null), FieldSchema(name:t_user_2.name, type:int, comment:null), FieldSchema(name:t_user_2.age, type:varchar(255), comment:null), FieldSchema(name:t_user_2.city, type:varchar(255), comment:null)], properties:null)

INFO : Completed compiling command(queryId=wangting_20221013173648_048fa03f-beb9-4387-bc09-e6062d79170f); Time taken: 0.106 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013173648_048fa03f-beb9-4387-bc09-e6062d79170f): select * from t_user_2

INFO : Completed executing command(queryId=wangting_20221013173648_048fa03f-beb9-4387-bc09-e6062d79170f); Time taken: 0.001 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+--------------+----------------+---------------+----------------+

| t_user_2.id | t_user_2.name | t_user_2.age | t_user_2.city |

+--------------+----------------+---------------+----------------+

| 1 | NULL | 18 | beijing |

| 2 | NULL | 25 | shanghai |

| 3 | NULL | 30 | shanghai |

| 4 | NULL | 15 | nanjing |

| 5 | NULL | 45 | hangzhou |

| 6 | NULL | 26 | beijing |

+--------------+----------------+---------------+----------------+

6 rows selected (0.121 seconds)

此时发现,有的列name显示null,有的列显示正常

name字段本身是字符串,但是建表的时候指定int,类型转换不成功;age是数值类型,建表指定字符串类型,可以转换成功。说明hive中具有自带的类型转换功能,但是不一定保证转换成功

结论

要想在hive中创建表跟结构化文件映射成功,需要注意以下几个方面问题:

- 创建表时,字段顺序、字段类型要和文件中保持一致。

- 如果类型不一致,hive会尝试转换,但是不保证转换成功。不成功显示null。

4-3.体验3:使用Hive进行小数据分析体验

在体验2中的t_user_1中进行数据查询

之前创建好了一张表t_user_1,现在通过Hive SQL找出当中年龄大于20岁的有几个

0: jdbc:hive2://ops01:10000> select count(*) from t_user_1 where age > 20;

INFO : Compiling command(queryId=wangting_20221013174512_2ec3e4c9-bafe-40fd-9a4c-367dbf1d899e): select count(*) from t_user_1 where age > 20

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:_c0, type:bigint, comment:null)], properties:null)

INFO : Completed compiling command(queryId=wangting_20221013174512_2ec3e4c9-bafe-40fd-9a4c-367dbf1d899e); Time taken: 0.268 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=wangting_20221013174512_2ec3e4c9-bafe-40fd-9a4c-367dbf1d899e): select count(*) from t_user_1 where age > 20

WARN : Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez)or using Hive 1.X releases.

INFO : Query ID = wangting_20221013174512_2ec3e4c9-bafe-40fd-9a4c-367dbf1d899e

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Number of reduce tasks determined at compile time: 1

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:1

INFO : Submitting tokens for job: job_1615531413182_10786

INFO : Executing with tokens: []

INFO : The url to track the job: http://ops02:8088/proxy/application_1615531413182_10786/

INFO : Starting Job = job_1615531413182_10786, Tracking URL = http://ops02:8088/proxy/application_1615531413182_10786/

INFO : Kill Command = /opt/module/hadoop-3.1.3/bin/mapred job -kill job_1615531413182_10786

INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

INFO : 2022-10-13 17:45:20,779 Stage-1 map = 0%, reduce = 0%

INFO : 2022-10-13 17:45:35,109 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.06 sec

INFO : 2022-10-13 17:45:49,405 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 7.25 sec

INFO : MapReduce Total cumulative CPU time: 7 seconds 250 msec

INFO : Ended Job = job_1615531413182_10786

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 7.25 sec HDFS Read: 14444 HDFS Write: 101 SUCCESS

INFO : Total MapReduce CPU Time Spent: 7 seconds 250 msec

INFO : Completed executing command(queryId=wangting_20221013174512_2ec3e4c9-bafe-40fd-9a4c-367dbf1d899e); Time taken: 38.032 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+------+

| _c0 |

+------+

| 4 |

+------+

1 row selected (38.322 seconds)

从控制台输出可以发现又是通过MapReduce程序执行的数据查询功能

结论

- Hive底层的确是通过MapReduce执行引擎来处理数据的

- 执行完一个MapReduce程序需要的时间较长

- 如果是小数据集,使用hive进行分析将得不偿失,延迟很高

- 如果是大数据集,使用hive进行分析,底层MapReduce分布式计算

+后续章前准备工作+

准备工作可以根据情况选择是否准备,大部分语句可以通过命令行客户端去执行,推荐使用开发环境去熟悉。

IntelliJ IDEA开发工具安装,java开发人员必备工具

IntelliJ IDEA是JetBrains公司的产品,是java编程语言开发的集成环境。

在业界被公认为最好的java开发工具,尤其在智能代码助手、代码自动提示、重构、代码分析、 创新的GUI设计等方面的功能可以说是超常的。

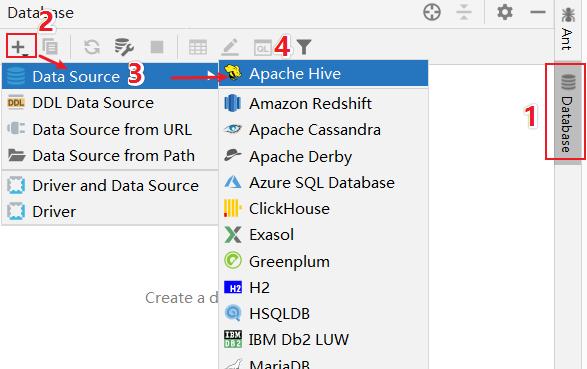

IntelliJ IDEA 还有丰富的插件,其中就内置集成了Database插件,支持操作各种主流的数据库、数据仓库

创建一个maven项目

Name: hive_test

GroupId: cn.wangting

在IDEA中的任意工程中,选择Database标签配置Hive Driver驱动

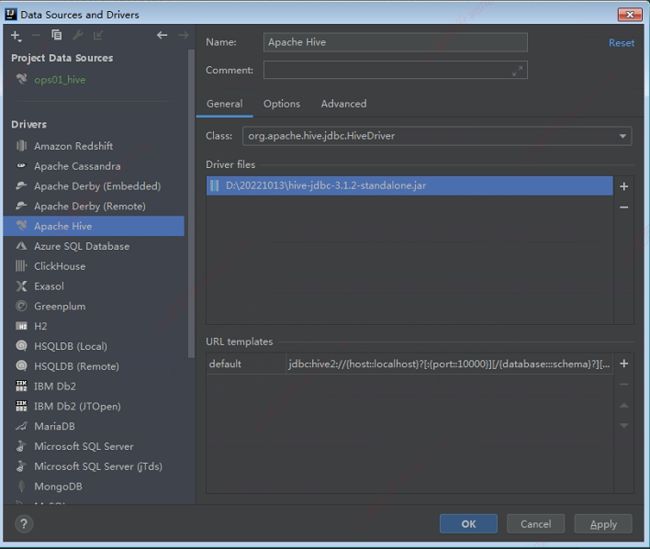

配置Hive数据源,连接HS2

驱动文件包可以关联本地对应版本jar包

链接:https://pan.baidu.com/s/14Pl4KnqjGj0nf05d7JSrxw?pwd=kfud

提取码:kfud

在线下载速度非常慢,有代理可以尝试在线下载依赖包

配置完成后,点击测试连接

test包下创建一个hive.sql,来测试功能:输入语句 show databases;

可以看到成功查询到结果输出

第5章:数据定义语言(DDL)概述

5-1.SQL中DDL语法的作用

- 数据定义语言 (Data Definition Language, DDL),是SQL语言集中对数据库内部的对象结构进行创建,删除,修改等的操作语言,这些数据库对象包括database(schema)、table、view、index等。

- DDL核心语法由CREATE、ALTER与DROP三个所组成。DDL并不涉及表内部数据的操作。

- 在某些上下文中,该术语也称为数据描述语言,因为它描述了数据库表中的字段和记录。

5-2.Hive中DDL语法的使用

- Hive SQL(HQL)与标准SQL的语法大同小异,基本相通,注意差异即可;

- 基于Hive的设计、使用特点,HQL中create语法(尤其create table)将是学习掌握Hive DDL语法的重中之重。

建表是否成功直接影响数据文件是否映射成功,进而影响后续是否可以基于SQL分析数据。

第6章:Hive SQL DDL建表基础语法

6-1.Hive建表完整语法树

完整语法树

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS] [db_name.]table_name

[(col_name data_type [COMMENT col_comment], ... ]

[COMMENT table_comment]

[PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)]

[CLUSTERED BY (col_name, col_name, ...) [SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS]

[ROW FORMAT DELIMITED|SERDE serde_name WITH SERDEPROPERTIES (property_name=property_value,...)]

[STORED AS file_format]

[LOCATION hdfs_path]

[TBLPROPERTIES (property_name=property_value, ...)];

注意事项

[ ] 中括号的语法表示可选。

| 表示使用的时候,左右语法二选一。

建表语句中的语法顺序要和语法树中顺序保持一致。

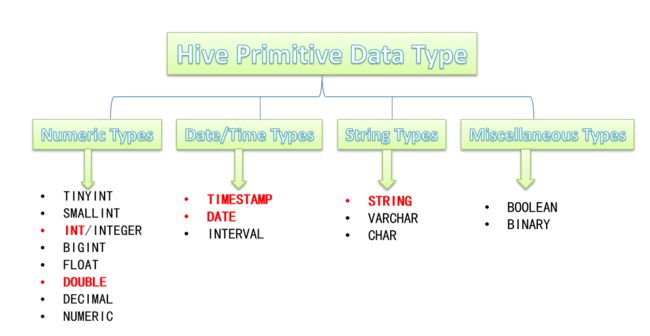

6-2.Hive数据类型详解

Hive数据类型指的是表中列的字段类型;

整体分为两类:

- 原生数据类型(primitive data type)

- 数值类型

- 时间日期类型

- 字符串类型

- 杂项数据类型

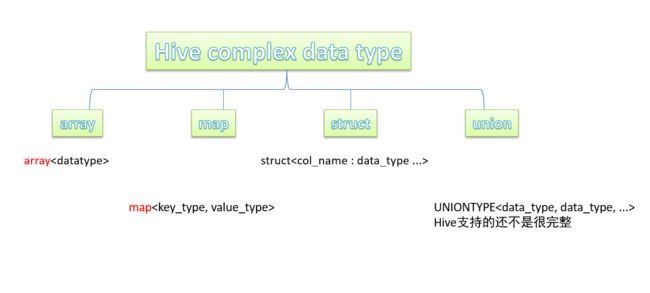

- 复杂数据类型(complex data type)

- array数组

- map映射

- struct结构

- union联合体

complex data type

- Hive SQL中,数据类型英文字母大小写不敏感;

- 除SQL数据类型外,还支持Java数据类型,比如字符串string;

- 复杂数据类型的使用通常需要和分隔符指定语法配合使用;

- 如果定义的数据类型和文件不一致,Hive会尝试隐式转换,但是不保证成功。

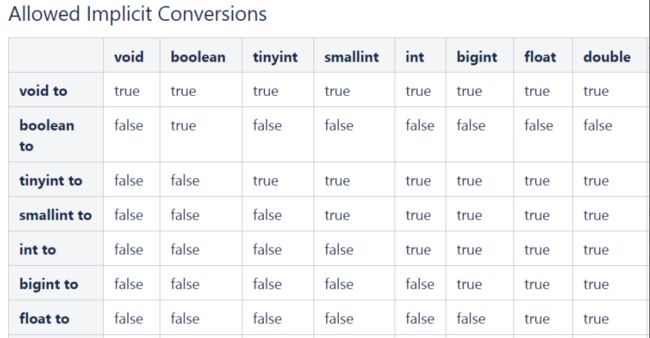

隐式转换:

- 与标准SQL类似,HQL支持隐式和显式类型转换。

- 原生类型从窄类型到宽类型的转换称为隐式转换,反之,则不允许

显示转换

显式类型转换使用CAST函数

例如,CAST('100’as INT)会将100字符串转换为100整数值

如果强制转换失败,例如CAST(‘Allen’as INT),该函数返回NULL

0: jdbc:hive2://ops01:10000> select cast ('100' as INT);

+------+

| _c0 |

+------+

| 100 |

+------+

1 row selected (0.146 seconds)

0: jdbc:hive2://ops01:10000> select cast ('wang' as INT);

+-------+

| _c0 |

+-------+

| NULL |

+-------+

1 row selected (0.132 seconds)

6-3.Hive读写文件机制

SerDe是什么:

- SerDe是Serializer、Deserializer的简称,目的是用于序列化和反序列化。

- 序列化是对象转化为字节码的过程;而反序列化是字节码转换为对象的过程。

- Hive使用SerDe(包括FileFormat)读取和写入表行对象。需要注意的是,“key”部分在读取时会被忽略,而在写入时key始终是常数。基本上行对象存储在“value”中。

Read:

HDFS files --> InputFileFormat -->

Write:

Row object --> Serializer(序列化) -->

可以通过desc formatted tablename查看表的相关SerDe信息

0: jdbc:hive2://ops01:10000> desc formatted t_user_1;

+-------------------------------+----------------------------------------------------+---------------------+

| col_name | data_type | comment |

+-------------------------------+----------------------------------------------------+---------------------+

| # Storage Information | NULL | NULL

| SerDe Library: | org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe | NULL

| InputFormat: | org.apache.hadoop.mapred.TextInputFormat | NULL

| OutputFormat: | org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat | NULL

Hive读写文件流程:

-

Hive读取文件机制:首先调用InputFormat(默认TextInputFormat),返回一条一条kv键值对记录(默认是一行对应一条键值对)。然后调用SerDe(默认LazySimpleSerDe)的Deserializer,将一条记录中的value根据分隔符切分为各个字段。

-

Hive写文件机制:将Row写入文件时,首先调用SerDe(默认LazySimpleSerDe)的Serializer将对象转换成字节序列,然后调用OutputFormat将数据写入HDFS文件中

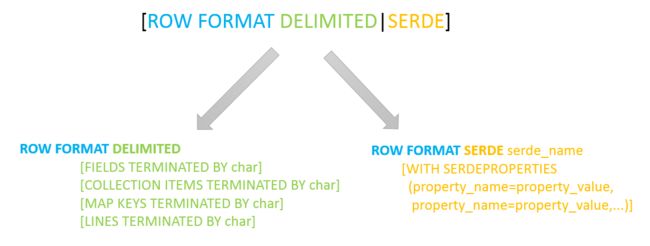

SerDe相关语法:

ROW FORMAT这一行所代表的是跟读写文件、序列化SerDe相关的语法,功能有二

- 使用哪个SerDe类进行序列化

- 如何指定分隔符

其中ROW FORMAT是语法关键字,DELIMITED和SERDE二选其一。

如果使用delimited表示使用默认的LazySimpleSerDe类来处理数据。

如果数据文件格式比较特殊可以使用ROW FORMAT SERDE serde_name指定其他的Serde类来处理数据,甚至支持用户自定义SerDe类。

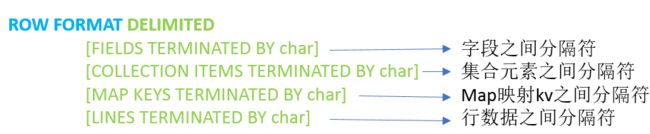

LazySimpleSerDe分隔符指定:

LazySimpleSerDe是Hive默认的序列化类,包含4种子语法,分别用于指定字段之间、集合元素之间、map映射 kv之间、换行的分隔符号

在建表的时候可以根据数据的特点灵活搭配使用

Hive默认分隔符:

Hive建表时如果没有row format语法指定分隔符,则采用默认分隔符;

默认的分割符是’\001’,是一种特殊的字符,使用的是ASCII编码的值,键盘是打不出来的

在vim编辑器中,连续按下Ctrl+v/Ctrl+a即可输入’\001’ ,显示^A,但是在正常的展示文本时则不可见

# 举例 下载一个hdfs映射文件

wangting@ops01:/home/wangting/20221013 >hdfs dfs -get /water_bill/output_ept_10W_export_0817/part-m-00000

wangting@ops01:/home/wangting/20221013 >ll

total 50284

-rw-r--r-- 1 wangting wangting 51483241 Oct 14 10:06 part-m-00000

-rw-rw-r-- 1 wangting wangting 117 Oct 13 17:16 user.txt

# 简单查看内容,并没有发现类似^开头的特殊字符

wangting@ops01:/home/wangting/20221013 >head part-m-00000 20

==> part-m-00000 <==

SEQ1org.apache.hadoop.hbase.io.ImmutableBytesWritable%org.apache.hadoop.hbase.client.Result8+u

0000132

R

0000132C1ADDRESS .(21山西省忻州市偏关县新关镇7单元124室

/

0000132C1

LATEST_DATE .(2

2020-08-02

'

0000132C1NAME .(2 蔡徐坤

-

0000132C1

NUM_CURRENT .(@p33333

# 使用vim编辑器打开文本,发现有很多^开头的特殊字符

wangting@ops01:/home/wangting/20221013 >vim part-m-00000

SEQ^F1org.apache.hadoop.hbase.io.ImmutableBytesWritable%org.apache.hadoop.hbase.client.Result^@^@^@^@^@^@8+u^V<8d>^BÕݬ<9a> ^S<81>ðN^@^@^Aû^@^@^@^K^@^@^@^G0000132î^C

R

^G0000132^R^BC1^Z^GADDRESS ÿ<8d><80><94><9f>.(^D21山西ç<9c><81>å¿»å·<9e>å¸<82>å<81><8f>å<85>³å<8e>¿æ<96>°å<85>³é<95><87>7å<8d><95>å<85><83>124室

^G0000132^R^BC1^Z^KLATEST_DATE ÿ<8d><80><94><9f>.(^D2

2020-08-02

^G0000132^R^BC1^Z^DNAME ÿ<8d><80><94><9f>.(^D2 æ<96>¹æµ©è½©

^G0000132^R^BC1^Z^KNUM_CURRENT ÿ<8d><80><94><9f>.(^D2^H@pó33333

^G0000132^R^BC1^Z^LNUM_PREVIOUS ÿ<8d><80><94><9f>.(^D2^H@}Û33333

6-4.Hive数据存储路径

默认存储路径:

Hive表默认存储路径是由${HIVE_HOME}/conf/hive-site.xml配置文件的hive.metastore.warehouse.dir属性指定,默认值是:/user/hive/warehouse

在该路径下,文件将根据所属的库、表,有规律的存储在对应的文件夹下

指定存储路径:

在Hive建表的时候,可以通过location语法来更改数据在HDFS上的存储路径,使得建表加载数据更加灵活方便

语法:LOCATION ‘

对于已经生成好的数据文件,使用location指定路径将会很方便

6-5.案例–王者荣耀数据Hive建表映射

案例相关数据素材文件均在:

https://osswangting.oss-cn-shanghai.aliyuncs.com/hive/honor_of_kings.zip

案例1

背景:

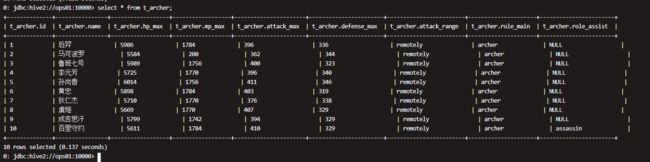

文件archer.txt中记录了手游《王者荣耀》射手的相关信息,包括生命、物防、物攻等属性信息,其中字段之间分隔符为制表符\t,要求在Hive中建表映射成功该文件

archer.txt

honor_of_kings.zip下载解压后上传即可

1 后羿 5986 1784 396 336 remotely archer

2 马可波罗 5584 200 362 344 remotely archer

3 鲁班七号 5989 1756 400 323 remotely archer

4 李元芳 5725 1770 396 340 remotely archer

5 孙尚香 6014 1756 411 346 remotely archer

6 黄忠 5898 1784 403 319 remotely archer

7 狄仁杰 5710 1770 376 338 remotely archer

8 虞姬 5669 1770 407 329 remotely archer

9 成吉思汗 5799 1742 394 329 remotely archer

10 百里守约 5611 1784 410 329 remotely archer assassin

- 字段含义:id、name(英雄名称)、hp_max(最大生命)、mp_max(最大法力)、attack_max(最高物攻)、defense_max(最大物防)、attack_range(攻击范围)、role_main(主要定位)、role_assist(次要定位)。

- 字段都是基本类型,字段的顺序需要注意。

- 字段之间的分隔符是制表符,需要使用row format语法进行指定

执行建表语句:

use hv_2022_10_13;

create table t_archer(

id int comment "ID",

name string comment "英雄名称",

hp_max int comment "最大生命",

mp_max int comment "最大法力",

attack_max int comment "最高物攻",

defense_max int comment "最大物防",

attack_range string comment "攻击范围",

role_main string comment "主要定位",

role_assist string comment "次要定位"

) comment "王者荣耀射手信息"

row format delimited

fields terminated by "\t";

建表成功之后,在Hive的默认存储路径下就生成了表对应的文件夹;

把archer.txt文件上传到对应的表文件夹下

wangting@ops01:/home/wangting/20221013 >ls

archer.txt part-m-00000 user.txt

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put archer.txt /user/hive/warehouse/hv_2022_10_13.db/t_archer

查数验证:

执行查询操作,可以看出数据已经映射成功。

核心语法:row format delimited fields terminated by 指定字段之间的分隔符。

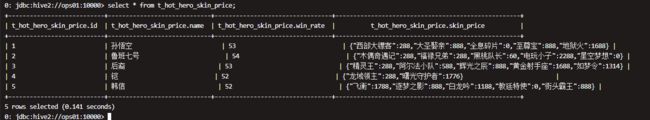

案例2

背景:

文件hot_hero_skin_price.txt中记录了手游《王者荣耀》热门英雄的相关皮肤价格信息,要求在Hive中建表映射成功该文件

hot_hero_skin_price.txt

1,孙悟空,53,西部大镖客:288-大圣娶亲:888-全息碎片:0-至尊宝:888-地狱火:1688

2,鲁班七号,54,木偶奇遇记:288-福禄兄弟:288-黑桃队长:60-电玩小子:2288-星空梦想:0

3,后裔,53,精灵王:288-阿尔法小队:588-辉光之辰:888-黄金射手座:1688-如梦令:1314

4,铠,52,龙域领主:288-曙光守护者:1776

5,韩信,52,飞衡:1788-逐梦之影:888-白龙吟:1188-教廷特使:0-街头霸王:888

- 字段:id、name(英雄名称)、win_rate(胜率)、skin_price(皮肤及价格);

- 前3个字段原生数据类型、最后一个字段复杂类型map。

- 需要指定字段之间分隔符、集合元素之间分隔符、map kv之间分隔符

执行建表语句:

use hv_2022_10_13;

create table t_hot_hero_skin_price(

id int,

name string,

win_rate int,

skin_price map<string,int>

)

row format delimited

fields terminated by ',' --字段之间分隔符

collection items terminated by '-' --集合元素之间分隔符

map keys terminated by ':'; --集合元素kv之间分隔符;

建表成功之后,在Hive的默认存储路径下就生成了表对应的文件夹;

把hot_hero_skin_price.txt文件上传到对应的表文件夹下

wangting@ops01:/home/wangting/20221013 >ls

archer.txt hot_hero_skin_price.txt part-m-00000 user.txt

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put hot_hero_skin_price.txt /user/hive/warehouse/hv_2022_10_13.db/t_hot_hero_skin_price

查数验证:

执行查询操作,可以看出数据已经映射成功

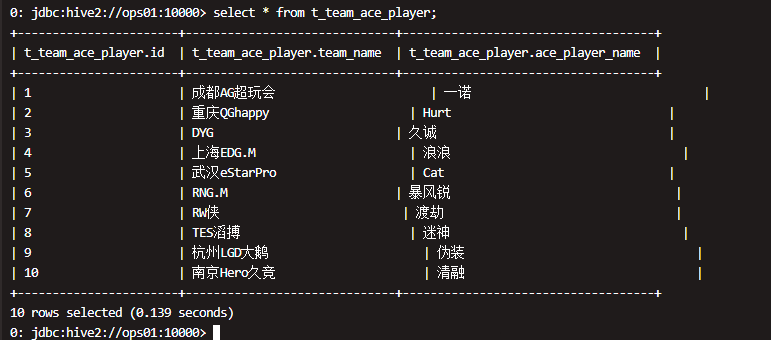

案例3

背景:

文件team_ace_player.txt中记录了手游《王者荣耀》主要战队内最受欢迎的王牌选手信息,字段之间使用的是\001作为分隔符,要求在Hive中建表映射成功该文件

team_ace_player.txt

有不可见字符,自行下载:https://osswangting.oss-cn-shanghai.aliyuncs.com/hive/honor_of_kings.zip

- 字段:id、team_name(战队名称)、ace_player_name(王牌选手名字)

- 数据都是原生数据类型,且字段之间分隔符是\001,因此在建表的时候可以省去row format语句,因为hive默认的分隔符就是\001

执行建表语句:

use hv_2022_10_13;

create table t_team_ace_player(

id int,

team_name string,

ace_player_name string

);

建表成功后,把team_ace_player.txt文件上传到对应的表文件夹下

wangting@ops01:/home/wangting/20221013 >ls

archer.txt hot_hero_skin_price.txt part-m-00000 team_ace_player.txt user.txt

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put team_ace_player.txt /user/hive/warehouse/hv_2022_10_13.db/t_team_ace_player

查数验证:

执行查询操作,可以看出数据已经映射成功。

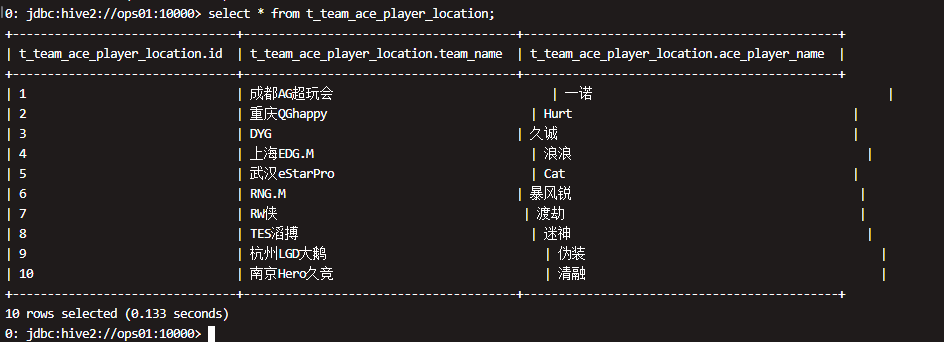

案例4

背景:

文件team_ace_player.txt中记录了手游《王者荣耀》主要战队内最受欢迎的王牌选手信息,字段之间使用的是\001作为分隔符。

要求把文件上传到HDFS任意路径下,不能移动复制,并在Hive中建表映射成功该文件相当于指定数据存储路径

执行建表语句:

create table t_team_ace_player_location(

id int,

team_name string,

ace_player_name string)

location '/20221014';

上传数据文件:

wangting@ops01:/home/wangting/20221013 >hdfs dfs -mkdir /20221014

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put team_ace_player.txt /20221014/

查数验证:

执行查询操作,可以看出数据已经映射成功。

第7章:Hive SQL DDL建表高阶语法

7-1.Hive 内部表、外部表

什么是内部表

- 内部表(Internal table)也称为被Hive拥有和管理的托管表(Managed table)。

- 默认情况下创建的表就是内部表,Hive拥有该表的结构和文件。换句话说,Hive完全管理表(元数据和数据)的生命周期,类似于RDBMS中的表。

- 当删除内部表时,会删除数据以及表的元数据

可以使用DESCRIBE FORMATTED tablename,来获取表的元数据描述信息,从中可以看出表的类型

Table Type: MANAGED_TABLE

什么是外部表

- 外部表(External table)中的数据不是Hive拥有或管理的,只管理表元数据的生命周期。

- 要创建一个外部表,需要使用EXTERNAL语法关键字。

- 删除外部表只会删除元数据,而不会删除实际数据。在Hive外部仍然可以访问实际数据。

- 实际场景中,外部表搭配location语法指定数据的路径,可以让数据更安全

可以使用DESCRIBE FORMATTED tablename,来获取表的元数据描述信息,从中可以看出表的类型

Table Type: EXTERNAL_TABLE

内、外部表差异

- 无论内部表还是外部表,Hive都在Hive Metastore中管理表定义、字段类型等元数据信息。

- 删除内部表时,除了会从Metastore中删除表元数据,还会从HDFS中删除其所有数据文件。

- 删除外部表时,只会从Metastore中删除表的元数据,并保持HDFS位置中的实际数据不变。

如何选择内、外部表

- 当需要通过Hive完全管理控制表的整个生命周期时,请使用内部表。

- 当数据来之不易,防止误删,请使用外部表,因为即使删除表,文件也会被保留。

案例验证内部表、外部表区别

wangting@ops01:/home/wangting/20221013 >ls

archer.txt hot_hero_skin_price.txt part-m-00000 team_ace_player.txt user.txt

wangting@ops01:/home/wangting/20221013 >cat user.txt

1,zhangsan,18,beijing

2,lisi,25,shanghai

3,allen,30,shanghai

4,woon,15,nanjing

5,james,45,hangzhou

6,tony,26,beijing

wangting@ops01:/home/wangting/20221013 >hdfs dfs -mkdir /20221014/in

wangting@ops01:/home/wangting/20221013 >hdfs dfs -mkdir /20221014/out

建内外表

0: jdbc:hive2://ops01:10000> create table t_user_in(id int,name varchar(255),age int,city varchar(255)) row format delimited fields terminated by ',' location '/20221014/in';

No rows affected (0.083 seconds)

0: jdbc:hive2://ops01:10000> create external table t_user_out(id int,name varchar(255),age int,city varchar(255)) row format delimited fields terminated by ',' location '/20221014/out';

No rows affected (0.077 seconds)

上传映射文件

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put user.txt /20221014/in/t_user_in

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put user.txt /20221014/out/t_user_out

查询数据验证结果

0: jdbc:hive2://ops01:10000> select * from t_user_in;

+---------------+-----------------+----------------+-----------------+

| t_user_in.id | t_user_in.name | t_user_in.age | t_user_in.city |

+---------------+-----------------+----------------+-----------------+

| 1 | zhangsan | 18 | beijing |

| 2 | lisi | 25 | shanghai |

| 3 | allen | 30 | shanghai |

| 4 | woon | 15 | nanjing |

| 5 | james | 45 | hangzhou |

| 6 | tony | 26 | beijing |

+---------------+-----------------+----------------+-----------------+

6 rows selected (0.143 seconds)

0: jdbc:hive2://ops01:10000> select * from t_user_out;

+----------------+------------------+-----------------+------------------+

| t_user_out.id | t_user_out.name | t_user_out.age | t_user_out.city |

+----------------+------------------+-----------------+------------------+

| 1 | zhangsan | 18 | beijing |

| 2 | lisi | 25 | shanghai |

| 3 | allen | 30 | shanghai |

| 4 | woon | 15 | nanjing |

| 5 | james | 45 | hangzhou |

| 6 | tony | 26 | beijing |

+----------------+------------------+-----------------+------------------+

6 rows selected (0.138 seconds)

此时,内外表都可以成功映射

t_user_in 对应hdfs文件路径 /20221014/in/t_user_in

t_user_out 对应hdfs文件路径 /20221014/out/t_user_out

wangting@ops01:/home/wangting/20221013 >hdfs dfs -ls /20221014

Found 2 items

drwxr-xr-x - wangting supergroup 0 2022-10-14 13:53 /20221014/in

drwxr-xr-x - wangting supergroup 0 2022-10-14 13:54 /20221014/out

wangting@ops01:/home/wangting/20221013 >hdfs dfs -ls /20221014/in

Found 1 items

-rw-r--r-- 3 wangting supergroup 117 2022-10-14 13:53 /20221014/in/t_user_in

wangting@ops01:/home/wangting/20221013 >hdfs dfs -ls /20221014/out

Found 1 items

-rw-r--r-- 3 wangting supergroup 117 2022-10-14 13:54 /20221014/out/t_user_out

将内外表删除drop

0: jdbc:hive2://ops01:10000> drop table t_user_in;

No rows affected (0.114 seconds)

0: jdbc:hive2://ops01:10000> drop table t_user_out;

No rows affected (0.093 seconds)

删除表后验证hdfs映射文件情况

wangting@ops01:/home/wangting/20221013 >hdfs dfs -ls /20221014/in

ls: `/20221014/in': No such file or directory

wangting@ops01:/home/wangting/20221013 >hdfs dfs -ls /20221014/out

Found 1 items

-rw-r--r-- 3 wangting supergroup 117 2022-10-14 13:54 /20221014/out/t_user_out

内部表drop删除后,hdfs上的/20221014/in/t_user_in文件已经同步被删除

外部表drop删除后,hdfs上的/20221014/out/t_user_out文件依旧还在hdfs上

如果需要外部表重建表即可再次使用数据文件

7-2.Hive Partitioned Tables 分区表

分区表概念

- 当Hive表对应的数据量大、文件个数多时,为了避免查询时全表扫描数据,Hive支持根据指定的字段对表进行分区,分区的字段可以是日期、地域、种类等具有标识意义的字段。

- 例如把一整年的数据根据月份划分12个月(12个分区),后续就可以查询指定月份分区的数据,尽可能避免了全表扫描查询

创建分区表语法

分区字段不能是表中已经存在的字段,因为分区字段最终也会以虚拟字段的形式显示在表结构上

关键词:PARTITIONED BY

示例:

CREATE TABLE table_name (

column1 data_type,

column2 data_type,

....)

PARTITIONED BY (partition1 data_type, partition2 data_type,…);

案例验证实验分区表

背景:

针对《王者荣耀》英雄数据,创建一张分区表t_all_hero_part,以role角色作为分区字段

执行建表语句:

create table t_all_hero_part(

id int,

name string,

hp_max int,

mp_max int,

attack_max int,

defense_max int,

attack_range string,

role_main string,

role_assist string

) partitioned by (role string)

row format delimited

fields terminated by "\t";

0: jdbc:hive2://ops01:10000> desc t_all_hero_part;

+--------------------------+------------+----------+

| col_name | data_type | comment |

+--------------------------+------------+----------+

| id | int | |

| name | string | |

| hp_max | int | |

| mp_max | int | |

| attack_max | int | |

| defense_max | int | |

| attack_range | string | |

| role_main | string | |

| role_assist | string | |

| role | string | |

| | NULL | NULL |

| # Partition Information | NULL | NULL |

| # col_name | data_type | comment |

| role | string | |

+--------------------------+------------+----------+

14 rows selected (0.114 seconds)

上传数据文件:

wangting@ops01:/home/wangting/20221013/hero >ls

archer.txt assassin.txt mage.txt support.txt tank.txt warrior.txt

wangting@ops01:/home/wangting/20221013/hero >beeline -u jdbc:hive2://ops01:10000 -n wangting

Connecting to jdbc:hive2://ops01:10000

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.2 by Apache Hive

0: jdbc:hive2://ops01:10000>

0: jdbc:hive2://ops01:10000> use hv_2022_10_13;

0: jdbc:hive2://ops01:10000> load data local inpath '/home/wangting/20221013/hero/archer.txt' into table t_all_hero_part partition(role='sheshou');

0: jdbc:hive2://ops01:10000> load data local inpath '/home/wangting/20221013/hero/assassin.txt' into table t_all_hero_part partition(role='cike');

0: jdbc:hive2://ops01:10000> load data local inpath '/home/wangting/20221013/hero/mage.txt' into table t_all_hero_part partition(role='fashi');

0: jdbc:hive2://ops01:10000> load data local inpath '/home/wangting/20221013/hero/support.txt' into table t_all_hero_part partition(role='fuzhu');

0: jdbc:hive2://ops01:10000> load data local inpath '/home/wangting/20221013/hero/tank.txt' into table t_all_hero_part partition(role='tanke');

0: jdbc:hive2://ops01:10000> load data local inpath '/home/wangting/20221013/hero/warrior.txt' into table t_all_hero_part partition(role='zhanshi');

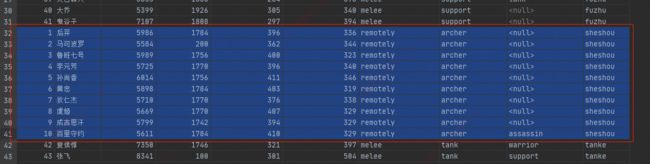

查询数据验证:

分区本质

外表上看起来分区表好像没多大变化,只不过多了一个分区字段。实际上分区表在底层管理数据的方式发生了改变。这里直接去HDFS查看区别

wangting@ops01:/home/wangting/20221013/hero >hdfs dfs -ls /user/hive/warehouse/hv_2022_10_13.db/t_all_hero_part

Found 6 items

drwxr-xr-x - wangting supergroup 0 2022-10-14 14:29 /user/hive/warehouse/hv_2022_10_13.db/t_all_hero_part/role=cike

drwxr-xr-x - wangting supergroup 0 2022-10-14 14:30 /user/hive/warehouse/hv_2022_10_13.db/t_all_hero_part/role=fashi

drwxr-xr-x - wangting supergroup 0 2022-10-14 14:30 /user/hive/warehouse/hv_2022_10_13.db/t_all_hero_part/role=fuzhu

drwxr-xr-x - wangting supergroup 0 2022-10-14 14:29 /user/hive/warehouse/hv_2022_10_13.db/t_all_hero_part/role=sheshou

drwxr-xr-x - wangting supergroup 0 2022-10-14 14:30 /user/hive/warehouse/hv_2022_10_13.db/t_all_hero_part/role=tanke

drwxr-xr-x - wangting supergroup 0 2022-10-14 14:30 /user/hive/warehouse/hv_2022_10_13.db/t_all_hero_part/role=zhanshi

- 分区的概念提供了一种将Hive表数据分离为多个文件/目录的方法。

- 不同分区对应着不同的文件夹,同一分区的数据存储在同一个文件夹下。

- 查询过滤的时候只需要根据分区值找到对应的文件夹,扫描本文件夹下本分区下的文件即可,避免全表数据扫描。

- 这种指定分区查询的方式叫做分区裁剪。

分区表的使用

分区表的使用重点在于:

- 建表时根据业务场景设置合适的分区字段。比如日期、地域、类别等;

- 查询的时候尽量先使用where进行分区过滤,查询指定分区的数据,避免全表扫描。

比如:查询英雄主要定位是射手并且最大生命大于6000的个数。使用分区表查询和使用非分区表进行查询

英雄为射手 + 生命值大于6000

0: jdbc:hive2://ops01:10000> select count(*) from t_all_hero_part where role="sheshou" and hp_max >6000;

+------+

| _c0 |

+------+

| 1 |

+------+

1 row selected (26.307 seconds)

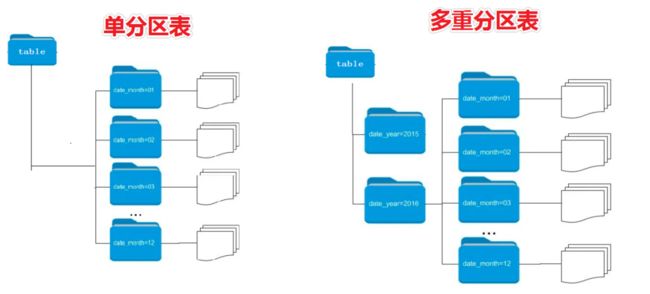

多重分区表

-

通过建表语句中关于分区的相关语法可以发现,Hive支持多个分区字段

- PARTITIONED BY (partition1 data_type, partition2 data_type,….)

-

多重分区下,分区之间是一种递进关系,可以理解为在前一个分区的基础上继续分区

-

从HDFS的角度来看就是文件夹下继续划分子文件夹。比如:把全国人口数据首先根据省进行分区,然后根据市进行划分,如果你需要甚至可以继续根据区县再划分,此时就是3分区表

创建多重分区表示例:

--单分区表,按省份分区

create table t_user_province (id int, name string,age int) partitioned by (province string);

--双分区表,按省份和市分区

--分区字段之间是一种递进的关系 因此要注意分区字段的顺序 谁在前在后

create table t_user_province_city (id int, name string,age int) partitioned by (province string, city string);

--双分区表的数据加载 静态分区加载数据

load data local inpath '/root/hivedata/user.txt' into table t_user_province_city

partition(province='zhejiang',city='hangzhou');

load data local inpath '/root/hivedata/user.txt' into table t_user_province_city

partition(province='zhejiang',city='ningbo');

load data local inpath '/root/hivedata/user.txt' into table t_user_province_city

partition(province='shanghai',city='pudong');

--双分区表的使用 使用分区进行过滤 减少全表扫描 提高查询效率

select * from t_user_province_city where province= "zhejiang" and city ="hangzhou";

分区表数据加载–动态分区

-

所谓动态分区指的是分区的字段值是基于查询结果(参数位置)自动推断出来的。核心语法就是insert+select

-

启用hive动态分区,需要在hive会话中设置两个参数

- set hive.exec.dynamic.partition=true;

- 是否开启动态分区功能

- set hive.exec.dynamic.partition.mode=nonstrict;

- 指定动态分区模式,分为nonstick非严格模式和strict严格模式。

- strict严格模式要求至少有一个分区为静态分区。

- set hive.exec.dynamic.partition=true;

-

创建一张新的分区表,执行动态分区插入。

-

动态分区插入时,分区值是根据查询返回字段位置自动推断的

创建动态分区示例:

-- 创建原始表,无分区

create table t_all_hero(

id int,

name string,

hp_max int,

mp_max int,

attack_max int,

defense_max int,

attack_range string,

role_main string,

role_assist string

)

row format delimited

fields terminated by "\t";

上传映射文件:

wangting@ops01:/home/wangting/20221013/hero >ls

archer.txt assassin.txt mage.txt support.txt tank.txt warrior.txt

wangting@ops01:/home/wangting/20221013/hero >hdfs dfs -put * /user/hive/warehouse/hv_2022_10_13.db/t_all_hero

2022-10-14 15:47:40,286 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-10-14 15:47:40,426 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-10-14 15:47:40,447 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-10-14 15:47:40,466 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-10-14 15:47:40,485 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2022-10-14 15:47:40,503 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

开启动态分区:

--动态分区

0: jdbc:hive2://ops01:10000> set hive.exec.dynamic.partition=true;

No rows affected (0.005 seconds)

0: jdbc:hive2://ops01:10000> set hive.exec.dynamic.partition.mode=nonstrict;

No rows affected (0.012 seconds)

创建一个动态分区表:

--创建一张新的分区表 t_all_hero_part_dynamic

create table t_all_hero_part_dynamic(

id int,

name string,

hp_max int,

mp_max int,

attack_max int,

defense_max int,

attack_range string,

role_main string,

role_assist string

) partitioned by (role string)

row format delimited

fields terminated by "\t";

向动态分区表中插入数据:

0: jdbc:hive2://ops01:10000> insert into table t_all_hero_part_dynamic partition(role) select tmp.*,tmp.role_main from t_all_hero tmp;

No rows affected (29.625 seconds)

查询分区状态情况:

0: jdbc:hive2://ops01:10000> select distinct(role) from t_all_hero_part_dynamic ;

+-----------+

| role |

+-----------+

| archer |

| assassin |

| mage |

| support |

| tank |

| warrior |

+-----------+

6 rows selected (24.049 seconds)

分区表总结与注意事项

- 分区表不是建表的必要语法规则,是一种优化手段表,可选;

- 分区字段不能是表中已有的字段,不能重复;

- 分区字段是虚拟字段,其数据并不存储在底层的文件中;

- 分区字段值的确定来自于用户价值数据手动指定(静态分区)或者根据查询结果位置自动推断(动态分区)

- Hive支持多重分区,也就是说在分区的基础上继续分区,划分更加细粒度

7-3.Hive Bucketed Tables 分桶表

分桶表概念

- 分桶表也叫做桶表,叫法源自建表语法中bucket单词,是一种用于优化查询而设计的表类型。

- 分桶表对应的数据文件在底层会被分解为若干个部分,通俗来说就是被拆分成若干个独立的小文件。

- 在分桶时,要指定根据哪个字段将数据分为几桶(几个部分)。

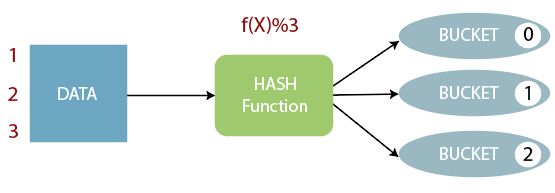

分桶规则

分桶规则如下:桶编号相同的数据会被分到同一个桶当中

Bucket number = hash_function(bucketing_column) mod num_buckets

分桶编号 = 哈希方法(分桶字段) 取模 分桶个数

hash_function取决于分桶字段bucketing_column的类型:

- 如果是int类型,hash_function(int) == int;

- 如果是其他比如bigint,string或者复杂数据类型,hash_function比较棘手,将是从该类型派生的某个数字,比如hashcode值。

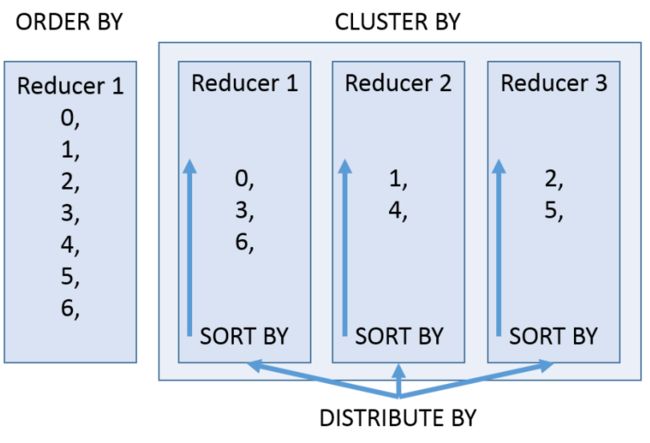

分桶表语法

语法关键词:CLUSTERED BY (col_name)

--分桶表建表语句

CREATE [EXTERNAL] TABLE [db_name.]table_name

[(col_name data_type, ...)]

CLUSTERED BY (col_name)

INTO N BUCKETS;

- CLUSTERED BY (col_name)表示根据哪个字段进行分

- INTO N BUCKETS表示分为几桶(也就是几个部分)

- 需要注意的是,分桶的字段必须是表中已经存在的字段

分桶表的创建

数据集文件连接:

链接:https://pan.baidu.com/s/1cWq6wd0pfqaCRuBijt1WKg?pwd=cc6v

提取码:cc6v

下载解压文件包:

wangting@ops01:/home/wangting/20221013/usa >unzip us-civid19.zip

wangting@ops01:/home/wangting/20221013/usa >ll

total 46864

-rw-rw-r-- 1 wangting wangting 4318157 Jan 29 2021 COVID-19-Cases-USA-By-County.csv

-rw-rw-r-- 1 wangting wangting 116254 Jan 29 2021 COVID-19-Cases-USA-By-State.csv

-rw-rw-r-- 1 wangting wangting 2988679 Jan 29 2021 COVID-19-Deaths-USA-By-County.csv

-rw-rw-r-- 1 wangting wangting 86590 Jan 29 2021 COVID-19-Deaths-USA-By-State.csv

-rw-rw-r-- 1 wangting wangting 39693686 Jan 29 2021 us-counties.csv

-rw-rw-r-- 1 wangting wangting 136795 Sep 16 23:23 us-covid19-counties.dat

-rw-rw-r-- 1 wangting wangting 9135 Jan 29 2021 us.csv

-rw-rw-r-- 1 wangting wangting 620291 Jan 29 2021 us-states.csv

示例:

背景:

现有美国2021-1-28号,各个县county的新冠疫情累计案例信息,包括确诊病例和死亡病例,数据格式如下所示;

字段含义:count_date(统计日期),county(县),state(州),fips(县编码code),cases(累计确诊病例),deaths(累计死亡病例)样例数据:

2021-01-28,Autauga,Alabama,01001,5554,69 2021-01-28,Baldwin,Alabama,01003,17779,225 2021-01-28,Barbour,Alabama,01005,1920,40 2021-01-28,Bibb,Alabama,01007,2271,51 2021-01-28,Blount,Alabama,01009,5612,98 2021-01-28,Bullock,Alabama,01011,1079,29 2021-01-28,Butler,Alabama,01013,1788,60 2021-01-28,Calhoun,Alabama,01015,11833,231 2021-01-28,Chambers,Alabama,01017,3159,76 2021-01-28,Cherokee,Alabama,01019,1682,35

根据state州把数据分为5桶,建表语句如下

CREATE TABLE t_usa_covid19_bucket(

count_date string,

county string,

state string,

fips int,

cases int,

deaths int)

CLUSTERED BY(state) INTO 5 BUCKETS;

在创建分桶表时,还可以指定分桶内的数据排序规则

CREATE TABLE t_usa_covid19_bucket_sort(

count_date string,

county string,

state string,

fips int,

cases int,

deaths int)

CLUSTERED BY(state)

sorted by (cases desc) INTO 5 BUCKETS;

把源数据加载到普通hive表中,创建普通表t_usa_covid19 :

CREATE TABLE t_usa_covid19(

count_date string,

county string,

state string,

fips int,

cases int,

deaths int)

row format delimited fields terminated by ",";

将映射文件上传hdfs对应普通表t_usa_covid19

# 将源数据上传到HDFS,t_usa_covid19表对应的路径下

wangting@ops01:/home/wangting/20221013/usa >hdfs dfs -put us-covid19-counties.dat /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19

0: jdbc:hive2://ops01:10000> select * from t_usa_covid19 limit 5;

+---------------------------+-----------------------+----------------------+---------------------+----------------------+-----------------------+

| t_usa_covid19.count_date | t_usa_covid19.county | t_usa_covid19.state | t_usa_covid19.fips | t_usa_covid19.cases | t_usa_covid19.deaths |

+---------------------------+-----------------------+----------------------+---------------------+----------------------+-----------------------+

| 2021-01-28 | Autauga | Alabama | 1001 | 5554 | 69 |

| 2021-01-28 | Baldwin | Alabama | 1003 | 17779 | 225 |

| 2021-01-28 | Barbour | Alabama | 1005 | 1920 | 40 |

| 2021-01-28 | Bibb | Alabama | 1007 | 2271 | 51 |

| 2021-01-28 | Blount | Alabama | 1009 | 5612 | 98 |

+---------------------------+-----------------------+----------------------+---------------------+----------------------+-----------------------+

5 rows selected (0.139 seconds)

使用insert+select语法将数据加载到分桶表t_usa_covid19_bucket中:

0: jdbc:hive2://ops01:10000> insert into t_usa_covid19_bucket select * from t_usa_covid19;

No rows affected (55.215 seconds)

- 到HDFS上查看t_usa_covid19_bucket底层数据结构可以发现,数据被分为了5个部分。

- 并且从结果可以发现,分桶字段一样的数据就一定被分到同一个桶中。

wangting@ops01:/home/wangting/20221013/usa >hdfs dfs -ls /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19

Found 1 items

-rw-r--r-- 3 wangting supergroup 136795 2022-10-14 16:33 /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19/us-covid19-counties.dat

wangting@ops01:/home/wangting/20221013/usa >hdfs dfs -ls /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19_bucket

Found 5 items

-rw-r--r-- 3 wangting supergroup 20013 2022-10-14 16:35 /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19_bucket/000000_0

-rw-r--r-- 3 wangting supergroup 33705 2022-10-14 16:34 /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19_bucket/000001_0

-rw-r--r-- 3 wangting supergroup 46572 2022-10-14 16:34 /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19_bucket/000002_0

-rw-r--r-- 3 wangting supergroup 11636 2022-10-14 16:34 /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19_bucket/000003_0

-rw-r--r-- 3 wangting supergroup 24766 2022-10-14 16:35 /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19_bucket/000004_0

# 简单查看一下文件情况

wangting@ops01:/home/wangting/20221013/usa >hdfs dfs -cat /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19_bucket/000000_0 | head -3

2021-01-28 RensselaerNew York 360837720116

2021-01-28 PutnamNew York 36079717179

2021-01-28 OtsegoNew York 36077194029

wangting@ops01:/home/wangting/20221013/usa >hdfs dfs -cat /user/hive/warehouse/hv_2022_10_13.db/t_usa_covid19_bucket/000001_0 | head -3

2021-01-28 SampsonNorth Carolina 37163604279

2021-01-28 RutherfordNorth Carolina 371616116166

2021-01-28 RowanNorth Carolina 3715912709241

使用粪桶表优点

- 基于分桶字段查询时,减少全表扫描

--基于分桶字段state查询来自于New York州的数据

--不再需要进行全表扫描过滤

--根据分桶的规则hash_function(New York) mod 5计算出分桶编号

--查询指定分桶里面的数据 就可以找出结果 此时是分桶扫描而不是全表扫描

select * from t_usa_covid19_bucket where state="New York";

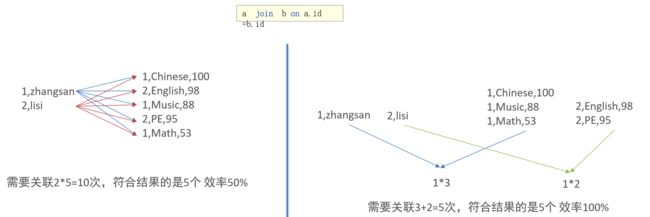

- JOIN时可以提高MR程序效率,减少笛卡尔积数量

根据join的字段对表进行分桶操作(比如下图中id是join的字段)

- 分桶表数据进行高效抽样

当数据量特别大时,对全体数据进行处理存在困难时,抽样就显得尤其重要了。抽样可以从被抽取的数据中估计和推断出整体的特性,是科学实验、质量检验、社会调查普遍采用的一种经济有效的工作和研究方法

7-4.Hive Transactional Tables事务表

Hive事务背景知识

-

Hive本身从设计之初时,就是不支持事务的,因为Hive的核心目标是将已经存在的结构化数据文件映射成为表,然后提供基于表的SQL分析处理,是一款面向分析的工具。且映射的数据通常存储于HDFS上,而HDFS是不支持随机修改文件数据的。

-

这个定位就意味着在早期的Hive的SQL语法中是没有update,delete操作的,也就没有所谓的事务支持了,因为都是select查询分析操作

-

从Hive0.14版本开始,具有ACID语义的事务已添加到Hive中,以解决以下场景下遇到的问题:

-

流式传输数据

- 使用如Apache Flume、Apache Kafka之类的工具将数据流式传输到Hadoop集群中。虽然这些工具可以每秒数百行或更多行的速度写入数据,但是Hive只能每隔15分钟到一个小时添加一次分区。如果每分甚至每秒频繁添加分区会很快导致表中大量的分区,并将许多小文件留在目录中,这将给NameNode带来压力。

- 因此通常使用这些工具将数据流式传输到已有分区中,但这有可能会造成脏读(数据传输一半失败,回滚了)。

需要通过事务功能,允许用户获得一致的数据视图并避免过多的小文件产生

-

尺寸变化缓慢

- 星型模式数据仓库中,维度表随时间缓慢变化。例如,零售商将开设新商店,需要将其添加到商店表中,或者现有商店可能会更改其平方英尺或某些其他跟踪的特征。这些更改导致需要插入单个记录或更新单条记录(取决于所选策略)

-

数据重述

- 有时发现收集的数据不正确,需要更正

-

Hive事务局限性

虽然Hive支持了具有ACID语义的事务,但是在使用起来,并没有像在MySQL中使用那样方便,有很多局限性。原因很简单,毕竟Hive的设计目标不是为了支持事务操作,而是支持分析操作,且最终基于HDFS的底层存储机制使得文件的增加删除修改操作需要动一些小心思。

- 尚不支持BEGIN,COMMIT和ROLLBACK。所有语言操作都是自动提交的。

- 仅支持ORC文件格式(STORED AS ORC)。

- 默认情况下事务配置为关闭。需要配置参数开启使用。

- 表必须是分桶表(Bucketed)才可以使用事务功能。

- 表参数transactional必须为true;

- 外部表不能成为ACID表,不允许从非ACID会话读取/写入ACID表。

创建使用Hive表尝试修改数据

背景:

在Hive中创建一张具备事务功能的表,并尝试进行增删改操作。

体验一下Hive的增删改操作和MySQL比较起来,性能如何

- 如果不做任何配置修改,直接针对Hive中已有的表进行Update、Delete、Insert操作,可以发现,只有insert语句可以执行,Update和Delete操作会报错。

- Insert插入操作能够成功的原因在于,底层是直接把数据写在一个新的文件中的

先创建一张普通的表:

create table student(

num int,

name string,

sex string,

age int,

dept string)

row format delimited

fields terminated by ',';

加载数据文件:

students.txt文件内容

95001,李勇,男,20,CS

95002,刘晨,女,19,IS

95003,王敏,女,22,MA

95004,张立,男,19,IS

95005,刘刚,男,18,MA

95006,孙庆,男,23,CS

95007,易思玲,女,19,MA

95008,李娜,女,18,CS

95009,梦圆圆,女,18,MA

95010,孔小涛,男,19,CS

95011,包小柏,男,18,MA

95012,孙花,女,20,CS

95013,冯伟,男,21,CS

95014,王小丽,女,19,CS

95015,王君,男,18,MA

95016,钱国,男,21,MA

95017,王风娟,女,18,IS

95018,王一,女,19,IS

95019,邢小丽,女,19,IS

95020,赵钱,男,21,IS

95021,周二,男,17,MA

95022,郑明,男,20,MA

wangting@ops01:/home/wangting/20221013 >hdfs dfs -put students.txt /user/hive/warehouse/hv_2022_10_13.db/student

执行数据修改操作:

0: jdbc:hive2://ops01:10000> select * from student limit 3;

+--------------+---------------+--------------+--------------+---------------+

| student.num | student.name | student.sex | student.age | student.dept |

+--------------+---------------+--------------+--------------+---------------+

| 95001 | 李勇 | 男 | 20 | CS |

| 95002 | 刘晨 | 女 | 19 | IS |

| 95003 | 王敏 | 女 | 22 | MA |

+--------------+---------------+--------------+--------------+---------------+

3 rows selected (0.12 seconds)

0: jdbc:hive2://ops01:10000> update student set age = 100 where num = 95001;

Error: Error while compiling statement: FAILED: SemanticException [Error 10294]: Attempt to do update or delete using transaction manager that does not support these operations. (state=42000,code=10294)

注意此时出现了报错

FAILED: SemanticException [Error 10294]: Attempt to do update or delete using transaction manager that does not support these operations. (state=42000,code=10294)

这是因为没有开启事务导致的抛错

配置开启事务、创建事务表

创建表

--1、开启事务配置(可以使用set设置当前session生效 也可以配置在hive-site.xml中)

-- set hive.support.concurrency = true; --Hive是否支持并发

-- set hive.enforce.bucketing = true; --从Hive2.0开始不再需要 是否开启分桶功能

-- set hive.exec.dynamic.partition.mode = nonstrict; --动态分区模式 非严格

-- set hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager; --

-- set hive.compactor.initiator.on = true; --是否在Metastore实例上运行启动线程和清理线程

-- set hive.compactor.worker.threads = 1; --在此metastore实例上运行多少个压缩程序工作线程。

0: jdbc:hive2://ops01:10000> set hive.support.concurrency = true;

No rows affected (0.004 seconds)

0: jdbc:hive2://ops01:10000> set hive.enforce.bucketing = true;

No rows affected (0.003 seconds)

0: jdbc:hive2://ops01:10000> set hive.exec.dynamic.partition.mode = nonstrict;

No rows affected (0.003 seconds)

0: jdbc:hive2://ops01:10000> set hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;

No rows affected (0.003 seconds)

0: jdbc:hive2://ops01:10000> set hive.compactor.initiator.on = true;

No rows affected (0.003 seconds)

0: jdbc:hive2://ops01:10000> set hive.compactor.worker.threads = 1;

No rows affected (0.004 seconds)

--2、创建Hive事务表

create table trans_student(

id int,

name String,

age int

)clustered by (id) into 2 buckets stored as orc TBLPROPERTIES('transactional'='true');

--注意 事务表创建几个要素:开启参数、分桶表、存储格式orc、表属性

针对事务表进行增删改查操作验证:

0: jdbc:hive2://ops01:10000> insert into trans_student values(1,"wangting",666);

No rows affected (53.575 seconds)

0: jdbc:hive2://ops01:10000> select * from trans_student;

+-------------------+---------------------+--------------------+

| trans_student.id | trans_student.name | trans_student.age |

+-------------------+---------------------+--------------------+

| 1 | wangting | 666 |

+-------------------+---------------------+--------------------+

1 row selected (0.36 seconds)

0: jdbc:hive2://ops01:10000> update trans_student set age = 18 where id = 1;

No rows affected (26.142 seconds)

0: jdbc:hive2://ops01:10000> select * from trans_student;

+-------------------+---------------------+--------------------+

| trans_student.id | trans_student.name | trans_student.age |

+-------------------+---------------------+--------------------+

| 1 | wangting | 18 |

+-------------------+---------------------+--------------------+

1 row selected (0.199 seconds)

0: jdbc:hive2://ops01:10000> delete from trans_student where id =1;

No rows affected (26.221 seconds)

0: jdbc:hive2://ops01:10000> select * from trans_student;

+-------------------+---------------------+--------------------+

| trans_student.id | trans_student.name | trans_student.age |

+-------------------+---------------------+--------------------+

+-------------------+---------------------+--------------------+

No rows selected (0.203 seconds)

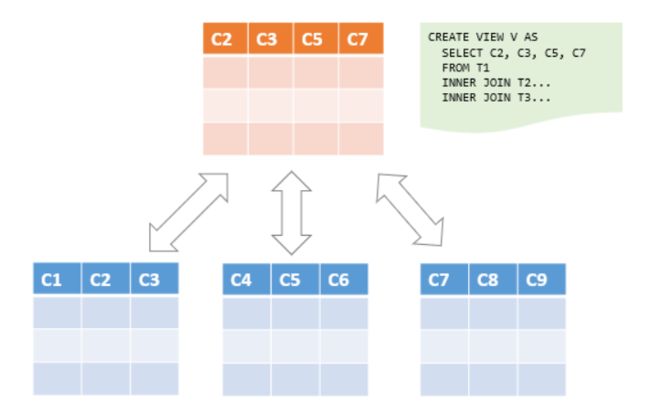

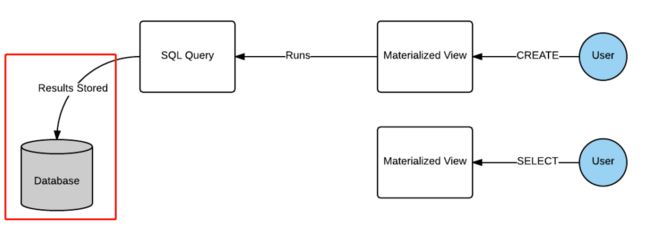

7-5.Hive Views 视图

视图概念

- Hive中的视图(view)是一种虚拟表,只保存定义,不实际存储数据。

- 通常从真实的物理表查询中创建生成视图,也可以从已经存在的视图上创建新视图。

- 创建视图时,将冻结视图的架构,如果删除或更改基础表,则视图将失败。

- 视图是用来简化操作的,不缓冲记录,也没有提高查询性能。

相关语法示例: