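Deep Learning Models, Part 2 (Multi-class Classification and Regression): The Multilayer Perceptron

Multilayer Perceptron

- 1. Overview

- 2. Principles

- 3. Detailed walkthrough of the multilayer perceptron (MLP) code (Python + PyTorch)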

1. Overview

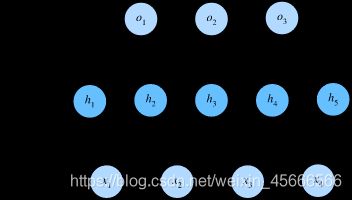

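A multilayer perceptron (MLP) is also referred to as an artificial neural network (ANN). It can have one or more hidden layers between its input and output layers. The simplest MLP has a single hidden layer, which gives the three-layer structure shown in the figure above.

As the figure shows, adjacent layers of an MLP are fully connected. The bottom layer is the input layer, the middle layers are hidden layers, and the last layer is the output layer.

How are the hidden neurons computed? The hidden layer is fully connected to the input layer. If the input is represented as a vector $X$, the output of the hidden layer is $f(W_1X+b_1)$. Here $W_1$ is the weight matrix (its entries are also called connection coefficients), $b_1$ is the bias, and $f$ is an activation function, such as the commonly used sigmoid or tanh function.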
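The following is a minimal NumPy sketch of $f(W_1X+b_1)$ for a single input vector. The toy sizes and values are hypothetical. `sigmoid` and `hidden_layer` are illustrative helpers, not part of any library. For the tanh variant, `np.tanh` is passed in as $f$.

```python
import numpy as np

def sigmoid(z):
    # sigmoid(z) = 1 / (1 + exp(-z)); squashes values into (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def hidden_layer(x, W1, b1, f=sigmoid):
    # f(W1 x + b1): an affine transform followed by an elementwise activation
    return f(W1 @ x + b1)

# Hypothetical toy sizes: 3 inputs, 2 hidden neurons
x = np.array([1.0, 0.5, -1.0])
W1 = np.array([[0.2, -0.1, 0.4],
               [0.0,  0.3, -0.1]])   # shape (hidden, inputs) = (2, 3)
b1 = np.array([0.1, -0.2])

h_sigmoid = hidden_layer(x, W1, b1)             # values in (0, 1)
h_tanh = hidden_layer(x, W1, b1, f=np.tanh)     # values in (-1, 1)
print(h_sigmoid, h_tanh)
```

Only the pre-activation $W_1X+b_1$ depends on the weights. Swapping the activation changes the output range of the hidden layer: (0, 1) for sigmoid and (-1, 1) for tanh.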

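2. Principles

A multilayer perceptron is a neural network of fully connected layers that contains at least one hidden layer. The output of each hidden layer is transformed by an activation function. The number of layers and the number of hidden units in each hidden layer are hyperparameters.

Concretely, consider a minibatch $\boldsymbol{X} \in \mathbb{R}^{n \times d}$ with batch size $n$ and $d$ inputs. Suppose the MLP has a single hidden layer with $h$ hidden units. Denote the output of the hidden layer by $\boldsymbol{H} \in \mathbb{R}^{n \times h}$; this output is also called the hidden-layer variable or hidden variable. Both the hidden layer and the output layer are fully connected. Let the hidden layer's weight and bias parameters be $\boldsymbol{W}_h \in \mathbb{R}^{d \times h}$ and $\boldsymbol{b}_h \in \mathbb{R}^{1 \times h}$. Let the output layer's weight and bias parameters be $\boldsymbol{W}_o \in \mathbb{R}^{h \times q}$ and $\boldsymbol{b}_o \in \mathbb{R}^{1 \times q}$, where $q$ is the number of outputs.

Taking the single-hidden-layer case with the notation above, the MLP computes its outputs as follows:

$$
\begin{aligned}
\boldsymbol{H} &= \phi(\boldsymbol{X} \boldsymbol{W}_h + \boldsymbol{b}_h),\\
\boldsymbol{O} &= \boldsymbol{H} \boldsymbol{W}_o + \boldsymbol{b}_o,
\end{aligned}
$$

Here $\phi$ denotes the activation function, and each $1 \times h$ (or $1 \times q$) bias row is broadcast to all $n$ rows of the minibatch. For classification, we can apply the softmax operation to the output $\boldsymbol{O}$ and use the cross-entropy loss from softmax regression.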
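The NumPy sketch below runs the two equations above on a hypothetical minibatch. It checks that $\boldsymbol{H}$ has shape $n \times h$ and $\boldsymbol{O}$ has shape $n \times q$, then applies softmax and the cross-entropy loss. ReLU is assumed for $\phi$, which matches the code in Section 3. The sizes, random data, labels, and helper names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, h, q = 4, 5, 3, 2           # hypothetical toy sizes

X = rng.normal(size=(n, d))
W_h, b_h = rng.normal(scale=0.1, size=(d, h)), np.zeros((1, h))
W_o, b_o = rng.normal(scale=0.1, size=(h, q)), np.zeros((1, q))

def relu(z):
    return np.maximum(z, 0.0)

# H = phi(X W_h + b_h); b_h (1 x h) is broadcast to each of the n rows
H = relu(X @ W_h + b_h)           # shape (n, h)
O = H @ W_o + b_o                 # shape (n, q)

def softmax(O):
    # subtracting the row max improves numerical stability without changing the result
    E = np.exp(O - O.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)

def cross_entropy(Y_hat, y):
    # batch mean of -log(predicted probability of the true class)
    return -np.mean(np.log(Y_hat[np.arange(len(y)), y]))

y = np.array([0, 1, 1, 0])        # hypothetical class labels
Y_hat = softmax(O)
print(H.shape, O.shape, cross_entropy(Y_hat, y))
```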

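For regression, we set the number of outputs of the output layer to 1 ($q = 1$). The output $\boldsymbol{O}$ is then fed directly into the squared loss used in linear regression.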
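A comparable sketch covers the regression case. It assumes the squared loss $\frac{1}{2}(\hat{y}-y)^2$ averaged over the batch; the factor $\frac{1}{2}$ is a common convention that only rescales the gradients. The data and sizes are hypothetical, and no softmax is applied to the single output.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d, h = 6, 3, 4                 # hypothetical toy sizes; q = 1 for regression

X = rng.normal(size=(n, d))
y = rng.normal(size=(n, 1))       # hypothetical real-valued targets

W_h, b_h = rng.normal(scale=0.1, size=(d, h)), np.zeros((1, h))
W_o, b_o = rng.normal(scale=0.1, size=(h, 1)), np.zeros((1, 1))

H = np.maximum(X @ W_h + b_h, 0.0)    # ReLU hidden layer, shape (n, h)
O = H @ W_o + b_o                     # one output per sample, shape (n, 1)

def squared_loss(y_hat, y):
    # 1/2 * (y_hat - y)^2, averaged over the batch
    return np.mean(0.5 * (y_hat - y) ** 2)

print(O.shape, squared_loss(O, y))
```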

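3. Detailed walkthrough of the multilayer perceptron (MLP) code (Python + PyTorch)

We use a multilayer perceptron to classify the images in the Fashion-MNIST dataset.

1. Import libraries

```python
# Classify Fashion-MNIST with a multilayer perceptron

# Import libraries
import torch
import numpy as np
import sys
from torch import nn
from torch.nn import init
import torchvision
import torchvision.transforms as transforms
```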

2. Load the Fashion-MNIST dataset and its class labels

```python
# Keep using the Fashion-MNIST dataset
mnist_train = torchvision.datasets.FashionMNIST(root='~/Desktop/OpenCV_demo/Datasets/FashionMNIST', train=True, download=True, transform=transforms.ToTensor())
mnist_test = torchvision.datasets.FashionMNIST(root='~/Desktop/OpenCV_demo/Datasets/FashionMNIST', train=False, download=True, transform=transforms.ToTensor())

# The 10 classes in the dataset: map numeric labels to text labels
def get_fashion_mnist_labels(labels):
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]
```
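Here is a quick usage check of `get_fashion_mnist_labels`. Because of `int(i)`, the function should also accept a tensor of labels, such as a batch `y` from the data loader. A plain list is used here so the example runs without downloading the dataset.

```python
def get_fashion_mnist_labels(labels):
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    # int(i) turns each label (an int, float, or tensor element) into a list index
    return [text_labels[int(i)] for i in labels]

print(get_fashion_mnist_labels([0, 9, 5]))   # ['t-shirt', 'ankle boot', 'sandal']
```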

3. Set batch_size and read the data in minibatches

```python
# Set batch_size
batch_size = 256
# Use worker processes for data loading, except on Windows
if sys.platform.startswith('win'):
    num_workers = 0
else:
    num_workers = 4
train_iter = torch.utils.data.DataLoader(
    mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
# The test set is only used for evaluation, so it does not need shuffling
test_iter = torch.utils.data.DataLoader(
    mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)
```
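To see what a data loader yields without downloading Fashion-MNIST, the sketch below swaps in a hypothetical `TensorDataset` of 1000 random 1×28×28 "images". That is the shape `transforms.ToTensor()` produces for one Fashion-MNIST image. With `batch_size=256`, the 1000 samples split into three full batches plus a last batch of $1000 - 3 \times 256 = 232$ samples, because `drop_last` defaults to `False`.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)
# Hypothetical stand-in for mnist_train: 1000 random 1x28x28 "images" with labels 0-9
images = torch.rand(1000, 1, 28, 28)
labels = torch.randint(0, 10, (1000,))
dataset = TensorDataset(images, labels)

loader = DataLoader(dataset, batch_size=256, shuffle=True, num_workers=0)

batch_sizes = []
for X, y in loader:
    # X has shape (batch, 1, 28, 28) and y has shape (batch,)
    batch_sizes.append(X.shape[0])

print(len(loader), batch_sizes)   # 4 [256, 256, 256, 232]
```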

4. Initialize the parameters W1, b1, W2, b2 (the network now has one hidden layer)

Fashion-MNIST images have shape $28 \times 28$, and there are 10 classes. As before, each image is represented as a vector of length $28 \times 28 = 784$, so the number of inputs is 784 and the number of outputs is 10. In this experiment we set the number of hidden units, a hyperparameter, to 256.

```python
# 28*28 = 784 inputs, 10 outputs (classes), 256 hidden units: a two-layer network
num_inputs, num_outputs, num_hiddens = 784, 10, 256

# Weights drawn from N(0, 0.01^2); biases initialized to zero
W1 = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_hiddens)), dtype=torch.float)
b1 = torch.zeros(num_hiddens, dtype=torch.float)
W2 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_outputs)), dtype=torch.float)
b2 = torch.zeros(num_outputs, dtype=torch.float)

params = [W1, b1, W2, b2]
for param in params:
    param.requires_grad_(requires_grad=True)
```
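As a worked check on model size, the four tensors hold $784 \times 256 + 256 + 256 \times 10 + 10 = 203{,}530$ parameters. The sketch below repeats the initialization, recomputes the count with `numel()`, and confirms that the sample standard deviation of the weights is close to 0.01.

```python
import numpy as np
import torch

num_inputs, num_outputs, num_hiddens = 784, 10, 256

# Same initialization as in the text
W1 = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_hiddens)), dtype=torch.float)
b1 = torch.zeros(num_hiddens, dtype=torch.float)
W2 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_outputs)), dtype=torch.float)
b2 = torch.zeros(num_outputs, dtype=torch.float)
params = [W1, b1, W2, b2]

# 784*256 + 256 + 256*10 + 10 = 200704 + 256 + 2560 + 10
total = sum(p.numel() for p in params)
print(total)                 # 203530
print(W1.std().item())       # sample standard deviation, close to 0.01
```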

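5. Define the activation function, the loss function, and the optimization function

```python
# Define the ReLU activation function: elementwise max(x, 0)
def relu(X):
    return torch.max(input=X, other=torch.tensor(0.0))

# Define the loss function: cross-entropy loss
# (it applies softmax internally and, by default, averages over the batch)
loss = torch.nn.CrossEntropyLoss()

# Optimization algorithm: minibatch stochastic gradient descent
def sgd(params, lr, batch_size):
    for param in params:
        param.data -= lr * param.grad / batch_size

# Alternatively, for the concise nn.Sequential network, use PyTorch's built-ins:
# loss = torch.nn.CrossEntropyLoss()
# optimizer = torch.optim.SGD(net.parameters(), lr=0.5)
```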
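This small sanity check uses hypothetical toy values. The hand-written `relu` should agree with `torch.relu`. `CrossEntropyLoss` with its default `reduction='mean'` should equal the batch mean of $-\log \mathrm{softmax}$ at the true class. Comparing it with `reduction='sum'` shows that the returned loss is already divided by the batch size.

```python
import torch

# Hand-written ReLU from the text
def relu(X):
    return torch.max(input=X, other=torch.tensor(0.0))

X = torch.tensor([[-1.5, 0.0, 2.0]])
print(relu(X), torch.relu(X))

logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0]])
y = torch.tensor([0, 2])

mean_loss = torch.nn.CrossEntropyLoss()(logits, y)                # default reduction='mean'
sum_loss = torch.nn.CrossEntropyLoss(reduction='sum')(logits, y)
# Manual cross-entropy: -log softmax probability of the true class, averaged over the batch
manual = -torch.log_softmax(logits, dim=1)[torch.arange(len(y)), y].mean()

print(mean_loss.item(), manual.item(), sum_loss.item() / len(y))
```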

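6. Define the network model: a two-layer network in which the hidden layer's output passes through the activation function before reaching the next layer

```python
# Define the model. torch.matmul performs matrix multiplication;
# adding b1 and b2 broadcasts each bias across all rows of the batch
def net(X):
    X = X.view((-1, num_inputs))   # flatten each 1x28x28 image into a 784-vector
    H = relu(torch.matmul(X, W1) + b1)
    return torch.matmul(H, W2) + b2
```

Alternatively, the network can be built more concisely with `nn.Sequential`. This replaces the hand-written `net` above. If you use it, pass an optimizer to the training function instead of `params` and `lr`:

```python
class FlattenLayer(nn.Module):
    def __init__(self):
        super(FlattenLayer, self).__init__()

    def forward(self, x):
        # flatten (batch, 1, 28, 28) into (batch, 784)
        return x.view(x.shape[0], -1)

net = nn.Sequential(
    FlattenLayer(),
    nn.Linear(num_inputs, num_hiddens),
    nn.ReLU(),
    nn.Linear(num_hiddens, num_outputs),
)

for param in net.parameters():
    init.normal_(param, mean=0, std=0.01)
```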
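One way to confirm that the two versions compute the same function is to copy the weights of an `nn.Sequential` model into hand-written `W1`, `b1`, `W2`, `b2` and compare the outputs. This sketch uses `nn.Flatten()` in place of the custom `FlattenLayer`; both flatten a `(batch, 1, 28, 28)` input the same way. Note that `nn.Linear` stores its weight with shape `(out_features, in_features)` and computes $xW^\top + b$, so `W1` is the transpose of the first layer's weight. The input batch is hypothetical.

```python
import torch
from torch import nn

torch.manual_seed(0)
num_inputs, num_outputs, num_hiddens = 784, 10, 256

# Concise model, with nn.Flatten standing in for the custom FlattenLayer
concise = nn.Sequential(
    nn.Flatten(),
    nn.Linear(num_inputs, num_hiddens),
    nn.ReLU(),
    nn.Linear(num_hiddens, num_outputs),
)

# Copy the Linear weights (transposed) into the hand-written parameters
lin1, lin2 = concise[1], concise[3]
W1, b1 = lin1.weight.detach().T, lin1.bias.detach()
W2, b2 = lin2.weight.detach().T, lin2.bias.detach()

def net(X):
    X = X.view((-1, num_inputs))
    H = torch.relu(torch.matmul(X, W1) + b1)
    return torch.matmul(H, W2) + b2

X = torch.rand(8, 1, 28, 28)        # hypothetical batch of 8 images
with torch.no_grad():
    out_manual, out_concise = net(X), concise(X)
print(out_manual.shape)             # torch.Size([8, 10])
```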

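7. Define the accuracy evaluation function

```python
# Evaluate the model's accuracy on a dataset; .item() converts a one-element Tensor to a Python number
def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    with torch.no_grad():  # gradients are not needed for evaluation
        for X, y in data_iter:
            # count the correctly classified samples
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            # y.shape[0] is the number of samples in this batch
            n += y.shape[0]
    return acc_sum / n
```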
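Here is a toy check of `evaluate_accuracy`. It uses a hypothetical identity "network", so each input row acts directly as the class scores. In the first batch, only the second sample's argmax (index 2) matches its label. The single sample in the second batch is correct. The accuracy should therefore be $2/3$.

```python
import torch

# evaluate_accuracy as defined above
def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    with torch.no_grad():
        for X, y in data_iter:
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
    return acc_sum / n

def identity_net(X):
    # hypothetical "network": returns its input, so X acts as the class scores
    return X

batches = [
    (torch.tensor([[0.1, 0.3, 0.6], [0.3, 0.2, 0.5]]), torch.tensor([0, 2])),
    (torch.tensor([[0.9, 0.05, 0.05]]), torch.tensor([0])),
]
acc = evaluate_accuracy(batches, identity_net)
print(acc)
```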

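8. Train the model

```python
# Train the model
# CrossEntropyLoss already averages over the batch, and sgd divides by batch_size again
# (which is not actually needed), so the learning rate is set large here:
# the effective step size is lr / batch_size = 100 / 256, about 0.39
num_epochs, lr = 5, 100

def train_softmax(net, train_iter, test_iter, loss, num_epochs, batch_size,
                  params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        # initialize the loss sum, the number of correct predictions, and the sample count
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()   # the loss is already a batch mean; .sum() leaves it unchanged

            # zero the gradients (through the optimizer, or directly on the parameters)
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()

            l.backward()
            if optimizer is None:
                sgd(params, lr, batch_size)
            else:
                optimizer.step()

            # train_l_sum adds up per-batch mean losses, so train_l_sum / n below
            # is roughly the mean loss divided by batch_size
            train_l_sum += l.item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f,test acc %.3f'
              % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

# If you use the concise network, pass optimizer=... instead of params and lr
train_softmax(net, train_iter, test_iter, loss, num_epochs, batch_size, params, lr)
```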

```
epoch 1, loss 0.0031, train acc 0.710,test acc 0.756
epoch 2, loss 0.0019, train acc 0.822,test acc 0.824
epoch 3, loss 0.0017, train acc 0.844,test acc 0.838
epoch 4, loss 0.0015, train acc 0.856,test acc 0.780
epoch 5, loss 0.0015, train acc 0.863,test acc 0.843
```
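The learning-rate comment in the training code can be checked directly. This sketch uses a single hypothetical linear layer on random data. One step of the hand-written `sgd` with `lr=100` and `batch_size=256`, applied to the mean-reduced loss, should move the weights exactly as far as `torch.optim.SGD` with `lr=100/256` (0.390625). The example also sketches roughly what training looks like after switching to a built-in optimizer.

```python
import torch

# Hand-written minibatch SGD from the text
def sgd(params, lr, batch_size):
    for param in params:
        param.data -= lr * param.grad / batch_size

torch.manual_seed(0)
batch_size = 256
X = torch.rand(batch_size, 784)              # hypothetical flattened images
y = torch.randint(0, 10, (batch_size,))      # hypothetical labels
loss = torch.nn.CrossEntropyLoss()           # mean over the batch

# Two copies of the same hypothetical weight matrix (a single linear layer)
W_a = (torch.randn(784, 10) * 0.01).requires_grad_()
W_b = W_a.detach().clone().requires_grad_()
W_init = W_a.detach().clone()

# (a) hand-written sgd with lr=100, which divides by batch_size again
loss(X @ W_a, y).backward()
sgd([W_a], lr=100, batch_size=batch_size)

# (b) built-in optimizer with the effective learning rate 100 / 256
optimizer = torch.optim.SGD([W_b], lr=100 / batch_size)
optimizer.zero_grad()
loss(X @ W_b, y).backward()
optimizer.step()

print(torch.allclose(W_a, W_b, atol=1e-6))
```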

[Complete code]

```python
# Classify Fashion-MNIST with a multilayer perceptron

# Import libraries
import torch
import numpy as np
import sys
from torch import nn
from torch.nn import init
import torchvision
import torchvision.transforms as transforms

# Keep using the Fashion-MNIST dataset
mnist_train = torchvision.datasets.FashionMNIST(root='~/Desktop/OpenCV_demo/Datasets/FashionMNIST', train=True, download=True, transform=transforms.ToTensor())
mnist_test = torchvision.datasets.FashionMNIST(root='~/Desktop/OpenCV_demo/Datasets/FashionMNIST', train=False, download=True, transform=transforms.ToTensor())

# The 10 classes in the dataset: map numeric labels to text labels
def get_fashion_mnist_labels(labels):
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]

# Set batch_size
batch_size = 256
# Use worker processes for data loading, except on Windows
if sys.platform.startswith('win'):
    num_workers = 0
else:
    num_workers = 4
train_iter = torch.utils.data.DataLoader(
    mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
# The test set is only used for evaluation, so it does not need shuffling
test_iter = torch.utils.data.DataLoader(
    mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)

# 28*28 = 784 inputs, 10 outputs (classes), 256 hidden units: a two-layer network
num_inputs, num_outputs, num_hiddens = 784, 10, 256
W1 = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_hiddens)), dtype=torch.float)
b1 = torch.zeros(num_hiddens, dtype=torch.float)
W2 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_outputs)), dtype=torch.float)
b2 = torch.zeros(num_outputs, dtype=torch.float)
params = [W1, b1, W2, b2]
for param in params:
    param.requires_grad_(requires_grad=True)

# Define the ReLU activation function
def relu(X):
    return torch.max(input=X, other=torch.tensor(0.0))

# Define the model. torch.matmul performs matrix multiplication;
# adding b1 and b2 broadcasts each bias across all rows of the batch
def net(X):
    X = X.view((-1, num_inputs))
    H = relu(torch.matmul(X, W1) + b1)
    return torch.matmul(H, W2) + b2

# Define the loss function (averages over the batch by default)
loss = torch.nn.CrossEntropyLoss()

# Optimization algorithm: minibatch stochastic gradient descent
def sgd(params, lr, batch_size):
    for param in params:
        param.data -= lr * param.grad / batch_size

# Evaluate the model's accuracy on a dataset; .item() converts a one-element Tensor to a Python number
def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    with torch.no_grad():
        for X, y in data_iter:
            # count the correctly classified samples
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            # y.shape[0] is the number of samples in this batch
            n += y.shape[0]
    return acc_sum / n

# Train the model
# CrossEntropyLoss already averages over the batch, and sgd divides by batch_size again
# (which is not actually needed), so the learning rate is set large here:
# the effective step size is lr / batch_size = 100 / 256, about 0.39
num_epochs, lr = 5, 100

def train_softmax(net, train_iter, test_iter, loss, num_epochs, batch_size,
                  params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        # initialize the loss sum, the number of correct predictions, and the sample count
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()

            # zero the gradients (through the optimizer, or directly on the parameters)
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()

            l.backward()
            if optimizer is None:
                sgd(params, lr, batch_size)
            else:
                optimizer.step()

            train_l_sum += l.item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f,test acc %.3f'
              % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

train_softmax(net, train_iter, test_iter, loss, num_epochs, batch_size, params, lr)
```

References:

Dive into Deep Learning (动手学深度学习)