生成对抗方式解决医学图像重建 Adversarially learned iterative reconstruction for imaging inverse problems

Introduction

Problem description

In the area of medical image reconstruction, we want to recover x ∗ ∈ X x^{*} \in \mathbb{X} x∗∈X containing critic information in the polar coordinate from data

y = A ( x ∗ ) + e ∈ Y y=\mathcal{A}\left(x^{*}\right)+e \in \mathbb{Y} y=A(x∗)+e∈Y, which exert a transform like the Randon Transforms to original data with noise e ∈ Y e \in \mathbb{Y} e∈Y,and often demonstrated in a sinogram. Both the forward operator and the distribution of the noise are typically known. Yet the inverse problem are always ill-posed,meaning that there’re more than one parameters for model due to inadequate information of measurement and we cannot recover precisely the original image.

Reserch overview

traditional method to tackle the inverse problem is variational reconstruction, combining the Euclidean distance and a regularizer.

min x ∈ X ∥ y − A ( x ) ∥ 2 2 + λ R ( x ) \min _{\boldsymbol{x} \in \mathbb{X}}\|\boldsymbol{y}-\mathcal{A}(\boldsymbol{x})\|_{2}^{2}+\lambda \mathcal{R}(\boldsymbol{x}) minx∈X∥y−A(x)∥22+λR(x)

the regularizer is effective by penalizing unlikely solutions and restore prior knowledge about the image.

With the development of DL,data-driven methods are proposed,and can be classified as follows:

- end-to-end over parameterized models,which need large amount of paired examples

- learn a regularizer encoding prior knowledge of image by networks and apply it to reconstruction tasks

Yet, all of them are faced with problems like difficulty in obtaining data, overfitting, poor genalization ability in unseen data and high computational expenses

Main contribution

loss function

Instead of training the reconstruction model in a supervised way that we train it with paired training examples { x i , y i } i = 1 N \{x_i,y_i\}_{i=1}^{N} {xi,yi}i=1N ,we propose an unsupervised method based on probabilistic models on X and Y and evaluate the reconstruction quality by the wasserstein distance W ( ( G θ ) # π Y , π X ) \mathcal{W}\left(\left(\mathcal{G}_{\theta}\right)_{\#} \pi_{Y}, \pi_{X}\right) W((Gθ)#πY,πX).The wasserstein distance is defined as W p ( μ , ν ) = ( inf γ ∈ Γ ( μ , ν ) E ( x , y ) ∼ γ d ( x , y ) p ) 1 / p W_{p}(\mu, \nu)=\left(\inf _{\gamma \in \Gamma(\mu, \nu)} \mathbf{E}_{(x, y) \sim \gamma} d(x, y)^{p}\right)^{1 / p} Wp(μ,ν)=(infγ∈Γ(μ,ν)E(x,y)∼γd(x,y)p)1/p. It can measure thedifferent of two distribution and take their geometric characteristics into account. In this way,we not necessarily need paired examples for training. We take advantage of the wasserstein distance for our regularizer. Moreover, G θ \mathcal{G}_{\theta} Gθshould be approximate to both right-inverse and left-inverse of forward operator A \mathcal{A} A.

J ( θ ) = W ( ( G θ ) # π Y , π X ) + λ Y E π Y [ ∥ A ( G θ ( Y ) ) − Y ∥ 2 2 ] + λ X E π X [ ∥ G θ ( A ( X ) ) − X ∥ 2 2 ] \begin{aligned} J(\theta)=\mathcal{W}\left(\left(\mathcal{G}_{\theta}\right)_{\#} \pi_{Y}, \pi_{X}\right)+\lambda_{\mathbb{Y}} \mathbb{E}_{\pi_{Y}}[&\left.\left\|\mathcal{A}\left(\mathcal{G}_{\theta}(Y)\right)-Y\right\|_{2}^{2}\right] &+\lambda_{\mathbb{X}} \mathbb{E}_{\pi_{X}}\left[\left\|\mathcal{G}_{\theta}(\mathcal{A}(X))-X\right\|_{2}^{2}\right] \end{aligned} J(θ)=W((Gθ)#πY,πX)+λYEπY[∥A(Gθ(Y))−Y∥22]+λXEπX[∥Gθ(A(X))−X∥22]

When we implement the training the model, we make use of the Kantorovich-Rubinstein (KR) duality for approximating the wasserstein distance (proof) as:

L ( R ) = E π X [ R ( X ) ] − E π Y [ R ( G θ ( Y ) ) ] \mathcal{L}(\mathcal{R})=\mathbb{E}_{\pi_{X}}[\mathcal{R}(X)]-\mathbb{E}_{\pi_{Y}}\left[\mathcal{R}\left(\mathcal{G}_{\theta}(Y)\right)\right] L(R)=EπX[R(X)]−EπY[R(Gθ(Y))]where inf R L ( R ) = − W ( ( G θ ) # π Y , π X ) \inf _{\mathcal{R}} \mathcal{L}(\mathcal{R})=-\mathcal{W}\left(\left(\mathcal{G}_{\theta}\right)_{\#} \pi_{Y}, \pi_{X}\right) RinfL(R)=−W((Gθ)#πY,πX)or simplified as W ( ( G θ ) # π Y , π X ) = sup E π X [ D α ( X ) ] − E ( G θ ) # π Y [ D α ( X ) ] where D α ∈ L 1 . \mathcal{W}\left(\left(\mathcal{G}_{\theta}\right)_{\#} \pi_{Y}, \pi_{X}\right)=\sup \mathbb{E}_{\pi_{X}}\left[\mathcal{D}_{\alpha}(X)\right]-\mathbb{E}_{\left(\mathcal{G}_{\theta}\right)_{\#} \pi_{Y}}\left[\mathcal{D}_{\alpha}(X)\right] \text { where } \mathcal{D}_{\alpha} \in \mathbb{L}_{1} \text {. } W((Gθ)#πY,πX)=supEπX[Dα(X)]−E(Gθ)#πY[Dα(X)] where Dα∈L1.

In this sense, we set a descriminator in the GAN as a regularizer aiming at maximize the accuracy of desciminating a sample from π X \pi_{X} πX and π Y \pi_{Y} πY,wherein the 1-Lipschitz condition is enforced by penalizing the gradient of the critic network ( ∥ ∇ D α ( x ) ∥ 2 − 1 ) 2 (\left.\left\|\nabla \mathcal{D}_{\alpha}\left(\boldsymbol{x}\right)\right\|_{2}-1\right)^{2} (∥∇Dα(x)∥2−1)2

From maximum-likehood perspective,except for the measurement of different distribution, the loss function reminiscent the tractable evidence lower-bound (ELBO) of the maximum-likehood

max θ [ 1 n 1 ∑ i = 1 n 1 log π X ( θ ) ( x i ) + 1 n 2 ∑ i = 1 n 2 log π Y ( θ ) ( y i ) ] \max _{\theta}\left[\frac{1}{n_{1}} \sum_{i=1}^{n_{1}} \log \pi_{X}^{(\theta)}\left(\boldsymbol{x}_{i}\right)+\frac{1}{n_{2}} \sum_{i=1}^{n_{2}} \log \pi_{Y}^{(\theta)}\left(\boldsymbol{y}_{i}\right)\right] maxθ[n11∑i=1n1logπX(θ)(xi)+n21∑i=1n2logπY(θ)(yi)]

denoted by

min θ K L ( π X ∣ Y = y ( r ) , π X ) + 1 2 σ e 2 ∥ y − A ( G θ ( y ) ) ∥ 2 2 + 1 2 σ 2 2 ∥ x − G θ ( A ( x ) ) ∥ 2 2 \min _{\theta} K L\left(\pi_{X \mid Y=\boldsymbol{y}}^{(r)}, \pi_{X}\right)+\frac{1}{2 \sigma_{e}^{2}}\left\|\boldsymbol{y}-\mathcal{A}\left(\mathcal{G}_{\theta}(\boldsymbol{y})\right)\right\|_{2}^{2}+\frac{1}{2 \sigma_{2}^{2}}\left\|\boldsymbol{x}-\mathcal{G}_{\theta}(\mathcal{A}(\boldsymbol{x}))\right\|_{2}^{2} minθKL(πX∣Y=y(r),πX)+2σe21∥y−A(Gθ(y))∥22+2σ221∥x−Gθ(A(x))∥22

Parameterizing the model

Learned primal-dual algorithm

The architecture of G θ \mathcal{G}_{\theta} Gθ is built upon the idea of iterative unrolling(seminal work), and adopt the learned primal-dual algorithm, which is proposed to solve wide range of inverse problems min f ∈ X [ F ( K ( f ) ) + G ( f ) ] \min _{f \in X}[\mathcal{F}(\mathcal{K}(f))+\mathcal{G}(f)] minf∈X[F(K(f))+G(f)].

As G \mathcal{G} G is typically non-smooth, an proximal operator defined by prox τ G ( f ) = arg min f ′ ∈ X [ G ( f ′ ) + 1 2 τ ∥ f ′ − f ∥ X 2 ] \operatorname{prox}_{\tau \mathcal{G}}(f)=\underset{f^{\prime} \in X}{\arg \min }\left[\mathcal{G}\left(f^{\prime}\right)+\frac{1}{2 \tau}\left\|f^{\prime}-f\right\|_{X}^{2}\right] proxτG(f)=f′∈Xargmin[G(f′)+2τ1∥f′−f∥X2] is employed, which is regarded as extensive form of gradient descent. Conjugate function has good properties, so it is used to induce a primal dual hybrid gradient to achieve the optimal goal min x max y G ( x ) + ⟨ y , K x ⟩ − F ∗ ( y ) \min _{x} \max _{y} \mathcal{G}(x)+\langle y, \mathcal{K}x\rangle-\mathcal{F}^{*}(y) minxmaxyG(x)+⟨y,Kx⟩−F∗(y) . The optimality condition is

{ 0 ∈ ∂ G ( x ) + ∂ K ( x ) T y 0 ∈ K ( x ) − ∂ F ∗ ( y ) \left\{\begin{array}{l} 0\in \partial \mathcal{G}(x)+\partial \mathcal{K}(x)^{T} y \\ 0 \in \mathcal{K} (x)-\partial \mathcal{F}^{*}(y) \end{array}\right. {0∈∂G(x)+∂K(x)Ty0∈K(x)−∂F∗(y)

Through fixed point iteration, a non-linear primal dual hybrid gradient method is proposed to solve the problem.And considering the covergence of the algorithm, it also gives initialization constraints.

In data driven deep learning method, all of the parameters including proximal operator p r o x prox prox,step length σ \sigma σ and τ \tau τ , overrelaxation parameter γ \gamma γ are learned through deep neural network. The architecture of the model follows the scheme of iterative alternating update.

The Learned Primal-Dual model is trained using a learning rate scheme according to cosine annealing η t = η 0 2 ( 1 + cos ( π t t max ) ) \eta_{t}=\frac{\eta_{0}}{2}\left(1+\cos \left(\pi \frac{t}{t_{\max }}\right)\right) ηt=2η0(1+cos(πtmaxt)).The paper also gives modifications to the model that allow it to combine previous iterative result to obtain the learned result by extend the dual (and primal) space from U U U to U N d u a l U^{N_{dual }} UNdual.

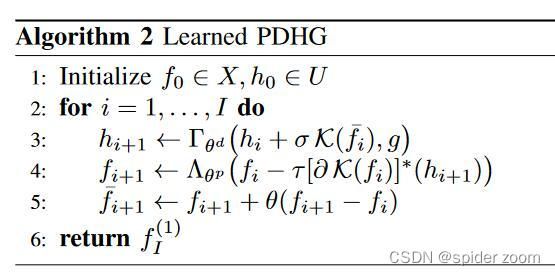

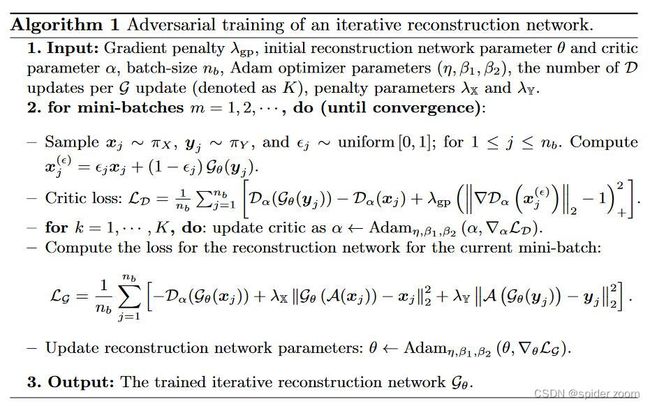

algorithm proposed

Reconstruction network and critic network are established and they’re trained in an adversarial and alternating way. First, model parameters, including critic network parameters and reconstruction parameters,max iterations,penalty parameters,are set as the input. Then we sample original data and measured data from respecive marginal distributions ,and train critic network and update critic parameters for k k k times. Finally we train reconstruction network and update reconstruction parameters for once.

Following the settings in the Learned PDHG,the reconstruction model G θ \mathcal{G}_{\theta} Gθ is conputed by applying h ( ℓ + 1 ) = Γ θ d ( ℓ ) ( h ( ℓ ) , σ ( ℓ ) A ( x ℓ ) , y ) \boldsymbol{h}^{(\ell+1)}=\Gamma_{\theta_{d}^{(\ell)}}\left(\boldsymbol{h}^{(\ell)}, \sigma^{(\ell)} \mathcal{A}\left(\boldsymbol{x}^{\ell}\right), \boldsymbol{y}\right) h(ℓ+1)=Γθd(ℓ)(h(ℓ),σ(ℓ)A(xℓ),y) and x ( ℓ + 1 ) = Λ θ p ( ℓ ) ( x ( ℓ ) , τ ( ℓ ) A ∗ ( h ℓ + 1 ) ) \boldsymbol{x}^{(\ell+1)}=\Lambda_{\theta_{\mathrm{p}}^{(\ell)}}\left(\boldsymbol{x}^{(\ell)}, \tau^{(\ell)} \mathcal{A}^{*}\left(\boldsymbol{h}^{\ell+1}\right)\right) x(ℓ+1)=Λθp(ℓ)(x(ℓ),τ(ℓ)A∗(hℓ+1)) for L L L times and h ( 0 ) = 0 \boldsymbol{h}^{(0)}=0 h(0)=0, x ( 0 ) \boldsymbol{x}^{(0)} x(0) is the filtered back projection(FBP) reconstruction. The critic model D α \mathcal{D}_{\alpha} Dα is simply a feed-forward CNN with four cascaded layer.

Numerical results and conclusion

We consider sparse CT reconstruction,classical inverse problem.We generate a set of 2000 2D phantoms containing 5 ellipses placed randomly, each of which has density chosen uniformly at random in the range [0.1,1]. Another dataset consisting of 2250 2D slices extracted from human abdominal CT scans. We conduct comparision study of ALPD with state-of-art model-driven and data-driven methods like FBP,LPD and TV.We take PSNR, SSIM, running time and number of parameters as comparision indicators, and we also evaluate reconstruction visually and compare their texture details.We draw conclusions as below.

- ALPD performs almost identical to the total-variation (TV) method in terms of reproduce the ellipses area delineated by sharp boundaries, and well discern the small tumors, outperforming LPD.

- ALPD is superior to LPD in recovering clinically important features, since it does well in delineation.

- ALPD have less parameters than AR TV, and FBP+UNet, so it may need less scale dataset. ALPD performs similar to LPD in running time, PSNR and SSIM, but it can be trained against unsupervised data.

References

[1] Adversarially Learned Iterative Reconstruction for Imaging Inverse Problems. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds) Scale Space and Variational Methods in Computer Vision. SSVM 2021.

[2]J. Adler and O. Öktem, “Learned Primal-Dual Reconstruction,” in IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1322-1332, June 2018, doi: 10.1109/TMI.2018.2799231.