OpenCV for Deep Learning: Introductory Notes

I. Basic Image Operations

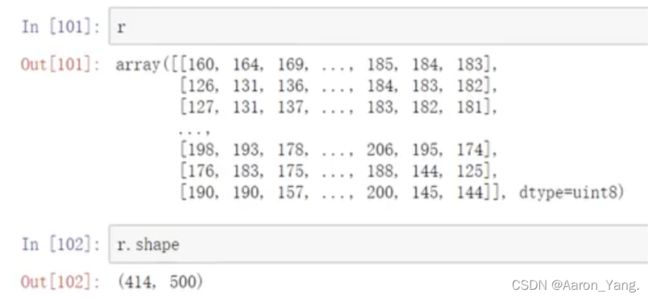

(1) Reading an image

import cv2
import numpy as np

img = cv2.imread("xx.jpg")

img is a NumPy ndarray. If the file cannot be read, cv2.imread returns None instead of raising an error.

(2) Displaying an image

It can be called multiple times to create multiple windows (one per window name).

cv2.imshow("image",img)

(3) Waiting for a key

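The delay is in milliseconds: 0 waits indefinitely until any key is pressed, while e.g. 10000 returns after 10 seconds if no key is pressed; cv2.destroyAllWindows() then closes the windows.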

cv2.waitKey(0)

cv2.destroyAllWindows()

(4) Image attributes

img.shape

(414, 500, 3) means H, W, C (height, width, channels). OpenCV stores the channels in BGR order, not the usual RGB order.

(5) Reading a grayscale image

img = cv2.imread('xx.jpg',cv2.IMREAD_GRAYSCALE)

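Note: a grayscale image's shape has only two dimensions (H, W), because it has a single intensity channel instead of three color channels.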
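The shape change can be illustrated without an image file. A minimal NumPy sketch, assuming the BT.601 luma weights (Y = 0.299 R + 0.587 G + 0.114 B) that OpenCV documents for cv2.COLOR_BGR2GRAY; bgr_to_gray is a hypothetical helper, and IMREAD_GRAYSCALE converts inside the image decoder, so its values can differ slightly:

```python
import numpy as np

def bgr_to_gray(img):
    """Hypothetical helper: weighted sum of the B, G, R channels (assumed BT.601 weights), rounded to uint8."""
    b = img[..., 0].astype(np.float64)
    g = img[..., 1].astype(np.float64)
    r = img[..., 2].astype(np.float64)
    y = 0.114 * b + 0.587 * g + 0.299 * r
    return np.clip(np.rint(y), 0, 255).astype(np.uint8)

# A 414x500 color image in BGR order, filled with pure red
img = np.zeros((414, 500, 3), dtype=np.uint8)
img[..., 2] = 255

gray = bgr_to_gray(img)
print(img.shape, gray.shape)  # (414, 500, 3) (414, 500)
print(gray[0, 0])             # 76
```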

(6) Saving an image

cv2.imwrite('xx.png',img)

(7) Cropping part of an image

img = cv2.imread('cat.jpg')

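# Crop rows 0-49 and columns 0-199, i.e. H = 50, W = 200

cat = img[0:50,0:200]

# Helper used in this part: show an image and wait for a key press (argument order: name, img)
def cv_show(name, img):
    cv2.imshow(name, img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

cv_show('cat',cat)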

(8) Extracting color channels

b,g,r = cv2.split(img)

b, g, r contain different values but have the same shape (H, W).

# Merge the channels back

img = cv2.merge((b,g,r))

# Keep only R: in BGR order, set B (channel 0) and G (channel 1) to 0

cur_img = img.copy()

cur_img[:,:,0] = 0

cur_img[:,:,1] = 0

cv_show('R',cur_img)

# Keep only G: set B (channel 0) and R (channel 2) to 0

cur_img = img.copy()

cur_img[:,:,0] = 0

cur_img[:,:,2] = 0

cv_show('G',cur_img)

# Keep only B: set G (channel 1) and R (channel 2) to 0

cur_img = img.copy()

cur_img[:,:,1] = 0

cur_img[:,:,2] = 0

cv_show('B',cur_img)
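Because img is an ndarray, the same channel operations can also be written with plain NumPy indexing. A small sketch on a hand-made 1x2 BGR image (the pixel values are made up for illustration); note that slices like img[:, :, 0] are views into img, whereas cv2.split returns copies:

```python
import numpy as np

# A 1x2 "image" in OpenCV's BGR order: one pure-blue pixel, one pure-red pixel
img = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

# NumPy equivalents of cv2.split / cv2.merge
b, g, r = img[:, :, 0], img[:, :, 1], img[:, :, 2]
merged = np.dstack((b, g, r))

# Keep only R: zero channel 0 (B) and channel 1 (G)
only_r = img.copy()
only_r[:, :, 0] = 0
only_r[:, :, 1] = 0

# Reversing the last axis turns BGR into RGB (useful before plt.imshow)
rgb = img[:, :, ::-1]

print(only_r.tolist())  # [[[0, 0, 0], [0, 0, 255]]]
print(rgb.tolist())     # [[[0, 0, 255], [255, 0, 0]]]
```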

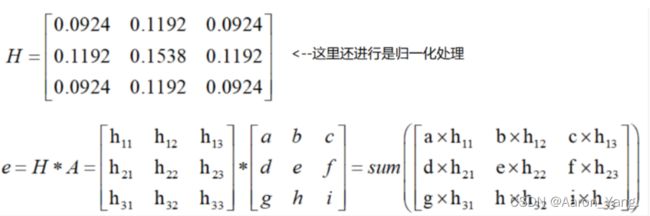

Convolution: extracting features from an image

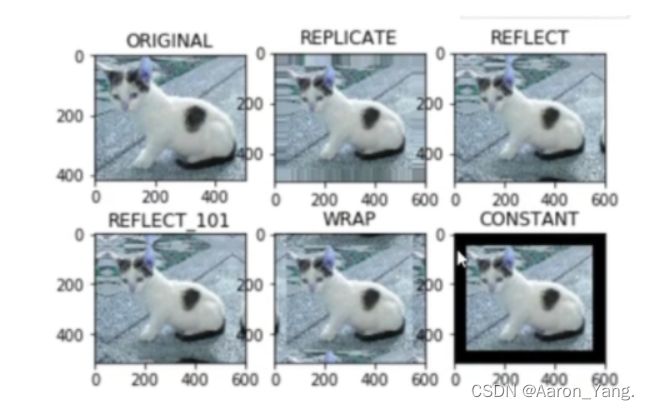

(9) Border padding

top_size,bottom_size,left_size,right_size = (50,50,50,50)

replicate = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size, borderType=cv2.BORDER_REPLICATE)

reflect = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size,cv2.BORDER_REFLECT)

reflect101 = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size, cv2.BORDER_REFLECT_101)

wrap = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size, cv2.BORDER_WRAP)

constant = cv2.copyMakeBorder(img, top_size, bottom_size, left_size, right_size,cv2.BORDER_CONSTANT, value=0)

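import matplotlib.pyplot as plt

# matplotlib expects RGB, so these BGR images appear with red and blue swapped
# (use cv2.cvtColor(img, cv2.COLOR_BGR2RGB) to see the true colors)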

plt.subplot(231), plt.imshow(img, 'gray'), plt.title('ORIGINAL')

plt.subplot(232), plt.imshow(replicate, 'gray'), plt.title('REPLICATE')

plt.subplot(233), plt.imshow(reflect, 'gray'), plt.title('REFLECT')

plt.subplot(234), plt.imshow(reflect101, 'gray'), plt.title('REFLECT_101')

plt.subplot(235), plt.imshow(wrap, 'gray'), plt.title('WRAP')

plt.subplot(236), plt.imshow(constant, 'gray'), plt.title('CONSTANT')

plt.show()

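- BORDER_REPLICATE: replication, copies the outermost edge pixel, e.g. aaaaaa|abcdefgh|hhhhhhh

- BORDER_REFLECT: reflection, mirrors the pixels at the border (the edge pixel is repeated), e.g. fedcba|abcdefgh|hgfedcb

- BORDER_REFLECT_101: reflection about the edge pixel as the axis (the edge pixel is not repeated), e.g. gfedcb|abcdefgh|gfedcba

- BORDER_WRAP: wrap-around, e.g. cdefgh|abcdefgh|abcdefg

- BORDER_CONSTANT: fills the border with a constant value.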
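The five patterns can be checked on a single row of pixels. This sketch uses NumPy's np.pad rather than cv2.copyMakeBorder; the mode mapping in the dictionary is an assumed correspondence (edge for REPLICATE, symmetric for REFLECT, reflect for REFLECT_101, wrap for WRAP, constant for CONSTANT):

```python
import numpy as np

# One row of 8 pixels; the values 1..8 stand for a..h
row = np.arange(1, 9)
pad = 3  # pad 3 pixels on each side

# Assumed np.pad mode for each OpenCV border type
modes = {
    "REPLICATE": "edge",       # aaa|abcdefgh|hhh
    "REFLECT": "symmetric",    # cba|abcdefgh|hgf
    "REFLECT_101": "reflect",  # dcb|abcdefgh|gfe
    "WRAP": "wrap",            # fgh|abcdefgh|abc
    "CONSTANT": "constant",    # 000|abcdefgh|000
}

padded = {name: np.pad(row, pad, mode=mode) for name, mode in modes.items()}
for name, arr in padded.items():
    print(f"{name:12s}", arr.tolist())
```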

(10) Arithmetic on images

img_cat=cv2.imread('cat.jpg')

img_dog=cv2.imread('dog.jpg')

img_cat2 = img_cat + 10  # adds 10 to every pixel value (element-wise on the ndarray)

# First 5 rows, channel 0 (the B channel, since OpenCV stores BGR)

img_cat[:5,:,0]

# uint8 '+' wraps around: the result is (a + b) % 256, so values above 255 overflow

(img_cat + img_cat2)[:5,:,0]

# cv2.add saturates instead: any result above 255 is set to 255

cv2.add(img_cat,img_cat2)[:5,:,0]
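The difference between the two kinds of addition shows up on a few hand-picked uint8 values. A NumPy sketch; the saturating line only emulates the clipping behavior of cv2.add by widening to int16 before adding:

```python
import numpy as np

a = np.array([200, 250, 100], dtype=np.uint8)
b = np.array([100, 10, 100], dtype=np.uint8)

# '+' on uint8 arrays wraps around modulo 256, like img_cat + img_cat2
wrapped = a + b

# Saturating add (emulating cv2.add): widen to int16, add, then clip to the uint8 range
saturated = np.clip(a.astype(np.int16) + b.astype(np.int16), 0, 255).astype(np.uint8)

print(wrapped.tolist())    # [44, 4, 200]
print(saturated.tolist())  # [255, 255, 200]
```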

(11) Image blending

img_cat + img_dog cannot be computed directly, because the two images have different shapes.

First resize them to the same shape.

img_cat.shape

img_dog = cv2.resize(img_dog, (500, 414))  # dsize is (width, height), giving shape (414, 500, 3)

img_dog.shape

# Weighted blend: dst = α·x1 + β·x2 + γ, where γ is a brightness offset

res = cv2.addWeighted(img_cat, 0.4, img_dog, 0.6, 0)

plt.imshow(res)
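The formula behind cv2.addWeighted is dst = saturate(α·src1 + β·src2 + γ). A NumPy sketch of it on two small constant images; add_weighted is a hypothetical stand-in, and it deliberately rejects mismatched shapes, which is why the dog image is resized first:

```python
import numpy as np

def add_weighted(src1, alpha, src2, beta, gamma):
    """Hypothetical stand-in for cv2.addWeighted: saturate(alpha*src1 + beta*src2 + gamma)."""
    if src1.shape != src2.shape:
        raise ValueError("images must have the same shape; resize one of them first")
    out = alpha * src1.astype(np.float64) + beta * src2.astype(np.float64) + gamma
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)  # round, then clip to uint8

cat = np.full((2, 2, 3), 100, dtype=np.uint8)  # made-up flat "cat" image
dog = np.full((2, 2, 3), 200, dtype=np.uint8)  # made-up flat "dog" image
res = add_weighted(cat, 0.4, dog, 0.6, 0)
print(res[0, 0].tolist())  # [160, 160, 160]
```

A large γ brightens the whole result and can push pixels into saturation at 255.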

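# Another way to resize: scale factors instead of a fixed size; dsize=(0, 0) with fx=3, fy=1 stretches the width 3x and keeps the height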

res = cv2.resize(img, (0, 0), fx=3, fy=1)

plt.imshow(res)

(12) Image thresholding

#### ret, dst = cv2.threshold(src, thresh, maxval, type)

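- src: input image, usually a single-channel (grayscale) image

- dst: output image (ret is the threshold value that was used)

- thresh: threshold value

- maxval: value assigned to pixels above the threshold (or below it, depending on type)

- type: the thresholding operation; the five basic types are cv2.THRESH_BINARY, cv2.THRESH_BINARY_INV, cv2.THRESH_TRUNC, cv2.THRESH_TOZERO, cv2.THRESH_TOZERO_INV

- cv2.THRESH_BINARY: pixels above the threshold become maxval, all others 0

- cv2.THRESH_BINARY_INV: the inverse of THRESH_BINARY

- cv2.THRESH_TRUNC: pixels above the threshold are set to the threshold, all others unchanged

- cv2.THRESH_TOZERO: pixels above the threshold are unchanged, all others set to 0

- cv2.THRESH_TOZERO_INV: the inverse of THRESH_TOZERO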
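The five rules above can be written as element-wise NumPy expressions. A minimal sketch (the threshold function and the sample pixel values are hypothetical); like cv2.threshold, it treats "above the threshold" as strictly greater than thresh:

```python
import numpy as np

def threshold(src, thresh, maxval, kind):
    """Hypothetical NumPy version of the five basic threshold types."""
    above = src > thresh  # strictly greater than the threshold
    if kind == "BINARY":
        return np.where(above, maxval, 0)
    if kind == "BINARY_INV":
        return np.where(above, 0, maxval)
    if kind == "TRUNC":
        return np.where(above, thresh, src)
    if kind == "TOZERO":
        return np.where(above, src, 0)
    if kind == "TOZERO_INV":
        return np.where(above, 0, src)
    raise ValueError(f"unknown type: {kind}")

px = np.array([0, 100, 127, 128, 200, 255])  # sample gray values
kinds = ["BINARY", "BINARY_INV", "TRUNC", "TOZERO", "TOZERO_INV"]
results = {kind: threshold(px, 127, 255, kind) for kind in kinds}
for kind, out in results.items():
    print(f"{kind:10s}", out.tolist())
```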


img_gray = cv2.imread('cat.jpg', cv2.IMREAD_GRAYSCALE)

ret, thresh1 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_BINARY)

ret, thresh2 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_BINARY_INV)

ret, thresh3 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_TRUNC)

ret, thresh4 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_TOZERO)

ret, thresh5 = cv2.threshold(img_gray, 127, 255, cv2.THRESH_TOZERO_INV)

titles = ['Original Image', 'BINARY', 'BINARY_INV', 'TRUNC', 'TOZERO', 'TOZERO_INV']

images = [img_gray, thresh1, thresh2, thresh3, thresh4, thresh5]

for i in range(6):
    plt.subplot(2, 3, i + 1), plt.imshow(images[i], 'gray')
    plt.title(titles[i])
    plt.xticks([]), plt.yticks([])

plt.show()

II. Basic Video Operations

(1) Reading a video

cv2.VideoCapture can capture from a camera; the device is selected by an integer index, e.g. 0 or 1.

For a video file, just pass its path.

vc = cv2.VideoCapture('xxx.mp4')

# Check whether the video was opened successfully
if vc.isOpened():
    # read() returns (ok, frame): ok is True if a frame was read, and frame is one image,
    # just like img above; the loop below keeps reading the frames one by one
    is_open, frame = vc.read()
else:
    is_open = False

while is_open:
    ret, frame = vc.read()
    if frame is None:  # no more frames, exit
        break
    if ret:
        # Convert each frame to a grayscale image
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        # Show the result
        cv2.imshow('result', gray)
        # Wait 100 ms per frame (adjust for the playback speed you want; 10 is common);
        # 27 is the Esc key code, so pressing Esc exits
        if cv2.waitKey(100) & 0xFF == 27:
            break

vc.release()
cv2.destroyAllWindows()

III. Image Processing

(1) Image smoothing

# The original noisy image

img = cv2.imread('lenaNoise.png')

cv2.imshow('img', img)

cv2.waitKey(0)

cv2.destroyAllWindows()

# Box filter

# With normalize=True it is the same as the mean (average) filter

box = cv2.boxFilter(img,-1,(3,3), normalize=True)

cv2.imshow('box', box)

cv2.waitKey(0)

cv2.destroyAllWindows()

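# Box filter without normalization

# With normalize=False the window sum is not divided by the kernel area, so results easily exceed 255;
# for uint8 output they are saturated to 255 and show up as white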

box = cv2.boxFilter(img,-1,(3,3), normalize=False)

cv2.imshow('box', box)

cv2.waitKey(0)

cv2.destroyAllWindows()
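A quick check of the arithmetic for one made-up 3x3 window whose pixels are all 100: the normalized box filter divides the sum by 9, while the unnormalized one keeps the raw sum, which then has to be saturated to fit in uint8:

```python
import numpy as np

window = np.full((3, 3), 100, dtype=np.uint8)  # one 3x3 neighborhood
total = int(window.astype(np.int32).sum())     # 900: sum without overflow

normalized = total / window.size               # 100.0, same as the mean filter
unnormalized = min(total, 255)                 # 255 after saturation, i.e. white
print(normalized, unnormalized)                # 100.0 255
```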

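# Gaussian filter

# The kernel weights follow a Gaussian distribution, so the center counts the most:

# pixels closer to the center get larger weights, farther pixels smaller ones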

aussian = cv2.GaussianBlur(img, (5, 5), 1)

cv2.imshow('aussian', aussian)

cv2.waitKey(0)

cv2.destroyAllWindows()
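To see the center-weighted kernel, it can be built directly. A NumPy sketch, assuming the sampled Gaussian exp(-x²/2σ²) normalized to sum 1 and made 2-D as an outer product (cv2.getGaussianKernel documents this formula for a positive sigma); gaussian_kernel is a hypothetical helper, so treat the exact values as illustrative:

```python
import numpy as np

def gaussian_kernel(ksize, sigma):
    """Sampled 2-D Gaussian kernel: weights sum to 1 and are largest at the center."""
    half = ksize // 2
    x = np.arange(-half, half + 1, dtype=np.float64)
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))
    g /= g.sum()           # normalize the 1-D kernel
    return np.outer(g, g)  # separable: 2-D kernel = outer product of the 1-D kernel

k = gaussian_kernel(5, 1.0)  # same size and sigma as cv2.GaussianBlur(img, (5, 5), 1)
print(np.round(k, 3))
```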

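# Median filter

# Replaces each pixel with the median of its neighborhood:

# e.g. in a 3x3 window of 9 values, the center becomes the median of those 9 values (best result on this salt-and-pepper noise)

median = cv2.medianBlur(img, 5)  # 5x5 median filter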

cv2.imshow('median', median)

cv2.waitKey(0)

cv2.destroyAllWindows()
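Why the median filter does best on salt-and-pepper noise: a single extreme pixel drags the mean of every window that contains it, but not the median. A NumPy sketch on a made-up 5x5 patch with one noisy pixel; neighborhoods3 is a hypothetical helper that pads borders by replicating edge pixels, which is an assumption for this example:

```python
import numpy as np

def neighborhoods3(img):
    """Stack the 9 shifted copies of img that make up every 3x3 window (edge-replicated borders)."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])

noisy = np.full((5, 5), 50.0)
noisy[2, 2] = 255.0  # a single "salt" pixel

windows = neighborhoods3(noisy)
mean = windows.mean(axis=0)          # 3x3 mean filter
median = np.median(windows, axis=0)  # 3x3 median filter

print(round(mean[2, 2], 1))  # 72.8: the noise leaks into the average
print(median[2, 2])          # 50.0: the outlier is removed
```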

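# Show them all side by side

# Mean filter for the comparison: each pixel becomes the average of its 3x3 neighborhood
blur = cv2.blur(img, (3, 3))

res = np.hstack((blur,aussian,median))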

cv2.imshow('median vs average', res)

cv2.waitKey(0)

cv2.destroyAllWindows()

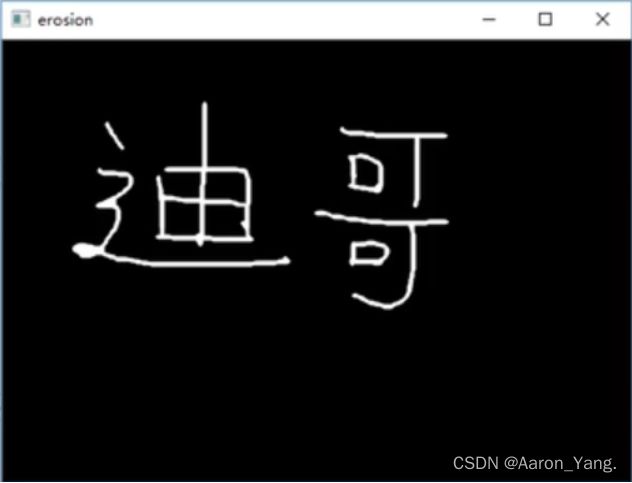

(2) Morphology: erosion

img = cv2.imread('dige.png')

cv2.imshow('img', img)

cv2.waitKey(0)

cv2.destroyAllWindows()

Note: erosion is usually applied to binary images.

kernel = np.ones((3,3),np.uint8)

erosion = cv2.erode(img,kernel,iterations = 1)

cv2.imshow('erosion', erosion)

cv2.waitKey(0)

cv2.destroyAllWindows()

# iterations is the number of times erosion is applied

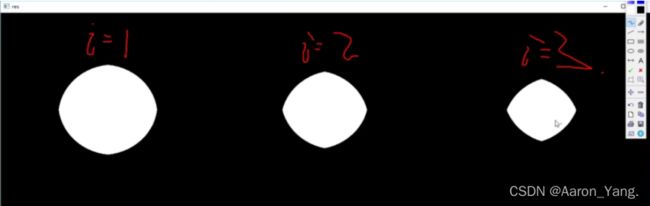

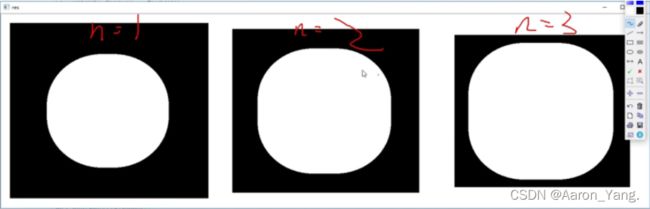

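# Erosion slides the kernel (e.g. 3x3) over the image and replaces each pixel with the minimum of its window.
# In a binary image a pixel stays white only if the whole window is white; if the window contains
# any black pixel, the pixel turns black, so white regions shrink.

pie = cv2.imread('pie.png')

kernel = np.ones((30,30),np.uint8)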

erosion_1 = cv2.erode(pie,kernel,iterations = 1)

erosion_2 = cv2.erode(pie,kernel,iterations = 2)

erosion_3 = cv2.erode(pie,kernel,iterations = 3)

res = np.hstack((erosion_1,erosion_2,erosion_3))

cv2.imshow('res', res)

cv2.waitKey(0)

cv2.destroyAllWindows()
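Erosion amounts to taking the minimum over each kernel window. A NumPy sketch with a 3x3 square kernel on a made-up 7x7 binary image; erode is a hypothetical helper, not cv2.erode, and padding the border with 255 so the border itself never erodes is an assumption (similar in effect to OpenCV's default border handling for erosion):

```python
import numpy as np

def erode(img, k=3):
    """Erosion with a k x k square kernel: each pixel becomes the minimum of its window."""
    r = k // 2
    padded = np.pad(img, r, mode="constant", constant_values=255)  # assumed: border never erodes
    h, w = img.shape
    windows = np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)])
    return windows.min(axis=0)

img = np.zeros((7, 7), dtype=np.uint8)
img[1:6, 1:6] = 255  # a 5x5 white square

once = erode(img)    # like iterations=1: the square shrinks to 3x3
twice = erode(once)  # like iterations=2: only the center pixel is left
print(int((once == 255).sum()), int((twice == 255).sum()))  # 9 1
```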

(3) Morphology: dilation

img = cv2.imread('dige.png')

cv2.imshow('img', img)

cv2.waitKey(0)

cv2.destroyAllWindows()

kernel = np.ones((3,3),np.uint8)

dige_erosion = cv2.erode(img,kernel,iterations = 1)

cv2.imshow('erosion', dige_erosion)

cv2.waitKey(0)

cv2.destroyAllWindows()

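# Dilation is roughly the opposite of erosion: each pixel becomes the maximum of its window,
# so if the window contains any white pixel the pixel turns white, and white regions grow.
# Dilating the eroded image thickens the strokes again after the burrs have been removed.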

kernel = np.ones((3,3),np.uint8)

dige_dilate = cv2.dilate(dige_erosion,kernel,iterations = 1)

cv2.imshow('dilate', dige_dilate)

cv2.waitKey(0)

cv2.destroyAllWindows()

pie = cv2.imread('pie.png')

kernel = np.ones((30,30),np.uint8)

dilate_1 = cv2.dilate(pie,kernel,iterations = 1)

dilate_2 = cv2.dilate(pie,kernel,iterations = 2)

dilate_3 = cv2.dilate(pie,kernel,iterations = 3)

res = np.hstack((dilate_1,dilate_2,dilate_3))

cv2.imshow('res', res)

cv2.waitKey(0)

cv2.destroyAllWindows()
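Dilation is the matching maximum filter. A NumPy sketch with a 3x3 square kernel, starting from a single white pixel; dilate is a hypothetical helper, not cv2.dilate, and padding the border with 0 is an assumption of this example:

```python
import numpy as np

def dilate(img, k=3):
    """Dilation with a k x k square kernel: each pixel becomes the maximum of its window."""
    r = k // 2
    padded = np.pad(img, r, mode="constant", constant_values=0)  # assumed: border never dilates
    h, w = img.shape
    windows = np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)])
    return windows.max(axis=0)

img = np.zeros((7, 7), dtype=np.uint8)
img[3, 3] = 255       # a single white pixel

once = dilate(img)    # like iterations=1: grows into a 3x3 block
twice = dilate(once)  # like iterations=2: grows into a 5x5 block
print(int((once == 255).sum()), int((twice == 255).sum()))  # 9 25
```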

(4) Opening and closing

# Opening: erosion followed by dilation (removes small protrusions such as burrs)

img = cv2.imread('dige.png')

kernel = np.ones((5,5),np.uint8)

opening = cv2.morphologyEx(img, cv2.MORPH_OPEN, kernel)

cv2.imshow('opening', opening)

cv2.waitKey(0)

cv2.destroyAllWindows()

# Closing: dilation followed by erosion (fills small holes and gaps)

img = cv2.imread('dige.png')

kernel = np.ones((5,5),np.uint8)

closing = cv2.morphologyEx(img, cv2.MORPH_CLOSE, kernel)

cv2.imshow('closing', closing)

cv2.waitKey(0)

cv2.destroyAllWindows()
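The effect of the two orders can be checked on made-up 9x9 images: opening removes a one-pixel burr and closing fills a one-pixel hole, both restoring the plain square. A self-contained NumPy sketch; erode and dilate are hypothetical min/max-filter helpers with a 3x3 square kernel:

```python
import numpy as np

def _windows(img, pad_value, k=3):
    """All k x k neighborhoods of img, stacked along axis 0."""
    r = k // 2
    p = np.pad(img, r, mode="constant", constant_values=pad_value)
    h, w = img.shape
    return np.stack([p[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)])

def erode(img):
    return _windows(img, 255).min(axis=0)

def dilate(img):
    return _windows(img, 0).max(axis=0)

square = np.zeros((9, 9), dtype=np.uint8)
square[2:7, 2:7] = 255         # a 5x5 white square

burr = square.copy()
burr[1, 4] = 255               # a one-pixel burr on the top edge
opening = dilate(erode(burr))  # opening: erode, then dilate

hole = square.copy()
hole[4, 4] = 0                 # a one-pixel hole in the middle
closing = erode(dilate(hole))  # closing: dilate, then erode

print((opening == square).all(), (closing == square).all())  # True True
```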

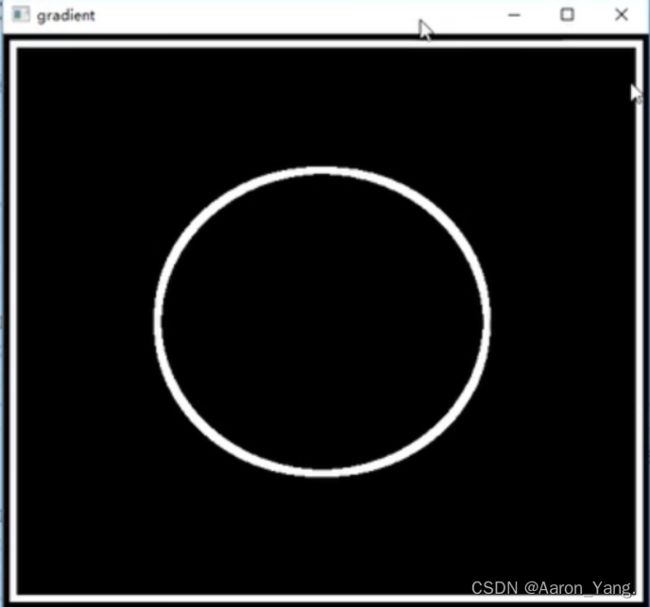

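(5) Morphological gradient

# Gradient = dilation - erosion

# i.e. the difference of the two images, which leaves the outline of the shape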

pie = cv2.imread('pie.png')

kernel = np.ones((7,7),np.uint8)

dilate = cv2.dilate(pie,kernel,iterations = 5)

erosion = cv2.erode(pie,kernel,iterations = 5)

res = np.hstack((dilate,erosion))

cv2.imshow('res', res)

cv2.waitKey(0)

cv2.destroyAllWindows()

gradient = cv2.morphologyEx(pie, cv2.MORPH_GRADIENT, kernel)

cv2.imshow('gradient', gradient)

cv2.waitKey(0)

cv2.destroyAllWindows()
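The gradient of a filled square is its outline. A NumPy sketch with hypothetical min/max-filter helpers and a 3x3 square kernel on a made-up 9x9 image; since dilation is never smaller than erosion, the uint8 subtraction cannot wrap around:

```python
import numpy as np

def _windows(img, pad_value, k=3):
    """All k x k neighborhoods of img, stacked along axis 0."""
    r = k // 2
    p = np.pad(img, r, mode="constant", constant_values=pad_value)
    h, w = img.shape
    return np.stack([p[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)])

def erode(img):
    return _windows(img, 255).min(axis=0)

def dilate(img):
    return _windows(img, 0).max(axis=0)

square = np.zeros((9, 9), dtype=np.uint8)
square[2:7, 2:7] = 255  # a 5x5 white square

gradient = dilate(square) - erode(square)  # 7x7 dilated block minus 3x3 eroded core
print((gradient == 255).astype(int))       # a ring: the outline of the square
print(int((gradient == 255).sum()))        # 40
```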

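(6) Top hat and black hat

- Top hat = original input - opening

- Black hat = closing - original input

# Top hat

# (image with burrs) - (image without burrs) leaves just the burrs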

img = cv2.imread('dige.png')

tophat = cv2.morphologyEx(img, cv2.MORPH_TOPHAT, kernel)

cv2.imshow('tophat', tophat)

cv2.waitKey(0)

cv2.destroyAllWindows()

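# Black hat

# Keeps the small dark gaps and holes that closing filled in (for this image it looks like a faint outline of the shapes)

img = cv2.imread('dige.png')

blackhat = cv2.morphologyEx(img,cv2.MORPH_BLACKHAT, kernel)

cv2.imshow('blackhat', blackhat)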

cv2.waitKey(0)

cv2.destroyAllWindows()
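Reusing the burr and hole images from a 5x5 square, the two definitions isolate exactly the defect in each case. A NumPy sketch with hypothetical min/max-filter helpers (3x3 square kernel); opening never exceeds the original and closing is never below it, so neither uint8 subtraction wraps around:

```python
import numpy as np

def _windows(img, pad_value, k=3):
    """All k x k neighborhoods of img, stacked along axis 0."""
    r = k // 2
    p = np.pad(img, r, mode="constant", constant_values=pad_value)
    h, w = img.shape
    return np.stack([p[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)])

def erode(img):
    return _windows(img, 255).min(axis=0)

def dilate(img):
    return _windows(img, 0).max(axis=0)

square = np.zeros((9, 9), dtype=np.uint8)
square[2:7, 2:7] = 255
burr = square.copy()
burr[1, 4] = 255  # a burr sticking out
hole = square.copy()
hole[4, 4] = 0    # a small dark hole

tophat = burr - dilate(erode(burr))    # original - opening: only the burr is left
blackhat = erode(dilate(hole)) - hole  # closing - original: only the hole is left

print(np.argwhere(tophat == 255).tolist())    # [[1, 4]]
print(np.argwhere(blackhat == 255).tolist())  # [[4, 4]]
```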

(7) Image gradients: the Sobel operator


img = cv2.imread('pie.png',cv2.IMREAD_GRAYSCALE)

cv2.imshow("img",img)

cv2.waitKey()

cv2.destroyAllWindows()

dst = cv2.Sobel(src, ddepth, dx, dy, ksize)

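- ddepth: depth (data type) of the output image, e.g. cv2.CV_64F so negative values are kept

- dx and dy: order of the derivative in the horizontal and vertical directions (e.g. 1, 0 for the x gradient)

- ksize: size of the Sobel kernel (1, 3, 5 or 7)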

# Display helper used from here on; note the argument order is (img, name)
def cv_show(img, name):
    cv2.imshow(name, img)
    cv2.waitKey()
    cv2.destroyAllWindows()

sobelx = cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3)

cv_show(sobelx,'sobelx')

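The x derivative is positive where intensity increases from left to right (dark to bright) and negative where it decreases (bright to dark). Negative values are lost when the result is displayed or stored as 8-bit (they end up as 0), so take the absolute value with cv2.convertScaleAbs.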
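The sign issue is easy to reproduce on a synthetic row of pixels. A NumPy sketch that applies the standard 3x3 Sobel x kernel by correlation over the valid region only (no border handling; filter3x3 is a hypothetical helper), then takes the saturated absolute value, emulating cv2.convertScaleAbs with its default scale:

```python
import numpy as np

# Standard 3x3 Sobel kernel for d/dx: right column minus left column, center row weighted by 2
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)

def filter3x3(img, kernel):
    """Correlate a 2-D image with a 3x3 kernel over the valid region (output 2 smaller per axis)."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    return out

# dark | bright | dark: one rising edge (dark to bright) and one falling edge
img = np.array([[0, 0, 50, 50, 0, 0]] * 3, dtype=np.float64)

gx = filter3x3(img, SOBEL_X)
abs_gx = np.clip(np.abs(gx), 0, 255).astype(np.uint8)  # emulates convertScaleAbs
print(gx.tolist())      # [[200.0, 200.0, -200.0, -200.0]]
print(abs_gx.tolist())  # [[200, 200, 200, 200]]
```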

# x direction

sobelx = cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3)

sobelx = cv2.convertScaleAbs(sobelx)

cv_show(sobelx,'sobelx')

# y direction

sobely = cv2.Sobel(img,cv2.CV_64F,0,1,ksize=3)

sobely = cv2.convertScaleAbs(sobely)

cv_show(sobely,'sobely')

Compute x and y separately, then combine them with a weighted sum.

# The result is clearer

sobelxy = cv2.addWeighted(sobelx,0.5,sobely,0.5,0)

cv_show(sobelxy,'sobelxy')

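Computing dx=1 and dy=1 in a single call is not recommended

# This gives the mixed derivative ∂²I/∂x∂y, which mainly responds at corners, so the result looks faint and incomplete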

sobelxy=cv2.Sobel(img,cv2.CV_64F,1,1,ksize=3)

sobelxy = cv2.convertScaleAbs(sobelxy)

cv_show(sobelxy,'sobelxy')

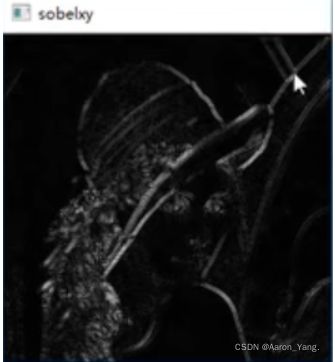

Using the Sobel operator to display the gradient map (edges) of an image

img = cv2.imread('lena.jpg',cv2.IMREAD_GRAYSCALE)

cv_show(img,'img')

img = cv2.imread('lena.jpg',cv2.IMREAD_GRAYSCALE)

sobelx = cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3)

sobelx = cv2.convertScaleAbs(sobelx)

sobely = cv2.Sobel(img,cv2.CV_64F,0,1,ksize=3)

sobely = cv2.convertScaleAbs(sobely)

sobelxy = cv2.addWeighted(sobelx,0.5,sobely,0.5,0)

cv_show(sobelxy,'sobelxy')

# This computes dx and dy in a single call; not recommended, use the previous approach instead

img = cv2.imread('lena.jpg',cv2.IMREAD_GRAYSCALE)

sobelxy=cv2.Sobel(img,cv2.CV_64F,1,1,ksize=3)

sobelxy = cv2.convertScaleAbs(sobelxy)

cv_show(sobelxy,'sobelxy')

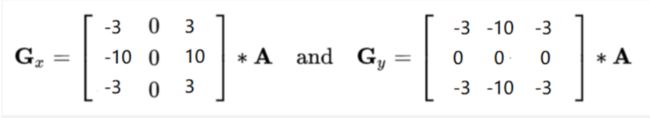

(8) Image gradients: the Scharr operator

(9) Image gradients: the Laplacian operator

# Original image

img = cv2.imread('lena.jpg',cv2.IMREAD_GRAYSCALE)

cv_show(img,'img')

# Comparing the different operators

img = cv2.imread('lena.jpg',cv2.IMREAD_GRAYSCALE)

sobelx = cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3)

sobely = cv2.Sobel(img,cv2.CV_64F,0,1,ksize=3)

sobelx = cv2.convertScaleAbs(sobelx)

sobely = cv2.convertScaleAbs(sobely)

sobelxy = cv2.addWeighted(sobelx,0.5,sobely,0.5,0)

scharrx = cv2.Scharr(img,cv2.CV_64F,1,0)

scharry = cv2.Scharr(img,cv2.CV_64F,0,1)

scharrx = cv2.convertScaleAbs(scharrx)

scharry = cv2.convertScaleAbs(scharry)

scharrxy = cv2.addWeighted(scharrx,0.5,scharry,0.5,0)

laplacian = cv2.Laplacian(img,cv2.CV_64F)

laplacian = cv2.convertScaleAbs(laplacian)

res = np.hstack((sobelxy,scharrxy,laplacian))

cv_show(res,'res')

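- As the comparison shows, the Scharr operator is more sensitive to fine detail than Sobel, so the contours it draws are richer.

- The Laplacian operator is not recommended on its own (as a second-derivative operator it is sensitive to noise); it is usually combined with methods covered later.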
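Part of the reason for these differences is visible in the kernels themselves. A NumPy sketch applying the standard 3x3 Sobel x kernel, the Scharr x kernel, and the 4-neighbor Laplacian kernel (the one OpenCV documents for cv2.Laplacian with ksize=1) to a synthetic weak step edge; filter3x3 is a hypothetical correlation helper over the valid region:

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
SCHARR_X = np.array([[-3, 0, 3], [-10, 0, 10], [-3, 0, 3]], dtype=np.float64)
LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=np.float64)

def filter3x3(img, kernel):
    """Correlate a 2-D image with a 3x3 kernel over the valid region."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    return out

# A weak step edge: intensity jumps from 0 to 10
img = np.array([[0, 0, 10, 10, 10]] * 3, dtype=np.float64)

kernels = {"Sobel": SOBEL_X, "Scharr": SCHARR_X, "Laplacian": LAPLACIAN}
responses = {name: filter3x3(img, k) for name, k in kernels.items()}
for name, r in responses.items():
    print(f"{name:9s}", r.tolist())
# Sobel     [[40.0, 40.0, 0.0]]
# Scharr    [[160.0, 160.0, 0.0]]   larger weights: a stronger response to the same edge
# Laplacian [[10.0, -10.0, 0.0]]    second derivative: a sign change across the edge
```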

(10) Canny edge detection

Five steps:

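- 1) Apply a Gaussian filter to smooth the image and remove noise.

- 2) Compute the gradient magnitude and direction at each pixel.

- 3) Apply non-maximum suppression to eliminate spurious responses to edge detection.

- 4) Apply double-threshold detection to determine true and potential edges.

- 5) Complete the edge detection by suppressing isolated weak edges.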
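Steps 4 and 5 (double threshold plus suppression of isolated weak edges, often called hysteresis) can be sketched on a hand-made gradient-magnitude array. This is a simplified NumPy illustration, not OpenCV's implementation: pixels above high are strong edges, pixels between low and high are kept only if they are 8-connected to a strong edge, and everything else is dropped. The hysteresis function and the sample magnitudes are hypothetical:

```python
from collections import deque

import numpy as np

def hysteresis(mag, low, high):
    """Double threshold + edge tracking: keep weak pixels only if 8-connected to a strong one."""
    strong = mag > high
    weak = (mag > low) & ~strong
    edges = strong.copy()
    h, w = mag.shape
    queue = deque(zip(*np.nonzero(strong)))  # start from every strong pixel
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and weak[ny, nx] and not edges[ny, nx]:
                    edges[ny, nx] = True  # weak pixel connected to an edge: keep it
                    queue.append((ny, nx))
    return edges

# Hand-made gradient magnitudes (as if after non-maximum suppression)
mag = np.array([
    [0,   0,   0,   0, 0],
    [0, 200, 120, 110, 0],  # a strong pixel followed by two connected weak pixels
    [0,   0,   0,   0, 0],
    [0,  90,   0, 120, 0],  # below low (90) and an isolated weak pixel (120)
    [0,   0,   0,   0, 0],
])
edges = hysteresis(mag, low=100, high=150)
print(edges.astype(int))
```

Raising low and high makes both tests stricter, which is why larger thresholds yield fewer edge points.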

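[Figures for steps 1 to 4 not included: Gaussian filter; gradient magnitude and direction; non-maximum suppression; double-threshold detection]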

img=cv2.imread("lena.jpg",cv2.IMREAD_GRAYSCALE)

v1=cv2.Canny(img,80,150)

v2=cv2.Canny(img,50,100)

res = np.hstack((v1,v2))

cv_show(res,'res')

img=cv2.imread("car.png",cv2.IMREAD_GRAYSCALE)

v1=cv2.Canny(img,120,250)

v2=cv2.Canny(img,50,100)

res = np.hstack((v1,v2))

cv_show(res,'res')

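- The higher the two thresholds (minVal, maxVal), the fewer edge points are detected, because the criteria are stricter.

- The lower the thresholds, the more edge points are kept and the finer the detail.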

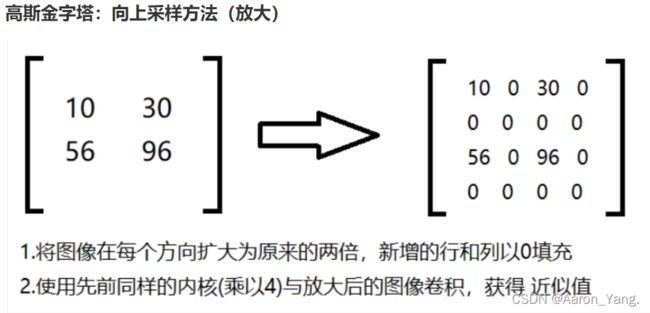

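(11) Image pyramids

- Gaussian pyramid

- Laplacian pyramid

Gaussian pyramid: downsampling (shrinking) with cv2.pyrDown

Gaussian pyramid: upsampling (enlarging) with cv2.pyrUp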
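What pyrDown and pyrUp do, as described in the OpenCV documentation, can be sketched in NumPy: pyrDown blurs with the 5x5 kernel built from [1 4 6 4 1] (divided by 256) and drops every other row and column; pyrUp inserts zero rows and columns and blurs with the same kernel multiplied by 4. The helpers below are hypothetical, single-channel only, and use reflect-101 borders (np.pad mode "reflect") as an assumption:

```python
import numpy as np

# 1-D binomial kernel; its outer product with itself is the 5x5 kernel / 256
K = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16

def blur5(img):
    """Separable 5x5 blur with reflect-101 borders (np.pad mode 'reflect')."""
    h, w = img.shape
    p = np.pad(img, 2, mode="reflect")
    rows = sum(K[i] * p[i:i + h, :] for i in range(5))     # vertical pass: (h, w + 4)
    return sum(K[j] * rows[:, j:j + w] for j in range(5))  # horizontal pass: (h, w)

def pyr_down(img):
    """Blur, then keep every other row and column: about half the size."""
    return blur5(img)[::2, ::2]

def pyr_up(img):
    """Insert zero rows/columns, then blur with 4x the kernel to keep the brightness."""
    h, w = img.shape
    up = np.zeros((2 * h, 2 * w))
    up[::2, ::2] = img
    return 4 * blur5(up)

img = np.full((442, 340), 100.0)  # a flat single-channel stand-in for AM.png
print(pyr_down(img).shape, pyr_up(img).shape)  # (221, 170) (884, 680)
```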

img=cv2.imread("AM.png")

cv_show(img,'img')

print (img.shape)

(442, 340, 3)

up=cv2.pyrUp(img)

cv_show(up,'up')

print (up.shape)

(884, 680, 3)

down=cv2.pyrDown(img)

cv_show(down,'down')

print (down.shape)

(221, 170, 3)

up2=cv2.pyrUp(up)

cv_show(up2,'up2')

print (up2.shape)

(1768, 1360, 3)

# Upsampling and then downsampling does not restore the original exactly; the result is slightly blurrier

up=cv2.pyrUp(img)

up_down=cv2.pyrDown(up)

cv_show(up_down,'up_down')

cv_show(np.hstack((img,up_down)),'up_down')

Laplacian pyramid

down=cv2.pyrDown(img)

down_up=cv2.pyrUp(down)

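# Laplacian layer: L1 = G0 - pyrUp(pyrDown(G0)). Subtract in a signed type,
# because uint8 '-' would wrap negative values around to large positive ones
l_1 = img.astype(np.int16) - down_up.astype(np.int16)

cv_show(cv2.convertScaleAbs(l_1),'l_1')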
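Computing the Laplacian layer in floating point keeps its negative values, and adding the layer back to the upsampled coarse level reconstructs the original. A NumPy sketch using the same kernel description as pyrDown/pyrUp (hypothetical helpers, single channel, reflect-101 borders, random 8x8 test data):

```python
import numpy as np

K = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16

def blur5(img):
    """Separable 5x5 binomial blur with reflect-101 borders."""
    h, w = img.shape
    p = np.pad(img, 2, mode="reflect")
    rows = sum(K[i] * p[i:i + h, :] for i in range(5))
    return sum(K[j] * rows[:, j:j + w] for j in range(5))

def pyr_down(img):
    return blur5(img)[::2, ::2]

def pyr_up(img):
    up = np.zeros((2 * img.shape[0], 2 * img.shape[1]))
    up[::2, ::2] = img
    return 4 * blur5(up)

rng = np.random.default_rng(0)
g0 = rng.integers(0, 256, size=(8, 8)).astype(np.float64)  # random 8x8 test "image"
g1 = pyr_down(g0)              # next Gaussian level, 4x4

l0 = g0 - pyr_up(g1)           # Laplacian layer; float keeps the negative values
restored = l0 + pyr_up(g1)     # adding the detail back recovers the original

print(l0.min() < 0, np.allclose(restored, g0))
```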

(12) Image contours

cv2.findContours(img,mode,method)

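mode: contour retrieval mode (RETR_TREE, the last one, is the one usually used)

- RETR_EXTERNAL: retrieves only the outermost contours;

- RETR_LIST: retrieves all contours and stores them in a flat list;

- RETR_CCOMP: retrieves all contours and organizes them into two levels: the top level holds the outer boundaries of the components, the second level holds the boundaries of the holes;

- RETR_TREE: retrieves all contours and reconstructs the full hierarchy of nested contours.

method: contour approximation method

- CHAIN_APPROX_NONE: stores every contour point, so any two consecutive points are horizontal, vertical, or diagonal neighbors.

- CHAIN_APPROX_SIMPLE: compresses horizontal, vertical, and diagonal segments and keeps only their end points, so e.g. an upright rectangle is stored as 4 points.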
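The idea behind CHAIN_APPROX_SIMPLE can be sketched in plain Python: walk a closed contour whose consecutive points are 8-neighbors and keep only the points where the step direction changes. The compress_chain helper and the rectangle are hypothetical illustrations of the idea, not OpenCV's code or its exact point order:

```python
def compress_chain(points):
    """Keep only the corner points of a closed contour whose consecutive points are 8-neighbors."""
    n = len(points)
    kept = []
    for i in range(n):
        px, py = points[i - 1]        # previous point (wraps around for i = 0)
        cx, cy = points[i]
        nx, ny = points[(i + 1) % n]  # next point (wraps around at the end)
        if (cx - px, cy - py) != (nx - cx, ny - cy):  # direction changes: a corner
            kept.append(points[i])
    return kept

# Every boundary point of a 4x3 rectangle, listed one by one in the spirit of CHAIN_APPROX_NONE
rect = ([(x, 0) for x in range(4)] + [(3, y) for y in range(1, 3)]
        + [(x, 2) for x in range(2, -1, -1)] + [(0, 1)])
corners = compress_chain(rect)
print(len(rect), corners)  # 10 [(0, 0), (3, 0), (3, 2), (0, 2)]
```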

For better accuracy, use a binary image.

img = cv2.imread('contours.png')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)

cv_show(thresh,'thresh')

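# OpenCV 3.x returns (image, contours, hierarchy) while OpenCV 4.x returns (contours, hierarchy);
# taking the last two values works with both versions
contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)[-2:]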

Drawing contours

cv_show(img,'img')

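# Arguments: image to draw on, contours, contour index (-1 draws all), color (BGR), line thickness

# Note: draw on a copy, otherwise the original image is modified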

draw_img = img.copy()

res = cv2.drawContours(draw_img, contours, -1, (0, 0, 255), 2)

cv_show(res,'res')

Contour features

cnt = contours[0]

# Area

cv2.contourArea(cnt)

# Perimeter; True means the contour is closed

cv2.arcLength(cnt,True)
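For a polygon, area and perimeter come down to two short formulas: the shoelace formula for the area and the sum of segment lengths for the perimeter, where closed=True adds the segment back to the start. A NumPy sketch on a made-up 4x3 rectangle; polygon_area and arc_length are hypothetical helpers that mirror, but are not, cv2.contourArea and cv2.arcLength:

```python
import numpy as np

def polygon_area(pts):
    """Shoelace formula for the area of a simple polygon given as (x, y) vertices."""
    x, y = np.asarray(pts, dtype=np.float64).T
    return float(0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1))))

def arc_length(pts, closed=True):
    """Sum of segment lengths; closed=True also counts the segment back to the start."""
    p = np.asarray(pts, dtype=np.float64)
    if closed:
        p = np.vstack([p, p[:1]])
    return float(np.sum(np.hypot(*np.diff(p, axis=0).T)))

rect = [(0, 0), (4, 0), (4, 3), (0, 3)]
print(polygon_area(rect))             # 12.0
print(arc_length(rect, closed=True))  # 14.0
print(arc_length(rect, closed=False)) # 11.0
```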

Contour approximation

img = cv2.imread('contours2.png')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)

contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)[-2:]  # works on OpenCV 3.x and 4.x

cnt = contours[0]

draw_img = img.copy()

res = cv2.drawContours(draw_img, [cnt], -1, (0, 0, 255), 2)

cv_show(res,'res')

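# epsilon is the maximum distance between the contour and its approximation, here 15% of the perimeter
# (a smaller value follows the original contour more closely)
epsilon = 0.15*cv2.arcLength(cnt,True)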

approx = cv2.approxPolyDP(cnt,epsilon,True)

draw_img = img.copy()

res = cv2.drawContours(draw_img, [approx], -1, (0, 0, 255), 2)

cv_show(res,'res')
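OpenCV documents approxPolyDP as an implementation of the Douglas-Peucker algorithm. A recursive NumPy sketch for an open polyline (closed contours need an extra step to choose the starting points, which is omitted here); rdp and the sample points are hypothetical. epsilon plays the same role as above: points closer than epsilon to the simplified line are dropped, so a larger epsilon gives a coarser polygon:

```python
import numpy as np

def rdp(points, epsilon):
    """Ramer-Douglas-Peucker simplification of an open polyline given as (x, y) pairs."""
    pts = np.asarray(points, dtype=np.float64)
    start, end = pts[0], pts[-1]
    dx, dy = end - start
    length = np.hypot(dx, dy)
    if length == 0:
        dists = np.hypot(pts[:, 0] - start[0], pts[:, 1] - start[1])
    else:
        # Perpendicular distance of each point to the line through start and end (2-D cross product)
        dists = np.abs(dx * (pts[:, 1] - start[1]) - dy * (pts[:, 0] - start[0])) / length
    i = int(np.argmax(dists))
    if dists[i] > epsilon:
        # The farthest point is too far: keep it and simplify both halves recursively
        left = rdp(pts[: i + 1], epsilon)
        right = rdp(pts[i:], epsilon)
        return left[:-1] + right  # the split point appears in both halves
    return [tuple(map(float, start)), tuple(map(float, end))]

line = [(0, 0), (1, 0.1), (2, -0.1), (3, 5), (4, 6), (5, 7)]
print(rdp(line, 1.0))   # [(0.0, 0.0), (2.0, -0.1), (3.0, 5.0), (5.0, 7.0)]
print(rdp(line, 10.0))  # [(0.0, 0.0), (5.0, 7.0)]
```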
