PyTorch: Neural Networks

Contents


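Basic skeleton

Convolution operation

Convolution layer

Max pooling

Nonlinear activation

Linear layer

Small hands-on project

Loss functions and backpropagation

Optimizers

Modifying an existing network model

Saving and loading networks

Standard model training routine

GPU training

Complete code

Model validation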

tips:

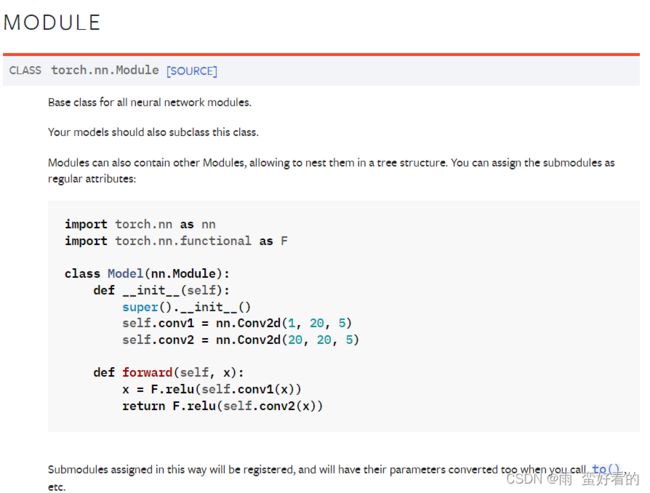

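Basic skeleton

nn.Module acts as a template and is the base class of all neural network modules: to write your own network, subclass it and put your own computation in it (mainly in forward).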

import torch
from torch import nn

# Define a neural network template
class abc(nn.Module):
    def __init__(self) -> None:
        super().__init__()

    def forward(self, input):
        output = input + 1
        return output

# This network is very simple: given an input, it outputs the input plus one

# Create the network
a = abc()
x = torch.tensor(1.0)
output = a(x)  # feed x into the network
print(output)  # tensor(2.)
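The skeleton above has no layers. As a small sketch (the class `TwoLayer` and its sizes are made up for illustration), layers assigned as attributes in `__init__` are registered automatically, which is what later lets `tuidui.parameters()` hand every weight to the optimizer; calling the instance runs `forward`.

```python
import torch
from torch import nn

class TwoLayer(nn.Module):
    """Hypothetical example module, not part of the original notes."""
    def __init__(self) -> None:
        super().__init__()
        # submodules assigned as attributes are registered by nn.Module
        self.fc1 = nn.Linear(4, 3)
        self.fc2 = nn.Linear(3, 2)

    def forward(self, x):
        return self.fc2(torch.relu(self.fc1(x)))

net = TwoLayer()
# named_parameters() walks every registered submodule
names = [name for name, _ in net.named_parameters()]
print(names)  # ['fc1.weight', 'fc1.bias', 'fc2.weight', 'fc2.bias']

y = net(torch.ones(5, 4))  # calling the module runs forward()
print(y.shape)  # torch.Size([5, 2])
```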

Convolution operation

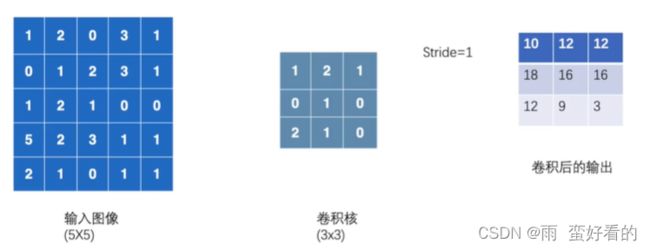
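F.conv2d slides the kernel over the input and, at each position, multiplies the overlapping entries and adds them up (strictly a cross-correlation, since the kernel is not flipped). A minimal sketch of that loop, with the hypothetical helper `conv2d_manual` handling a single channel only, reproduces the built-in result on the same data used below:

```python
import torch
import torch.nn.functional as F

def conv2d_manual(inp, ker, stride=1, padding=0):
    """Naive single-channel 2D convolution (cross-correlation), for illustration only."""
    if padding > 0:
        # zero-pad left, right, top and bottom
        inp = F.pad(inp, (padding, padding, padding, padding))
    kh, kw = ker.shape
    out_h = (inp.shape[0] - kh) // stride + 1
    out_w = (inp.shape[1] - kw) // stride + 1
    out = torch.zeros(out_h, out_w)
    for i in range(out_h):
        for j in range(out_w):
            window = inp[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = (window * ker).sum()  # multiply element-wise, then add everything up
    return out

inp = torch.tensor([[1, 2, 0, 3, 1],
                    [0, 1, 2, 3, 1],
                    [1, 2, 1, 0, 0],
                    [5, 2, 3, 1, 1],
                    [2, 1, 0, 1, 1]], dtype=torch.float32)
ker = torch.tensor([[1, 2, 1],
                    [0, 1, 0],
                    [2, 1, 0]], dtype=torch.float32)

manual = conv2d_manual(inp, ker, stride=1)
builtin = F.conv2d(inp.reshape(1, 1, 5, 5), ker.reshape(1, 1, 3, 3), stride=1)
print(manual)  # values: [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(torch.allclose(manual, builtin.reshape(3, 3)))  # True
```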

2D convolution:

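import torch
import torch.nn.functional as F

# float32: recent PyTorch CPU builds may raise "not implemented for 'Long'" on integer tensors
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
# Convolution kernel
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)
print(input.shape)   # torch.Size([5, 5])
print(kernel.shape)  # torch.Size([3, 3])

# Both tensors are 2D, but F.conv2d expects 4D input and weight, so add dimensions
input = torch.reshape(input, (1, 1, 5, 5))    # batch_size, channel, H, W
kernel = torch.reshape(kernel, (1, 1, 3, 3))  # out_channels, in_channels, H, W
print(input.shape)
print(kernel.shape)

output = F.conv2d(input, kernel, stride=1)  # move 1 step horizontally and vertically
print(output)
output2 = F.conv2d(input, kernel, stride=2)  # move 2 steps horizontally and vertically
print(output2)
output3 = F.conv2d(input, kernel, stride=1, padding=1)  # pad one ring of zeros around the input
print(output3)

Convolution layer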

import torch

import torchvision

from torch import nn

from torch.nn import Conv2d

from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("dataset",train=False,transform=torchvision.transforms.ToTensor(),

download=True)

dataloader =DataLoader(dataset,batch_size=64)

class ABC(nn.Module):

def __init__(self):

super(ABC, self).__init__()

self.conv1 = Conv2d(in_channels=3,out_channels=6,kernel_size=3,stride=1,padding=0)

def forward(self,x):

x = self.conv1(x)

return x

abc = ABC()

print(abc)

for data in dataloader:

imgs,targets = data

output = abc(imgs)

print(imgs.shape)

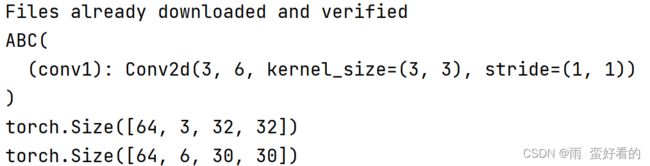

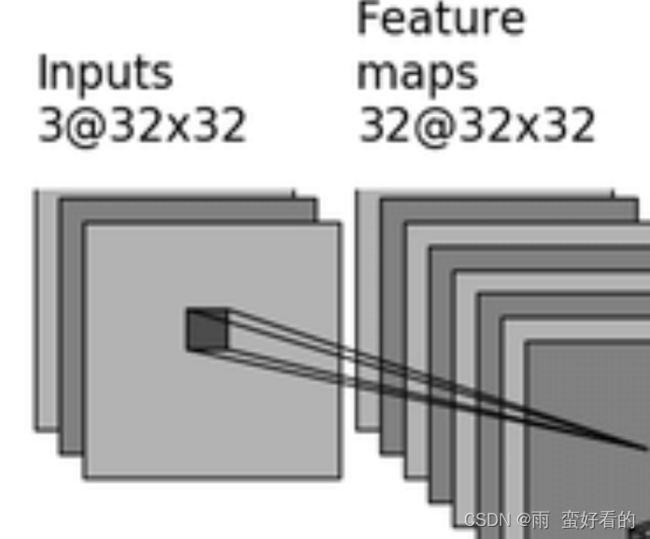

print(output.shape)神经网络名:ABC,它其中一个卷积层名conv1,输入in_channel = 3,out_channel = 6,kernel设置的是3,但是这里拆解的是3×3,为正方形的3×3的卷积核,stride为横向纵向走1步。

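On the input side, the batch size is 64 because the DataLoader loads 64 images at a time; each image has 3 channels and is 32×32, so imgs has shape [64, 3, 32, 32]. After the convolution there are 6 channels, and because a 3×3 kernel without padding cannot be centered on the border pixels, each image shrinks to 30×30, giving [64, 6, 30, 30].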
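The shapes can be checked without downloading CIFAR10 by feeding a random tensor of the same size (a stand-in, not real images). The layer's weight also shows that it holds 6 kernels of size 3×3×3, one per output channel:

```python
import torch
from torch.nn import Conv2d

conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)
imgs = torch.rand(64, 3, 32, 32)  # random stand-in for one CIFAR10 batch
out = conv1(imgs)
print(out.shape)           # torch.Size([64, 6, 30, 30])
# weight layout: (out_channels, in_channels, kernel height, kernel width)
print(conv1.weight.shape)  # torch.Size([6, 3, 3, 3])
```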

Display the images (in TensorBoard):

import torch

import torchvision

from torch import nn

from torch.nn import Conv2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("dataset",train=False,transform=torchvision.transforms.ToTensor(),

download=True)

dataloader =DataLoader(dataset,batch_size=64)

class ABC(nn.Module):

def __init__(self):

super(ABC, self).__init__()

self.conv1 = Conv2d(in_channels=3,out_channels=6,kernel_size=3,stride=1,padding=0)

def forward(self,x):

x = self.conv1(x)

return x

abc = ABC()

print(abc)

writer = SummaryWriter("logsa")

step = 0

for data in dataloader:

imgs,targets = data

output = abc(imgs)

# torch.Size([64, 3, 32, 32])

writer.add_images("input",imgs,step,)

# torch.Size([64, 6, 30, 30]),但是add_images要求的是3个通道,这里是6个通道

output = torch.reshape(output,(-1,3,30,30))

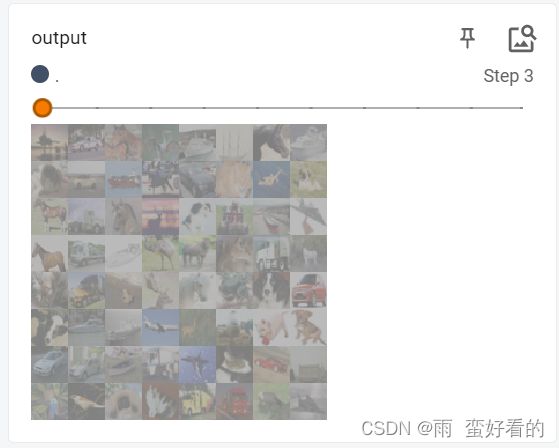

writer.add_images("output",output,step)

step = step+1

writer.close()最大池化

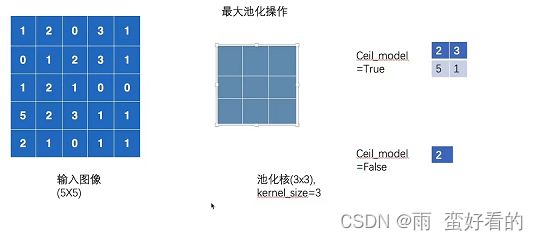

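The purpose of max pooling is to keep the main features of the input while shrinking it, which reduces the computation and the number of parameters that later layers need (the pooling layer itself has no learnable parameters), so training takes less time. It is like turning a 1080p video into a 720p one. Pooling appears in most convolutional networks, and a typical block is convolution, then pooling, then nonlinear activation.

A pooling function replaces the network's output at a location with a summary statistic of the nearby outputs; in essence it is downsampling.

Pooling layers shrink the model's intermediate representations, speed up computation, and make the extracted features more robust.

kernel_size: the window over which the maximum is taken, similar to a convolution kernel. An int gives a square window with that side length; a tuple of two ints gives a rectangular window.

stride: the step size. Unlike in a convolution layer, its default value is kernel_size.

padding: same as in a convolution layer; like kernel_size, it accepts an int or a tuple.

dilation: controls the spacing between the elements inside the window, i.e. the sampled elements have gaps between them.

ceil_mode: True or False. When the window runs past the edge of the input and covers it only partially, ceil_mode=True still takes the maximum of the covered part and keeps it, while ceil_mode=False drops that window. (In the figure, the stride is 3.) ceil means rounding up and floor means rounding down when computing the output size.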
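To see the difference between the two modes, here is a sketch applying both settings to the same 5×5 input used below (kernel_size 3, so stride also defaults to 3):

```python
import torch
from torch import nn

x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32).reshape(1, 1, 5, 5)

pool_floor = nn.MaxPool2d(kernel_size=3, ceil_mode=False)  # drop incomplete windows
pool_ceil = nn.MaxPool2d(kernel_size=3, ceil_mode=True)    # keep incomplete windows

out_floor = pool_floor(x)
out_ceil = pool_ceil(x)
print(out_floor.flatten().tolist())  # [2.0]
print(out_ceil.flatten().tolist())   # [2.0, 3.0, 5.0, 1.0]
```

With ceil_mode=False only the single complete 3×3 window survives; with ceil_mode=True the three partial windows at the right and bottom edges are kept too, giving a 2×2 output.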

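import torch
from torch import nn

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)

The input needs four dimensions: batch_size, channel, height and width. Passing -1 for batch_size lets PyTorch compute it; there is 1 channel; and the data is 5×5, so height and width are 5 and 5. Run:

input = torch.reshape(input, [-1, 1, 5, 5])
print(input.shape)
# torch.Size([1, 1, 5, 5]): one batch, one channel, 5×5, which meets the input requirement

Create a neural network:

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.maxpool1 = nn.MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        return self.maxpool1(input)

Run the network. With integer data it raises an error, because max pooling does not support the long (64-bit integer) type in the PyTorch version used here; that is why the input above is created with dtype=torch.float32:

a = Model()
output = a(input)
print(output)

Visualization: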

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)  # test split, converted to tensors, downloaded if missing
dataloader = DataLoader(dataset, batch_size=64)

class ABC(nn.Module):
    def __init__(self):
        super(ABC, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, x):
        x = self.maxpool1(x)
        return x

abc = ABC()
print(abc)

writer = SummaryWriter("logs_maxpool")
step = 0
for data in dataloader:
    imgs, targets = data
    output = abc(imgs)
    writer.add_images("input", imgs, step)
    writer.add_images("output", output, step)
    step = step + 1
writer.close()

Nonlinear activation

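Nonlinear activations introduce nonlinearity into a neural network; common ones are ReLU and Sigmoid. Without them, stacked linear layers collapse into a single linear map, so the more nonlinearity a network has, the better it can fit data with all kinds of features.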
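Both activations are simple element-wise formulas, ReLU(x) = max(0, x) and Sigmoid(x) = 1 / (1 + e^(-x)). A quick sketch checking them against the built-in modules, using the same 2×2 input as the example below:

```python
import torch
from torch import nn

x = torch.tensor([[1.0, -0.5], [-1.0, 3.0]])
relu_out = nn.ReLU()(x)
sigmoid_out = nn.Sigmoid()(x)

# ReLU(x) = max(0, x): negative entries become 0
manual_relu = torch.clamp(x, min=0)
# Sigmoid(x) = 1 / (1 + exp(-x)): squashes every value into (0, 1)
manual_sigmoid = 1 / (1 + torch.exp(-x))

print(torch.allclose(relu_out, manual_relu))        # True
print(torch.allclose(sigmoid_out, manual_sigmoid))  # True
```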

Running the code below, we can see that the negative values are cut off (set to 0):

import torch
from torch import nn
from torch.nn import ReLU

input = torch.tensor([[1, -0.5], [-1, 3]])
output = torch.reshape(input, (-1, 1, 2, 2))  # batch_size, channel, H, W
print(output.shape)

class ABC(nn.Module):
    def __init__(self):
        super(ABC, self).__init__()
        self.relu1 = ReLU()

    def forward(self, input):
        output = self.relu1(input)
        return output

abc = ABC()
output = abc(input)
print(output)
# tensor([[1., 0.],
#         [0., 3.]])

Sigmoid function

import torch

import torchvision

from torch import nn

from torch.nn import ReLU, Sigmoid

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

input = torch.tensor([[1,-0.5],[-1,3]])

output = torch.reshape(input,(-1,1,2,2))

print(output.shape)

dataset = torchvision.datasets.CIFAR10("data",train=False,download=True,transform=torchvision.transforms.ToTensor())

dataloader = DataLoader(dataset,batch_size = 64)

class ABC(nn.Module):

def __init__(self):

super(ABC, self).__init__()

self.relu1 = ReLU()

self.sigmoid1 = Sigmoid()

def forward(self,input):

output = self.sigmoid1(input)

return output

abc = ABC()

writer = SummaryWriter("uio")

step = 0

for data in dataloader:

imgs,targets = data

writer.add_images("input",imgs,global_step=step)

output = abc(imgs)

writer.add_images("output",output,step)

step += 1

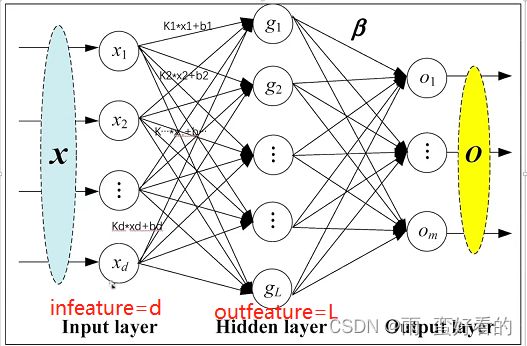

writer.close()线性层
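A linear (fully connected) layer computes y = xWᵀ + b, where `weight` has shape (out_features, in_features). A minimal sketch with made-up sizes (4 input features, 3 output features) checks this against a manual matrix product:

```python
import torch
from torch.nn import Linear

linear = Linear(in_features=4, out_features=3)
x = torch.rand(2, 4)  # a batch of 2 samples with 4 features each
y = linear(x)

manual = x @ linear.weight.T + linear.bias  # y = x W^T + b
print(linear.weight.shape)        # torch.Size([3, 4])
print(y.shape)                    # torch.Size([2, 3])
print(torch.allclose(y, manual))  # True
```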

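Small hands-on project

Max pooling does not change the number of channels.

Step 1 is a convolution with in_channels = 3, out_channels = 32 and a 5×5 kernel. The output should stay 32×32, so working out padding and stride from the output-size formula gives padding = 2, stride = 1: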
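The padding follows from the output-size formula in the PyTorch Conv2d documentation, H_out = ⌊(H_in + 2·padding − dilation·(kernel_size − 1) − 1) / stride⌋ + 1. With stride = 1 and dilation = 1, keeping H_out = H_in requires padding = (kernel_size − 1) / 2 = 2. A sketch of the formula as a helper (the function name `conv_out_size` is ours); MaxPool2d uses the same formula, with stride defaulting to kernel_size:

```python
def conv_out_size(h_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width of Conv2d or MaxPool2d (formula from the PyTorch docs)."""
    return (h_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

# conv1 of this network: 32x32 input, 5x5 kernel, padding 2 keeps the size
print(conv_out_size(32, 5, stride=1, padding=2))  # 32
# without padding the image would shrink
print(conv_out_size(32, 5, stride=1, padding=0))  # 28
# the earlier CIFAR10 example: 3x3 kernel, no padding
print(conv_out_size(32, 3))                       # 30
# MaxPool2d(2): stride defaults to 2, halving the size
print(conv_out_size(32, 2, stride=2))             # 16
```

Applying the pooling line three times gives 32 → 16 → 8 → 4, which is where the 4×4 after the last pooling layer comes from.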

self.conv1 = Conv2d(3, 32, 5, padding=2)

Pooling part:

self.maxpool1 = MaxPool2d(kernel_size=2)

Flatten:

self.flatten = Flatten()

After flattening, each image has 64×4×4 = 1024 values.

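So 1024 is the in_features of the first linear layer and 64 is its out_features. It is followed by a second linear layer with in_features = 64 and out_features = 10; the output is 10 because CIFAR10 has 10 image classes.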
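To confirm the 64×4×4 = 1024 figure, one can push a dummy batch through the layers one at a time and print each shape. This sketch rebuilds the same layers with nn.Sequential (introduced further below) so that it can iterate over them:

```python
import torch
from torch import nn

model = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2), nn.MaxPool2d(2),
    nn.Flatten(), nn.Linear(1024, 64), nn.Linear(64, 10),
)

x = torch.ones(64, 3, 32, 32)  # dummy batch of 64 CIFAR10-sized images
shapes = []
for layer in model:  # a Sequential can be iterated layer by layer
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(type(layer).__name__, tuple(x.shape))
```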

Linear layers:

self.linear1 = Linear(1024, 64)
self.linear2 = Linear(64, 10)

The network is complete:

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear

class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.conv1 = Conv2d(3, 32, 5, padding=2)
        self.maxpool1 = MaxPool2d(kernel_size=2)
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x

tuidui = Tuidui()
print(tuidui)
# Tuidui(
#   (conv1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
#   (maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
#   (conv2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
#   (maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
#   (conv3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
#   (maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
#   (flatten): Flatten(start_dim=1, end_dim=-1)
#   (linear1): Linear(in_features=1024, out_features=64, bias=True)
#   (linear2): Linear(in_features=64, out_features=10, bias=True)
# )

Verify correctness:

tuidui = Tuidui()
print(tuidui)
input = torch.ones(64, 3, 32, 32)
print(tuidui(input).shape)
# torch.Size([64, 10])

Each image produces 10 values (one score per class), and batch_size = 64 (think of it as 64 images), hence [64, 10].

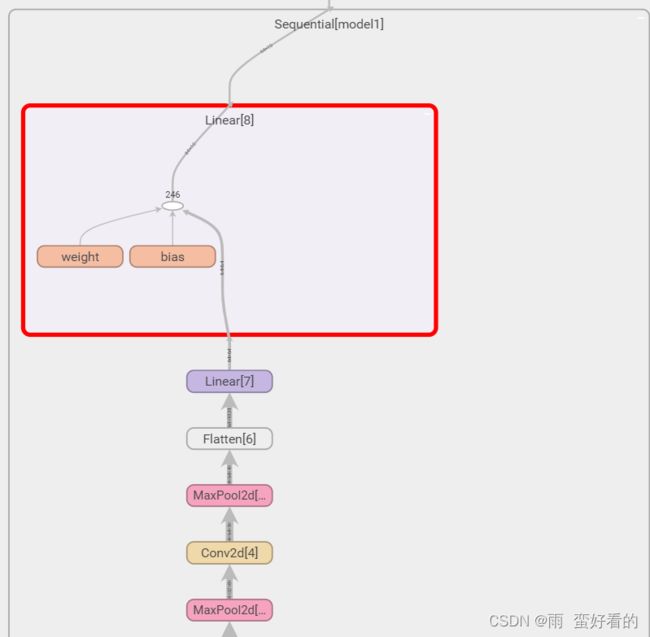

sequential

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter

class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

tuidui = Tuidui()
print(tuidui)
input = torch.ones(64, 3, 32, 32)
print(tuidui(input).shape)

Visualization:

writer = SummaryWriter("logs")
writer.add_graph(tuidui, input)
writer.close()

Then, in a terminal:

tensorboard --logdir=logs

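Loss functions and backpropagation

loss_function: measures the gap between the actual output and the target, and provides the basis for updating the network (through backpropagation).

Take L1Loss as an example (the mean of the absolute differences):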

import torch
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)
inputs = torch.reshape(inputs, (1, 1, 1, 3))  # batch_size 1, 1 channel, 1 row, 3 columns
targets = torch.reshape(targets, (1, 1, 1, 3))
loss = L1Loss()
result = loss(inputs, targets)
print(result)
# tensor(0.6667)
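L1Loss averages by default. Its `reduction` argument also accepts 'sum', which adds up the absolute differences instead; a sketch comparing both with a manual computation on the same data:

```python
import torch
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

mean_loss = L1Loss()(inputs, targets)                # default reduction='mean'
sum_loss = L1Loss(reduction='sum')(inputs, targets)  # add up instead of averaging
manual_mean = (inputs - targets).abs().mean()        # (0 + 0 + 2) / 3

print(mean_loss, sum_loss, manual_mean)
```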

Take squared error (MSELoss) as an example:

from torch.nn import MSELoss

loss_mse = MSELoss()
result_mse = loss_mse(inputs, targets)
print(result_mse)
# tensor(1.3333)

Cross entropy:

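The PyTorch documentation for CrossEntropyLoss says: "It is useful when training a classification problem with C classes. If provided, the optional argument `weight` should be a 1D Tensor assigning weight to each of the classes. This is particularly useful when you have an unbalanced training set."

The formula is:

$$\text{loss}(x, \text{class}) = -\log\left(\frac{\exp(x[\text{class}])}{\sum_j \exp(x[j])}\right) = -x[\text{class}] + \log\left(\sum_j \exp(x[j])\right)$$

where x is the vector of raw scores the network outputs for one sample and class is the index of the true class.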
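The formula can be checked by hand. In this sketch the three scores and the target index are illustrative values; CrossEntropyLoss takes raw scores (it applies log-softmax internally), so no softmax is applied beforehand:

```python
import torch
from torch import nn

x = torch.tensor([[0.1, 0.2, 0.3]])  # raw scores for 3 classes, batch of 1
target = torch.tensor([1])           # index of the true class

ce = nn.CrossEntropyLoss()(x, target)
# -x[class] + log(sum_j exp(x[j]))
manual = -x[0, 1] + torch.log(torch.exp(x[0]).sum())
print(ce.item(), manual.item())  # both about 1.1019
```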

import torch
import torchvision.datasets
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=1)

class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

tuidui = Tuidui()
for data in dataloader:
    imgs, targets = data
    output = tuidui(imgs)
    print(output)
    print(targets)

Each input image goes through the network and produces one output with 10 entries, one per class (classes 1 to 10). Each entry is the raw score (logit) the network assigns to that class, not yet a probability: the scores can be negative and do not sum to 1. Here the first target is 3:

tensor([[ 0.0849, 0.1104, 0.0028, 0.1187, 0.0465, 0.0355, 0.0250, -0.1043,

-0.0789, -0.1001]], grad_fn=)

tensor([3])

tensor([[ 0.0779, 0.0849, 0.0025, 0.1177, 0.0436, 0.0274, 0.0123, -0.0999,

-0.0736, -0.1052]], grad_fn=)

tensor([8])
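Since these outputs are raw scores, softmax is what turns them into probabilities. A sketch using the first output printed above:

```python
import torch

# first output row printed above (untrained network, so the scores are close together)
logits = torch.tensor([[0.0849, 0.1104, 0.0028, 0.1187, 0.0465,
                        0.0355, 0.0250, -0.1043, -0.0789, -0.1001]])
probs = torch.softmax(logits, dim=1)  # exponentiate, then normalize each row
print(probs.sum(dim=1))     # each row sums to 1
print(probs.argmax(dim=1))  # softmax keeps the ordering, so this equals logits.argmax(1)
```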

Now we bring in cross entropy. The resulting number measures the error between the network's output and the true label:

import torch
import torchvision.datasets
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=1)

class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

loss = nn.CrossEntropyLoss()
tuidui = Tuidui()
for data in dataloader:
    imgs, targets = data
    output = tuidui(imgs)
    result_loss = loss(output, targets)
    print(result_loss)

Output:

Files already downloaded and verified

tensor(2.3258, grad_fn=)

tensor(2.3186, grad_fn=)

tensor(2.3139, grad_fn=)

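Backpropagation:

result_loss.backward()

Backpropagation computes the gradient of each parameter, and the parameters are then updated (optimized) according to these gradients.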
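To see what backward() actually produces, here is a sketch with a single made-up linear layer and MSE loss: before the call `.grad` is None, and afterwards it holds d(loss)/d(weight), which matches the hand-derived 2·(y − t)·x:

```python
import torch
from torch import nn

torch.manual_seed(0)
layer = nn.Linear(3, 1)
x = torch.tensor([[1.0, 2.0, 3.0]])
target = torch.tensor([[1.0]])

grad_before = layer.weight.grad  # None: nothing has been computed yet
loss = nn.MSELoss()(layer(x), target)
loss.backward()  # autograd fills .grad of every parameter with requires_grad=True

# for loss = (w·x + b - t)^2, d(loss)/dw = 2 * (w·x + b - t) * x
with torch.no_grad():
    expected = 2 * (layer(x) - target) * x
print(grad_before)                                  # None
print(torch.allclose(layer.weight.grad, expected))  # True
```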

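Optimizers

Calling backward() on the loss computes the gradient of every parameter that needs adjusting. With these gradients available, the optimizer updates the parameters so that the error goes down.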
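For plain SGD (no momentum and no weight decay, which are the defaults of torch.optim.SGD), optimizer.step() performs param ← param − lr · grad. A sketch that checks this on a tiny made-up layer:

```python
import torch
from torch import nn

torch.manual_seed(0)
layer = nn.Linear(3, 1)
optim = torch.optim.SGD(layer.parameters(), lr=0.01)
x = torch.tensor([[1.0, 2.0, 3.0]])
target = torch.tensor([[1.0]])

loss = nn.MSELoss()(layer(x), target)
optim.zero_grad()  # clear gradients left over from a previous step
loss.backward()    # compute d(loss)/d(param) for every parameter

# plain SGD update: param <- param - lr * grad (a new tensor, computed before the step)
expected = layer.weight.detach() - 0.01 * layer.weight.grad
optim.step()
print(torch.allclose(layer.weight.detach(), expected))  # True
```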

import torch
import torchvision.datasets
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader

# Load the dataset and convert it to tensors
dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(), download=True)
# Load with a DataLoader
dataloader = DataLoader(dataset, batch_size=1)

# Define the network
class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

# Define the loss
loss = nn.CrossEntropyLoss()
# Build the network
tuidui = Tuidui()
# Optimizer: stochastic gradient descent
optim = torch.optim.SGD(tuidui.parameters(), lr=0.01)

# Fetch the data
for data in dataloader:
    imgs, targets = data
    output = tuidui(imgs)
    # Compute the gap between the output and the target
    result_loss = loss(output, targets)
    # Reset the gradients to 0
    optim.zero_grad()
    # Compute the gradients
    result_loss.backward()
    # Let the optimizer update the parameters
    optim.step()
    print(result_loss)

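After printing, we find that the loss does not change noticeably, because deep learning needs to pass over the data many times, so we add an outer for loop to train for several epochs:

# Train for 20 epochs
for epoch in range(20):
    running_loss = 0.0
    # Fetch the data
    for data in dataloader:
        imgs, targets = data
        output = tuidui(imgs)
        # Compute the gap between the output and the target
        result_loss = loss(output, targets)
        # Reset the gradients to 0
        optim.zero_grad()
        # Compute the gradients
        result_loss.backward()
        # Let the optimizer update the parameters
        optim.step()
        # .item() takes the plain number, so the computation graph is not kept alive
        running_loss = running_loss + result_loss.item()
    print(running_loss)

We can see that the total loss of each epoch keeps decreasing, although training is slow.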

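Modifying an existing network model

Add a linear layer to an existing network to turn its 1000 output classes (VGG16 is trained on ImageNet) into 10 classes:

import torchvision
from torch import nn

vgg16_true = torchvision.models.vgg16(weights="DEFAULT")  # pretrained VGG16; older torchvision uses pretrained=True
# Add the layer inside the classifier Sequential so that forward() actually runs it
vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))

You can also modify the existing last layer directly instead of adding one (apply this to a freshly loaded model, not on top of the change above):

vgg16_true.classifier[6] = nn.Linear(4096, 10)  # classifier[6] was Linear(4096, 1000)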

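Saving and loading networks

import torch
import torchvision

vgg16 = torchvision.models.vgg16()  # untrained VGG16 as the example network
torch.save(vgg16, "vgg16_method1.pth")  # Method 1: save the network structure and its parameters
model = torch.load("vgg16_method1.pth")  # Load it back (PyTorch 2.6+ may need weights_only=False for a whole model)
torch.save(vgg16.state_dict(), "vgg16_method2.pth")  # Method 2: save only the parameters as a dict (officially recommended, smaller file)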
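A round trip of method 2, sketched with a small stand-in model instead of VGG16 and a temporary file name chosen for illustration: rebuild the same architecture, then load the saved dictionary into it with load_state_dict. Passing map_location="cpu" also lets a GPU-trained checkpoint load on a CPU-only machine:

```python
import os
import tempfile

import torch
from torch import nn

model = nn.Sequential(nn.Linear(4, 2))  # small stand-in for vgg16
path = os.path.join(tempfile.mkdtemp(), "model_state.pth")  # illustrative file name

# Method 2: save only the parameters (the state_dict)
torch.save(model.state_dict(), path)

# To load, first build the same architecture, then copy the parameters into it
restored = nn.Sequential(nn.Linear(4, 2))
restored.load_state_dict(torch.load(path, map_location="cpu"))

same = all(torch.equal(a, b) for a, b in zip(model.state_dict().values(),
                                              restored.state_dict().values()))
print(same)  # True
```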

Standard model training routine

1. Prepare the datasets

train_data = torchvision.datasets.CIFAR10("data", train=True, transform=torchvision.transforms.ToTensor(), download=True)
test_data = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(), download=True)

2. Load the datasets

# Load with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

3. Create the neural network

# Define the network
class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

Import the network into the main program:

from model import *

Note that the file defining the network (model.py here) and the main program must be in the same folder.

4. Create the network model

# Build the network
tuidui = Tuidui()

5. Loss function

# Define the loss
loss_fn = nn.CrossEntropyLoss()

6. Optimizer

# Optimizer: stochastic gradient descent
learning_rate = 0.01
optimizer = torch.optim.SGD(tuidui.parameters(), lr=learning_rate)

7. Set the training parameters

# Count the training and test steps
total_train_step = 0
total_test_step = 0
# Number of training epochs
epoch = 10

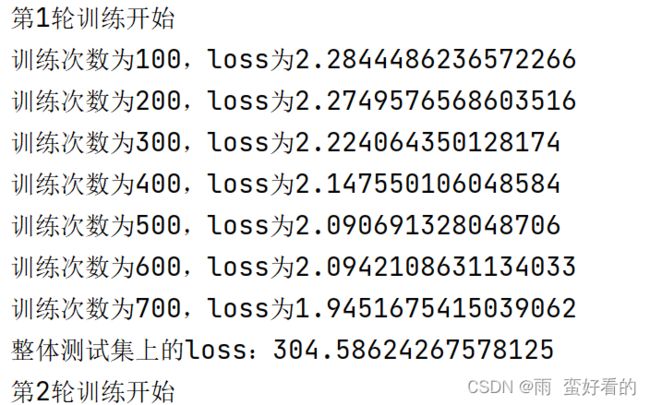

for i in range(epoch):

print(f"第{i+1}轮训练开始")

running_loss = 0.0

#训练步骤开始

for data in train_dataloader:

imgs,targets = data

output = tuidui(imgs)

#计算输出和target之间的差距

result_loss = loss_fn(output,targets)

#优化器优化模型

#把梯度设置为0

optimizer.zero_grad()

#得到梯度

result_loss.backward()

#调用优化器进行参数优化

optimizer.step()

running_loss = running_loss+result_loss

total_train_step = total_train_step+1

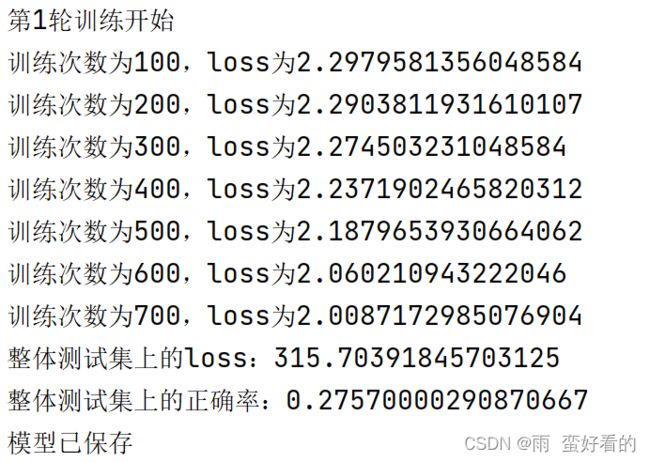

print(f"训练次数为{total_train_step},loss为{result_loss}")8、测试

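    # Testing steps (still inside the epoch loop)
    total_test_loss = 0
    with torch.no_grad():  # no gradients are needed for testing
        for data in test_dataloader:
            imgs, targets = data
            output = tuidui(imgs)
            result_loss = loss_fn(output, targets)
            total_test_loss = total_test_loss + result_loss.item()
    print(f"Total loss on the test set: {total_test_loss}")

9. Visualization

# writer = SummaryWriter("logs_train") is created before the training loop
writer.add_scalar("train_loss", result_loss.item(), total_train_step)
writer.add_scalar("test_loss", total_test_loss, total_test_step)

Then open TensorBoard to view the curves.

10. Save

torch.save(tuidui, f"tuidui_{i}.pth")

The saved files appear in the project directory (the left-hand panel of the IDE).

11. Add accuracy

Complete code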

import torch

import torchvision.datasets

from torch import nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

from module import *

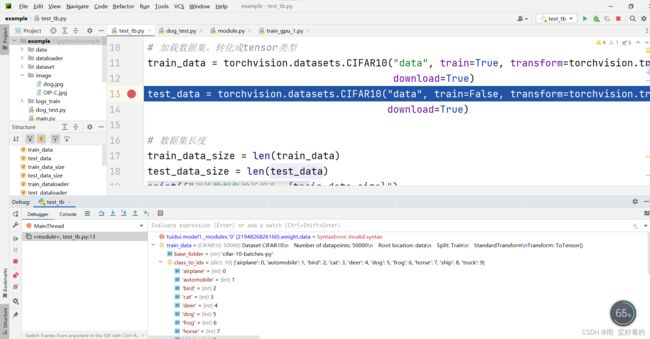

# 加载数据集,转化成tensor类型

train_data = torchvision.datasets.CIFAR10("data", train=True, transform=torchvision.transforms.ToTensor(),

download=True)

test_data = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

# 数据集长度

train_data_size = len(train_data)

test_data_size = len(test_data)

print(f"训练数据集的长度为:{train_data_size}")

print(f"测试数据集的长度为:{test_data_size}")

# dataloader加载

train_dataloader = DataLoader(train_data, batch_size=64)

test_dataloader = DataLoader(test_data, batch_size=64)

# 定义loss

loss_fn = nn.CrossEntropyLoss()

# 搭建出相应的网络

tuidui = Tuidui()

# 优化器,随机梯度下降

learning_rate = 0.01

optimizer = torch.optim.SGD(tuidui.parameters(), lr=learning_rate)

# 设置训练网络参数

# 记录训练次数

total_train_step = 0

total_test_step = 0

# 训练的轮数

epoch = 10

# 添加tensorboard

writer = SummaryWriter("logs_train")

for i in range(epoch):

print(f"第{i + 1}轮训练开始")

running_loss = 0.0

# 训练步骤开始

for data in train_dataloader:

imgs, targets = data

output = tuidui(imgs)

# 计算输出和target之间的差距

result_loss = loss_fn(output, targets)

# 优化器优化模型

# 把梯度设置为0

optimizer.zero_grad()

# 得到梯度

result_loss.backward()

# 调用优化器进行参数优化

optimizer.step()

running_loss = running_loss + result_loss

total_train_step = total_train_step + 1

if total_train_step % 100 == 0:

print(f"训练次数为{total_train_step},loss为{result_loss}")

writer.add_scalar("train_loss", result_loss, total_train_step)

# 测试步骤

total_test_loss = 0

total_accuracy = 0

with torch.no_grad():

for data in test_dataloader:

imgs, targets = data

output = tuidui(imgs)

result_loss = loss_fn(output, targets)

total_test_loss = total_test_loss + result_loss

# 正确率

accuracy = (output.argmax(1) == targets).sum()

total_accuracy = total_accuracy + accuracy

print(f"整体测试集上的loss:{total_test_loss}")

print(f"整体测试集上的正确率:{total_accuracy / test_data_size}")

writer.add_scalar("test_loss", total_test_loss, total_test_step)

writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)

total_test_step = total_test_step + 1

# 保存

torch.save(tuidui, f"tuidui_{i}.pth")

# torch.save(tuidui.state_dict(),f"tuidui_{i}.pth")方式2保存

print("模型已保存")

writer.close()

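The argmax function

In : import numpy as np
In : a = np.array([[1, 3, 5, 7], [5, 7, 2, 2], [4, 6, 8, 1]])
Out: [[1, 3, 5, 7],
      [5, 7, 2, 2],
      [4, 6, 8, 1]]
In : b = np.argmax(a, axis=0)  # search for the maximum down each column
Out: [1 1 2 0]
In : b = np.argmax(a, axis=1)  # search for the maximum along each row
Out: [3 1 2]

import torch

outputs = torch.tensor([[0.1, 0.2],
                        [0.05, 0.4]])
print(outputs.argmax(1))
print(outputs.argmax(0))
# Result
# tensor([1, 1])
# tensor([0, 1])

In the training code, output.argmax(1) therefore gives the predicted class of each image in the batch, and (output.argmax(1) == targets).sum() counts how many predictions are correct.

GPU training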

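To train on a GPU, call .cuda() on the network model, the inputs (images and labels) and the loss function.

To make sure a GPU is actually available, these calls are usually guarded with if torch.cuda.is_available():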
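An alternative that many PyTorch scripts use instead of repeated `if torch.cuda.is_available()` checks is to pick a device once and call `.to(device)`. A sketch with a made-up model and random data, which runs on either a GPU or a CPU:

```python
import torch
from torch import nn

# pick the GPU when present, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(4, 2).to(device)       # modules are moved in place (and returned)
loss_fn = nn.CrossEntropyLoss().to(device)
imgs = torch.rand(8, 4).to(device)       # tensors: .to() returns a copy, so reassign
targets = torch.randint(0, 2, (8,)).to(device)

loss = loss_fn(model(imgs), targets)
print(loss.device)
```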

Complete code

import torch
import torchvision.datasets
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from module import *

# Load the datasets and convert them to tensors
train_data = torchvision.datasets.CIFAR10("data", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)
# Dataset sizes
train_data_size = len(train_data)
test_data_size = len(test_data)
print(f"Training set size: {train_data_size}")
print(f"Test set size: {test_data_size}")

# Load with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Define the network
class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

# Build the network and move it to the GPU if one is available
tuidui = Tuidui()
if torch.cuda.is_available():
    tuidui = tuidui.cuda()

# Define the loss
loss_fn = nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()

# Optimizer: stochastic gradient descent (created after the model is moved)
learning_rate = 0.01
optimizer = torch.optim.SGD(tuidui.parameters(), lr=learning_rate)

# Count the training and test steps
total_train_step = 0
total_test_step = 0
# Number of training epochs
epoch = 10
# Add TensorBoard
writer = SummaryWriter("logs_train")

for i in range(epoch):
    print(f"Epoch {i + 1} training starts")
    running_loss = 0.0
    # Training steps start
    for data in train_dataloader:
        imgs, targets = data
        if torch.cuda.is_available():
            imgs = imgs.cuda()
            targets = targets.cuda()
        output = tuidui(imgs)
        # Compute the gap between the output and the target
        result_loss = loss_fn(output, targets)
        # Reset the gradients to 0, compute new ones, update the parameters
        optimizer.zero_grad()
        result_loss.backward()
        optimizer.step()
        running_loss = running_loss + result_loss.item()
        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print(f"Training step {total_train_step}, loss: {result_loss.item()}")
            writer.add_scalar("train_loss", result_loss.item(), total_train_step)

    # Testing steps
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            if torch.cuda.is_available():
                imgs = imgs.cuda()
                targets = targets.cuda()
            output = tuidui(imgs)
            result_loss = loss_fn(output, targets)
            total_test_loss = total_test_loss + result_loss.item()
            # Accuracy: count the predictions that match the labels
            accuracy = (output.argmax(1) == targets).sum()
            total_accuracy = total_accuracy + accuracy.item()
    print(f"Total loss on the test set: {total_test_loss}")
    print(f"Accuracy on the test set: {total_accuracy / test_data_size}")
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step = total_test_step + 1

    # Save the model after every epoch
    torch.save(tuidui, f"tuidui_{i}.pth")
    # torch.save(tuidui.state_dict(), f"tuidui_{i}.pth")  # method 2
    print("Model saved")

writer.close()

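Model validation

Now download a photo of a dog into the img folder (where the Itachi Uchiha picture was placed earlier).

Pay attention to where the model was trained. A model trained on the CPU can be loaded directly. A model trained on the GPU (with .cuda()) raises a CUDA-related error when it is loaded on a machine without a GPU, so it must be mapped to the CPU while loading:

model = torch.load("tuidui_0.pth", map_location=torch.device('cpu'))

Below is the version for a model trained on the CPU:

import torch
import torchvision.transforms
from PIL import Image
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

image_path = r"F:\python\example\image\dog.jpg"  # raw string, so the backslashes are not escape sequences
image = Image.open(image_path)
print(image)
image = image.convert("RGB")  # PNG files may have a 4th (alpha) channel; the network expects 3
transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
image = transform(image)
print(image.shape)  # torch.Size([3, 32, 32])

# Define the network (the class must exist so torch.load can rebuild the saved model)
class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

# Load the model trained on the CPU (PyTorch 2.6+ may need weights_only=False for a whole model)
model = torch.load("tuidui_0.pth")
# The model expects a 4D input: batch, channel, H, W
image = torch.reshape(image, (1, 3, 32, 32))
with torch.no_grad():
    output = model(image)
print(output)
print(output.argmax(1))

Output:

torch.Size([3, 32, 32])
tensor([[-1.1488, -0.2753, 0.7588, 0.7703, 0.7203, 0.9781, 0.7678, 0.3184,
         -2.0950, -1.3502]])
tensor([5])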

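The 6th value is the largest, so the model predicts the 6th class, i.e. tensor([5]) (indices start at 0). Checking in the debugger shows that this class is dog, so the prediction is correct.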
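Instead of looking the class up in the debugger, the index can be mapped to a name directly. This sketch hard-codes the CIFAR10 class list (torchvision also exposes it as `dataset.classes` on a loaded CIFAR10 dataset) and reuses the output printed above:

```python
import torch

# CIFAR10 class names, in index order
classes = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']

# logits printed above for the dog photo
output = torch.tensor([[-1.1488, -0.2753, 0.7588, 0.7703, 0.7203,
                        0.9781, 0.7678, 0.3184, -2.0950, -1.3502]])
idx = output.argmax(1).item()
print(idx, classes[idx])  # 5 dog
```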

Below is the version for a model trained on the GPU:

import torch
import torchvision.transforms
from PIL import Image
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

image_path = r"F:\python\example\image\dog.jpg"  # raw string, so the backslashes are not escape sequences
image = Image.open(image_path)
print(image)
image = image.convert("RGB")  # PNG files may have a 4th (alpha) channel; the network expects 3
transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
image = transform(image)
print(image.shape)  # torch.Size([3, 32, 32])

# Define the network (the class must exist so torch.load can rebuild the saved model)
class Tuidui(nn.Module):
    def __init__(self):
        super(Tuidui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

# Load the model trained on the GPU, mapping its tensors to the CPU
model = torch.load("tuidui_0.pth", map_location=torch.device('cpu'))
# The model expects a 4D input: batch, channel, H, W
image = torch.reshape(image, (1, 3, 32, 32))
with torch.no_grad():
    output = model(image)
print(output)
print(output.argmax(1))