PyTorch-YOLO V5: Training on Your Own VOC Dataset (Part 4)

VOC dataset directory structure

    ----voc
        ----Annotations
        ----ImageSets
            ----Main
        ----JPEGImages
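If these folders do not exist yet, the skeleton above can be created with a few lines of Python. This is a minimal sketch; the helper name `make_voc_skeleton` and its `root` argument are hypothetical and not part of the original scripts.

```python
import os


def make_voc_skeleton(root="voc"):
    """Create the VOC folder layout shown above under `root` (hypothetical helper)."""
    for sub in ("Annotations", os.path.join("ImageSets", "Main"), "JPEGImages"):
        # exist_ok=True makes the call safe to re-run on an existing dataset
        os.makedirs(os.path.join(root, sub), exist_ok=True)
    # return the top-level folders so the caller can check the result
    return sorted(os.listdir(root))
```

Calling `make_voc_skeleton("voc")` creates the three top-level folders (plus ImageSets/Main) and leaves any existing files untouched.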

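Create makeTXT.py in the root directory (the voc folder above). It splits the dataset and writes four TXT files to the Main folder: train.txt, test.txt, trainval.txt and val.txt. With trainval_percent = 0.1 and train_percent = 0.9, about 90% of the images go to train.txt, about 9% to test.txt and about 1% to val.txt. Note that, unlike the standard VOC convention, trainval.txt here lists the test and val images rather than the train and val images. The code is as follows: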

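    import os
    import random

    trainval_percent = 0.1  # share of all samples held out into trainval.txt
    train_percent = 0.9     # share of the held-out samples written to test.txt (the rest go to val.txt)
    xmlfilepath = './Annotations'
    txtsavepath = './ImageSets/Main'

    os.makedirs(txtsavepath, exist_ok=True)
    # only consider annotation files
    total_xml = [f for f in os.listdir(xmlfilepath) if f.endswith('.xml')]
    num = len(total_xml)
    indices = range(num)  # renamed from "list" to avoid shadowing the built-in
    tv = int(num * trainval_percent)
    tr = int(tv * train_percent)
    trainval = set(random.sample(indices, tv))        # sets give fast membership tests
    train = set(random.sample(sorted(trainval), tr))

    with open(os.path.join(txtsavepath, 'trainval.txt'), 'w') as ftrainval, \
            open(os.path.join(txtsavepath, 'test.txt'), 'w') as ftest, \
            open(os.path.join(txtsavepath, 'train.txt'), 'w') as ftrain, \
            open(os.path.join(txtsavepath, 'val.txt'), 'w') as fval:
        for i in indices:
            name = os.path.splitext(total_xml[i])[0] + '\n'  # file name without ".xml"
            if i in trainval:
                ftrainval.write(name)
                if i in train:
                    ftest.write(name)   # held-out sample -> test set
                else:
                    fval.write(name)    # remaining held-out sample -> val set
            else:
                ftrain.write(name)      # everything else -> training set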
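To see what the two percentages actually produce, the size arithmetic of makeTXT.py can be pulled out into a small function. This is a sketch for checking the split; `split_sizes` is a hypothetical name, and it mirrors the `int(...)` truncation used in the script.

```python
def split_sizes(num, trainval_percent=0.1, train_percent=0.9):
    """Return the (train, test, val) counts makeTXT.py produces for `num` XML files."""
    tv = int(num * trainval_percent)  # held-out samples (written to trainval.txt)
    tr = int(tv * train_percent)      # held-out samples written to test.txt
    return num - tv, tr, tv - tr      # sizes of train.txt, test.txt, val.txt


print(split_sizes(100))   # (90, 9, 1)
print(split_sizes(1000))  # (900, 90, 10)
```

With the default values only about 1% of the images end up in val.txt; changing `trainval_percent` or `train_percent` shifts these ratios.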

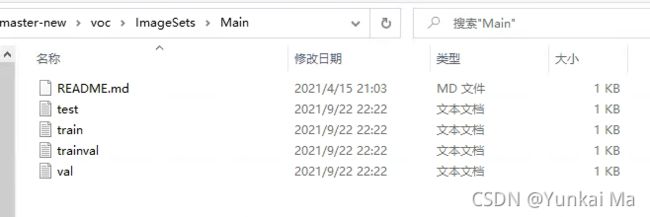

运行makeTXT.py,ImageSets\Main文件夹生成如下文件:
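A quick check can confirm that the generated split files do not overlap and together cover every annotation. A minimal sketch, assuming it is run from the same root directory as makeTXT.py; `check_splits` is a hypothetical helper.

```python
import os


def check_splits(main_dir="./ImageSets/Main", xml_dir="./Annotations"):
    """Check that train/test/val lists are disjoint and cover all XML files."""
    splits = {}
    for name in ("train", "test", "val"):
        with open(os.path.join(main_dir, name + ".txt")) as f:
            splits[name] = set(f.read().split())
    # every pair of splits must be disjoint
    names = list(splits)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            overlap = splits[a] & splits[b]
            if overlap:
                raise ValueError(f"{a}.txt and {b}.txt share {len(overlap)} ids")
    # every annotation must appear in some split
    all_ids = {os.path.splitext(x)[0] for x in os.listdir(xml_dir) if x.endswith(".xml")}
    missing = all_ids - set().union(*splits.values())
    if missing:
        raise ValueError(f"{len(missing)} annotations are not in any split")
    return {k: len(v) for k, v in splits.items()}
```

The returned dictionary gives the number of ids in each split, which should match the ratios described above.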

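Create voc_label.py in the root directory. It generates the labels folder as well as the train.txt, test.txt and val.txt files used for YOLOv5 training. The code is as follows: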

    # -*- coding: utf-8 -*-
    import os
    import xml.etree.ElementTree as ET

    sets = ['train', 'test', 'val']
    classes = ["FP"]  # class names of your own dataset, in label-index order


    def convert(size, box):
        # size = (image width, image height); box = (xmin, xmax, ymin, ymax) in pixels
        # returns (x_center, y_center, width, height) normalized to [0, 1]
        dw = 1. / size[0]
        dh = 1. / size[1]
        x = (box[0] + box[1]) / 2.0
        y = (box[2] + box[3]) / 2.0
        w = box[1] - box[0]
        h = box[3] - box[2]
        return (x * dw, y * dh, w * dw, h * dh)


    def convert_annotation(image_id):
        with open('Annotations/%s.xml' % image_id, encoding='UTF-8') as in_file:
            root = ET.parse(in_file).getroot()
        size = root.find('size')
        w = int(size.find('width').text)
        h = int(size.find('height').text)
        with open('labels/%s.txt' % image_id, 'w') as out_file:
            for obj in root.iter('object'):
                # some annotation tools omit <difficult>; treat a missing tag as 0
                difficult = obj.find('difficult')
                difficult = int(difficult.text) if difficult is not None else 0
                cls = obj.find('name').text
                if cls not in classes or difficult == 1:
                    continue  # skip unknown classes and objects marked difficult
                cls_id = classes.index(cls)
                xmlbox = obj.find('bndbox')
                b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                     float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
                bb = convert((w, h), b)
                out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')


    print(os.getcwd())
    os.makedirs('labels/', exist_ok=True)
    for image_set in sets:
        with open('ImageSets/Main/%s.txt' % image_set) as f:
            image_ids = f.read().strip().split()
        with open('%s.txt' % image_set, 'w') as list_file:
            for image_id in image_ids:
                # the author's images are .bmp; change the extension to match your images
                list_file.write('images/%s.bmp\n' % image_id)
                convert_annotation(image_id)
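The `convert` function turns VOC corner coordinates (xmin, xmax, ymin, ymax) in pixels into the YOLO format: the box center and size, each divided by the image width or height. Below is a worked example with made-up numbers (a 640x480 image and a box from (100, 50) to (300, 250)). The inverse `yolo_to_voc` is a hypothetical addition for checking the round trip; it is not part of voc_label.py.

```python
def convert(size, box):
    # Same arithmetic as convert() in voc_label.py:
    # size = (width, height), box = (xmin, xmax, ymin, ymax) in pixels
    dw, dh = 1. / size[0], 1. / size[1]
    x = (box[0] + box[1]) / 2.0
    y = (box[2] + box[3]) / 2.0
    w = box[1] - box[0]
    h = box[3] - box[2]
    return (x * dw, y * dh, w * dw, h * dh)


def yolo_to_voc(size, bb):
    # Hypothetical inverse: normalized (x, y, w, h) back to pixel (xmin, xmax, ymin, ymax)
    x, y = bb[0] * size[0], bb[1] * size[1]
    w, h = bb[2] * size[0], bb[3] * size[1]
    return (x - w / 2, x + w / 2, y - h / 2, y + h / 2)


bb = convert((640, 480), (100.0, 300.0, 50.0, 250.0))
print(bb)                        # center (200, 150) and size (200, 200), normalized
print(yolo_to_voc((640, 480), bb))
```

Here the normalized values are roughly (0.3125, 0.3125, 0.3125, 0.4167), and converting back recovers the original pixel corners up to floating-point rounding.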

Run voc_label.py. The resulting annotation files used for training are shown in the figure below:
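Each file in labels/ contains one line per object in the form `class_id x_center y_center width height`, with the four coordinates normalized to [0, 1] by the convert function in voc_label.py. A minimal sketch for reading such a file back and catching malformed lines; `read_yolo_labels` is a hypothetical helper, not part of YOLOv5, and `num_classes=1` assumes the single class "FP" used above.

```python
def read_yolo_labels(path, num_classes=1):
    """Parse a YOLO label file into (class_id, x, y, w, h) tuples, validating each line."""
    boxes = []
    with open(path) as f:
        for lineno, line in enumerate(f, 1):
            parts = line.split()
            if not parts:
                continue  # ignore blank lines
            if len(parts) != 5:
                raise ValueError(f"{path}:{lineno}: expected 5 fields, got {len(parts)}")
            cls_id = int(parts[0])
            coords = [float(p) for p in parts[1:]]
            if not 0 <= cls_id < num_classes:
                raise ValueError(f"{path}:{lineno}: class id {cls_id} out of range")
            if not all(0.0 <= c <= 1.0 for c in coords):
                raise ValueError(f"{path}:{lineno}: coordinates not normalized: {coords}")
            boxes.append((cls_id, *coords))
    return boxes
```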

Following the COCO dataset layout, place the files above into the COCO128 dataset:

Put all images from the JPEGImages folder into datasets\coco128\images\train2017, and put all annotation files from the labels folder generated by voc_label.py into datasets\coco128\labels\train2017.
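This copying step can also be scripted. A minimal sketch, assuming it is run from the voc root directory and that the COCO128 folder sits at the hypothetical relative path given by `dst_root`; adjust both to your own layout. It copies rather than moves, so the original VOC folders stay intact.

```python
import os
import shutil


def copy_to_coco128(voc_root=".", dst_root="../datasets/coco128", split="train2017"):
    """Copy images and YOLO labels into COCO128-style images/ and labels/ folders."""
    pairs = [("JPEGImages", "images"), ("labels", "labels")]
    counts = {}
    for src_sub, dst_sub in pairs:
        src = os.path.join(voc_root, src_sub)
        dst = os.path.join(dst_root, dst_sub, split)
        os.makedirs(dst, exist_ok=True)
        n = 0
        for fname in os.listdir(src):
            path = os.path.join(src, fname)
            if os.path.isfile(path):
                shutil.copy2(path, dst)  # copy2 also preserves file timestamps
                n += 1
        counts[dst_sub] = n
    return counts
```

The images and labels folders mirror each other because YOLOv5 locates each image's label file by replacing the `images` directory with `labels` in the image path.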

This converts the VOC-format dataset into a YOLO-format dataset that can be used for training and prediction.