Text Recognition with ML Kit

Reposted from: https://www.raywenderlich.com/197292/text-recognition-with-ml-kit

At Google I/O 2018, Google announced a new library, ML Kit, for developers to easily leverage machine learning on mobile. With it, you can now add some common machine learning functionality to your app without necessarily being a machine learning expert!

In this tutorial, you’ll learn how to set up and use Google’s ML Kit in your Android apps by creating an app that opens a Twitter profile from a picture of that profile’s Twitter handle. By the end, you will have learned:

- What ML Kit is, and what it has to offer

- How to set up ML Kit with your Android app and Firebase

- How to run text recognition on-device

- How to run text recognition in the cloud

- How to use the results from running text recognition with ML Kit

Note: This tutorial assumes you have basic knowledge of Kotlin and Android. If you’re new to Android, check out our Android tutorials. If you know Android, but are unfamiliar with Kotlin, take a look at Kotlin For Android: An Introduction.

Before we get started, let’s first take a look at what ML Kit is.

What is ML Kit?

Machine learning gives computers the ability to “learn” through a process which trains a model with a set of inputs that produce known outputs. By feeding a machine learning algorithm a bunch of data, the resulting model is able to make predictions, such as whether or not a cute cat is in a photo. When you don’t have the help of awesome libraries, the machine learning training process takes lots of math and specialized knowledge.

Google provided TensorFlow and TensorFlow Lite for mobile so that developers could create their own machine learning models and use them in their apps. This helped a tremendous amount in making machine learning more approachable; however, it still feels daunting to many developers. Using TensorFlow still requires some knowledge of machine learning, and often the ability to train your own model.

In comes ML Kit! There are many common use cases for machine learning in mobile apps, and they often include some kind of image processing. Google has already been using machine learning for some of these things, and has made its work available to us through an easy-to-use API. Google built ML Kit on top of TensorFlow Lite, the Cloud Vision API, and the Neural Networks API so that we developers can take advantage of models for:

- Text recognition

- Face detection

- Barcode scanning

- Image labeling

- Landmark recognition

Google has plans to include more APIs in the future, too! With these options, you’re able to implement intelligent features in your app without needing to understand machine learning or train your own models.

In this tutorial, you’re going to focus on text recognition. With ML Kit, you can provide an image, and then receive a response with the text found in the image, along with the text’s location in the image. Text recognition is one of the ML Kit APIs that can run both locally on your device and also in the cloud, so we will look at both. Some of the other APIs are only supported on one or the other.

Time to get started!

Getting Started

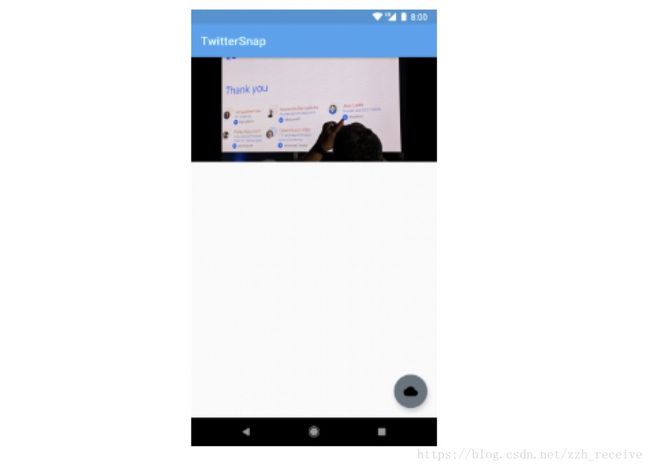

Have you ever taken a photo of someone’s Twitter handle so you could find them later? The sample app you will be working on, TwitterSnap, allows you to select a photo from your device, and run text recognition on it.

You will first work to run the text recognition locally on the device, and then follow that up with running in the cloud. After any recognition completes, a box will show up around the detected Twitter handles. You can then click these handles to open up that profile in a browser, or the Twitter app if you have it installed.

Start by downloading the starter project. You can find the link at the top or bottom of this tutorial. Then open the project in Android Studio 3.1.2 or greater by going to File > New > Import Project, and selecting the build.gradle file in the root of the project.

There’s one main file you will be working with in this tutorial, and that’s MainActivityPresenter.kt. It’s pretty empty right now, with a couple of helper methods. This file is for you to fill in! You’ll also need to add some things to build.gradle and app/build.gradle, so make sure you can find these too.

Once the starter project finishes loading and building, run the application on a device or emulator.

You can select an image by clicking the camera icon FloatingActionButton in the bottom corner of the screen. If you don’t have an image on hand that has a Twitter handle, feel free to download the one below. You can also go to Twitter and take a screenshot.

Once you have the image selected, you can see it in view, but not much else happens. It’s time for you to implement the ML Kit functionality to make this app fun!

Setting up Firebase

ML Kit uses Firebase, so we need to set up a new app on the Firebase console before we move forward. To create the app, you need a unique app ID. In the app/build.gradle file, you’ll find a variable named uniqueAppId.

    def uniqueAppId = ""

Replace that string with something unique to you. You can make it your name, or something funny like “tinypurplealligators”. Make sure it’s all lowercase, with no spaces or special characters. And don’t forget what you picked! You’ll need it again soon.

Try running the app to make sure it’s okay. You’ll end up with two installs on your device, one with the old app ID, and one with the new one. If this bothers you, feel free to uninstall the old one, or uninstall both since future steps will reinstall this one.

Moving on to the Firebase Console

Open the console, and make sure you’re signed in with a Google account. From there, you need to create a new project. Click on the “Add project” card.

In the screen that pops up, you have to provide a project name. Input TwitterSnap for the name of this project. While you’re there, choose your current country from the dropdown, and accept the terms and conditions.

You should then see the project ready confirmation screen, on which you should hit Continue.

Now that you have the project set up, you need to add it to your Android app. Select Add Firebase to your Android app.

On the next screen, you need to provide the package name. This will use the app ID you changed in the app/build.gradle file. Enter com.raywenderlich.android.twittersnap.YOUR_ID_HERE being sure to replace YOUR_ID_HERE with the unique id you provided earlier. Then click the Register App button.
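If you want to double-check the value before typing it into the console, the naming rule can be sketched in a few lines of Kotlin. This is only an illustration: isValidAppIdSuffix() and firebasePackageName() are hypothetical helpers that are not part of the starter project, and restricting the ID to lowercase letters is a conservative reading of “all lowercase, with no spaces or special characters”.

```kotlin
// Hypothetical helpers for illustration; they are NOT part of the starter project.

// Conservative reading of the tutorial's rule: lowercase letters only.
fun isValidAppIdSuffix(id: String): Boolean =
    id.isNotEmpty() && id.all { it in 'a'..'z' }

// Builds the package name to enter in the Firebase console.
fun firebasePackageName(uniqueAppId: String): String {
    require(isValidAppIdSuffix(uniqueAppId)) {
        "App ID must be lowercase letters only, got \"$uniqueAppId\""
    }
    return "com.raywenderlich.android.twittersnap.$uniqueAppId"
}

fun main() {
    // prints com.raywenderlich.android.twittersnap.tinypurplealligators
    println(firebasePackageName("tinypurplealligators"))
}
```

The package name you register has to match the app ID in app/build.gradle exactly, so a typo here is worth catching early.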

After this, you’ll be able to download a google-services.json file. Download it and place this file in your app/ directory.

Finally, you need to add the required dependencies to your build.gradle files. In the top level build.gradle, add the google services classpath in the dependencies block:

    classpath 'com.google.gms:google-services:3.3.1'

The Firebase console may suggest a newer version number, but go ahead and stick with the numbers given here so that you’ll be consistent with the rest of the tutorial.

Next, add the Firebase Core and Firebase ML vision dependencies to app/build.gradle in the dependencies block.

    implementation 'com.google.firebase:firebase-core:15.0.2'
    implementation 'com.google.firebase:firebase-ml-vision:15.0.0'

Add this line to the bottom of app/build.gradle to apply the Google Services plugin:

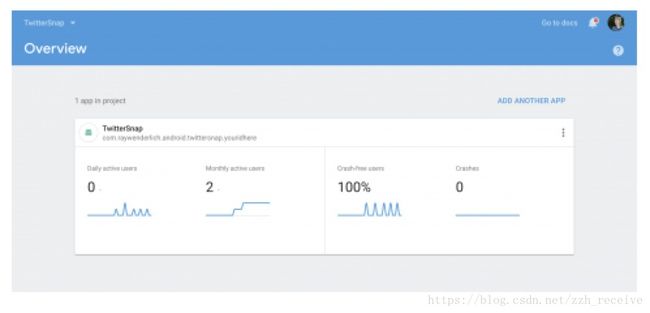

    apply plugin: 'com.google.gms.google-services'

Sync the project Gradle files, then build and run the app to make sure it’s all working. Nothing will change in the app, but once you finish the console’s instructions for adding Firebase to the app, you should see the app activated in the Firebase console. You’ll then also see your app on the Firebase console Overview screen.

Enabling Firebase for in-cloud text recognition

There are a couple of extra steps to complete in order to run text recognition in the cloud. You’ll do this now, as it takes a couple minutes to propagate and be usable on your device. It should then be ready for when you get to that part of the tutorial.

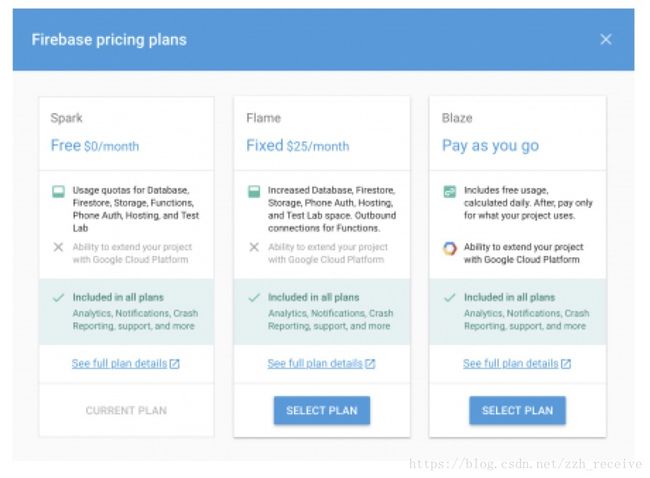

On the Firebase console for your project, ensure you’re on the Blaze plan instead of the default Spark plan. Click on the plan information in the bottom left corner of the screen to change it.

You’ll need to enter payment information for Firebase to proceed with the Blaze plan. Don’t worry, the first 1000 requests are free, so following this tutorial shouldn’t cost you anything. You can switch back to the free Spark plan when you’ve finished the tutorial.

If you’re hesitant to put in payment information, you can just stick with the Spark plan while following the on-device steps in the remainder of the tutorial, and then just skip over the instruction steps below for using ML Kit in the cloud.

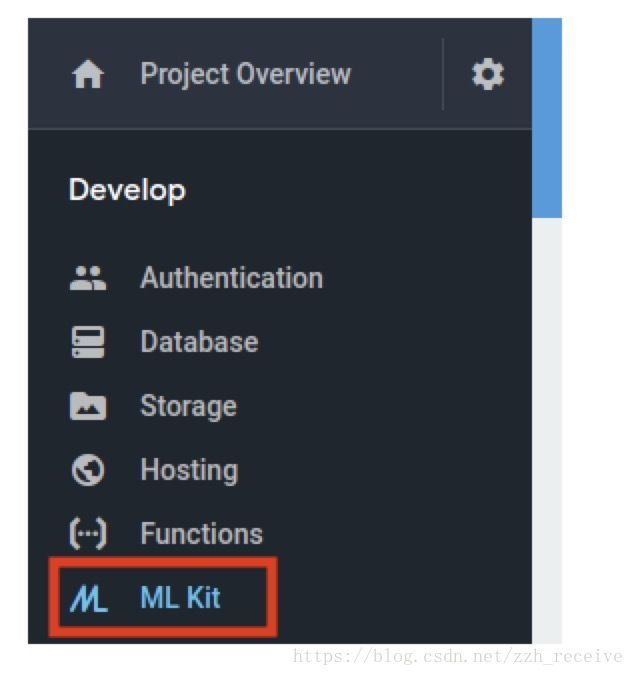

Next, you need to enable the Cloud Vision API. Choose ML Kit in the console menu at the left.

Next, choose Cloud API Usage on the resulting screen.

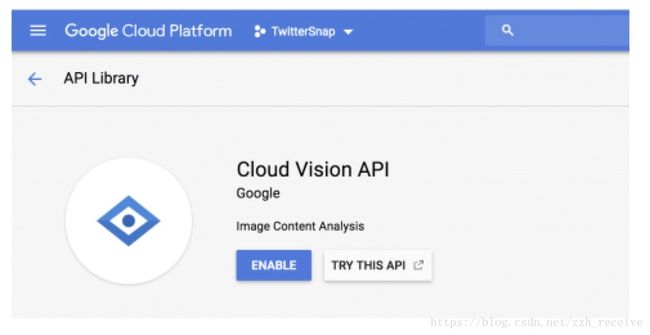

This takes you to the Cloud Vision API screen. Make sure to select your project at the top, and click the Enable button.

In a few minutes, you’ll be able to run your text recognition in the cloud!

Detecting text on-device

Now you get to dig into the code! You’ll start by adding functionality to detect text in the image on-device.

You have the option to run text recognition on both the device and in the cloud, and this flexibility allows you to use what is best for the situation. If there’s no network connectivity, or you’re dealing with sensitive data, running the model on-device might be better.
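That trade-off can be written down as a small decision function. The sketch below is only one possible policy: RecognitionMode and chooseRecognitionMode() are hypothetical names, and neither ML Kit nor the starter project provides them.

```kotlin
// Hypothetical policy for illustration; not part of ML Kit or the starter project.
enum class RecognitionMode { ON_DEVICE, CLOUD }

// Stay on-device when there is no network or the image holds sensitive data;
// otherwise use the cloud model.
fun chooseRecognitionMode(hasNetwork: Boolean, isSensitive: Boolean): RecognitionMode =
    if (!hasNetwork || isSensitive) RecognitionMode.ON_DEVICE else RecognitionMode.CLOUD

fun main() {
    println(chooseRecognitionMode(hasNetwork = false, isSensitive = false)) // ON_DEVICE
    println(chooseRecognitionMode(hasNetwork = true, isSensitive = true))   // ON_DEVICE
    println(chooseRecognitionMode(hasNetwork = true, isSensitive = false))  // CLOUD
}
```

TwitterSnap itself leaves the choice to you: it runs on-device recognition first and offers the cloud run as a second step.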

In MainActivityPresenter.kt, find the runTextRecognition() method to fill in. Add this code to the body of runTextRecognition(). Use Alt + Enter on PC or Option + Return on a Mac to import any missing dependencies.

    view.showProgress()
    val image = FirebaseVisionImage.fromBitmap(selectedImage)
    val detector = FirebaseVision.getInstance().visionTextDetector

This starts by signaling the view to show progress so you have a visual cue that work is being done. Then you instantiate two objects: a FirebaseVisionImage from the bitmap passed in, and a FirebaseVisionTextDetector that you can use to detect the text.

Now you can use that detector to detect the text in the image. Add the following code to the same runTextRecognition() method below the code you added previously. There is one method call, processTextRecognitionResult() that is not implemented yet, and will show an error because of it. Don’t worry, you’ll implement that next.

    detector.detectInImage(image)
        .addOnSuccessListener { texts ->
            processTextRecognitionResult(texts)
        }
        .addOnFailureListener { e ->
            // Task failed with an exception
            e.printStackTrace()
        }

Using the detector, you detect the text by passing in the image. The detectInImage() method returns a task, to which you attach two listeners: one for success and one for failure. In the success listener, you call the method you still need to implement.
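If the chained success and failure callbacks are new to you, this plain Kotlin sketch shows the shape of the pattern. FakeTask is a made-up stand-in for illustration only. It is not the real task class, and it calls its listeners immediately instead of waiting for asynchronous work to finish.

```kotlin
// Made-up stand-in for illustration; NOT the real task type returned by detectInImage().
// It holds either a value or an error and calls the matching listener right away.
class FakeTask<T : Any> private constructor(
    private val value: T?,
    private val error: Exception?
) {
    // Runs the listener only if the task succeeded, then returns this for chaining.
    fun addOnSuccessListener(listener: (T) -> Unit): FakeTask<T> {
        value?.let(listener)
        return this
    }

    // Runs the listener only if the task failed, then returns this for chaining.
    fun addOnFailureListener(listener: (Exception) -> Unit): FakeTask<T> {
        error?.let(listener)
        return this
    }

    companion object {
        fun <T : Any> success(value: T): FakeTask<T> = FakeTask(value, null)
        fun <T : Any> failure(error: Exception): FakeTask<T> = FakeTask<T>(null, error)
    }
}

fun main() {
    // Only the success listener fires: prints "Recognized: @rwenderlich"
    FakeTask.success("@rwenderlich")
        .addOnSuccessListener { text -> println("Recognized: $text") }
        .addOnFailureListener { e -> println("Failed: ${e.message}") }

    // Only the failure listener fires: prints "Failed: no network"
    FakeTask.failure<String>(IllegalStateException("no network"))
        .addOnSuccessListener { text -> println("Recognized: $text") }
        .addOnFailureListener { e -> println("Failed: ${e.message}") }
}
```

Because each listener method returns the task itself, you can attach both listeners in one expression, just as in the detector code.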

Once you have the results, you need to use them. Create the following method:

    private fun processTextRecognitionResult(texts: FirebaseVisionText) {
        view.hideProgress()
        val blocks = texts.blocks
        if (blocks.size == 0) {
            view.showNoTextMessage()
            return
        }
    }

At the start of this method, you tell the view to stop showing the progress, and check whether there is any text to process by checking the size of the text blocks property.

Once you know you have text, you can do something with it. Add the following to the bottom of the processTextRecognitionResult() method:

    blocks.forEach { block ->
        block.lines.forEach { line ->
            line.elements.forEach { element ->
                if (looksLikeHandle(element.text)) {
                    view.showHandle(element.text, element.boundingBox)
                }
            }
        }
    }

The results come back as a nested structure so you can look at them at whatever level of granularity you want. The hierarchy for on-device recognition is block > line > element > text. You iterate through each of these, check whether the text looks like a Twitter handle using a regular expression in the helper method looksLikeHandle(), and show it if it does. Each of these elements has a boundingBox describing where ML Kit found the text in the image. This is what the app uses to draw a box around each detected handle.
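To experiment with this traversal without a device, you can mimic the result shape with plain Kotlin classes. Everything in this sketch is an assumption made for illustration: Block, Line and Element are simplified stand-ins for the Firebase types, and looksLikeHandleSketch() is a guess at what the starter project’s looksLikeHandle() might check (an “@” followed by 1 to 15 letters, digits or underscores).

```kotlin
// Simplified stand-ins for the on-device result types; NOT the Firebase classes.
data class Element(val text: String)
data class Line(val elements: List<Element>)
data class Block(val lines: List<Line>)

// Assumed handle pattern: "@" plus 1 to 15 letters, digits or underscores.
// The starter project's own looksLikeHandle() may use a different expression.
private val handlePattern = Regex("@[A-Za-z0-9_]{1,15}")

// True only when the whole string matches the pattern.
fun looksLikeHandleSketch(text: String): Boolean = handlePattern.matches(text)

// Walks block > line > element and keeps the element texts that look like handles.
fun findHandles(blocks: List<Block>): List<String> =
    blocks.flatMap { it.lines }
        .flatMap { it.elements }
        .map { it.text }
        .filter(::looksLikeHandleSketch)

fun main() {
    val blocks = listOf(
        Block(
            listOf(
                Line(listOf(Element("Follow"), Element("@rwenderlich"))),
                Line(listOf(Element("and"), Element("@kotlin"), Element("today!")))
            )
        )
    )
    println(findHandles(blocks)) // [@rwenderlich, @kotlin]
}
```

The flatMap chain visits the same elements as the nested forEach loops. The loops are the better fit in the app itself, since they let you call view.showBox() at any level along the way.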

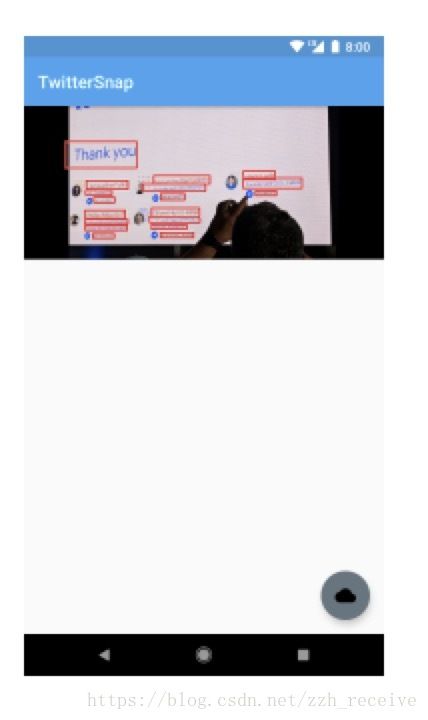

Now build and run the app, select an image containing Twitter handles, and see the results! If you tap on one of these results, it will open the Twitter profile.
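The starter project handles the tap for you. As a rough idea of what is involved, turning a detected handle into a profile address could look like the sketch below. profileUrl() is a hypothetical helper, and the starter project’s own implementation may differ.

```kotlin
// Hypothetical helper for illustration; the starter project's implementation may differ.
// Drops the leading "@" and appends the rest to the Twitter base URL.
fun profileUrl(handle: String): String =
    "https://twitter.com/" + handle.removePrefix("@")

fun main() {
    println(profileUrl("@rwenderlich")) // https://twitter.com/rwenderlich
}
```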

Compare your result with the screenshot to verify that the bounding boxes surround the Twitter handles.

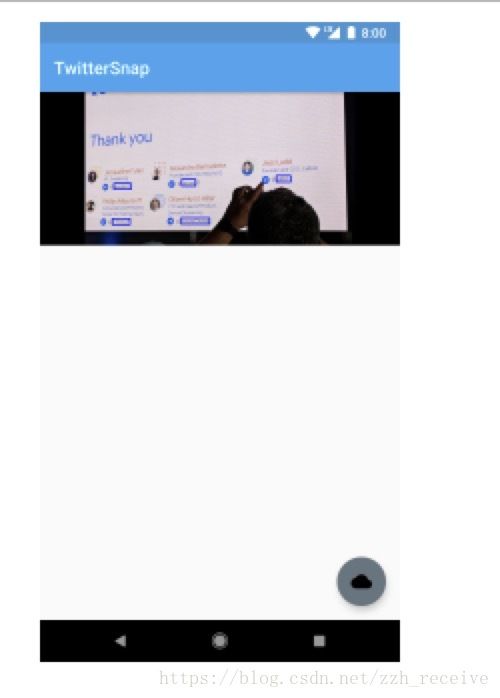

As a bonus, the view also has a generic showBox(boundingBox: Rect?) method. You can use this at any stage of the loop to show the outline of any of these groups. For example, in the line forEach, you can call view.showBox(line.boundingBox) to show boxes for all the lines found. Here’s what it would look like if you did that with the line element:

Detecting text in the cloud

After you run the on-device text recognition, you may have noticed that the image on the FAB changes to a cloud icon. This is what you’ll tap to run the in-cloud text recognition. Time to make that button do some work!

When running text recognition in the cloud, you receive more detailed and accurate predictions. You also avoid doing all that extra processing on-device, saving some of that power. Make sure you completed the Enabling Firebase for in-cloud text recognition section above so you can get started.

The first method you’ll implement is very similar to what you did for the on-device recognition. Add the following code to the runCloudTextRecognition() method:

    view.showProgress()
    // 1
    val options = FirebaseVisionCloudDetectorOptions.Builder()
        .setModelType(FirebaseVisionCloudDetectorOptions.LATEST_MODEL)
        .setMaxResults(15)
        .build()
    val image = FirebaseVisionImage.fromBitmap(selectedImage)
    // 2
    val detector = FirebaseVision.getInstance()
        .getVisionCloudDocumentTextDetector(options)
    detector.detectInImage(image)
        .addOnSuccessListener { texts ->
            processCloudTextRecognitionResult(texts)
        }
        .addOnFailureListener { e ->
            e.printStackTrace()
        }

There are a couple of small differences from what you did for on-device recognition.

1. The first is that you’re passing some extra options to your detector. By building these options with a FirebaseVisionCloudDetectorOptions builder, you’re saying that you want the latest model, and that you want to limit the results to 15.

2. When you request a detector, you are also specifying that you want a FirebaseVisionCloudDocumentTextDetector, which you pass those options to. You handle the success and failure cases in the same way as on-device.

You will be processing the results similar to before, but diving in a little deeper using information that comes back from the in-cloud processing. Add the following nested class and helper functions to the presenter:

    class WordPair(val word: String, val handle: FirebaseVisionCloudText.Word)

    private fun processCloudTextRecognitionResult(text: FirebaseVisionCloudText?) {
        view.hideProgress()
        if (text == null) {
            view.showNoTextMessage()
            return
        }
        text.pages.forEach { page ->
            page.blocks.forEach { block ->
                block.paragraphs.forEach { paragraph ->
                    paragraph.words
                        .zipWithNext { a, b ->
                            // 1
                            val word = wordToString(a) + wordToString(b)
                            // 2
                            WordPair(word, b)
                        }
                        .filter { looksLikeHandle(it.word) }
                        .forEach {
                            // 3
                            view.showHandle(it.word, it.handle.boundingBox)
                        }
                }
            }
        }
    }

    private fun wordToString(word: FirebaseVisionCloudText.Word): String =
        word.symbols.joinToString("") { it.text }

If you look at the results, you’ll notice something: the structure of the text recognition result is slightly different from the one you got on-device. The cloud model provides more detailed information than you had before. The hierarchy you’ll see is page > block > paragraph > word > symbol. Because of this, you need to do a little extra work to process it.

1. With this granularity, the “@” and the other characters of a handle come back as separate words. Because of that, you take each word, create a string from its symbols using wordToString(), and concatenate each pair of neighboring words.

2. The new class you see, WordPair, is a way to give names to the pair of objects: the string you just created, and the Firebase object for the handle.

3. From there, you display it the same way as in the on-device code.
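The pairing step is easy to try out in isolation. In the sketch below, Symbol and Word are simplified stand-ins for the cloud result types, wordOf() is a hypothetical helper for building test data, and the handle pattern is an assumption about what looksLikeHandle() accepts.

```kotlin
// Simplified stand-ins for the word > symbol levels of the cloud result;
// these are NOT the Firebase classes.
data class Symbol(val text: String)
data class Word(val symbols: List<Symbol>)

// Hypothetical test-data helper: splits a string into one Symbol per character.
fun wordOf(text: String): Word = Word(text.map { Symbol(it.toString()) })

// Same idea as the tutorial's wordToString(): join the symbols back together.
fun wordToString(word: Word): String = word.symbols.joinToString("") { it.text }

// Assumed handle pattern; the starter project's looksLikeHandle() may differ.
private val handlePattern = Regex("@[A-Za-z0-9_]{1,15}")

// Concatenates each pair of neighboring words and keeps the pairs that form a handle.
fun pairedHandles(words: List<Word>): List<String> =
    words.zipWithNext { a, b -> wordToString(a) + wordToString(b) }
        .filter { handlePattern.matches(it) }

fun main() {
    val words = listOf("Follow", "@", "rwenderlich", "on", "Twitter").map(::wordOf)
    // Pairs tried: "Follow@", "@rwenderlich", "rwenderlichon", "onTwitter"
    println(pairedHandles(words)) // [@rwenderlich]
}
```

One consequence of this approach is that it only finds handles split into exactly two neighboring words. In this sketch, a handle that arrives as a single word, or one split into three or more pieces, never matches the pattern.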

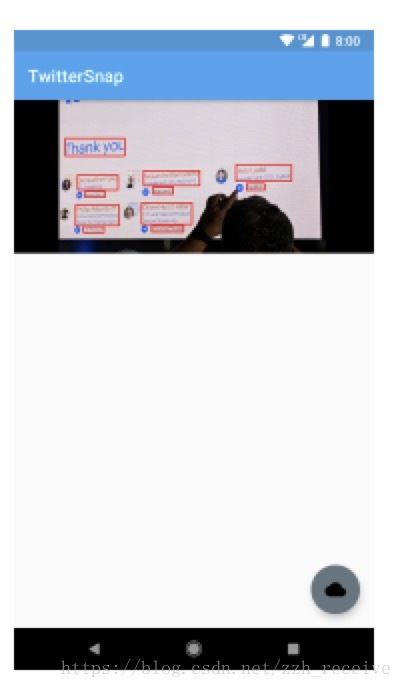

Build and run the project, and test it out! After you pick an image, and run the recognition on the device, click the cloud icon in the bottom corner to run the recognition in the cloud. You may see results that the on-device recognition missed!

Again, you can use showBox(boundingBox: Rect?) at any level of the loops to see what this level detects. This has boxes around every paragraph:

Congratulations! You can now detect text from an image using ML Kit on both the device and in the cloud. Imagine the possibilities for where you can use this and other parts of ML Kit!

Feel free to download the completed project to check it out. Find the download link at the top or bottom of this tutorial.

Note: You must complete the “Setting up Firebase” and “Enabling Firebase for in-cloud text recognition” sections in order for the final project to work. Remember to also go back to the Firebase Spark plan if you upgraded to the Blaze plan in the Firebase console.

ML Kit doesn’t stop here! You can also use it for face detection, barcode scanning, image labeling, and landmark recognition with similar ease. Be on the lookout for possible future additions to ML Kit as well. Google has talked about adding APIs for face contour and smart replies. Check out these resources as you continue on your ML Kit journey:

- ML Kit documentation

- Google’s ML Kit Code Lab

- Exploring Firebase ML Kit on Android

- Firebase Machine Learning

Feel free to share your feedback and findings, or ask any questions, in the comments below or in the forums. I hope you enjoyed getting started with text recognition using ML Kit!

Happy coding!
