A sign language letter recognition example (Sign Language MNIST) with Python 3.6 + TensorFlow 2.2

unzip_save.py

```python
import zipfile
import csv

import numpy as np

# Unzip the datasets
local_zip1 = 'E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_train.zip'  # path of the dataset archive
with zipfile.ZipFile(local_zip1, 'r') as zip_ref1:  # open the archive for reading
    zip_ref1.extractall('E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_train/')  # extract into this directory

local_zip2 = 'E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_test.zip'  # path of the dataset archive
with zipfile.ZipFile(local_zip2, 'r') as zip_ref2:  # open the archive for reading
    zip_ref2.extractall('E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_test/')  # extract into this directory
```

```python
# # Alternative on Google Colab: upload the files from the local machine
# from google.colab import files
# uploaded = files.upload()

def get_data(filename):
    with open(filename) as training_file:
        # csv.reader(file, delimiter=',', quotechar='"')
        # delimiter is the field separator; quotechar is the quote character: a field that itself
        # contains the separator is wrapped in quotes, so it is read as one field.
        csv_reader = csv.reader(training_file, delimiter=',')
        first_line = True
        temp_images = []
        temp_labels = []
        for row in csv_reader:
            if first_line:
                first_line = False  # skip the header row (label, pixel1, ..., pixel784)
            else:
                temp_labels.append(row[0])  # column 0: class label
                image_data = row[1:785]     # columns 1-784: pixel values
                # split the 784 values into 28 rows of 28 values, i.e. one 28x28 image
                image_data_as_array = np.array_split(image_data, 28)
                temp_images.append(image_data_as_array)  # append one 28x28 image to the list
    images = np.array(temp_images).astype('float')  # list of images -> 3-D ndarray of shape (N, 28, 28)
    labels = np.array(temp_labels).astype('float')
    return images, labels
```
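To make the parsing in `get_data` concrete, the sketch below runs the same steps on an in-memory CSV instead of the real file. The CSV text and its pixel values are made up for illustration. It shows the quoting rule described in the comment, and that `np.array_split(..., 28)` produces the same 28x28 layout as a plain `reshape(28, 28)`.

```python
import csv
import io

import numpy as np

# Quoting: a field that contains the delimiter is wrapped in quotechar, so it stays one field.
quoted = list(csv.reader(io.StringIO('a,"b,c",d\n'), delimiter=',', quotechar='"'))
print(quoted)  # [['a', 'b,c', 'd']]

# A made-up file in the sign_mnist layout: a header row, then label + 784 pixel values.
header = 'label,' + ','.join('pixel%d' % i for i in range(1, 785))
row = '24,' + ','.join(str(i % 256) for i in range(784))
reader = csv.reader(io.StringIO(header + '\n' + row + '\n'), delimiter=',')
next(reader)           # skip the header row
fields = next(reader)  # every field is still a string at this point

label = float(fields[0])
split_image = np.array(np.array_split(fields[1:785], 28)).astype('float')  # as in get_data
reshaped = np.array(fields[1:785], dtype=float).reshape(28, 28)           # equivalent one-liner

print(label, split_image.shape)               # 24.0 (28, 28)
print(np.array_equal(split_image, reshaped))  # True
print(reshaped[1, 0])                         # 28.0: pixel k sits at row k // 28, column k % 28
```

`reshape` is the more direct choice. `array_split` only gives the same result here because 784 divides evenly into 28 rows.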

```python
training_images, training_labels = get_data('E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_train/sign_mnist_train.csv')
testing_images, testing_labels = get_data('E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_test/sign_mnist_test.csv')

# Save the processed data
np.save("E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_train/training_images.npy", training_images)
np.save("E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_train/training_labels.npy", training_labels)
np.save("E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_test/testing_images.npy", testing_images)
np.save("E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_test/testing_labels.npy", testing_labels)

print(training_images.shape)
print(training_labels.shape)
print(testing_images.shape)
print(testing_labels.shape)
```

model_training_fit.py

```python
import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow INFO and WARNING messages, show only errors

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Load the data
training_images = np.load("E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_train/training_images.npy")
training_labels = np.load("E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_train/training_labels.npy")
testing_images = np.load("E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_test/testing_images.npy")
testing_labels = np.load("E:/Python/pythonProject_1/sign_language_mnist/tmp/sign_mnist_test/testing_labels.npy")

# Preprocessing: add a channel axis, (N, 28, 28) -> (N, 28, 28, 1)
training_images = np.expand_dims(training_images, axis=3)
testing_images = np.expand_dims(testing_images, axis=3)
```
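The `.npy` round trip and the `expand_dims` call can be checked on a small stand-in array. The sketch below writes a random (N, 28, 28) array to a temporary directory rather than the author's paths. It then loads the array back and adds the channel axis. N = 5 is arbitrary.

```python
import os
import tempfile

import numpy as np

images = np.random.rand(5, 28, 28)  # stand-in for training_images: N=5 grayscale 28x28 images

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'training_images.npy')
    np.save(path, images)  # .npy stores the dtype and shape along with the data
    loaded = np.load(path)

print(np.array_equal(images, loaded), loaded.dtype, loaded.shape)  # True float64 (5, 28, 28)

with_channel = np.expand_dims(loaded, axis=3)  # Conv2D expects (batch, height, width, channels)
print(with_channel.shape)  # (5, 28, 28, 1)
```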

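```python
training_datagen = ImageDataGenerator(
    # data augmentation
    rescale=1. / 255,       # scale pixel values from 0-255 to 0-1
    rotation_range=40,      # random rotation, up to 40 degrees
    width_shift_range=0.2,  # random horizontal shift (fraction of the width)
    height_shift_range=0.2, # random vertical shift (fraction of the height)
    shear_range=0.2,        # random shear (angle in degrees)
    zoom_range=0.2,         # random zoom
    horizontal_flip=True,   # random left-right flip
    fill_mode='nearest'     # fill pixels exposed by the transforms with the nearest pixel value
)

validation_datagen = ImageDataGenerator(
    rescale=1. / 255        # validation data is only rescaled, not augmented
)

print(training_images.shape)
print(testing_images.shape)
```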
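Two of these options are simple enough to spell out with NumPy. The sketch below illustrates what `rescale=1. / 255` and a horizontal flip do to one 28x28 image. It is not Keras' implementation. The other options (rotation, shift, shear, zoom) apply random affine transforms and are left out. The random image is made up.

```python
import numpy as np

image = np.random.randint(0, 256, size=(28, 28, 1)).astype('float')  # pixel values 0-255, like the CSV data

rescaled = image * (1. / 255)   # rescale: every pixel is multiplied by 1/255 -> range [0, 1]
flipped = rescaled[:, ::-1, :]  # horizontal flip: reverse the width (column) axis

print(rescaled.min() >= 0.0, rescaled.max() <= 1.0)          # True True
print(np.array_equal(flipped[:, 0, :], rescaled[:, -1, :]))  # True: the first column is now the old last column
```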

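```python
# ======== Model construction ========
model = tf.keras.models.Sequential([
    # 64 filters of size 3x3, ReLU activation, input: one 28x28 grayscale image
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    tf.keras.layers.MaxPooling2D(2, 2),  # 2x2 max pooling: halves height and width, keeps the strongest responses
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),  # 64 filters of size 3x3, ReLU activation
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.Flatten(),  # flatten the feature maps into a 1-D vector for the dense layers
    tf.keras.layers.Dense(128, activation='relu'),  # fully connected hidden layer: 128 units, ReLU
    # output layer: 26 classes for English letters (a digit classifier would use 10);
    # softmax turns the outputs into probabilities in [0, 1] that sum to 1
    tf.keras.layers.Dense(26, activation='softmax')
])
```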
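As a check on the architecture, the output shapes and parameter counts can be worked out by hand. They should match what `model.summary()` prints for this model. The sketch assumes the Keras defaults for these layers: 'valid' padding and stride 1 for `Conv2D`, and a stride equal to the pool size for `MaxPooling2D`. The helper names are hypothetical.

```python
def conv2d(h, w, c_in, filters, k=3):
    """'valid' kxk convolution, stride 1: each filter has k*k*c_in weights plus one bias."""
    return (h - k + 1, w - k + 1, filters), (k * k * c_in + 1) * filters

def max_pool(h, w, c, p=2):
    """pxp max pooling with stride p (leftover rows/columns dropped), no parameters."""
    return (h // p, w // p, c), 0

def dense(n_in, units):
    """Fully connected layer: n_in weights per unit plus one bias per unit."""
    return units, (n_in + 1) * units

shape, p1 = conv2d(28, 28, 1, 64)      # (26, 26, 64), 640 parameters
shape, _ = max_pool(*shape)            # (13, 13, 64)
shape, p2 = conv2d(*shape, 64)         # (11, 11, 64), 36928 parameters
shape, _ = max_pool(*shape)            # (5, 5, 64)
flat = shape[0] * shape[1] * shape[2]  # Flatten: 1600 values
units, p3 = dense(flat, 128)           # 204928 parameters
units, p4 = dense(units, 26)           # 3354 parameters
total = p1 + p2 + p3 + p4
print(total)  # 245850
```

Most of the parameters sit in the first `Dense` layer, because it connects all 1600 flattened values to each of its 128 units.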

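```python
# ======== Compile the model ========
model.compile(
    optimizer='adam',
    # cross-entropy for integer class labels (not one-hot);
    # binary_crossentropy would only fit two-class / multi-label outputs
    loss='sparse_categorical_crossentropy',
    metrics=['accuracy']
)
```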
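`sparse_categorical_crossentropy` takes each label as a plain class index (0-25 here) instead of a one-hot vector. The loss for one sample is minus the log of the softmax probability assigned to the true class. The NumPy sketch below uses three made-up scores (three classes instead of 26, to keep it readable). It checks that the result equals the one-hot (categorical) form of the same loss.

```python
import numpy as np

def softmax(logits):
    """Turn raw scores into probabilities in [0, 1] that sum to 1."""
    z = np.exp(logits - np.max(logits))  # subtract the max for numerical stability
    return z / z.sum()

def sparse_categorical_crossentropy(label, probs):
    """Loss for one sample whose label is an integer class index."""
    return -np.log(probs[int(label)])

probs = softmax(np.array([2.0, 1.0, 0.1]))          # made-up scores for 3 classes
loss = sparse_categorical_crossentropy(0.0, probs)  # labels are stored as floats, like training_labels

one_hot = np.array([1.0, 0.0, 0.0])                 # the same label written as a one-hot vector
categorical = -np.sum(one_hot * np.log(probs))      # the categorical_crossentropy form
print(round(float(probs.sum()), 6), np.isclose(loss, categorical))  # 1.0 True
```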

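```python
# ======== Train the model ========
# Note that this may take some time.
# Model.fit accepts generators directly; fit_generator is deprecated since TF 2.1.
batch_size = 32
history = model.fit(
    training_datagen.flow(training_images, training_labels, batch_size=batch_size),
    steps_per_epoch=int(np.ceil(len(training_images) / batch_size)),  # a whole number of batches
    epochs=15,
    validation_data=validation_datagen.flow(testing_images, testing_labels, batch_size=batch_size),
    validation_steps=int(np.ceil(len(testing_images) / batch_size))
)

model.save('E:/Python/pythonProject_1/sign_language_mnist/model.h5')  # save the model

# Evaluate on the test set: compare the predictions with the ground-truth labels and report loss and accuracy.
# Rescale by 1/255 here too, so the inputs match what the model saw during training.
model.evaluate(testing_images / 255., testing_labels)
```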
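`steps_per_epoch` is a number of batches, so it should be an integer, and `len(training_images) / 32` is generally not one. Rounding up covers every sample, with a smaller final batch. The minimal sketch below uses an arbitrary sample count of 1000, and the helper names are hypothetical.

```python
import math

def steps_per_epoch(n_samples, batch_size):
    """Number of batches needed to see every sample once (the last batch may be smaller)."""
    return math.ceil(n_samples / batch_size)

def batch_sizes(n_samples, batch_size):
    """Sizes of the batches in one epoch."""
    steps = steps_per_epoch(n_samples, batch_size)
    return [min(batch_size, n_samples - i * batch_size) for i in range(steps)]

print(1000 / 32)                  # 31.25: not a valid number of steps
print(steps_per_epoch(1000, 32))  # 32
print(batch_sizes(1000, 32)[-1])  # 8: the last batch holds the remaining samples
```

This rounded-up count is also the length of the iterator returned by `ImageDataGenerator.flow()`, which yields a final partial batch.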

```python
# -----------------------------------------------------------
# Retrieve the per-epoch results on the training and validation data
# -----------------------------------------------------------
acc = history.history['accuracy']
val_acc = history.history['val_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(len(acc))  # number of epochs

# -----------------------------------------------------------
# Plot training and validation accuracy per epoch
# -----------------------------------------------------------
plt.plot(epochs, acc, 'r', label="train_acc")
plt.plot(epochs, val_acc, 'b', label="val_acc")
plt.title("Training and validation accuracy")
plt.legend()
plt.grid(ls='--')  # dashed grid
plt.show()
# The curves look like straight lines because there are too few epochs.

# -----------------------------------------------------------
# Plot training and validation loss per epoch
# -----------------------------------------------------------
plt.plot(epochs, loss, 'r', label="train_loss")
plt.plot(epochs, val_loss, 'b', label="val_loss")
plt.title("Training and validation loss")
plt.legend()
plt.grid(ls='--')  # dashed grid
plt.show()
# The curves look like straight lines because there are too few epochs.
```

Training output (last epoch):

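```
Epoch 15/15
858/857 [==============================] - 25s 29ms/step - loss: 0.7643 - accuracy: 0.7437 - val_loss: 0.3325 - val_accuracy: 0.8799
```

Evaluation result. This run called `model.evaluate(testing_images, testing_labels)` on test images that had not been rescaled by 1/255, unlike the training and validation data. That mismatch explains the very large loss, and why the accuracy (0.6163) is lower than the final val_accuracy (0.8799) on the same test set:

```
225/225 [==============================] - 1s 6ms/step - loss: 240.8037 - accuracy: 0.6163
```

predict.py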

# -*- coding: UTF-8 -*-

import numpy as np

import matplotlib.image as mpig

from tensorflow import keras

from PIL import Image

model = keras.models.load_model('E:/Python/pythonProject_1/sign_language_mnist/model.h5')

im = Image.open('E:/Python/pythonProject_1/sign_language_mnist/25.png')

im_resized = im.resize((28, 28))

img = im_resized.convert('L')

img.save('E:/Python/pythonProject_1/sign_language_mnist/25.png')

img = mpig.imread('E:/Python/pythonProject_1/sign_language_mnist/25.png')

img = img * 255

img = img.astype(np.float64)

images = np.expand_dims(img, axis=0) # 将三维数组扩展到3维

images = np.expand_dims(images, axis=3) # 将三维数组扩展到4维

classes = model.predict(images, batch_size=26)

result = np.argmax(classes, axis=1)

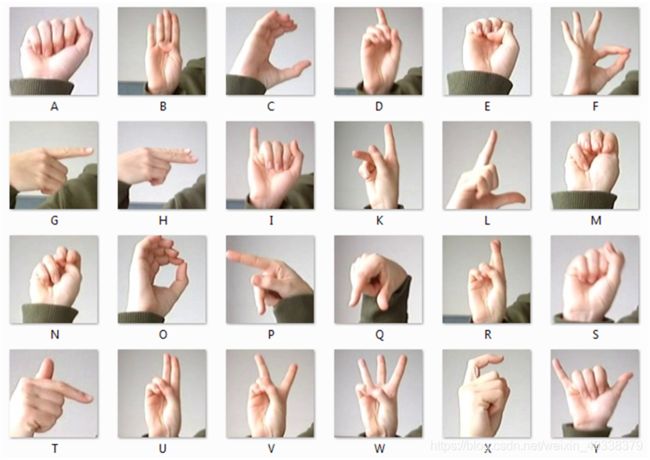

print(result)24号美式字母手势,代表字母“Y”
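The printed result is a class index. Sign Language MNIST numbers the letters A-Z as 0-25. Indices 9 (J) and 25 (Z) never occur because those letters require motion, which is why there are 24 gestures. The small helper below turns an index back into its letter. The name `index_to_letter` is hypothetical.

```python
import string

def index_to_letter(index):
    """Map a Sign Language MNIST class index (0-25) to its letter A-Z."""
    index = int(index)  # accepts NumPy integers from np.argmax as well as float labels
    if not 0 <= index <= 25:
        raise ValueError('class index must be between 0 and 25')
    if index in (9, 25):
        raise ValueError('J (9) and Z (25) are not in the dataset: they require motion')
    return string.ascii_uppercase[index]

print(index_to_letter(24))  # Y
print(index_to_letter(0))   # A
```

With the `result` array from predict.py, `index_to_letter(result[0])` gives the predicted letter.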

The 24 American Sign Language letter gestures

After preprocessing:

Result:

```
>>> print(result)
[24]
```

Notes:

Dataset link: https://www.kaggle.com/datamunge/sign-language-mnist