【朴素贝叶斯学习记录】西瓜书数据集朴素贝叶斯实现

朴素贝叶斯学习记录2

本文主要根据西瓜书的朴素贝叶斯公式推导以及例题解答为基础进行学习。

该全过程主要解决了以下几个问题:

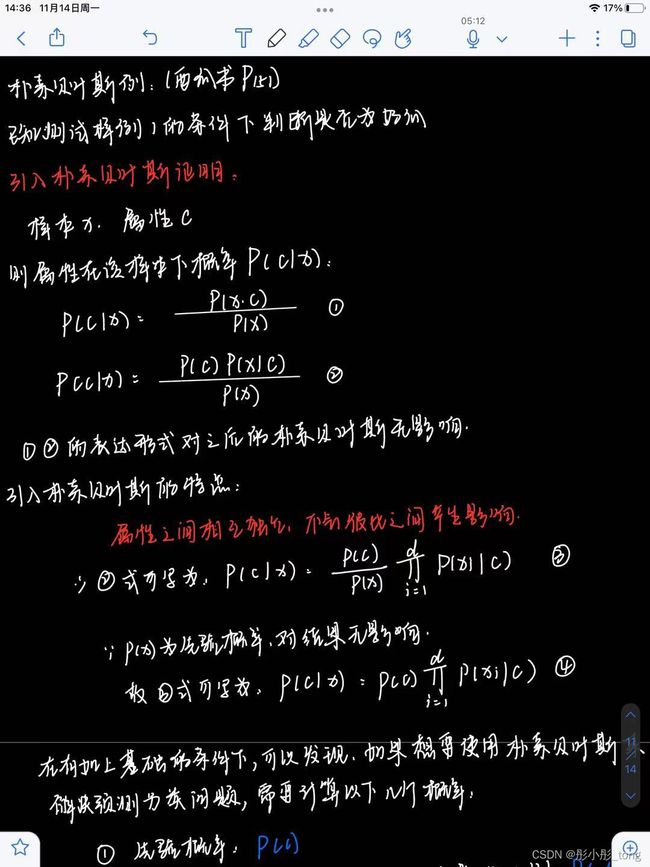

- 朴素贝叶斯的数学表达式是如何求得

- 朴素贝叶斯与贝叶斯的差别在哪里

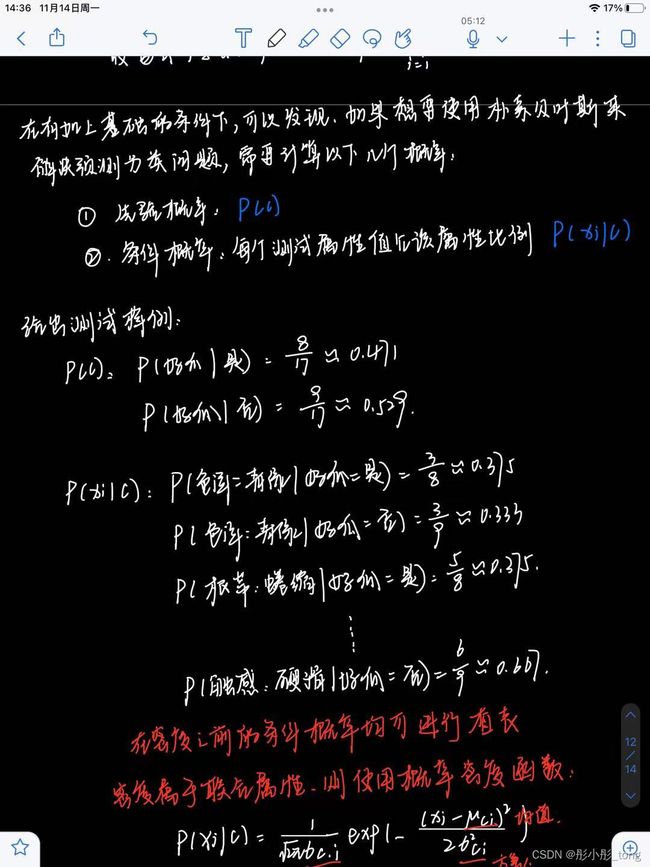

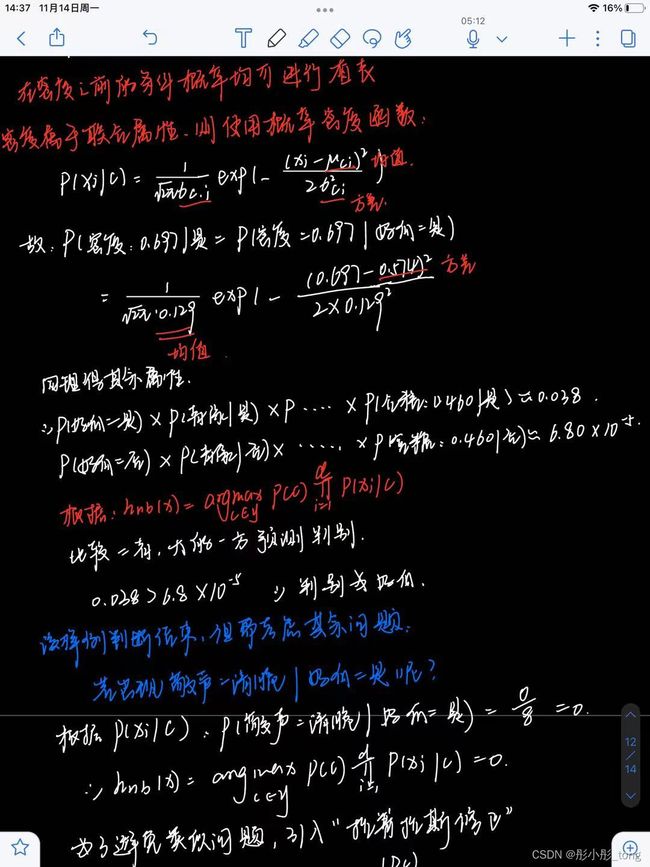

- 朴素贝叶斯的实例问题解决

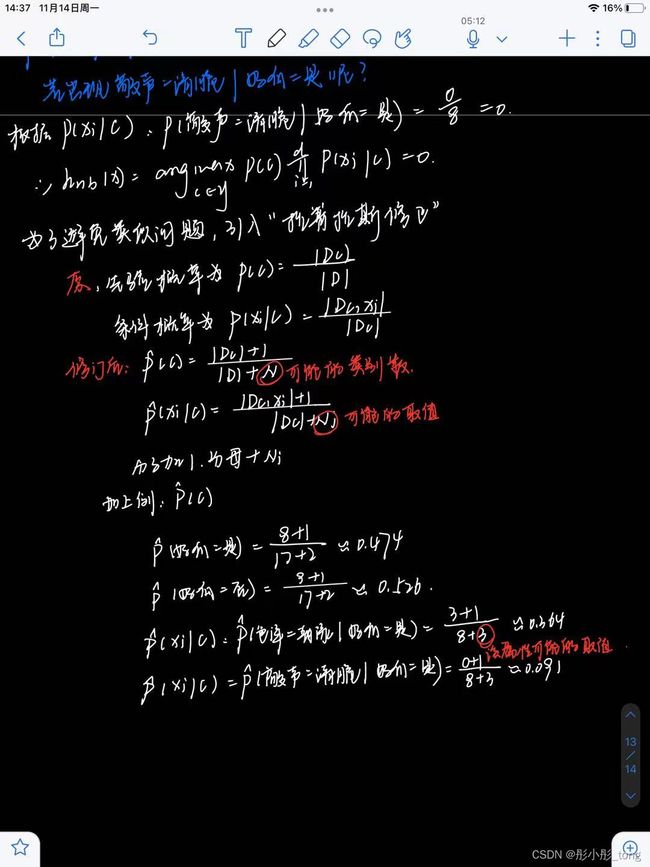

- 拉普拉斯平滑的提出与应用实例解决

- 朴素贝叶斯手推代码

朴素贝叶斯手写代码过程

import numpy as np

import pandas as pd

data=pd.read_csv("D:/大三上课程资料/机器学习/数据集/西瓜书朴素贝叶斯数据集.csv",header=None,encoding='gb2312')

lemon1=np.array(data)

data=lemon1.tolist()

data

def set_data():

data=pd.read_csv("D:/大三上课程资料/机器学习/数据集/西瓜书朴素贝叶斯数据集.csv", header=None, encoding='gb2312')

lemon1=np.array(data)

data=lemon1.tolist()

property_list = [

'青绿', '乌黑', '浅白',

'蜷缩', '稍缩', '硬挺',

'浊响', '沉闷', '清脆',

'清晰', '稍糊', '模糊',

'凹陷', '稍陷', '稍凹',

'硬滑', '软粘']

return data,property_list

def get_data(data,col,row,n):

N=[0]*n

for n in range(0,n):

N[n]=float(data[row+n][col])

return N

x=np.mean(get_data(data,6,0,5))

property_list = [

'青绿', '乌黑', '浅白',

'蜷缩', '稍缩', '硬挺',

'浊响', '沉闷', '清脆',

'清晰', '稍糊', '模糊',

'凹陷', '稍陷', '稍凹',

'硬滑', '软粘']

index=property_list.index('软粘')

```python

def train(data,property_list):

train_len=len(data)

goodmelon=0

for sample in data:

if sample=='是':

goodmelon+=1

P_C_good=goodmelon/train_len

PX_C_Positive=[]

PX_C_Negative=[]

for property in property_list:

if(property=="硬滑")or (property=="软粘"):

Sample_P=goodmelon+2

Sample_N=train_len-goodmelon+2

else:

Sample_P=goodmelon+3

Sample_N=train_len-goodmelon+3

Pos_Num=1

Neg_Num=1

for row in range(0,len(data)):

if property in data[row]:

if data[row][-1]=='是':

Pos_Num+=1

else:

Neg_Num+=1

PX_C_Positive.append(Pos_Num/Sample_P)

PX_C_Negative.append(Neg_Num/Sample_N)

PX_C_Positive.append(np.mean(get_data(data,6,0,goodmelon)))

PX_C_Positive.append(np.var(get_data(data,6,0,goodmelon))**(1/2))

PX_C_Negative.append(np.mean(get_data(data,6,goodmelon,train_len-goodmelon)))

PX_C_Negative.append(np.var(get_data(data,6,goodmelon,train_len-goodmelon)))

PX_C_Positive.append(np.mean(get_data(data,7,0,goodmelon)))

PX_C_Positive.append(np.var(get_data(data,7,0,goodmelon)))

PX_C_Negative.append(np.mean(get_data(data,7,goodmelon,train_len-goodmelon)))

PX_C_Negative.append(np.var(get_data(data,7,goodmelon,train_len-goodmelon)))

return PX_C_Positive,PX_C_Negative

def forecast(data,PX_C_Positive,property_list,PX_C_Negative):

forca_Pos=0

forca_Neg=0

for property_data in data[:-1]:

if property_data in property_list:

index=property_list.index(property_data)

forca_Pos+=np.log(PX_C_Pos[index])

forca_Pos+=np.log(PX_C_Neg[index])

forca_Pos+=np.log(((2 * np.pi) ** (-1 / 2) * PX_C_Pos[-4]) ** (-1)) + (

-1 / 2) * ((float(data[-2]) - PX_C_Pos[-4]) ** 2) / (

PX_C_Pos[-3] ** 2)

forca_Pos+=np.log(((2 * np.pi) ** (-1 / 2) * PX_C_Pos[-2]) ** (-1)) + (

-1 / 2) * ((float(data[-1]) - PX_C_Pos[-2]) ** 2) / (

PX_C_Pos[-1] ** 2)

forca_Neg+=np.log(((2 * np.pi) ** (-1 / 2) * PX_C_Pos[-4]) ** (-1)) + (

-1 / 2) * ((float(data[-2]) - PX_C_Pos[-4]) ** 2) / (

PX_C_Pos[-3] ** 2)

forca_Neg+=np.log(((2 * np.pi) ** (-1 / 2) * PX_C_Pos[-2]) ** (-1)) + (

-1 / 2) * ((float(data[-1]) - PX_C_Pos[-2]) ** 2) / (

PX_C_Pos[-1] ** 2)

if PX_C_Positive>PX_C_Negative:

return '是'

else:

return '否'

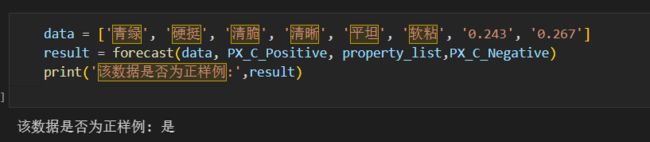

if __name__ == "__main__":

data,property_list=set_data()

PX_C_Positive,PX_C_Negative=train(data,property_list)

data = ['青绿', '硬挺', '清脆', '清晰', '平坦', '软粘', '0.243', '0.267']

result = forecast(data, PX_C_Positive, property_list,PX_C_Negative)

print('该数据是否为正样例:',result)