VGG11使用卷积层代替全连接层进行测试

一、使用全连接层训练好的VGG11模型改为全卷积层进行测试,在加载训练模型报错问题

size mismatch报错

RuntimeError: Error(s) in loading state_dict for VGG:

size mismatch for classifier.0.weight: copying a param with shape torch.Size([4096, 25088]) from checkpoint, the shape in current model is torch.Size([4096, 512, 7, 7]).

size mismatch for classifier.3.weight: copying a param with shape torch.Size([4096, 4096]) from checkpoint, the shape in current model is torch.Size([4096, 4096, 1, 1]).

size mismatch for classifier.6.weight: copying a param with shape torch.Size([5, 4096]) from checkpoint, the shape in current model is torch.Size([5, 4096, 1, 1]).

报错代码

weights_path = r"vgg11Net.pth"

state_dict = torch.load(weights_path)

model.load_state_dict(state_dict)

问题分析:

model.load_state_dict(state_dict)加载模型时,全连接层训练的模型权重torch.size和卷积层的torch.size维度是不同的。

二、解决方法

我们要将训练好的模型的权重进行升维的操作,才能与卷积层的torch.size匹配起来。

全连接层改为卷积层代码

self.classifier = nn.Sequential(

# nn.Linear(512*7*7, 4096), # classifier.0

# nn.ReLU(True),

# nn.Dropout(p=0.5),

# nn.Linear(4096, 4096), # classifier.3

# nn.ReLU(True),

# nn.Dropout(p=0.5),

# nn.Linear(4096, num_classes) # classifier.6

# 全连接层与卷积层要对应起来,可不使用dropout,可以使用池化层将两者对应起来

nn.Conv2d(in_channels=512, out_channels=4096, kernel_size=7, stride=1), # classifier.0

nn.ReLU(True),

nn.Dropout(p=0.5),

nn.Conv2d(in_channels=4096, out_channels=4096, kernel_size=1, stride=1), # classifier.3

nn.ReLU(True),

nn.Dropout(p=0.5),

nn.Conv2d(in_channels=4096, out_channels=num_classes, kernel_size=1, stride=1), # classifier.6

)

增加的代码

在加载模型state_dict = torch.load(weights_path)后面添加以下代码,使用reshape()来改变加载训练模型权重的维度,与卷积层所需要的维度一样。

state_dict = torch.load(weights_path)

for k, v in state_dict.items():

if "classifier.0.weight" == k:

state_dict[k] = v.reshape(4096, 512, 7, 7)

if "classifier.3.weight" == k:

state_dict[k] = v.reshape(4096, 4096, 1, 1)

if "classifier.6.weight" == k:

state_dict[k] = v.reshape(num_classes, 4096, 1, 1)

model.load_state_dict(state_dict)

修改之后,就可以用全卷积进行测试了。

三、为什么测试要使用卷积层代替全连接层及注意事项

1. VGG使用全连接层训练时图片为224*224,所以使用全连接层模型进行测试也要缩放图片大小224*224。

2. 使用全卷积层则不需要对测试的图片进行缩放,可以直接使用原图,但是输入的图片的最短边不能小于224(如果图片最短边小于224,可以在替换的第一层卷积加上一个padding,保证图片有足够的尺寸大小来被7*7的卷积核进行卷积操作)。

3. 使用全卷积最后经过softmax得到的输出权重不一定是一维的向量(也可以是二维和三维的)。

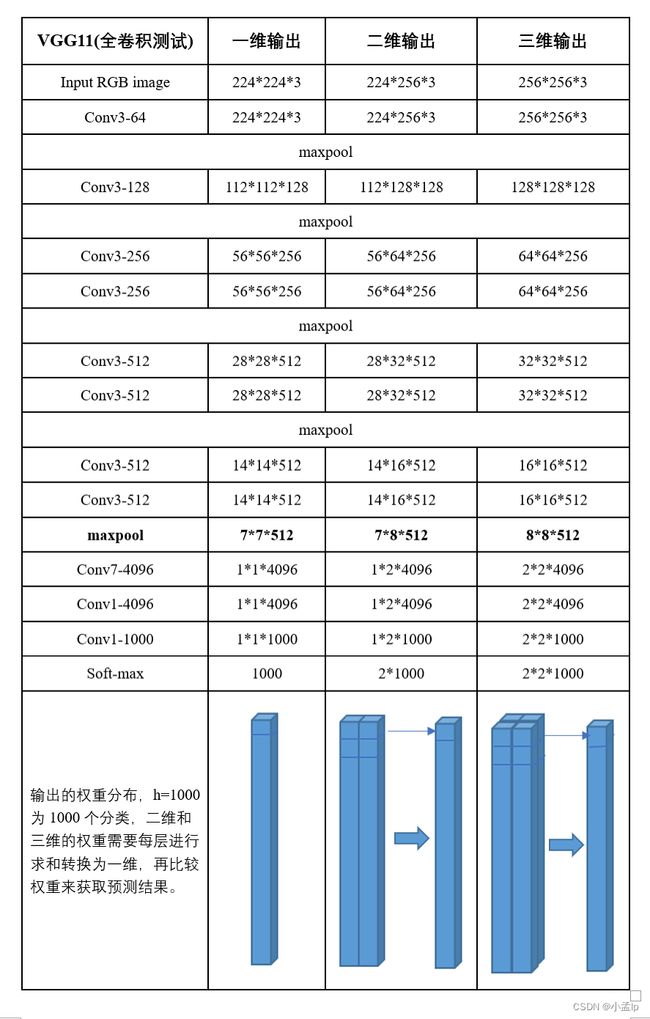

例如:输入图片大小为256*256,最后输出的为2*2*num_classes大小的输出(num_classes为测试分类数量),全卷积测试时图片大小变化及最后输出权重的形状如下。

实现代码

with torch.no_grad():

output = torch.squeeze(model(img.to(device))).cpu()

predict = torch.softmax(output, dim=0)

'''判断输出的权重为几维的 '''

if len(predict.shape) == 3:

three_dim = np.array(predict) # 将tensor数据转换为三维的numpy数组

two_dim = np.sum(three_dim, -1) # 按每个feature map进行行求和,降为二维的numpy数组

one_dim = two_dim.sum(axis=1) # axis=0 按列求和, axis=1 按行求和

predict = torch.tensor(one_dim) # 将numpy数组转换为tensor

if len(predict.shape) == 2:

two_dim = np.array(predict)

one_dim = two_dim.sum(axis=1)

predict = torch.tensor(one_dim)

'''以上为需要添加的代码'''

predict_cla = torch.argmax(predict).numpy() # 获取最大权重分类的下标

最后总结,使用全卷积层可以测试任意大小的图片,在一定程度上可以提高测试的准确度(我自己进行图片测试时,使用全卷积比使用全连接层精确度要好)

分析原因:这也是我自己的理解,使用全连接层对图片进行了Resize()缩放,把原有的图片压扁了。而使用全卷积图片没有进行缩放,可能提取的特征更加明显,而且全卷积得到的权重向量为多维的,获取得到的权重参数更多,从而使测试时精确度更高。