Semantic Segmentation Series 4: DeepLabV1-V3+ (PyTorch Implementation)

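The DeepLabV1-V3+ series shows well how a model improves iteratively through the cycle of finding a problem, updating the model, and solving the problem. Each time a problem was found, a new module was proposed to address it, built into the model, and shown to work. In this way DeepLab went from V1 to V2 to V3 to V3+, making progress step by step and solving one problem at a time, much like the field of AI itself.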

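This post therefore tells the story of the DeepLab family, starting with DeepLabV1 and ending with DeepLabV3+, and reproduces the models on the CamVid dataset. All code is written in PyTorch.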

DeepLabV1

Paper: DeepLabV1: Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs

Overview:

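The authors observed that DCNNs (Deep Convolutional Neural Networks) handle image classification easily but struggle with pixel-level segmentation, and asked why. Their answer: DCNNs are largely invariant to translation, which is exactly what makes them good classifiers, but repeated pooling and downsampling discard position information, and this spatial invariance also discards fine detail. The paper therefore identifies two problems: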

- Repeated pooling and downsampling lose position information.

- Spatial invariance loses fine detail.

Having identified these two problems, the authors proposed one solution for each:

- Atrous (dilated) convolution

- Fully connected Conditional Random Field (CRF)

Atrous convolution

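Atrous convolution is arguably the soul of the DeepLab series. DCNNs had long suffered from receptive fields that were too small, and a 2016 paper introduced the concept of the effective receptive field. Previously, the only ways to enlarge the receptive field were to keep stacking convolutional layers or to enlarge the kernels. Larger kernels, however, cost much more computation, which was unacceptable at the time (some recent work does revisit very large kernels, e.g. RepLKNet at CVPR 2022). Atrous convolution largely solved the receptive-field problem, at least at the time.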

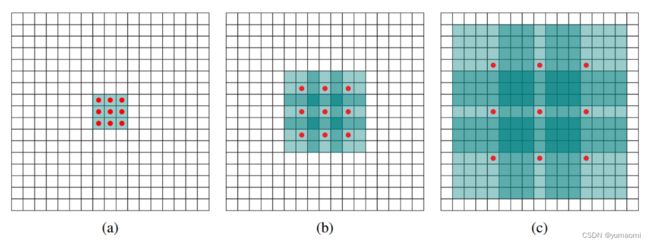

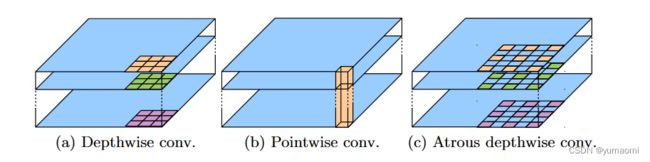

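In Figure 1, (a) shows the receptive field of an ordinary 3x3 convolution: after the convolution, each red point sees a 3x3 region of the previous layer. (b) applies a dilation-2 convolution on top of (a): still only 9 red points take part in each computation, yet each output now sees a 7x7 region. (c) applies a dilation-4 convolution on top of (b): again 9 points per computation, but a 15x15 receptive field. (On its own, a single 3x3 convolution spans 5x5 with dilation 2 and 9x9 with dilation 4; the 7x7 and 15x15 figures are for the stacked layers.)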

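The difference in receptive field between atrous and ordinary convolution is obvious. More importantly, atrous convolution adds no extra trainable parameters. Given the hardware limits of the time, an operation that enlarged the receptive field while saving parameters, compared with enlarging the kernel or stacking more layers, was very attractive.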
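To make the numbers in Figure 1 concrete, here is a small pure-Python sketch. The helper names are illustrative, not from any library, and it assumes stride-1 convolutions. It uses the standard formulas: one k x k kernel with dilation d spans k + (k - 1)(d - 1) pixels, each stacked layer widens the receptive field by (k - 1) * d, and the weight count k * k * C_in * C_out does not depend on d at all.

```python
def effective_kernel(kernel, dilation):
    """Span of one dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel + (kernel - 1) * (dilation - 1)


def stacked_receptive_field(dilations, kernel=3):
    """Receptive field of stride-1 convolutions applied one after another."""
    rf = 1
    for d in dilations:
        rf += (kernel - 1) * d  # each layer widens the field by (k - 1) * d
    return rf


def conv_weights(kernel, c_in, c_out):
    """Weights of a k x k convolution; the dilation does not appear at all."""
    return kernel * kernel * c_in * c_out


# Figure 1: 3x3 convs with dilation 1, then 2, then 4
print([stacked_receptive_field([1, 2, 4][:n]) for n in (1, 2, 3)])  # [3, 7, 15]
print(effective_kernel(3, 2), effective_kernel(3, 4))              # 5 9
# a dense kernel covering 15x15 would need 25x the weights of a 3x3 one
print(conv_weights(15, 512, 512) // conv_weights(3, 512, 512))      # 25
```

The last line is the point of the paragraph above: reaching a 15x15 field with one dense kernel costs 25 times the weights, while three dilated 3x3 layers reach it with ordinary 3x3 weights.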

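Atrous convolution has its drawbacks too, chiefly the gridding problem: stacking convolutions whose rates share a common factor samples the input on a sparse grid and skips the pixels in between. Later work proposed methods to address this, which I won't go into here. In any case, atrous convolution had arrived.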
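The gridding problem can be seen in one dimension (a 2-D kernel's taps are the product of two 1-D tap sets, so the 2-D holes follow). The hypothetical helpers below list which input offsets actually reach one output pixel through a stack of 3-tap dilated convolutions, and which offsets inside the receptive field are never touched. Rates with a common factor such as [2, 2, 2] leave holes; a mixed schedule such as [1, 2, 3], the kind of fix proposed by later work like Hybrid Dilated Convolution, covers every offset.

```python
from itertools import product


def sampled_offsets(rates, kernel=3):
    """1-D input offsets that reach one output pixel through stacked dilated convs."""
    half = kernel // 2
    offsets = {0}
    for r in rates:
        taps = [r * t for t in range(-half, half + 1)]
        # every path through this layer shifts the offset by one of its taps
        offsets = {o + t for o, t in product(offsets, taps)}
    return offsets


def holes(rates, kernel=3):
    """Offsets inside the receptive field that are never sampled (the 'grid' gaps)."""
    reach = sampled_offsets(rates, kernel)
    span = max(reach)
    return sorted(set(range(-span, span + 1)) - reach)


print(holes([2, 2, 2]))  # [-5, -3, -1, 1, 3, 5]: every odd offset is skipped
print(holes([1, 2, 3]))  # []: mixed rates leave no gaps
```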

Fully connected conditional random field

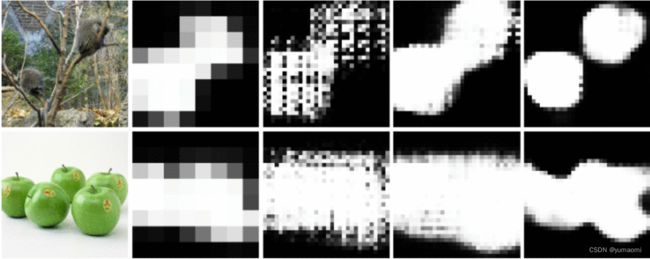

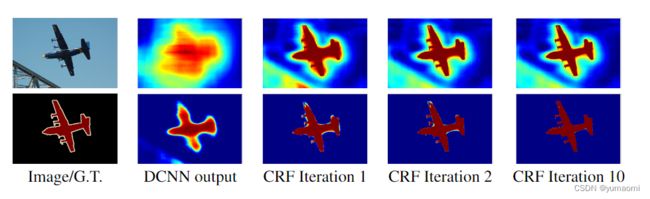

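The paper proposes a fully connected CRF to fix non-smooth segmentation results and to recover small structures.

As the figure clearly shows, the segmentation of the airplane gets better and better as the number of CRF iterations increases.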
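The CRF iterations in the figure are mean-field updates. Below is a deliberately naive NumPy sketch of mean-field inference for a fully connected CRF with a Potts compatibility and two Gaussian kernels, the form used in DeepLab: an appearance kernel on position and color plus a smoothness kernel on position only. The weights and bandwidths are illustrative assumptions, not the paper's cross-validated values, and building the full N x N kernel makes it usable only on tiny images; practical implementations use fast high-dimensional filtering (the permutohedral lattice) instead.

```python
import numpy as np


def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def dense_crf(unary, image, n_iters=5, w_app=10.0, theta_alpha=20.0,
              theta_beta=13.0, w_smooth=3.0, theta_gamma=3.0):
    """Naive mean-field inference for a fully connected CRF (Potts model).

    unary: (N, L) array of -log P(label) from the network, N = H * W pixels.
    image: (H, W, 3) RGB array. Parameter values are illustrative only.
    """
    h, w, _ = image.shape
    ys, xs = np.mgrid[0:h, 0:w]
    pos = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)
    rgb = image.reshape(-1, 3).astype(float)
    d_pos = ((pos[:, None] - pos[None]) ** 2).sum(-1)  # squared pixel distances
    d_rgb = ((rgb[:, None] - rgb[None]) ** 2).sum(-1)  # squared color distances
    kernel = (w_app * np.exp(-d_pos / (2 * theta_alpha ** 2) - d_rgb / (2 * theta_beta ** 2))
              + w_smooth * np.exp(-d_pos / (2 * theta_gamma ** 2)))
    np.fill_diagonal(kernel, 0.0)  # no message from a pixel to itself
    q = softmax(-unary)
    for _ in range(n_iters):
        msg = kernel @ q  # (N, L): sum_j k(i, j) * Q_j(l)
        # Potts compatibility: label l is penalised by the mass on all other labels
        pairwise = msg.sum(axis=1, keepdims=True) - msg
        q = softmax(-unary - pairwise)
    return q
```

In a toy setup, a 4x4 image split into a black and a white half, a single black pixel whose unary wrongly prefers the "white" label is pulled back to its region's label after the first iteration, which is the smoothing effect the figure shows.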

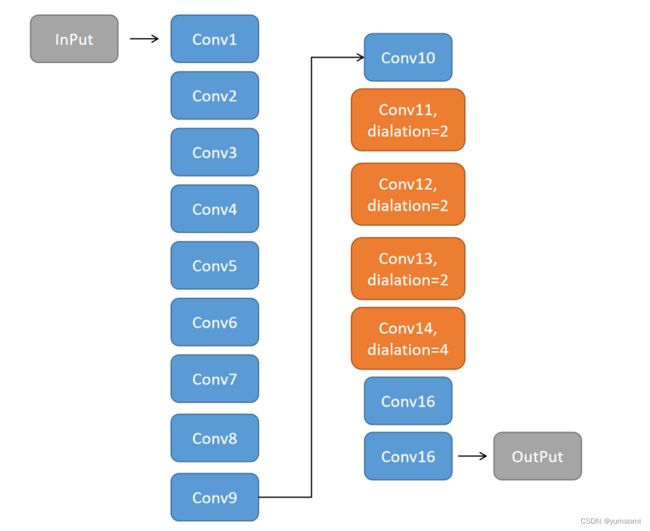

Model structure

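DeepLabV1's structure is quite simple: it is VGG16 with a few changes. The last three fully connected layers of VGG16 are replaced with convolutional layers. Layers 11, 12 and 13 are atrous convolutions with dilation 2, and layer 14 is an atrous convolution with dilation 4. The last two pooling layers no longer downsample, and the dilation makes up for the receptive field this would otherwise lose.

The figure omits the CRF; even without the CRF, DeepLabV1 achieves good results.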
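Because only three poolings downsample, DeepLabV1 has an output stride of 8. A quick sanity check with PyTorch's output-size formula for nn.Conv2d and nn.MaxPool2d (floor mode), using the layer settings of the VGG13 class in the reproduction section of this post. There, pool4 is a 2x2 stride-1 pool with padding 1 (size + 1) and pool5 a 2x2 stride-1 pool without padding (size - 1), so the two cancel out. The helper names are just for illustration.

```python
def out_size(n, kernel, stride=1, padding=0, dilation=1):
    """Output size of nn.Conv2d / nn.MaxPool2d along one axis (floor mode)."""
    return (n + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


CONV = (3, 1, 1, 1)    # 3x3, stride 1, padding 1
ATROUS = (3, 1, 2, 2)  # 3x3, stride 1, padding 2, dilation 2
POOL = (2, 2, 0, 1)    # 2x2 max-pool, stride 2
# (kernel, stride, padding, dilation) per layer, mirroring the VGG13 class
LAYERS = (
    [CONV] * 2 + [POOL]               # stage_1
    + [CONV] * 2 + [POOL]             # stage_2
    + [CONV] * 3 + [POOL]             # stage_3
    + [CONV] * 3 + [(2, 1, 1, 1)]     # stage_4: stride-1 pool, size + 1
    + [ATROUS] * 3 + [(2, 1, 0, 1)]   # stage_5: stride-1 pool, size - 1
)


def backbone_size(n):
    for kernel, stride, padding, dilation in LAYERS:
        n = out_size(n, kernel, stride, padding, dilation)
    return n


print(backbone_size(448), 448 // 8)  # 56 56
```

So for 448x448 inputs the backbone returns 56x56 maps, and the x8 bilinear upsampling in the model restores 448x448.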

DeepLabV2

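Shortly after DeepLabV1 was published, the authors noticed a new problem: images contain objects at multiple scales.

The original model handled objects of different scales poorly.

DeepLabV2 paper: DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs.

Their solution was the now well-known ASPP (Atrous Spatial Pyramid Pooling).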

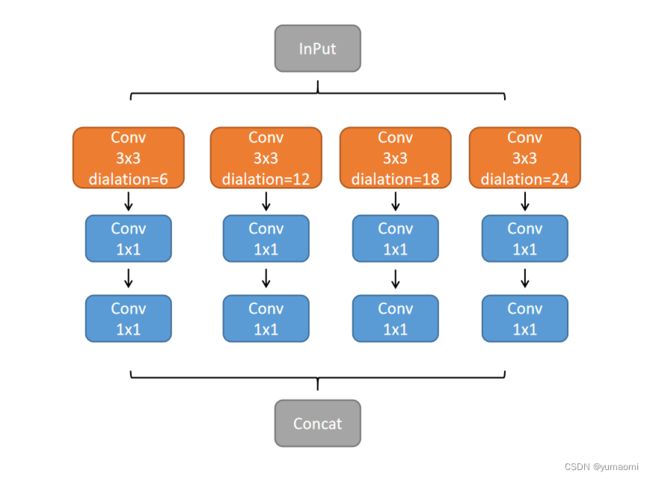

ASPP

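ASPP runs several atrous convolutions with different dilation rates in parallel on the same layer. All branches process the same input at the same time, each with a different receptive field, which improves segmentation of objects at different scales. Put simply, it lets the model see both large and small objects clearly at the same time.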
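To illustrate the idea without a framework, here is a NumPy sketch on a single-channel map: each branch is a 3x3 dilated convolution with its own rate, all applied to the same input. It offers two fusion modes: concatenation, which is what the PyTorch ASPP_module in this post does, and summation, which is how the DeepLabV2 paper fuses its branch outputs. The function names and the single-channel setup are simplifications for illustration.

```python
import numpy as np


def dilated_conv3x3(x, w, rate):
    """'Same'-size 3x3 dilated cross-correlation of a 2-D map, zero padding = rate."""
    h, wd = x.shape
    xp = np.pad(x, rate)
    out = np.zeros((h, wd))
    for dy in range(3):
        for dx in range(3):
            out += w[dy, dx] * xp[dy * rate:dy * rate + h, dx * rate:dx * rate + wd]
    return out


def aspp(x, kernels, rates=(6, 12, 18, 24), fuse="concat"):
    """Run one dilated branch per rate on the same input, then fuse the branches."""
    branches = [dilated_conv3x3(x, k, r) for k, r in zip(kernels, rates)]
    if fuse == "concat":             # like torch.cat(outputs, 1) in ASPP_module
        return np.stack(branches)    # (num_branches, H, W)
    return np.sum(branches, axis=0)  # sum fusion, as in the DeepLabV2 paper
```

Feeding an impulse through it shows each branch's footprint: on a 32x32 map, the rate-6 branch spreads an impulse to 9 points 6 pixels apart, while the rate-24 branch keeps only its center tap, because the other taps fall outside the map.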

ASPP is also fairly simple to implement. Here is an implementation in PyTorch:

```python
# ASPP module used by DeepLabV2
import torch
import torch.nn as nn


class ASPP_module(nn.ModuleList):
    def __init__(self, in_channels, out_channels, dilation_list=[6, 12, 18, 24]):
        super(ASPP_module, self).__init__()
        self.dilation_list = dilation_list
        for dia_rate in self.dilation_list:
            self.append(
                nn.Sequential(
                    # one parallel branch: 3x3 atrous conv (1x1 when the rate is 1)
                    nn.Conv2d(in_channels, out_channels, kernel_size=1 if dia_rate == 1 else 3,
                              dilation=dia_rate, padding=0 if dia_rate == 1 else dia_rate),
                    # no activation between these convs, so the branch is a purely linear map
                    nn.Conv2d(out_channels, out_channels, kernel_size=1),
                    nn.Conv2d(out_channels, out_channels, kernel_size=1),
                )
            )

    def forward(self, x):
        # run every branch on the same input and concatenate along the channel axis
        outputs = []
        for aspp_module in self:
            outputs.append(aspp_module(x))
        return torch.cat(outputs, 1)
```

DeepLabV3

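Of course, once the old problems are solved, new ones appear.

With ResNet now available, the authors began to ask: what can atrous convolution achieve in a much deeper network? And can the ASPP structure be optimized further?

So the DeepLabV3 paper does two things:

- Explores the effect of atrous convolution in deeper models.

- Improves ASPP.

The paper's title is telling: Rethinking Atrous Convolution for Semantic Image Segmentation

Rethinking!

The lesson of the DeepLab story: keep Thinking, and keep Rethinking.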

Going Deeper with Atrous Convolution

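The first idea is to use atrous convolution deeper in the network. ResNet made networks of 101 layers (and more) practical.

The authors ran a comparison in which the deeper ResNet blocks use atrous convolution instead of striding. The atrous convolutions clearly obtain larger receptive fields and can capture long-range information, which is aggregated in the last layer. The authors also point out that the choice of dilation rates matters a lot: a poor choice lowers accuracy. Fortunately, they ran the experiments and worked out a good combination of rates for us.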

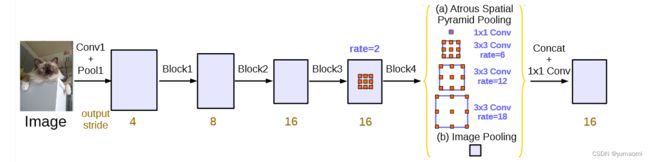
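The rate bookkeeping behind "going deeper" fits in a few lines. Once the network reaches the target output stride, each further block that would have halved the resolution keeps stride 1 and doubles its atrous rate instead; DeepLabV3 additionally multiplies the base rate inside a block by unit rates it calls Multi-Grid, e.g. (1, 2, 4). The helper names and the assumed ResNet stride schedule (stride 4 after the stem, each later block halving) are for illustration.

```python
def block_base_rates(output_stride, num_blocks=7):
    """Base atrous rate per ResNet block when striding stops at `output_stride`.

    Assumes stride 4 after the stem (conv1 + max-pool) and that blocks 2..7 would
    each halve the resolution if they were allowed to stride.
    """
    rates, stride = [], 4
    for block in range(1, num_blocks + 1):
        if block > 1:
            stride *= 2
        # any stride beyond the target is turned into dilation instead
        rates.append(max(stride // output_stride, 1))
    return rates


def multi_grid(base_rate, unit_rates=(1, 2, 4)):
    """Rates of the three convs inside a block: base rate x Multi-Grid unit rates."""
    return tuple(base_rate * u for u in unit_rates)


print(block_base_rates(16))  # [1, 1, 1, 2, 4, 8, 16] for block1..block7
print(multi_grid(2))         # (2, 4, 8) for block4 at output stride 16
```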

Atrous Spatial Pyramid Pooling

The authors revisit the ASPP structure and optimize it.

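In the experiments above they found that when the dilation rate becomes too large relative to the feature map, most taps of a 3x3 kernel fall into the zero padding, so the kernel effectively degenerates into a 1x1 convolution. They therefore re-tuned the rates, changing them from [6, 12, 18, 24] in V2 to [1, 6, 12, 18] (a 1x1 branch plus three 3x3 atrous branches).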
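The degeneration is easy to quantify. This sketch (an illustrative helper, not library code) counts how many of a 3x3 kernel's 9 taps land inside a size x size feature map rather than in the zero padding, averaged over all output positions. The DeepLabV3 paper plots this kind of count for a 65x65 feature map; as the rate approaches the map size, the average drops to 1, i.e. only the center weight is ever used.

```python
def mean_valid_taps(size, rate, kernel=3):
    """Average number of a k x k dilated kernel's taps that fall inside a
    size x size feature map (not in the zero padding), over all output positions."""
    half = kernel // 2
    offsets = [rate * t for t in range(-half, half + 1)]
    # the valid-tap count is separable: count per axis, then multiply
    per_axis = [sum(0 <= i + o < size for o in offsets) for i in range(size)]
    return sum(a * b for a in per_axis for b in per_axis) / (size * size)


for rate in (1, 6, 12, 18, 24, 36, 64):
    print(rate, round(mean_valid_taps(65, rate), 2))
```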

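The authors also felt that atrous convolution loses some information, so they added a parallel global average pooling branch to ASPP (Figure 8 (b)) to keep global context.

That completes the V3 improvements.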

```python
# ASPP as used in DeepLabV3
import torch
import torch.nn as nn


class ASPP_module(nn.ModuleList):
    def __init__(self, in_channels, out_channels, dilation_list=[1, 6, 12, 18]):
        super(ASPP_module, self).__init__()
        self.dilation_list = dilation_list
        for dia_rate in self.dilation_list:
            self.append(
                nn.Sequential(
                    # rate 1 -> 1x1 conv; other rates -> 3x3 atrous conv; then BN + ReLU
                    nn.Conv2d(in_channels, out_channels, kernel_size=1 if dia_rate == 1 else 3,
                              dilation=dia_rate, padding=0 if dia_rate == 1 else dia_rate),
                    nn.BatchNorm2d(out_channels),
                    nn.ReLU(),
                )
            )

    def forward(self, x):
        # the global-average-pooling branch is added in the DeepLabV3 model class
        outputs = []
        for aspp_module in self:
            outputs.append(aspp_module(x))
        return torch.cat(outputs, 1)
```

DeepLabV3+

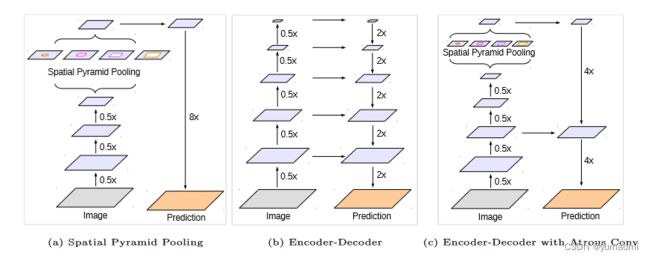

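Still, if V3 was going to Rethink, why only Rethink the deep backbone and ASPP? Why not add a cascade (encoder-decoder) structure as well?

So DeepLabV3+ adds this cascade structure, using a decoder to refine the segmentation, especially along object boundaries.

Paper: Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

The title sums it up: an encoder-decoder built with atrous separable convolution for semantic segmentation.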

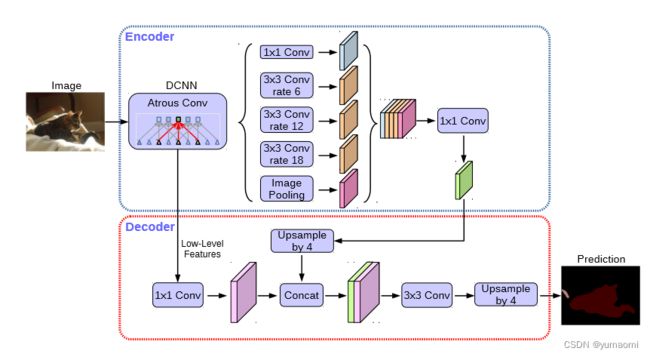

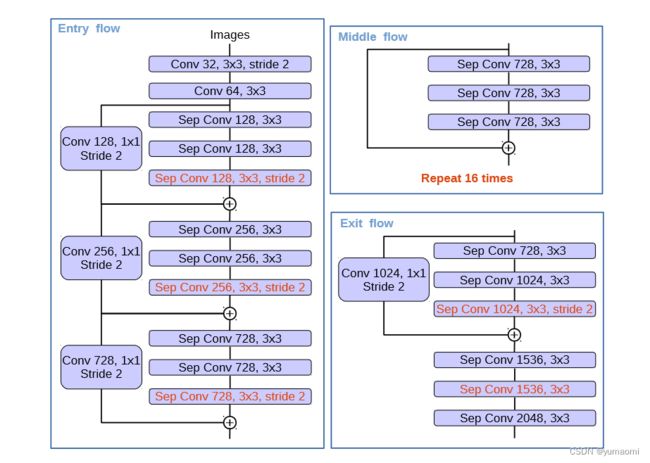

Model structure

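From here it's fairly simple. The authors put ASPP into an encoder-decoder structure: the encoder is the DeepLabV3 network, and the decoder upsamples its output and fuses it with low-level backbone features.

Adding this cascade structure gives the DeepLabV3+ model.

Around the time the paper was written, depthwise separable convolution had also become popular as a convolution block, and the authors asked whether it could be combined with atrous convolution. Experiments showed that it could, so they proposed a modified Xception model. Put simply, atrous convolution is applied inside the depthwise separable convolution.

Some convolutional layers are replaced with depthwise separable convolutions using different dilations. This concerns the backbone rather than the DeepLabV3+ encoder-decoder design itself, so I won't go into it here.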
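Why depthwise separable convolution is attractive, and why adding dilation to it is free, shows up in a weight count. A depthwise k x k conv has one filter per input channel (k * k * C_in weights) and is followed by a 1x1 pointwise conv (C_in * C_out weights); dilation only changes where the taps sample, not how many weights there are. Biases are ignored in this illustrative sketch.

```python
def standard_conv_weights(c_in, c_out, k=3):
    """k x k convolution mixing all channels at once."""
    return k * k * c_in * c_out


def separable_conv_weights(c_in, c_out, k=3):
    """Depthwise k x k (one filter per input channel) followed by a 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out


# The dilation rate appears in neither formula: atrous sampling only moves the taps.
std, sep = standard_conv_weights(256, 256), separable_conv_weights(256, 256)
print(std, sep, round(std / sep, 1))  # 589824 67840 8.7
```

In PyTorch, an atrous depthwise separable layer can be written as nn.Conv2d(c, c, 3, padding=d, dilation=d, groups=c) followed by nn.Conv2d(c, c_out, 1).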

Model reproduction

DeepLabV1

VGG16 as the backbone

```python
import torch
import torch.nn as nn


class VGG13(nn.Module):
    """The first 13 conv layers of VGG16 (with BN), split into 5 stages; output stride 8."""

    def __init__(self):
        super(VGG13, self).__init__()
        self.stage_1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        self.stage_2 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        self.stage_3 = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        self.stage_4 = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.MaxPool2d(2, stride=1, padding=1),  # stride 1: no downsampling (size + 1)
        )
        self.stage_5 = nn.Sequential(
            # atrous convolution
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=2, dilation=2),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=2, dilation=2),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=2, dilation=2),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.MaxPool2d(2, stride=1),  # stride 1: size - 1, undoing stage_4's + 1
        )

    def forward(self, x):
        x = x.float()
        x1 = self.stage_1(x)
        x2 = self.stage_2(x1)
        x3 = self.stage_3(x2)
        x4 = self.stage_4(x3)
        x5 = self.stage_5(x4)
        return [x1, x2, x3, x4, x5]


class DeepLabV1(nn.Module):
    def __init__(self, num_classes):
        super(DeepLabV1, self).__init__()
        self.num_classes = num_classes
        # backbone: the first 13 layers of VGG16, split into 5 stages
        self.backbone = VGG13()
        # head replacing VGG16's fully connected layers:
        # layer 14 is a dilation-4 atrous conv, followed by two 1x1 convs
        self.stage_1 = nn.Sequential(
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=4, dilation=4),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=1, stride=1, padding=0),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=1, stride=1, padding=0),
            nn.BatchNorm2d(512),
            nn.ReLU(),
        )
        self.final = nn.Sequential(
            nn.Conv2d(512, self.num_classes, kernel_size=3, padding=1)
        )

    def forward(self, x):
        # run the first 13 layers of VGG16 (the VGG13 class) and keep the last stage
        x = self.backbone(x)[-1]
        x = self.stage_1(x)
        # the output stride is 8, so upsample by 8 back to the input resolution
        x = nn.functional.interpolate(input=x, scale_factor=8, mode='bilinear')
        x = self.final(x)
        return x
```

DeepLabV2

DeepLabV2 still uses the VGG16 backbone (the VGG13 class above), plus the ASPP module.

```python
import torch
import torch.nn as nn


class ASPP_module(nn.ModuleList):
    def __init__(self, in_channels, out_channels, dilation_list=[6, 12, 18, 24]):
        super(ASPP_module, self).__init__()
        self.dilation_list = dilation_list
        for dia_rate in self.dilation_list:
            self.append(
                nn.Sequential(
                    nn.Conv2d(in_channels, out_channels, kernel_size=1 if dia_rate == 1 else 3,
                              dilation=dia_rate, padding=0 if dia_rate == 1 else dia_rate),
                    # no activation between these convs, so the branch is a purely linear map
                    nn.Conv2d(out_channels, out_channels, kernel_size=1),
                    nn.Conv2d(out_channels, out_channels, kernel_size=1),
                )
            )

    def forward(self, x):
        outputs = []
        for aspp_module in self:
            outputs.append(aspp_module(x))
        return torch.cat(outputs, 1)


class DeepLabV2(nn.Module):
    def __init__(self, num_classes):
        super(DeepLabV2, self).__init__()
        self.num_classes = num_classes
        self.ASPP_module = ASPP_module(512, 256)
        self.backbone = VGG13()  # defined in the DeepLabV1 section
        self.final = nn.Sequential(
            # ASPP_module concatenates 4 branches x 256 channels
            nn.Conv2d(256 * 4, 256, kernel_size=3, padding=1),
            nn.Conv2d(256, self.num_classes, kernel_size=1)
        )

    def forward(self, x):
        x = self.backbone(x)[-1]
        x = self.ASPP_module(x)
        x = nn.functional.interpolate(x, scale_factor=8, mode='bilinear', align_corners=True)
        x = self.final(x)
        return x
```

DeepLabV3

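DeepLabV3's ASPP differs slightly from the previous one, so it is rewritten here.

Note that I did not use ResNet here but kept the VGG16 backbone from above, so this differs somewhat from the paper. You can swap in ResNet50 or ResNet101.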

```python
import torch
import torch.nn as nn


class ASPP_module(nn.ModuleList):
    def __init__(self, in_channels, out_channels, dilation_list=[1, 6, 12, 18]):
        super(ASPP_module, self).__init__()
        self.dilation_list = dilation_list
        for dia_rate in self.dilation_list:
            self.append(
                nn.Sequential(
                    nn.Conv2d(in_channels, out_channels, kernel_size=1 if dia_rate == 1 else 3,
                              dilation=dia_rate, padding=0 if dia_rate == 1 else dia_rate),
                    nn.BatchNorm2d(out_channels),
                    nn.ReLU()
                )
            )

    def forward(self, x):
        outputs = []
        for aspp_module in self:
            outputs.append(aspp_module(x))
        return torch.cat(outputs, 1)


class DeepLabV3(nn.Module):
    def __init__(self, num_classes):
        super(DeepLabV3, self).__init__()
        self.num_classes = num_classes
        self.ASPP_module = ASPP_module(512, 256, dilation_list=[1, 6, 12, 18])
        self.backbone = VGG13()
        self.final = nn.Sequential(
            # 4 ASPP branches + 1 image-pooling branch, 256 channels each
            nn.Conv2d(256 * 4 + 256, 256, kernel_size=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.Conv2d(256, self.num_classes, kernel_size=1)
        )
        # image-level features: global average pooling -> 1x1 conv -> BN -> ReLU
        self.avg_pool = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Conv2d(512, 256, 1, bias=False),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True))

    def forward(self, x):
        x = self.backbone(x)[-1]
        x_1 = self.ASPP_module(x)
        # bring the pooled 1x1 features back to the feature-map size
        x_2 = nn.functional.interpolate(self.avg_pool(x), size=(x.size(2), x.size(3)),
                                        mode='bilinear', align_corners=True)
        x = torch.cat([x_1, x_2], 1)
        x = nn.functional.interpolate(input=x, scale_factor=8, mode='bilinear', align_corners=True)
        x = self.final(x)
        return x
```

DeepLabV3+

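Again VGG is used, with one change: the max-pooling at the end of stage 3 is removed, so the backbone (VGG13_16x) has an output stride of 16, and the stage-3 features at stride 4 serve as the low-level features for the decoder. You can swap in ResNet50 or ResNet101 here too.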
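With stage 3's pooling removed, the decoder's two x4 upsamplings only line up with the low-level features and the labels if the input size is a multiple of 16. A small pure-Python check of the stage resolutions of VGG13_16x; it tracks only the max-pooling layers, since every convolution there keeps the size. The helper names are illustrative.

```python
# True where a stage of VGG13_16x ends with a stride-2 max-pool (stage_3's is removed);
# all convolutions in it keep the spatial size.
STAGE_POOLS = [("stage_1", True), ("stage_2", True), ("stage_3", False),
               ("stage_4", True), ("stage_5", True)]


def stage_sizes(n):
    sizes = {}
    for name, pools in STAGE_POOLS:
        if pools:
            n //= 2  # nn.MaxPool2d(2, 2) floors odd sizes
        sizes[name] = n
    return sizes


def decoder_lines_up(n):
    """The decoder upsamples stage_5 by 4, concatenates with stage_3, then upsamples by 4."""
    s = stage_sizes(n)
    return s["stage_5"] * 4 == s["stage_3"] and s["stage_3"] * 4 == n


print(stage_sizes(448))  # stage_5 is 28, stage_3 is 112
print(decoder_lines_up(448), decoder_lines_up(360))  # True False
```

For the 448x448 inputs used below, stage_5 is 28x28 and x4 gives 112x112 to match stage_3. For a side of 360 the sizes would be 22 and 90, and the torch.cat in the decoder would fail.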

```python
import torch
import torch.nn as nn


class VGG13_16x(nn.Module):
    """VGG16's first 13 conv layers with stage_3's pooling removed: output stride 16."""

    def __init__(self):
        super(VGG13_16x, self).__init__()
        self.stage_1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        self.stage_2 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        self.stage_3 = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(),
            # nn.MaxPool2d(2, 2),  # removed: stage_3 output stays at stride 4
        )
        self.stage_4 = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        self.stage_5 = nn.Sequential(
            # atrous convolution
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=2, dilation=2),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=2, dilation=2),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=2, dilation=2),
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )

    def forward(self, x):
        x1 = self.stage_1(x)   # stride 2
        x2 = self.stage_2(x1)  # stride 4
        x3 = self.stage_3(x2)  # stride 4, 256 channels (low-level features)
        x4 = self.stage_4(x3)  # stride 8
        x5 = self.stage_5(x4)  # stride 16
        return [x1, x2, x3, x4, x5]


class ASPP_module(nn.ModuleList):
    def __init__(self, in_channels, out_channels, dilation_list=[6, 12, 18, 24]):
        super(ASPP_module, self).__init__()
        self.dilation_list = dilation_list
        for dia_rate in self.dilation_list:
            self.append(
                nn.Sequential(
                    nn.Conv2d(in_channels, out_channels, kernel_size=1 if dia_rate == 1 else 3,
                              dilation=dia_rate, padding=0 if dia_rate == 1 else dia_rate),
                    nn.BatchNorm2d(out_channels),
                    nn.ReLU()
                )
            )

    def forward(self, x):
        outputs = []
        for aspp_module in self:
            outputs.append(aspp_module(x))
        return torch.cat(outputs, 1)


class DeepLabV3Plus(nn.Module):
    def __init__(self, num_classes):
        super(DeepLabV3Plus, self).__init__()
        self.backbone = VGG13_16x()
        self.ASPP_module = ASPP_module(512, 256, [1, 6, 12, 18])
        # 1x1 projection of the low-level (stage_3) features
        self.low_feature = nn.Sequential(
            nn.Conv2d(256, 256, kernel_size=1, bias=False),
            nn.BatchNorm2d(256),
            nn.ReLU(),
        )
        self.num_classes = num_classes
        # image-level pooling branch
        self.avg_pool = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Conv2d(512, 256, 1, bias=False),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True))
        # encoder output: fuse the 4 ASPP branches and the pooling branch
        self.conv1 = nn.Sequential(
            nn.Conv2d(256 * 5, 256, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(256),
            nn.ReLU(),
        )
        # decoder: fuse the upsampled encoder output with the low-level features
        self.conv2 = nn.Sequential(
            nn.Conv2d(256 * 2, self.num_classes, kernel_size=3, padding=1),
            nn.BatchNorm2d(self.num_classes),
            nn.ReLU(),
        )
        self.conv3 = nn.Sequential(
            nn.Conv2d(self.num_classes, self.num_classes, kernel_size=3, padding=1),
        )

    def forward(self, x):
        x = self.backbone(x)
        low_feature = self.low_feature(x[-3])  # stage_3 output, stride 4
        x_1 = self.ASPP_module(x[-1])          # stage_5 output, stride 16
        x_2 = nn.functional.interpolate(self.avg_pool(x[-1]), size=(x[-1].size(2), x[-1].size(3)),
                                        mode='bilinear', align_corners=True)
        x = torch.cat([x_1, x_2], 1)
        x = self.conv1(x)
        # stride 16 -> 4, then concatenate with the low-level features
        x = nn.functional.interpolate(input=x, scale_factor=4, mode='bilinear')
        x = torch.cat([x, low_feature], 1)
        x = self.conv2(x)
        # stride 4 -> 1 (input resolution)
        x = nn.functional.interpolate(input=x, scale_factor=4, mode='bilinear')
        x = self.conv3(x)
        return x
```

Building the dataset: CamVid

The steps for building the dataset can be found in another post of mine.

```python
# Imports
import os
os.environ['CUDA_VISIBLE_DEVICES'] = '0'  # set before importing torch
import os.path as osp
import warnings

import albumentations as A
import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from albumentations.pytorch.transforms import ToTensorV2
from PIL import Image
from torch.utils.data import DataLoader, Dataset, random_split
from tqdm import tqdm

warnings.filterwarnings("ignore")
torch.manual_seed(17)


# Custom dataset: CamVidDataset
class CamVidDataset(torch.utils.data.Dataset):
    """CamVid Dataset. Reads images and masks, then resizes, augments and normalizes them.

    Args:
        images_dir (str): path to images folder
        masks_dir (str): path to segmentation masks folder (same file names as the images)
        train (bool): apply the random flips only when True
    """

    def __init__(self, images_dir, masks_dir, train=True):
        flips = [A.HorizontalFlip(), A.VerticalFlip()] if train else []
        self.transform = A.Compose([
            A.Resize(448, 448),
            *flips,
            A.Normalize(),
            ToTensorV2(),
        ])
        self.ids = os.listdir(images_dir)
        self.images_fps = [os.path.join(images_dir, image_id) for image_id in self.ids]
        self.masks_fps = [os.path.join(masks_dir, image_id) for image_id in self.ids]

    def __getitem__(self, i):
        # read data
        image = np.array(Image.open(self.images_fps[i]).convert('RGB'))
        mask = np.array(Image.open(self.masks_fps[i]).convert('RGB'))
        sample = self.transform(image=image, mask=mask)
        # keep the first channel of the mask as the class-index map
        return sample['image'], sample['mask'][:, :, 0]

    def __len__(self):
        return len(self.ids)


# Dataset paths
DATA_DIR = r'dataset\camvid'  # set this to your own path

x_train_dir = os.path.join(DATA_DIR, 'train_images')
y_train_dir = os.path.join(DATA_DIR, 'train_labels')
x_valid_dir = os.path.join(DATA_DIR, 'valid_images')
y_valid_dir = os.path.join(DATA_DIR, 'valid_labels')

train_dataset = CamVidDataset(x_train_dir, y_train_dir, train=True)
val_dataset = CamVidDataset(x_valid_dir, y_valid_dir, train=False)

train_loader = DataLoader(train_dataset, batch_size=8, shuffle=True, drop_last=True)
val_loader = DataLoader(val_dataset, batch_size=8, shuffle=False)
```

Model training
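The training code loads torchvision's vgg16_bn checkpoint with strict=False. That checkpoint names its layers features.N.* (plus classifier.* for the fully connected layers), while the models in this post use backbone.stage_k.*, so without remapping none of the backbone weights are actually loaded. Here is a sketch of a remapping helper; the function name is hypothetical, and it assumes the module order of the VGG13 / VGG13_16x classes above, which follows torchvision's vgg16_bn.features (44 modules: 7 + 7 + 10 + 10 + 10).

```python
# (stage name, first index, end index) of each stage inside torchvision's
# vgg16_bn `features` Sequential. For VGG13_16x, stage_3 has only 9 modules, but
# features[23] is its removed max-pool, which has no parameters, so the map still holds.
STAGE_RANGES = [("stage_1", 0, 7), ("stage_2", 7, 14), ("stage_3", 14, 24),
                ("stage_4", 24, 34), ("stage_5", 34, 44)]


def remap_vgg16_bn(pretrained, prefix="backbone."):
    """Rename 'features.N.<param>' keys to '<prefix>stage_k.<local index>.<param>'.

    The fully connected 'classifier.*' weights are dropped.
    """
    remapped = {}
    for key, value in pretrained.items():
        parts = key.split(".")
        if parts[0] != "features":
            continue
        index, param = int(parts[1]), ".".join(parts[2:])
        for stage, start, end in STAGE_RANGES:
            if start <= index < end:
                remapped[f"{prefix}{stage}.{index - start}.{param}"] = value
                break
    return remapped
```

Usage, as a sketch: result = model.load_state_dict(remap_vgg16_bn(torch.load(path)), strict=False), then inspect result.missing_keys; it should list only the head/decoder layers (and possibly BatchNorm num_batches_tracked buffers if the checkpoint lacks them).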

#载入预训练权重, 500M还挺大的 下载地址:https://download.pytorch.org/models/vgg16_bn-6c64b313.pth

#model = DeepLabV1(32+1).cuda()

#model = DeepLabV2(32+1).cuda()

#model = DeepLabV3(32+1).cuda()

model = DeepLabV3Plus(32+1).cuda()

model.load_state_dict(torch.load(r"checkpoints/vgg16_bn-6c64b313.pth"),strict=False)from d2l import torch as d2l

from tqdm import tqdm

#损失函数选用多分类交叉熵损失函数

lossf = nn.CrossEntropyLoss()

#选用adam优化器来训练

optimizer = optim.SGD(model.parameters(),lr=0.1)

#训练50轮

epochs_num = 50

def train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,

devices=d2l.try_all_gpus()):

timer, num_batches = d2l.Timer(), len(train_iter)

animator = d2l.Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0, 1],

legend=['train loss', 'train acc', 'test acc'])

net = nn.DataParallel(net, device_ids=devices).to(devices[0])

for epoch in range(num_epochs):

# Sum of training loss, sum of training accuracy, no. of examples,

# no. of predictions

metric = d2l.Accumulator(4)

for i, (features, labels) in enumerate(train_iter):

timer.start()

l, acc = d2l.train_batch_ch13(

net, features, labels.long(), loss, trainer, devices)

metric.add(l, acc, labels.shape[0], labels.numel())

timer.stop()

if (i + 1) % (num_batches // 5) == 0 or i == num_batches - 1:

animator.add(epoch + (i + 1) / num_batches,

(metric[0] / metric[2], metric[1] / metric[3],

None))

test_acc = d2l.evaluate_accuracy_gpu(net, test_iter)

animator.add(epoch + 1, (None, None, test_acc))

print(f'loss {metric[0] / metric[2]:.3f}, train acc '

f'{metric[1] / metric[3]:.3f}, test acc {test_acc:.3f}')

print(f'{metric[2] * num_epochs / timer.sum():.1f} examples/sec on '

f'{str(devices)}')

#开始训练

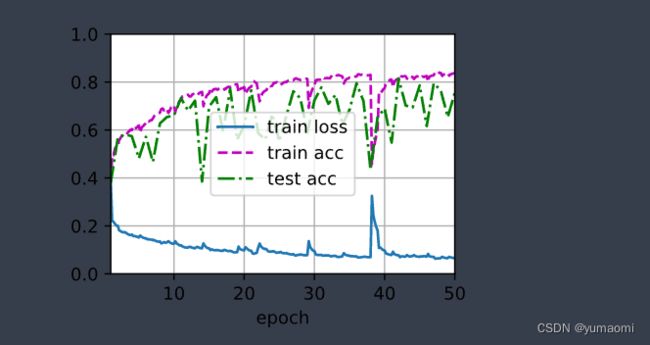

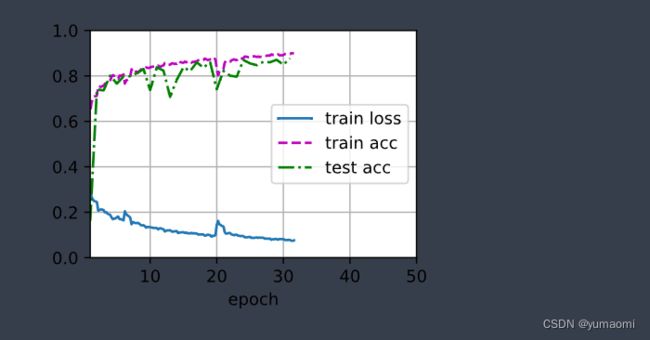

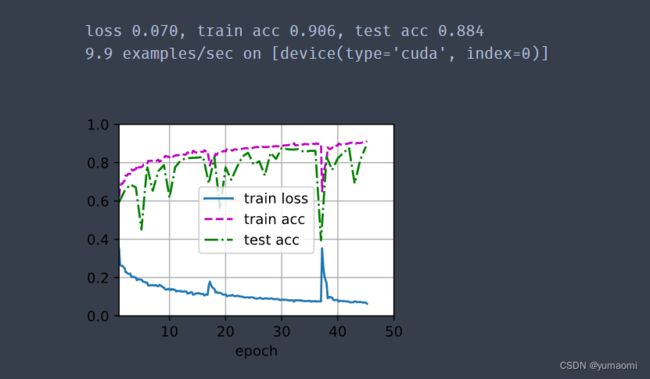

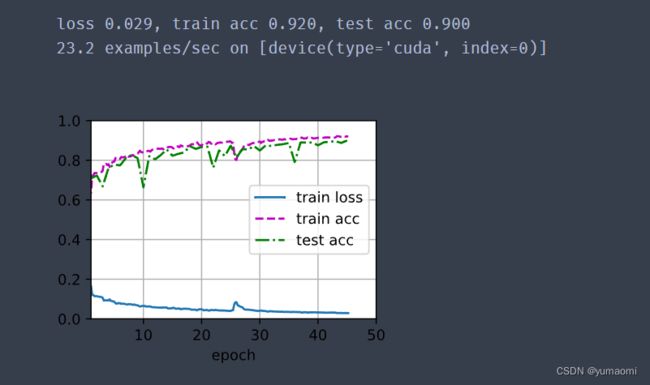

train_ch13(model, train_loader, val_loader, lossf, optimizer, epochs_num)训练结果

| Model | train_acc (pixel accuracy) | test_acc (pixel accuracy) |
| --- | --- | --- |
| DeepLabV1 | 81.4% | 81.5% |
| DeepLabV2 | 89.6% | 87.7% |
| DeepLabV3 | 90.6% | 88.4% |
| DeepLabV3+ | 92.0% | 90.0% |
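The table reports pixel accuracy, which is what d2l's accuracy helper computes. Pixel accuracy is dominated by large classes, so segmentation papers, DeepLab included, usually report mean IoU as well. A minimal NumPy sketch for computing both from a confusion matrix; the helper names are illustrative, and to use it you would accumulate the matrix over the validation set, e.g. from net(X).argmax(1).cpu().numpy() and y.numpy().

```python
import numpy as np


def confusion_matrix(pred, label, num_classes):
    """Rows: ground-truth class, columns: predicted class. Inputs are integer class maps."""
    idx = num_classes * label.ravel().astype(np.int64) + pred.ravel().astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def pixel_acc_and_miou(cm):
    tp = np.diag(cm)
    pixel_acc = tp.sum() / cm.sum()
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp
    present = union > 0  # skip classes absent from both prediction and label
    return pixel_acc, (tp[present] / union[present]).mean()
```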