提取基本的图像特征并利用支持向量机对提取的特征进行图像分类。

1.导入库

import numpy as np

import matplotlib

from scipy.ndimage import uniform_filter2.加载数据

import os

import struct

import random

import numpy as np

import time

import matplotlib.pyplot as plt

from past.builtins import xrange

%matplotlib inline

plt.rcParams['figure.figsize'] = (12., 10.) # 设置默认大小

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

%load_ext autoreload

%autoreload 2

# 本地读取mnist数据集的函数

def load_mnist(path, kind='train'):

"""Load MNIST data from `path`"""

labels_path = os.path.join(path,'%s-labels.idx1-ubyte'% kind)

images_path = os.path.join(path,'%s-images.idx3-ubyte'% kind)

with open(labels_path, 'rb') as lbpath:

magic, n = struct.unpack('>II',lbpath.read(8))

labels = np.fromfile(lbpath,dtype=np.uint8)

#读入magic是一个文件协议的描述,也是调用fromfile 方法将字节读入NumPy的array之前在文件缓冲中的item数(n).

with open(images_path, 'rb') as imgpath:

magic, num, rows, cols = struct.unpack('>IIII',imgpath.read(16))

images = np.fromfile(imgpath,dtype=np.uint8).reshape(len(labels), 784)

return images, labels

x_train_all, y_train_all = load_mnist("D:\\data-excavate\\minist", kind='train')

print('Training rows: %d, columns: %d' % (x_train_all.shape[0], x_train_all.shape[1]))

x_test_all, y_test_all = load_mnist("D:\\data-excavate\\minist", kind='t10k')

print('Test rows: %d, columns: %d' % (x_test_all.shape[0], x_test_all.shape[1]))

# 将数据集进行可视化显示

index1 = random.randint(1,30000)

index2 = random.randint(1,30000)

print(f"下图中应该能够显示{y_train_all[index1:index1+2]} 和 {y_train_all[index2:index2+2]}")

plt.subplot(141)

plt.imshow(x_train_all[index1].reshape([28,28]), cmap=plt.get_cmap('gray'))

plt.subplot(142)

plt.imshow(x_train_all[index1+1].reshape([28,28]), cmap=plt.get_cmap('gray'))

plt.subplot(143)

plt.imshow(x_train_all[index2].reshape([28,28]), cmap=plt.get_cmap('gray'))

plt.subplot(144)

plt.imshow(x_train_all[index2+1].reshape([28,28]), cmap=plt.get_cmap('gray'))

plt.show()3.训练集的划分,将训练集缩小并划分出来test集和val集

index = random.randint(1,1000)

x_train = x_train_all[index:index+5000]

y_train = y_train_all[index:index+5000]

index = random.randint(1,1000)

x_val = x_test_all[index:index+500]

y_val = y_test_all[index:index+500]

x_test = x_test_all[index+500:index+1000]

y_test = y_test_all[index+500:index+1000]4.将图像进行0-1二值化

def binaryzation(data): # 0,1 二值化

row = data.shape[0]

col = data.shape[1]

ret = np.zeros((row , col), dtype=float)

for i in range(row):

for j in range(col):

ret[i][j] = 0

if(data[i][j] > 127):

ret[i][j] = 1

return ret

x_train = binaryzation(x_train)

x_val = binaryzation(x_val)

x_test = binaryzation(x_test)

print(x_train.shape)

print(x_val.shape)

print(x_test.shape)5.使用支持向量机进行分类,使用不同的核函数作为SVM模型的参数,选择验证集上准确率最好的SVM核函数

使用sklearn中的SVC建立模型:

from sklearn.svm import SVC

kernel_list = ['linear', 'poly', 'rbf', 'sigmoid']

accuracy_max = 0

best_kernel = None

for kernel in kernel_list:

# 定义SVM 模型并训练数据

svm = SVC(kernel = kernel)

svm.fit(x_train,y_train)

# 在验证集上测试模型

y_val_pred = svm.predict(x_val)

val_accuracy = np.sum(y_val_pred==y_val)/y_val.shape[0] #计算准确率

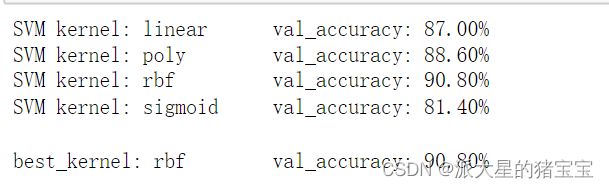

print('SVM kernel: {:8}\tval_accuracy: {:.2f}%'.format(kernel, 100*val_accuracy))

if val_accuracy > accuracy_max:

accuracy_max = val_accuracy

best_kernel = kernel

print('\nbest_kernel: {:8}\tval_accuracy: {:.2f}%'.format(best_kernel, 100*accuracy_max))6.将训练集和验证集组合训练上面选择的核函数SVM模型,在测试集上评估正确率,并查看各类别的查准率、查全率等指标

X_train_val = np.concatenate([x_train ,x_val])

y_train_val = np.concatenate([y_train,y_val]) #合并val集和train集

# 定义SVM 模型并训练数据,并在测试加以验证

SVM =SVC(kernel = 'rbf')

SVM.fit(X_train_val,y_train_val)

y_test_pred = SVM.predict(x_test)

test_accuracy = np.sum(y_test_pred==y_test)/y_test.shape[0]

print('SVM kernel: {:8}\ttest_accuracy: {:.2f}%'.format(best_kernel, 100*test_accuracy))

print(classification_report(y_test,y_test_pred))#调用classification_report进行评估

7.不同的核函数(linear, poly, rbf, sigmoid)作为SVM参数对分类结果的影响

linear:线性核函数,是在数据线性可分的情况下使用的,运算速度快,效果好。不足在于它不能处理线性不可分的数据。

poly:多项式核函数,多项式核函数可以将数据从低维空间映射到高维空间,但参数比较多,计算量大。

rbf:高斯核函数(默认),高斯核函数同样可以将样本映射到高维空间,但相比于多项式核函数来说所需的参数比较少,通常性能不错,所以是默认使用的核函数

sigmoid:sigmoid 核函数,sigmoid 经常用在神经网络的映射中。因此当选用 sigmoid 核函数时,SVM 实现的是多层神经网络。