双目相机 -- IMU联合标定

声明:一些图片是不该有水印的,CSDN把图片链接的格式改了,暂时还不知道怎么去掉,请见谅!!!

目录

-

- **声明**:一些图片是不该有水印的,CSDN把图片链接的格式改了,暂时还不知道怎么去掉,请见谅!!!

- 1、 IMU的标定

-

- 1.1 IMU数据读取

- 1.2 IMU数据滤波

- 1.3 录制rosbag包

- 1.4 kalibr_allan 标定

- 2、相机的标定

- 3、相机 - IMU联合标定

-

- 3.1 库安装

- 3.2 开始标定

- 参考

写在前面:其实联合标定用的 kalibr 可以直接完成相机标定、IMU标定、相机+IMU联合标定整个流程。这里只写了联合标定,另外两种之前不是用kalibr 做的,所以没有kalibr 的部分,感兴趣的同学可以去官网自己研究

1、 IMU的标定

1.1 IMU数据读取

首先要读取IMU数据,之前写过一篇,如果你的IMU也和我的一样没有官方ros历程的话,可以参考

1.2 IMU数据滤波

关于IMU滤波,不同IMU应该不一样,流程可以参考博客

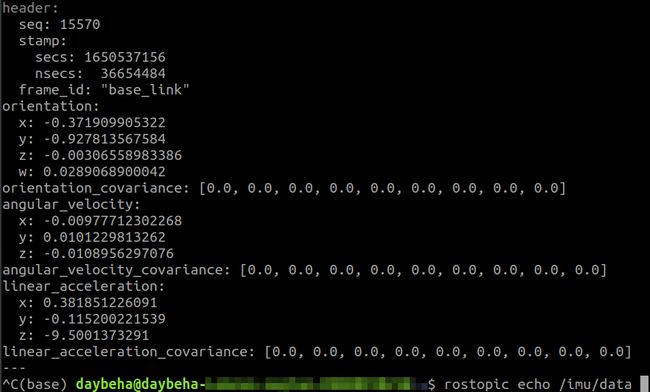

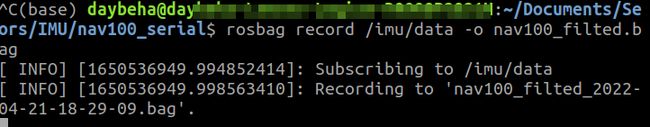

1.3 录制rosbag包

将滤波后的imu信息录制为rosbag

先查看 topic 列表

rostopic list

命令行查看 topic内容:

rostopic echo /topic

rosbag record /imu/data -o imu.bag

1.4 kalibr_allan 标定

这部分和博客1.2部分完全一致,注意修改topic话题名称为 “/imu/data” 即可。

亦可以使用上面博客的1.1部分的imu_utils,不过好像kalibr_allan 精度更高些。

2、相机的标定

之前写过两个:

MatLab的双目相机标定和orbslam双目参数匹配

ROS+Opencv的双目相机标定和orbslam双目参数匹配

也可以按照 kalibr 的来:

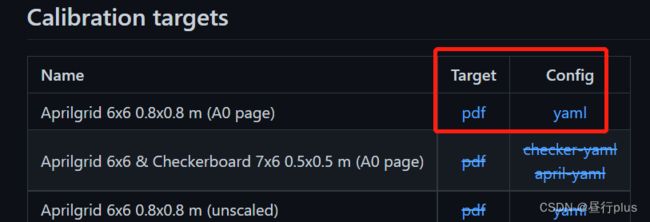

1、先下载kalibr提供的网格

2、相机发布频率改为4

rosrun topic_tools throttle messages /camera/left/image_raw 4.0 /stereo/left/image_raw

rosrun topic_tools throttle messages /camera/right/image_raw 4.0 /stereo/right/image_raw

3、录制ROS bag 包

rosbag record /stereo/left/image_raw /stereo/right/image_raw -O zed_images.bag

4、kalibr 标定:

kalibr_calibrate_cameras --target april_6x6_24x24mm.yaml --bag images.bag --bag-from-to 5 30 --models pinhole-radtan pinhole-equi --topics /stereo/left/image_raw /stereo/right/image_raw

kalibr支持多种相机模型

好像还可以用 ROS camera_calibration 包,记录一下,没有用过。

图像标定后的去畸变代码示例(来自github):

bool DuoCalibParam::initUndistortMap(const cv::Size &new_img_size) {

// compute the boundary of images in uv coordinate

/// 1、计算图像边界

this->Camera.lurd_lr.resize(2);

for (int lr = 0; lr < 2; ++lr) {

std::vector<cv::Point2f> lurd(4);

lurd[0].x = 0;

lurd[0].y = this->Camera.img_size.height / 2;

lurd[1].x = this->Camera.img_size.width / 2;

lurd[1].y = 0;

lurd[2].x = this->Camera.img_size.width;

lurd[2].y = this->Camera.img_size.height / 2;

lurd[3].x = this->Camera.img_size.width / 2;

lurd[3].y = this->Camera.img_size.height;

std::vector<cv::Point2f> lurd_undistort(4);

cv::undistortPoints(lurd, lurd_undistort,

this->Camera.cv_camK_lr[lr],

this->Camera.cv_dist_coeff_lr[lr]);

this->Camera.lurd_lr[lr][0] = lurd_undistort[0].x;

this->Camera.lurd_lr[lr][1] = lurd_undistort[1].y;

this->Camera.lurd_lr[lr][2] = lurd_undistort[2].x;

this->Camera.lurd_lr[lr][3] = lurd_undistort[3].y;

}

/// 2、计算双目校正参数

this->Camera.undistort_map_op1_lr.resize(2);

this->Camera.undistort_map_op2_lr.resize(2);

vector<cv::Mat> R(2);

vector<cv::Mat> P(2);

//转换Eigen::Matrix 为 cv:Mat类型

Matrix4f C0_T_C1 = this->Camera.D_T_C_lr[0].inverse() * this->Camera.D_T_C_lr[1];

Matrix4f C1_T_C0 = C0_T_C1.inverse();

cv::Mat C0_R_C1(3, 3, CV_64F);

cv::Mat C1_R_C0(3, 3, CV_64F);

cv::Mat C1_t_C0(3, 1, CV_64F);

cv::Mat C0_t_C1(3, 1, CV_64F);

for (int i = 0; i < 3; ++i) {

for (int j = 0; j < 3; ++j) {

C0_R_C1.at<double>(i, j) = C0_T_C1(i, j);

C1_R_C0.at<double>(i, j) = C1_T_C0(i, j);

}

C0_t_C1.at<double>(i, 0) = C0_T_C1(i, 3);

C1_t_C0.at<double>(i, 0) = C1_T_C0(i, 3);

}

cv::Matx44d Q;

cv::stereoRectify(this->Camera.cv_camK_lr[0],

this->Camera.cv_dist_coeff_lr[0],

this->Camera.cv_camK_lr[1],

this->Camera.cv_dist_coeff_lr[1],

this->Camera.img_size,

C1_R_C0, C1_t_C0,

R[0], R[1],

P[0], P[1],

Q, cv::CALIB_ZERO_DISPARITY, 0, new_img_size);

this->Camera.Q = cv::Matx44f(Q); // opencv好像对浮点类型也卡的比较死,没有自动类型转换

/// 3、计算双目去畸变map(映射)

this->Camera.cv_undist_K_lr.resize(2);

this->Camera.undist_D_T_C_lr.resize(2);

// 分别计算左右目图像的去畸变map

for (int lr = 0; lr < 2; lr++) {

cv::Mat newK(3, 3, CV_32F);

for (int i = 0; i < 3; ++i) {

for (int j = 0; j < 3; ++j) {

// note P[lr] doesn't work

newK.at<float>(i, j) = P[lr].at<double>(i, j);

}

}

cv::initUndistortRectifyMap(this->Camera.cv_camK_lr[lr],

this->Camera.cv_dist_coeff_lr[lr],

R[lr],

newK, // this->Camera.cv_camK_lr[lr], // newK

new_img_size,

CV_32FC1,

this->Camera.undistort_map_op1_lr[lr],

this->Camera.undistort_map_op2_lr[lr]);

this->Camera.cv_undist_K_lr[lr] = cv::Matx33f(newK);

this->Camera.undist_D_T_C_lr[lr].setIdentity();

}

// Compute the new *rectified* extrinsics

// In undist_D_T_C_lr, we assume D_T_C_l is identity, which means,

// the device {D} is *moved* to the new C_l (R[0] is actually Cl_new_R_Cl)

// Therefore, D_T_I needs to be adjusted as well to undist_D_T_I.

/// 4、计算去畸变后的cam0_to_cam1和 cam0_to_imu

cv::Mat new_C0_t_C1 = R[0] * C0_t_C1;

this->Camera.undist_D_T_C_lr[1](0, 3) = new_C0_t_C1.at<double>(0);

this->Camera.undist_D_T_C_lr[1](1, 3) = new_C0_t_C1.at<double>(1);

this->Camera.undist_D_T_C_lr[1](2, 3) = new_C0_t_C1.at<double>(2);

Eigen::Matrix4f new_D_T_D = Eigen::Matrix4f::Identity();

for (int i = 0; i < 3; ++i) {

for (int j = 0; j < 3; ++j) {

new_D_T_D(i, j) = static_cast<float>(R[0].at<double>(i, j));

}

}

this->Imu.undist_D_T_I = new_D_T_D * this->Imu.D_T_I;

return true;

}

3、相机 - IMU联合标定

3.1 库安装

安装依赖

sudo apt-get install python-setuptools python-rosinstall ipython libeigen3-dev libboost-all-dev doxygen libopencv-dev

sudo apt-get install libopencv-dev ros-melodic-vision-opencv ros-melodic-image-transport-plugins ros-melodic-cmake-modules software-properties-common libpoco-dev python-matplotlib python-scipy python-git python-pip ipython libtbb-dev libblas-dev liblapack-dev python-catkin-tools libv4l-dev

安装kalibr

mkdir -p kalibr_ws/src

git clone https://github.com/ethz-asl/Kalibr.git

cd ~/kalibr_ws

catkin build -DCMAKE_BUILD_TYPE=Release -j4

kalibr编译问题解决

3.2 开始标定

官方教程

先修改imu的发布频率,官方推荐为200Hz

rosrun topic_tools throttle messages /imu/data 200.0 /imu/data_raw

throttle的工具实测不好用,并不能精确达到想要的频率,特别是这种高频的数据,我运行该代码只能得到70多Hz的频率……

可能我的IMU频率本身也就200多?

1、 把IMU和相机固定在一起,录制ROS bag包

rosbag record /imu/data /camera/left/image_raw /camera/right/image_raw -o images_imu.bag

注:

1、录制的时候要注意按照官方的说法-充分激励IMU- 绕3个轴旋转和3个方向的平移,这里有个官方视频介绍,打不开的话有人把它搬到B站上了

2、三个topic按照自己的情况改。另外可以再加两个(非必要):/camera/left/camera_info /camera/right/camera_info

2、 启动校准包开始校准

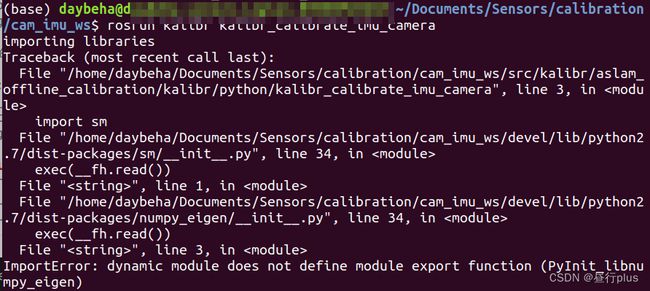

在运行上也有点问题:

可能是我系统里有anaconda导致的,解决方法是打开kalibr/aslam_offline_calibration/kalibr/python/kalibr_calibrate_imu_camera,第一行改成 #!/usr/bin/env python2(ros-melodic)

改好后运行下面代码开始标定

cd ~/kalibr_ws

source ./devel/setup.bash

rosrun kalibr kalibr_calibrate_imu_camera --target april_6x6_55x55mm.yaml --bag images_imu.bag --bag-from-to 5 50 --cam camchain.yaml --imu imu.yaml --imu-models scale-misalignment --timeoffset-padding 0.1

参数:

1)–target april_6x6_55x55mm.yaml: 描述标定板的信息

2)–bag images_imu.bag: 指定数据包

3)–bag-from-to 5 50: 设定bag包开始时间和结束时间,避开拿起和放下IMU的时间段内的数据(太人性化了!)

4)–cam camchain.yaml: 相机参数文件

5)–imu xsens_imu.yaml: 设定IMU标定信息

6)–imu-models scale-misalignment: IMU的参数模型

如果你报了优化失败的错,一定加上–timeoffset-padding,默认为0.03,我改到了0.1,也有改0.3的,视情况而定。值越大越慢,但至少没那么容易失败了。

1、另外可以加上–show extraction参数,以可视化校准目标提取过程。这可能有助于发现目标配置和提取的问题。

2、最少要有 –target,–cam,–imu,–bag四个参数

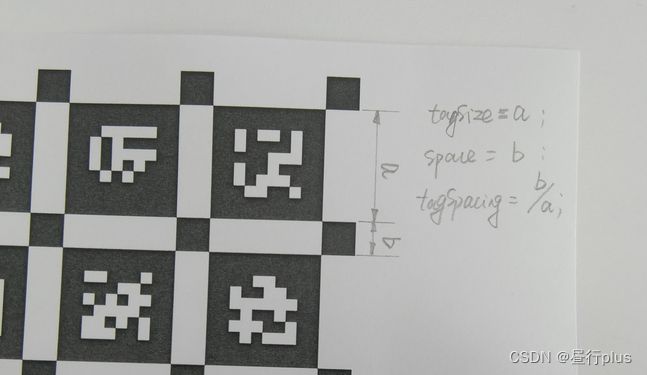

april_6x6_55x55mm.yaml文件内容:

target_type: 'aprilgrid' #gridtype

tagCols: 6 #number of apriltags

tagRows: 6 #number of apriltags

tagSize: 0.088 #size of apriltag, edge to edge [m]

tagSpacing: 0.3 #ratio of space between tags to tagSize

codeOffset: 0 #code offset for the first tag in the aprilboard

也可以用棋盘格!!!

// 棋盘格的设置与代码内参数设置一样即可

#example for checkerboard

target_type: 'checkerboard' #gridtype

targetRows: 12 #number of internal chessboard corners

targetCols: 8 #number of internal chessboard corners

rowSpacingMeters: 0.044 #size of one chessboard square [m]

colSpacingMeters: 0.044 #size of one chessboard square [m]

不过经过个人实践,实际上并不能跑通,会报错说提取不到特征点:

[FATAL] [1652801101.139525]: No corners could be extracted for camera /camera/left/image_raw! Check the calibration target configuration and dataset.

我没有去专研过源码,若有大佬经过还请为笔者解惑!!!

camchain.yaml 文件内容(注意内参和畸变参数的格式,相关参数格式可参考):

cam0:

camera_model: pinhole

intrinsics: [738.2309, 738.0446, 612.8741, 383.5233]

distortion_model: radtan

distortion_coeffs: [0.125858705085614,-0.164938923226198,-0.000829975384038096,0.000840974135014823]

resolution: [1280, 720]

rostopic: /camera/left/image_raw

cam1:

T_cn_cnm1:

- [ 9.997966e-01, 6.104940e-04, -2.015940e-02,6.024595e-02]

- [-5.637016e-04, 9.999971e-01, 2.326720e-03,-3.476903e-04]

- [2.016076e-02, -2.314883e-03, 9.997941e-01,2.469048e-02]

- [0.0, 0.0, 0.0, 1.0]

camera_model: pinhole

intrinsics: [737.7944,737.3724,634.835802105515,383.846288864773]

distortion_model: radtan

distortion_coeffs: [0.125858705085614,-0.164938923226198,-0.000564115553046124,0.000999594481541572]

resolution: [1280, 720]

rostopic: /camera/right/image_raw

注意两相机变换矩阵的单位为m,不再是相机标定时的mm!不改的话相机2的重投影误差会非常大!

如果你的两个相机是严格同步的,并且已经标定过两相机外参,则不必再有cam1,即:

cam0:

camera_model: pinhole

intrinsics: [738.2309, 738.0446, 612.8741, 383.5233]

distortion_model: radtan

distortion_coeffs: [0.125858705085614,-0.164938923226198,-0.000829975384038096,0.000840974135014823]

resolution: [1280, 720]

rostopic: /camera/left/image_raw

不要耍小聪明用立体校正后的相机内参,然后令畸变参数都为0。因为你可能会发现用到代码里会很麻烦。

imu.yaml 文件内容:

#Accelerometers

accelerometer_noise_density: 1.030e-3 #Noise density (continuous-time)

accelerometer_random_walk: 1.196e-3 #Bias random walk

#Gyroscopes

gyroscope_noise_density: 2.01e-4 #Noise density (continuous-time)

gyroscope_random_walk: 1.3e-5 #Bias random walk

rostopic: /IMU_data #the IMU ROS topic

update_rate: 200.0 #Hz (for discretization of the values above)

标定结果保存为 xxx-camchain-imucam.yaml,xxx-report-imu.yaml,xxx-report-imucam.pdf,xxx-results-imucam.txt 四个文件

其中.txt文件中的部分结果,最重要的是相机与imu的变换矩阵(相机到IMU(T_ci),IMU到相机都有(T_ic))

Calibration results

===================

Normalized Residuals

----------------------------

Reprojection error (cam0): mean 1.56945550752, median 1.44059444585, std: 0.829635923744

Gyroscope error (imu0): mean 21.1625990425, median 15.0336046344, std: 21.0766788496

Accelerometer error (imu0): mean 14.4663714006, median 11.6741933589, std: 11.0344310746

Residuals

----------------------------

Reprojection error (cam0) [px]: mean 1.56945550752, median 1.44059444585, std: 0.829635923744

Gyroscope error (imu0) [rad/s]: mean 0.0601561535077, median 0.0427340624062, std: 0.0599119194086

Accelerometer error (imu0) [m/s^2]: mean 0.220747631851, median 0.178140769815, std: 0.16837840403

Transformation (cam0):

-----------------------

T_ci: (imu0 to cam0):

[[-0.00335273 0.99990285 -0.01352977 0.00020972]

[-0.99918854 -0.00389278 -0.04008876 -0.00025106]

[-0.04013753 0.01338438 0.99910452 -0.00009292]

[ 0. 0. 0. 1. ]]

T_ic: (cam0 to imu0):

[[-0.00335273 -0.99918854 -0.04013753 -0.00025389]

[ 0.99990285 -0.00389278 0.01338438 -0.00020944]

[-0.01352977 -0.04008876 0.99910452 0.00008561]

[ 0. 0. 0. 1. ]]

timeshift cam0 to imu0: [s] (t_imu = t_cam + shift)

-0.148418387849

Gravity vector in target coords: [m/s^2]

[ 0.24594665 -9.75661592 -0.95727683]

Calibration configuration

=========================

cam0

-----

Camera model: pinhole

Focal length: [719.4553, 718.4151]

Principal point: [609.0952, 379.0658]

Distortion model: radtan

Distortion coefficients: [0.1293687, -0.1560079, -0.00257736, -0.0007919026]

Type: aprilgrid

Tags:

Rows: 6

Cols: 6

Size: 0.032 [m]

Spacing 0.009 [m]

IMU configuration

=================

IMU0:

----------------------------

Model: calibrated

Update rate: 200.0

Accelerometer:

Noise density: 0.001079

Noise density (discrete): 0.015259364338

Random walk: 0.000231

Gyroscope:

Noise density: 0.000201

Noise density (discrete): 0.00284256926037

Random walk: 1.3e-05

T_i_b

[[ 1. 0. 0. 0.]

[ 0. 1. 0. 0.]

[ 0. 0. 1. 0.]

[ 0. 0. 0. 1.]]

time offset with respect to IMU0: 0.0 [s]

参考

ros数据集录制:rosbag record

ROS读IMU数据

相机与IMU联合标定

camera-imu标定 联合标定