论文阅读-2022.1.2-A Neural Network Approach for_2016_一种用于知识驱动响应生成的神经网络方法

摘要

We present a novel response generation system.我们提出了一种新颖的响应生成系统。

The system assumes the hypothesis that participants in a conversation base their response not only on previous dialog utterances but also on their background knowledge.

系统假设对话的参与者不仅基于先前的对话话语而且基于他们的背景知识做出响应。

Our model is based on a Recurrent Neural Network (RNN) that is trained over concatenated sequences of comments, a Convolution Neural Network that is trained over Wikipedia sentences and a formulation that couples the two trained embeddings in a multimodal space.

我们的模型基于在串联评论序列上训练的循环神经网络 (RNN)、在维基百科句子上训练的卷积神经网络以及在多模态空间中耦合两个训练嵌入的公式。

We create a dataset of aligned Wikipedia sentences and sequences of Reddit utterances, which we we use to train our model.

我们创建了一个对齐的维基百科句子和 Reddit 话语序列的数据集,我们用它来训练我们的模型。

Given a sequence of past utterances and a set of sentences that represent the background knowledge, our end-to-end learnable model is able to generate context-sensitive and knowledge-driven responses by leveraging the alignment of two different data sources.

给定一系列过去的话语和一组代表背景知识的句子,我们的端到端可学习模型能够通过利用两个不同数据源的对齐来生成上下文敏感和知识驱动的响应。

Our approach achieves up to 55% improvement in perplexity compared to purely sequential models based on RNNs that are trained only on sequences of utterances.

与仅基于话语序列训练的基于 RNN 的纯序列模型相比,我们的方法将困惑度提高了 55%。

1.引言

Over the recent years, the level of users’ engagement and participation in public conversations on social media, such as Twitter, Facebook and Reddit has substantially increased.

近年来,用户在社交媒体(如 Twitter、Facebook 和 Reddit)上参与和参与公共对话的程度大大提高。

As a result, we now have large amounts of conversation data that can be used to train computer programs to be proficient conversation participants.

因此,我们现在拥有大量对话数据,可用于训练计算机程序成为熟练的对话参与者。

Automatic response generation could be immediately deployable in social media as an auto complete response suggestion feature or a conversation stimulant that adjusts the participation interest in a dialogue thread (Ritter et al., 2011).

自动响应生成可以立即部署在社交媒体中,作为自动完成响应建议功能或调整对话线程中参与兴趣的对话刺激物 (Ritter et al., 2011)。

Alan Ritter、Colin Cherry 和 William B. Dolan。 2011. 社交媒体中数据驱动的响应生成。在自然语言处理中的经验方法会议论文集,EMNLP '11,第 583-593 页,美国宾夕法尼亚州斯特劳兹堡。计算语言学协会。

It should also be beneficial in the development of Question Answering systems, by enhancing their ability to generate human-like responses (Grishman, 1979).

它还应该有利于问答系统的开发,通过增强它们生成类似人类的响应的能力(Grishman,1979)。

拉尔夫·格里什曼。 1979. 问答系统中的响应生成。在第 17 届计算语言学协会年会会议记录中,ACL '79,第 99-101 页,美国宾夕法尼亚州斯特劳兹堡。计算语言学协会。

=========================================================================

Recent work on neural networks approaches shows their great potential at tackling a wide variety of Natural Language Processing (NLP) tasks (Bengio et al., 2003; Mikolov et al., 2010).

最近关于神经网络方法的工作显示了它们在处理各种自然语言处理 (NLP) 任务方面的巨大潜力(Bengio 等人,2003 年;Mikolov 等人,2010 年)。

Yoshua Bengio、Rejean Ducharme、Pascal Vincent 和 Christian Janvin。 2003. 神经概率语言模型。 J. 马赫。学习。研究,3:1137-1155,三月。

Toma´s Mikolov、Martin Karafi ˇ at、Luk´a´s Burget、Jan Cernockˇ y 和 Sanjeev Khudanpur。 2010. 基于循环神经网络的语言模型。在INTERSPEECH 2010,国际语音通信协会第11届年会上,日本幕张,2010 年 9 月 26-30 日,第 1045-1048 页。

Since a conver[1]sation can be perceived as a sequence of utterances, recent systems that are employed in the automatic response generation domain are based on Recurrent Neural Networks (RNNs) (Sordoni et al., 2015; Shang et al., 2015; Vinyals and Le, 2015), which are powerful sequence models.

由于对话可以被视为一系列话语,最近在自动响应生成领域采用的系统基于循环神经网络 (RNN)(Sordoni 等人,2015 年;Shang 等人,2015 年;Vinyals 和 Le, 2015),它们是强大的序列模型。

Mitchell、Jian-Yun Nie、Jianfeng Gao和Bill Dolan。 2015. 一种用于上下文敏感生成会话响应的神经网络方法。计算语言学协会北美分会 2015 年会议论文集:人类语言技术,第 196-205 页,科罗拉多州丹佛,5 月至 6 月。计算语言学协会。

V. Le。 2015. 神经对话模型。 CoRR,abs/1506.05869。

尚立峰、郑东路、李航。 2015. 用于短文本对话的神经响应机。计算语言学协会第 53 届年会暨第七届自然语言处理国际联合会议论文集(第一卷:长论文),第 1577-1586 页,北京,

These systems base their generated response explicitly on a sequence of the most recent utterances of a conversation thread.

这些系统将其生成的响应明确地基于对话线程的最新话语序列。

Consequently, the sequence of characters, words, or comments, in a conversation, depending on the level of the model, is the only means with which these models achieve contextual-awareness, and in open-domain, realistic, situations it often proves inadequate (Vinyals and Le, 2015).

因此,对话中的字符、单词或评论的序列,取决于模型的级别,是这些模型实现上下文感知的唯一手段,而在开放域、现实的情况下,它通常被证明是不够的 (Vinyals 和 Le,2015 年)。

=========================================================================

In this paper we address the challenge of context-sensitive response generation.

在本文中,我们解决了上下文敏感响应生成的挑战。

We build a dataset that aligns knowledge from Wikipedia in the form of sentences with sequences of Reddit utterances. 我们构建了一个数据集,以句子的形式将来自维基百科的知识与 Reddit 话语序列对齐。

The dataset consists of sequences of comments and a number of Wikipedia sentences that were allocated randomly from the Wikipedia pages to which each sequence is aligned.该数据集由评论序列和许多维基百科句子组成,这些句子是从每个序列与之对齐的维基百科页面中随机分配的。

The resultant dataset consists of ∼ 15k sequences of comments that are aligned with ∼ 75k Wikipedia sentences. 结果数据集由 ~ 15k 条评论序列组成,与 ~ 75k 维基百科句子对齐。We make the aligned corpus available at github.com/pvougiou/Aligning-Reddit-and-Wikipedia.

我们在 github.com/pvougiou/Aligning-Reddit-and-Wikipedia 上提供对齐的语料库。

=========================================================================

We propose a novel model that leverages this alignment of two different data sources. 我们提出了一种利用两个不同数据源对齐的新颖模型。

Our architecture is based on coupling an RNN using either Long Short-Term Memory (LSTM) cells (Hochreite Schmidhuber, 1996) or Gated Recurrent Units (GRUs) (Cho et al., 2014) that processes each sequence of utterances word-by-word, and a Convolutional Neural Network (CNN) that extracts features from each set of sentences that corresponds to this sequence of utterances.

我们的架构基于使用长短期记忆 (LSTM) 单元 (Hochreite Schmidhuber, 1996) 或门控循环单元 (GRU) (Cho et al., 2014) 来耦合 RNN,该单元逐字处理每个话语序列,以及一个卷积神经网络 (CNN),它从对应于这个话语序列的每组句子中提取特征。

Sepp Hochreiter 和 Jrgen Schmidhuber。 1996. 通过重量猜测和“长短期记忆”弥合长时间滞后。在生物和人工系统中的时空模型中,第 65-72 页。 IOS出版社。

Kyunghyun Cho、Bart van Merrienboer、C? aglar Gulc?ehre、Fethi Bougares、Holger Schwenk 和 Yoshua Bengio。 ¨ 2014. 使用用于统计机器翻译的 RNN 编码器-解码器学习短语表示。 CoRR,abs/1406.1078。

We pre-train the CNN component (Kim, 2014) on a subset of the retrieved Wikipedia sentences in order to learn filters that are able to classify a sentence based on its referred topic.

我们在检索到的维基百科句子的子集上预训练 CNN 组件 (Kim, 2014),以学习能够根据引用主题对句子进行分类的过滤器。

尹金。 2014. 用于句子分类的卷积神经网络。在 2014 年自然语言处理经验方法会议 (EMNLP) 的会议记录中,第 1746-1751 页,卡塔尔多哈,10 月。计算语言学协会。

Our model assumes the hypothesis that each participant in a conversation bases their response not only on previous dialog utterances but also on their individual background knowledge. 我们的模型假设这样一个假设,即对话中的每个参与者的反应不仅基于先前的对话话语,而且基于他们的个人背景知识。

We use Wikipedia as the source of our model’s knowledge background and align Wikipedia pages and sequences of comments from Reddit based on a predefined topic of discussion.

我们使用维基百科作为我们模型知识背景的来源,并根据预定义的讨论主题对齐维基百科页面和来自 Reddit的评论序列。

=========================================================================

Our work is inspired by recent developments in the generation of textual summaries from visual data (Socher et al., 2014; Karpathy and Li, 2014). Our core insight stems from the idea that a system that is able to learn how to couple information from aligned datasets in order to produce a meaningful response, would be able to capture the context of a given conversation more accurately.

我们的工作受到视觉数据生成文本摘要的最新发展的启发(Socher 等,2014;Karpathy 和 Li,2014)。我们的核心见解源于这样一个想法:一个能够学习如何从对齐的数据集中耦合信息以产生有意义的响应的系统,将能够更准确地捕捉给定对话的上下文。

Richard Socher、Andrej Karpathy、Quoc V. Le、Christopher D. Manning 和 Andrew Y. Ng。 2014. 用于查找和描述带有句子的图像的基础组合语义。 TACL,2:207-218。

Andrej Karpathy 和李飞飞。 2014. 用于生成图像描述的深度视觉语义对齐。 CoRR,abs/1412.2306。

Our model achieves up to 55% improved perplexity compared to purely sequential equivalents. It should also be noted that our approach is domain independent; thus, it could be transferred out-of-box to a wide variety of conversation topics.

与纯顺序等效模型相比,我们的模型将困惑度提高了 55%。 还应该指出的是,我们的方法是独立于领域的; 因此,它可以开箱即用地传输到各种对话主题。

The structure of the paper is as follows. Section 2 discusses premises of our work regarding both automatic response generation and neural networks approaches for Natural Language Processing (NLP). Section 3 presents the components of the network. Section 4 describes the structure of the dataset. Sec tion 5 discusses the experiments and the evaluation of the models. Section 6 summarises the contributions of the current work and outlines future plans

论文的结构如下。 第 2 节讨论了我们在自然语言处理 (NLP) 的自动响应生成和神经网络方法方面的工作前提。 第 3 节介绍了网络的组成部分。 第 4 节描述了数据集的结构。 第 5 节讨论了模型的实验和评估。 第 6 节总结当前工作的贡献并概述未来计划

2 相关工作

Since Bengio’s introduction of neural networks in statistical language modelling (Bengio et al., 2003) and Mikolov’s demonstration of the extreme effectiveness of RNNs for sequence modelling (Mikolov et al., 2010), neural-network-based implementations have been employed for a wide variety of NLP tasks.

自从 Bengio 在统计语言建模中引入神经网络(Bengio 等人,2003 年)和 Mikolov 证明 RNN 在序列建模方面的极端有效性(Mikolov 等人,2010 年)以来,基于神经网络的实现已被用于各种各样的 NLP 任务。

In order to sidestep the exploding and vanishing gradients training problem of RNNs (Bengio et al., 1994; Pascanu et al., 2012), multi-gated RNN variants, such as the GRU (Cho et al., 2014) and the LSTM (Hochreiter and Schmidhuber, 1996), have been proposed.为了避免 RNN 的梯度爆炸和消失训练问题(Bengio 等人,1994 年;Pascanu 等人,2012 年),多门 RNN 变体,例如 GRU(Cho 等人,2014 年)和 LSTM (Hochreiter 和 Schmidhuber,1996),已经被提出。

Both GRUs and LSTMs have demonstrated state-of-the-art performance for many generative tasks, such as SMT (Cho et al., 2014; Sutskever et al., 2014), text (Graves, 2013) and image generation (Gregor et al., 2015)

GRU 和 LSTM 都展示了许多生成任务的最先进性能,例如 SMT(Cho 等人,2014 年;Sutskever 等人,2014 年)、文本(Graves,2013 年)和图像生成(Gregor 等人) 等,2015)

=========================================================================

Despite the fact that CNNs had been originally employed in the computer vision domain (LeCun et al., 1998), models based on the combination of the convolution operation with the classical Time[1]Delay Neural Network (TDNN) (Waibel et al., 1989) have proved effective on many NLP tasks, such as semantic parsing (Yih et al., 2014), Part-Of-Speech Tagging (POS) and Chunking (Collobert and Weston, 2008). Furthermore, sentence-level CNNs have been used in sentiment analysis and question type identification (Kalchbrenner et al., 2014; Kim, 2014).

尽管 CNN 最初被用于计算机视觉领域(LeCun 等人,1998),但基于卷积运算与经典时延神经网络(TDNN)相结合的模型(Waibel 等人,1989 年) 已经证明在许多 NLP 任务上是有效的,例如语义解析(Yih 等人,2014 年)、词性标注(POS)和分块(Collobert 和 Weston,2008 年)。 此外,句子级 CNN 已用于情感分析和问题类型识别(Kalchbrenner 等人,2014 年;Kim,2014 年)。

=========================================================================

The concept of a system capable of participating in human-computer conversations was initially proposed by Weizenbaum (Weizenbaum, 1966). 能够参与人机对话的系统的概念最初是由 Weizenbaum 提出的(Weizenbaum,1966)。

Weizenbaum implemented ELIZA, a keyword-based pro[1]gram that set the basis for all the descendant chatterbots. Weizenbaum 实施了 ELIZA,这是一个基于关键字的程序,为所有后代聊天机器人奠定了基础。

In the years that followed, many template-based approaches (Isbell et al., 2000; Walker et al., 2003; Shaikh et al., 2010) have been suggested in the scientific literature, as a way of transforming the computer into a proficient conversation participant.在随后的几年中,科学文献中提出了许多基于模板的方法(Isbell 等人,2000 年;Walker 等人,2003 年;Shaikh 等人,2010 年),作为将计算机转换为一个熟练的对话参与者。

However, these approaches usually adopt variants of the nearest-neighbour method to facilitate their response generation process from a number of limited sentence paradigms and, as a result, they are limited to specific topics or scenarios of conversation. 然而,这些方法通常采用最近邻方法的变体来促进它们从许多有限的句子范式中生成响应的过程,因此,它们仅限于特定的主题或对话场景。

Recently, models for Statistical Machine Translation have been used to generate short-length responses to a conversational incentive from Twitter utterances (Ritter et al., 2011).最近,统计机器翻译模型已被用于生成对 Twitter 话语的对话激励的简短响应(Ritter et al., 2011)。

In the recent literature, RNNs have been used as the fundamental component of conversational response systems (Sordoni et al., 2015; Shang et al., 2015; Vinyals and Le, 2015).在最近的文献中,RNN 已被用作对话响应系统的基本组成部分(Sordoni 等,2015;Shang 等,2015;Vinyals 和 Le,2015)。

Even though these systems exhibited significant improvements over SMT-based methods (Ritter et al., 2011), they either adopt the length-restricted-messages paradigm or are trained on idealised dataset that undermines the generation of responses in open domain realistic scenarios.

尽管这些系统比基于 SMT 的方法(Ritter 等人,2011 年)表现出显着改进,但它们要么采用长度限制消息范式,要么在理想化数据集上进行训练,这会破坏开放域现实场景中响应的生成。

We propose a novel architecture for context-sensitive response generation.我们为上下文敏感的响应生成提出了一种新颖的架构。

Our model is trained on a dataset that consists of realistic sequences of Reddit comments that aligned with sets of Wikipedia sentences.我们的模型是在一个数据集上进行训练的,该数据集由真实的 Reddit 评论序列组成,这些评论与维基百科句子集对齐。

We use an RNN and a CNN components to process the sequence of comments and their corresponding set of sentences respectively and we introduce a learnable coupling formulation.我们使用 RNN 和 CNN 组件分别处理评论序列及其相应的句子集,并引入了可学习的耦合公式

The coupling formulation is inspired by the Multimodal RNN that generates textual description from visual data (Karpathy and Li, 2014). However, unlike Karpathy’s approach, we do not allow the feature that is generated by the CNN component to diminish between distant timesteps (i.e. Section 3.3)耦合公式的灵感来自多模态 RNN,它从视觉数据生成文本描述(Karpathy 和 Li,2014 年)。 然而,与 Karpathy 的方法不同,我们不允许 CNN 组件生成的特征在远距离的时间步长之间减少(即第 3.3 节)

3 我们的模型

Our task is to generate a context-sensitive response to a sequence of comments by incorporating back ground knowledge.

我们的任务是通过结合背景知识对一系列评论生成上下文敏感的响应。

The proposed model is based on the assumption that each participant in a con versation phrases their responses by taking into consideration both the past dialog utterances and their individual knowledge background.所提出的模型基于这样一个假设,即对话中的每个参与者通过考虑过去的对话话语和他们的个人知识背景来表达他们的回答。

We train the model on a set of M sequences of Reddit comments and N summaries of Wikipedia pages that are related to the main discussed topic of a conversation. 我们在一组 M 个 Reddit 评论序列和 N 个与对话的主要讨论主题相关的维基百科页面摘要上训练模型。

During training our models takes as an input a sequence of one-hot3 vector representations of words x1, x2, ..., xT from a sequence of comments and a group of sentences S that is aligned with this se quence of utterances.

在训练期间,我们的模型将来自评论序列和与该话语序列对齐的一组句子 S 的单词 x1、x2、...、xT 的独热向量表示序列作为输入。

We use a sentence-level CNN, which processes the group of sentences, in parallel with a word-level RNN that processes the sequence of comments in batches and propose a formulation that learns to couple the two networks to produce a meaningful response to the preceding comments.

我们使用处理句子组的句子级 CNN 与分批处理评论序列的单词级 RNN 并行,并提出一个公式,该公式学习将两个网络耦合以对前面的内容产生有意义的响应

We experiment with two different commonly used RNN variants that are based on: (i) the LSTM cell and (ii) the GRU. We pre-train our CNN sentence model on a subset of the Wikipedia-sentences dataset in order for it to learn to classify a sentence based on the topic-keyword that was matched for its corre sponding page acquisition.我们试验了两种不同的常用 RNN 变体,它们基于:(i)LSTM 单元和(ii)GRU。 我们在 Wikipedia-sentences 数据集的一个子集上预训练我们的 CNN 句子模型,以便它学习根据与其相应页面获取匹配的主题关键字对句子进行分类。

Please note that since bias terms can be included in each weight-matrix multiplication (Bishop, 1995), they are not explicitly displayed in the equations that describe the models of this section.

请注意,由于偏差项可以包含在每个权重矩阵乘法中 (Bishop, 1995),它们没有明确显示在描述本节模型的方程中.

3.1 句子建模

Models based on CNNs achieve their basic functionality by convolving a sequence of inputs with a set of filters in order to extract local features. 基于 CNN 的模型通过将输入序列与一组过滤器进行卷积以提取局部特征来实现其基本功能。

We adopt the Convolutional Sentence Model from (Kim, 2014) and we expand it in order to meet our specific needs for a multi-class, rather than binary classification.

我们采用 (Kim, 2014) 的卷积句模型并对其进行扩展,以满足我们对多类而不是二元分类的特定需求。

Let t1:l the concatenation of the vectors of all the words that exist in a sentence s. A narrow type convolution operation with a filter m ∈ R k×m is applied to each m-gram in the sentence s in order to produce a feature map cmf ∈ R l−m+1 of features:

让![]() 是句子 s 中存在的所有单词的向量的串联。对句子 s 中的每个 m-gram 应用带有过滤器

是句子 s 中存在的所有单词的向量的串联。对句子 s 中的每个 m-gram 应用带有过滤器 ![]() 的窄型卷积运算,以生成特征映射

的窄型卷积运算,以生成特征映射![]() :

:

![]()

with l ≥ m. Shorter sentences are padded with zero vectors when necessary.l ≥ m。 必要时用零向量填充较短的句子。

The most relevant feature from each feature map is captured by applying the max-over-time pooling operation (Collobert and Weston, 2008).每个特征图中最相关的特征是通过应用最大时间池化操作(Collobert 和 Weston,2008)来捕获的。

The consequent matrix is the result of concatenating the max values from each feature map that has been produced by applying an f number of m length filters over the sentences.

结果矩阵是将每个特征映射的最大值连接起来的结果,该特征映射是通过在句子上应用 f 个长度为 m 的过滤器而产生的。

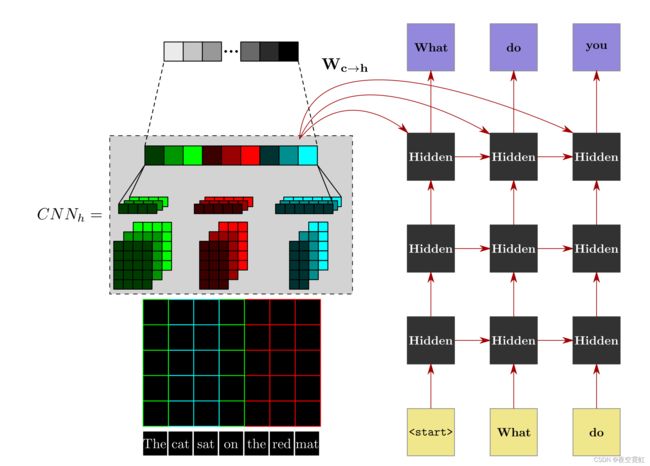

The network results in a fully-connected layer and a sof tmax that carries out the classification of the sentences. The architecture of the sentence model is illustrated on the left side of Figure 1.

该网络产生一个全连接层和一个执行句子分类的 softmax。 句子模型的架构如图 1 左侧所示。

Figure 1: The architecture of our generative model.我们的生成模型的架构。 At each timestep, the RNN is processing a word from a sequence of comments and the CNNh is extracting local features by convolving this sequence’s corresponding sentences with a set of three differently sized filters. 在每个时间步,RNN 都从一系列评论中处理一个单词,而 CNNh 通过将这个序列的相应句子与一组三个不同大小的过滤器进行卷积来提取局部特征。The red-coloured edges are the learnable parameters during training. 红色边是训练期间的可学习参数。Each comment in a sequence is augmented with start-of-comment and end-of-comment tokens.序列中的每个评论都增加了评论开始和评论结束标记。

3.2 序列建模

We describe two commonly used RNN variants that are based on: (i) the LSTM cell and (ii) the GRU. 我们描述了两种常用的 RNN 变体,它们基于:(i)LSTM 单元和(ii)GRU。

We experiment with both of them in order to explore which one serves better the sequential-modelling needs of our full architecture.我们对它们进行了试验,以探索哪一种能更好地满足我们完整架构的顺序建模需求。

Let h l t ∈ R n be the aggregated output of a hidden unit at timestep t = 1...T and layer depth l = 1...L.

设 ![]() 是隐藏单元在时间步长 t = 1...T 和层深度 l= 1...L 的聚合输出。

是隐藏单元在时间步长 t = 1...T 和层深度 l= 1...L 的聚合输出。

The vectors at zero layer depth, h 0 t = Wx→hxt , represent vectors that are given to the network as an input.

零层深度处的向量 ![]() 表示作为输入提供给网络的向量。

表示作为输入提供给网络的向量。

The parameter matrix Wx→h has dimensions [|X|, n], where |X| is the cardinality of all the potential one-hot input vectors. All the matrices that follow have dimension [n, n]

参数矩阵 ![]() 的维度为 [|X|, n],其中 |X| 是所有潜在的 one-hot 输入向量的基数。 后面的所有矩阵都具有维度 [n, n]

的维度为 [|X|, n],其中 |X| 是所有潜在的 one-hot 输入向量的基数。 后面的所有矩阵都具有维度 [n, n]

3.2.1 长短期记忆

Our LSTM cells’ architecture is adopted from (Zaremba and Sutskever, 2014):

我们的 LSTM 单元的架构来自 (Zaremba and Sutskever, 2014)

其中 ![]() 是时间步长 t 和层深度 l 处的向量,分别对应于输入门、遗忘门、输出门和单元

是时间步长 t 和层深度 l 处的向量,分别对应于输入门、遗忘门、输出门和单元

3.2.2 门控循环单元

The Gated Recurrent Unit was proposed as a less-complex implementation of the LSTM (Cho et al.,2014).门控循环单元被提议作为 LSTM 的一个不太复杂的实现(Cho 等人,2014)。

where resetl t , updatel t and eh l t are the vectors at timestep t and layer depth l that represent the values of the reset gate, the update gate and the hidden candidate respectively.

其中,![]() 是时间步长 t 和层深度 l 处的向量,分别表示重置门、更新门和隐藏候选的值。

是时间步长 t 和层深度 l 处的向量,分别表示重置门、更新门和隐藏候选的值。

3.3 耦合

After the pre-training of the CNN is complete, and the fully-connected and sof tmax layers are removed, the CNN is connected to the hidden units of the last layer L of the recurrent component. This is illustrated in Figure 1. The recurrent component is implemented with either LSTMs or GRUs. At each timestep, the RNN is processing a word from a sequence of comments and the CNN is extracting local features by convolving this sequence’s corresponding sentences with groups of differently sized filters. The red[1]coloured edges in Figure 1 represent the learnable parameters during training.

CNN的预训练完成后,去除全连接层和softmax层,将CNN连接到循环组件最后一层L的隐藏单元。 这在图 1 中进行了说明。循环组件是使用 LSTM 或 GRU 实现的。 在每个时间步,RNN 都从一系列评论中处理一个单词,而 CNN 通过将这个序列的相应句子与不同大小的过滤器组进行卷积来提取局部特征。 图 1 中的红色边代表训练期间的可学习参数。

The coupling formulation that follows is inspired by the Multimodal RNN that generates textual de[1]scription from visual data (Karpathy et al., 2014). Since, we do not want to allow the effect of sentence features, which represent the background knowledge of our model, to diminish between distant timesteps we differentiate from Karpathy’s approach; and instead of providing the feature that is generated by the CNN to the RNN only at the first timestep, we provide it at every timestep. Furthermore, Karpathy employs the simple RNN or Elman network (Elman, 1990) as the sequence modelling component of his architecture whereas we adopt multi-gated RNN variants.

随后的耦合公式受到多模态 RNN 的启发,该 RNN 从视觉数据生成文本描述(Karpathy 等,2014)。 因为,我们不想让代表我们模型背景知识的句子特征的影响在我们与 Karpathy 方法不同的遥远时间步之间减弱; 我们不是只在第一个时间步将 CNN 生成的特征提供给 RNN,而是在每个时间步提供它。 此外,Karpathy 采用简单的 RNN 或 Elman 网络(Elman,1990)作为其架构的序列建模组件,而我们采用多门 RNN 变体。

It should be noted that in the equations that follow, the term CNNh ∈ R PMF would refer to the output of the sentence-level CNN with its fully-connected and sof tmax layers disconnected, where MF is the group of all the feature maps that are generated for each different filter size. During training the resultant embeddings, which are computed by the CNNh processing a group of sentences S and the RNN variant processing the sequence of one-hot input word vectors x1, x2, ..., xT from the corresponding sequence of comments, are coupled in a hidden state h1, h2, ..., hT . The prediction for the next word is computed by projecting this hidden state ht to sequence of outputs y1, y2, ..., yT :

需要注意的是,在下面的等式中,术语![]() 指的是句子级 CNN 的输出,其全连接层和 softmax 层断开连接,其中 MF 是所有特征映射的组 为每个不同的过滤器尺寸生成。 在训练过程中,由 CNNh 处理一组句子 S 和 RNN 变体处理来自相应评论序列的单热输入词向量 x1, x2, ..., xT 的序列计算得到的结果嵌入被耦合 在隐藏状态 h1, h2, ..., hT 。 通过将此隐藏状态 ht 投影到输出序列 y1, y2, ..., yT 来计算下一个单词的预测:

指的是句子级 CNN 的输出,其全连接层和 softmax 层断开连接,其中 MF 是所有特征映射的组 为每个不同的过滤器尺寸生成。 在训练过程中,由 CNNh 处理一组句子 S 和 RNN 变体处理来自相应评论序列的单热输入词向量 x1, x2, ..., xT 的序列计算得到的结果嵌入被耦合 在隐藏状态 h1, h2, ..., hT 。 通过将此隐藏状态 ht 投影到输出序列 y1, y2, ..., yT 来计算下一个单词的预测:

4 数据集

We create a dataset4 of aligned sentences from Wikipedia and sequences of utterances from Reddit. A shared, fixed, vocabulary was used for both data sources. We treat Wikipedia as a “cleaner” data source and we formed our vocabulary in the following manner. First, we included all the words that occur 2 or more times in the Wikipedia sentences. Subsequently, from the Reddit sequences of comments, we included any words that occur at least 3 times across both data sources. The resultant shared dictionary includes 56280 of the most frequent words. Every out-of-vocabulary word is represented by a special NaN token.

我们创建了一个数据集,其中包含来自维基百科的对齐句子和来自 Reddit 的话语序列。 两个数据源都使用了一个共享的、固定的词汇表。 我们将 Wikipedia 视为“更干净”的数据源,并按以下方式形成了我们的词汇表。 首先,我们包含了在维基百科句子中出现 2 次或更多次的所有单词。 随后,从 Reddit 的评论序列中,我们包含了在两个数据源中出现至少 3 次的任何单词。 生成的共享词典包含 56280 个最常用的词。 每个词汇表外的单词都由一个特殊的 NaN 标记表示。

In constructing our dataset, our goal is to align Wikipedia sentences with sequences of comments from Reddit. We found that topics related to philosophy and literature are discussed on Reddit with the exchange of longer and more elaborative messages than the responses of the majority of conversational subjects on social media. A dataset that consists of long and detailed responses would provide more room for conversation incentives and would allow us to investigate the performance of our architectures against dialog exchanges with longer comments. We compiled a list of 35 predetermined topic-keywords from the philosophical and literary domain. By utilising the search feature of both the Reddit API5 and the MediaWiki API6 , we extracted: (i) sequences of comments from conversational threads most related to each keyword and (ii) the 300 Wikipedia pages most related to each keyword after carrying out Wikipedia’s automatic disambiguation procedure. In order to increase the homogeneity of the dataset in terms of the length of both the sequences of comments and the sentences, we excluded sequences and sentences whose length exceeded: (i) len − σ = 1140 and (ii) len − 2 · σ = 54 words respectively. The resultant dataset consists of 15460 sequences of Reddit comments and 75100 Wikipedia sentences.

在构建我们的数据集时,我们的目标是将维基百科的句子与 Reddit 的评论序列对齐。我们发现在 Reddit 上讨论与哲学和文学相关的话题时,交流的信息比社交媒体上大多数对话主题的回答更长、更详尽。由长而详细的响应组成的数据集将为对话激励提供更多空间,并使我们能够针对具有较长评论的对话交换来研究我们的架构的性能。我们编制了一份来自哲学和文学领域的 35 个预先确定的主题关键字列表。通过利用 Reddit API5 和 MediaWiki API6 的搜索功能,我们提取了:(i)来自与每个关键字最相关的对话线程的评论序列和(ii)在执行维基百科自动搜索后与每个关键字最相关的 300 个维基百科页面消歧程序。为了增加数据集在评论序列和句子长度方面的同质性,我们排除了长度超过的序列和句子:(i) len − σ = 1140 和 (ii) len − 2 · σ = 54。结果数据集由 15460 个 Reddit 评论序列和 75100 个维基百科句子组成。

4.1 Reddit

Reddit is absolved from the length-restricted-messages paradigm, facilitating the generation of longer and more meaningful responses. Furthermore, Reddit serves as an openly-available question-answering platform. Our research hypothesis is that a neural network trained on sequences of questions and their corresponding answers will be able to generate responses that escape the concept of daily-routine expressions, such as “good luck” or “have fun”, and facilitate a playground for more detailed and descriptive dialogue utterances.

Reddit 摆脱了长度限制消息范式,促进了更长、更有意义的响应的生成。 此外,Reddit 还是一个公开可用的问答平台。 我们的研究假设是,对一系列问题及其相应答案进行训练的神经网络将能够产生脱离日常表达概念的反应,例如“祝你好运”或“玩得开心”,并促进游乐场 以获得更详细和描述性的对话话语。

Each different conversation on Reddit starts with a user submitting a parent-comment on a subreddit. A sequence of utterances then succeeds this parent comment. Since we wanted to investigate how our model performs against long-term dependant dialog components, we set the depth of conversation to 5. Starting from the parent-comment, we follow the direction of the un-ordered tree of utterances until the fourth-level child-comment. If a comment (node) leads to n responses (children), we copy the observed sequence n times and for each sequence, we continue until the fourth response (leaf). Based on the above structural paradigm, we extracted sequences with at least four children-comments, of which we retained the only first four utterances along with the original parent-comment of the sequence. Note that each comment in a sequence is augmented with the respective start-of-comment and end-of[1]comment tokens.

Reddit 上的每个不同对话都始于用户在 subreddit 上提交父级评论。 一系列话语随后接续该父评论。 由于我们想研究我们的模型对长期依赖的对话组件的表现如何,我们将对话深度设置为 5。从父评论开始,我们沿着无序的话语树的方向直到第四级 子评论。 如果一个评论(节点)导致 n 个响应(子节点),我们将观察到的序列复制 n 次,对于每个序列,我们继续直到第四个响应(叶子)。 基于上述结构范式,我们提取了至少包含四个子评论的序列,其中我们仅保留了前四个话语以及序列的原始父评论。 请注意,序列中的每个评论都增加了相应的评论开始和评论结束标记。

我们的数据集对齐的示例。 一个评论序列与一组句子相结合。 这些句子是从维基百科页面中随机分配的,这些页面是根据与相应序列相同的搜索词 (Noam Chomsky) 提取的。

我们的数据集对齐的示例。 一个评论序列与一组句子相结合。 这些句子是从维基百科页面中随机分配的,这些页面是根据与相应序列相同的搜索词 (Noam Chomsky) 提取的。

4.2 Wikipedia

Wikipedia sentences are used as the knowledge background of our model. We chose to include only sentences from the Wikipedia summaries, since in preliminary experiments, we found that including all the textual material of a page introduces a lot of noise to our data. The 13410 Wikipedia summaries that matched the search criteria were split into sentences. Each sentence was labelled with the initial topic[1]keyword that was matched for its corresponding page acquisition. A subset of 30000 labelled sentences was used for pre-training the CNN component of our architecture.维基百科句子被用作我们模型的知识背景。 我们选择只包含维基百科摘要中的句子,因为在初步实验中,我们发现包含页面的所有文本材料会给我们的数据带来很多干扰。 符合搜索条件的 13410 条维基百科摘要被拆分成句子。 每个句子都标有与其相应页面获取匹配的初始主题关键字。 30000 个标记句子的子集用于预训练我们架构的 CNN 组件。

4.3 数据集对齐

We choose to align each sequence of Reddit utterances with 20 Wikipedia sentences. Both the Wikipedia pages to which the sentences correspond and the sequence of comments have been extracted using the same search-term. An example of the structure of the dataset is displayed in Table 1.

我们选择将每个 Reddit 话语序列与 20 个维基百科句子对齐。 句子对应的维基百科页面和评论序列都是使用相同的搜索词提取的。 数据集结构示例如表 1 所示。

5 实验

The full network was regularised by introducing a dropout (Zaremba et al., 2014) value of 0.4 to the non-recurrent connections between the last hidden state, ht , and the softmax layer of the network. In order to avoid any potential exploding gradients training problems, we enforce an l2 constraint on the gradients of the weights in order for them to be no greater than 5 (Sutskever et al., 2014)

通过向最后一个隐藏状态 ht 和网络的 softmax 层之间的非循环连接引入 0.4 的 dropout (Zaremba et al., 2014) 值,对整个网络进行了正则化。 为了避免任何潜在的梯度爆炸训练问题,我们对权重的梯度施加了 l2 约束,以使它们不大于 5(Sutskever 等人,2014 年)

The CNN component is trained with narrow convolutional filters of widths 3, 4, 5 and 6, with 300 feature maps each. We use the rectifier as activation function. All of the parameters were initialised with a random uniform distribution between −0.1 and 0.1. The network was trained for 10 epochs using stochastic gradient descent with a learning rate of 0.2. We regularised the network by introducing a dropout (Hinton et al., 2012) value of 0.7 to the connections between the pooling and the softmax layer of the network.

CNN 组件使用宽度为 3、4、5 和 6 的窄卷积滤波器进行训练,每个滤波器具有 300 个特征图。 我们使用rectifier 作为激活函数。 所有参数均以 -0.1 和 0.1 之间的随机均匀分布进行初始化。 该网络使用随机梯度下降以 0.2 的学习率训练了 10 个epoch。 我们通过在池化和网络的 softmax 层之间的连接中引入 0.7 的 dropout (Hinton et al., 2012) 值来规范网络。

For the recurrent component of our networks, we use 2 layers of (i) 1000 LSTM cells and (ii) 1000 GRUs, resulting in approximately 16M and 12M recurrent connections respectively. All of the pa[1]rameters are initialised with a random uniform distribution between −0.08 and 0.08. The networks were trained for 10 epochs, using stochastic gradient descent with a learning rate of 0.5. After the 7th epoch in the LSTM case and 3rd epoch in the GRU case, the learning rate was decayed by 0.2 every half epoch.

对于我们网络的循环组件,我们使用 2 层(i)1000 个 LSTM 单元和(ii)1000 个 GRU,分别产生大约 16M 和 12M 的循环连接。 所有参数都初始化为 -0.08 和 0.08 之间的随机均匀分布。 使用学习率为 0.5 的随机梯度下降对网络进行了 10 次训练。 在 LSTM 情况下的第 7 个 epoch 和 GRU 情况下的第 3 个 epoch 之后,学习率每半个 epoch 衰减 0.2。

The dataset is split into training, validation and test with respective portions of 80, 10 and 10. A sample of responses that is generated by our proposed systems is shown in Table 2.

数据集分为训练、验证和测试,比例分别为 80、10 和 10。表 2 显示了我们提出的系统生成的响应样本。

Table 2: Sample of responses that are generated by our proposed systems and their sequential equivalents. The sequences of comments and their corresponding sentences are sampled randomly from the test set.

表 2:由我们提出的系统及其顺序等价物生成的响应样本。 评论序列及其相应的句子是从测试集中随机抽取的。

table 3. Top: Automatic evaluation with the perplexity metric on the test set. Bottom: Average rating of the responses that are generated by each model based on human evaluation.

表3:顶部:使用测试集上的困惑度指标进行自动评估。 底部:每个模型根据人工评估生成的响应的平均评级。

5.1 实验结果

Examples of responses that are generated by our proposed systems and their respective purely sequential equivalents are shown in Table 2. The sequences of comments and their corresponding sentences are sampled randomly from the test set. Our architectures learn to couple information that exists in the sequence of comments with knowledge that is contained in the Wikipedia sentences and is, potentially, related to context of those comments

由我们提出的系统生成的响应示例及其各自的纯顺序等效项如表 2 所示。评论序列及其相应的句子是从测试集中随机采样的。 我们的架构学会将评论序列中存在的信息与维基百科句子中包含的知识相结合,并且可能与这些评论的上下文相关。

When a piece of information in the sequence of comments is successfully aligned with the content of its corresponding Wikipedia sentences a knowledgeable, context-sensitive response is generated. A representative example of this functionality is provided in the last sequence of comments in Table 2, where the context of the sequence is coupled with the fact that Chomsky supported Bernie Sanders in the United States presidential election (i.e. from the allocated to that sequence of Reddit utterances Wikipedia sentence: “In late 2015, Chomsky announced his support for Vermont U.S. senator Bernie Sanders in the upcoming 2016 United States presidential election.”7 ). In case no information alignment is identified between the content of the sequence of comments and the Wikipedia sentences, the generation procedure is based almost explicitly on the sequence of utterances, and a response is generated in a similar to the purely sequential models’ fashion.

当评论序列中的一条信息与其对应的维基百科句子的内容成功对齐时,就会生成知识渊博、上下文敏感的响应。 此功能的一个代表性示例在表 2 中的最后一个评论序列中提供,其中该序列的上下文与乔姆斯基在美国总统大选中支持伯尼·桑德斯的事实相结合(即从 Reddit 的分配到该序列的 话语维基百科一句话:“2015 年底,乔姆斯基宣布在即将到来的 2016 年美国总统大选中支持佛蒙特州美国参议员伯尼·桑德斯。”7)。 如果在评论序列的内容和维基百科句子之间没有确定信息对齐,生成过程几乎明确地基于话语序列,并以类似于纯序列模型的方式生成响应。

5.2 自动评估

We use perplexity on the test set to evaluate our proposed models against their purely sequential equivalents. Perplexity measures the cross-entropy between the predicted sequence of words and the actual, empirical, sequence of words. The results are illustrated in the top part of Table 3. Our proposed architectures achieve 55% and 45% improvement in perplexity compared to their respective purely LSTM[1]and GRU-based equivalents

我们在测试集上使用 perplexity 来评估我们提出的模型与其纯顺序等价物的对比。 Perplexity 衡量预测的单词序列与实际的、经验的单词序列之间的交叉熵。 结果显示在表 3 的顶部。与它们各自的纯 LSTM 和基于 GRU 的等价物相比,我们提出的架构在困惑度上分别提高了 55% 和 45%

5.3 人工评估

Human evaluation was conducted using research students and staff from the School of Electronics and Computer Science of the University of Southampton. The evaluators were provided with a table of 10 randomly selected sequences of Reddit comments along with the response that is generated by our proposed models and their purely sequential equivalents. In order to simplify our task, we included only sequences of comments with a length less than 100 words. The name of the models to which each response corresponds were anonymised. The authors excluded themselves from this evaluation procedure. The evaluators were asked to rate each generated response from 1 to 5, with 1 indicating a very bad response, based on how well it fits the context of the corresponding sequence of comments.

人类评估是使用南安普敦大学电子与计算机科学学院的研究生和工作人员进行的。 向评估者提供了一个表格,其中包含 10 个随机选择的 Reddit 评论序列,以及由我们提出的模型及其纯顺序等效模型生成的响应。 为了简化我们的任务,我们只包含长度小于 100 字的评论序列。 每个响应对应的模型名称都是匿名的。 作者将自己排除在此评估程序之外。 评估人员被要求从 1 到 5 对每个生成的响应进行评分,1 表示非常差的响应,基于它与相应评论序列的上下文的吻合程度。

Table 3 presents the average rating of each model’s responses based on human evaluation. Even though our decision to apply a restriction over the length of the sequences of utterances, which were included in the human evaluation experiment, brings us in an agreement with literature that challenges the reliability of automatic evaluation methods, such as perplexity, in the domain of short-length responses (Ritter et al., 2011), we argue that an experiment at a larger scale, absolved from significant simplification choices, would demonstrate an alignment between the human judgements and the automatic evaluation results.

表 3 显示了基于人工评估的每个模型响应的平均评分。 尽管我们决定对包含在人类评估实验中的话语序列的长度进行限制,使我们与在以下领域挑战自动评估方法的可靠性的文献达成一致,例如困惑 短响应(Ritter et al., 2011),我们认为,一个更大规模的实验,从显着的简化选择中解脱出来,将证明人类判断和自动评估结果之间的一致性。

6 结论

To the best of our knowledge this work constitutes the first attempt for building an end-to-end learnable system for automatic context-sensitive response generation by leveraging the alignment of two different data sources. We proposed a novel system that incorporates background knowledge in order to capture the context of a conversation and generate a meaningful response. This paper made the following contributions: We built a dataset that aligns knowledge from Wikipedia in the form of sentences with sequences of Reddit utterances; and, we designed a neural network archi tecture that learns to couple information from different types of textual data in order to capture the context of a conversation and generate a meaningful response. Our approach achieved up to 55% improvement in perplexity compared to purely sequential models based on RNNs that are trained only on sequences of utterances. It should also be noted that despite the fact that our dataset focuses on the philosophical and literary domain, the design procedure could be transferred out-of-the-box to a great variety of domains. Arguments could be made against the performance gain of our architectures against human evaluation. Based on the reported low performance of purely LSTM-based models on very long-term dependant datasets (Sutskever et al., 2014), we believe that an experiment at a larger scale without a restriction over the length of the sequences of utterances (Section 5.3) would emphasise the superiority of our approach. We believe that further work on the coupling formulation that is proposed in Section 3.3 could provide additional improvements to the results of this work. An additional direction for future work could be the introduction of a complimentary, to the current procedure, task that would enhance the quality of the information alignment from the two data sources

据我们所知,这项工作是通过利用两个不同数据源的对齐来构建端到端可学习系统以自动生成上下文敏感响应的第一次尝试。我们提出了一个新的系统,它结合了背景知识,以捕捉对话的上下文并产生有意义的响应。本文做出了以下贡献: 我们构建了一个数据集,以句子的形式将来自维基百科的知识与 Reddit 话语序列对齐;并且,我们设计了一个神经网络架构,该架构可以学习从不同类型的文本数据中耦合信息,以捕捉对话的上下文并生成有意义的响应。与仅基于话语序列训练的基于 RNN 的纯序列模型相比,我们的方法将困惑度提高了 55%。还应该指出的是,尽管我们的数据集专注于哲学和文学领域,但设计过程可以开箱即用地转移到各种各样的领域。可以针对我们的架构与人类评估的性能提升提出争论。基于所报告的基于 LSTM 的模型在非常长期依赖的数据集上的低性能(Sutskever 等人,2014 年),我们认为,在不限制话语序列长度的情况下进行更大规模的实验(第5.3) 将强调我们方法的优越性。我们相信,在第 3.3 节中提出的耦合公式的进一步工作可以为这项工作的结果提供额外的改进。未来工作的另一个方向可能是在当前程序的基础上引入一项补充任务,以提高来自两个数据源的信息对齐的质量。

参考文献

Y. Bengio、P. Simard 和 P. Frasconi。 1994. 用梯度下降学习长期依赖很困难。翻译神经。网络,5(2):157–166,三月。

Yoshua Bengio、Rejean Ducharme、Pascal Vincent 和 Christian Janvin。 2003. 神经概率语言模型。 J. 马赫。学习。研究,3:1137-1155,三月。

克里斯托弗·M·毕晓普。 1995. 用于模式识别的神经网络。牛津大学出版社,美国纽约州纽约市。

Kyunghyun Cho、Bart van Merrienboer、C? aglar Gulc?ehre、Fethi Bougares、Holger Schwenk 和 Yoshua Bengio。 ¨ 2014. 使用用于统计机器翻译的 RNN 编码器-解码器学习短语表示。 CoRR,abs/1406.1078。

罗南科洛伯特和杰森韦斯顿。 2008. 自然语言处理的统一架构:具有多任务学习的深度神经网络。在第 25 届机器学习国际会议论文集,ICML '08,第 160-167 页,纽约,纽约,美国。 ACM。

杰弗里·L·埃尔曼。 1990. 及时发现结构。认知科学,14(2):179-211。亚历克斯·格雷夫斯。 2013. 使用循环神经网络生成序列。 CoRR,abs/1308.0850。

Karol Gregor、Ivo Danihelka、Alex Graves 和 Daan Wierstra。 2015. DRAW:用于图像生成的循环神经网络。 CoRR,abs/1502.04623。

拉尔夫·格里什曼。 1979. 问答系统中的响应生成。在第 17 届计算语言学协会年会会议记录中,ACL '79,第 99-101 页,美国宾夕法尼亚州斯特劳兹堡。计算语言学协会。

Geoffrey E. Hinton、Nitish Srivastava、Alex Krizhevsky、Ilya Sutskever 和 Ruslan Salakhutdinov。 2012. 通过防止特征检测器的共同适应来改进神经网络。 CoRR,abs/1207.0580。

Sepp Hochreiter 和 Jrgen Schmidhuber。 1996. 通过重量猜测和“长短期记忆”弥合长时间滞后。在生物和人工系统中的时空模型中,第 65-72 页。 IOS出版社。

Charles Lee Isbell, Jr.、Michael J. Kearns、Dave Kormann、Satinder P. Singh 和 Peter Stone。 2000. lambdamoo 中的 Cobot:社会统计代理。在第十七届全国人工智能大会和第十二届人工智能创新应用大会论文集,第 36-41 页。 AAAI 出版社。

Nal Kalchbrenner、Edward Grefenstette 和 Phil Blunsom。 2014. 用于句子建模的卷积神经网络。 CoRR,abs/1404.2188。

Andrej Karpathy 和李飞飞。 2014. 用于生成图像描述的深度视觉语义对齐。 CoRR,abs/1412.2306。

Andrej Karpathy、Armand Joulin 和李飞。 2014. 双向图像句子映射的深度片段嵌入。在 Z. Ghahramani, M. Welling, C. Cortes, N.d.劳伦斯和 K.q. Weinberger,编辑,神经信息处理系统进展 27,第 1889-1897 页。 Curran Associates, Inc.

尹金。 2014. 用于句子分类的卷积神经网络。在 2014 年自然语言处理经验方法会议 (EMNLP) 的会议记录中,第 1746-1751 页,卡塔尔多哈,10 月。计算语言学协会。

Y. LeCun、L. Bottou、Y. Bengio 和 P. Haffner。 1998. 基于梯度的学习应用于文档识别。 IEEE 会议录,86(11):2278–2324,11 月

Toma´s Mikolov、Martin Karafi ˇ at、Luk´a´s Burget、Jan Cernockˇ y 和 Sanjeev Khudanpur。 2010. 基于循环神经网络的语言模型。在INTERSPEECH 2010,国际语音通信协会第11届年会上,日本幕张,2010 年 9 月 26-30 日,第 1045-1048 页。 Razvan Pascanu、Toma 的 Mikolov 和 Yoshua Bengio。 2012. 理解梯度爆炸问题。 ˇ CoRR,abs/1211.5063。

Alan Ritter、Colin Cherry 和 William B. Dolan。 2011. 社交媒体中数据驱动的响应生成。在自然语言处理中的经验方法会议论文集,EMNLP '11,第 583-593 页,美国宾夕法尼亚州斯特劳兹堡。计算语言学协会。

Samira Shaikh、Tomek Strzalkowski、Sarah Taylor 和 Nick Webb。 2010. Vca:多方虚拟聊天代理的实验。在 2010 年同伴对话系统研讨会的会议录中,CDS '10,第 43-48 页,美国宾夕法尼亚州斯特劳兹堡。计算语言学协会。

尚立峰、郑东路、李航。 2015. 用于短文本对话的神经响应机。计算语言学协会第 53 届年会暨第七届自然语言处理国际联合会议论文集(第一卷:长论文),第 1577-1586 页,北京,

中国,七月。计算语言学协会。

Richard Socher、Andrej Karpathy、Quoc V. Le、Christopher D. Manning 和 Andrew Y. Ng。 2014. 用于查找和描述带有句子的图像的基础组合语义。 TACL,2:207-218。

Alessandro Sordoni、Michel Galley、Michael Auli、Chris Brockett、Yangfeng Ji、Margaret Mitchell、Jian-Yun Nie、Jianfeng Gao和Bill Dolan。 2015. 一种用于上下文敏感生成会话响应的神经网络方法。计算语言学协会北美分会 2015 年会议论文集:人类语言技术,第 196-205 页,科罗拉多州丹佛,5 月至 6 月。计算语言学协会。

Ilya Sutskever、Oriol Vinyals 和 Quoc V Le。 2014. 使用神经网络进行序列到序列学习。在 Z. Ghahramani、M. Welling、C. Cortes、N. D. Lawrence 和 K. Q. Weinberger,编辑,神经信息处理系统进展 27,第 3104-3112 页。 Curran Associates, Inc. Oriol Vinyals 和 Quoc V. Le。 2015. 神经对话模型。 CoRR,abs/1506.05869。

A. Waibel、T. Hanazawa、G. Hinton、K. Shikano 和 K. J. Lang。 1989. 使用延时神经网络的音素识别。 IEEE Transactions on Acoustics, Speech, and Signal Processing, 37(3):328–339, Mar.

玛丽莲·沃克、拉什米·普拉萨德和支架。 2003. 多模式对话中推荐的可训练生成器。在《欧洲语言学论文集》,第 1697-1701 页。

约瑟夫·魏泽鲍姆。 1966. Eliza——一个用于研究人机自然语言交流的计算机程序。社区。 ACM,9(1):36–45,一月。

Wen-tau Yih、Xiaodong He 和 Christopher Meek。 2014. 单关系问答的语义解析。在计算语言学协会第 52 届年会的会议记录中,ACL 2014,2014 年 6 月 22-27 日,美国马里兰州巴尔的摩,第 2 卷:短论文,第 643-648 页。

Wojciech Zaremba 和 Ilya Sutskever。 2014. 学习执行。 CoRR,abs/1410.4615。

Wojciech Zaremba、Ilya Sutskever 和 Oriol Vinyals。 2014. 循环神经网络正则化。 CoRR,abs/1409.2329。

![\mathbf{c}_{\mathbf{m f}}=\left[\begin{array}{c} c_{m f_{1}} \\ \vdots \\ c_{m f_{3}} \end{array}\right]](http://img.e-com-net.com/image/info8/6c1dd8d4d6374915bee6cd3d3c364201.gif)