ffmpeg: custom IO with AVIOContext, writing the output to a buffer

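Introduction

Our project needed ffmpeg, the powerful audio/video processing toolkit, to transcode audio. The requirement was that the transcoded file never touch the disk. Instead it goes straight into an in-memory buffer for the next module to consume.

Ours is a C++ project that passes audio data around by reading and writing memory buffers. Calling the ffmpeg C API directly is therefore the simplest and most direct solution.

There are countless ffmpeg examples out there, but very few actually use the API to read from and write to memory buffers. Starting from doc/examples/transcoding.c, I added the ability to write the output to a buffer. In testing I hit two problems:

- The effective duration of the audio in my buffer was always wrong.
- The file was about twice the size of the one produced by the command line.

Tracking down and fixing these two issues took me more than a week.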

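Glossary

Before walking through the code, here is a brief introduction to the relevant terms. They will make the code that follows easier to understand: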

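- AVFormatContext: the context struct for a container; it holds the format information, the streams and the IO context (pb)

- Muxer: writes encoded data, as AVPackets, into a container file of a given format

- Demuxer: reads an audio/video file and splits it into AVPackets

- codec: a compression algorithm, broadly either lossy or lossless

- encoder/decoder: the component that encodes or decodes with a given codec

- container: a container format, i.e. the specification for storing several streams (audio, video, subtitles, etc.) together in one file of a particular format

- transcoding: converting audio/video from one encoding to another

- transmuxing: converting audio/video from one container format to another, e.g. mp4 to mkv

- sample rate: the number of samples per second, measured in hertz (Hz), e.g. 48 kHz means 48,000 samples per second

- bit rate: the number of bits per second of media

- sample format: how a single sample is represented, e.g. as a 16-bit signed integer (in ffmpeg, AVSampleFormat also distinguishes packed from planar layouts)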
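For uncompressed (PCM) audio, the sample rate, bits per sample and channel count together fix the data rate. This is handy for sanity-checking buffer sizes and durations like the ones discussed later. Below is a minimal sketch, assuming interleaved integer PCM; the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Bit rate of uncompressed PCM audio in bits per second. */
static int64_t pcm_bit_rate(int sample_rate, int bits_per_sample, int channels) {
    return (int64_t)sample_rate * bits_per_sample * channels;
}

/* Seconds of audio represented by data_bytes of interleaved PCM. */
static double pcm_duration_seconds(int64_t data_bytes, int sample_rate,
                                   int bits_per_sample, int channels) {
    int64_t bytes_per_second = pcm_bit_rate(sample_rate, bits_per_sample, channels) / 8;
    assert(bytes_per_second > 0);
    return (double)data_bytes / (double)bytes_per_second;
}
```

For example, 48 kHz, 16-bit stereo PCM runs at 1,536,000 bit/s, or 192,000 bytes per second. So 192,000 bytes of data correspond to one second of audio. For formats whose duration is derived from the data size, doubling the byte count (for instance by appending padding) doubles the computed duration.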

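ffmpeg architecture

The overall flow is roughly as follows:

1. The demuxer splits the input file into its audio, video, subtitle and other streams (demuxing).
2. The encoded data is sent, packet by packet, to the decoder. The decoder decompresses each packet into frames using the codec identified by codec_id (decoding).
3. The frames can optionally be filtered, for example by splitting channels or downsampling (filtering).
4. The frames are encoded back into packets with the target codec_id (encoding).
5. The muxer packages the packets and writes them to the output file (muxing).

That is the complete pipeline ffmpeg uses to process audio and video.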

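For a more detailed flow chart, see the diagram in Lei Xiaohua's slides.

A brief explanation of the layers:

- protocol layer: reads and writes the underlying file. ffmpeg supports many kinds of sources, such as local files, HTTP or RTMP

- format layer: through demuxers and muxers, reads and writes the container metadata. It also separates (demuxes) or packages (muxes) the multiple streams, including audio, video and subtitles

- codec layer: through decoders and encoders, converts between encoded packets and decoded (uncompressed) frames

- pixel layer (filter layer): applies filters and conversions to frame data.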
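The protocol layer is the part that a custom AVIOContext replaces. Instead of opening a local file or an HTTP/RTMP URL, libavformat calls user-supplied callbacks. doc/examples/avio_reading.c uses this mechanism to read input from memory, and its read callback can be sketched roughly as below. A few names here are assumptions for illustration:

- `MemInput` and `mem_read` are hypothetical names.
- `SKETCH_AVERROR_EOF` is a local stand-in so the code builds without FFmpeg headers. Real code should return `AVERROR_EOF`.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Local stand-in with the same value as FFmpeg's AVERROR_EOF,
 * i.e. FFERRTAG('E','O','F',' '); real code uses AVERROR_EOF. */
#define SKETCH_AVERROR_EOF (-0x20464F45)

/* Hypothetical in-memory input, passed to the callback as `opaque`. */
typedef struct MemInput {
    const uint8_t *data; /* whole input file already in memory */
    size_t size;         /* number of bytes in data */
    size_t pos;          /* next byte to hand to libavformat */
} MemInput;

/* read_packet callback: copy up to buf_size bytes, signal EOF at the end. */
static int mem_read(void *opaque, uint8_t *buf, int buf_size) {
    MemInput *in = opaque;
    assert(buf_size > 0);
    size_t left = in->size - in->pos;
    if (left == 0)
        return SKETCH_AVERROR_EOF;
    size_t n = (size_t)buf_size < left ? (size_t)buf_size : left;
    memcpy(buf, in->data + in->pos, n);
    in->pos += n;
    return (int)n;
}
```

In a real program the callback would be registered with something like `avio_alloc_context(avio_buf, avio_buf_size, 0, &in, mem_read, NULL, NULL)`, where `avio_buf` comes from `av_malloc()`. The resulting context is then assigned to `fmt_ctx->pb` on a context created with `avformat_alloc_context()`, before calling `avformat_open_input()`.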

Common operations

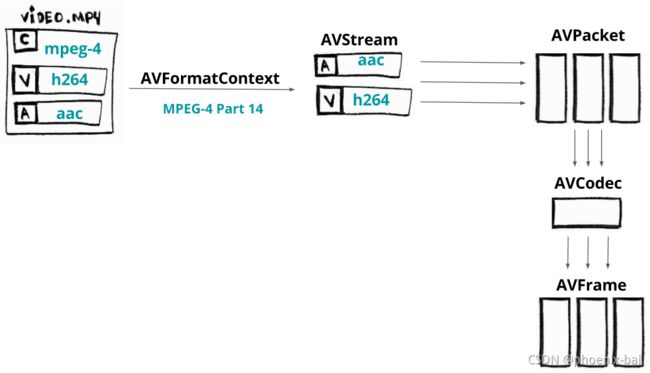

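Decoding

In the figure below, video.mp4 is an MP4 container holding an AAC-encoded audio stream and an H.264-encoded video stream. Decoding proceeds as follows:

1. An AVFormatContext instance reads the file and separates out the audio stream (aac AVStream) and the video stream (h264 AVStream).
2. AVPackets are read from the container, each tagged with the index of the AVStream it belongs to.
3. Each packet is decoded with the matching decoder (aac/h264), producing uncompressed AVFrames.

This is the typical decoding process.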

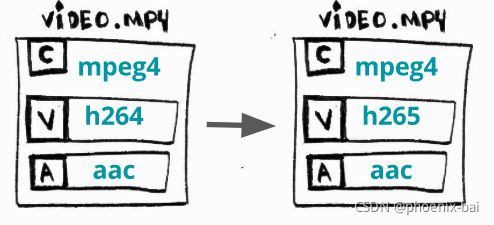

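Transcoding

Transcoding means converting from one encoding to another. In the example below, the video stream is converted from H.264 to H.265 with this command line: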

ffmpeg -i video.mp4 -c:v libx265 video_h265.mp4

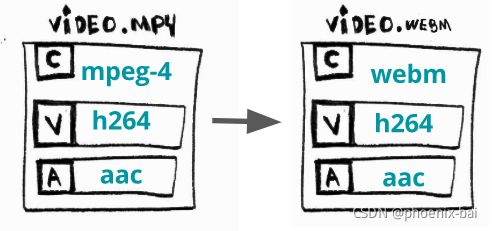

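Transmuxing (changing the container format)

Transmuxing means converting from one container format to another. No decoding or encoding takes place: the original packets are copied directly into the new container. Stream copy only works when the target container supports the source codecs. For instance, WebM accepts only VP8/VP9/AV1 video and Vorbis/Opus audio, so an H.264/AAC MP4 cannot be copied into it as-is. The command line: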

ffmpeg -i video.mp4 -c copy video.webm

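Building the ffmpeg examples

The doc/examples directory of the ffmpeg source tree contains many examples of calling the API. Below are some problems I ran into while compiling a few of them.

Notes on building avio_reading.c locally:

○ The link order of avformat, avcodec and avutil matters. When linking static libraries, a library must come before the libraries it depends on (-lavformat -lavcodec -lavutil). See the Makefile for details

○ Undefined references to swr_convert, swr_init, swr_close, etc.: add -lswresample

○ Undefined references to sin, cos, log, etc.: add -lm

○ Undefined references related to pthread: add -lpthread

○ Errors related to compression (zlib): add -lz

○ Errors about lzma_* symbols: add -llzma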

gcc -Wall -o avio_reading avio_reading.c -I./rpm/usr/local/include -L./rpm/usr/local/lib -lavformat -lavcodec -lavutil -lswresample -lm -lpthread -lz -llzma

filtering_audio.c is similar:

gcc -Wall -o filtering_audio doc/examples/filtering_audio.c -I./rpm/usr/local/include -L./rpm/usr/local/lib -lavfilter -lavformat -lavcodec -lavutil -lswresample -lswscale -lm -lpthread -lz -llzma

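To see the output of ffmpeg's av_log(), set the log level in main():

#include <libavutil/log.h>

int main(int argc, char **argv)
{
    av_log_set_level(AV_LOG_DEBUG); /* print av_log() messages up to debug verbosity */

    /* ... rest of the program ... */
    return 0;
}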

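Code walkthrough

(Our use case processes audio, so the explanation uses audio as the example.)

transcoding.c implements the typical transcoding pipeline: read the input, decode, filter, encode, and write the output file.

Starting from transcoding.c, I changed the output logic so that it writes to a given buffer instead of a file. Along the way I hit two problems:

- After writing the buffer out to a file, the duration was always wrong, much longer than the actual duration.

- The file size also differed from the command-line output: it was larger.

After investigation, the causes turned out to be:

- When you use ffmpeg's custom IO interface to define your own output, you must provide two callbacks, write() and seek(). The effective duration of the audio is only known once all the data has been written to the buffer. At that point the muxer calls seek() to jump back to the header and updates the duration field at its fixed position. This means that write() and seek() are both required!

- When the buffer was written to a file, the length was not limited to the valid data in the buffer. The whole buffer was written, so the audio file ended with a long run of empty (zero) bytes.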
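Both fixes can be illustrated with a pair of callbacks over a growable memory buffer. This is a minimal sketch, not the author's code, and it rests on a few assumptions:

- `MemOutput`, `mem_write`, `mem_seek`, `mem_save` and `mem_free` are hypothetical names.
- `AVSEEK_SIZE` and `AVSEEK_FORCE` are redefined locally with the values from libavformat/avio.h, only so the sketch compiles standalone.

The key points are these. seek() supports SEEK_SET/SEEK_CUR/SEEK_END plus the AVSEEK_SIZE query. `size` tracks the highest byte actually written, so rewriting the header after seeking back does not grow the valid length. Only `size` bytes are saved, never the whole allocation.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Local copies of the values in libavformat/avio.h so the sketch builds
 * without FFmpeg; in real code include <libavformat/avio.h> instead. */
#ifndef AVSEEK_SIZE
#define AVSEEK_SIZE 0x10000
#endif
#ifndef AVSEEK_FORCE
#define AVSEEK_FORCE 0x20000
#endif

/* Hypothetical growable output buffer, passed to the callbacks as `opaque`. */
typedef struct MemOutput {
    uint8_t *data;   /* backing storage; the tail past `size` is unused */
    size_t capacity; /* allocated bytes */
    size_t size;     /* valid bytes: highest offset written so far */
    size_t pos;      /* current write position, always <= size */
} MemOutput;

/* write_packet callback: store buf at the current position, growing storage. */
static int mem_write(void *opaque, uint8_t *buf, int buf_size) {
    MemOutput *m = opaque;
    assert(m->pos <= m->size);
    if (buf_size <= 0)
        return 0;
    size_t end = m->pos + (size_t)buf_size;
    if (end > m->capacity) {
        size_t cap = m->capacity ? m->capacity : 4096;
        while (cap < end)
            cap *= 2;
        uint8_t *p = realloc(m->data, cap);
        if (!p)
            return -1; /* real code: return AVERROR(ENOMEM) */
        m->data = p;
        m->capacity = cap;
    }
    memcpy(m->data + m->pos, buf, (size_t)buf_size);
    m->pos = end;
    if (end > m->size)
        m->size = end; /* overwriting the header in place does not grow size */
    return buf_size;
}

/* seek callback: SEEK_SET/CUR/END within the written data, plus AVSEEK_SIZE. */
static int64_t mem_seek(void *opaque, int64_t offset, int whence) {
    MemOutput *m = opaque;
    int64_t base;
    whence &= ~AVSEEK_FORCE;          /* the force hint can be ignored here */
    if (whence == AVSEEK_SIZE)
        return (int64_t)m->size;      /* "how big is the stream?" query */
    switch (whence) {
    case SEEK_SET: base = 0; break;
    case SEEK_CUR: base = (int64_t)m->pos; break;
    case SEEK_END: base = (int64_t)m->size; break;
    default: return -1;               /* real code: AVERROR(EINVAL) */
    }
    int64_t target = base + offset;
    if (target < 0 || target > (int64_t)m->size)
        return -1;                    /* no holes past the valid data */
    m->pos = (size_t)target;
    return target;
}

/* Save only the valid bytes, not the whole allocated capacity. */
static int mem_save(const MemOutput *m, FILE *f) {
    if (m->size > 0 && fwrite(m->data, 1, m->size, f) != m->size)
        return -1;
    return fflush(f) == 0 ? 0 : -1;
}

static void mem_free(MemOutput *m) {
    free(m->data);
    m->data = NULL;
    m->capacity = m->size = m->pos = 0;
}
```

Wiring these callbacks into FFmpeg would look roughly like this:

- Pass them as `avio_alloc_context(avio_buf, avio_buf_size, 1, &out, NULL, mem_write, mem_seek)`.
- Assign the result to `ofmt_ctx->pb` in place of transcoding.c's `avio_open()` call.
- Read `out.size` only after `av_write_trailer()`.
- Free the context with `avio_context_free()` rather than `avio_closep()`.

If the seek argument is NULL, the AVIOContext is treated as non-seekable, and the muxer cannot go back to rewrite its header. In newer FFmpeg releases the write_packet `buf` parameter is declared `const uint8_t *`, so the callback signature must match the version in use.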

After fixing both causes, the code now behaves as expected!

The code is attached at the end!


References

- ffmpeg-libav-tutorial: https://github.com/leandromoreira/ffmpeg-libav-tutorial/blob/master/README.md

- ffmpeg AVIOContext custom IO and seek: https://segmentfault.com/a/1190000021378256

- ffmpeg documentation (worth rereading; very clear and easy to follow): https://ffmpeg.org/ffmpeg.html

- ffmpeg development examples by Lei Xiaohua, who specialized in audio/video processing: http://leixiaohua1020.github.io/#ffmpeg-development-examples

- ffmpeg slides with many clearly explained examples: https://slhck.info/ffmpeg-encoding-course/#/

- Werner Robitza’s must-read posts on rate control: https://slhck.info/posts/

- ffmpeg custom I/O: https://mw.gl/posts/ffmpeg_custom_io/

Thanks

transcoding.c source code

#include