pytorch四种loss函数, softmax用法

pytorch四种loss函数

nn.functional.cross_entropy vs nll_loss

适用于k分类问题

labels = torch.tensor([1, 0, 2], dtype=torch.long)

logits = torch.tensor([[2.5, -0.5, 0.1],

[-1.1, 2.5, 0.0],

[1.2, 2.2, 3.1]], dtype=torch.float)

>>> torch.nn.functional.cross_entropy(logits, labels)

tensor(2.4258)

>>> torch.nn.functional.nll_loss(torch.nn.functional.log_softmax(logits, dim=1), labels)

tensor(2.4258)

>>>l=torch.nn.CrossEntropyLoss()

>>>l(logits,labels)

tensor(2.4258)

3个分类(每行三个数),每个分类都有自己的概率.

3 samples

nll_loss需要手动softmax,把三个分类的概率归一化(相加为1,因此dim=1),再取log

cross_entropy内置了log_softmax功能

logits和labels分别是FloatTensor,LongTensor

nn.CrossEntropyLoss

需要先建立一个CrossEntropyLoss层,再像cross_entropy一样代入logits和labels,不能直接用constructor代入logits和labels

否则会有一个类似Boolean value of Tensor with more than one value is ambiguous的错误,因为constructor以为输入的是boolean参数

nn.NLLLoss

输入都是size=[N]的1Dtensor,一个是log(概率),softmax就是算概率,另一个是label

nn.KLDivLoss

输入是log(概率)和label,label也是概率

# this is the same example in wiki

P = torch.Tensor([0.36, 0.48, 0.16])

Q = torch.Tensor([0.333, 0.333, 0.333])

(P * (P / Q).log()).sum()

# tensor(0.0863)

F.kl_div(Q.log(), P, None, None, 'sum')

# tensor(0.0863)

P = torch.Tensor([[0.36, 0.48, 0.16],[0.36, 0.48, 0.16]])

Q = torch.Tensor([[0.333, 0.333, 0.333],[0.333, 0.333, 0.333]])

print((P * (P / Q).log()).sum())

# tensor(0.1726)

F.kl_div(Q.log(), P, None, None,'mean')

# tensor(0.0288),mean÷6

P = torch.Tensor([[0.36, 0.48, 0.16],[0.36, 0.48, 0.16]])

Q = torch.Tensor([[0.333, 0.333, 0.333],[0.36, 0.48, 0.16]])

print((P * (P / Q).log()).sum())

# tensor(0.0863),第二个sample,p,q分布一样,kldiv=0,

F.kl_div(Q.log(), P, None, None,reduction='batchmean')

# tensor(0.0432),mean÷2

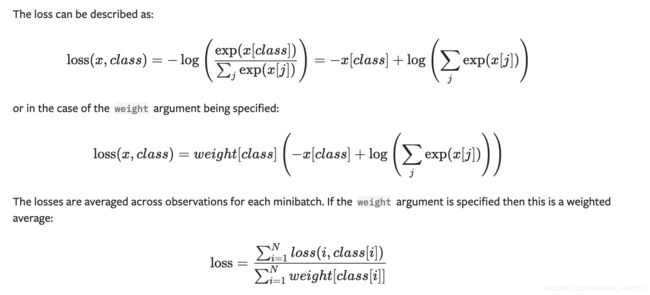

公式说明

pytorch官方文档nn.CrossEntropyLoss()的公式非常不规范,没有变量声明,前后又不一致.

看懂这个公式那么nn.NLLLoss的公式就很简单了

公式1

x是1Dtensor,对应一个instance预测出的c个logits

class是scalar,即这个instance的label(比如0,1,2)

与教材的cross entropy公式 H a ( y ) = ∑ i = 1 n ∑ k = 1 K − y k [ i ] log ( a k [ i ] ) H_{\mathbf{a}}(\mathbf{y})=\sum_{i=1}^{n} \sum_{k=1}^{K}-y_{k}^{[i]} \log \left(a_{k}^{[i]}\right) Ha(y)=∑i=1n∑k=1K−yk[i]log(ak[i])相比,没有 y i y_i yi项,因为label对应类的yi是1,其他类yi是0不产生损失.所以一个instance只产生一个loss项

cross entropy 公式另外3种表示:

H ( p , q ) = − E p [ log q ] = − ∑ x ∈ X p ( x ) log q ( x ) = H ( p ) + D K L ( p ∥ q ) H(p,q)=-\operatorname{E}_p[\log q]=-\sum_{x\in {\mathcal {X}}}p(x)\log q(x)=H(p)+D_{\mathrm {KL} }(p\|q) H(p,q)=−Ep[logq]=−∑x∈Xp(x)logq(x)=H(p)+DKL(p∥q)

Consider a classification context where q(y∣x) is the model distribution over classes, given input x. p(y∣x) is the ‘true’ distribution, defined as a delta function centered over the true class for each data point:

p ( y ∣ x i ) = { 1 y = y i 0 Otherwise p(y \mid x_i) = \left \{ \begin{array}{cl} 1 & y = y_i \\ 0 & \text{Otherwise} \\ \end{array} \right. p(y∣xi)={10y=yiOtherwise

For the ith data point, the cross entropy is H i ( p , q ) = − ∑ y p ( y ∣ x i ) log q ( y ∣ x i ) H_i(p,q) = -\sum_y p(y \mid x_i) \log q(y \mid x_i) Hi(p,q)=−∑yp(y∣xi)logq(y∣xi)

由此可知,之前几个x其实都是y|x

公式3

斜体loss是[N,C]的2Dtensor,然鹅之前的公式明明没算这么多loss,只计算了N个非零loss,其他都是0,所以这个class[i]其实是多余的.

class和公式1中的class也不是一个概念了.class是size=N的tensor, class[i]表示第i个sample的类别,只有这个类别的loss非零,整个loss(i,class[i])就是找出这个非零的loss而已

weight是size=C的tensor

这里其实有问题,根据TensorFlow的实现,分母应该是N而不是N个weight相加.也就是在instance上做平均而不是weight上.否则会导致同一个instance在不同batch中起的作用不同.

weight用于解决类不平衡问题,稀少的类可能要*10作为loss相当于出现十次,但求平均的时候不应该保留这个*10因此要÷weight

注

cross entropy不symmetric,H(p,q)!=H(q,p)

https://sebastianraschka.com/faq/docs/pytorch-crossentropy.html

https://stats.stackexchange.com/questions/331942/why-dont-we-use-a-symmetric-cross-entropy-loss/331960#331960?newreg=6864fabeab374635b795e10f0da31898