【ResNet残差网络解析】

深度残差网络(Deep residual network, ResNet)的提出是CNN图像史上的一件里程碑事件。

论文:Deep Residual Learning for Image Recognition

思想

作者根据输入将层表示为学习残差函数。实验表明,残差网络更容易优化,并且能够通过增加相当的深度来提高准确率。

核心是解决了增加深度带来的副作用(退化问题),这样能够通过单纯地增加网络深度,来提高网络性能。

深度网络的退化问题

1️⃣ 网络的深度为什么重要?

因为CNN能够提取low/mid/high-level的特征,网络的层数越多,意味着能够提取到不同level的特征越丰富。并且,越深的网络提取的特征越抽象,越具有语义信息。

2️⃣ 为什么不能简单地增加网络层数?

- 对于原来的网络,如果简单地增加深度,会导致梯度消失或梯度爆炸。

对于该问题的解决方法是正则化初始化和中间的正则化层(Batch Normalization),这样的话可以训练几十层的网络。 - 虽然通过上述方法能够训练了,但是又会出现另一个问题,就是退化问题:网络层数增加,但是在训练集上的准确率却饱和甚至下降了。这个不能解释为过拟合overfitting,因为overfit应该表现为在训练集上表现更好才对。

退化问题说明了深度网络不能很简单地被很好地优化。

3️⃣ 怎么解决退化问题?

深度残差网络。如果深网络的后面那些层是恒等映射,那么模型就退化为一个浅层网络。那现在要解决的就是学习恒等映射函数了。 但是直接让一些层去拟合一个潜在的恒等映射函数H(x) = x,比较困难,这可能就是深层网络难以训练的原因。但是,如果把网络设计为 H(x) = F(x) + x。我们可以转换为学习一个残差函数 F(x) = H(x) - x. 只要F(x)=0,就构成了一个恒等映射H(x) = x. 而且,拟合残差肯定更加容易。

ResNet结构

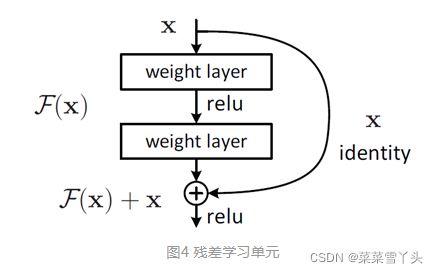

残差学习的结构

ResNet提出了两种mapping:一种是identity mapping,指的就是图1中”弯弯的曲线”,也就是所谓的短路连接 “shortcut connection”,另一种residual mapping,指的就是除了”弯弯的曲线“那部分,所以最后的输出是 y=F(x)+x。

identity mapping顾名思义,就是指本身,也就是公式中的x,而residual mapping指的是“差”,也就是y−x,所以残差指的就是F(x) 部分。

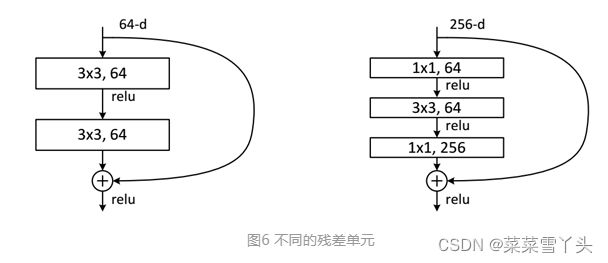

ResNet使用两种残差单元,如图6所示。左图对应的是浅层网络,而右图对应的是深层网络。

两种Shortcut Connection方式

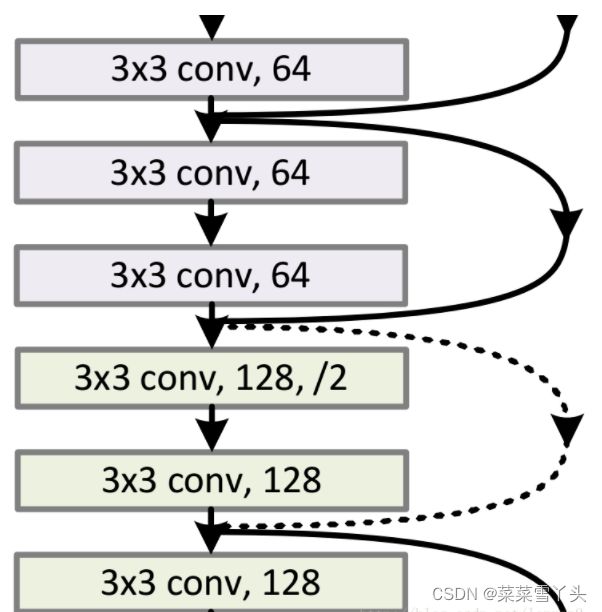

对于残差网络,维度匹配(channel个数一致) 的shortcut连接为实线,反之为虚线。

对于Shortcut Connection,当输入和输出维度一致时,可以直接将输入加到输出上。但是当维度不一致时(对应的是维度增加一倍),这就不能直接相加。有两种策略:

- 采用zero-padding增加维度。*此时一般要先做一个downsamp,可以采用strde=2的pooling,这样不会增加参数;

- 乘以W矩阵投影到新的空间。实现是用1x1卷积实现的,直接改变1x1卷积的filters数目。这种会增加参数。

网络设计规则

ResNet的一个重要设计原则是:

- 对于输出feature map大小相同的层,有相同数量的filters,即channel数相同;

- 当 feature map大小减半时(池化),filters数量翻倍。这保持了网络层的复杂度。

整体ResNet结构

ResNet的TensorFlow实现

这里给出ResNet50的TensorFlow实现,模型的实现参考了Caffe版本的实现,核心代码如下:

class ResNet50(object):

def __init__(self, inputs, num_classes=1000, is_training=True,

scope="resnet50"):

self.inputs =inputs

self.is_training = is_training

self.num_classes = num_classes

with tf.variable_scope(scope):

# construct the model

net = conv2d(inputs, 64, 7, 2, scope="conv1") # -> [batch, 112, 112, 64]

net = tf.nn.relu(batch_norm(net, is_training=self.is_training, scope="bn1"))

net = max_pool(net, 3, 2, scope="maxpool1") # -> [batch, 56, 56, 64]

net = self._block(net, 256, 3, init_stride=1, is_training=self.is_training,

scope="block2") # -> [batch, 56, 56, 256]

net = self._block(net, 512, 4, is_training=self.is_training, scope="block3")

# -> [batch, 28, 28, 512]

net = self._block(net, 1024, 6, is_training=self.is_training, scope="block4")

# -> [batch, 14, 14, 1024]

net = self._block(net, 2048, 3, is_training=self.is_training, scope="block5")

# -> [batch, 7, 7, 2048]

net = avg_pool(net, 7, scope="avgpool5") # -> [batch, 1, 1, 2048]

net = tf.squeeze(net, [1, 2], name="SpatialSqueeze") # -> [batch, 2048]

self.logits = fc(net, self.num_classes, "fc6") # -> [batch, num_classes]

self.predictions = tf.nn.softmax(self.logits)

def _block(self, x, n_out, n, init_stride=2, is_training=True, scope="block"):

with tf.variable_scope(scope):

h_out = n_out // 4

out = self._bottleneck(x, h_out, n_out, stride=init_stride,

is_training=is_training, scope="bottlencek1")

for i in range(1, n):

out = self._bottleneck(out, h_out, n_out, is_training=is_training,

scope=("bottlencek%s" % (i + 1)))

return out

def _bottleneck(self, x, h_out, n_out, stride=None, is_training=True, scope="bottleneck"):

""" A residual bottleneck unit"""

n_in = x.get_shape()[-1]

if stride is None:

stride = 1 if n_in == n_out else 2

with tf.variable_scope(scope):

h = conv2d(x, h_out, 1, stride=stride, scope="conv_1")

h = batch_norm(h, is_training=is_training, scope="bn_1")

h = tf.nn.relu(h)

h = conv2d(h, h_out, 3, stride=1, scope="conv_2")

h = batch_norm(h, is_training=is_training, scope="bn_2")

h = tf.nn.relu(h)

h = conv2d(h, n_out, 1, stride=1, scope="conv_3")

h = batch_norm(h, is_training=is_training, scope="bn_3")

if n_in != n_out:

shortcut = conv2d(x, n_out, 1, stride=stride, scope="conv_4")

shortcut = batch_norm(shortcut, is_training=is_training, scope="bn_4")

else:

shortcut = x

return tf.nn.relu(shortcut + h)