[Object Detection] (8) Improving the Enhanced Feature Extraction Module with ASPP, with Complete TensorFlow Code

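Hi everyone. I recently wanted to improve the SPP enhanced feature extraction module in YOLOv4 and noticed that many papers replace it with the ASPP module from semantic segmentation. Today I will reproduce that module in TensorFlow.

The YOLOv4 backbone code is in my previous post: https://blog.csdn.net/dgvv4/article/details/123818580

To use it, replace the original SPP module code with the ASPP code from this section.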

1. Method Overview

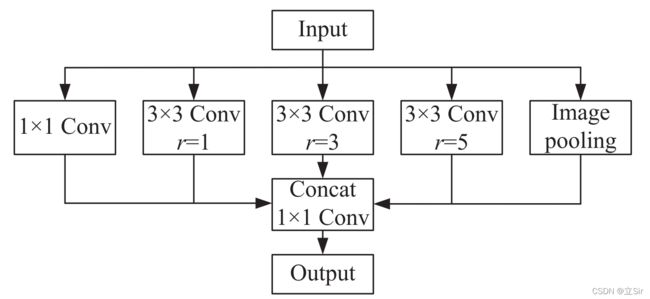

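YOLOv4 uses the SPP module to extract information at different receptive fields, but it does not fully capture the semantic relationship between global and local information. The ASPP designed here uses depthwise separable convolutions combined with dilated convolutions at different dilation rates in place of the pooling operations in SPP, and runs them in parallel with global average pooling to form a new feature pyramid. This aggregates multi-scale context and strengthens the model's ability to recognize the same object at different sizes.

The YOLOv4 framework improved with ASPP is shown in the figure. It uses dilated convolutions with a 3*3 kernel and dilation rates of 1, 3, and 5, and uses depthwise separable convolutions to reduce the parameter count. This links the local features of the previous layer to a wider field of view and helps prevent small-object features from being lost as information propagates.

The output feature map of the backbone's third effective feature layer is the input to the ASPP module, with shape [13, 13, 1024]. The first branch is a 1*1 standard convolution, which preserves the original receptive field. Branches two to four are depthwise separable convolutions with different dilation rates, which extract features at different receptive fields. The fifth branch applies global average pooling to the input to obtain global features. Finally, the feature maps from the five branches are concatenated along the channel dimension and passed through a 1*1 standard convolution that fuses the information from the different scales.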

2. Dilated Convolution

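A traditional deep convolutional neural network handling image tasks typically applies convolutions to extract features and change dimensions, then applies pooling to reduce the spatial size. As the network deepens, the pooling layers make the feature maps smaller and smaller. When upsampling is later needed to restore the original size, problems arise such as loss of internal data structure, loss of spatial hierarchy information, and loss of the information needed to reconstruct small objects, so accuracy may stop improving noticeably.

Dilated (atrous) convolution follows the same computation as ordinary convolution but is a variant that introduces a new parameter, the dilation rate. As the name suggests, the dilation rate describes how far the kernel is spread out, i.e. the spacing between adjacent kernel weights. An ordinary convolution has a dilation rate of 1, meaning the kernel is not dilated. Dilated convolution enlarges the receptive field without pooling layers, so each output covers a larger region of the input, which can improve network performance.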
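To make the role of the dilation rate concrete, here is a minimal pure-Python sketch of a 1-D dilated convolution. It is only an illustration and not part of the ASPP code: the function name `dilated_conv1d` is made up for this example, and it assumes no padding, stride 1, and the cross-correlation form used by deep learning layers.

```python
def dilated_conv1d(signal, kernel, rate=1):
    """1-D dilated convolution without padding (cross-correlation form, stride 1)."""
    # Distance covered by the dilated kernel on the input
    span = (len(kernel) - 1) * rate + 1
    out = []
    for start in range(len(signal) - span + 1):
        # Kernel weight j reads the input at an offset of j * rate
        out.append(sum(w * signal[start + j * rate] for j, w in enumerate(kernel)))
    return out


signal = [1, 2, 3, 4, 5, 6, 7]
kernel = [1, 1, 1]

print(dilated_conv1d(signal, kernel, rate=1))  # [6, 9, 12, 15, 18]
print(dilated_conv1d(signal, kernel, rate=2))  # [9, 12, 15]
```

With `rate=2` the same three weights read inputs two positions apart, so each output covers five input positions instead of three while the number of weights stays the same.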

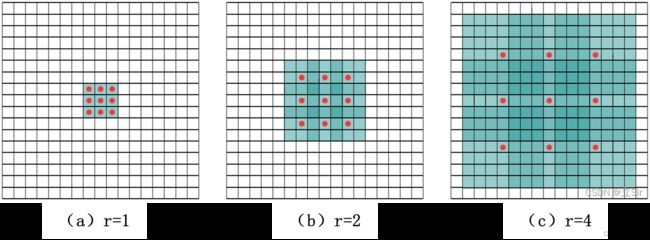

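The figure shows dilated convolution on 2-D data. The red dots are the inputs to the 3*3 kernel and the green area is the receptive field captured by each input. The receptive field is the size of the region in the input image that a point in a layer's output feature map maps back to.

Panel (a) corresponds to a 3*3 convolution with dilation rate r = 1, which is computed the same way as an ordinary convolution.

In panel (b), the 3*3 convolution has dilation rate r = 2, which means one hole is inserted between every two adjacent kernel points. It can be viewed as a 5*5 kernel in which only 9 weights are non-zero and the rest are 0. Although the kernel still has only 3*3 weights, the receptive field shown in the panel grows to 7*7 because this layer is stacked on the r = 1 convolution from panel (a).

Concretely, if the layer before the r = 2 convolution is an r = 1 convolution, each red dot in panel (b) is an output of that r = 1 convolution and already sees a 3*3 region, so r = 1 followed by r = 2 reaches a 7*7 receptive field.

In panel (c), the 3*3 convolution has dilation rate 4. Likewise, taking the output of the r = 1 and r = 2 dilated convolutions as its input, it reaches a 15*15 receptive field.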
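The receptive-field numbers for the three panels can be checked with a few lines of arithmetic. The sketch below assumes stride-1 convolutions stacked one after another; the helper names `effective_kernel_size` and `stacked_receptive_field` are introduced here for illustration only.

```python
def effective_kernel_size(kernel_size, rate):
    """Side length covered by one dilated kernel: k + (k - 1) * (r - 1)."""
    return kernel_size + (kernel_size - 1) * (rate - 1)


def stacked_receptive_field(kernel_size, rates):
    """Receptive field after each layer of a stride-1 stack of dilated convolutions."""
    field = 1
    fields = []
    for rate in rates:
        # Each layer extends the field by (k - 1) * r input positions
        field += (kernel_size - 1) * rate
        fields.append(field)
    return fields


print(stacked_receptive_field(3, [1, 2, 4]))               # [3, 7, 15]
print([effective_kernel_size(3, r) for r in (1, 3, 5)])    # [3, 7, 11]
```

The first line reproduces the 3*3, 7*7, and 15*15 receptive fields of panels (a) to (c). The second line gives the area covered by a single 3*3 kernel at the dilation rates 1, 3, and 5 used by the ASPP branches in this post, which run in parallel rather than stacked.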

3. Code Reproduction

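First, build the depthwise separable convolution block with a configurable dilation rate. If the theory behind depthwise separable convolution is unclear, see my MobileNetV3 post: https://blog.csdn.net/dgvv4/article/details/123476899

The 3*3 depthwise convolution (DepthwiseConv) only processes information along the height and width of the feature map, while the 1*1 pointwise convolution (PointConv) only processes information along the channel dimension.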
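As a rough check of how many parameters this split saves, the sketch below compares a standard 3*3 convolution with a depthwise separable one for the 1024-to-512 channel mapping used in this post. It assumes no bias terms and ignores batch normalization; the function names are introduced here for illustration only.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Weights of a standard convolution without bias."""
    return kernel_size * kernel_size * in_channels * out_channels


def separable_conv_params(kernel_size, in_channels, out_channels):
    """Weights of a depthwise convolution followed by a 1*1 pointwise convolution, without bias."""
    depthwise = kernel_size * kernel_size * in_channels  # one k*k filter per input channel
    pointwise = in_channels * out_channels               # 1*1 convolution mixing channels
    return depthwise + pointwise


standard = standard_conv_params(3, 1024, 512)
separable = separable_conv_params(3, 1024, 512)

print(standard)   # 4718592
print(separable)  # 533504
print(f"{standard / separable:.2f}x fewer weights")
```

For this layer size the separable version needs 9216 depthwise weights plus 524288 pointwise weights, which is less than one eighth of the standard convolution.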

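```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Model

# (1) Depthwise separable convolution + dilated convolution
def block(inputs, filters, rate):
    '''
    filters: number of output channels of the 1*1 pointwise convolution
    rate: dilation rate of the dilated convolution
    '''
    # 3*3 depthwise convolution with the given dilation rate
    x = layers.DepthwiseConv2D(kernel_size=(3,3), strides=1, padding='same',
                               dilation_rate=rate, use_bias=False)(inputs)

    x = layers.BatchNormalization()(x)  # batch normalization
    x = layers.Activation('relu')(x)  # activation

    # 1*1 pointwise convolution to adjust the number of channels
    x = layers.Conv2D(filters, kernel_size=(1,1), strides=1, padding='same', use_bias=False)(x)

    x = layers.BatchNormalization()(x)  # batch normalization
    x = layers.Activation('relu')(x)  # activation

    return x
```

Next, build the main ASPP module. Its input is the third effective feature layer of the backbone. One branch is a 1*1 standard convolution that fuses channel information, three branches are 3*3 dilated convolutions with different dilation rates that capture information at different scales, and one branch is global average pooling that captures global information.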

```python
# (2) ASPP enhanced feature extraction module; inputs is the third effective feature layer [13,13,1024]
def aspp(inputs):

    # Get the shape of the input feature map
    b,h,w,c = inputs.shape

    # 1*1 standard convolution to reduce channels [13,13,1024]==>[13,13,512]
    x1 = layers.Conv2D(filters=512, kernel_size=(1,1), strides=1, padding='same', use_bias=False)(inputs)
    x1 = layers.BatchNormalization()(x1)  # batch normalization
    x1 = layers.Activation('relu')(x1)  # activation

    # dilation rate = 1
    x2 = block(inputs, filters=512, rate=1)
    # dilation rate = 3
    x3 = block(inputs, filters=512, rate=3)
    # dilation rate = 5
    x4 = block(inputs, filters=512, rate=5)

    # Global average pooling [13,13,1024]==>[None,1024]
    x5 = layers.GlobalAveragePooling2D()(inputs)
    # [None,1024]==>[1,1,1024]
    x5 = layers.Reshape(target_shape=[1,1,-1])(x5)
    # 1*1 convolution to reduce channels [1,1,1024]==>[1,1,512]
    x5 = layers.Conv2D(filters=512, kernel_size=(1,1), strides=1, padding='same', use_bias=False)(x5)
    x5 = layers.BatchNormalization()(x5)
    x5 = layers.Activation('relu')(x5)
    # Resize back to the input spatial size [1,1,512]==>[13,13,512]
    x5 = tf.image.resize(x5, size=(h,w))

    # Concatenate the 5 parallel branches [13,13,512]==>[13,13,512*5]
    x = layers.concatenate([x1,x2,x3,x4,x5])

    # 1*1 convolution to fuse the branches and adjust channels
    x = layers.Conv2D(filters=512, kernel_size=(1,1), strides=1, padding='same', use_bias=False)(x)
    x = layers.BatchNormalization()(x)
    x = layers.Activation('relu')(x)

    # Dropout: randomly deactivate units during training
    x = layers.Dropout(rate=0.1)(x)

    return x
```

To inspect the architecture of the ASPP module, construct an input layer of shape [13,13,1024].

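```python
# (3) Inspect the network structure
if __name__ == '__main__':

    inputs = keras.Input(shape=[13,13,1024])  # input layer

    outputs = aspp(inputs)  # build the ASPP module

    # Build the model
    model = Model(inputs, outputs)

    model.summary()
```

The model architecture is as follows: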

```
Model: "model"
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            [(None, 13, 13, 1024 0
depthwise_conv2d (DepthwiseConv (None, 13, 13, 1024) 9216        input_1[0][0]
depthwise_conv2d_1 (DepthwiseCo (None, 13, 13, 1024) 9216        input_1[0][0]
depthwise_conv2d_2 (DepthwiseCo (None, 13, 13, 1024) 9216        input_1[0][0]
global_average_pooling2d (Globa (None, 1024)         0           input_1[0][0]
batch_normalization_1 (BatchNor (None, 13, 13, 1024) 4096        depthwise_conv2d[0][0]
batch_normalization_3 (BatchNor (None, 13, 13, 1024) 4096        depthwise_conv2d_1[0][0]
batch_normalization_5 (BatchNor (None, 13, 13, 1024) 4096        depthwise_conv2d_2[0][0]
reshape (Reshape)               (None, 1, 1, 1024)   0           global_average_pooling2d[0][0]
activation_1 (Activation)       (None, 13, 13, 1024) 0           batch_normalization_1[0][0]
activation_3 (Activation)       (None, 13, 13, 1024) 0           batch_normalization_3[0][0]
activation_5 (Activation)       (None, 13, 13, 1024) 0           batch_normalization_5[0][0]
conv2d_4 (Conv2D)               (None, 1, 1, 512)    524288      reshape[0][0]
conv2d (Conv2D)                 (None, 13, 13, 512)  524288      input_1[0][0]
conv2d_1 (Conv2D)               (None, 13, 13, 512)  524288      activation_1[0][0]
conv2d_2 (Conv2D)               (None, 13, 13, 512)  524288      activation_3[0][0]
conv2d_3 (Conv2D)               (None, 13, 13, 512)  524288      activation_5[0][0]
batch_normalization_7 (BatchNor (None, 1, 1, 512)    2048        conv2d_4[0][0]
batch_normalization (BatchNorma (None, 13, 13, 512)  2048        conv2d[0][0]
batch_normalization_2 (BatchNor (None, 13, 13, 512)  2048        conv2d_1[0][0]
batch_normalization_4 (BatchNor (None, 13, 13, 512)  2048        conv2d_2[0][0]
batch_normalization_6 (BatchNor (None, 13, 13, 512)  2048        conv2d_3[0][0]
activation_7 (Activation)       (None, 1, 1, 512)    0           batch_normalization_7[0][0]
activation (Activation)         (None, 13, 13, 512)  0           batch_normalization[0][0]
activation_2 (Activation)       (None, 13, 13, 512)  0           batch_normalization_2[0][0]
activation_4 (Activation)       (None, 13, 13, 512)  0           batch_normalization_4[0][0]
activation_6 (Activation)       (None, 13, 13, 512)  0           batch_normalization_6[0][0]
tf.image.resize (TFOpLambda)    (None, 13, 13, 512)  0           activation_7[0][0]
concatenate (Concatenate)       (None, 13, 13, 2560) 0           activation[0][0]
                                                                 activation_2[0][0]
                                                                 activation_4[0][0]
                                                                 activation_6[0][0]
                                                                 tf.image.resize[0][0]
conv2d_5 (Conv2D)               (None, 13, 13, 512)  1310720     concatenate[0][0]
batch_normalization_8 (BatchNor (None, 13, 13, 512)  2048        conv2d_5[0][0]
activation_8 (Activation)       (None, 13, 13, 512)  0           batch_normalization_8[0][0]
dropout (Dropout)               (None, 13, 13, 512)  0           activation_8[0][0]
==================================================================================================
Total params: 3,984,384
Trainable params: 3,972,096
Non-trainable params: 12,288
__________________________________________________________________________________________________
```
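The totals in the summary can be reproduced by hand from the layer definitions. The sketch below is only an arithmetic check, not part of the model code. It assumes bias-free convolutions (as in the code above) and four parameters per channel for each batch normalization layer, two of which (the moving mean and variance) are non-trainable. All helper names are introduced here for illustration.

```python
def conv_params(kernel_size, in_channels, out_channels):
    """Weights of a standard convolution without bias."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_params(kernel_size, channels):
    """Weights of a depthwise convolution without bias."""
    return kernel_size * kernel_size * channels


def bn_params(channels):
    """gamma, beta, moving mean, moving variance."""
    return 4 * channels


def aspp_param_counts(c_in=1024, c_mid=512, separable_branches=3, total_branches=5):
    """Return (total, non_trainable) parameter counts for the ASPP module."""
    conv_bn_1x1 = conv_params(1, c_in, c_mid) + bn_params(c_mid)
    separable = (depthwise_params(3, c_in) + bn_params(c_in)
                 + conv_params(1, c_in, c_mid) + bn_params(c_mid))
    fusion = conv_params(1, total_branches * c_mid, c_mid) + bn_params(c_mid)

    # 1*1 branch + three separable branches + pooling branch + fusion convolution
    total = conv_bn_1x1 + separable_branches * separable + conv_bn_1x1 + fusion

    # Moving statistics: 2 per channel for every batch normalization layer
    bn_on_c_in = separable_branches           # after each depthwise convolution
    bn_on_c_mid = 2 + separable_branches + 1  # 1*1 branch, pooling branch, pointwise convs, fusion
    non_trainable = 2 * c_in * bn_on_c_in + 2 * c_mid * bn_on_c_mid
    return total, non_trainable


total, non_trainable = aspp_param_counts()
print(total)                  # 3984384
print(total - non_trainable)  # 3972096
print(non_trainable)          # 12288
```

The largest single contribution is the 1*1 fusion convolution over the 2560 concatenated channels, with 1310720 weights.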