Image Rotation Algorithms and a Walkthrough of the PyTorch Source

Contents

- Forward mapping
  - Derivation
- Inverse mapping
- Fast rotation based on Bresenham
- PyTorch source

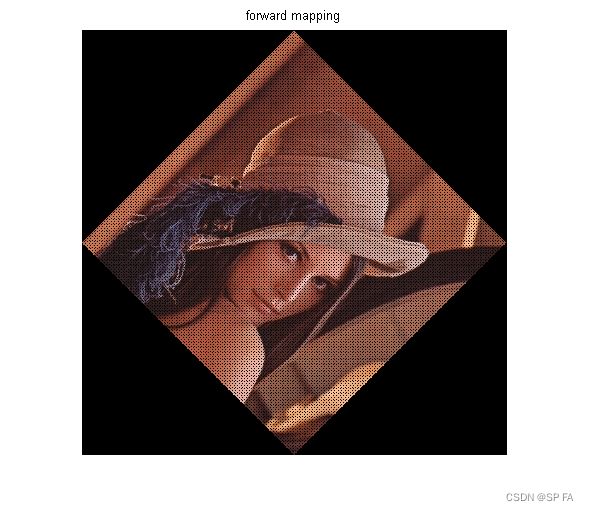

Forward mapping

Derivation

Take the center of the image as the origin. For any point $(x_0,y_0)$ of the image, a clockwise rotation by an angle $\alpha$ moves it to $(x,y)$, which gives the rotation matrix:

$$
\begin{bmatrix}\cos\alpha&\sin\alpha\\-\sin\alpha&\cos\alpha\end{bmatrix}\begin{bmatrix}x_0\\y_0\end{bmatrix}=\begin{bmatrix}x\\y\end{bmatrix}
$$

In practice, however, screen coordinates have their origin at the top-left corner, with the $x$ axis pointing right and the $y$ axis pointing down, so we have to convert between the two coordinate systems. Let $O_1$ be the screen coordinate system, $O_0$ the coordinate system whose origin is the image center, and $w,h$ the width and height of the image. The transform $O_1\rightarrow O_0$ is then:

$$
\begin{bmatrix}1&0&-0.5w\\0&-1&0.5h\\0&0&1\end{bmatrix}\begin{bmatrix}x_1\\y_1\\1\end{bmatrix}=\begin{bmatrix}x_0\\y_0\\1\end{bmatrix}
$$

After the rotation, the image has to be mapped back to screen coordinates. Its bounding width and height have changed by then, so they must be recomputed. Let $O_2$ be the new screen coordinate system and $w_1,h_1$ the new width and height. The transform $O_0\rightarrow O_2$, applied to the rotated point $(x,y)$, is:

$$
\begin{bmatrix}1&0&0.5w_1\\0&-1&0.5h_1\\0&0&1\end{bmatrix}\begin{bmatrix}x\\y\\1\end{bmatrix}=\begin{bmatrix}x_2\\y_2\\1\end{bmatrix}
$$

Combining the three equations above gives the overall formula:

$$
\begin{bmatrix}1&0&0.5w_1\\0&-1&0.5h_1\\0&0&1\end{bmatrix}\begin{bmatrix}\cos\alpha&\sin\alpha&0\\-\sin\alpha&\cos\alpha&0\\0&0&1\end{bmatrix}\begin{bmatrix}1&0&-0.5w\\0&-1&0.5h\\0&0&1\end{bmatrix}\begin{bmatrix}x_1\\y_1\\1\end{bmatrix}=\begin{bmatrix}x_2\\y_2\\1\end{bmatrix}
$$
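As a quick numerical check of the combined formula, the sketch below multiplies the three matrices in plain Python and maps a single screen point. The helper names `matmul3` and `forward_map` are made up for this illustration and do not come from any library.

```python
import math

def matmul3(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def forward_map(x1, y1, alpha_deg, w, h, w1, h1):
    """Map screen point (x1, y1) of a w x h image to (x2, y2) in the w1 x h1 output."""
    a = math.radians(alpha_deg)
    to_center = [[1, 0, -0.5 * w], [0, -1, 0.5 * h], [0, 0, 1]]    # O1 -> O0
    rot = [[math.cos(a), math.sin(a), 0],
           [-math.sin(a), math.cos(a), 0],
           [0, 0, 1]]                                              # clockwise rotation
    to_screen = [[1, 0, 0.5 * w1], [0, -1, 0.5 * h1], [0, 0, 1]]   # O0 -> O2
    m = matmul3(to_screen, matmul3(rot, to_center))
    x2 = m[0][0] * x1 + m[0][1] * y1 + m[0][2]
    y2 = m[1][0] * x1 + m[1][1] * y1 + m[1][2]
    return x2, y2

print(forward_map(2, 1, 90, 2, 2, 2, 2))
```

For a 2×2 image rotated by 90°, the point (2, 1), directly to the right of the center, lands at approximately (1, 2), directly below it. That is what a clockwise turn looks like on a screen whose y axis points down.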

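Inverse mapping

Forward mapping has one problem: the transformed coordinates of a source pixel are generally not integers, so they have to be rounded. As a result, several source pixels can land on the same output pixel while other output pixels never receive a gray value at all.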
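The effect is easy to reproduce. The sketch below rotates every integer point of a small centered grid clockwise by 45° with the rotation matrix from the derivation, rounds the result, and records which destination pixels were hit. `forward_hits` is a hypothetical helper written for this demonstration.

```python
import math

def forward_hits(half, alpha_deg):
    """Rotate every integer point in [-half, half]^2 clockwise and round the result.

    Returns the set of destination pixels that receive a value.
    """
    a = math.radians(alpha_deg)
    hits = set()
    for y0 in range(-half, half + 1):
        for x0 in range(-half, half + 1):
            x = math.cos(a) * x0 + math.sin(a) * y0
            y = -math.sin(a) * x0 + math.cos(a) * y0
            hits.add((round(x), round(y)))
    return hits

hits = forward_hits(5, 45)
n_src = (2 * 5 + 1) ** 2
print(len(hits), "distinct destination pixels for", n_src, "source pixels")
print((2, 0) in hits)  # False: no source pixel rounds to this destination
```

Fewer distinct destinations than source pixels means some pixels collided, and a destination such as (2, 0), which lies well inside the rotated square, is never written. Those unwritten pixels are the holes.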

For this reason, practical image rotation usually uses inverse mapping instead of forward mapping. The principle is the same, but inverse mapping first creates a blank output image, then computes for each output pixel its coordinates in the source image and copies the gray value from there into the blank image. If the coordinates fall outside the source image, the pixel is set to 255. The formula for this process is:

$$
\begin{bmatrix}1&0&0.5w\\0&-1&0.5h\\0&0&1\end{bmatrix}\begin{bmatrix}\cos\alpha&-\sin\alpha&0\\\sin\alpha&\cos\alpha&0\\0&0&1\end{bmatrix}\begin{bmatrix}1&0&-0.5w_1\\0&-1&0.5h_1\\0&0&1\end{bmatrix}\begin{bmatrix}x_2\\y_2\\1\end{bmatrix}=\begin{bmatrix}x_1\\y_1\\1\end{bmatrix}
$$
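A minimal sketch of inverse mapping with nearest-neighbour sampling, following the three steps of the formula, might look like this. Two things are assumptions of the sketch rather than part of the text above: the image is a plain list of rows, and each output pixel is evaluated at its center (index + 0.5) so that rotations by multiples of 90° map the pixel grid onto itself.

```python
import math

def rotate_inverse(img, alpha_deg, out_w=None, out_h=None, fill=255):
    """Rotate a grayscale image (list of rows) clockwise by inverse mapping.

    Nearest-neighbour sampling; output pixels whose source lies outside
    the input image are set to `fill`.
    """
    h, w = len(img), len(img[0])
    w1 = w if out_w is None else out_w
    h1 = h if out_h is None else out_h
    a = math.radians(alpha_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[fill] * w1 for _ in range(h1)]
    for y2 in range(h1):
        for x2 in range(w1):
            # O2 -> O0, evaluated at the pixel center (assumption of this sketch)
            xc = (x2 + 0.5) - 0.5 * w1
            yc = -(y2 + 0.5) + 0.5 * h1
            # inverse rotation
            x0 = cos_a * xc - sin_a * yc
            y0 = sin_a * xc + cos_a * yc
            # O0 -> O1, then pick the pixel that contains the point
            x1 = math.floor(x0 + 0.5 * w)
            y1 = math.floor(-y0 + 0.5 * h)
            if 0 <= x1 < w and 0 <= y1 < h:
                out[y2][x2] = img[y1][x1]
    return out

print(rotate_inverse([[1, 2], [3, 4]], 90))
```

Every output pixel is visited exactly once, so no holes can appear. For the 2×2 example the result is `[[3, 1], [4, 2]]`, the input turned clockwise by a quarter turn.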

Fast rotation based on Bresenham

PyTorch source

In PyTorch, image rotation is defined in torchvision.transforms.functional.rotate():

def rotate(
    img: Tensor,
    angle: float,
    interpolation: InterpolationMode = InterpolationMode.NEAREST,
    expand: bool = False,
    center: Optional[List[int]] = None,
    fill: Optional[List[float]] = None,
    resample: Optional[int] = None,
) -> Tensor:
    """
    Args:
        img (PIL Image or Tensor): image to be rotated.
        angle (number): rotation angle value in degrees, counter-clockwise.
        interpolation (InterpolationMode): Desired interpolation enum defined by
            :class:`torchvision.transforms.InterpolationMode`. Default is ``InterpolationMode.NEAREST``.
            If input is Tensor, only ``InterpolationMode.NEAREST``, ``InterpolationMode.BILINEAR`` are supported.
            For backward compatibility integer values (e.g. ``PIL.Image[.Resampling].NEAREST``) are still accepted,
            but deprecated since 0.13 and will be removed in 0.15. Please use InterpolationMode enum.
        expand (bool, optional): Optional expansion flag.
            If true, expands the output image to make it large enough to hold the entire rotated image.
            If false or omitted, make the output image the same size as the input image.
            Note that the expand flag assumes rotation around the center and no translation.
        center (sequence, optional): Optional center of rotation. Origin is the upper left corner.
            Default is the center of the image.
        fill (sequence or number, optional): Pixel fill value for the area outside the transformed
            image. If given a number, the value is used for all bands respectively.

    Returns:
        PIL Image or Tensor: Rotated image.
    """
    ...  # argument type validation, skipped here

    center_f = [0.0, 0.0]
    # compute the rotation center as an offset from the image center
    if center is not None:
        _, height, width = get_dimensions(img)
        center_f = [1.0 * (c - s * 0.5) for c, s in zip(center, [width, height])]

    # due to current incoherence of rotation angle direction between affine and rotate implementations
    # we need to set -angle.
    matrix = _get_inverse_affine_matrix(center_f, -angle, [0.0, 0.0], 1.0, [0.0, 0.0])
    return F_t.rotate(img, matrix=matrix, interpolation=interpolation.value, expand=expand, fill=fill)
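The `center` argument is given in pixel coordinates with the origin at the top-left corner, whereas the matrix helper receives `center_f`, an offset from the image center. The conversion can be tried on its own; `center_offset` is a hypothetical name used only for this illustration.

```python
def center_offset(center, width, height):
    """Convert a top-left-origin rotation center into an offset from the image center."""
    return [1.0 * (c - s * 0.5) for c, s in zip(center, [width, height])]

print(center_offset([0, 0], 4, 2))  # top-left corner  -> [-2.0, -1.0]
print(center_offset([2, 1], 4, 2))  # image center     -> [0.0, 0.0]
```

When `center` is omitted, `center_f` stays at `[0.0, 0.0]`, which is the same as rotating about the image center.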

Here _get_inverse_affine_matrix() computes the affine matrix.

def _get_inverse_affine_matrix(
    center: List[float], angle: float, translate: List[float], scale: float, shear: List[float], inverted: bool = True
) -> List[float]:
    # Helper method to compute inverse matrix for affine transformation

    # shear holds the shear angles; a pure rotation involves no shear, so it can be ignored here
    # convert degrees to radians
    rot = math.radians(angle)
    sx = math.radians(shear[0])
    sy = math.radians(shear[1])

    cx, cy = center
    tx, ty = translate

    # RSS without scaling
    a = math.cos(rot - sy) / math.cos(sy)
    b = -math.cos(rot - sy) * math.tan(sx) / math.cos(sy) - math.sin(rot)
    c = math.sin(rot - sy) / math.cos(sy)
    d = -math.sin(rot - sy) * math.tan(sx) / math.cos(sy) + math.cos(rot)

    if inverted:
        # Inverted rotation matrix with scale and shear
        # det([[a, b], [c, d]]) == 1, since det(rotation) = 1 and det(shear) = 1
        matrix = [d, -b, 0.0, -c, a, 0.0]
        matrix = [x / scale for x in matrix]
        # Apply inverse of translation and of center translation: RSS^-1 * C^-1 * T^-1
        matrix[2] += matrix[0] * (-cx - tx) + matrix[1] * (-cy - ty)
        matrix[5] += matrix[3] * (-cx - tx) + matrix[4] * (-cy - ty)
        # Apply center translation: C * RSS^-1 * C^-1 * T^-1
        matrix[2] += cx
        matrix[5] += cy
    else:
        matrix = [a, b, 0.0, c, d, 0.0]
        matrix = [x * scale for x in matrix]
        # Apply inverse of center translation: RSS * C^-1
        matrix[2] += matrix[0] * (-cx) + matrix[1] * (-cy)
        matrix[5] += matrix[3] * (-cx) + matrix[4] * (-cy)
        # Apply translation and center : T * C * RSS * C^-1
        matrix[2] += cx + tx
        matrix[5] += cy + ty

    return matrix
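With zero shear, unit scale and no translation, which is exactly how `rotate` calls this helper, the coefficients collapse to `a = cos(rot)`, `b = -sin(rot)`, `c = sin(rot)`, `d = cos(rot)`. The sketch below writes out both branches for that special case and checks that they undo each other. `rotation_matrices` and `apply_affine` are hypothetical names, and this is a simplified restatement rather than the library function.

```python
import math

def rotation_matrices(angle_deg, center=(0.0, 0.0)):
    """Forward and inverse 2x3 matrices for a pure rotation (scale 1, no shear, no translation)."""
    rot = math.radians(angle_deg)
    cx, cy = center
    a, b = math.cos(rot), -math.sin(rot)
    c, d = math.sin(rot), math.cos(rot)
    # forward: C * R * C^-1
    forward = [a, b, cx - a * cx - b * cy,
               c, d, cy - c * cx - d * cy]
    # inverse: C * R^-1 * C^-1, using det(R) == 1
    inverse = [d, -b, cx - d * cx + b * cy,
               -c, a, cy + c * cx - a * cy]
    return forward, inverse

def apply_affine(m, x, y):
    """Apply a flattened 2x3 affine matrix to the point (x, y)."""
    return m[0] * x + m[1] * y + m[2], m[3] * x + m[4] * y + m[5]

fwd, inv = rotation_matrices(30, center=(1.5, -2.0))
p = apply_affine(fwd, 3.0, 4.0)
print(apply_affine(inv, *p))  # approximately (3.0, 4.0)
```

The inverse matrix is the one the sampling step needs, because it maps each output pixel back to the source position to read from.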

The F_t.rotate() called next is torchvision.transforms.functional_tensor.rotate() (the functional_tensor module is imported as F_t):

def rotate(
    img: Tensor,
    matrix: List[float],
    interpolation: str = "nearest",
    expand: bool = False,
    fill: Optional[List[float]] = None,
) -> Tensor:
    ...  # input validation, skipped here
    w, h = img.shape[-1], img.shape[-2]
    ow, oh = _compute_output_size(matrix, w, h) if expand else (w, h)
    dtype = img.dtype if torch.is_floating_point(img) else torch.float32
    theta = torch.tensor(matrix, dtype=dtype, device=img.device).reshape(1, 2, 3)
    # grid will be generated on the same device as theta and img
    grid = _gen_affine_grid(theta, w=w, h=h, ow=ow, oh=oh)

    return _apply_grid_transform(img, grid, interpolation, fill=fill)

While we are here, it is worth a quick look at _compute_output_size, which is used when expand is set:

def _compute_output_size(matrix: List[float], w: int, h: int) -> Tuple[int, int]:
    pts = torch.tensor(
        [
            [-0.5 * w, -0.5 * h, 1.0],
            [-0.5 * w, 0.5 * h, 1.0],
            [0.5 * w, 0.5 * h, 1.0],
            [0.5 * w, -0.5 * h, 1.0],
        ]
    )
    theta = torch.tensor(matrix, dtype=torch.float).view(2, 3)
    new_pts = torch.matmul(pts, theta.T)
    min_vals, _ = new_pts.min(dim=0)
    max_vals, _ = new_pts.max(dim=0)

    # shift points to [0, w] and [0, h] interval to match PIL results
    min_vals += torch.tensor((w * 0.5, h * 0.5))
    max_vals += torch.tensor((w * 0.5, h * 0.5))

    # Truncate precision to 1e-4 to avoid ceil of Xe-15 to 1.0
    tol = 1e-4
    cmax = torch.ceil((max_vals / tol).trunc_() * tol)
    cmin = torch.floor((min_vals / tol).trunc_() * tol)
    size = cmax - cmin
    return int(size[0]), int(size[1])
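The idea is to rotate the four corners of the image about its center and take the bounding box of the result. A plain-Python sketch of the same steps is shown below. `expanded_size` is a hypothetical helper, and it works with Python floats instead of float32 tensors, so results at exact multiples of 90° are not guaranteed to match the library bit for bit.

```python
import math

def expanded_size(angle_deg, w, h, tol=1e-4):
    """Bounding-box size of a w x h rectangle rotated about its center."""
    rot = math.radians(angle_deg)
    m = [math.cos(rot), math.sin(rot), 0.0,
         -math.sin(rot), math.cos(rot), 0.0]
    corners = [(-0.5 * w, -0.5 * h), (-0.5 * w, 0.5 * h),
               (0.5 * w, 0.5 * h), (0.5 * w, -0.5 * h)]
    # transform the corners, then shift them back to the [0, w] x [0, h] frame
    xs = [m[0] * x + m[1] * y + m[2] + 0.5 * w for x, y in corners]
    ys = [m[3] * x + m[4] * y + m[5] + 0.5 * h for x, y in corners]

    def snap(v, rounder):
        # truncate to `tol` precision first so tiny float noise does not bump the result
        return rounder(math.trunc(v / tol) * tol)

    ow = snap(max(xs), math.ceil) - snap(min(xs), math.floor)
    oh = snap(max(ys), math.ceil) - snap(min(ys), math.floor)
    return int(ow), int(oh)

print(expanded_size(45, 100, 100))  # (142, 142)
```

A 100×100 image rotated by 45° has a diagonal of about 141.4 pixels, so the expanded canvas comes out as 142×142.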

The parts that matter most, though, are generating the affine grid and applying the transform:

def _gen_affine_grid(
    theta: Tensor,
    w: int,
    h: int,
    ow: int,
    oh: int,
) -> Tensor:
    # build a base grid of output pixel centers (relative to the image center) in homogeneous form
    d = 0.5
    base_grid = torch.empty(1, oh, ow, 3, dtype=theta.dtype, device=theta.device)
    x_grid = torch.linspace(-ow * 0.5 + d, ow * 0.5 + d - 1, steps=ow, device=theta.device)
    base_grid[..., 0].copy_(x_grid)
    y_grid = torch.linspace(-oh * 0.5 + d, oh * 0.5 + d - 1, steps=oh, device=theta.device).unsqueeze_(-1)
    base_grid[..., 1].copy_(y_grid)
    base_grid[..., 2].fill_(1)

    # normalize so that sampled coordinates fall in [-1, 1] relative to the input size
    rescaled_theta = theta.transpose(1, 2) / torch.tensor([0.5 * w, 0.5 * h], dtype=theta.dtype, device=theta.device)
    output_grid = base_grid.view(1, oh * ow, 3).bmm(rescaled_theta)
    return output_grid.view(1, oh, ow, 2)
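To see what the `linspace` bounds and the division by `0.5 * w` produce, the following sketch computes the x coordinates for a 4-pixel-wide image under the identity transform. `linspace` here is a plain-Python stand-in for `torch.linspace`, and `normalized_x_coords` is a hypothetical helper.

```python
def linspace(start, end, steps):
    """Evenly spaced values from start to end inclusive (stand-in for torch.linspace)."""
    if steps == 1:
        return [start]
    step = (end - start) / (steps - 1)
    return [start + i * step for i in range(steps)]

def normalized_x_coords(ow, w, d=0.5):
    """x coordinates of the base grid and their normalized form for the identity transform."""
    base = linspace(-ow * 0.5 + d, ow * 0.5 + d - 1, ow)
    return base, [x / (0.5 * w) for x in base]

base, norm = normalized_x_coords(4, 4)
print(base)  # [-1.5, -0.5, 0.5, 1.5]
print(norm)  # [-0.75, -0.25, 0.25, 0.75]
```

The base grid holds pixel centers measured from the image center, and after normalization the outermost centers sit at ±0.75 rather than ±1. This matches the `align_corners=False` convention, where -1 and 1 refer to the outer edges of the corner pixels.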

def _apply_grid_transform(img: Tensor, grid: Tensor, mode: str, fill: Optional[List[float]]) -> Tensor:
    img, need_cast, need_squeeze, out_dtype = _cast_squeeze_in(img, [grid.dtype])

    if img.shape[0] > 1:
        # Apply same grid to a batch of images
        grid = grid.expand(img.shape[0], grid.shape[1], grid.shape[2], grid.shape[3])

    # Append a dummy mask for customized fill colors, should be faster than grid_sample() twice
    if fill is not None:
        dummy = torch.ones((img.shape[0], 1, img.shape[2], img.shape[3]), dtype=img.dtype, device=img.device)
        img = torch.cat((img, dummy), dim=1)

    img = grid_sample(img, grid, mode=mode, padding_mode="zeros", align_corners=False)

    # fill the area outside the source image with the fill color
    if fill is not None:
        mask = img[:, -1:, :, :]  # N * 1 * H * W
        img = img[:, :-1, :, :]  # N * C * H * W
        mask = mask.expand_as(img)
        len_fill = len(fill) if isinstance(fill, (tuple, list)) else 1
        fill_img = torch.tensor(fill, dtype=img.dtype, device=img.device).view(1, len_fill, 1, 1).expand_as(img)
        # how the fill is blended in depends on the interpolation mode
        if mode == "nearest":
            mask = mask < 0.5
            img[mask] = fill_img[mask]
        else:  # 'bilinear'
            img = img * mask + (1.0 - mask) * fill_img

    img = _cast_squeeze_out(img, need_cast, need_squeeze, out_dtype)
    return img
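The mask channel starts out as all ones and is sampled together with the image, so after `grid_sample` with zero padding it tells how much of each output pixel actually came from inside the source. The per-pixel logic of the two branches can be sketched on scalars; `blend_fill` is a hypothetical helper, not a torchvision function.

```python
def blend_fill(sampled, mask, fill, mode):
    """Combine one sampled pixel value with the fill value, mirroring the two branches."""
    if mode == "nearest":
        # mask is either 0 or 1 here: replace pixels that came from outside the source
        return fill if mask < 0.5 else sampled
    # bilinear: mask is the fraction of the pixel covered by the source
    return sampled * mask + (1.0 - mask) * fill

print(blend_fill(10.0, 0.0, 255.0, "nearest"))    # 255.0
print(blend_fill(10.0, 1.0, 255.0, "nearest"))    # 10.0
print(blend_fill(10.0, 0.25, 100.0, "bilinear"))  # 77.5
```

In the bilinear case the border pixels end up as a smooth mix of image and fill color instead of a hard edge.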

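_apply_grid_transform relies on torch.nn.functional.grid_sample(), which in turn calls torch.grid_sampler(). Together they sample the input at the grid positions, which is where the padding and interpolation of the output image actually happen. (I do not fully understand how this is implemented, because I could not follow torch.grid_sampler().) Note that the grid_sampler listed after grid_sample below is decorated with symbolic_helper.parse_args and only emits a GridSample node through g.op, so it appears to be the ONNX export symbolic rather than the sampling kernel itself.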

def grid_sample(
    input: Tensor,
    grid: Tensor,
    mode: str = "bilinear",
    padding_mode: str = "zeros",
    align_corners: Optional[bool] = None,
) -> Tensor:
    r"""Given an :attr:`input` and a flow-field :attr:`grid`, computes the
    ``output`` using :attr:`input` values and pixel locations from :attr:`grid`.

    Args:
        input (Tensor): input of shape :math:`(N, C, H_\text{in}, W_\text{in})` (4-D case)
                        or :math:`(N, C, D_\text{in}, H_\text{in}, W_\text{in})` (5-D case)
        grid (Tensor): flow-field of shape :math:`(N, H_\text{out}, W_\text{out}, 2)` (4-D case)
                       or :math:`(N, D_\text{out}, H_\text{out}, W_\text{out}, 3)` (5-D case)
        mode (str): interpolation mode to calculate output values
            ``'bilinear'`` | ``'nearest'`` | ``'bicubic'``. Default: ``'bilinear'``
            Note: ``mode='bicubic'`` supports only 4-D input.
            When ``mode='bilinear'`` and the input is 5-D, the interpolation mode
            used internally will actually be trilinear. However, when the input is 4-D,
            the interpolation mode will legitimately be bilinear.
        padding_mode (str): padding mode for outside grid values
            ``'zeros'`` | ``'border'`` | ``'reflection'``. Default: ``'zeros'``
        align_corners (bool, optional): Geometrically, we consider the pixels of the
            input as squares rather than points.
            If set to ``True``, the extrema (``-1`` and ``1``) are considered as referring
            to the center points of the input's corner pixels. If set to ``False``, they
            are instead considered as referring to the corner points of the input's corner
            pixels, making the sampling more resolution agnostic.
            This option parallels the ``align_corners`` option in
            :func:`interpolate`, and so whichever option is used here
            should also be used there to resize the input image before grid sampling.
            Default: ``False``

    Returns:
        output (Tensor): output Tensor
    """
    # argument validation, skipped here
    if mode == "bilinear":
        mode_enum = 0
    elif mode == "nearest":
        mode_enum = 1
    else:  # mode == 'bicubic'
        mode_enum = 2

    if padding_mode == "zeros":
        padding_mode_enum = 0
    elif padding_mode == "border":
        padding_mode_enum = 1
    else:  # padding_mode == 'reflection'
        padding_mode_enum = 2

    if align_corners is None:
        warnings.warn(
            "Default grid_sample and affine_grid behavior has changed "
            "to align_corners=False since 1.3.0. Please specify "
            "align_corners=True if the old behavior is desired. "
            "See the documentation of grid_sample for details."
        )
        align_corners = False

    return torch.grid_sampler(input, grid, mode_enum, padding_mode_enum, align_corners)

@symbolic_helper.parse_args("v", "v", "i", "i", "b")
def grid_sampler(g, input, grid, mode_enum, padding_mode_enum, align_corners):
    mode_s = {v: k for k, v in GRID_SAMPLE_INTERPOLATION_MODES.items()}[mode_enum]  # type: ignore[call-arg]
    padding_mode_s = {v: k for k, v in GRID_SAMPLE_PADDING_MODES.items()}[padding_mode_enum]  # type: ignore[call-arg]
    return g.op(
        "GridSample",
        input,
        grid,
        align_corners_i=int(align_corners),
        mode_s=mode_s,
        padding_mode_s=padding_mode_s,
    )

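After following these nested calls layer by layer, I was honestly confused by the end. Still, code this roundabout is surely written that way for a reason, and working through it carefully pays off.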