卷积神经网络学习笔记与经典卷积模型复现

卷积神经网络

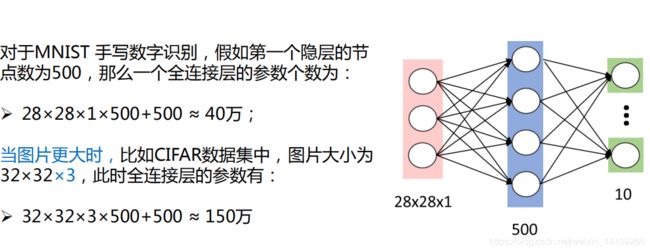

一.全连接神经网络的局限性

二.卷积神经网络背景

- 1962年Hubel和Wiesel通过对猫视觉皮层细胞的研究,提出

了感受野(receptive field)的概念。视觉皮层的神经元就是局

部接受信息的,只受某些特定区域刺激的响应,而不是对全

局图像进行感知。 - 1984年日本学者Fukushima基于感受

野概念提出神经认知机(neocognitron)。 - CNN可看作是神经认知机的推广形式。

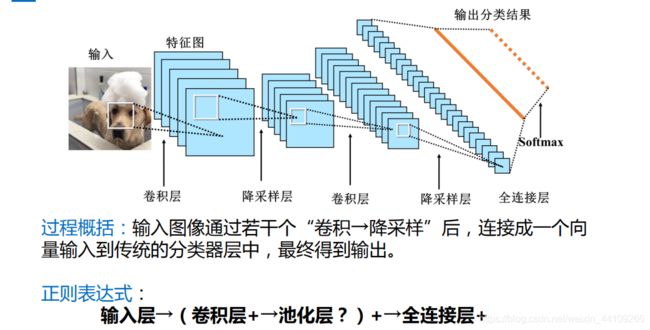

三.卷积神经网络结构

- 输入层:将每个像素代表一个特征节点输入到网络中。

- 卷积层:卷积运算的主要目的是使原信号特征增强,并降低噪音。

- 降采样层:降低网络训练参数及模型的过拟合程度。

- 注:池化层并不是必须的,通过增大卷积核的尺寸同样可以达到减少参数的效果

- 全连接层:降低网络训练参数及模型的过拟合程度

四.卷积神经网络计算过程

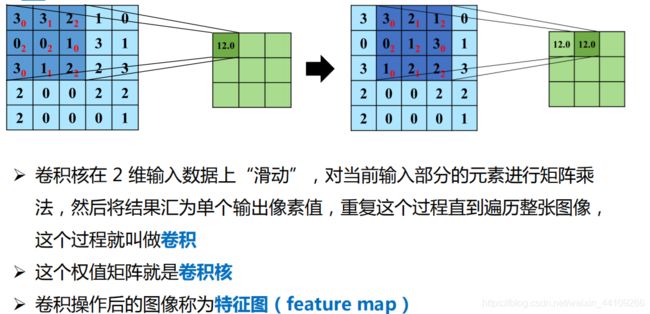

1.卷积计算

2.padding全零填充

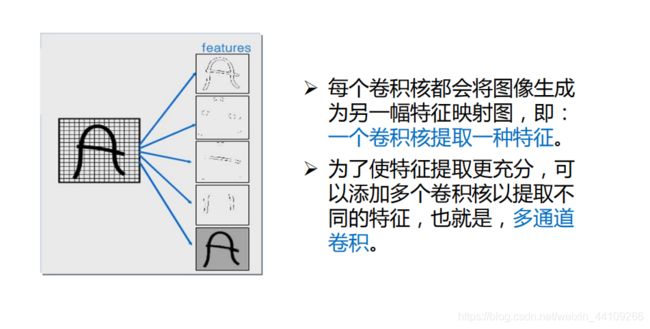

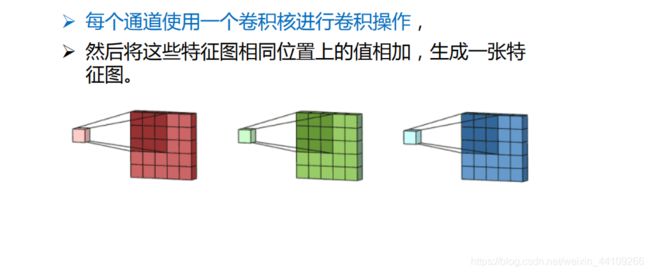

3.多通道卷积

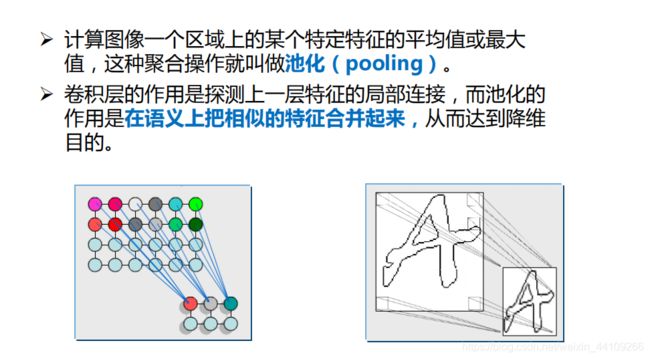

4.pooling池化层(降采样)

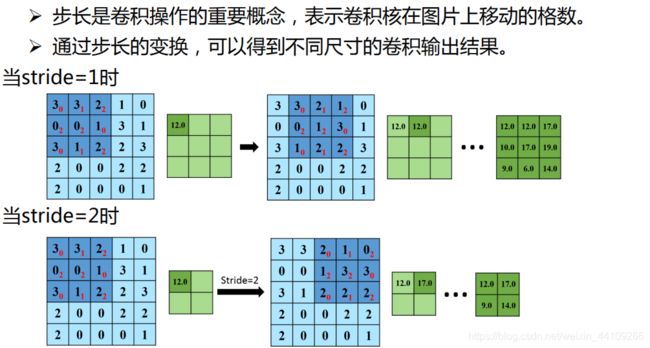

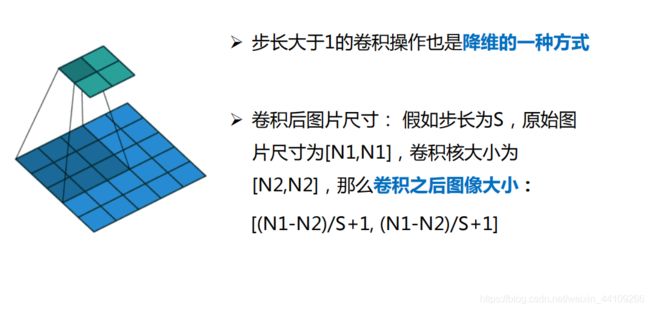

5.stride变换及其意义

- 注: 关于pooling的资料:https://www.zhihu.com/question/40727704

五.相关概念总结与代码演示

1.卷积计算

- 卷积计算可以认为是一种有效提取像素的方法

- 一般会用一个正方形的卷积核,按照指定步长,在输入特征图上滑动,遍历输入特征图中的每一个像素点。每一个步长,卷积核会与输入特征图出现重合 区域,重合区域对应像素相乘,求和再加上偏置项得到输出特征的一个像素点。

import matplotlib.pyplot as plt

#读取图片

img = plt.imread('picture2.jpg')

'''

print('img_shape:',img.shape)#读取数据的形状

print('img_size:',img.size)#读取数据的大小

print('img_type:',img.dtype)#读取数据的编码格式

print('img:',img)#打印读取的数据

plt.imshow(img)#显示图片

'''

plt.imshow(img)

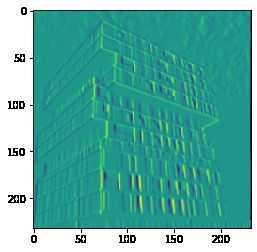

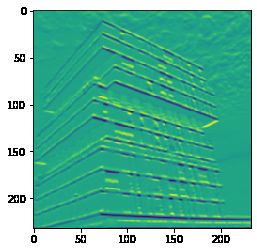

- 卷积计算过程实例,以sabel算子提取水平方向特征和拉普拉斯算子提取边缘特征为例

import numpy as np

from PIL import Image

def ImgConvolve(image_array,kernel):

'''

参数说明:

imge_array:原灰度图像矩阵

kernel:卷积核

返回值:原图像与算子进行运算后的产物

'''

image_arr = image_array.copy()

img_dim1,img_dim2 = image_arr.shape

k_dim1,k_dim2 = kernel.shape

AddW = int((k_dim1-1)/2)

AddH = int((k_dim2-1)/2)

#padding填充

temp = np.zeros([img_dim1 + AddW*2,img_dim2 + AddH*2])

#将原图像copy到临时图片的中央

temp[AddW : AddW + img_dim1, AddH : AddH + img_dim2 ] = image_arr[:,:]

#初始化一张同样大小的图片作为输出图片

output = np.zeros_like(a=temp)

#将扩充后的图和卷积核进行卷积

for i in range(AddW,AddW + img_dim1):

for j in range(AddH,AddH+img_dim2):

output[i][j] = int(np.sum(temp[i-AddW:i+AddW+1,j-AddW:j+AddW+1]*kernel))

return output[AddW:AddW + img_dim1,AddH:AddH+img_dim2]

#提取竖直方向特征

#sobel_x

kernel_1 = np.array(

[[-1,0,1],

[-2,0,2],

[-1,0,1]

])

#提取水平方向特征

#sobel_y

kernel_2 = np.array([[-1,-2,-1],

[0,0,0],

[1,2,1]

])

#Laplace扩展算子

#二阶微分算子

kernel_3 = np.array([[-1,-1,-1],

[-1,8,-1],

[-1,-1,-1]

])

from PIL import Image

#打开图像并转换成灰度图像

image = Image.open('picture2.jpg').convert('L')

#将图像转换成数组

image_array = np.array(image)

sobel_x = ImgConvolve(image_array,kernel_1)

print('竖直方向特征:\n')

plt.imshow(sobel_x)

竖直方向特征:

sobel_y = ImgConvolve(image_array,kernel_2)

print('水平方向特征:\n')

plt.imshow(sobel_y)

竖直方向特征:

laplace = ImgConvolve(image_array,kernel_3)

print('边缘方向特征:\n')

plt.imshow(laplace)

边缘方向特征:

import tensorflow as tf

from matplotlib import pyplot as plt

import numpy as np

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# 可视化训练集输入特征的第一个元素

plt.imshow(x_train[0]) # 绘制图片

plt.show()

# 打印出训练集输入特征的第一个元素

print("x_train[0]:\n", x_train[0])

# 打印出训练集标签的第一个元素

print("y_train[0]:\n", y_train[0])

# 打印出整个训练集输入特征形状

print("x_train.shape:\n", x_train.shape)

# 打印出整个训练集标签的形状

print("y_train.shape:\n", y_train.shape)

# 打印出整个测试集输入特征的形状

print("x_test.shape:\n", x_test.shape)

# 打印出整个测试集标签的形状

print("y_test.shape:\n", y_test.shape)

2.感受野(Receptive Field)

- 卷积神经网络中各输出特征图中的每个像素点,在原始输入图像上映射区域的大小

- 当前卷积神经网络中,常采用两层33的卷积核,原因是当输入图像(XX)的x>10时,两层33卷积核优于一层55卷积核,即在特征提取能力相同的情况 下,两层33卷积核比55卷积核的计算量更少。

3.全零填充(padding)

- 若要保持输入特征图和输出特征图的形状相一致,则需要使用全零填充.TF描述的全零填充 padding = ‘same’ 或者padding = ‘VALID’

- 参数设定及计算公式 p a d d i n g = { S A M E ( 全 0 填 充 ) 入 长 / 步 长 ( 向 上 取 整 ) V A L I D ( 不 全 0 填 充 ) 入 长 − 核 长 + 1 / 步 长 ( 向 上 取 整 ) padding=\begin{cases} SAME( 全0填充 ) & 入长/步长(向上取整) \\ VALID ( 不全0填充 )& 入长 - 核长 + 1/步长(向上取整) \end{cases} padding={SAME(全0填充)VALID(不全0填充)入长/步长(向上取整)入长−核长+1/步长(向上取整)

4.TF描述卷积层

tf.keras.layers.Conv2D(

filters = 卷积核个数,

kernel_size = 卷积核尺寸,#正方形写核长整数,或(核高h,核宽w)

strides = 滑动步长,#横纵向相同写步长整数,或(纵向步长h,横向步长w),默认为1

padding = 'same'or 'valid',#使用全零填充‘same’,默认为‘valid’不使用

activation = 'relu' or 'sigmoid' or 'tanh' or 'softmax'等,#如果有BN则此处不写

input_shape = (高,宽,通道数) #输入特征图维度,可省略

)

5.批标准化(Batch Normalization,BN)

- BN层位于卷积层之后,激活层之前。

- TF描述批标准化

tf.keras.layers.BatchNormalization()

model = tf.keras.models.Sequential([

Conv2D(filters = 6,kernel_size = (5,5),padding = 'same'),#卷积层

BatchNormalizationatch(),#BN层

Activaton

])

6.池化Pooling

- 池化层用于减少特征数据量。

- 最大池化可以用于提取图片纹理特征,均值池化可以保留背景特征

- TF描述池化

#最大池化层

tf.keras.layers.MaxPool2D(

pool_size = 池化核尺寸,#正方形写核长整数,或(核高h,核宽w)

strides = 池化步长,#步长整数,或(纵向步长h,横向步长w),默认为pool_size

padding = 'valid' or 'same' #是否使用全零填充

)

#均值池化层

tf.keras.layers.AveragePooling2D(

pool_size = 池化核尺寸,#正方形写核长整数,或(核高h,核宽w)

strides = 池化步长,#步长整数,或(纵向步长h,横向步长w),默认为pool_size

padding = 'valid' or 'same' #是否使用全零填充

)

model = tf.keras.models.Sequential([

Con2D(filters = 6,kernel_sizes = (5,5),padding = 'same'),# 卷积层

BatchNormalization(),#BN层

Activation('relu')#激活层

MaxPool2D(pool_size = (2,2),strides = 2,padding = 'same') #池化层

Dropout(0.2),#dropout层

])

7.Dropout层

- 用于冻结部分神经元,减少过拟合现象的产生

8.卷积神经网络

- 卷积神经网络本质上就是一个特征提取器,就是CBAPD

- 卷积神经网络的主要模块(CBAPD),卷积Convolutional , 批标准化BN , 激活Activation , 池化Pooling ,舍弃层Dropout

- 卷积神经网络提取的特征作为全连接层的输入

9. 卷积神经网络搭建实例

import tensorflow as tf

import os

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense

from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

print('-------------------------——————————————-开始加载cifar10数据集————————————————————————————')

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

print('___________________________________________________________加载完成____________________________________________________________')

class Baseline(Model):

def __init__(self):

super(Baseline, self).__init__()

self.c1 = Conv2D(filters=6, kernel_size=(5, 5), padding='same') # 卷积层

self.b1 = BatchNormalization() # BN层

self.a1 = Activation('relu') # 激活层

self.p1 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same') # 池化层

self.d1 = Dropout(0.2) # dropout层

self.flatten = Flatten()

self.f1 = Dense(128, activation='relu')

self.d2 = Dropout(0.2)

self.f2 = Dense(10, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.b1(x)

x = self.a1(x)

x = self.p1(x)

x = self.d1(x)

x = self.flatten(x)

x = self.f1(x)

x = self.d2(x)

y = self.f2(x)

return y

model = Baseline()

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/Baseline.ckpt"

if os.path.exists(checkpoint_save_path + '.index'):

print('-------------load the model-----------------')

model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,

save_weights_only=True,

save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,

callbacks=[cp_callback])

model.summary()

# print(model.trainable_variables)

file = open('./weights.txt', 'w')

for v in model.trainable_variables:

file.write(str(v.name) + '\n')

file.write(str(v.shape) + '\n')

file.write(str(v.numpy()) + '\n')

file.close()

############################################### show ###############################################

# 显示训练集和验证集的acc和loss曲线

acc = history.history['sparse_categorical_accuracy']

val_acc = history.history['val_sparse_categorical_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.title('Training and Validation Accuracy')

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.title('Training and Validation Loss')

plt.legend()

plt.show()

-------------------------——————————————-开始加载cifar10数据集————————————————————————————

Downloading data from https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

170500096/170498071 [==============================] - 3146s 18us/step

___________________________________________________________加载完成____________________________________________________________

Train on 50000 samples, validate on 10000 samples

Epoch 1/5

50000/50000 [==============================] - 58s 1ms/sample - loss: 1.6464 - sparse_categorical_accuracy: 0.4037 - val_loss: 1.4482 - val_sparse_categorical_accuracy: 0.4826

Epoch 2/5

50000/50000 [==============================] - 56s 1ms/sample - loss: 1.4212 - sparse_categorical_accuracy: 0.4865 - val_loss: 1.3346 - val_sparse_categorical_accuracy: 0.5237

Epoch 3/5

50000/50000 [==============================] - 55s 1ms/sample - loss: 1.3464 - sparse_categorical_accuracy: 0.5164 - val_loss: 1.2806 - val_sparse_categorical_accuracy: 0.5419

Epoch 4/5

50000/50000 [==============================] - 55s 1ms/sample - loss: 1.2974 - sparse_categorical_accuracy: 0.5360 - val_loss: 1.2809 - val_sparse_categorical_accuracy: 0.5457

Epoch 5/5

50000/50000 [==============================] - 56s 1ms/sample - loss: 1.2506 - sparse_categorical_accuracy: 0.5543 - val_loss: 1.1809 - val_sparse_categorical_accuracy: 0.5820

Model: "baseline"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) multiple 456

_________________________________________________________________

batch_normalization (BatchNo multiple 24

_________________________________________________________________

activation (Activation) multiple 0

_________________________________________________________________

max_pooling2d (MaxPooling2D) multiple 0

_________________________________________________________________

dropout (Dropout) multiple 0

_________________________________________________________________

flatten (Flatten) multiple 0

_________________________________________________________________

dense (Dense) multiple 196736

_________________________________________________________________

dropout_1 (Dropout) multiple 0

_________________________________________________________________

dense_1 (Dense) multiple 1290

=================================================================

Total params: 198,506

Trainable params: 198,494

Non-trainable params: 12

_________________________________________________________________

10.经典卷积神经网络实现

- 1998 LeNet卷积神经网络开篇之作

import tensorflow as tf

import os

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense

from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

class LeNet5(Model):

def __init__(self):

super(LeNet5, self).__init__()

self.c1 = Conv2D(filters=6, kernel_size=(5, 5),

activation='sigmoid')

self.p1 = MaxPool2D(pool_size=(2, 2), strides=2)

self.c2 = Conv2D(filters=16, kernel_size=(5, 5),

activation='sigmoid')

self.p2 = MaxPool2D(pool_size=(2, 2), strides=2)

self.flatten = Flatten()

self.f1 = Dense(120, activation='sigmoid')

self.f2 = Dense(84, activation='sigmoid')

self.f3 = Dense(10, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.p1(x)

x = self.c2(x)

x = self.p2(x)

x = self.flatten(x)

x = self.f1(x)

x = self.f2(x)

y = self.f3(x)

return y

model = LeNet5()

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/LeNet5.ckpt"

if os.path.exists(checkpoint_save_path + '.index'):

print('-------------load the model-----------------')

model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,

save_weights_only=True,

save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,

callbacks=[cp_callback])

model.summary()

# print(model.trainable_variables)

file = open('./weights.txt', 'w')

for v in model.trainable_variables:

file.write(str(v.name) + '\n')

file.write(str(v.shape) + '\n')

file.write(str(v.numpy()) + '\n')

file.close()

############################################### show ###############################################

# 显示训练集和验证集的acc和loss曲线

acc = history.history['sparse_categorical_accuracy']

val_acc = history.history['val_sparse_categorical_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.title('Training and Validation Accuracy')

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.title('Training and Validation Loss')

plt.legend()

plt.show()

Train on 50000 samples, validate on 10000 samples

Epoch 1/5

50000/50000 [==============================] - 40s 805us/sample - loss: 2.0624 - sparse_categorical_accuracy: 0.2223 - val_loss: 1.8597 - val_sparse_categorical_accuracy: 0.3140

Epoch 2/5

50000/50000 [==============================] - 39s 777us/sample - loss: 1.7750 - sparse_categorical_accuracy: 0.3487 - val_loss: 1.6614 - val_sparse_categorical_accuracy: 0.3986

Epoch 3/5

50000/50000 [==============================] - 39s 778us/sample - loss: 1.6367 - sparse_categorical_accuracy: 0.4040 - val_loss: 1.5642 - val_sparse_categorical_accuracy: 0.4283

Epoch 4/5

50000/50000 [==============================] - 40s 791us/sample - loss: 1.5573 - sparse_categorical_accuracy: 0.4322 - val_loss: 1.5169 - val_sparse_categorical_accuracy: 0.4477

Epoch 5/5

50000/50000 [==============================] - 39s 771us/sample - loss: 1.5039 - sparse_categorical_accuracy: 0.4529 - val_loss: 1.5006 - val_sparse_categorical_accuracy: 0.4547

Model: "le_net5"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_1 (Conv2D) multiple 456

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 multiple 0

_________________________________________________________________

conv2d_2 (Conv2D) multiple 2416

_________________________________________________________________

max_pooling2d_2 (MaxPooling2 multiple 0

_________________________________________________________________

flatten_1 (Flatten) multiple 0

_________________________________________________________________

dense_2 (Dense) multiple 48120

_________________________________________________________________

dense_3 (Dense) multiple 10164

_________________________________________________________________

dense_4 (Dense) multiple 850

=================================================================

Total params: 62,006

Trainable params: 62,006

Non-trainable params: 0

_________________________________________________________________

-

AlexNet 2012年ImageNet竞赛的冠军,共有8层,使用relu激活函数,加快了训练速度,使用dropout缓解了过拟合。

-

VggNet诞生于2014年,当年ImageNet竞赛的亚军,Top5错误率减少到7.3%。使用小尺寸卷积核,减少运算次数的同时,增加了识别准确率,网络结构规整,适合硬件加速。

import tensorflow as tf

from matplotlib import pyplot as plt

import numpy as np

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# 可视化训练集输入特征的第一个元素

plt.imshow(x_train[0]) # 绘制图片

plt.show()

# 打印出训练集输入特征的第一个元素

print("x_train[0]:\n", x_train[0])

# 打印出训练集标签的第一个元素

print("y_train[0]:\n", y_train[0])

# 打印出整个训练集输入特征形状

print("x_train.shape:\n", x_train.shape)

# 打印出整个训练集标签的形状

print("y_train.shape:\n", y_train.shape)

# 打印出整个测试集输入特征的形状

print("x_test.shape:\n", x_test.shape)

# 打印出整个测试集标签的形状

print("y_test.shape:\n", y_test.shape)

import tensorflow as tf

import os

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense

from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

class VGG16(Model):

def __init__(self):

super(VGG16, self).__init__()

self.c1 = Conv2D(filters=64, kernel_size=(3, 3), padding='same') # 卷积层1

self.b1 = BatchNormalization() # BN层1

self.a1 = Activation('relu') # 激活层1

self.c2 = Conv2D(filters=64, kernel_size=(3, 3), padding='same', )

self.b2 = BatchNormalization() # BN层1

self.a2 = Activation('relu') # 激活层1

self.p1 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d1 = Dropout(0.2) # dropout层

self.c3 = Conv2D(filters=128, kernel_size=(3, 3), padding='same')

self.b3 = BatchNormalization() # BN层1

self.a3 = Activation('relu') # 激活层1

self.c4 = Conv2D(filters=128, kernel_size=(3, 3), padding='same')

self.b4 = BatchNormalization() # BN层1

self.a4 = Activation('relu') # 激活层1

self.p2 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d2 = Dropout(0.2) # dropout层

self.c5 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')

self.b5 = BatchNormalization() # BN层1

self.a5 = Activation('relu') # 激活层1

self.c6 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')

self.b6 = BatchNormalization() # BN层1

self.a6 = Activation('relu') # 激活层1

self.c7 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')

self.b7 = BatchNormalization()

self.a7 = Activation('relu')

self.p3 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d3 = Dropout(0.2)

self.c8 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b8 = BatchNormalization() # BN层1

self.a8 = Activation('relu') # 激活层1

self.c9 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b9 = BatchNormalization() # BN层1

self.a9 = Activation('relu') # 激活层1

self.c10 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b10 = BatchNormalization()

self.a10 = Activation('relu')

self.p4 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d4 = Dropout(0.2)

self.c11 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b11 = BatchNormalization() # BN层1

self.a11 = Activation('relu') # 激活层1

self.c12 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b12 = BatchNormalization() # BN层1

self.a12 = Activation('relu') # 激活层1

self.c13 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b13 = BatchNormalization()

self.a13 = Activation('relu')

self.p5 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d5 = Dropout(0.2)

self.flatten = Flatten()

self.f1 = Dense(512, activation='relu')

self.d6 = Dropout(0.2)

self.f2 = Dense(512, activation='relu')

self.d7 = Dropout(0.2)

self.f3 = Dense(10, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.b1(x)

x = self.a1(x)

x = self.c2(x)

x = self.b2(x)

x = self.a2(x)

x = self.p1(x)

x = self.d1(x)

x = self.c3(x)

x = self.b3(x)

x = self.a3(x)

x = self.c4(x)

x = self.b4(x)

x = self.a4(x)

x = self.p2(x)

x = self.d2(x)

x = self.c5(x)

x = self.b5(x)

x = self.a5(x)

x = self.c6(x)

x = self.b6(x)

x = self.a6(x)

x = self.c7(x)

x = self.b7(x)

x = self.a7(x)

x = self.p3(x)

x = self.d3(x)

x = self.c8(x)

x = self.b8(x)

x = self.a8(x)

x = self.c9(x)

x = self.b9(x)

x = self.a9(x)

x = self.c10(x)

x = self.b10(x)

x = self.a10(x)

x = self.p4(x)

x = self.d4(x)

x = self.c11(x)

x = self.b11(x)

x = self.a11(x)

x = self.c12(x)

x = self.b12(x)

x = self.a12(x)

x = self.c13(x)

x = self.b13(x)

x = self.a13(x)

x = self.p5(x)

x = self.d5(x)

x = self.flatten(x)

x = self.f1(x)

x = self.d6(x)

x = self.f2(x)

x = self.d7(x)

y = self.f3(x)

return y

model = VGG16()

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/VGG16.ckpt"

if os.path.exists(checkpoint_save_path + '.index'):

print('-------------load the model-----------------')

model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,

save_weights_only=True,

save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,

callbacks=[cp_callback])

model.summary()

# print(model.trainable_variables)

file = open('./weights.txt', 'w')

for v in model.trainable_variables:

file.write(str(v.name) + '\n')

file.write(str(v.shape) + '\n')

file.write(str(v.numpy()) + '\n')

file.close()

############################################### show ###############################################

# 显示训练集和验证集的acc和loss曲线

acc = history.history['sparse_categorical_accuracy']

val_acc = history.history['val_sparse_categorical_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.title('Training and Validation Accuracy')

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.title('Training and Validation Loss')

plt.legend()

plt.show()

- IncepetionNet诞生于2014年,当年ImageNet竞赛冠军,Top5错误率为6.67%

import tensorflow as tf

import os

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense, \

GlobalAveragePooling2D

from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

class ConvBNRelu(Model):

def __init__(self, ch, kernelsz=3, strides=1, padding='same'):

super(ConvBNRelu, self).__init__()

self.model = tf.keras.models.Sequential([

Conv2D(ch, kernelsz, strides=strides, padding=padding),

BatchNormalization(),

Activation('relu')

])

def call(self, x):

x = self.model(x, training=False) #在training=False时,BN通过整个训练集计算均值、方差去做批归一化,training=True时,通过当前batch的均值、方差去做批归一化。推理时 training=False效果好

return x

class InceptionBlk(Model):

def __init__(self, ch, strides=1):

super(InceptionBlk, self).__init__()

self.ch = ch

self.strides = strides

self.c1 = ConvBNRelu(ch, kernelsz=1, strides=strides)

self.c2_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)

self.c2_2 = ConvBNRelu(ch, kernelsz=3, strides=1)

self.c3_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)

self.c3_2 = ConvBNRelu(ch, kernelsz=5, strides=1)

self.p4_1 = MaxPool2D(3, strides=1, padding='same')

self.c4_2 = ConvBNRelu(ch, kernelsz=1, strides=strides)

def call(self, x):

x1 = self.c1(x)

x2_1 = self.c2_1(x)

x2_2 = self.c2_2(x2_1)

x3_1 = self.c3_1(x)

x3_2 = self.c3_2(x3_1)

x4_1 = self.p4_1(x)

x4_2 = self.c4_2(x4_1)

# concat along axis=channel

x = tf.concat([x1, x2_2, x3_2, x4_2], axis=3)

return x

class Inception10(Model):

def __init__(self, num_blocks, num_classes, init_ch=16, **kwargs):

super(Inception10, self).__init__(**kwargs)

self.in_channels = init_ch

self.out_channels = init_ch

self.num_blocks = num_blocks

self.init_ch = init_ch

self.c1 = ConvBNRelu(init_ch)

self.blocks = tf.keras.models.Sequential()

for block_id in range(num_blocks):

for layer_id in range(2):

if layer_id == 0:

block = InceptionBlk(self.out_channels, strides=2)

else:

block = InceptionBlk(self.out_channels, strides=1)

self.blocks.add(block)

# enlarger out_channels per block

self.out_channels *= 2

self.p1 = GlobalAveragePooling2D()

self.f1 = Dense(num_classes, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.blocks(x)

x = self.p1(x)

y = self.f1(x)

return y

model = Inception10(num_blocks=2, num_classes=10)

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/Inception10.ckpt"

if os.path.exists(checkpoint_save_path + '.index'):

print('-------------load the model-----------------')

model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,

save_weights_only=True,

save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,

callbacks=[cp_callback])

model.summary()

# print(model.trainable_variables)

file = open('./weights.txt', 'w')

for v in model.trainable_variables:

file.write(str(v.name) + '\n')

file.write(str(v.shape) + '\n')

file.write(str(v.numpy()) + '\n')

file.close()

############################################### show ###############################################

# 显示训练集和验证集的acc和loss曲线

acc = history.history['sparse_categorical_accuracy']

val_acc = history.history['val_sparse_categorical_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.title('Training and Validation Accuracy')

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.title('Training and Validation Loss')

plt.legend()

plt.show()

- ResNet诞生于2015年,当年ImageNet的竞赛冠军,Top5错误率为3.57%

import tensorflow as tf

import os

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense

from tensorflow.keras import Model

np.set_printoptions(threshold=np.inf)

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

class ResnetBlock(Model):

def __init__(self, filters, strides=1, residual_path=False):

super(ResnetBlock, self).__init__()

self.filters = filters

self.strides = strides

self.residual_path = residual_path

self.c1 = Conv2D(filters, (3, 3), strides=strides, padding='same', use_bias=False)

self.b1 = BatchNormalization()

self.a1 = Activation('relu')

self.c2 = Conv2D(filters, (3, 3), strides=1, padding='same', use_bias=False)

self.b2 = BatchNormalization()

# residual_path为True时,对输入进行下采样,即用1x1的卷积核做卷积操作,保证x能和F(x)维度相同,顺利相加

if residual_path:

self.down_c1 = Conv2D(filters, (1, 1), strides=strides, padding='same', use_bias=False)

self.down_b1 = BatchNormalization()

self.a2 = Activation('relu')

def call(self, inputs):

residual = inputs # residual等于输入值本身,即residual=x

# 将输入通过卷积、BN层、激活层,计算F(x)

x = self.c1(inputs)

x = self.b1(x)

x = self.a1(x)

x = self.c2(x)

y = self.b2(x)

if self.residual_path:

residual = self.down_c1(inputs)

residual = self.down_b1(residual)

out = self.a2(y + residual) # 最后输出的是两部分的和,即F(x)+x或F(x)+Wx,再过激活函数

return out

class ResNet18(Model):

def __init__(self, block_list, initial_filters=64): # block_list表示每个block有几个卷积层

super(ResNet18, self).__init__()

self.num_blocks = len(block_list) # 共有几个block

self.block_list = block_list

self.out_filters = initial_filters

self.c1 = Conv2D(self.out_filters, (3, 3), strides=1, padding='same', use_bias=False)

self.b1 = BatchNormalization()

self.a1 = Activation('relu')

self.blocks = tf.keras.models.Sequential()

# 构建ResNet网络结构

for block_id in range(len(block_list)): # 第几个resnet block

for layer_id in range(block_list[block_id]): # 第几个卷积层

if block_id != 0 and layer_id == 0: # 对除第一个block以外的每个block的输入进行下采样

block = ResnetBlock(self.out_filters, strides=2, residual_path=True)

else:

block = ResnetBlock(self.out_filters, residual_path=False)

self.blocks.add(block) # 将构建好的block加入resnet

self.out_filters *= 2 # 下一个block的卷积核数是上一个block的2倍

self.p1 = tf.keras.layers.GlobalAveragePooling2D()

self.f1 = tf.keras.layers.Dense(10, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

def call(self, inputs):

x = self.c1(inputs)

x = self.b1(x)

x = self.a1(x)

x = self.blocks(x)

x = self.p1(x)

y = self.f1(x)

return y

model = ResNet18([2, 2, 2, 2])

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

checkpoint_save_path = "./checkpoint/ResNet18.ckpt"

if os.path.exists(checkpoint_save_path + '.index'):

print('-------------load the model-----------------')

model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,

save_weights_only=True,

save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,

callbacks=[cp_callback])

model.summary()

# print(model.trainable_variables)

file = open('./weights.txt', 'w')

for v in model.trainable_variables:

file.write(str(v.name) + '\n')

file.write(str(v.shape) + '\n')

file.write(str(v.numpy()) + '\n')

file.close()

############################################### show ###############################################

# 显示训练集和验证集的acc和loss曲线

acc = history.history['sparse_categorical_accuracy']

val_acc = history.history['val_sparse_categorical_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.title('Training and Validation Accuracy')

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.title('Training and Validation Loss')

plt.legend()

plt.show()

Train on 50000 samples, validate on 10000 samples

Epoch 1/5

5376/50000 [==>...........................] - ETA: 22:03 - loss: 2.0705 - sparse_categorical_accuracy: 0.3356