PyTorch 1.x Essentials

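- 1. Introduction
  - 1.1 Basic concepts
  - 1.2 Checking whether a GPU is available
  - 1.3 Selecting which GPUs to use (same for TensorFlow and PyTorch)
  - 1.4 Measuring GPU compute speed
  - 1.5 tqdm
- 2. Tensor
  - 2.1 Categories of the Tensor API
  - 2.2 Common ways to create a Tensor
  - 2.3 Tensor attributes
  - 2.4 Difference between torch.Tensor and torch.tensor
  - 2.5 Changing the shape of a Tensor
  - 2.6 Difference between torch.view and torch.reshape
  - 2.7 Indexing operations
  - 2.8 Converting between Tensor and NumPy
  - 2.9 PyTorch compared with NumPy
  - 2.10 Moving a Tensor to CUDA or CPU
  - 2.11 torch.Tensor.permute
  - 2.12 torch.Tensor.view
- 3. Automatic differentiation (autograd)
  - 3.1 Automatic differentiation
  - 3.2 Differentiating tensors
  - 3.3 Gradients
- 4. Model serialization
  - 4.1 Definitions of torch.save and torch.load
  - 4.2 Serializing a model
    - 4.2.1 Saving and loading the whole model
    - 4.2.2 Saving and loading only the model parameters
- 5. Model parameters and file extensions
  - 5.1 The model
  - 5.2 model.state_dict()
  - 5.3 optimizer.state_dict()
  - 5.4 model.parameters()
  - 5.5 model.named_parameters()
- 6. Torch functions
  - 6.1 torch.unsqueeze
  - 6.2 torch.squeeze
  - 6.3 Expanding the size of a torch.Tensor along a dimension
    - 6.3.1 expand
    - 6.3.2 repeat
- 7. softmax
- References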

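1. Introduction

1.1 Basic concepts

- What PyTorch is

  - PyTorch is an open-source machine learning library for Python, based on Torch, used for applications such as natural language processing. It was developed by Facebook's AI research group.

- Key features

  - It offers strong GPU acceleration and also supports dynamic neural networks (graphs built as the code runs), which many mainstream static-graph frameworks such as TensorFlow 1.x did not support natively.

  - PyTorch provides two high-level features:

    - n-dimensional tensor computation with strong GPU acceleration (similar to NumPy, but able to run on a GPU)

    - deep neural networks built on an automatic differentiation system

- Who uses PyTorch

  - Besides Facebook, organizations such as Twitter, GMU, and Salesforce have adopted PyTorch.

- NumPy vs. Tensor

  - NumPy keeps an ndarray in CPU memory and computes on the CPU.

  - PyTorch can place a Tensor on the GPU to accelerate computation.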

1.2 Checking whether a GPU is available

- Shell command

nvidia-smi

- Example

import torch
import time

print(torch.__version__)
print(torch.cuda.is_available())

flag = torch.cuda.is_available()
if flag:
    print("CUDA is available")
else:
    print("CUDA is not available")

ngpu = 1
# Decide which device we want to run on
device = torch.device("cuda:0" if (torch.cuda.is_available() and ngpu > 0) else "cpu")
print("Device:", device)

# get_device_name raises an error when no CUDA device is present, so guard the call
if flag:
    print("GPU model:", torch.cuda.get_device_name(0))

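1.3 Selecting which GPUs to use (same for TensorFlow and PyTorch)

import os

# Set these before the framework initializes CUDA. The CUDA_VISIBLE_DEVICES lines are alternatives: keep only one.
# Names such as '/gpu:0' follow TensorFlow's convention; in PyTorch the same devices are 'cuda:0', 'cuda:1'.
os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"  # number the GPUs from 0 in PCI bus ID order
os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # expose only GPU 0; it is named '/gpu:0'
os.environ["CUDA_VISIBLE_DEVICES"] = "1"  # expose only GPU 1; it is named '/gpu:0'
os.environ["CUDA_VISIBLE_DEVICES"] = "0,1"  # expose GPUs 0 and 1, named '/gpu:0' and '/gpu:1' in that order
os.environ["CUDA_VISIBLE_DEVICES"] = "1,0"  # expose GPUs 1 and 0, named '/gpu:0' and '/gpu:1' in that order, so GPU 1 is used first, then GPU 0
os.environ["CUDA_VISIBLE_DEVICES"] = "-1"  # disable the GPU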

1.4 Measuring GPU compute speed

- Example

a = torch.randn(10000, 1000)

b = torch.randn(1000, 2000)

t0 = time.time()

c = torch.matmul(a, b)

t1 = time.time()

print(a.device,",time=", t1 - t0, c.norm(2))

device = torch.device('cuda')

a = a.to(device)

b = b.to(device)

t0 = time.time()

c = torch.matmul(a, b)

t2 = time.time()

print(a.device,",time=", t2 - t0, c.norm(2))

t0 = time.time()

c = torch.matmul(a, b)

t2 = time.time()

print(a.device,",time=", t2 - t0, c.norm(2))

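- Output (CUDA kernels launch asynchronously and this code does not call torch.cuda.synchronize() before reading the clock, so the GPU figures, especially 0.0, reflect launch time rather than compute time; the first GPU call also includes one-time initialization)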

cpu ,time= 0.1700434684753418 tensor(141028.5938)

cuda:0 ,time= 0.006982564926147461 tensor(141429.7656, device='cuda:0')

cuda:0 ,time= 0.0 tensor(141429.7656, device='cuda:0')
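The GPU timings above were taken without waiting for the kernels to finish. A minimal sketch of a more careful measurement follows, assuming smaller matrices than the original so it runs quickly anywhere; `timed_matmul` and its `repeats` parameter are hypothetical names introduced here, and the GPU branch only runs when CUDA is available.

```python
import time
import torch

def timed_matmul(a, b, repeats=3):
    """Return (best wall-clock seconds, result) over `repeats` matmuls."""
    on_gpu = a.is_cuda
    best = float("inf")
    for _ in range(repeats):
        if on_gpu:
            torch.cuda.synchronize()  # finish pending work before starting the clock
        t0 = time.perf_counter()
        c = torch.matmul(a, b)
        if on_gpu:
            torch.cuda.synchronize()  # wait until the kernel has actually finished
        best = min(best, time.perf_counter() - t0)
    return best, c

a = torch.randn(1000, 500)
b = torch.randn(500, 800)

cpu_time, c_cpu = timed_matmul(a, b)
print("cpu  time =", cpu_time)

if torch.cuda.is_available():
    gpu_time, _ = timed_matmul(a.cuda(), b.cuda())
    print("cuda time =", gpu_time)
```

Taking the best of several runs keeps one-time costs such as CUDA initialization out of the reported figure.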

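1.5 tqdm

- tqdm is a fast, extensible progress bar for Python. It adds progress information to long-running loops; you only need to wrap any iterable as tqdm(iterator).

- The progress bar looks clean and is intuitive to use (wrap the loop's iterable in tqdm), and it adds very little overhead to the original program.

- Example

import time
from tqdm import tqdm

for i in tqdm(range(10000)):
    time.sleep(0.01)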

2. Tensor

2.1 Categories of the Tensor API

- By interface:

  - torch.function: e.g. torch.sum, torch.add

  - tensor.function: e.g. tensor.view, tensor.add

- By effect on the operands:

  - Operations that leave the operands unchanged and return the result: e.g. x.add(y) leaves x unchanged and returns a new Tensor

  - Operations that modify an operand in place: e.g. x.add_(y) stores the result in x, so x is modified

- Example

import torch

x = torch.tensor([1, 2])
y = torch.tensor([3, 4])
z = x.add(y)
print(z)
print(x)
x.add_(y)
print(x)

- Output

tensor([4, 6])

tensor([1, 2])

tensor([4, 6])

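2.2 Common ways to create a Tensor

- Most of these creation functions accept a data type (dtype) and a target device (cpu/gpu) at creation time

| Function | Description |
|---|---|
| Tensor(*size) | Creates a Tensor of the given size (values are uninitialized) |
| tensor(data) | torch.tensor(data, dtype=None, device=None, requires_grad=False, pin_memory=False); builds a tensor from existing data, similar to np.array |
| ones(*size) | Tensor of all ones |
| ones_like(t) | Tensor of all ones with the same shape as t |
| zeros(*size) | Tensor of all zeros |
| zeros_like(t) | Tensor of all zeros with the same shape as t |
| eye(n, m) | Ones on the diagonal, zeros elsewhere |
| arange(start,end,step) | Values from start up to, but not including, end, spaced by step |
| linspace(start,end,steps) | steps evenly spaced values from start to end, both included |
| rand/randn(*size) | Samples from the uniform distribution on [0,1) / the standard normal distribution |
| from_numpy(ndarray) | Creates a Tensor from a NumPy array, sharing its memory |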

- Example

import numpy as np
import torch

# Build tensors from data
print('Building tensors from data:')
print(torch.tensor((3,5)))
print(torch.tensor([3,5]))
print(torch.tensor(3))
print(torch.tensor([[0.1, 1.2], [2.2, 3.1], [4.9, 5.2]]))
if torch.cuda.is_available():  # this call needs a CUDA device
    torch.tensor([[0.11111, 0.222222, 0.3333333]],
                 dtype=torch.float64,
                 device=torch.device('cuda:0'))  # creates a torch.cuda.DoubleTensor
print(torch.Tensor((3,5)))
print(torch.Tensor([3,5]))

# Build tensors from a size
print('')
print('Building tensors from a size:')
print(torch.Tensor(3,5))  # uninitialized memory, so the printed values are arbitrary
a = torch.eye(3,2)
print(a)
print(torch.ones(3,4))
print(torch.ones_like(a))
print(torch.zeros(3,4))
print(torch.zeros_like(a))
print(torch.arange(1,9,1))
print(torch.arange(1,9,2))
print(torch.linspace(1,9,2))
print(torch.linspace(1,9,3))
print(torch.rand(3,3))
print(torch.randn(3,3))
b = np.ones((3,2))
print('b=\n',b)
print(torch.from_numpy(b))

- Output

Building tensors from data:

tensor([3, 5])

tensor([3, 5])

tensor(3)

tensor([[0.1000, 1.2000],
        [2.2000, 3.1000],
        [4.9000, 5.2000]])

tensor([3., 5.])

tensor([3., 5.])

Building tensors from a size:

tensor([[9.2755e-39, 8.9082e-39, 9.9184e-39, 8.4490e-39, 9.6429e-39],

[1.0653e-38, 1.0469e-38, 4.2246e-39, 1.0378e-38, 9.6429e-39],

[9.2755e-39, 9.7346e-39, 1.0745e-38, 1.0102e-38, 9.9184e-39]])

tensor([[1., 0.],

[0., 1.],

[0., 0.]])

tensor([[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.]])

tensor([[1., 1.],

[1., 1.],

[1., 1.]])

tensor([[0., 0., 0., 0.],

[0., 0., 0., 0.],

[0., 0., 0., 0.]])

tensor([[0., 0.],

[0., 0.],

[0., 0.]])

tensor([1, 2, 3, 4, 5, 6, 7, 8])

tensor([1, 3, 5, 7])

tensor([1., 9.])

tensor([1., 5., 9.])

tensor([[0.3097, 0.1510, 0.7616],

[0.8383, 0.1241, 0.3029],

[0.1397, 0.2673, 0.7836]])

tensor([[ 0.1529, -1.7908, -0.8428],

[ 0.9294, 0.1580, 0.7887],

[-1.2041, -0.0892, 0.7441]])

b=

[[1. 1.]

[1. 1.]

[1. 1.]]

tensor([[1., 1.],

[1., 1.],

[1., 1.]], dtype=torch.float64)

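2.3 Tensor attributes

- size()/shape: the dimensions of the tensor

- dtype: the data type of the tensor

- Example

a = torch.tensor([[1,2,3], [4,5,6]])
print(a)
print(a.size())
print(a.shape)
print(a.dtype)
print(a.numpy())  # the same data as a NumPy array (last block of the output)

- Output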

tensor([[1, 2, 3],

[4, 5, 6]])

torch.Size([2, 3])

torch.Size([2, 3])

torch.int64

[[1 2 3]

[4 5 6]]

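2.4 Difference between torch.Tensor and torch.tensor

- torch.Tensor is a mix of torch.empty and torch.tensor. When given data, torch.Tensor uses the global default dtype (FloatTensor), whereas torch.tensor infers the dtype from the data.

- torch.tensor(1) returns a 0-dimensional tensor holding the value 1; torch.Tensor(1) returns an uninitialized tensor of size 1, so its printed value is arbitrary.

- Example

print(torch.tensor(1))
print(torch.Tensor(1))

- Output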

tensor(1)

tensor([0.])
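The dtype difference described above can be checked directly. This is a small sketch; the variable names are illustrative only.

```python
import torch

from_ints = torch.tensor([1, 2, 3])   # dtype inferred from the integer data
legacy = torch.Tensor([1, 2, 3])      # always uses the global default dtype

print(from_ints.dtype)   # torch.int64
print(legacy.dtype)      # torch.float32 unless the default dtype was changed

scalar = torch.tensor(1)  # 0-dimensional tensor holding the value 1
sized = torch.Tensor(1)   # 1-element tensor whose contents are uninitialized
print(scalar.shape, sized.shape)
```

Because `torch.Tensor(n)` treats a single integer as a size rather than a value, `torch.tensor` is usually the safer choice when building a tensor from data.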

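2.5 Changing the shape of a Tensor

| Function | Description |
|---|---|
| size() | Returns the shape of the tensor; equivalent to shape |
| numel(input) | Returns the number of elements in the tensor |
| view(*shape) | Changes the shape; the result shares memory with the original, so modifying one modifies the other |
| resize_() | Similar to view, but modifies the tensor in place |
| item() | Returns the value of a one-element tensor as a Python scalar |
| unsqueeze | Inserts a dimension of size 1 at the given position |
| squeeze | Removes a dimension of size 1 at the given position |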

- Example

x = torch.randn(2,3)
print('Size:', x.size())
print('Shape:', x.shape)
print('Dimensions:', x.dim())  # number of dimensions
print("x flattened to a 1-D vector:", x.view(-1))
print('x=',x)
y = x.view(-1)
z = torch.unsqueeze(y,0)
print('z=',z)
print("Before adding a dimension:", y, " dimensions:", y.dim())
print("After adding a dimension:", z)
print("Dimensions of z:", z.dim())
print("Number of elements in z:", z.numel())

- Output

Size: torch.Size([2, 3])

Shape: torch.Size([2, 3])

Dimensions: 2

x flattened to a 1-D vector: tensor([ 0.3224, 1.4193, -0.4853, -0.6055, -1.1936, -0.6693])

x= tensor([[ 0.3224, 1.4193, -0.4853],
        [-0.6055, -1.1936, -0.6693]])

z= tensor([[ 0.3224, 1.4193, -0.4853, -0.6055, -1.1936, -0.6693]])

Before adding a dimension: tensor([ 0.3224, 1.4193, -0.4853, -0.6055, -1.1936, -0.6693])  dimensions: 1

After adding a dimension: tensor([[ 0.3224, 1.4193, -0.4853, -0.6055, -1.1936, -0.6693]])

Dimensions of z: 2

Number of elements in z: 6

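2.6 Difference between torch.view and torch.reshape

- reshape() can be called as torch.reshape() or torch.Tensor.reshape(), whereas view() can only be called as torch.Tensor.view()

- For view(), the new size must be compatible with the original size and stride; otherwise contiguous() must be called before view()

- reshape() also returns a Tensor with the same data in a different shape: when the conditions for view() are met it returns a view without copying, otherwise it copies the data

- If you only need the new shape, use torch.reshape; if the result must share memory with the original, use torch.view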
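The following sketch illustrates the points above on a small tensor. A transpose is used here as one convenient way to get a non-contiguous tensor; that choice is an assumption of this example, not something the original text prescribes.

```python
import torch

x = torch.arange(6).reshape(2, 3)
t = x.t()                     # transpose: a non-contiguous view of x
print(t.is_contiguous())      # False

try:
    t.view(6)                 # strides are incompatible with a flat view
except RuntimeError as err:
    print("view failed:", type(err).__name__)

r = t.reshape(6)              # works, but has to copy the data here
v = t.contiguous().view(6)    # explicit copy first, then a view of that copy

shares_memory = r.data_ptr() == x.data_ptr()
print(shares_memory)          # False: reshape made a copy

flat = x.view(6)              # x itself is contiguous, so view shares memory
flat[0] = 100
print(x[0, 0].item())         # 100
```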

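2.7 Indexing operations

| Function | Description |
|---|---|
| index_select(input,dim,index) | Selects rows or columns along the given dimension |
| nonzero(input) | Returns the indices of the non-zero elements |
| masked_select(input,mask) | Selects elements using a boolean mask |
| gather(input,dim,index) | Gathers values along the given dimension; the output has the same shape as index |
| scatter_(dim,index,src) | The inverse of gather: writes values from src into the tensor at the given indices |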

- Example

# Set a random seed
torch.manual_seed(100)
print(torch.manual_seed(100))  # manual_seed returns the Generator object
x = torch.randn(2,3)
print(x)
# First row: x[0,:]
print('x[0,:]=',x[0,:])
# Last column: x[:,-1]
print('x[:,-1]=',x[:,-1])
# Boolean mask of the elements greater than 0
mask = x>0
print('mask=',mask)
# Values greater than 0
print('torch.masked_select(x,mask)=',torch.masked_select(x,mask))
# Indices (row, column) of the non-zero entries of the mask
print('torch.nonzero(mask)=',torch.nonzero(mask))
# Gather the values at the given indices; the output follows these rules:
# out[i][j] = input[index[i][j]][j]  # if dim == 0
# out[i][j] = input[i][index[i][j]]  # if dim == 1
index = torch.LongTensor([[0,1,1]])
print(index)
print('torch.gather(x,0,index)=',torch.gather(x,0,index))
index = torch.LongTensor([[0,1,1],[1,1,1]])
a = torch.gather(x,1,index)
print("a: ",a)
# Scatter the values of a back into a 2x3 zero matrix
z = torch.zeros(2,3)
z.scatter_(1,index,a)
print('z=',z)

- Output

<torch._C.Generator object at 0x000001E43F784170>

tensor([[ 0.3607, -0.2859, -0.3938],

[ 0.2429, -1.3833, -2.3134]])

x[0,:]= tensor([ 0.3607, -0.2859, -0.3938])

x[:,-1]= tensor([-0.3938, -2.3134])

mask= tensor([[ True, False, False],

[ True, False, False]])

torch.masked_select(x,mask)= tensor([0.3607, 0.2429])

torch.nonzero(mask)= tensor([[0, 0],

[1, 0]])

tensor([[0, 1, 1]])

torch.gather(x,0,index)= tensor([[ 0.3607, -1.3833, -2.3134]])

a: tensor([[ 0.3607, -0.2859, -0.2859],

[-1.3833, -1.3833, -1.3833]])

z= tensor([[ 0.3607, -0.2859, 0.0000],

[ 0.0000, -1.3833, 0.0000]])
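A common use of gather with dim=1 is picking one value per row, for example the score of each sample's label. The sketch below uses made-up `scores` and `labels` purely for illustration.

```python
import torch

scores = torch.tensor([[0.1, 0.7, 0.2],
                       [0.8, 0.1, 0.1]])
labels = torch.tensor([1, 0])   # hypothetical class label for each row

# index must have the same number of dimensions as scores, so reshape to [2, 1]
picked = torch.gather(scores, 1, labels.unsqueeze(1)).squeeze(1)
print(picked)                   # tensor([0.7000, 0.8000])

# Advanced indexing selects the same elements
same = scores[torch.arange(2), labels]
print(torch.equal(picked, same))  # True
```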

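2.8 Converting between Tensor and NumPy

- NumPy => Tensor: torch.from_numpy()

- Tensor => NumPy: tensor.numpy()

- Note: for CPU tensors, the Tensor and the NumPy array produced by these two functions share the same memory (which is why the conversion is fast), so changing one also changes the other

- Example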

a = torch.ones(2,3)

b = a.numpy()

print(a)

print(b)

a += 1

print(a)

print(b)

a = np.ones((2,3))

b = torch.from_numpy(a)

print(a)

print(b)

a += 5

print(a)

print(b)

- Output

tensor([[1., 1., 1.],

[1., 1., 1.]])

[[1. 1. 1.]

[1. 1. 1.]]

tensor([[2., 2., 2.],

[2., 2., 2.]])

[[2. 2. 2.]

[2. 2. 2.]]

##

[[1. 1. 1.]

[1. 1. 1.]]

tensor([[1., 1., 1.],

[1., 1., 1.]], dtype=torch.float64)

[[6. 6. 6.]

[6. 6. 6.]]

tensor([[6., 6., 6.],

[6., 6., 6.]], dtype=torch.float64)

2.9 PyTorch compared with NumPy

| NumPy | PyTorch |
|---|---|
| np.array([3.2,4.3], dtype=np.float16) | torch.tensor([3.2,4.3], dtype=torch.float16) |
| x.copy() | x.clone() |
| np.dot | torch.mm |
| x.ndim | x.dim() |
| x.size | x.nelement() |
| x.reshape | x.reshape / x.view |
| x.flatten | x.view(-1) |
| np.floor(x) | torch.floor(x), x.floor() |
| np.less | x.lt |
| np.random.seed | torch.manual_seed |

2.10 Moving a Tensor to CUDA or CPU

- Copy a Tensor to CUDA memory

torch.Tensor.cuda(device=None, non_blocking=False, memory_format=torch.preserve_format) → Tensor

- Copy a Tensor to CPU memory

torch.Tensor.cpu(memory_format=torch.preserve_format) → Tensor

2.11 torch.Tensor.permute

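- Purpose

  - Returns a view of the tensor with its dimensions reordered; the underlying data is unchanged

- Definition

permute(*dims) → Tensor # *dims (int...): the desired order of the dimensions

- Example code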

import torch

x = torch.randn(2, 3, 5)

print(x.shape)

y = x.permute(2, 0, 1)

print(y.shape)

#torch.Size([2, 3, 5])

#torch.Size([5, 2, 3])

2.12 torch.Tensor.view

- Purpose

  - Returns a new tensor that shares the original tensor's data but has a different shape

- Definition

view(*shape) → Tensor

- Example code

x = torch.randn(4, 4)

print(x.shape)

y = x.view(16)

print(y.shape)

z = x.view(-1, 8) # the size -1 is inferred from other dimensions

print(z.shape)

# torch.Size([4, 4])

# torch.Size([16])

# torch.Size([2, 8])

a = torch.randn(1, 2, 3, 4)

print(a.shape)

c = a.view(1, 3, 2, 4)

print(c.shape)

# torch.Size([1, 2, 3, 4])

# torch.Size([1, 3, 2, 4])

3. 自动微分(autograd)

3.1 自动微分

-

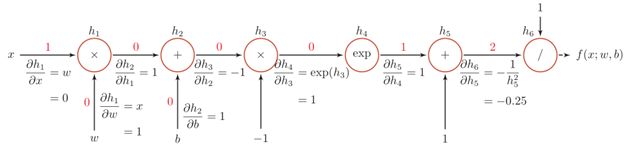

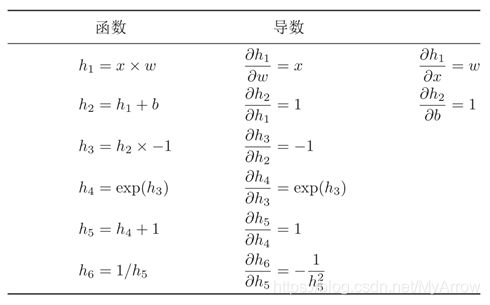

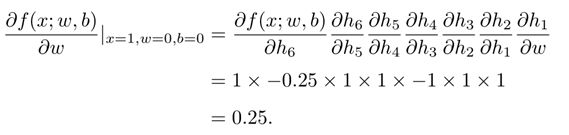

目标:求 f ( x ; w , b ) f(x;w,b) f(x;w,b)在 x = 1 , w = 0 , b = 0 x=1, w=0, b=0 x=1,w=0,b=0处的导数

f ( x ; w , b ) = 1 e − ( w x + b ) + 1 f(x;w,b) = \frac{1}{e^{-(wx+b)} + 1} f(x;w,b)=e−(wx+b)+11 -

方法:利用链式法则来自动计算一个复合函数的梯度
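The goal stated above can be checked with autograd in a few lines. This is a minimal sketch: the hand-derived values in the comments come from the chain rule with $z = wx + b$ and $s = f(x;w,b)$, which gives $\partial f/\partial z = s(1-s)$.

```python
import torch

x = torch.tensor(1.0, requires_grad=True)
w = torch.tensor(0.0, requires_grad=True)
b = torch.tensor(0.0, requires_grad=True)

f = 1 / (torch.exp(-(w * x + b)) + 1)   # f(x; w, b)
f.backward()                            # autograd applies the chain rule

print(f.item())        # 0.5
print(x.grad.item())   # s*(1-s)*w = 0.0
print(w.grad.item())   # s*(1-s)*x = 0.25
print(b.grad.item())   # s*(1-s)   = 0.25
```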

3.2 Differentiating tensors

- Example 1

# Passing requires_grad=True makes autograd track operations on this tensor
x = torch.ones(2,2,requires_grad=True)
print(x)
print(x.grad_fn)

- Output 1

tensor([[1., 1.],

[1., 1.]], requires_grad=True)

None

- Example 2

# Apply an operation
y = x+2
print(y)
print(y.grad_fn)
# Tensors created directly, like x, are leaf nodes; the grad_fn of a leaf node is None
print(x.is_leaf,y.is_leaf)

- Output 2

tensor([[3., 3.],

[3., 3.]], grad_fn=<AddBackward0>)

<AddBackward0 object at 0x000001E42FDFF7F0>

True False

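3.3 Gradients

- Now let's backpropagate. Because out holds a single scalar, out.backward() is equivalent to out.backward(torch.tensor(1.))

- Example

x = torch.ones(2,2,requires_grad=True)
y = x+2
z = y * y * 3
out = z.mean()
print(z,out)
a = out.backward()  # backward() returns None; the gradient is stored in x.grad
print(x.grad)
print(a)
# Backpropagate once more; note that gradients accumulate
print("")
out2 = x.sum()
out2.backward()
print("x.grad=",x.grad)
print("")
out3 = x.sum()
# x.grad.data.zero_()  # uncomment to reset the accumulated gradient first
out3.backward()
print("x.grad=",x.grad)

- Output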

tensor([[27., 27.],

[27., 27.]], grad_fn=<MulBackward0>) tensor(27., grad_fn=<MeanBackward0>)

tensor([[4.5000, 4.5000],

[4.5000, 4.5000]])

None

x.grad= tensor([[5.5000, 5.5000],

[5.5000, 5.5000]])

x.grad= tensor([[6.5000, 6.5000],

[6.5000, 6.5000]])
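The output above shows the gradient growing from 4.5 to 5.5 to 6.5 because each backward() call adds to x.grad. The sketch below resets the gradient between the two passes; calling `zero_()` on `x.grad` directly is one way to do this and is chosen here for brevity.

```python
import torch

x = torch.ones(2, 2, requires_grad=True)

out = (3 * (x + 2) ** 2).mean()
out.backward()
first = x.grad.clone()    # d(out)/dx = 6 * (x + 2) / 4 = 4.5 at x = 1

x.grad.zero_()            # reset instead of accumulating
x.sum().backward()
second = x.grad.clone()   # gradient of sum(x) alone: all ones

print(first)
print(second)
```

In a training loop the same effect is usually obtained by calling the optimizer's `zero_grad()` before each backward pass.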

4. Model serialization

| API | Description |
|---|---|
| torch.save | Saves an object to a file on disk |
| torch.load | Loads an object from a file saved with torch.save |

4.1 Definitions of torch.save and torch.load

- torch.save

save(obj, # saved object

f, # file name

pickle_module=pickle, # module used for pickling metadata and objects

pickle_protocol=DEFAULT_PROTOCOL, #can be specified to override the default protocol

_use_new_zipfile_serialization=True)

- torch.load

load(f, # file name

map_location=None, # a function, torch.device, string or a dict specifying how to remap storage locations

pickle_module=pickle, # module used for unpickling metadata and objects (has to match the pickle_module used to serialize file)

**pickle_load_args) # (Python 3 only) optional keyword arguments passed over to pickle_module.load()

4.2 Serializing a model

4.2.1 Saving and loading the whole model

torch.save(the_model, 'model.pkl')

the_model = torch.load('model.pkl')

4.2.2 Saving and loading only the model parameters

# save

torch.save(the_model.state_dict(), 'params.pkl')

# load

the_model = TheModelClass(*args, **kwargs)

the_model.load_state_dict(torch.load('params.pkl'))
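A runnable round trip of the parameter-only approach might look like the sketch below. It assumes a small `torch.nn.Linear` as a stand-in for `TheModelClass` and writes to a temporary directory; `map_location="cpu"` is added so that a file saved on a GPU machine can still be loaded on a CPU-only one.

```python
import os
import tempfile
import torch

model = torch.nn.Linear(4, 2)   # small stand-in for TheModelClass

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "params.pkl")
    torch.save(model.state_dict(), path)

    restored = torch.nn.Linear(4, 2)   # must be built with the same architecture
    state = torch.load(path, map_location="cpu")
    restored.load_state_dict(state)

restored.eval()   # switch to inference mode before predicting
same = all(torch.equal(p, q)
           for p, q in zip(model.parameters(), restored.parameters()))
print(same)       # True
```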

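5. Model parameters and file extensions

- Parameters:

  - model.state_dict()

  - model.parameters()

  - model.named_parameters()

  - All three methods expose a Module's parameters; they are used when updating the parameters or saving the model

- Model file extensions:

  - .pt

  - .pth

  - .pkl

  - The three use the same file format; only the file name suffix differs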

5.1 The model

import torch
import torch.nn.functional as F
from torch.optim import SGD

# Note: this model is only constructed so that its parameters can be inspected below.
# forward() would fail as written: conv2 expects 3 input channels while conv1 outputs 32,
# and the tensor is not flattened before dense1.
class MyNet(torch.nn.Module):
    def __init__(self):
        super(MyNet, self).__init__()  # first statement: call the parent class constructor
        self.conv1 = torch.nn.Conv2d(3, 32, 3, 1, 1)
        self.relu1 = torch.nn.ReLU()
        self.max_pooling1 = torch.nn.MaxPool2d(2, 1)
        self.conv2 = torch.nn.Conv2d(3, 32, 3, 1, 1)
        self.relu2 = torch.nn.ReLU()
        self.max_pooling2 = torch.nn.MaxPool2d(2, 1)
        self.dense1 = torch.nn.Linear(32 * 3 * 3, 128)
        self.dense2 = torch.nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.relu1(x)
        x = self.max_pooling1(x)
        x = self.conv2(x)
        x = self.relu2(x)
        x = self.max_pooling2(x)
        x = self.dense1(x)
        x = self.dense2(x)
        return x

model = MyNet()  # build the model

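5.2 model.state_dict()

- Stores the parameters of each layer

- Only layers that hold parameters or buffers appear (e.g. convolution, linear, and batch norm layers); parameter-free layers such as pooling and ReLU do not

- Uses: 1) inspecting the weights of a given layer; 2) saving the model

print(type(model.state_dict()))  # state_dict() returns an OrderedDict
for param_tensor in model.state_dict():  # iterating a dict yields its keys, so param_tensor is a key
    print(param_tensor, '\t', model.state_dict()[param_tensor].size())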

'''

conv1.weight torch.Size([32, 3, 3, 3])

conv1.bias torch.Size([32])

conv2.weight torch.Size([32, 3, 3, 3])

conv2.bias torch.Size([32])

dense1.weight torch.Size([128, 288])

dense1.bias torch.Size([128])

dense2.weight torch.Size([10, 128])

dense2.bias torch.Size([10])

'''

5.3 optimizer.state_dict()

- Contains the optimizer's state and the hyperparameters in use (such as lr, momentum, weight_decay)

optimizer = SGD(model.parameters(),lr=0.001,momentum=0.9)
for var_name in optimizer.state_dict():
    print(var_name,'\t',optimizer.state_dict()[var_name])

'''

state {}

param_groups [{'lr': 0.001,

'momentum': 0.9,

'dampening': 0,

'weight_decay': 0,

'nesterov': False,

'params': [1412966600640, 1412966613064, 1412966613136, 1412966613208,

1412966613280, 1412966613352, 1412966613496, 1412966613568]

}]

'''
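The `state` entry above is empty because no optimization step has been taken yet. The sketch below, which uses a small `torch.nn.Linear` and a made-up loss purely for illustration, shows the state filling in after one step and how both dictionaries are commonly bundled into a checkpoint (the `checkpoint` layout is an assumption, not a fixed format).

```python
import torch
from torch.optim import SGD

model = torch.nn.Linear(3, 1)
optimizer = SGD(model.parameters(), lr=0.001, momentum=0.9)
print(len(optimizer.state_dict()["state"]))   # 0: no step taken yet

loss = model(torch.randn(5, 3)).pow(2).mean()
loss.backward()
optimizer.step()

state = optimizer.state_dict()
print(len(state["state"]))                    # 2: one momentum buffer per parameter
print(state["param_groups"][0]["lr"])         # 0.001

# To resume training later, store the model and optimizer dictionaries together
checkpoint = {"model": model.state_dict(), "optimizer": state}
```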

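5.4 model.parameters()

- model.parameters() returns a generator that yields the parameters in order, from the first layer to the last

- Compared with state_dict(): parameters() has no key names and is a generator of bare parameters, whereas state_dict() is a dictionary that maps a key name to each parameter

print(type(model.parameters()))  # a generator
for para in model.parameters():
    print(para.size())  # show only the shape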

'''

torch.Size([32, 3, 3, 3])

torch.Size([32])

torch.Size([32, 3, 3, 3])

torch.Size([32])

torch.Size([128, 288])

torch.Size([128])

torch.Size([10, 128])

torch.Size([10])

'''

5.5 model.named_parameters()

print(type(model.named_parameters()))  # a generator
for para in model.named_parameters():  # each element is a tuple
    # The first item of the tuple is the parameter's name, the second is the parameter itself
    print(para[0],'\t',para[1].size())

'''

conv1.weight torch.Size([32, 3, 3, 3])

conv1.bias torch.Size([32])

conv2.weight torch.Size([32, 3, 3, 3])

conv2.bias torch.Size([32])

dense1.weight torch.Size([128, 288])

dense1.bias torch.Size([128])

dense2.weight torch.Size([10, 128])

dense2.bias torch.Size([10])

'''
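Because named_parameters() pairs each parameter with its name, it is handy for selecting parameters by layer. The sketch below freezes the first layer of a small `torch.nn.Sequential` model; the model and the prefix `"0."` are assumptions made for this example.

```python
import torch

model = torch.nn.Sequential(
    torch.nn.Linear(4, 8),
    torch.nn.ReLU(),
    torch.nn.Linear(8, 2),
)

for name, param in model.named_parameters():
    if name.startswith("0."):         # parameters of the first Linear layer
        param.requires_grad = False   # frozen: no gradient is computed for it

trainable = [n for n, p in model.named_parameters() if p.requires_grad]
n_trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
print(trainable)     # ['2.weight', '2.bias']
print(n_trainable)   # 8 * 2 + 2 = 18
```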

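6. Torch functions

6.1 torch.unsqueeze

- Definition

torch.unsqueeze(input, dim)

- Purpose

  - Adds a dimension

  - Returns a new tensor with a dimension of size 1 inserted at the specified position

- Properties

  - The returned tensor shares memory with the input tensor, so changing the contents of one changes the other

  - Only the number of dimensions grows; the number of elements and their values stay the same

- Example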

x = torch.tensor([[1,2],[3,4]]) # [2,2]

print(x.shape)

y1 = torch.unsqueeze(x,0) # [1,2,2]

print(y1.shape)

y2 = torch.unsqueeze(x,1) # [2,1,2]

print(y2.shape)

print(x)

print(y1)

print(y2)

'''

torch.Size([2, 2])

torch.Size([1, 2, 2])

torch.Size([2, 1, 2])

tensor([[1, 2],

[3, 4]])

tensor([[[1, 2],

[3, 4]]])

tensor([[[1, 2]],

[[3, 4]]])

'''

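- Difference between unsqueeze_ and unsqueeze

  - unsqueeze_ and unsqueeze perform the same operation

  - The difference is that unsqueeze_ is an in-place operation: unsqueeze does not change the tensor it is called on, so the result must be assigned to a new variable, whereas unsqueeze_ modifies the tensor itself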

# unsqueeze

a = torch.Tensor([1, 2, 3, 4]) # [4]

b = torch.unsqueeze(a, 1) # [4,1]

print('a={}, a.shape={}'.format(a, a.shape))

print('b={}, b.shape={}'.format(b, b.shape))

'''

a=tensor([1., 2., 3., 4.]), a.shape=torch.Size([4])

b=tensor([[1.],

[2.],

[3.],

[4.]]), b.shape=torch.Size([4, 1])

'''

# unsqueeze_

a = torch.Tensor([1, 2, 3, 4]) # [4]

b = a.unsqueeze_(1) # [4,1]

print('a={}, a.shape={}'.format(a, a.shape))

print('b={}, b.shape={}'.format(b, b.shape))

'''

a=tensor([[1.],

[2.],

[3.],

[4.]]), a.shape=torch.Size([4, 1])

b=tensor([[1.],

[2.],

[3.],

[4.]]), b.shape=torch.Size([4, 1])

'''

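6.2 torch.squeeze

- Definition

torch.squeeze(input, dim=None, out=None)

- Purpose

  - Reduces the number of dimensions

  - Removes the dimensions of size 1 from the input's shape and returns the result. If the input has shape (A×1×B×1×C×1×D), the output has shape (A×B×C×D)

  - When dim is given, the squeeze is applied only to that dimension. For example, if the input has shape (A×1×B), squeeze(input, 0) leaves the tensor unchanged; only squeeze(input, 1) changes the shape to (A×B).

- Note

  - The returned tensor shares memory with the input tensor, so changing the contents of one changes the other

m = torch.zeros(2, 1, 2, 1, 2)
print(m.size()) # torch.Size([2, 1, 2, 1, 2])
n = torch.squeeze(m)
print(n.size()) # torch.Size([2, 2, 2])
n = torch.squeeze(m, 0) # when dim is given, only that dimension is squeezed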

print(n.size()) # torch.Size([2, 1, 2, 1, 2])

n = torch.squeeze(m, 1)

print(n.size()) # torch.Size([2, 2, 1, 2])

n = torch.squeeze(m, 2)

print(n.size()) # torch.Size([2, 1, 2, 1, 2])

n = torch.squeeze(m, 3)

print(n.size()) # torch.Size([2, 1, 2, 2])

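6.3 Expanding the size of a torch.Tensor along a dimension

- torch.Tensor: a multi-dimensional matrix containing elements of a single data type

- torch.Tensor has two instance methods for enlarging the data along a dimension:

  - repeat()

  - expand()

6.3.1 expand

- Definition

expand(*sizes) → Tensor

- Example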

x = torch.tensor([1, 2, 3])

xx = x.expand(2, 3)

print(x)

print(xx)

#tensor([1, 2, 3])

#tensor([[1, 2, 3],

# [1, 2, 3]])

6.3.2 repeat

- Purpose: repeats the tensor along the specified dimensions; unlike expand(), this function copies the tensor's data

- Definition

repeat(*sizes) -> Tensor

- Example

x = torch.tensor([1, 2, 3])

xx = x.repeat(3, 2)

print(x)

print(xx)

#tensor([1, 2, 3])

#tensor([[1, 2, 3, 1, 2, 3],

# [1, 2, 3, 1, 2, 3],

# [1, 2, 3, 1, 2, 3]])
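The copy-versus-view distinction between repeat() and expand() can be observed through strides and data pointers. This is a small sketch with illustrative variable names.

```python
import torch

x = torch.tensor([1, 2, 3])
e = x.expand(2, 3)   # view: no new memory is allocated
r = x.repeat(2, 1)   # copy: a new 2x3 tensor

print(e.stride())                     # (0, 1): both rows alias the same data
print(e.data_ptr() == x.data_ptr())   # True
print(r.data_ptr() == x.data_ptr())   # False

x[0] = 10            # visible through the expanded view, not the repeated copy
print(e[1, 0].item())   # 10
print(r[1, 0].item())   # 1
```

Since an expanded tensor aliases the same memory in several positions, in-place writes to it are best avoided; clone it first if it needs to be modified.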

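7. softmax

- Definition

class torch.nn.Softmax(dim=None)

torch.nn.functional.softmax(input, dim)

- Parameters

  - dim: the dimension along which softmax is computed; for a 2-D input, dim=0 normalizes each column and dim=1 normalizes each row

- Purpose

  - Applies the Softmax function to an n-dimensional input tensor, rescaling the elements so that they lie in the range (0,1) and sum to 1 along dim

- Example

import torch
import torch.nn.functional as F

x = torch.Tensor([[1,1,3,4],[1,2,3,4],[1,2,3,4]])
print(x)
b = F.softmax(x,0)  # softmax over each column
print(b)
c = F.softmax(x,1)  # softmax over each row
print(c)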

'''

tensor([[1., 1., 3., 4.],

[1., 2., 3., 4.],

[1., 2., 3., 4.]])

tensor([[0.3333, 0.1554, 0.3333, 0.3333],

[0.3333, 0.4223, 0.3333, 0.3333],

[0.3333, 0.4223, 0.3333, 0.3333]])

tensor([[0.0339, 0.0339, 0.2507, 0.6815],

[0.0321, 0.0871, 0.2369, 0.6439],

[0.0321, 0.0871, 0.2369, 0.6439]])

'''
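To make the computation explicit, softmax can be written by hand and compared with F.softmax. In this sketch `softmax_manual` is a hypothetical helper; subtracting the maximum is a standard stability trick and does not change the result.

```python
import torch
import torch.nn.functional as F

def softmax_manual(x, dim):
    # Subtracting the maximum leaves the result unchanged but avoids overflow in exp
    shifted = x - x.max(dim=dim, keepdim=True).values
    exps = torch.exp(shifted)
    return exps / exps.sum(dim=dim, keepdim=True)

x = torch.tensor([[1., 1., 3., 4.],
                  [1., 2., 3., 4.],
                  [1., 2., 3., 4.]])

rows = softmax_manual(x, dim=1)
print(torch.allclose(rows, F.softmax(x, dim=1)))   # True
print(rows.sum(dim=1))                             # each row sums to 1
```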

References

- 《神经网络与深度学习》 (Neural Networks and Deep Learning)