PyTorch Deep Learning Primer: Multilayer Fully Connected Neural Networks

Multilayer Fully Connected Neural Networks: Introduction

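In the previous chapter we briefly introduced some basics of neural networks and showed how to use one to build a complex nonlinear binary classifier. Neural networks are more often applied to even harder problems, such as image classification. Below we use MNIST handwritten digit classification, the entry-level dataset of deep learning, to show how well deeper neural networks perform.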

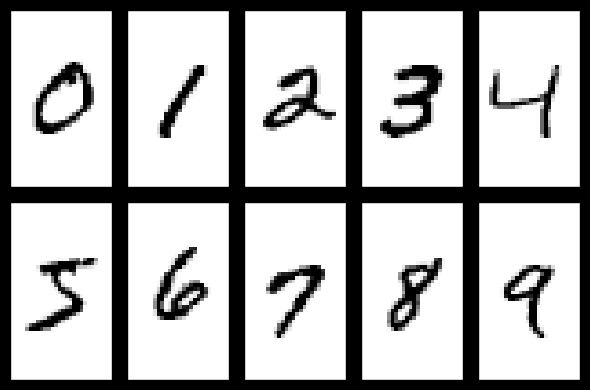

The MNIST dataset

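MNIST is a very well-known dataset that many networks use as a standard benchmark. It comes from the US National Institute of Standards and Technology (NIST). The training set consists of digits handwritten by 250 different people, 50% of them high school students and 50% staff of the Census Bureau, for a total of 60000 images. The test set contains handwritten digits in the same proportions, for a total of 10000 images.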

Each image is a 28 x 28 grayscale image.

Our task is therefore: given an image, determine which of the 10 digits 0 to 9 it shows.

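Multiclass classification

We discussed binary classification earlier. The problem here is harder: it has 10 classes, which makes it a multiclass classification problem. For multiclass problems we use a more elaborate loss function called cross entropy.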

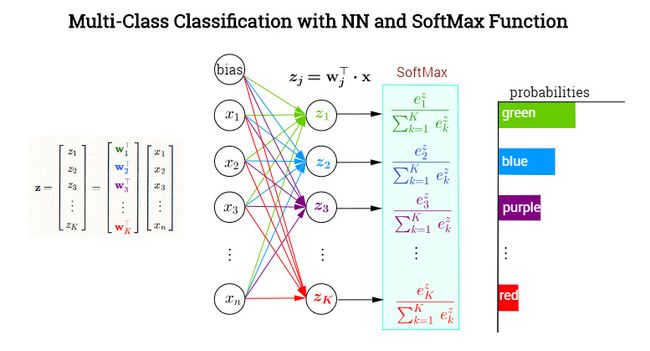

softmax

Before getting to cross entropy, let us first introduce the softmax function. We have already seen the sigmoid function:

$$s(x) = \frac{1}{1 + e^{-x}}$$

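It maps any value into the range 0 to 1. For binary classification this is enough: a sample that does not belong to the first class must belong to the second, so a single value, the probability of one class, suffices. For multiclass classification it is not enough, because we need the probability of every class, and that is what the softmax function provides.

The softmax function works as follows.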

For the network outputs $z_1, z_2, \cdots, z_k$, we first exponentiate each of them to get $e^{z_1}, e^{z_2}, \cdots, e^{z_k}$, and then divide each term by their sum, that is

$$z_i \rightarrow \frac{e^{z_i}}{\sum_{j=1}^{k} e^{z_j}}$$

All softmax outputs are positive and they sum to 1, so each one can be read as the probability of belonging to the corresponding class.
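A minimal NumPy sketch of the formula above. The helper names `softmax` and `sigmoid` are our own, not from the original. Subtracting the maximum score before exponentiating is a standard trick: the common factor $e^{-\max}$ cancels in the ratio, so the result is unchanged, but large scores no longer overflow. The last lines check that sigmoid is the two-class special case, since softmax over $[x, 0]$ gives $e^x / (e^x + 1) = s(x)$.

```python
import numpy as np


def sigmoid(x):
    """s(x) = 1 / (1 + e^{-x})"""
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    """Map scores z_1..z_k to probabilities e^{z_i} / sum_j e^{z_j}."""
    z = np.asarray(z, dtype=np.float64)
    e = np.exp(z - z.max())  # shift by the max for numerical stability; the ratio is unchanged
    return e / e.sum()


scores = np.array([2.0, 1.0, 0.1])  # illustrative network outputs for 3 classes
probs = softmax(scores)
print(probs, probs.sum())  # every entry is in (0, 1) and they sum to 1

# With two classes, softmax reduces to sigmoid: softmax([x, 0])[0] == sigmoid(x)
x = 1.5
print(softmax([x, 0.0])[0], sigmoid(x))
```

The largest score gets the largest probability, but unlike taking a hard maximum, every class keeps a nonzero share.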

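Cross entropy

Cross entropy is a way of measuring how similar two probability distributions are. The loss we used for binary classification is a special case of cross entropy. The general formula is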

$$\mathrm{cross\_entropy}(p, q) = E_{p}[-\log q] = -\sum_{x} p(x) \log q(x)$$

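For binary classification, taking $p = (y^{i}, 1 - y^{i})$ and $q = (\mathrm{sigmoid}(x^{i}), 1 - \mathrm{sigmoid}(x^{i}))$ for each sample and averaging over the $m$ samples, we can write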

$$-\frac{1}{m} \sum_{i=1}^{m} \left( y^{i} \log \mathrm{sigmoid}(x^{i}) + (1 - y^{i}) \log \left(1 - \mathrm{sigmoid}(x^{i})\right) \right)$$
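To see that the binary loss is the general formula in disguise, treat each sample as a pair of two-class distributions: the true distribution $(y, 1 - y)$ and the predicted distribution $(\mathrm{sigmoid}(x), 1 - \mathrm{sigmoid}(x))$. The NumPy sketch below computes the loss both ways and averages over the $m$ samples; the function names and the scores and labels are made up for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def cross_entropy(p, q):
    """-sum_x p(x) log q(x) for one pair of discrete distributions."""
    return -np.sum(p * np.log(q))


def binary_loss(y, x):
    """-(1/m) sum_i [y_i log s(x_i) + (1 - y_i) log(1 - s(x_i))]"""
    s = sigmoid(x)
    return -np.mean(y * np.log(s) + (1 - y) * np.log(1 - s))


x = np.array([2.0, -1.0, 0.5, -3.0])  # illustrative raw scores for m = 4 samples
y = np.array([1.0, 0.0, 0.0, 1.0])    # illustrative 0/1 labels

# General formula: build p and q for each sample, then average over the samples
per_sample = [cross_entropy(np.array([yi, 1 - yi]), np.array([sigmoid(xi), 1 - sigmoid(xi)]))
              for xi, yi in zip(x, y)]
general = np.mean(per_sample)

print(binary_loss(y, x), general)  # the two values agree
```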

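This is exactly the binary classification loss from before. At that point we only stated the formula and argued that it was reasonable, without explaining where it came from; the cross entropy formula now shows why this choice of loss is justified.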
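For multiclass problems the true distribution $p$ is one-hot, so the general formula reduces to $-\log q(\text{true class})$, averaged over the batch. PyTorch's `nn.CrossEntropyLoss` takes the raw network outputs (before softmax) plus integer class labels and does this computation internally. A small sketch with random, purely illustrative logits compares it with a manual softmax-then-log computation:

```python
import torch
from torch import nn

torch.manual_seed(0)
logits = torch.randn(4, 10)          # illustrative outputs z for a batch of 4 samples, 10 classes
labels = torch.tensor([3, 0, 9, 5])  # illustrative true classes

# Built-in loss: applies log-softmax internally, then averages over the batch
builtin = nn.CrossEntropyLoss()(logits, labels)

# Manual version: softmax, pick the probability of the true class, average -log
probs = torch.softmax(logits, dim=1)
manual = -torch.log(probs[torch.arange(4), labels]).mean()

print(builtin.item(), manual.item())  # equal up to floating-point error
```

Because the loss applies softmax itself, the network fed to it should output raw scores rather than probabilities.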

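Cross entropy comes from information theory, and we will not go into it further here; for more, see the links below.

Now let's go straight to MNIST and use it to walk through a deep neural network.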

```python
import numpy as np
import torch
from torch import nn
from torchvision.datasets import mnist  # PyTorch's built-in MNIST dataset

# Download MNIST with the built-in dataset class
train_set = mnist.MNIST('./data', train=True, download=True)
test_set = mnist.MNIST('./data', train=False, download=True)
```

```
Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz
Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz
Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz
Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz
Processing...
Done!
```

Let's look at what one sample looks like

```python
a_data, a_label = train_set[0]
a_data  # in a notebook this displays the PIL image of the digit
```


```python
a_label
```

```
5
```

The data read here is a PIL image, which we can easily convert to a NumPy array

```python
a_data = np.array(a_data, dtype='float32')
print(a_data.shape)
```

```
(28, 28)
```

This confirms that each image is 28 x 28.

```python
print(a_data)
```

```
[[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 3. 18. 18. 18. 126. 136. 175. 26. 166. 255. 247. 127. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 30. 36. 94. 154. 170. 253. 253. 253. 253. 253. 225. 172. 253. 242. 195. 64. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 49. 238. 253. 253. 253. 253. 253. 253. 253. 253. 251. 93. 82. 82. 56. 39. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 18. 219. 253. 253. 253. 253. 253. 198. 182. 247. 241. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 80. 156. 107. 253. 253. 205. 11. 0. 43. 154. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 14. 1. 154. 253. 90. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 139. 253. 190. 2. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 11. 190. 253. 70. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 35. 241. 225. 160. 108. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 81. 240. 253. 253. 119. 25. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 45. 186. 253. 253. 150. 27. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 16. 93. 252. 253. 187. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 249. 253. 249. 64. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 46. 130. 183. 253. 253. 207. 2. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 39. 148. 229. 253. 253. 253. 250. 182. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 24. 114. 221. 253. 253. 253. 253. 201. 78. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 23. 66. 213. 253. 253. 253. 253. 198. 81. 2. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 18. 171. 219. 253. 253. 253. 253. 195. 80. 9. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 55. 172. 226. 253. 253. 253. 253. 244. 133. 11. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 136. 253. 253. 253. 212. 135. 132. 16. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]]
```

Printing the array shows the raw pixel values: 0 is black and 255 is white.
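Before training, these 0 to 255 values are usually rescaled. The preprocessing in `main.py` uses `transforms.ToTensor()`, which divides 8-bit pixels by 255 into [0, 1], followed by `transforms.Normalize([0.5], [0.5])`, which computes (x - 0.5) / 0.5 and so maps [0, 1] to [-1, 1]. The NumPy lines below only mimic that arithmetic on a few pixel values taken from the array above; they do not call torchvision.

```python
import numpy as np

pixels = np.array([0, 18, 126, 253, 255], dtype=np.float32)  # sample values from the printed image

scaled = pixels / 255.0            # what ToTensor() does to 8-bit images: range [0, 1]
normalized = (scaled - 0.5) / 0.5  # Normalize(mean=[0.5], std=[0.5]): range [-1, 1]

print(scaled)
print(normalized)
```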

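For a fully connected network, the input to the first layer is therefore the image flattened into a vector of 28 x 28 = 784 values.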
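In PyTorch this flattening is a `view` from a batch of shape `[N, 1, 28, 28]`, the shape a DataLoader over MNIST with `ToTensor()` yields, to `[N, 784]`. A standalone sketch on a dummy batch of zeros:

```python
import torch

batch = torch.zeros(64, 1, 28, 28)    # dummy batch: 64 single-channel 28 x 28 images
flat = batch.view(batch.size(0), -1)  # keep the batch dimension, flatten the rest
print(flat.shape)                     # torch.Size([64, 784])
```

Passing `-1` lets PyTorch infer the remaining size (1 x 28 x 28 = 784), so the same line works for any batch size, including a smaller final batch.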

References

Multilayer Fully Connected Neural Networks: Practice (PyTorch)

simplenet.py

```python
from torch import nn


# A simple three-layer fully connected network.
# With no activation functions, the three layers compose to a single linear map.
class simpleNet(nn.Module):
    # simpleNet(28 * 28, 300, 100, 10)
    def __init__(self, in_dim, n_hidden_1, n_hidden_2, out_dim):
        super(simpleNet, self).__init__()
        self.layer1 = nn.Linear(in_dim, n_hidden_1)
        self.layer2 = nn.Linear(n_hidden_1, n_hidden_2)
        self.layer3 = nn.Linear(n_hidden_2, out_dim)

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        return x

    def get_name(self):  # return the class name
        return self.__class__.__name__


# Add activation functions to make the network nonlinear
class activationNet(nn.Module):
    # activationNet(28 * 28, 300, 100, 10)
    def __init__(self, in_dim, n_hidden_1, n_hidden_2, out_dim):
        super(activationNet, self).__init__()
        self.layer1 = nn.Sequential(nn.Linear(in_dim, n_hidden_1), nn.ReLU(True))
        self.layer2 = nn.Sequential(nn.Linear(n_hidden_1, n_hidden_2), nn.ReLU(True))
        # Note: no activation on the output layer. It outputs raw class scores (logits),
        # and nn.CrossEntropyLoss applies log-softmax to them internally.
        self.layer3 = nn.Sequential(nn.Linear(n_hidden_2, out_dim))

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        return x

    def get_name(self):
        return self.__class__.__name__


# Add batch normalization to speed up convergence
class batchNet(nn.Module):
    # batchNet(28 * 28, 300, 100, 10)
    def __init__(self, in_dim, n_hidden_1, n_hidden_2, out_dim):
        super(batchNet, self).__init__()
        # Note: batch normalization usually goes after the fully connected layer
        # and before the nonlinearity (activation function)
        self.layer1 = nn.Sequential(
            nn.Linear(in_dim, n_hidden_1),
            nn.BatchNorm1d(n_hidden_1),
            nn.ReLU(True),
        )
        self.layer2 = nn.Sequential(
            nn.Linear(n_hidden_1, n_hidden_2),
            nn.BatchNorm1d(n_hidden_2),
            nn.ReLU(True),
        )
        self.layer3 = nn.Sequential(nn.Linear(n_hidden_2, out_dim))

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        return x

    def get_name(self):
        return self.__class__.__name__
```
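Because `simpleNet` has no activation functions, its three `Linear` layers compose into one affine map, $W_3(W_2(W_1x + b_1) + b_2) + b_3 = Wx + b$ with $W = W_3 W_2 W_1$, so stacking layers alone adds no expressive power; this is what the ReLUs in `activationNet` change. A sketch that checks the collapse numerically, using an `nn.Sequential` of three `Linear` layers as a stand-in for `simpleNet(784, 300, 100, 10)` so that it runs on its own:

```python
import torch
from torch import nn

torch.manual_seed(0)
# Stand-in for simpleNet(784, 300, 100, 10): three Linear layers with no activation
net = nn.Sequential(nn.Linear(784, 300), nn.Linear(300, 100), nn.Linear(100, 10))
l1, l2, l3 = net

with torch.no_grad():
    # Collapse the three layers into one: W = W3 W2 W1, b = W3 (W2 b1 + b2) + b3
    W = l3.weight @ l2.weight @ l1.weight  # shape [10, 784]
    b = l3.weight @ (l2.weight @ l1.bias + l2.bias) + l3.bias

    x = torch.randn(5, 784)   # illustrative inputs
    deep_out = net(x)         # three stacked layers
    single_out = x @ W.T + b  # one equivalent linear layer

print(W.shape, torch.allclose(deep_out, single_out, atol=1e-5))
```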

utils.py

```python
import time

import torch

use_gpu = torch.cuda.is_available()  # use CUDA acceleration if a GPU is available


def train(model, train_loader, test_loader, num_epochs, criterion, optimizer):
    for epoch in range(num_epochs):
        start = time.time()
        print('epoch [{}/{}]'.format(epoch + 1, num_epochs))
        print('*' * 10)

        # Training set
        model.train()  # training mode (BatchNorm uses batch statistics)
        train_loss = 0.0
        train_acc = 0.0
        for i, data in enumerate(train_loader, start=1):  # count batches from 1
            img, label = data  # img: [64, 1, 28, 28], label: [64]; 64 images per iteration
            img = img.view(img.size(0), -1)  # flatten to [64, 784]
            if use_gpu:
                img = img.cuda()
                label = label.cuda()
            # Forward pass
            out = model(img)  # [64, 10]
            loss = criterion(out, label)
            train_loss += loss.item()  # sum of per-batch losses
            _, pred = torch.max(out, dim=1)  # index of the largest score = predicted class
            train_acc += (pred == label).float().mean().item()  # sum of per-batch accuracies
            # Backward pass
            optimizer.zero_grad()  # clear old gradients
            loss.backward()        # backpropagate
            optimizer.step()       # update parameters
            if i % 300 == 0:
                print('[{}/{}] Loss: {:.6f}, Acc: {:.6f}'.format(i, len(train_loader), train_loss / i, train_acc / i))
        print('Finish {} epoch, Loss: {:.6f}, Acc: {:.6f}'.format(epoch + 1, train_loss / i, train_acc / i))

        # Test set
        model.eval()  # evaluation mode (BatchNorm uses running statistics)
        eval_loss = 0.0
        eval_acc = 0.0
        with torch.no_grad():  # no gradients needed for evaluation
            for img, label in test_loader:
                img = img.view(img.size(0), -1)
                if use_gpu:
                    img = img.cuda()
                    label = label.cuda()
                out = model(img)
                loss = criterion(out, label)
                eval_loss += loss.item()
                _, pred = torch.max(out, 1)
                eval_acc += (pred == label).float().mean().item()
        print('Test Loss: {:.6f}, Acc: {:.6f}, time: {:.4f}s'.format(
            eval_loss / len(test_loader), eval_acc / len(test_loader), time.time() - start))


'''Example output of torch.max(out, 1) for one batch of 64 images:
torch.return_types.max(
values=tensor([5.5330, 4.0168, 4.2281, 5.0293, 4.0179, 5.0598, 3.8940, 3.0942, 1.5175,
        4.1047, 4.2734, 2.8363, 4.5695, 5.2592, 5.3070, 4.4359, 4.8156, 5.0195,
        2.8169, 4.9822, 4.4690, 4.0372, 4.0024, 5.2169, 4.1828, 5.8338, 5.5101,
        4.5567, 6.0161, 4.1724, 5.7923, 4.2611, 5.5563, 1.5881, 4.7684, 4.7749,
        3.5184, 5.2478, 2.8457, 4.4556, 4.2019, 4.4447, 4.4543, 2.4892, 3.5918,
        3.3944, 3.3330, 3.3624, 4.7076, 5.0788, 4.9315, 4.2758, 4.4056, 3.3710,
        3.5001, 4.7317, 5.2754, 4.1093, 4.6846, 3.8728, 5.1561, 4.1930, 2.5789,
        2.2516], device='cuda:0', grad_fn=<MaxBackward0>),
indices=tensor([7, 2, 1, 0, 4, 1, 4, 9, 5, 9, 0, 6, 9, 0, 1, 5, 9, 7, 3, 4, 9, 6, 6, 5,
        4, 0, 7, 4, 0, 1, 3, 1, 3, 6, 7, 2, 7, 1, 2, 1, 1, 7, 4, 2, 3, 5, 1, 2,
        4, 4, 6, 3, 5, 5, 6, 0, 4, 1, 9, 5, 7, 8, 9, 3], device='cuda:0'))
'''

'''Corresponding label:
tensor([7, 2, 1, 0, 4, 1, 4, 9, 5, 9, 0, 6, 9, 0, 1, 5, 9, 7, 3, 4, 9, 6, 6, 5,
        4, 0, 7, 4, 0, 1, 3, 1, 3, 4, 7, 2, 7, 1, 2, 1, 1, 7, 4, 2, 3, 5, 1, 2,
        4, 4, 6, 3, 5, 5, 6, 0, 4, 1, 9, 5, 7, 8, 9, 3], device='cuda:0')
'''
```
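The example output at the end of `utils.py` is exactly what `train` uses to measure accuracy: `indices` from `torch.max(out, 1)` are the predicted classes, and comparing them element-wise with `label` gives the batch accuracy. The sketch below copies those two printed tensors (on the CPU) and repeats the computation; in that batch only position 33 is wrong (predicted 6, label 4).

```python
import torch

# Predicted classes (indices from torch.max) and true labels copied from the example output
pred = torch.tensor([7, 2, 1, 0, 4, 1, 4, 9, 5, 9, 0, 6, 9, 0, 1, 5, 9, 7, 3, 4, 9, 6, 6, 5,
                     4, 0, 7, 4, 0, 1, 3, 1, 3, 6, 7, 2, 7, 1, 2, 1, 1, 7, 4, 2, 3, 5, 1, 2,
                     4, 4, 6, 3, 5, 5, 6, 0, 4, 1, 9, 5, 7, 8, 9, 3])
label = torch.tensor([7, 2, 1, 0, 4, 1, 4, 9, 5, 9, 0, 6, 9, 0, 1, 5, 9, 7, 3, 4, 9, 6, 6, 5,
                      4, 0, 7, 4, 0, 1, 3, 1, 3, 4, 7, 2, 7, 1, 2, 1, 1, 7, 4, 2, 3, 5, 1, 2,
                      4, 4, 6, 3, 5, 5, 6, 0, 4, 1, 9, 5, 7, 8, 9, 3])

correct = (pred == label)
acc = correct.float().mean().item()          # same expression as in train()
wrong = (~correct).nonzero().flatten().tolist()
print(acc, wrong)                            # 0.984375 [33]
```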

main.py

```python
import torch
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

from simplenet import simpleNet, activationNet, batchNet
from utils import train

# Hyperparameters (defined first: batch_size is needed by the DataLoaders)
batch_size = 64
learning_rate = 1e-2
num_epoches = 10  # the output below was produced with 10 epochs
use_gpu = torch.cuda.is_available()

# Build the MNIST datasets
# Preprocessing
data_tf = transforms.Compose([
    transforms.ToTensor(),               # PIL -> Tensor, with values scaled to [0, 1]
    transforms.Normalize([0.5], [0.5]),  # (x - mean) / std with ([mean], [std]); maps [0, 1] to [-1, 1]
])

# Load MNIST with the preprocessing above
# len(train_dataset): 60000, len(test_dataset): 10000
train_dataset = datasets.MNIST(root='./datasets', train=True, transform=data_tf, download=True)
test_dataset = datasets.MNIST(root='./datasets', train=False, transform=data_tf, download=True)

# Data loaders that read the data in mini-batches
# len(train_loader): 938, len(test_loader): 157
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)  # shuffle: reshuffle the data every epoch
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

# Define and train the models
in_dim, n_hidden_1, n_hidden_2, out_dim = 28 * 28, 300, 100, 10
model1 = simpleNet(in_dim, n_hidden_1, n_hidden_2, out_dim)
model2 = activationNet(in_dim, n_hidden_1, n_hidden_2, out_dim)
model3 = batchNet(in_dim, n_hidden_1, n_hidden_2, out_dim)

for model in [model1, model2, model3]:
    if use_gpu:
        model = model.cuda()
    print("the {} start training...".format(model.get_name()))
    criterion = nn.CrossEntropyLoss()  # cross-entropy loss (multiclass classification)
    optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)  # stochastic gradient descent, learning rate 0.01
    train(model, train_loader, test_loader, num_epoches, criterion, optimizer)
    print("{:*^50}".format(model.get_name() + ' complete training'))
```
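The loader lengths in the comments follow from rounding the number of batches up, because `DataLoader` keeps the last, smaller batch by default (`drop_last=False`). A quick check of that arithmetic, which also shows why the training log prints progress at steps 300, 600 and 900 of 938:

```python
import math

batch_size = 64
n_train, n_test = 60000, 10000  # MNIST training / test set sizes

train_batches = math.ceil(n_train / batch_size)  # 937 full batches + 1 batch of 32
test_batches = math.ceil(n_test / batch_size)    # 156 full batches + 1 batch of 16
progress_steps = [i for i in range(1, train_batches + 1) if i % 300 == 0]  # when train() prints

print(train_batches, test_batches, progress_steps)  # 938 157 [300, 600, 900]
```

One consequence: `train` averages per-batch accuracies, so the final test batch of 16 images counts as much as a full batch of 64. The reported test accuracy is therefore a close approximation rather than the exact fraction of correctly classified images.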

Output:


the simpleNet start traing...

epoch [1/10]

**********

[300/938] Loss: 1.329844, Acc: 0.690781

[600/938] Loss: 0.941420, Acc: 0.772865

[900/938] Loss: 0.769854, Acc: 0.808924

Finish 1 epoch, Loss: 0.754717, Acc: 0.812283

Test Loss: 0.379995, Acc: 0.891515, time: 4.8858s

epoch [2/10]

**********

[300/938] Loss: 0.378551, Acc: 0.891667

[600/938] Loss: 0.368806, Acc: 0.893229

[900/938] Loss: 0.361570, Acc: 0.895469

Finish 2 epoch, Loss: 0.360406, Acc: 0.895822

Test Loss: 0.324294, Acc: 0.908327, time: 4.8837s

epoch [3/10]

**********

[300/938] Loss: 0.329006, Acc: 0.906302

[600/938] Loss: 0.328093, Acc: 0.906198

[900/938] Loss: 0.328142, Acc: 0.905434

Finish 3 epoch, Loss: 0.327265, Acc: 0.905784

Test Loss: 0.302210, Acc: 0.914359, time: 4.8824s

epoch [4/10]

**********

[300/938] Loss: 0.310762, Acc: 0.912240

[600/938] Loss: 0.316446, Acc: 0.910130

[900/938] Loss: 0.311605, Acc: 0.910833

Finish 4 epoch, Loss: 0.312086, Acc: 0.910548

Test Loss: 0.304461, Acc: 0.913172, time: 4.8807s

epoch [5/10]

**********

[300/938] Loss: 0.310722, Acc: 0.910156

[600/938] Loss: 0.303692, Acc: 0.912422

[900/938] Loss: 0.303474, Acc: 0.912674

Finish 5 epoch, Loss: 0.302887, Acc: 0.912697

Test Loss: 0.291681, Acc: 0.916139, time: 4.8943s

epoch [6/10]

**********

[300/938] Loss: 0.294564, Acc: 0.914792

[600/938] Loss: 0.299093, Acc: 0.914583

[900/938] Loss: 0.296365, Acc: 0.915313

Finish 6 epoch, Loss: 0.296641, Acc: 0.915129

Test Loss: 0.291029, Acc: 0.916436, time: 4.8377s

epoch [7/10]

**********

[300/938] Loss: 0.291095, Acc: 0.915313

[600/938] Loss: 0.295891, Acc: 0.914948

[900/938] Loss: 0.292132, Acc: 0.916806

Finish 7 epoch, Loss: 0.292571, Acc: 0.916578

Test Loss: 0.281307, Acc: 0.917722, time: 4.7784s

epoch [8/10]

**********

[300/938] Loss: 0.294645, Acc: 0.916771

[600/938] Loss: 0.290767, Acc: 0.917786

[900/938] Loss: 0.288942, Acc: 0.917726

Finish 8 epoch, Loss: 0.288723, Acc: 0.917594

Test Loss: 0.300473, Acc: 0.915348, time: 4.7692s

epoch [9/10]

**********

[300/938] Loss: 0.279384, Acc: 0.920208

[600/938] Loss: 0.285428, Acc: 0.919349

[900/938] Loss: 0.286762, Acc: 0.919514

Finish 9 epoch, Loss: 0.285923, Acc: 0.919760

Test Loss: 0.280750, Acc: 0.921776, time: 4.8108s

epoch [10/10]

**********

[300/938] Loss: 0.279690, Acc: 0.920781

[600/938] Loss: 0.282061, Acc: 0.921094

[900/938] Loss: 0.283139, Acc: 0.920556

Finish 10 epoch, Loss: 0.282981, Acc: 0.920592

Test Loss: 0.273975, Acc: 0.921479, time: 4.8408s

************simpleNet complete traing*************

the activationNet start traing...

epoch [1/10]

**********

[300/938] Loss: 1.786881, Acc: 0.522969

[600/938] Loss: 1.272527, Acc: 0.668021

[900/938] Loss: 1.015205, Acc: 0.731875

Finish 1 epoch, Loss: 0.991841, Acc: 0.737790

Test Loss: 0.422857, Acc: 0.883604, time: 4.8961s

epoch [2/10]

**********

[300/938] Loss: 0.411508, Acc: 0.884740

[600/938] Loss: 0.389647, Acc: 0.890130

[900/938] Loss: 0.379372, Acc: 0.891354

Finish 2 epoch, Loss: 0.376713, Acc: 0.892158

Test Loss: 0.327276, Acc: 0.904569, time: 4.9079s

epoch [3/10]

**********

[300/938] Loss: 0.329244, Acc: 0.903490

[600/938] Loss: 0.323284, Acc: 0.905625

[900/938] Loss: 0.319108, Acc: 0.906927

Finish 3 epoch, Loss: 0.318999, Acc: 0.907083

Test Loss: 0.285626, Acc: 0.916436, time: 4.8981s

epoch [4/10]

**********

[300/938] Loss: 0.295526, Acc: 0.915938

[600/938] Loss: 0.291442, Acc: 0.915755

[900/938] Loss: 0.287380, Acc: 0.917240

Finish 4 epoch, Loss: 0.287052, Acc: 0.917094

Test Loss: 0.275336, Acc: 0.920688, time: 4.8969s

epoch [5/10]

**********

[300/938] Loss: 0.259084, Acc: 0.922448

[600/938] Loss: 0.261759, Acc: 0.923646

[900/938] Loss: 0.260894, Acc: 0.924080

Finish 5 epoch, Loss: 0.260981, Acc: 0.924024

Test Loss: 0.259922, Acc: 0.924248, time: 4.9499s

epoch [6/10]

**********

[300/938] Loss: 0.249923, Acc: 0.927448

[600/938] Loss: 0.243477, Acc: 0.929818

[900/938] Loss: 0.236503, Acc: 0.931302

Finish 6 epoch, Loss: 0.237199, Acc: 0.930904

Test Loss: 0.228554, Acc: 0.933149, time: 4.9602s

epoch [7/10]

**********

[300/938] Loss: 0.227158, Acc: 0.934479

[600/938] Loss: 0.219079, Acc: 0.936458

[900/938] Loss: 0.216487, Acc: 0.937240

Finish 7 epoch, Loss: 0.216691, Acc: 0.937300

Test Loss: 0.217955, Acc: 0.933742, time: 4.9625s

epoch [8/10]

**********

[300/938] Loss: 0.203418, Acc: 0.941823

[600/938] Loss: 0.201948, Acc: 0.941719

[900/938] Loss: 0.197544, Acc: 0.943038

Finish 8 epoch, Loss: 0.197688, Acc: 0.942847

Test Loss: 0.191240, Acc: 0.941653, time: 4.9339s

epoch [9/10]

**********

[300/938] Loss: 0.183470, Acc: 0.947500

[600/938] Loss: 0.184399, Acc: 0.946667

[900/938] Loss: 0.182280, Acc: 0.947274

Finish 9 epoch, Loss: 0.181536, Acc: 0.947761

Test Loss: 0.178211, Acc: 0.947686, time: 4.9194s

epoch [10/10]

**********

[300/938] Loss: 0.171319, Acc: 0.949115

[600/938] Loss: 0.170203, Acc: 0.950208

[900/938] Loss: 0.168132, Acc: 0.951476

Finish 10 epoch, Loss: 0.167819, Acc: 0.951543

Test Loss: 0.159468, Acc: 0.953224, time: 4.9065s

**********activationNet complete traing***********

the batchNet start traing...

epoch [1/10]

**********

[300/938] Loss: 1.374498, Acc: 0.728698

[600/938] Loss: 1.002082, Acc: 0.806380

[900/938] Loss: 0.797396, Acc: 0.843542

Finish 1 epoch, Loss: 0.778387, Acc: 0.846948

Test Loss: 0.278106, Acc: 0.938291, time: 5.1177s

epoch [2/10]

**********

[300/938] Loss: 0.282466, Acc: 0.935885

[600/938] Loss: 0.259163, Acc: 0.939974

[900/938] Loss: 0.242087, Acc: 0.942656

Finish 2 epoch, Loss: 0.239574, Acc: 0.943330

Test Loss: 0.158975, Acc: 0.960641, time: 5.0585s

epoch [3/10]

**********

[300/938] Loss: 0.174743, Acc: 0.957083

[600/938] Loss: 0.164080, Acc: 0.960156

[900/938] Loss: 0.157884, Acc: 0.961319

Finish 3 epoch, Loss: 0.156686, Acc: 0.961754

Test Loss: 0.120937, Acc: 0.968849, time: 5.0297s

epoch [4/10]

**********

[300/938] Loss: 0.118044, Acc: 0.971510

[600/938] Loss: 0.118948, Acc: 0.971042

[900/938] Loss: 0.117522, Acc: 0.970816

Finish 4 epoch, Loss: 0.117640, Acc: 0.970849

Test Loss: 0.101570, Acc: 0.973695, time: 5.0324s

epoch [5/10]

**********

[300/938] Loss: 0.097335, Acc: 0.976094

[600/938] Loss: 0.095146, Acc: 0.976380

[900/938] Loss: 0.093661, Acc: 0.976719

Finish 5 epoch, Loss: 0.093731, Acc: 0.976646

Test Loss: 0.091125, Acc: 0.976068, time: 5.0503s

epoch [6/10]

**********

[300/938] Loss: 0.077459, Acc: 0.982083

[600/938] Loss: 0.077764, Acc: 0.981302

[900/938] Loss: 0.078367, Acc: 0.980469

Finish 6 epoch, Loss: 0.078491, Acc: 0.980410

Test Loss: 0.085019, Acc: 0.976859, time: 5.1310s

epoch [7/10]

**********

[300/938] Loss: 0.063800, Acc: 0.984896

[600/938] Loss: 0.064793, Acc: 0.983854

[900/938] Loss: 0.066355, Acc: 0.983524

Finish 7 epoch, Loss: 0.066860, Acc: 0.983242

Test Loss: 0.079175, Acc: 0.977156, time: 5.1715s

epoch [8/10]

**********

[300/938] Loss: 0.055737, Acc: 0.986719

[600/938] Loss: 0.056531, Acc: 0.986302

[900/938] Loss: 0.057141, Acc: 0.986042

Finish 8 epoch, Loss: 0.057566, Acc: 0.985891

Test Loss: 0.073072, Acc: 0.978343, time: 5.1971s

epoch [9/10]

**********

[300/938] Loss: 0.051404, Acc: 0.988021

[600/938] Loss: 0.050743, Acc: 0.988151

[900/938] Loss: 0.050949, Acc: 0.987899

Finish 9 epoch, Loss: 0.050946, Acc: 0.987840

Test Loss: 0.073823, Acc: 0.976859, time: 5.1957s

epoch [10/10]

**********

[300/938] Loss: 0.042816, Acc: 0.990260

[600/938] Loss: 0.045081, Acc: 0.988906

[900/938] Loss: 0.045148, Acc: 0.988906

Finish 10 epoch, Loss: 0.045310, Acc: 0.988773

Test Loss: 0.071047, Acc: 0.978046, time: 5.1661s

*************batchNet complete traing*************

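batchNet's results depend on `train` switching between `model.train()` and `model.eval()`. In training mode `nn.BatchNorm1d` normalizes with the current batch's mean and variance and updates its running estimates (momentum 0.1 by default); in evaluation mode it uses the stored running estimates instead, so a test sample is normalized the same way no matter what else is in its batch. A small sketch with random, purely illustrative data:

```python
import torch
from torch import nn

torch.manual_seed(0)
bn = nn.BatchNorm1d(3)          # 3 features, default eps=1e-5, momentum=0.1
x = torch.randn(8, 3) * 4 + 10  # illustrative batch: mean near 10, std near 4

bn.train()
y_train = bn(x)                 # normalized with this batch's statistics
print(y_train.mean(dim=0))      # approximately 0 for every feature
print(bn.running_mean)          # moved 10% of the way from 0 toward the batch mean

bn.eval()
y_eval = bn(x)                  # normalized with running_mean / running_var instead
print(torch.allclose(y_train, y_eval))  # False: the two modes normalize differently
```

Forgetting `model.eval()` before testing would make the test accuracy depend on batch composition, which is why `train` sets the mode explicitly in both phases.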

To inspect the layer structure, print each model:

```python
for model in [model1, model2, model3]:
    print(model, '\n')
```

Output:

```
simpleNet(
  (layer1): Linear(in_features=784, out_features=300, bias=True)
  (layer2): Linear(in_features=300, out_features=100, bias=True)
  (layer3): Linear(in_features=100, out_features=10, bias=True)
)

activationNet(
  (layer1): Sequential(
    (0): Linear(in_features=784, out_features=300, bias=True)
    (1): ReLU(inplace)
  )
  (layer2): Sequential(
    (0): Linear(in_features=300, out_features=100, bias=True)
    (1): ReLU(inplace)
  )
  (layer3): Sequential(
    (0): Linear(in_features=100, out_features=10, bias=True)
  )
)

batchNet(
  (layer1): Sequential(
    (0): Linear(in_features=784, out_features=300, bias=True)
    (1): BatchNorm1d(300, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace)
  )
  (layer2): Sequential(
    (0): Linear(in_features=300, out_features=100, bias=True)
    (1): BatchNorm1d(100, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace)
  )
  (layer3): Sequential(
    (0): Linear(in_features=100, out_features=10, bias=True)
  )
)
```
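The printed structure is enough to count the learnable parameters by hand: a `Linear(in, out)` layer has in x out weights plus out biases, and an affine `BatchNorm1d(n)` adds n scales and n shifts (its running mean and variance are buffers, not parameters; ReLU has none, so activationNet matches simpleNet). A sketch comparing the hand count with PyTorch's own count, using `nn.Sequential` stand-ins with the same layers as `simpleNet` and `batchNet` so that it runs on its own:

```python
from torch import nn


def count_params(model):
    """Number of learnable parameters (BatchNorm running statistics are buffers, not counted)."""
    return sum(p.numel() for p in model.parameters())


# Stand-ins with the same layers as simpleNet / batchNet(784, 300, 100, 10)
simple = nn.Sequential(nn.Linear(784, 300), nn.Linear(300, 100), nn.Linear(100, 10))
batch = nn.Sequential(
    nn.Linear(784, 300), nn.BatchNorm1d(300), nn.ReLU(True),
    nn.Linear(300, 100), nn.BatchNorm1d(100), nn.ReLU(True),
    nn.Linear(100, 10),
)

by_hand = (784 * 300 + 300) + (300 * 100 + 100) + (100 * 10 + 10)
print(count_params(simple), by_hand)                      # 266610 266610
print(count_params(batch), by_hand + 2 * 300 + 2 * 100)   # 267410 267410
```

Almost 90% of the parameters sit in the first layer, because it connects all 784 pixels to 300 hidden units.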
