I. Hands-on network building in PyTorch and using Sequential

- 1. A Sequential container

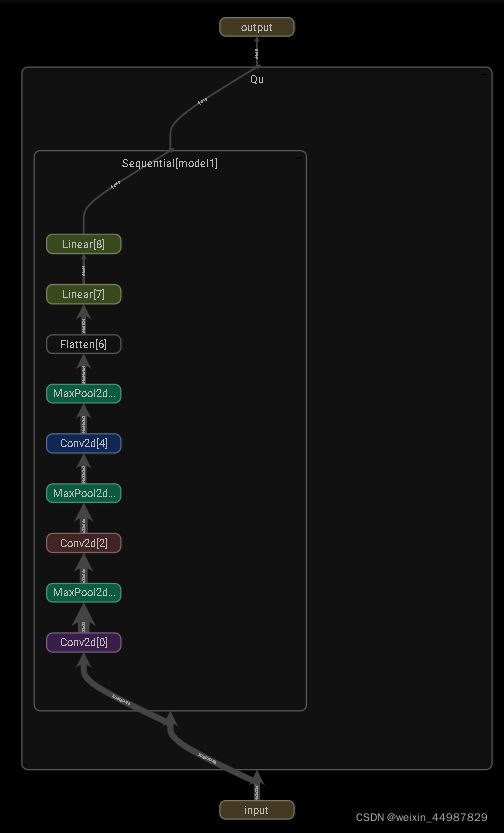

- 2. Building the CIFAR-10 model structure

- 3. Creating an instance to test it (a check that the network is wired correctly)

- 4. Visualizing the graph with TensorBoard

1. A Sequential container

Official documentation: https://pytorch.org/docs/stable/generated/torch.nn.Sequential.html#sequential

Example

```python
from collections import OrderedDict

import torch.nn as nn

# Layers run in the order they are passed in
model = nn.Sequential(
    nn.Conv2d(1, 20, 5),
    nn.ReLU(),
    nn.Conv2d(20, 64, 5),
    nn.ReLU()
)

# Using Sequential with OrderedDict. This is functionally the
# same as the above code
model = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(1, 20, 5)),
    ('relu1', nn.ReLU()),
    ('conv2', nn.Conv2d(20, 64, 5)),
    ('relu2', nn.ReLU())
]))
```
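One practical difference between the two forms is how the sublayers are addressed afterwards. The sketch below shows that layers in the OrderedDict version can be reached by name as well as by index. The 28x28 single-channel dummy input is an assumed size, chosen only for illustration.

```python
from collections import OrderedDict

import torch
import torch.nn as nn

model = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(1, 20, 5)),
    ('relu1', nn.ReLU()),
    ('conv2', nn.Conv2d(20, 64, 5)),
    ('relu2', nn.ReLU())
]))

# Named entries are available as attributes and by integer index
first_by_name = model.conv1
first_by_index = model[0]
print(first_by_name is first_by_index)

# Dummy batch: 1 image, 1 channel, 28x28 (an assumed size for illustration)
x = torch.ones(1, 1, 28, 28)
y = model(x)
print(y.shape)  # each unpadded 5x5 conv trims 4 pixels: 28 -> 24 -> 20
```

With the plain positional form only the integer index is available, so naming the layers mostly pays off when a model is inspected or partially reused later.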

2. Building the CIFAR-10 model structure

The network has 9 layers, in the following order:

```
(0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
(1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
(2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
(3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
(4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
(5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
(6): Flatten(start_dim=1, end_dim=-1)
(7): Linear(in_features=1024, out_features=64, bias=True)
(8): Linear(in_features=64, out_features=10, bias=True)
```
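The input size of 1024 for layer (7) follows from the layers before it. The sketch below traces the feature-map shape through the three conv/pool stages in plain Python, using the output-size formula from the PyTorch Conv2d and MaxPool2d documentation. The helper names `conv_out` and `trace_shapes` are made up for this illustration, and a 3x32x32 CIFAR-10 input is assumed.

```python
def conv_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width of a conv or pooling layer (PyTorch's documented formula)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def trace_shapes(channels=3, size=32):
    """Follow (channels, height, width) through the conv/pool stages listed above."""
    shapes = [(channels, size, size)]
    for out_channels in (32, 32, 64):
        # Conv2d(kernel_size=5, stride=1, padding=2) keeps H and W unchanged
        size = conv_out(size, kernel_size=5, padding=2)
        shapes.append((out_channels, size, size))
        # MaxPool2d(kernel_size=2, stride=2) halves H and W
        size = conv_out(size, kernel_size=2, stride=2)
        shapes.append((out_channels, size, size))
    return shapes


shapes = trace_shapes()
for shape in shapes:
    print(shape)

# Flatten multiplies channels * height * width of the last feature map
c, h, w = shapes[-1]
flat_features = c * h * w
print(flat_features)  # 1024, matching Linear(in_features=1024, ...)
```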

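Building the network:

```python
from torch import nn
from torch.nn import Conv2d, Flatten, Linear, MaxPool2d, Sequential


class Qu(nn.Module):
    def __init__(self):
        super(Qu, self).__init__()
        # Three conv + max-pool stages, then flatten and two linear layers
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),  # 64 channels * 4 * 4 = 1024 features
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x
```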

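Here, the Conv2d signature is:

torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

For example, the first convolution layer has in_channels=3, out_channels=32, kernel_size=5 (a 5x5 kernel), and padding=2.

The convolution has to leave H and W unchanged at 32x32, so the padding must be computed. With kernel_size=5, stride=1, and dilation=1, the output-shape formula from the Conv2d documentation gives padding=2.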
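The padding calculation can be worked through with the output-shape formula given in the PyTorch Conv2d documentation. The following is a sketch for the first layer, assuming the default stride=1 and dilation=1 used in the code above:

$$
H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding} - \text{dilation} \times (\text{kernel\_size} - 1) - 1}{\text{stride}} + 1 \right\rfloor
$$

$$
32 = 32 + 2 \times \text{padding} - 1 \times (5 - 1) - 1 + 1
\;\Longrightarrow\; 2 \times \text{padding} = 4
\;\Longrightarrow\; \text{padding} = 2
$$

The same calculation applies to W, and to the other two convolution layers, since they use the same kernel size and stride.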

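3. Creating an instance to test it (a check that the network is wired correctly)

```python
import torch

qu = Qu()
# print(qu)  # uncomment to print the layer structure

# Dummy batch: 64 images, 3 channels, 32x32 pixels
input = torch.ones(64, 3, 32, 32)
output = qu(input)
print(output.shape)
```

Output:

```
torch.Size([64, 10])
```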
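To illustrate why this forward-pass check is useful, the sketch below builds a deliberately broken copy of the network and shows that the shape mismatch surfaces as an error as soon as a dummy batch is passed through. The `build_model` helper and the wrong value 1000 are made up for this example and are not part of the original code.

```python
import torch
from torch import nn


def build_model(flat_features):
    """Hypothetical helper: same layer stack as Qu, with a configurable Linear input size."""
    return nn.Sequential(
        nn.Conv2d(3, 32, 5, padding=2),
        nn.MaxPool2d(2),
        nn.Conv2d(32, 32, 5, padding=2),
        nn.MaxPool2d(2),
        nn.Conv2d(32, 64, 5, padding=2),
        nn.MaxPool2d(2),
        nn.Flatten(),
        nn.Linear(flat_features, 64),
        nn.Linear(64, 10),
    )


dummy = torch.ones(64, 3, 32, 32)

# Correct size: Flatten yields 64 * 4 * 4 = 1024 features per image
good_shape = tuple(build_model(1024)(dummy).shape)
print(good_shape)

# Wrong size: the first Linear layer cannot multiply a 1024-wide input
try:
    build_model(1000)(dummy)
    failed = False
except RuntimeError as err:
    failed = True
    print("shape mismatch caught:", err)
```

Constructing the module never raises here, because layer sizes are only checked against real data during the forward pass. That is why a single dummy batch is a cheap sanity test.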

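4. Visualizing the graph with TensorBoard

```python
from torch.utils.tensorboard import SummaryWriter

# Write the model graph to ./logs_seq; view it with: tensorboard --logdir=logs_seq
writer = SummaryWriter("./logs_seq")
writer.add_graph(qu, input)
writer.close()
```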