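A Small Hands-On Network Build and Using Sequential

This section of the neural network series covers a small hands-on network build and the use of Sequential.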

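Preface

Building a small network by hand and learning to use Sequential is an important step in this series. I think the only way to learn neural networks well is through practice: writing the code and working out what it does at the same time. That will speed up getting started with PyTorch.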

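I. What is Sequential?

Sequential is a container for modules. Modules are added to it in the order in which they are passed to the constructor. Alternatively, an OrderedDict of modules can be passed in. The forward() method of Sequential accepts any input and forwards it to the first module it contains. It then "chains" each output to the input of the next module in order, and finally returns the output of the last module.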
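As a minimal sketch of the behaviour described above, the following builds the same small container twice, once from positional arguments and once from an OrderedDict, and passes a tensor through each. The layer sizes and the names `fc1`, `act`, and `fc2` are made up for illustration and are not part of the network built later in this post.

```python
from collections import OrderedDict

import torch
from torch import nn

# Positional form: modules run in the order they are passed in.
positional = nn.Sequential(
    nn.Linear(4, 8),
    nn.ReLU(),
    nn.Linear(8, 2),
)

# OrderedDict form: same order, but each module gets a name
# (the names are hypothetical, chosen only for this example).
named = nn.Sequential(OrderedDict([
    ("fc1", nn.Linear(4, 8)),
    ("act", nn.ReLU()),
    ("fc2", nn.Linear(8, 2)),
]))

x = torch.ones(3, 4)            # a batch of 3 samples with 4 features each
out_positional = positional(x)  # forward() chains the three modules
out_named = named(x)

print(out_positional.shape)
print(out_named.shape)
print(named.fc1)                # named modules are reachable as attributes
print(positional[0])            # unnamed modules are reachable by index
```

Naming the modules does not change what is computed; it only makes the printed model and later attribute access easier to read.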

二、使用步骤

1.正常搭建网络

代码如下(示例):

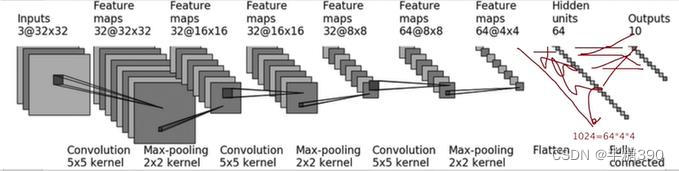

正常搭建网络,图及代码对应如下。

from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # A 5x5 kernel with padding=2 keeps the height and width unchanged
        self.conv1 = Conv2d(3, 32, 5, padding=2)
        self.maxpool1 = MaxPool2d(2)  # halves height and width
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)  # 64 channels * 4 * 4 = 1024 features
        self.linear2 = Linear(64, 10)    # 10 output classes

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


tudui = Tudui()
print(tudui)

To verify the network, a few lines are added that pass a dummy input through it and print the output shape:

import torch

from torch import nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.conv1=Conv2d(3,32,5,padding=2)

self.maxpool1=MaxPool2d(2)

self.conv2=Conv2d(32,32,5,padding=2)

self.maxpool2=MaxPool2d(2)

self.conv3=Conv2d(32,64,5,padding=2)

self.maxpool3=MaxPool2d(2)

self.flatten=Flatten()

self.linear1=Linear(1024,64)

self.linear2=Linear(64,10)

def forward(self,x):

x=self.conv1(x)

x=self.maxpool1(x)

x=self.conv2(x)

x=self.maxpool2(x)

x=self.conv3(x)

x=self.maxpool3(x)

x=self.flatten(x)

x=self.linear1(x)

x=self.linear2(x)

return x

tudui=Tudui()

print(tudui)

input=torch.ones(64,3,32,32) #进行检验

output=tudui(input)

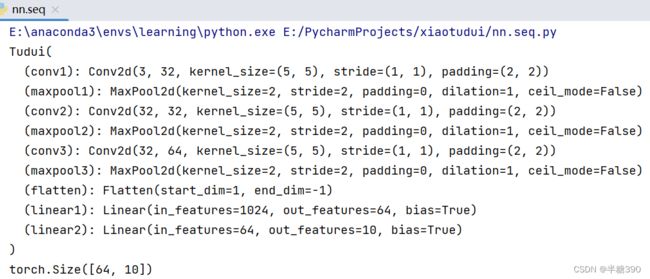

print(output.shape)成果展示:

torch.Size([64,10])说明之前写的代码与输出对上了,无误。
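The 1024 in Linear(1024, 64) follows from the layer sizes, and it can be checked by hand. The sketch below assumes the standard output-size formula for convolution and pooling layers with dilation 1, floor((size + 2 * padding - kernel) / stride) + 1, and uses a hypothetical helper `conv_out` that is not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a conv or pooling layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32      # input images are 32x32
channels = 3   # RGB input
for out_channels in (32, 32, 64):
    # Conv2d(..., 5, padding=2): 5x5 kernel, stride 1, so the size is kept
    size = conv_out(size, kernel=5, padding=2)
    channels = out_channels
    # MaxPool2d(2): 2x2 window, stride defaults to the window size, so the size halves
    size = conv_out(size, kernel=2, stride=2)
    print(channels, size, size)

# Flatten turns (channels, size, size) into one vector per sample
flat_features = channels * size * size
print(flat_features)
```

The three convolution and pooling stages take the feature map from 32x32 to 16x16, 8x8, and 4x4, and the last stage has 64 channels, so Flatten produces 64 * 4 * 4 = 1024 features per sample.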

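2. Building the network with Sequential

Introducing Sequential simply shortens the steps of building the network the usual way. The structure it expresses is the same and the output shape is the same as well, so the result is not shown again here.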
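One way to check that the two styles compute the same thing is to reuse the same layer objects in both. This is a sketch under that assumption, not the code from the video: it skips the Tudui class and shares one list of layers, because two separately constructed networks would start from different random weights and their numeric outputs would differ even though their shapes agree.

```python
import torch
from torch import nn

torch.manual_seed(0)  # fixed seed so the run is repeatable

# Create each layer once so both versions share the same weights.
layers = [
    nn.Conv2d(3, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Flatten(),
    nn.Linear(1024, 64),
    nn.Linear(64, 10),
]

x = torch.ones(2, 3, 32, 32)  # small batch to keep the check fast

# "Usual" style: call the layers one by one.
manual = x
for layer in layers:
    manual = layer(manual)

# Sequential style: wrap the same layer objects and call once.
model = nn.Sequential(*layers)
sequential = model(x)

print(manual.shape, sequential.shape)
print(torch.allclose(manual, sequential))
```

Sequential adds no computation of its own here; it only replaces the hand-written chain of calls in forward.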

The Sequential version of the network is as follows:

import torch

from torch import nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.module1=Sequential(

Conv2d(3, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

) #引入Sequential序列

def forward(self,x):

x=self.module1(x)

return x

tudui=Tudui()

print(tudui)

input=torch.ones(64,3,32,32) #进行检验

output=tudui(input)

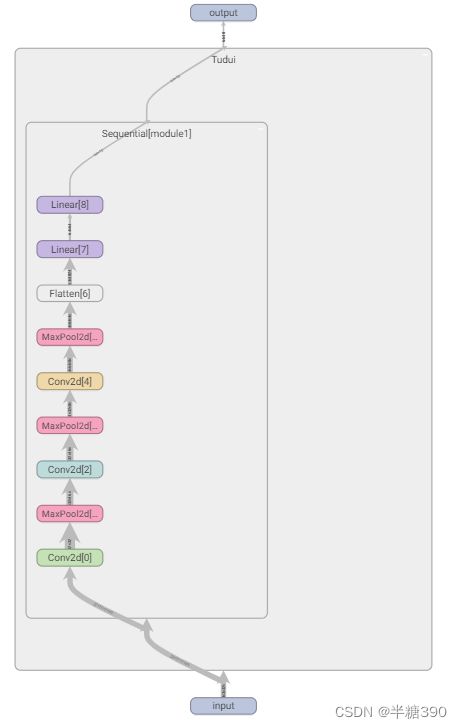

print(output.shape)当用图表来可视化时,对应的代码如下:

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.module1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10),
        )

    def forward(self, x):
        x = self.module1(x)
        return x


tudui = Tudui()
print(tudui)

# Verification: a batch of 64 all-ones images, 3 channels, 32x32 pixels
input = torch.ones(64, 3, 32, 32)
output = tudui(input)
print(output.shape)

writer = SummaryWriter("p3")      # logs are written to the p3 directory
writer.add_graph(tudui, input)    # record the model graph for TensorBoard
writer.close()

Visualization result: after the script runs, the graph is written to the p3 log directory and can be opened in TensorBoard, for example with tensorboard --logdir=p3.
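When TensorBoard is not at hand, a text-only view of the same flow can be had by stepping through the layers and printing the shape after each one. This is only a sketch and not a replacement for add_graph; to stay self-contained it builds the layers directly in nn.Sequential instead of going through the Tudui class.

```python
import torch
from torch import nn

model = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2),
    nn.MaxPool2d(2),
    nn.Flatten(),
    nn.Linear(1024, 64),
    nn.Linear(64, 10),
)

x = torch.ones(64, 3, 32, 32)  # same dummy batch as in the post
shapes = []
with torch.no_grad():          # no gradients are needed for a shape check
    for index, layer in enumerate(model):
        x = layer(x)           # feed the previous output into the next layer
        shapes.append(tuple(x.shape))
        print(index, layer.__class__.__name__, tuple(x.shape))
```

Iterating over a Sequential yields its modules in execution order, which is what makes this kind of step-by-step trace possible.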

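III. Summary

These notes were written while following Tudui's video on bilibili: 神经网络-搭建小实战和Sequential的使用 (Neural Networks: A Small Hands-On Build and Using Sequential).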