RHCA-RH436 V1.1.12: PCS Clusters

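Contents

I. What Is a Cluster?

II. Cluster Types

HA: High Availability

LBC: Load Balancing Cluster

HPC: High Performance Cluster

III. HA Clusters

IV. Building a Small Cluster

1. Install packages (all nodes)

2. Configure the firewall (all nodes)

3. Start the service (all nodes)

4. Set the password (all nodes)

5. Authenticate the nodes (any one node)

6. Create the cluster

7. View cluster information

8. Start the cluster at boot

9. Cluster configuration

Configuring fencing in a Red Hat cluster

Configuration

Testing

Expanding the cluster

Quorum

Cluster storage: in the lab, workstation acts as the cluster's SAN storage

Storage multipathing and path-selection algorithms

1. Round-robin: reduces the load on the host; use it when the paths are idle

2. Queue length

3. Service time

udev rules: dynamic device management, keeping storage device names from changing

A cluster service consists of three cluster resources

1. VIP

2. Shared file system (shared disk)

3. Service

Resource groups: managing cluster resources with resource groups; from CentOS 7 on, cluster resources fall into three classes

LSB: how RHEL 6 manages services

OCF: open-source resource agents shipped with the system

Systemd: how RHEL 7 manages services

Cluster logical volumes: Cluster LVM (clvmd)

HA LVM: for single-node file systems such as ext4 and xfs

clvmd: for cluster file systems such as GFS2 and VIMS

Cluster file systems: use locking to keep the file system consistent

VMware vSphere: VMFS5

Huawei FusionCompute: VIMS

Red Hat: GFS2

Growing GFS2

Viewing journal information

Repairing a GFS2 file system

RH436 exam walkthrough

1. Create the cluster

2. Configure fencing

3. Configure log files

4. Configure monitoring

5. Configure the HTTP service and IP

6. Configure cluster behavior

7. Configure iSCSI storage

8. Configure multipathing

9. Create a GFS2 file system

10. Mount the file system on /var/www and, as task 5 requires, use /var/www as the HTTP document root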

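I. What Is a Cluster?

A cluster is a group of two or more computers that work together toward a common goal.

II. Cluster Types

By business goal, clusters fall into three types: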

HA: High Availability

Microsoft: MSCS

Chinese-made: RoseHA

ZooKeeper, Keepalived

IBM: PowerHA

RHCS, Pacemaker

Oracle: RAC

Use cases: services that cannot afford downtime, such as banking and securities trading

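LBC: Load Balancing Cluster

LVS: Linux Virtual Server, written by Wensong Zhang of China; default scheduling algorithm: WLC (weighted least-connection)

HAProxy

Nginx

F5: hardware appliance

Layer 4 (transport layer) load balancing: e.g. LVS

Layer 7 (application layer) load balancing: e.g. Nginx, HAProxy

Mainstream architecture: Keepalived + LVS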
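The following minimal bash sketch shows the WLC selection rule: each new connection goes to the real server with the lowest ratio of active connections to weight. The function name `wlc_pick` and its input format are hypothetical. The sketch counts only active connections, whereas the kernel's LVS scheduler also factors in inactive connections.

```shell
#!/usr/bin/env bash
# Simplified sketch of LVS weighted least-connection (WLC) scheduling.
# Input on stdin: one "name weight active_connections" line per real server.
# Prints the server with the lowest connections/weight ratio.
# Assumption: only active connections are counted (the kernel also weighs
# inactive ones).
wlc_pick() {
  local best="" best_w=0 best_c=0 name w c
  while read -r name w c; do
    # Skip malformed lines and weight-0 servers (taken out of rotation).
    if [ -z "$w" ] || [ "$w" -le 0 ]; then
      continue
    fi
    # c/w < best_c/best_w, compared without floating point:
    # c * best_w < best_c * w
    if [ -z "$best" ] || [ $((c * best_w)) -lt $((best_c * w)) ]; then
      best=$name best_w=$w best_c=$c
    fi
  done
  [ -n "$best" ] || return 1
  printf '%s\n' "$best"
}
```

For example, `printf 'rs1 1 10\nrs2 3 20\nrs3 2 15\n' | wlc_pick` prints `rs2`, because it has about 6.7 connections per unit of weight versus 10 and 7.5 for the others. Cross-multiplying keeps the comparison in integer arithmetic. The kernel's WLC code uses the same trick.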

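HPC: High Performance Cluster

Suited to meteorological bureaus, research institutes, and distributed computing workloads

Example: Beijing Parallel Supercomputing Cloud

A cluster is not a cure-all. It protects against problems such as hardware failures, but not against problems caused by software. Even with a cluster you still need to back up your data, because a cluster does not guarantee that no data is lost.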

三、HA集群

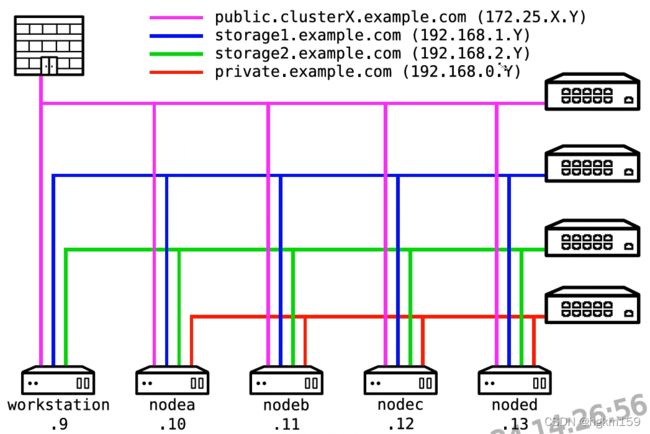

教室环境介绍

| Node | Service IP / eth0 (external) | Heartbeat IP (host-only) | Storage IP 1 (host-only) | Storage IP 2 (host-only) |
|---|---|---|---|---|
| classroom | 172.25.0.254 | / | / | / |
| workstation | 172.25.0.9 | / | 192.168.1.9 | 192.168.2.9 |
| nodea | 172.25.0.10 | 192.168.0.10 | 192.168.1.10 | 192.168.2.10 |
| nodeb | 172.25.0.11 | 192.168.0.11 | 192.168.1.11 | 192.168.2.11 |
| nodec | 172.25.0.12 | 192.168.0.12 | 192.168.1.12 | 192.168.2.12 |
| noded | 172.25.0.13 | 192.168.0.13 | 192.168.1.13 | 192.168.2.13 |
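The addressing in the table is regular, so the hosts entries for this lab can be generated instead of typed. This minimal sketch assumes the node list and the consecutive host numbers .10 to .13 from the table. `gen_hosts` is a hypothetical helper, not part of any package.

```shell
#!/usr/bin/env bash
# Print /etc/hosts entries for the lab nodes in the table:
# the public name on 172.25.0.x and the heartbeat name on 192.168.0.x.
# Assumption: node addresses are consecutive starting at .10, as in the table.
gen_hosts() {
  local octet=10 node
  for node in nodea nodeb nodec noded; do
    printf '172.25.0.%d %s\n' "$octet" "$node"
    printf '192.168.0.%d %s.private.example.com\n' "$octet" "$node"
    octet=$((octet + 1))
  done
}
```

Review the output, then append it on each node with `gen_hosts >> /etc/hosts`. The private names are the ones the cluster uses for its heartbeat network.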

Add hosts entries based on these IPs:

[root@foundation0 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.0.254 classroom

172.25.0.9 workstation

172.25.0.10 nodea

172.25.0.11 nodeb

172.25.0.12 nodec

172.25.0.13 noded

IV. Building a Small Cluster

1. Install packages (all nodes)

[root@foundation0 ~]# ssh root@nodea

[root@nodea ~]# yum install -y pcs fence-agents-all.x86_64 fence-agents-rht.x86_64 // install the required packages

2. Configure the firewall (all nodes)

[root@nodea ~]# firewall-cmd --permanent --add-service=high-availability

success

[root@nodea ~]# firewall-cmd --reload

success

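[root@nodea ~]# // the firewall now allows the required ports; remember to log out and log back in

3. Start the service (all nodes)

[root@nodea ~]# systemctl enable pcsd.service

[root@nodea ~]# systemctl start pcsd.service // start the service

[root@nodea ~]# id hacluster // the cluster administration user

4. Set the password (all nodes)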

[root@nodea ~]# passwd hacluster // set the password

[root@nodea ~]# vim /etc/hosts // add the lines below, which define the network used for cluster heartbeat and communication

192.168.0.10 nodea.private.example.com

192.168.0.11 nodeb.private.example.com

192.168.0.12 nodec.private.example.com

192.168.0.13 noded.private.example.com

5. Authenticate the nodes (any one node)

[root@nodea ~]# pcs cluster auth nodea.private.example.com nodeb.private.example.com -u hacluster -p q1! // authenticate pcsd on the nodes as the hacluster user

nodea.private.example.com: Authorized

nodeb.private.example.com: Authorized

6. Create the cluster

[root@nodea ~]# pcs cluster setup --start --name cluster0 nodea.private.example.com nodeb.private.example.com // --start: start the cluster once it is created; --name: cluster name

Shutting down pacemaker/corosync services...

Redirecting to /bin/systemctl stop pacemaker.service

Redirecting to /bin/systemctl stop corosync.service

Killing any remaining services...

Removing all cluster configuration files...

nodea.private.example.com: Succeeded

nodeb.private.example.com: Succeeded

Starting cluster on nodes: nodea.private.example.com, nodeb.private.example.com...

nodeb.private.example.com: Starting Cluster...

nodea.private.example.com: Starting Cluster...

7. View cluster information

[root@nodea ~]# pcs cluster status // show the cluster status

Cluster Status:

Last updated: Thu Apr 15 09:20:13 2021

Last change: Thu Apr 15 09:20:11 2021

Current DC: NONE

2 Nodes configured

0 Resources configured

PCSD Status:

nodea.private.example.com: Online

nodeb.private.example.com: Online

[root@nodea ~]# pcs status // show detailed cluster information

Cluster name: cluster0 // cluster name

WARNING: no stonith devices and stonith-enabled is not false

Last updated: Thu Apr 15 09:21:43 2021

Last change: Thu Apr 15 09:20:32 2021

Stack: corosync

Current DC: nodeb.private.example.com (2) - partition with quorum

Version: 1.1.12-a14efad

2 Nodes configured // total number of nodes in the cluster

0 Resources configured

Online: [ nodea.private.example.com nodeb.private.example.com] // nodes that are online

Full list of resources:

PCSD Status:

nodea.private.example.com: Online

nodeb.private.example.com: Online

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

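pcsd: active/enabled

8. Start the cluster at boot

[root@nodea ~]# pcs cluster enable --all

nodea.private.example.com: Cluster Enabled

nodeb.private.example.com: Cluster Enabled

9. Cluster configuration

[root@nodea ~]# vim /etc/corosync/corosync.conf // the cluster configuration file

[root@nodea ~]# pcs cluster sync // after editing, sync the configuration file to the other cluster nodes

Suppose one host's CPU usage reaches 100%. The other hosts send it heartbeats, get no response, and conclude that it has died. They then take over its resources, including the floating IP and the shared storage. When the load on that host drops, it also tries to claim those resources, and the cluster ends up in split-brain.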

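Fencing: isolate the failed host from the storage, for example by forcing it to reboot through its BMC. In a Red Hat cluster, the heartbeat network must not be a direct host-to-host connection.

If no fence agent is available for your hardware, download the source package from the server vendor's website and install it.

Configuring fencing in a Red Hat cluster

[root@nodea ~]# yum install -y fence-agents-all.x86_64 fence-agents-rht.x86_64 // install the fence agent packages; this Red Hat classroom installs all of them, but on real systems install only the agents that match your servers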

[root@nodea ~]# pcs stonith create fence_nodea fence_ilo3 ipaddr=192.168.10.1 login=root passwd=redhat123

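// fence_nodea: the fence device ID, which can be any name; fence_ilo3: the fence agent, which must match the server's BMC type. Use describe to list the parameters the agent accepts. action is not set here because it defaults to reboot.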

[root@nodea ~]# /sbin/fence_ // press Tab twice to list the available fence agents

fence_apc fence_cisco_ucs fence_ibmblade fence_ilo3 fence_ilo_ssh fence_kdump fence_scsi

fence_apc_snmp fence_drac5 fence_idrac fence_ilo3_ssh fence_imm fence_pcmk fence_virt

fence_bladecenter fence_eaton_snmp fence_ifmib fence_ilo4 fence_intelmodular fence_rhevm fence_vmware_soap

fence_brocade fence_eps fence_ilo fence_ilo4_ssh fence_ipdu fence_rht fence_wti

fence_cisco_mds fence_hpblade fence_ilo2 fence_ilo_mp fence_ipmilan fence_rsb fence_xvm

[root@nodea ~]# pcs stonith describe fence_ilo3 // describe: list the parameters a fence agent accepts; fence_ilo3 is the example here

Stonith options for: fence_ilo3

ipport: TCP/UDP port to use for connection with device

inet6_only: Forces agent to use IPv6 addresses only

ipaddr (required): IP Address or Hostname // the IP address is mandatory

passwd_script: Script to retrieve password

method: Method to fence (onoff|cycle)

inet4_only: Forces agent to use IPv4 addresses only

passwd: Login password or passphrase

lanplus: Use Lanplus to improve security of connection

auth: IPMI Lan Auth type.

cipher: Ciphersuite to use (same as ipmitool -C parameter)

privlvl: Privilege level on IPMI device

action (required): Fencing Action // the fencing action

login: Login Name

verbose: Verbose mode

debug: Write debug information to given file

version: Display version information and exit

help: Display help and exit

power_wait: Wait X seconds after issuing ON/OFF

login_timeout: Wait X seconds for cmd prompt after login

power_timeout: Test X seconds for status change after ON/OFF

delay: Wait X seconds before fencing is started

ipmitool_path: Path to ipmitool binary

shell_timeout: Wait X seconds for cmd prompt after issuing command

retry_on: Count of attempts to retry power on

sudo: Use sudo (without password) when calling 3rd party sotfware.

stonith-timeout: How long to wait for the STONITH action to complete per a stonith device.

priority: The priority of the stonith resource. Devices are tried in order of highest priority to lowest.

pcmk_host_map: A mapping of host names to ports numbers for devices that do not support host names.

pcmk_host_list: A list of machines controlled by this device (Optional unless pcmk_host_check=static-list).

pcmk_host_check: How to determine which machines are controlled by the device.

[root@nodea ~]# pcs stonith show --full // show the fence device configuration

Resource: fence_nodea (class=stonith type=fence_ilo3)

Attributes: ipaddr=192.168.10.1 login=root passwd=redhat123

Operations: monitor interval=60s (fence_nodea-monitor-interval-60s)

Resource: fence_nodeb (class=stonith type=fence_ilo3)

Attributes: ipaddr=192.168.10.2 login=root passwd=redhat123

Operations: monitor interval=60s (fence_nodeb-monitor-interval-60s)

[root@nodea ~]# pcs stonith delete fence_nodea // delete nodea's fence device; specify the fence device ID

Deleting Resource - fence_nodea

[root@nodea ~]# pcs stonith delete fence_nodeb

Deleting Resource - fence_nodeb

[root@nodea ~]#

Configuration

[root@nodea ~]# pcs stonith create fence_nodea_rht fence_rht port="nodea.private.example.com" pcmk_host_list="nodea.private.example.com" ipaddr="classroom.example.com"

[root@nodea ~]# pcs stonith create fence_nodeb_rht fence_rht port="nodeb.private.example.com" pcmk_host_list="nodeb.private.example.com" ipaddr="classroom.example.com"

[root@nodea ~]# pcs stonith show

fence_nodea_rht (stonith:fence_rht): Started

fence_nodeb_rht (stonith:fence_rht): Started

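[root@nodea ~]# pcs stonith fence nodeb.private.example.com // the generic way to fence a node manually; in effect it forces the system to reboot through its BMC

To test whether fencing works, take down one node's network interface. After about 5 seconds the node reboots automatically, and the services it was running fail over.

Configure the physical host so that it acts as a fence device: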

[root@nodea ~]# mkdir /etc/cluster

[root@nodea ~]# firewall-cmd --permanent --add-port=1229/tcp // open the required port in the firewall

[root@nodea ~]# firewall-cmd --permanent --add-port=1229/udp // open the required port in the firewall

[root@nodea ~]# firewall-cmd --reload

[root@nodeb ~]# mkdir /etc/cluster

[root@nodeb ~]# firewall-cmd --permanent --add-port=1229/tcp

[root@nodeb ~]# firewall-cmd --permanent --add-port=1229/udp

[root@nodeb ~]# firewall-cmd --reload

[root@foundation0 ~]# yum install -y fence-virtd fence-virtd-libvirt fence-virtd-multicast // install the fence_virtd software

[root@foundation0 ~]# mkdir -p /etc/cluster // the key is stored in this directory by default

[root@foundation0 ~]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=1k count=4 // generate the key

4+0 records in

4+0 records out

4096 bytes (4.1 kB) copied, 0.00353812 s, 1.2 MB/s
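The key generation above can be wrapped so that it also checks the result. This sketch rests on assumptions: `make_fence_key` is a hypothetical helper, the default path is the one used above, and the 600 permissions are a precaution of this sketch rather than a requirement of the lab.

```shell
#!/usr/bin/env bash
# Create a 4 KiB random fence_xvm key, as the dd command above does,
# and fail if the file does not end up with the expected size.
# The key path defaults to /etc/cluster/fence_xvm.key; pass another path to test.
make_fence_key() {
  local key_file=${1:-/etc/cluster/fence_xvm.key}
  mkdir -p "$(dirname "$key_file")" || return 1
  # Restrictive permissions: the key authenticates fencing requests.
  ( umask 077 && dd if=/dev/urandom of="$key_file" bs=1k count=4 2>/dev/null ) || return 1
  [ "$(wc -c < "$key_file")" -eq 4096 ]
}
```

On foundation0, running `make_fence_key` followed by the two `scp` commands that copy the key to the nodes reproduces the manual steps. Passing a scratch path such as `/tmp/test.key` lets you try it safely first.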

[root@foundation0 ~]# scp /etc/cluster/fence_xvm.key root@nodea:/etc/cluster/

[root@foundation0 ~]# scp /etc/cluster/fence_xvm.key root@nodeb:/etc/cluster/

[root@foundation0 ~]# virsh net-edit privnet

[root@foundation0 ~]# cat /etc/libvirt/qemu/networks/privnet.xml

privnet

aab634e5-0ca0-494b-a6be-c3a4ece4a314

[root@foundation0 ~]# reboot

[root@foundation0 ~]# fence_virtd -c

Module search path [/usr/lib64/fence-virt]: // accept the default module path

Available backends:

libvirt 0.1

Available listeners:

multicast 1.2

Listener modules are responsible for accepting requests

from fencing clients.

Listener module [multicast]: // use multicast

The multicast listener module is designed for use environments

where the guests and hosts may communicate over a network using

multicast.

The multicast address is the address that a client will use to

send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]: // multicast address; the default is fine

Using ipv4 as family.

Multicast IP Port [1229]: // multicast port; the default is fine

Setting a preferred interface causes fence_virtd to listen only

on that interface. Normally, it listens on all interfaces.

In environments where the virtual machines are using the host

machine as a gateway, this *must* be set (typically to virbr0).

Set to 'none' for no interface.

Interface [virbr0]: virbr1 // listen on virbr1

The key file is the shared key information which is used to

authenticate fencing requests. The contents of this file must

be distributed to each physical host and virtual machine within

a cluster.

Key File [/etc/cluster/fence_xvm.key]: // use the default key file

Backend modules are responsible for routing requests to

the appropriate hypervisor or management layer.

Backend module [libvirt]:

Configuration complete.

=== Begin Configuration ===

backends {

libvirt {

uri = "qemu:///system";

}

}

listeners {

multicast {

port = "1229";

family = "ipv4";

interface = "virbr1"; // listen on virbr1 for fencing requests from the VMs on port 1229

address = "225.0.0.12";

key_file = "/etc/cluster/fence_xvm.key"; // authenticate requests with this key

}

}

fence_virtd {

module_path = "/usr/lib64/fence-virt";

backend = "libvirt";

listener = "multicast";

}

=== End Configuration ===

Replace /etc/fence_virt.conf with the above [y/N]? y

[root@foundation0 ~]# systemctl restart fence_virtd.service

[root@foundation0 ~]# systemctl enable fence_virtd.service

[root@nodea ~]# pcs stonith create fence_nodea fence_xvm port="nodea" pcmk_host_list="nodea.private.example.com"

[root@nodea ~]# pcs stonith create fence_nodeb fence_xvm port="nodeb" pcmk_host_list="nodeb.private.example.com"

// port: the libvirt domain name of the virtual machine
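Every node gets the same kind of fence_xvm device, so the commands can be generated per node. This minimal sketch only prints the commands. It assumes this lab's convention that the libvirt domain name equals the short host name and that the cluster node name is `<node>.private.example.com`. `print_xvm_stonith` is hypothetical.

```shell
#!/usr/bin/env bash
# Print (not run) one `pcs stonith create` command per node, following the
# naming used above: device fence_<node>, libvirt domain <node>, and cluster
# node name <node>.private.example.com. Adjust if your names differ.
print_xvm_stonith() {
  [ "$#" -gt 0 ] || return 1
  local node
  for node in "$@"; do
    printf 'pcs stonith create fence_%s fence_xvm port="%s" pcmk_host_list="%s.private.example.com"\n' \
      "$node" "$node" "$node"
  done
}
```

Run `print_xvm_stonith nodea nodeb nodec` and check the output. Then pipe it to `sh` on one cluster node.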

[root@nodea ~]# pcs stonith show

fence_nodea (stonith:fence_xvm): Started

fence_nodeb (stonith:fence_xvm): Started

Testing

[root@foundation0 ~]# ssh root@nodea

Last login: Sat May 8 05:13:01 2021 from 172.25.0.250

[root@nodea ~]# ifdown eth1 // test: the node reboots automatically after about 5 seconds

Device 'eth1' successfully disconnected.

[root@nodea ~]#


[root@nodea ~]# Write failed: Broken pipe

[root@foundation0 ~]# ssh root@nodea

Last login: Sat May 8 05:48:53 2021 from 172.25.0.250

[root@nodea ~]#

Expanding the cluster

[root@nodec ~]# yum install -y pcs fence-agents-all fence-agents-rht // install the packages

[root@nodec ~]# firewall-cmd --permanent --add-service=high-availability // allow the service through the firewall

[root@nodec ~]# firewall-cmd --reload

[root@nodec ~]# systemctl enable pcsd.service

[root@nodec ~]# systemctl restart pcsd.service

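[root@nodec ~]# passwd hacluster // set the hacluster user's password

Changing password for user hacluster.

New password:

BAD PASSWORD: The password is shorter than 6 characters

Retype new password:

passwd: all authentication tokens updated successfully.

[root@nodec ~]# exit

[root@nodec ~]# pcs cluster auth nodec.private.example.com // authenticate (the cluster nodes authenticate each other)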

Username: hacluster

Password:

nodec.private.example.com: Authorized

[root@nodec ~]# pcs cluster auth nodea.private.example.com nodeb.private.example.com nodec.private.example.com

Username: hacluster

Password:

[root@nodea ~]# pcs cluster auth nodec.private.example.com

Username: hacluster

Password:

[root@nodeb ~]# pcs cluster auth nodec.private.example.com

Username: hacluster

Password:

[root@nodec ~]# pcs cluster node add nodec.private.example.com // add the node to the cluster

[root@nodec ~]# pcs status

[root@nodec ~]# mkdir /etc/cluster

[root@nodec ~]# firewall-cmd --permanent --add-port=1229/tcp // open the required port in the firewall

[root@nodec ~]# firewall-cmd --permanent --add-port=1229/udp // open the required port in the firewall

[root@nodec ~]# firewall-cmd --reload

[root@nodea ~]# scp /etc/cluster/fence_xvm.key root@nodec.private.example.com:/etc/cluster/

[root@nodec ~]# pcs stonith create fence_nodec fence_xvm port="nodec" pcmk_host_list="nodec.private.example.com" // add the fence device

[root@nodec ~]# pcs stonith show

Quorum

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Sun May 9 08:42:03 2021

Quorum provider: corosync_votequorum

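Nodes: 3 // three nodes

Node ID: 1 // this node's ID is 1

Ring ID: 24

Quorate: Yes // the cluster has quorum (healthy)

Votequorum information

----------------------

Expected votes: 3 // expected votes

Highest expected: 3 // highest expected votes

Total votes: 3 // total votes

Quorum: 2 // votes required for the cluster to keep running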

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

2 1 nodeb.private.example.com

3 1 nodec.private.example.com
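The `Quorum: 2` line follows from the votequorum rule that a partition needs more than half of the expected votes. Below is a minimal bash sketch of that arithmetic. It assumes one vote per node and no special options such as `two_node`. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# votequorum arithmetic, assuming one vote per node and no special options
# (two_node, last_man_standing, ...): a partition needs a strict majority
# of the expected votes.
quorum_needed() {
  local expected=$1
  echo $(( expected / 2 + 1 ))   # floor(expected / 2) + 1
}

# is_quorate EXPECTED_VOTES VOTES_PRESENT: succeed if the partition has quorum.
is_quorate() {
  [ "$2" -ge "$(quorum_needed "$1")" ]
}
```

With three nodes, `quorum_needed 3` prints 2, so the cluster survives the loss of one node but not two.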

[root@nodea ~]# vim /etc/corosync/corosync.conf

[root@nodea ~]# cat /etc/corosync/corosync.conf

totem {

version: 2

secauth: off

cluster_name: cluster0

transport: udpu

}

nodelist {

node {

ring0_addr: nodea.private.example.com

nodeid: 1

}

node {

ring0_addr: nodeb.private.example.com

nodeid: 2

}

node {

ring0_addr: nodec.private.example.com

nodeid: 3

}

}

quorum {

provider: corosync_votequorum

auto_tie_breaker: 1 // enable the auto tie-breaker

auto_tie_breaker_node: lowest // on an even split, the partition containing the lowest node ID survives

}

logging {

to_syslog: yes

}

[root@nodea ~]# pcs cluster sync

[root@nodea ~]# pcs cluster stop --all

[root@nodea ~]# pcs cluster start --all

[root@nodea ~]# corosync-quorumtool

Quorum information

------------------

Date: Sun May 9 10:20:34 2021

Quorum provider: corosync_votequorum

Nodes: 3

Node ID: 1

Ring ID: 56

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 3

Quorum: 2

Flags: Quorate AutoTieBreaker // the setting took effect

Membership information

----------------------

Nodeid Votes Name

1 1 nodea.private.example.com (local)

2 1 nodeb.private.example.com

3 1 nodec.private.example.com
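auto_tie_breaker only matters when a cluster splits into two halves with exactly the same number of votes, for example a four-node cluster splitting 2/2. The sketch below models that decision. It assumes one vote per node and `auto_tie_breaker_node: lowest`. `atb_quorate` is a hypothetical helper, not part of corosync.

```shell
#!/usr/bin/env bash
# Model of the votequorum auto_tie_breaker decision (one vote per node).
# A partition keeps quorum if it has a strict majority of the nodes, or if
# it has exactly half of them and contains the tie-breaker node ID
# (auto_tie_breaker_node: lowest -> the lowest node ID in the cluster).
# Usage: atb_quorate TOTAL_NODES TIE_BREAKER_ID MEMBER_ID...
atb_quorate() {
  local total=$1 tie_breaker=$2
  shift 2
  local members=$# id
  # Strict majority: no tie-breaker needed.
  if [ "$members" -ge $(( total / 2 + 1 )) ]; then
    return 0
  fi
  # Exact 50/50 split: only the half holding the tie-breaker node survives.
  if [ $(( members * 2 )) -eq "$total" ]; then
    for id in "$@"; do
      if [ "$id" -eq "$tie_breaker" ]; then
        return 0
      fi
    done
  fi
  return 1
}
```

`atb_quorate 4 1 1 2` succeeds and `atb_quorate 4 1 3 4` fails, so on a 2/2 split only the half that holds node ID 1 keeps running.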

Counting votes (quorum) is the mechanism that prevents split-brain.

Cluster storage: in the lab, workstation acts as the cluster's SAN storage

[root@foundation0 ~]# cat /etc/hosts // add the hosts entries

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.0.10 nodea

172.25.0.11 nodeb

172.25.0.12 nodec

172.25.0.13 noded

172.25.0.9 workstation

[root@nodea ~]# yum install -y iscsi-initiator-utils

[root@nodea ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:60a2f05b9349

[root@nodeb ~]# yum install -y iscsi-initiator-utils

[root@nodeb ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:b3746a984c46

[root@nodec ~]# yum install -y iscsi-initiator-utils

[root@nodec ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:9937eabd1637

[root@workstation ~]# yum clean all && yum list all

[root@workstation ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

vdb 253:16 0 8G 0 disk

Partition vdb:

[root@workstation ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

vdb 253:16 0 8G 0 disk

└─vdb1 253:17 0 8G 0 part

[root@workstation ~]# yum install -y targetcli

[root@workstation ~]# systemctl start target

[root@workstation ~]# firewall-cmd --permanent --add-port=3260/tcp

[root@workstation ~]# firewall-cmd --reload

[root@workstation ~]# targetcli // configure iSCSI

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.fb37

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> /backstores/block create storage1 /dev/vdb1 // create a block backstore

Created block storage object storage1 using /dev/vdb1.

/> iscsi/ create iqn.2021-05.com.example.workstation // create the target IQN

Created target iqn.2021-05.com.example.workstation.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/luns create /backstores/block/storage1 // export the backstore through the target as a LUN

Created LUN 0.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/acls create iqn.1994-05.com.redhat:60a2f05b9349 // add an ACL for each initiator

Created Node ACL for iqn.1994-05.com.redhat:60a2f05b9349

Created mapped LUN 0.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/acls create iqn.1994-05.com.redhat:b3746a984c46

Created Node ACL for iqn.1994-05.com.redhat:b3746a984c46

Created mapped LUN 0.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/acls create iqn.1994-05.com.redhat:9937eabd1637

Created Node ACL for iqn.1994-05.com.redhat:9937eabd1637

Created mapped LUN 0.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/portals/ delete 0.0.0.0 3260 // delete the default portal, which listens on all addresses

Deleted network portal 0.0.0.0:3260

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/portals create ip_address=192.168.1.9 ip_port=3260

Using default IP port 3260

Created network portal 192.168.1.9:3260.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/portals create ip_address=192.168.2.9 ip_port=3260

Using default IP port 3260

Created network portal 192.168.2.9:3260.

/> ls

o- / ............................................................................................... [...]

o- backstores .................................................................................... [...]

| o- block ........................................................................ [Storage Objects: 1]

| | o- storage1 .............................................. [/dev/vdb1 (8.0GiB) write-thru activated]

| o- fileio ....................................................................... [Storage Objects: 0]

| o- pscsi ........................................................................ [Storage Objects: 0]

| o- ramdisk ...................................................................... [Storage Objects: 0]

o- iscsi .................................................................................. [Targets: 1]

| o- iqn.2021-05.com.example.workstation ..................................................... [TPGs: 1]

| o- tpg1 ..................................................................... [no-gen-acls, no-auth]

| o- acls ................................................................................ [ACLs: 3]

| | o- iqn.1994-05.com.redhat:60a2f05b9349 ........................................ [Mapped LUNs: 1]

| | | o- mapped_lun0 .................................................... [lun0 block/storage1 (rw)]

| | o- iqn.1994-05.com.redhat:9937eabd1637 ........................................ [Mapped LUNs: 1]

| | | o- mapped_lun0 .................................................... [lun0 block/storage1 (rw)]

| | o- iqn.1994-05.com.redhat:b3746a984c46 ........................................ [Mapped LUNs: 1]

| | o- mapped_lun0 .................................................... [lun0 block/storage1 (rw)]

| o- luns ................................................................................ [LUNs: 1]

| | o- lun0 ........................................................... [block/storage1 (/dev/vdb1)]

| o- portals .......................................................................... [Portals: 2]

| o- 192.168.1.9:3260 ....................................................................... [OK]

| o- 192.168.2.9:3260 ....................................................................... [OK]

o- loopback ............................................................................... [Targets: 0]

/> saveconfig

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

/> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

[root@workstation ~]# systemctl restart target
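Each node's ACL entry above was typed by hand from its initiatorname.iscsi file. The sketch below prints the same targetcli commands from those files instead. It assumes that targetcli accepts a path and a command as arguments for one-shot use, and that the target and TPG are the ones created above. `print_acl_cmds` is hypothetical.

```shell
#!/usr/bin/env bash
# Print (not run) targetcli commands that add one ACL per initiator.
# Input on stdin: the contents of the nodes' /etc/iscsi/initiatorname.iscsi
# files (lines of the form InitiatorName=iqn...).
# $1 is the target IQN created above.
print_acl_cmds() {
  local target_iqn=$1 iqn
  [ -n "$target_iqn" ] || return 1
  sed -n 's/^InitiatorName=//p' | while read -r iqn; do
    [ -n "$iqn" ] || continue
    printf 'targetcli /iscsi/%s/tpg1/acls create %s\n' "$target_iqn" "$iqn"
  done
}
```

For example, run `for n in nodea nodeb nodec; do ssh root@$n cat /etc/iscsi/initiatorname.iscsi; done | print_acl_cmds iqn.2021-05.com.example.workstation`. Then run the printed lines on workstation, followed by `targetcli saveconfig`.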

[root@nodea ~]# iscsiadm -m discovery -t st -p 192.168.1.9:3260

[root@nodea ~]# iscsiadm -m discovery -t st -p 192.168.2.9:3260

[root@nodea ~]# iscsiadm -m node -T iqn.2021-05.com.example.workstation -p 192.168.1.9 -l

[root@nodea ~]# iscsiadm -m node -T iqn.2021-05.com.example.workstation -p 192.168.2.9 -l // log in to the storage (on all nodes)

[root@nodea ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

sdb 8:16 0 8G 0 disk

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part // all three nodes are now connected to the storage

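[root@nodea ~]# iscsiadm -m node -u // log out for now; the session comes back at the next boot

[root@nodea ~]# iscsiadm -m node -o delete // delete the node records to disconnect permanently

Storage multipathing and path-selection algorithms

1. Round-robin: rotates I/O across the paths. It reduces the load on the host and suits paths that are otherwise idle.

2. Queue length: sends the next I/O down the path with the fewest outstanding I/Os

3. Service time: sends the next I/O down the path with the shortest estimated service time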
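The three policies differ only in how they pick the path for the next I/O. Below is a simplified bash model, not dm-multipath code. `select_path` and its input format are hypothetical. In this model, round-robin rotates on every call, whereas the real selector's switching interval is tunable, and service-time ignores the size of the incoming I/O. In /etc/multipath.conf the policy is set with `path_selector`, for example `"round-robin 0"`, `"queue-length 0"` or `"service-time 0"`.

```shell
#!/usr/bin/env bash
# Simplified model of the three dm-multipath path selectors.
# Input on stdin: one "path load throughput" line per path, where load is
# in-flight I/Os (queue-length) or in-flight bytes (service-time), and
# throughput is the path's relative throughput (defaults to 1).
# Usage: select_path POLICY [IO_COUNTER]
select_path() {
  local policy=$1 counter=${2:-0}
  local -a names=() load=() tput=()
  local n l t i best=0
  while read -r n l t; do
    names+=("$n"); load+=("$l"); tput+=("${t:-1}")
  done
  local count=${#names[@]}
  [ "$count" -gt 0 ] || return 1
  case $policy in
    round-robin)
      # Rotate through the paths in turn.
      best=$(( counter % count )) ;;
    queue-length)
      # Fewest outstanding I/Os wins.
      for (( i = 1; i < count; i++ )); do
        if (( load[i] < load[best] )); then best=$i; fi
      done ;;
    service-time)
      # Lowest load/throughput wins; cross-multiply to stay in integers.
      for (( i = 1; i < count; i++ )); do
        if (( load[i] * tput[best] < load[best] * tput[i] )); then best=$i; fi
      done ;;
    *) return 1 ;;
  esac
  printf '%s\n' "${names[best]}"
}
```

With 4 I/Os in flight on sda and 2 on sdb, `printf 'sda 4\nsdb 2\n' | select_path queue-length` prints `sdb`.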

[root@nodea ~]# yum install -y device-mapper-multipath // install Red Hat's multipath software on all nodes

[root@nodea ~]# mpathconf --enable

[root@nodea ~]# systemctl restart multipathd.service

[root@nodea ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

sdb 8:16 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

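[root@nodea ~]# fdisk /dev/mapper/mpatha // create a +5G partition

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0xc2f53f08.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p):

Using default response p

Partition number (1-4, default 1):

First sector (8192-16775167, default 8192): +5G

Last sector, +sectors or +size{K,M,G} (10485760-16775167, default 16775167):

Using default value 16775167

Partition 1 of type Linux and of size 3 GiB is set

Command (m for help): q

In this first attempt, +5G was entered at the First sector prompt by mistake. The result was a 3 GiB partition that starts 5 GiB into the disk, so quit with q without saving and run fdisk again: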

[root@nodea ~]# fdisk /dev/mapper/mpatha

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x11f3cd48.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p):

Using default response p

Partition number (1-4, default 1):

First sector (8192-16775167, default 8192):

Using default value 8192

Last sector, +sectors or +size{K,M,G} (8192-16775167, default 16775167): +5G

Partition 1 of type Linux and of size 5 GiB is set

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 22: Invalid argument.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

[root@nodea ~]# partprobe // make the kernel re-read the partition table so the new partition takes effect

[root@nodea ~]# fdisk -l

Disk /dev/vda: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x000272f4

Device Boot Start End Blocks Id System

/dev/vda1 * 2048 20970332 10484142+ 83 Linux

Disk /dev/sda: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/sda1 8192 10493951 5242880 83 Linux

Disk /dev/sdb: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/sdb1 8192 10493951 5242880 83 Linux

Disk /dev/mapper/mpatha: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/mapper/mpatha1 8192 10493951 5242880 83 Linux

Disk /dev/mapper/mpatha1: 5368 MB, 5368709120 bytes, 10485760 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

[root@nodea ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

└─mpatha1 252:1 0 5G 0 part

sdb 8:16 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

└─mpatha1 252:1 0 5G 0 part

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

[root@nodeb ~]# partprobe

[root@nodec ~]# partprobe

[root@nodea ~]# mkfs.ext4 /dev/mapper/mpatha1

[root@nodea ~]# mkdir /data

[root@nodea ~]# mount /dev/mapper/mpatha1 /data/

[root@nodeb ~]# mkdir /data

[root@nodeb ~]# mount /dev/mapper/mpatha1 /data/

[root@nodec ~]# mkdir /data

[root@nodec ~]# mount /dev/mapper/mpatha1 /data/

[root@nodea ~]# multipath -ll // show the multipath topology

May 10 01:32:06 | vda: No fc_host device for 'host-1'

May 10 01:32:06 | vda: No fc_host device for 'host-1'

May 10 01:32:06 | vda: No fc_remote_port device for 'rport--1:-1-0'

mpatha (36001405e3018df0669a4b6db8c91718b) dm-0 LIO-ORG ,storage1

size=8.0G features='0' hwhandler='0' wp=rw

|-+- policy='service-time 0' prio=1 status=active

| `- 2:0:0:0 sda 8:0 active ready running

`-+- policy='service-time 0' prio=1 status=enabled

`- 3:0:0:0 sdb 8:16 active ready running

[root@nodea ~]# ifdown eth2 // test whether multipathing works

Device 'eth2' successfully disconnected.

[root@nodea ~]# multipath -ll

May 10 01:36:03 | vda: No fc_host device for 'host-1'

May 10 01:36:03 | vda: No fc_host device for 'host-1'

May 10 01:36:03 | vda: No fc_remote_port device for 'rport--1:-1-0'

mpatha (36001405e3018df0669a4b6db8c91718b) dm-0 LIO-ORG ,storage1

size=8.0G features='0' hwhandler='0' wp=rw

|-+- policy='service-time 0' prio=1 status=active

| `- 2:0:0:0 sda 8:0 active ready running

`-+- policy='service-time 0' prio=1 status=enabled

`- 3:0:0:0 sdb 8:16 active undef running // this path is down

[root@nodea ~]# ifup eth2

[root@nodea ~]# multipath -ll

May 10 01:37:33 | vda: No fc_host device for 'host-1'

May 10 01:37:33 | vda: No fc_host device for 'host-1'

May 10 01:37:33 | vda: No fc_remote_port device for 'rport--1:-1-0'

mpatha (36001405e3018df0669a4b6db8c91718b) dm-0 LIO-ORG ,storage1

size=8.0G features='0' hwhandler='0' wp=rw

|-+- policy='service-time 0' prio=1 status=active

| `- 2:0:0:0 sda 8:0 active ready running

`-+- policy='service-time 0' prio=1 status=enabled

`- 3:0:0:0 sdb 8:16 active ready running // the path is back
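The eth2 test can also be checked from a script by counting the paths that `multipath -ll` reports as `active ready`. This minimal sketch assumes the path-line layout shown above (`H:C:T:L device major:minor dm-state path-state ...`). `path_summary` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Summarize `multipath -ll` output (stdin) as READY/TOTAL paths, so a
# check script can notice when a path drops out, as in the eth2 test.
# Assumption: path lines look like "2:0:0:0 sda 8:0 active ready running".
path_summary() {
  awk '
    / [a-z]+ [0-9]+:[0-9]+ +(active|failed) / {
      total++
      if ($0 ~ /active ready/) ok++
    }
    END { printf "%d/%d\n", ok, total }
  '
}
```

`multipath -ll 2>/dev/null | path_summary` prints `2/2` while both paths are healthy and `1/2` while eth2 is down, which makes it easy to hook into a monitoring check.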

[root@nodea ~]# man multipath.conf // help for the multipath configuration

[root@nodea ~]# vim /etc/multipath.conf // the multipath configuration file

[root@nodea ~]# cat /etc/multipath.conf

# This is a basic configuration file with some examples, for device mapper

# multipath.

#

# For a complete list of the default configuration values, run either

# multipath -t

# or

# multipathd show config

#

# For a list of configuration options with descriptions, see the multipath.conf

# man page

## By default, devices with vendor = "IBM" and product = "S/390.*" are

## blacklisted. To enable mulitpathing on these devies, uncomment the

## following lines.

#blacklist_exceptions { // devices exempted from the blacklist

# device {

# vendor "IBM"

# product "S/390.*"

# }

#}

## Use user friendly names, instead of using WWIDs as names.

defaults {

user_friendly_names yes

find_multipaths yes

}

##

## Here is an example of how to configure some standard options.

##

#

#defaults {

# udev_dir /dev

# polling_interval 10

# selector "round-robin 0"

# path_grouping_policy multibus

# uid_attribute ID_SERIAL

# prio alua

# path_checker readsector0

# rr_min_io 100

# max_fds 8192

# rr_weight priorities

# failback immediate

# no_path_retry fail

# user_friendly_names yes

#}

##

## The wwid line in the following blacklist section is shown as an example

## of how to blacklist devices by wwid. The 2 devnode lines are the

## compiled in default blacklist. If you want to blacklist entire types

## of devices, such as all scsi devices, you should use a devnode line.

## However, if you want to blacklist specific devices, you should use

## a wwid line. Since there is no guarantee that a specific device will

## not change names on reboot (from /dev/sda to /dev/sdb for example)

## devnode lines are not recommended for blacklisting specific devices.

##

#blacklist { // blacklist: devices listed here are not combined into multipath devices

# wwid 26353900f02796769

# devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"

# devnode "^hd[a-z]"

#}

multipaths {

# multipath {

# wwid 3600508b4000156d700012000000b0000

# alias yellow // alias

# path_grouping_policy multibus

# path_checker readsector0

# path_selector "round-robin 0" // round-robin

# failback manual

# rr_weight priorities

# no_path_retry 5

# } // template; the real configuration follows

multipath {

wwid 36001405e3018df0669a4b6db8c91718b // look up the LUN's WWID with /usr/lib/udev/scsi_id -g -u /dev/sda

alias storage // alias

}

}

#devices {

# device {

# vendor "COMPAQ "

# product "HSV110 (C)COMPAQ"

# path_grouping_policy multibus

# path_checker readsector0

# path_selector "round-robin 0"

# hardware_handler "0"

# failback 15

# rr_weight priorities

# no_path_retry queue

# }

# device {

# vendor "COMPAQ "

# product "MSA1000 "

# path_grouping_policy multibus

# }

#}

blacklist {

}

Precedence: multipaths > devices > defaults
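Instead of copying the WWID by hand, the alias stanza can be built from the WWID that `multipath -ll` already prints in each map header. This sketch only prints the stanza and assumes the header format shown earlier (`name (wwid) dm-N ...`). `mp_alias_stanza` is hypothetical. multipath.conf should contain a single `multipaths` section, so merge the output into the existing one rather than appending a second.

```shell
#!/usr/bin/env bash
# Build a multipaths stanza from live `multipath -ll` output (stdin):
# take the WWID from the first map header ("name (wwid) dm-N ...") and
# assign it the alias given as $1. Prints only; does not edit any file.
mp_alias_stanza() {
  local alias=$1 wwid
  [ -n "$alias" ] || return 1
  wwid=$(awk '$3 ~ /^dm-[0-9]+$/ { gsub(/[()]/, "", $2); print $2; exit }')
  [ -n "$wwid" ] || return 1
  printf 'multipaths {\n    multipath {\n        wwid  %s\n        alias %s\n    }\n}\n' "$wwid" "$alias"
}
```

`multipath -ll | mp_alias_stanza storage` prints a stanza equivalent to the one configured above.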

[root@nodea ~]# systemctl restart multipathd.service

[root@nodea ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─storage 252:0 0 8G 0 mpath // now shown under its alias

└─storage1 252:1 0 5G 0 part /data

sdb 8:16 0 8G 0 disk

└─storage 252:0 0 8G 0 mpath

└─storage1 252:1 0 5G 0 part /data

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

[root@nodea ~]# scp /etc/multipath.conf root@nodeb.private.example.com:/etc/

[root@nodea ~]# scp /etc/multipath.conf root@nodec.private.example.com:/etc/

[root@nodeb ~]# systemctl restart multipathd.service

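[root@nodec ~]# systemctl restart multipathd.service

udev rules: dynamic device management, keeping storage device names from changing

System udev rules live in /usr/lib/udev/rules.d/ by default.

Custom rules go in /etc/udev/rules.d.

Rule file naming: xx-yy.rules, where xx is a number and yy is the rule name. Rule files are processed in lexical order, so 99 is a good choice of xx for custom rules.

udev documentation: man 7 udev

[root@nodea ~]# udevadm info -a -p /block/sda // show the attributes the kernel reports for the disk (-a: all attributes, including those of parent devices; -p: device path), to find attributes you can match in a udev rule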

[root@foundation0 ~]# cat /etc/udev/rules.d/99-myusb.rules // a simple example udev rule

SUBSYSTEMS=="usb",ATTRS{serial}=="200605160855",SYMLINK+="myusb%n"

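// when the device is on the USB subsystem and its serial number is 200605160855, create a symlink named myusb; %n appends the kernel number (the partition number), so each partition gets its own unique name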

[root@nodea ~]# /usr/lib/udev/scsi_id -g -u /dev/sda

36001405e3018df0669a4b6db8c91718b

[root@nodea ~]# cat /etc/udev/rules.d/99-myscsi.rules

KERNEL=="sd*",PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/%k",RESULT=="36001405e3018df0669a4b6db8c91718b",SYMLINK+="myscsi%n"

//for kernel devices named sd*, run /usr/lib/udev/scsi_id on the device being processed (%k is its kernel name); if the result is 36001405e3018df0669a4b6db8c91718b, create the symlink myscsi
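A sketch of generating the same kind of rule for any WWID (hypothetical helper `udev_scsi_rule`, POSIX shell). It writes `--device=/dev/%k` so that scsi_id queries the device udev is currently processing, and `%n` appends the partition number:

```bash
# Hypothetical helper: print a udev rule giving a stable symlink to the SCSI
# disk whose scsi_id equals WWID. printf's %% emits a literal %, so the rule
# contains udev's own %k (kernel name) and %n (partition number) substitutions.
udev_scsi_rule() {
    local wwid="$1" link="$2"
    printf 'KERNEL=="sd*", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/%%k", RESULT=="%s", SYMLINK+="%s%%n"\n' \
        "$wwid" "$link"
}
```

For example, `udev_scsi_rule "$(/usr/lib/udev/scsi_id -g -u /dev/sda)" myscsi > /etc/udev/rules.d/99-myscsi.rules`, followed by `udevadm trigger --type=devices --action=change`.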

[root@nodea ~]# udevadm trigger --type=devices --action=change //make the udev rules take effect

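NIC names are also controlled by udev rules. On CentOS 7 the rule is /usr/lib/udev/rules.d/80-net-name-slot.rules; to change the name the system gives a NIC, edit this file

On CentOS 6 the rule is /etc/udev/rules.d/70-persistent-net.rules. When building a template image, delete this file; machines deployed from the template then regenerate the udev rule file automatically from their NICs' MAC addresses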

[root@nodea ~]# scp /etc/udev/rules.d/99-myscsi.rules root@nodeb.private.example.com:/etc/udev/rules.d/

[root@nodea ~]# scp /etc/udev/rules.d/99-myscsi.rules root@nodec.private.example.com:/etc/udev/rules.d/ //copy the udev rule to the other nodes

[root@nodeb ~]# udevadm trigger --type=devices --action=change

[root@nodec ~]# udevadm trigger --type=devices --action=change //make the udev rules take effect

[root@nodea ~]# ll /dev/myscsi

lrwxrwxrwx. 1 root root 3 May 10 06:35 /dev/myscsi -> sda //the udev rule has taken effect

Starting a cluster service requires three cluster resources

1.VIP

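2.Shared filesystem (shared disk)

3.Service

Resource groups: cluster resources are managed through resource groups. Starting with CentOS 7, cluster resources fall into three classes

LSB: the way Red Hat 6 manages services (init scripts)

OCF: open-source resource agents shipped with the system (Open Cluster Framework)

Systemd: the way Red Hat 7 manages services

Create a cluster resource group

[root@nodea ~]# pcs resource list //list the cluster resource agents available on the system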

[root@nodea ~]# mount /dev/mapper/storage1 /data/

[root@nodea ~]# chcon -R -t httpd_sys_content_t /data

[root@nodea ~]# umount /data/

[root@nodea ~]# yum install -y httpd //install httpd on all nodes

[root@nodea ~]# firewall-cmd --permanent --add-port=80/tcp

[root@nodea ~]# firewall-cmd --reload //apply these firewall rules on all nodes

[root@nodea ~]# pcs resource create myip IPaddr2 ip=172.25.0.80 cidr_netmask=24 --group myweb //create an IP cluster resource with address 172.25.0.80 and add it to resource group myweb

[root@nodea ~]# pcs resource show //show the resource groups

Resource Group: myweb

myip (ocf::heartbeat:IPaddr2): Started

[root@nodea ~]# pcs resource create myfs Filesystem device=/dev/mapper/storage1 directory=/var/www/html fstype=ext4 --group myweb //create a Filesystem cluster resource, specifying the device, mount point and filesystem type

[root@nodea ~]# pcs resource show

Resource Group: myweb

myip (ocf::heartbeat:IPaddr2): Started

myfs (ocf::heartbeat:Filesystem): Started

[root@nodea ~]# pcs resource create myhttpd systemd:httpd --group myweb //create a service cluster resource

[root@nodea ~]# pcs resource show

Resource Group: myweb

myip (ocf::heartbeat:IPaddr2): Started

myfs (ocf::heartbeat:Filesystem): Started

myhttpd (systemd:httpd): Started
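The three commands above can be wrapped in a small script so the same group can be rebuilt on another cluster. A minimal sketch with hypothetical names (`run`, `create_web_group`); with the default DRY_RUN=1 it only prints the pcs commands, and setting DRY_RUN=0 runs them. Order matters: members of a group start in the order they are added and stop in reverse order.

```bash
# Hypothetical wrapper: print the command when DRY_RUN=1 (the default),
# execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Create VIP -> filesystem -> service in one group, in start order.
create_web_group() {
    local group="$1" vip="$2" dev="$3" fstype="$4"
    run pcs resource create myip IPaddr2 ip="$vip" cidr_netmask=24 --group "$group"
    run pcs resource create myfs Filesystem device="$dev" directory=/var/www/html fstype="$fstype" --group "$group"
    run pcs resource create myhttpd systemd:httpd --group "$group"
}
```

Running `create_web_group myweb 172.25.0.80 /dev/mapper/storage1 ext4` first prints the three commands for review.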

[root@nodea ~]# pcs status

Cluster name: cluster0

WARNING: no stonith devices and stonith-enabled is not false

Last updated: Mon May 10 11:31:10 2021

Last change: Mon May 10 11:24:52 2021

Stack: corosync

Current DC: nodec.private.example.com (3) - partition with quorum

Version: 1.1.12-a14efad

3 Nodes configured

3 Resources configured

Online: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

Full list of resources:

fence_nodea (stonith:fence_xvm): Started nodea.private.example.com

fence_nodeb (stonith:fence_xvm): Started nodeb.private.example.com

fence_nodec (stonith:fence_xvm): Started nodec.private.example.com

Resource Group: myweb

myip (ocf::heartbeat:IPaddr2): Started nodea.private.example.com

myfs (ocf::heartbeat:Filesystem): Started nodea.private.example.com

myhttpd (systemd:httpd): Started nodea.private.example.com

PCSD Status:

nodea.private.example.com: Online

nodeb.private.example.com: Online

nodec.private.example.com: Online

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[root@nodea ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─storage 252:0 0 8G 0 mpath

└─storage1 252:1 0 5G 0 part /var/www/html

sdb 8:16 0 8G 0 disk

└─storage 252:0 0 8G 0 mpath

└─storage1 252:1 0 5G 0 part /var/www/html

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

[root@nodea ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:00:00:0a brd ff:ff:ff:ff:ff:ff

inet 172.25.0.10/24 brd 172.25.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 172.25.0.80/24 brd 172.25.0.255 scope global secondary eth0 //floating IP

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe00:a/64 scope link

valid_lft forever preferred_lft forever

[root@nodea ~]# systemctl status httpd

[root@nodea ~]# pcs resource show myfs //show the details of a cluster resource

Resource: myfs (class=ocf provider=heartbeat type=Filesystem)

Attributes: device=/dev/mapper/storage1 directory=/var/www/html fstype=ext4

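Operations: start interval=0s timeout=60 (myfs-start-timeout-60) //interval=0s: start runs once and is not a recurring check; timeout=60: if the resource has not started within 60s, the start counts as failed and is handled according to on-fail (restart or move)

stop interval=0s timeout=60 (myfs-stop-timeout-60) //timeout=60: the stop times out if the resource has not stopped within 60s

monitor interval=20 timeout=40 (myfs-monitor-interval-20) //recurring health check every 20s; the default on-fail=restart restarts the resource when the check fails

[root@nodea ~]# pcs resource update myfs device=/dev/mapper/storage2 //modify a cluster resource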

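What the cluster can monitor

1. Cluster resources: by default, when a resource fails the cluster forces a restart; if the restart fails, the resource is moved to another node

2. The heartbeat network: the network specified when the cluster was created is the heartbeat network; a node with problems on it is fenced

The cluster cannot monitor storage links; only multipathing monitors storage paths, and storage faults must be fixed manually

Handling resource failures

on-fail=restart //a failed resource is stopped and started again; if the restart does not help, the resource is moved

[root@nodea ~]# pcs resource update myfs op monitor on-fail=fence //change this resource's on-fail to fence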

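Managing resource groups

[root@nodea ~]# pcs resource disable myweb //stop the resource group

[root@nodea ~]# pcs resource enable myweb //start the resource group

[root@nodea ~]# pcs resource move myweb nodea.private.example.com //move the group to nodea. This gives nodea the highest preference (+INFINITY): even if the service is running on another node, it moves back to nodea whenever nodea is healthy

[root@nodea ~]# pcs resource move myweb //with no target node, the group is banned from the node it currently runs on (-INFINITY): even when that node is healthy, the service will not move back to it

[root@nodea ~]# pcs constraint //view the constraints (node preference scores)

[root@nodea ~]# pcs constraint remove id //remove a constraint by its ID

[root@nodea ~]# pcs constraint location myweb prefers nodea.private.example.com=200 //manually set nodea's score to 200

[root@nodea ~]# pcs constraint location myweb prefers nodeb.private.example.com=300 //manually set nodeb's score to 300

[root@nodea ~]# pcs constraint location myweb prefers nodec.private.example.com=400 //manually set nodec's score to 400. If this node fails, the service runs on nodeb (the next-highest score); once nodec is healthy again, the service moves back to it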

[root@nodea ~]# pcs constraint

Location Constraints:

Resource: myweb

Enabled on: nodea.private.example.com (score:200)

Enabled on: nodeb.private.example.com (score:300)

Enabled on: nodec.private.example.com (score:400)

Ordering Constraints:

Colocation Constraints:
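A rough model of how these scores pick a node, in POSIX shell (hypothetical `pick_node`). It assumes numeric scores only (no INFINITY), resource-stickiness 0 and no other constraints, so it only illustrates the rule "the highest score among online nodes wins", not Pacemaker's full placement logic:

```bash
# Simplified placement model: print the online node with the highest score.
# usage: pick_node "down-node ..." node=score [node=score ...]
pick_node() {
    local down=" $1 " best="" best_score="" pair node score
    shift
    for pair in "$@"; do
        node=${pair%%=*}
        score=${pair#*=}
        case $down in
            *" $node "*) continue ;;   # skip nodes that are down
        esac
        if [ -z "$best" ] || [ "$score" -gt "$best_score" ]; then
            best=$node
            best_score=$score
        fi
    done
    [ -n "$best" ] && printf '%s\n' "$best"
}
```

With the scores above, `pick_node nodec nodea=200 nodeb=300 nodec=400` prints nodeb, matching the behavior described for a nodec failure.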

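[root@nodea ~]# pcs resource clear myweb //clear the temporary constraints created by move

Fine-grained control of cluster resources

Order: controls the start order of cluster resources

Location: defines which nodes a cluster resource runs on

Colocation: two cluster resources must run on the same node, e.g. for a cluster filesystem

[root@nodea ~]# pcs constraint order myip then myfs //start myip first, then myfs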

Adding myip myfs (kind: Mandatory) (Options: first-action=start then-action=start)

[root@nodea ~]# pcs constraint order myfs then myhttpd //start myfs first, then myhttpd

Adding myfs myhttpd (kind: Mandatory) (Options: first-action=start then-action=start)

[root@nodea ~]# pcs constraint

Location Constraints:

Resource: myweb

Enabled on: nodea.private.example.com (score:200)

Enabled on: nodeb.private.example.com (score:300)

Enabled on: nodec.private.example.com (score:400)

Ordering Constraints:

start myip then start myfs (kind:Mandatory)

start myfs then start myhttpd (kind:Mandatory) //resource start order: myip ----> myfs ----> myhttpd

Colocation Constraints:

[root@nodea ~]# pcs constraint colocation add myip with myfs //myip must run on the same node as myfs

[root@nodea ~]# pcs constraint --full

Location Constraints:

Resource: myweb

Enabled on: nodea.private.example.com (score:200) (id:location-myweb-nodea.private.example.com-200)

Enabled on: nodeb.private.example.com (score:300) (id:location-myweb-nodeb.private.example.com-300)

Enabled on: nodec.private.example.com (score:400) (id:location-myweb-nodec.private.example.com-400)

Ordering Constraints:

start myip then start myfs (kind:Mandatory) (id:order-myip-myfs-mandatory)

start myfs then start myhttpd (kind:Mandatory) (id:order-myfs-myhttpd-mandatory)

Colocation Constraints:

myip with myfs (score:INFINITY) (id:colocation-myip-myfs-INFINITY)
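The IDs printed by `pcs constraint --full` are what `pcs constraint remove` expects. A small sketch (hypothetical `constraint_ids`) that extracts them from that output on stdin, one per line:

```bash
# Print the constraint IDs found in "pcs constraint --full" output (stdin).
# Each constraint line ends with "(id:...)"; lines without an ID are skipped.
constraint_ids() {
    sed -n 's/.*(id:\([^)]*\)).*/\1/p'
}
```

For example, `pcs constraint --full | constraint_ids | grep '^colocation-' | while read -r id; do pcs constraint remove "$id"; done` removes every colocation constraint; review the list before piping it into remove.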

[root@nodea ~]# pcs constraint remove colocation-myip-myfs-INFINITY //remove the constraint

Cluster logical volumes: Cluster LVM (clvmd)

HA LVM: use this when the filesystem is a single-node filesystem such as ext4 or xfs

[root@workstation ~]# lab lvm setup

[root@nodea ~]# pvcreate /dev/mapper/storage1

[root@nodea ~]# vgcreate clustervg /dev/mapper/storage1

[root@nodea ~]# lvcreate -L 2G -n data clustervg

[root@nodea ~]# lvdisplay

--- Logical volume ---

LV Path /dev/clustervg/data

LV Name data

VG Name clustervg

LV UUID 817z3s-3hOt-Zj0d-S6cp-YMjv-sJJf-ho5gej

LV Write Access read/write

LV Creation host, time nodea.cluster0.example.com, 2021-05-21 01:21:38 -0400

LV Status available

# open 0

LV Size 2.00 GiB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 252:2

[root@nodea ~]# fdisk -l

Disk /dev/vda: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x000272f4

Device Boot Start End Blocks Id System

/dev/vda1 * 2048 20970332 10484142+ 83 Linux

Disk /dev/sda: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/sda1 8192 10493951 5242880 83 Linux

Disk /dev/mapper/storage: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/mapper/storage1 8192 10493951 5242880 83 Linux

Disk /dev/sdb: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/sdb1 8192 10493951 5242880 83 Linux

Disk /dev/mapper/storage1: 5368 MB, 5368709120 bytes, 10485760 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk /dev/mapper/clustervg-data: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

[root@nodea ~]#

[root@nodeb ~]# lvdisplay

[root@nodeb ~]# vgdisplay

[root@nodeb ~]# pvdisplay

[root@nodeb ~]# pvscan

No matching physical volumes found

[root@nodeb ~]# vgscan

Reading all physical volumes. This may take a while...

[root@nodeb ~]# fdisk -l

Disk /dev/vda: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x000272f4

Device Boot Start End Blocks Id System

/dev/vda1 * 2048 20970332 10484142+ 83 Linux

Disk /dev/sdb: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/sdb1 8192 10493951 5242880 83 Linux

Disk /dev/sda: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/sda1 8192 10493951 5242880 83 Linux

Disk /dev/mapper/storage: 8588 MB, 8588886016 bytes, 16775168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0x11f3cd48

Device Boot Start End Blocks Id System

/dev/mapper/storage1 8192 10493951 5242880 83 Linux

Disk /dev/mapper/storage1: 5368 MB, 5368709120 bytes, 10485760 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

[root@nodeb ~]#

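The LVM metadata change is only recorded on the node where it was made; the other cluster nodes cannot see the new logical volume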

[root@nodea ~]# mkfs.xfs /dev/clustervg/data

[root@nodea ~]# partprobe

[root@nodeb ~]# partprobe

[root@nodec ~]# partprobe

[root@nodea ~]# cat /etc/lvm/lvm.conf | grep locking_type

# to use it instead of the configured locking_type. Do not use lvmetad or

# supported in clustered environment. If use_lvmetad=1 and locking_type=3

locking_type = 1 //1 = local (single-node) locking, 3 = clustered locking

# NB. This option only affects locking_type = 1 viz. local file-based

# The external locking library to load if locking_type is set to 2.

# supported in clustered environment. If use_lvmetad=1 and locking_type=3

[root@nodea ~]# cat /etc/lvm/lvm.conf | grep volume_list

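volume_list= [] //volume groups listed here are activated at boot. Do not list the cluster volume group here: it should be activated by the cluster, not by the system at boot

[root@nodea ~]# dracut -H -f /boot/initramfs-$(uname -r).img $(uname -r); reboot //rebuild the initramfs for the running kernel so that it contains the updated /etc/lvm/lvm.conf; at boot, volume groups not listed in volume_list are then not activated

Perform the two steps above on all nodes

[root@nodea ~]# pcs resource create halvm LVM volgrpname=clustervg exclusive=true --group halvmfs //create a cluster resource named halvm for volume group clustervg; exclusive=true: the VG is activated on only one node at a time

[root@nodea ~]# pcs resource create xfsfs Filesystem device="/dev/clustervg/clusterlv" directory="/mnt" fstype="xfs" --group halvmfs //create a Filesystem resource xfsfs: device /dev/clustervg/clusterlv, mount point /mnt, filesystem type xfs, added to group halvmfs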

[root@nodea ~]# pcs status

[root@nodea ~]# lvremove /dev/clustervg/data

[root@nodea ~]# vgremove clustervg

[root@nodea ~]# pvremove /dev/mapper/storage1

[root@nodea ~]# pcs resource delete

clvmd: use this when the filesystem is a cluster filesystem such as gfs2 or VIMS

[root@nodea ~]# cat /etc/lvm/lvm.conf | grep locking_type

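locking_type = 3 //1 = local locking, 3 = clustered locking

use_lvmetad = 0 //1 = on, 0 = off. On a single host, lvmetad caches LVM metadata to improve performance; in a cluster it is turned off because the metadata is shared between nodes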
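To check these two settings on each node, a sketch that reads an lvm.conf-style file and ignores commented lines (hypothetical helpers `lvm_setting` and `check_clvm_conf`; it assumes the values are plain integers, as shown above):

```bash
# Print the last uncommented integer value of KEY in an lvm.conf-style file.
lvm_setting() {
    local file="$1" key="$2"
    sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p" "$file" | tail -n 1
}

# Succeed only if the file is set up for clvmd: locking_type = 3, use_lvmetad = 0.
check_clvm_conf() {
    local file="${1:-/etc/lvm/lvm.conf}"
    [ "$(lvm_setting "$file" locking_type)" = 3 ] &&
        [ "$(lvm_setting "$file" use_lvmetad)" = 0 ]
}
```

For example, `check_clvm_conf && echo "lvm.conf ready for clvmd"` on each node after running lvmconf --enable-cluster.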

[root@nodea ~]# lvmconf --enable-cluster //enable clustered logical volumes

[root@nodea ~]# systemctl stop lvm2-lvmetad //stop this service

[root@nodea ~]# pcs resource create mydlm controld op monitor interval=30s on-fail=fence clone interleave=true ordered=true //create a cluster resource named mydlm (agent controld), monitored every 30s; if it fails, the node is fenced. clone lets it run on several nodes at once

[root@nodea ~]# pcs resource create myclvmd clvm op monitor interval=30s on-fail=fence clone interleave=true ordered=true //create a cluster resource named myclvmd (agent clvm), monitored every 30s; if it fails, the node is fenced. clone lets it run on several nodes at once

[root@nodea ~]# pcs constraint order start mydlm-clone then myclvmd-clone //start mydlm-clone first, then myclvmd-clone

[root@nodea ~]# pcs constraint colocation add myclvmd-clone with mydlm-clone //the two must run on the same node

[root@nodea ~]# yum install -y dlm lvm2-cluster

[root@nodea ~]# pcs property set no-quorum-policy=freeze //global property. The default is stop: when the cluster loses quorum, all resources are stopped. freeze keeps resources that are already running, but starts or recovers nothing until quorum is regained

[root@nodea ~]# lvmconf --enable-cluster //set the LVM locking type to 3

[root@nodea ~]# systemctl stop lvm2-lvmetad //stop this service

[root@nodea ~]# pcs resource create dlm controld op monitor interval=30s on-fail=fence clone interleave=true ordered=true //create a cluster resource named dlm (agent controld), monitored every 30s; if it fails, the node is fenced. clone lets it run on several nodes at once

[root@nodea ~]# pcs resource create clvmd clvm op monitor interval=30s on-fail=fence clone interleave=true ordered=true //create a cluster resource named clvmd (agent clvm), monitored every 30s; if it fails, the node is fenced. clone lets it run on several nodes at once

[root@nodea ~]# pcs constraint order start dlm-clone then clvmd-clone //start dlm-clone first, then clvmd-clone

[root@nodea ~]# pcs constraint colocation add clvmd-clone with dlm-clone //the two must run on the same node

[root@nodea ~]# pvcreate /dev/mapper/storage1

[root@nodea ~]# vgcreate myvg /dev/mapper/storage1

[root@nodea ~]# lvcreate -L 1G -n mylv myvg

Cluster filesystems: provide locking to keep the filesystem consistent

VMware-Vsphere:VMFS5

Huawei-FusionComputer:VIMS

RedHat:GFS2

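[root@nodea ~]# yum install -y gfs2-utils //on all nodes

[root@nodea ~]# mkfs.gfs2 -p lock_dlm -t cluster0:data -j 4 -J 64 /dev/vg0/data //-p lock_dlm: use DLM locking; -t cluster0:data: the lock table, where cluster0 is the cluster name (a wrong name makes the mount fail) and data is the filesystem name (any name); -j 4: 4 journals, usually number of nodes + 1; -J 64: each journal is 64 MB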
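The same command can be built from the cluster name in corosync.conf, which avoids a lock-table typo. A sketch with hypothetical helpers (`cluster_name_from`, `gfs2_mkfs_cmd`); it applies the rule of thumb above (journals = nodes + 1), keeps -J 64, and only prints the command:

```bash
# Read cluster_name from a corosync.conf-style file.
cluster_name_from() {
    awk '$1 == "cluster_name:" { print $2; exit }' "${1:-/etc/corosync/corosync.conf}"
}

# Print (not run) an mkfs.gfs2 command with nodes + 1 journals of 64 MB.
gfs2_mkfs_cmd() {
    local cluster="$1" fsname="$2" nodes="$3" dev="$4"
    printf 'mkfs.gfs2 -p lock_dlm -t %s:%s -j %d -J 64 %s\n' \
        "$cluster" "$fsname" "$((nodes + 1))" "$dev"
}
```

For example, `gfs2_mkfs_cmd "$(cluster_name_from)" data 3 /dev/vg0/data` prints the command for review before it is run.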

[root@nodea ~]# mount /dev/vg0/data /mnt/gfs2

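System crash: recovering data

[root@nodea ~]# mount -o lockproto=lock_nolock /dev/vg0/data /mnt/gfs2 //mount with local (single-node) locking: when the cluster is broken and the filesystem cannot be mounted normally, use this to recover the data (only while no other node has the filesystem mounted)

Create the cluster resource

[root@nodea ~]# pcs resource create mygfs2 Filesystem device=/dev/vg0/data directory=/mnt/data fstype=gfs2 op monitor interval=30s on-fail=fence clone interleave=true ordered=true

The filesystem is mounted automatically on all three nodes; test by writing data from all three nodes at the same time to confirm the filesystem stays consistent

Growing GFS2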

[root@nodea ~]# lvextend -L +1G /dev/vg0/data

[root@nodea ~]# gfs2_grow /dev/vg0/data

Viewing journal information

[root@nodea ~]# gfs2_edit -p jindex /dev/vg0/data

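[root@nodea ~]# gfs2_jadd -j 1 /dev/vg0/data //add one journal to the filesystem

Repairing a GFS2 filesystem

[root@nodea ~]# fsck.gfs2 //the filesystem must be unmounted on all nodes first

[root@nodea ~]# tunegfs2 -l /dev/vg0/data //show the GFS2 superblock (metadata)

[root@nodea ~]# tunegfs2 -o locktable=cluster1:data /dev/vg0/data //change the lock table in the GFS2 superblock so that another cluster, cluster1, can use the filesystem

To maintain the cluster, use the hosts file; DNS is not recommended

RH436 exam walkthrough

1. Create the cluster

Create the cluster clusterx with the three nodes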

[root@nodea ~]# yum install -y pcs fence-agents-all && systemctl enable pcsd.service && systemctl start pcsd.service

[root@nodeb ~]# yum install -y pcs fence-agents-all && systemctl enable pcsd.service && systemctl start pcsd.service

[root@nodec ~]# yum install -y pcs fence-agents-all && systemctl enable pcsd.service && systemctl start pcsd.service

[root@workstation ~]# yum install -y targetcli

[root@workstation ~]# systemctl enable target && systemctl start target

ln -s '/usr/lib/systemd/system/target.service' '/etc/systemd/system/multi-user.target.wants/target.service'

[root@workstation ~]# firewall-cmd --permanent --add-port=3260/tcp && firewall-cmd --reload

success

success

[root@workstation ~]#

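[root@nodea ~]# echo q1! | passwd --stdin hacluster //in the exam, use the password you are given

Changing password for user hacluster.

passwd: all authentication tokens updated successfully.

[root@nodeb ~]# echo q1! | passwd --stdin hacluster //in the exam, use the password you are given

Changing password for user hacluster.

passwd: all authentication tokens updated successfully.

[root@nodec ~]# echo q1! | passwd --stdin hacluster //in the exam, use the password you are given

Changing password for user hacluster.

passwd: all authentication tokens updated successfully.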

[root@nodea ~]# firewall-cmd --permanent --add-service=high-availability && firewall-cmd --reload

success

success

[root@nodeb ~]# firewall-cmd --permanent --add-service=high-availability && firewall-cmd --reload

success

success

[root@nodec ~]# firewall-cmd --permanent --add-service=high-availability && firewall-cmd --reload

success

success

[root@nodea ~]# pcs cluster auth nodea.private.example.com nodeb.private.example.com nodec.private.example.com -u hacluster -p q1!

nodea.private.example.com: Authorized

nodeb.private.example.com: Authorized

nodec.private.example.com: Authorized

[root@nodea ~]# pcs cluster setup --name cluster1 nodea.private.example.com nodeb.private.example.com nodec.private.example.com

Shutting down pacemaker/corosync services...

Redirecting to /bin/systemctl stop pacemaker.service

Redirecting to /bin/systemctl stop corosync.service

Killing any remaining services...

Removing all cluster configuration files...

nodea.private.example.com: Succeeded

nodeb.private.example.com: Succeeded

nodec.private.example.com: Succeeded

[root@nodea ~]# pcs cluster enable --all && pcs cluster start --all

nodea.private.example.com: Cluster Enabled

nodeb.private.example.com: Cluster Enabled

nodec.private.example.com: Cluster Enabled

nodeb.private.example.com: Starting Cluster...

nodea.private.example.com: Starting Cluster...

nodec.private.example.com: Starting Cluster...

[root@nodea ~]# pcs status

Cluster name: cluster1

WARNING: no stonith devices and stonith-enabled is not false

Last updated: Fri May 21 12:13:36 2021

Last change: Fri May 21 12:13:31 2021

Stack: corosync

Current DC: nodeb.private.example.com (2) - partition with quorum

Version: 1.1.12-a14efad

3 Nodes configured

0 Resources configured

Online: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

Full list of resources:

PCSD Status:

nodea.private.example.com: Online

nodeb.private.example.com: Online

nodec.private.example.com: Online

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

2. Configure fencing

[root@nodea ~]# firewall-cmd --permanent --add-port=1229/tcp && firewall-cmd --permanent --add-port=1229/udp && firewall-cmd --reload && mkdir /etc/cluster

success

success

success

[root@nodeb ~]# firewall-cmd --permanent --add-port=1229/tcp && firewall-cmd --permanent --add-port=1229/udp && firewall-cmd --reload && mkdir /etc/cluster

success

success

success

[root@nodec ~]# firewall-cmd --permanent --add-port=1229/tcp && firewall-cmd --permanent --add-port=1229/udp && firewall-cmd --reload && mkdir /etc/cluster

success

success

success

{

[root@foundation0 ~]# yum install -y fence-virtd fence-virtd-libvirt fence-virtd-multicast //install the fence software

[root@foundation0 ~]# mkdir -p /etc/cluster //the key is stored in this directory by default

[root@foundation0 ~]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=1k count=4 //generate the key

4+0 records in

4+0 records out

4096 bytes (4.1 kB) copied, 0.00353812 s, 1.2 MB/s

} //not needed in the exam

[root@foundation0 ~]# scp /etc/cluster/fence_xvm.key root@nodea:/etc/cluster/

[root@foundation0 ~]# scp /etc/cluster/fence_xvm.key root@nodeb:/etc/cluster/

[root@foundation0 ~]# scp /etc/cluster/fence_xvm.key root@nodec:/etc/cluster/

[root@nodea ~]# pcs stonith create fence_nodea fence_xvm port="nodea" pcmk_host_list="nodea.private.example.com"

[root@nodea ~]# pcs stonith create fence_nodeb fence_xvm port="nodeb" pcmk_host_list="nodeb.private.example.com"

[root@nodea ~]# pcs stonith create fence_nodec fence_xvm port="nodec" pcmk_host_list="nodec.private.example.com"

[root@nodea ~]# pcs stonith show

fence_nodea (stonith:fence_xvm): Started

fence_nodeb (stonith:fence_xvm): Started

fence_nodec (stonith:fence_xvm): Started
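The three stonith commands above follow one pattern, so they can be generated per node. A sketch (hypothetical `create_xvm_fences`), assuming, as above, that the libvirt domain name is the short host name and the cluster node name is `<host>.private.example.com`; with DRY_RUN=1 (the default) it only prints the commands:

```bash
# Create (or, with DRY_RUN=1, print) one fence_xvm device per node.
# usage: create_xvm_fences DOMAIN host1 [host2 ...]
create_xvm_fences() {
    local domain="$1" n
    shift
    for n in "$@"; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            printf 'pcs stonith create fence_%s fence_xvm port=%s pcmk_host_list=%s.%s\n' \
                "$n" "$n" "$n" "$domain"
        else
            pcs stonith create "fence_$n" fence_xvm "port=$n" "pcmk_host_list=$n.$domain"
        fi
    done
}
```

For example, `create_xvm_fences private.example.com nodea nodeb nodec` prints the three commands used above.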

[root@nodea ~]# pcs stonith fence nodec.private.example.com //test fencing

3. Configure the log file

[root@nodea ~]# cat /etc/corosync/corosync.conf //configure on all nodes

totem {

version: 2

secauth: off

cluster_name: cluster1

transport: udpu

}

nodelist {

node {

ring0_addr: nodea.private.example.com

nodeid: 1

}

node {

ring0_addr: nodeb.private.example.com

nodeid: 2

}

node {

ring0_addr: nodec.private.example.com

nodeid: 3

}

}

quorum {

provider: corosync_votequorum

}

logging {

to_syslog: yes

to_file: yes

logfile: /var/log/EX436-nodea.log //add these two lines (to_file and logfile); each node uses its own host name in the file name

}

[root@nodea ~]# pcs cluster reload corosync
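Because each node writes to its own file, the logging block differs per node. A sketch (hypothetical `add_corosync_logfile`) that prints a corosync.conf-style file with `to_file` and a per-node `logfile` added before the closing brace of the logging block; it assumes the block does not already contain those keys:

```bash
# Print FILE with "to_file: yes" and "logfile: /var/log/EX436-NODE.log"
# inserted just before the "}" that closes the logging { } block.
add_corosync_logfile() {
    local file="$1" node="$2"
    awk -v logfile="/var/log/EX436-${node}.log" '
        $1 == "logging" && $2 == "{" { in_log = 1 }
        in_log && $1 == "}" {
            print "to_file: yes"
            print "logfile: " logfile
            in_log = 0
        }
        { print }
    ' "$file"
}
```

For example, `add_corosync_logfile /etc/corosync/corosync.conf "$(hostname -s)" > /tmp/corosync.conf.new`; check the result, copy it over the original and run `pcs cluster reload corosync`.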

[root@nodea ~]# ll /var/log/EX436-nodea.log

-rw-r--r--. 1 root root 0 May 21 23:49 /var/log/EX436-nodea.log

[root@nodeb ~]# ll /var/log/EX436-nodeb.log

-rw-r--r--. 1 root root 0 May 21 23:49 /var/log/EX436-nodeb.log

[root@nodec ~]# ll /var/log/EX436-nodec.log

-rw-r--r--. 1 root root 0 May 21 23:49 /var/log/EX436-nodec.log

4. Configure monitoring

[root@workstation ~]# vim /etc/postfix/main.cf

#inet_interfaces = localhost //comment out this line

[root@workstation ~]# service postfix restart

[root@workstation ~]# firewall-cmd --permanent --add-service=smtp && firewall-cmd --reload

success

success
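The main.cf edit can be scripted as well. A sketch (hypothetical `open_postfix_interfaces`) that comments out an active `inet_interfaces = localhost` line; Postfix then uses its default, which listens on all interfaces. Writing back through `cat` keeps the file's owner, mode and SELinux label:

```bash
# Comment out an active "inet_interfaces = localhost" line in a
# postfix main.cf-style file, editing it in place.
open_postfix_interfaces() {
    local file="${1:-/etc/postfix/main.cf}" tmp
    tmp=$(mktemp) || return 1
    if sed 's/^\(inet_interfaces[[:space:]]*=[[:space:]]*localhost[[:space:]]*\)$/#\1/' \
           "$file" > "$tmp"; then
        cat "$tmp" > "$file"
    fi
    rm -f "$tmp"
}
```

Alternatively, `postconf -e 'inet_interfaces = all'` sets the value directly; either way, restart postfix afterwards.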

[root@nodea ~]# pcs resource create clustermonitor MailTo email="[email protected]" subject="mail send" --group myweb

[root@nodea ~]# pcs resource show

Resource Group: myweb

clustermonitor (ocf::heartbeat:MailTo): Started

5. Configure the http service and IP

[root@nodea ~]# yum install -y httpd

[root@nodea ~]# firewall-cmd --permanent --add-port=80/tcp && firewall-cmd --reload

success

success

[root@nodeb ~]# yum install -y httpd

[root@nodeb ~]# firewall-cmd --permanent --add-port=80/tcp && firewall-cmd --reload

success

success

[root@nodec ~]# yum install -y httpd

[root@nodec ~]# firewall-cmd --permanent --add-port=80/tcp && firewall-cmd --reload

success

success

[root@nodea ~]# pcs resource create myip IPaddr2 ip=172.25.0.80 cidr_netmask=24 --group myweb

[root@nodea ~]# pcs resource create myservice systemd:httpd --group myweb

[root@nodea ~]# pcs resource show

Resource Group: myweb

clustermonitor (ocf::heartbeat:MailTo): Started

myip (ocf::heartbeat:IPaddr2): Started

myservice (systemd:httpd): Started

6. Configure cluster behavior

[root@nodea ~]# pcs constraint location myweb prefers nodea.private.example.com

[root@nodea ~]# pcs constraint location myweb avoids nodec.private.example.com

[root@nodea ~]# pcs constraint

Location Constraints:

Resource: myweb

Enabled on: nodea.private.example.com (score:INFINITY) //+INFINITY

Disabled on: nodec.private.example.com (score:-INFINITY) //-INFINITY

Ordering Constraints:

Colocation Constraints:

7. Configure iSCSI storage

[root@nodea ~]# yum install -y iscsi-initiator-utils && cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:abf23b82d8b5

[root@nodeb ~]# yum install -y iscsi-initiator-utils && cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:eedae1d04225

[root@nodec ~]# yum install -y iscsi-initiator-utils && cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:8e7297557ba

[root@workstation ~]# fdisk /dev/vdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x53d10e7d.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p):

Using default response p

Partition number (1-4, default 1):

First sector (2048-16777215, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-16777215, default 16777215):

Using default value 16777215

Partition 1 of type Linux and of size 8 GiB is set

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@workstation ~]# targetcli

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.fb37

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> /backstores/block create storage /dev/vdb1

Created block storage object storage using /dev/vdb1.

/> /iscsi create iqn.2021-05.com.example.workstation

Created target iqn.2021-05.com.example.workstation.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/luns create /backstores/block/storage

Created LUN 0.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/acls create iqn.1994-05.com.redhat:abf23b82d8b5

Created Node ACL for iqn.1994-05.com.redhat:abf23b82d8b5

Created mapped LUN 0.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/acls create iqn.1994-05.com.redhat:eedae1d04225

Created Node ACL for iqn.1994-05.com.redhat:eedae1d04225

Created mapped LUN 0.

/> /iscsi/iqn.2021-05.com.example.workstation/tpg1/acls create iqn.1994-05.com.redhat:8e7297557ba

Created Node ACL for iqn.1994-05.com.redhat:8e7297557ba

Created mapped LUN 0.

/> saveconfig

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

/> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json
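The ACL entries differ only in the initiator IQN, so they can be generated from the values shown by `cat /etc/iscsi/initiatorname.iscsi` on each node. A sketch (hypothetical `target_acl_cmds`) that prints one non-interactive targetcli command per initiator, for TPG 1 as created above:

```bash
# Print one "targetcli ... acls create" command per initiator IQN.
# usage: target_acl_cmds TARGET_IQN INITIATOR_IQN [INITIATOR_IQN ...]
target_acl_cmds() {
    local target="$1" iqn
    shift
    for iqn in "$@"; do
        printf 'targetcli /iscsi/%s/tpg1/acls create %s\n' "$target" "$iqn"
    done
}
```

After reviewing the printed lines they can be run one by one, followed by `targetcli saveconfig`.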

[root@workstation ~]# systemctl restart target.service

[root@workstation ~]# netstat -tunlp | grep :3260

tcp 0 0 0.0.0.0:3260 0.0.0.0:* LISTEN -

[root@nodea ~]# iscsiadm -m discovery -t st -p 192.168.1.9 && iscsiadm -m discovery -t st -p 192.168.2.9

192.168.1.9:3260,1 iqn.2021-05.com.example.workstation

192.168.2.9:3260,1 iqn.2021-05.com.example.workstation

[root@nodea ~]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2021-05.com.example.workstation, portal: 192.168.1.9,3260] (multiple)

Logging in to [iface: default, target: iqn.2021-05.com.example.workstation, portal: 192.168.2.9,3260] (multiple)

Login to [iface: default, target: iqn.2021-05.com.example.workstation, portal: 192.168.1.9,3260] successful.

Login to [iface: default, target: iqn.2021-05.com.example.workstation, portal: 192.168.2.9,3260] successful.

[root@nodea ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

sdb 8:16 0 8G 0 disk

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

[root@nodea ~]#

8. Configure multipathing

[root@nodea ~]# yum install -y device-mapper-multipath

[root@nodea ~]# mpathconf --enable && systemctl restart multipathd.service

[root@nodea ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

sdb 8:16 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /
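A quick check that every node sees both iSCSI paths: a sketch (hypothetical `mpath_path_counts`) that reads lsblk output on stdin and counts how many times each mpath device appears, which is once per underlying path. It assumes lsblk's default columns, where TYPE is the sixth field:

```bash
# Count paths per multipath device from default "lsblk" output on stdin.
mpath_path_counts() {
    awk '$6 == "mpath" {
             name = $1
             sub(/^[^A-Za-z0-9]+/, "", name)   # drop tree characters such as "└─"
             count[name]++
         }
         END { for (n in count) print n, count[n] }'
}
```

Running `lsblk | mpath_path_counts` on each node should show 2 paths for mpatha in this setup; `multipath -ll` remains the authoritative view of path state.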

[root@nodeb ~]# yum install -y device-mapper-multipath

[root@nodeb ~]# mpathconf --enable && systemctl restart multipathd.service

[root@nodeb ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

sdb 8:16 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

[root@nodec ~]# yum install -y device-mapper-multipath

[root@nodec ~]# mpathconf --enable && systemctl restart multipathd.service

[root@nodec ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

sdb 8:16 0 8G 0 disk

└─mpatha 252:0 0 8G 0 mpath

vda 253:0 0 10G 0 disk

└─vda1 253:1 0 10G 0 part /

9. Create the GFS2 filesystem

[root@nodea ~]# yum install -y dlm lvm2-cluster gfs2-utils && lvmconf --enable-cluster

[root@nodeb ~]# yum install -y dlm lvm2-cluster gfs2-utils && lvmconf --enable-cluster

[root@nodec ~]# yum install -y dlm lvm2-cluster gfs2-utils && lvmconf --enable-cluster

[root@nodea ~]# systemctl stop lvm2-lvmetad

[root@nodeb ~]# systemctl stop lvm2-lvmetad

[root@nodec ~]# systemctl stop lvm2-lvmetad

[root@nodea ~]# pcs property set no-quorum-policy=freeze

[root@nodea ~]# pcs resource create dlm controld op monitor interval=30s on-fail=fence clone interleave=true ordered=true

[root@nodea ~]# pcs resource create clvmd clvm op monitor interval=30s on-fail=fence clone interleave=true ordered=true

[root@nodea ~]# pcs resource show

Resource Group: myweb

clustermonitor (ocf::heartbeat:MailTo): Started

myip (ocf::heartbeat:IPaddr2): Started

myservice (systemd:httpd): Started

Clone Set: dlm-clone [dlm]

Started: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

Clone Set: clvmd-clone [clvmd]

Started: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

[root@nodea ~]# pvcreate /dev/mapper/mpatha

Physical volume "/dev/mapper/mpatha" successfully created

[root@nodea ~]# vgcreate vg0 /dev/mapper/mpatha

Clustered volume group "vg0" successfully created

[root@nodea ~]# lvcreate -L 4G -n data vg0

Logical volume "data" created.

[root@nodea ~]# lvdisplay

--- Logical volume ---

LV Path /dev/vg0/data

LV Name data

VG Name vg0

LV UUID 0AQXWw-3qSB-eeh7-tiOL-xU70-EWSu-0BiCFF

LV Write Access read/write

LV Creation host, time nodea.cluster0.example.com, 2021-05-22 00:54:56 -0400

LV Status available

# open 0

LV Size 4.00 GiB

Current LE 1024

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 252:1

[root@nodea ~]# mkfs.gfs2 -p lock_dlm -t cluster1:gfsdata -j 4 /dev/vg0/data

/dev/vg0/data is a symbolic link to /dev/dm-1

This will destroy any data on /dev/dm-1

Are you sure you want to proceed? [y/n]y

Device: /dev/vg0/data

Block size: 4096

Device size: 4.00 GB (1048576 blocks)

Filesystem size: 4.00 GB (1048574 blocks)

Journals: 4

Resource groups: 18

Locking protocol: "lock_dlm"

Lock table: "cluster1:gfsdata"

UUID: fcdca207-f951-8c69-9a38-107228610917

10. Mount the filesystem on /var/www and, as required in task 5, use /var/www as the document root of the http service

[root@nodea ~]# pcs resource create myfs Filesystem device=/dev/mapper/vg0-data directory=/var/www fstype=gfs2 op monitor interval=30s on-fail=fence clone interleave=true ordered=true

[root@nodea ~]# pcs resource show

Resource Group: myweb

clustermonitor (ocf::heartbeat:MailTo): Started

myip (ocf::heartbeat:IPaddr2): Started

myservice (systemd:httpd): Started

Clone Set: dlm-clone [dlm]

Started: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

Clone Set: clvmd-clone [clvmd]

Started: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

Clone Set: myfs-clone [myfs]

Started: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

[root@nodea ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/vda1 xfs 10G 1.2G 8.9G 12% /

devtmpfs devtmpfs 904M 0 904M 0% /dev

tmpfs tmpfs 921M 76M 846M 9% /dev/shm

tmpfs tmpfs 921M 17M 904M 2% /run

tmpfs tmpfs 921M 0 921M 0% /sys/fs/cgroup

/dev/mapper/vg0-data gfs2 4.0G 518M 3.5G 13% /var/www

[root@nodea ~]# pcs constraint order start dlm-clone then clvmd-clone

Adding dlm-clone clvmd-clone (kind: Mandatory) (Options: first-action=start then-action=start)

[root@nodea ~]# pcs constraint order start clvmd-clone then myfs-clone

Adding clvmd-clone myfs-clone (kind: Mandatory) (Options: first-action=start then-action=start)

[root@nodea ~]# pcs constraint colocation add dlm-clone with clvmd-clone

[root@nodea ~]# pcs constraint colocation add clvmd-clone with myfs-clone

[root@nodea ~]# pcs constraint

Location Constraints:

Resource: myweb

Enabled on: nodea.private.example.com (score:INFINITY)

Disabled on: nodec.private.example.com (score:-INFINITY)

Ordering Constraints:

start dlm-clone then start clvmd-clone (kind:Mandatory)

start clvmd-clone then start myfs-clone (kind:Mandatory)

Colocation Constraints:

dlm-clone with clvmd-clone (score:INFINITY)

clvmd-clone with myfs-clone (score:INFINITY)
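A note on colocation direction: in `pcs constraint colocation add A with B`, Pacemaker places B first and then keeps A on the same node as B. For that reason, the dependent resource is normally written first. Red Hat's RHEL 7 GFS2 documentation writes these two constraints as `clvmd-clone with dlm-clone` and `<fs>-clone with clvmd-clone`. The reversed form above still brought all three clones up in this lab, but the documented direction follows the start order. The sketch below shows the documented set wrapped in a function. The function name and the `PCS` override are hypothetical; the override exists only so the commands can be printed instead of run:

```bash
#!/usr/bin/env bash
# Ordering + colocation for the dlm -> clvmd -> GFS2 stack, with each
# colocation written as "<dependent> with <dependency>".
# PCS is a hypothetical override: PCS=echo prints the commands instead of running them.
add_gfs2_constraints() {
    local pcs="${PCS:-pcs}"
    $pcs constraint order start dlm-clone then clvmd-clone
    $pcs constraint colocation add clvmd-clone with dlm-clone
    $pcs constraint order start clvmd-clone then myfs-clone
    $pcs constraint colocation add myfs-clone with clvmd-clone
}
```

To preview the commands, run `PCS=echo add_gfs2_constraints`. In this cluster the two order constraints already exist, so only the colocations would need changing. First run `pcs constraint --full` to list the constraint ids. Then remove the reversed colocations with `pcs constraint remove <id>`.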

[root@nodea ~]# vim /etc/httpd/conf/httpd.conf

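DocumentRoot "/var/www/" //modified

#

# Relax access to content within /var/www.

#

<Directory "/var/www">

AllowOverride None

# Allow open access:

Require all granted

</Directory>

# Further relax access to the default document root:

<Directory "/var/www/html"> //modified: change this path to /var/www to match the new DocumentRoot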
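The same httpd.conf change can also be made without vim. The sketch below uses GNU sed and assumes the stock RHEL 7 lines `DocumentRoot "/var/www/html"` and `<Directory "/var/www/html">` are still unmodified. The function name is hypothetical. It writes `/var/www` without the trailing slash used above; both refer to the same directory:

```bash
#!/usr/bin/env bash
# Hypothetical helper: switch httpd's document root from /var/www/html to /var/www.
# Keeps a backup of the original file as <conf>.bak before editing in place (GNU sed -i).
set_docroot() {
    local conf="${1:-/etc/httpd/conf/httpd.conf}"
    cp -p "$conf" "$conf.bak" || return 1
    sed -i \
        -e 's|^DocumentRoot "/var/www/html"|DocumentRoot "/var/www"|' \
        -e 's|^<Directory "/var/www/html">|<Directory "/var/www">|' \
        "$conf"
}
```

Afterwards, run `httpd -t` to check the syntax. Then copy the file to the other nodes, as the transcript does.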

[root@nodea ~]# scp /etc/httpd/conf/httpd.conf [email protected]:/etc/httpd/conf/httpd.conf

[root@nodea ~]# scp /etc/httpd/conf/httpd.conf [email protected]:/etc/httpd/conf/httpd.conf

[root@nodea ~]# pcs cluster stop --all

nodec.private.example.com: Stopping Cluster (pacemaker)...

nodeb.private.example.com: Stopping Cluster (pacemaker)...

nodea.private.example.com: Stopping Cluster (pacemaker)...

nodeb.private.example.com: Stopping Cluster (corosync)...

nodea.private.example.com: Stopping Cluster (corosync)...

nodec.private.example.com: Stopping Cluster (corosync)...

[root@nodea ~]# pcs cluster start --all

nodec.private.example.com: Starting Cluster...

nodeb.private.example.com: Starting Cluster...

nodea.private.example.com: Starting Cluster...

[root@nodea ~]# pcs status

Cluster name: cluster1

Last updated: Sat May 22 01:13:27 2021

Last change: Sat May 22 01:08:15 2021

Stack: corosync

Current DC: nodeb.private.example.com (2) - partition with quorum

Version: 1.1.12-a14efad

3 Nodes configured

15 Resources configured

Online: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

Full list of resources:

fence_nodea (stonith:fence_xvm): Started

fence_nodeb (stonith:fence_xvm): Started

fence_nodec (stonith:fence_xvm): Started

Resource Group: myweb

clustermonitor (ocf::heartbeat:MailTo): Started nodea.private.example.com

myip (ocf::heartbeat:IPaddr2): Started nodea.private.example.com

myservice (systemd:httpd): Started nodea.private.example.com

Clone Set: dlm-clone [dlm]

Started: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

Clone Set: clvmd-clone [clvmd]

Started: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

Clone Set: myfs-clone [myfs]

Started: [ nodea.private.example.com nodeb.private.example.com nodec.private.example.com ]

PCSD Status:

nodea.private.example.com: Online

nodeb.private.example.com: Online

nodec.private.example.com: Online

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
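Instead of reading the Clone Set blocks by eye, you can check the `pcs status` text mechanically. The sketch below assumes the pcs 0.9 layout shown above: a `Clone Set: <name> [<rsc>]` line followed by `Started: [ node ... ]`. The function name is hypothetical. A clone with no `Started:` line is reported with 0 nodes:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `pcs status` text on stdin and print
# "<clone-name> <number of nodes it is Started on>" for each clone set.
clone_counts() {
    awk '
        /Clone Set:/ {
            if (name != "") print name, 0     # previous clone had no Started: line
            name = $3                         # e.g. "Clone Set: dlm-clone [dlm]"
            next
        }
        name != "" && /Started: \[/ {
            n = 0
            for (i = 1; i <= NF; i++)
                if ($i != "Started:" && $i != "[" && $i != "]") n++
            print name, n
            name = ""
        }
        END { if (name != "") print name, 0 }
    '
}
```

For example, `pcs status | clone_counts | awk '$2 != 3 { print "not on all nodes:", $1; bad = 1 } END { exit bad + 0 }'` exits non-zero if any clone is missing from one of the three nodes.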

[root@nodea ~]# ip add | grep 172.25.0.80

inet 172.25.0.80/24 brd 172.25.0.255 scope global secondary eth0

[root@nodea ~]# echo Hello,Cluster1 > /var/www/index.html

[root@nodea ~]# curl http://172.25.0.80

Hello,Cluster1

In the exam, index.html must be downloaded, not typed by hand.