Deep Learning Training, Week 4

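Contents

I. Video Lectures and Paper Reading

1. MobileNet V1 & V2

2. MobileNet V3

3. SENet

II. Code Study

1. Classifying CIFAR10 with SENet

1.1 Defining the network

1.2 Training

1.3 Testing

2. HybridSN hyperspectral classification

2.1 Classification results from the first test run

2.2 Classification results from the second test run

2.3 Classification results from the third test run

Questions

1. What is the difference between 3D and 2D convolution?

2. Why does the classification result differ from run to run?

3. How could the classification performance on hyperspectral images be improved further?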

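I. Video Lectures and Paper Reading

1. MobileNet V1 & V2

- MobileNet builds a lightweight CNN from depthwise separable convolutions, which extract features with a depthwise (DW) step followed by a pointwise (PW) step;

- Two hyperparameters, the width multiplier α and the resolution multiplier ρ, control the number of kernels (channels) and the input image size respectively, which cuts the computational cost further;

- Compared with a network built from standard convolutions, this greatly reduces the parameter count and the amount of computation;

- A depthwise separable convolution consists of a depthwise convolution followed by a pointwise convolution;

- Depthwise (DW) convolution: each kernel has a single channel, so an input with M channels needs M kernels. Every channel is convolved separately and the results are stacked along the channel dimension;

- Pointwise (PW) convolution: a 1x1 convolution that combines the depthwise outputs across channels and sets the output channel count, typically raising it;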
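The two steps, and the savings they bring, can be sketched in PyTorch. This is a minimal illustration rather than the MobileNet reference implementation: the class name `DepthwiseSeparableConv`, the helpers `conv_weight_count` and `separable_mult_adds`, and the layer sizes are all chosen here for the example. The cost function follows the usual multiply-add count of a depthwise step plus a pointwise step, with α scaling the channel counts and ρ scaling the feature-map side length.

```python
import torch
import torch.nn as nn


class DepthwiseSeparableConv(nn.Module):
    """Depthwise 3x3 convolution followed by a pointwise 1x1 convolution."""

    def __init__(self, in_channels, out_channels, stride=1):
        super().__init__()
        # groups=in_channels gives one single-channel 3x3 kernel per input channel.
        self.depthwise = nn.Conv2d(in_channels, in_channels, kernel_size=3,
                                   stride=stride, padding=1,
                                   groups=in_channels, bias=False)
        self.bn1 = nn.BatchNorm2d(in_channels)
        # The 1x1 convolution mixes channels and sets the output channel count.
        self.pointwise = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.relu(self.bn1(self.depthwise(x)))
        return self.relu(self.bn2(self.pointwise(x)))


def conv_weight_count(module):
    """Count convolution weights only, ignoring BatchNorm parameters."""
    return sum(m.weight.numel() for m in module.modules() if isinstance(m, nn.Conv2d))


def separable_mult_adds(kernel, in_ch, out_ch, feature_size, alpha=1.0, rho=1.0):
    """Multiply-adds of one separable layer under the multipliers alpha and rho."""
    m, n = int(alpha * in_ch), int(alpha * out_ch)   # thinned channel counts
    f = int(rho * feature_size)                      # reduced feature-map side
    return kernel * kernel * m * f * f + m * n * f * f


standard = nn.Conv2d(32, 64, kernel_size=3, padding=1, bias=False)
separable = DepthwiseSeparableConv(32, 64)

x = torch.randn(1, 32, 56, 56)
print(separable(x).shape)                      # same spatial size, 64 channels
print(conv_weight_count(standard))             # 3*3*32*64 weights
print(conv_weight_count(separable))            # 3*3*32 + 32*64 weights

base = separable_mult_adds(3, 512, 512, 14)
print(separable_mult_adds(3, 512, 512, 14, alpha=0.5) / base)   # roughly a quarter
print(separable_mult_adds(3, 512, 512, 14, rho=0.5) / base)     # a quarter
```

Halving either multiplier cuts the cost of this layer to about a quarter, which is why both are effective knobs for trading accuracy against computation.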

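Network structure:

- MobileNet is built on depthwise separable convolutions. Every layer is followed by a ReLU nonlinearity except the final fully connected layer, which has no nonlinearity and feeds a softmax layer for classification. Depthwise and pointwise convolutions are counted as separate layers;

- MobileNet needs less computation and fewer parameters than GoogLeNet and VGG;

- As α decreases, computation and parameter count shrink at the price of a small drop in accuracy. Lowering the input resolution likewise reduces computation for a small drop in accuracy;

- MobileNet V2 introduces the inverted residual structure. A conventional residual block first reduces and then restores the channel count, whereas the inverted block uses a 1x1 convolution to expand the channels first and another 1x1 convolution to project them back down. The inverted residual structure is markedly more memory-efficient;

- The authors found that many depthwise kernels in V1 end up all zero and traced this to ReLU, so V2 replaces that ReLU with a linear activation;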

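The authors therefore propose linear bottlenecks.

As the figure in the paper shows, ReLU destroys much of the signal in low dimensions, and the loss shrinks as the dimension grows. The authors conclude that applying ReLU when the output dimension is low easily discards information, so the last layer of the block uses a linear activation instead.

Figure: structure of the bottleneck block.

The MobileNetV2 architecture starts with a fully convolutional layer with 32 filters. ReLU6 is used as the nonlinearity because it is robust under low-precision computation. Apart from the first layer, a constant expansion ratio of t=6 is used throughout the network.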
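The bottleneck described above can be sketched as a small PyTorch module. This is an illustrative version under assumptions, not the official MobileNetV2 code: the class name `InvertedResidual` and the example channel counts are chosen here, and the shortcut is added only when the input and output shapes match.

```python
import torch
import torch.nn as nn


class InvertedResidual(nn.Module):
    """1x1 expand -> 3x3 depthwise -> 1x1 linear projection, with optional skip."""

    def __init__(self, in_channels, out_channels, stride=1, expand_ratio=6):
        super().__init__()
        hidden = in_channels * expand_ratio
        # The shortcut is only valid when input and output shapes match.
        self.use_shortcut = stride == 1 and in_channels == out_channels
        self.block = nn.Sequential(
            # Expansion: raise the channel count before applying ReLU6.
            nn.Conv2d(in_channels, hidden, kernel_size=1, bias=False),
            nn.BatchNorm2d(hidden),
            nn.ReLU6(inplace=True),
            # Depthwise 3x3 convolution in the expanded space.
            nn.Conv2d(hidden, hidden, kernel_size=3, stride=stride, padding=1,
                      groups=hidden, bias=False),
            nn.BatchNorm2d(hidden),
            nn.ReLU6(inplace=True),
            # Linear bottleneck: project back down with no activation afterwards.
            nn.Conv2d(hidden, out_channels, kernel_size=1, bias=False),
            nn.BatchNorm2d(out_channels),
        )

    def forward(self, x):
        out = self.block(x)
        return x + out if self.use_shortcut else out


x = torch.randn(2, 24, 28, 28)
same = InvertedResidual(24, 24, stride=1)(x)   # shortcut is used
down = InvertedResidual(24, 32, stride=2)(x)   # downsampling, no shortcut
print(same.shape, down.shape)
```

Leaving out the activation after the final 1x1 convolution is what makes the bottleneck "linear": the low-dimensional output never passes through ReLU.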

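2. MobileNet V3

- Updated block: an SE (squeeze-and-excitation) channel attention module is added, consisting of a squeeze step and an excitation step. W and H denote the width and height of the feature map and C the number of channels, so the input feature map has size W×H×C;

- New activation function: a new nonlinearity, h-swish, which is faster to compute and friendlier to quantization;

- Squeeze: global average pooling compresses the feature map into a 1×1×C vector;

- Excitation: two fully connected layers, where SERatio is a scaling parameter that shrinks the number of channels between the two layers to reduce computation;

- Scale: once the 1×1×C vector of channel weights is available, each channel of the original feature map is multiplied by its weight;

- Sigmoid is expensive to compute, and the cost is especially noticeable on mobile devices, so the authors approximate it with ReLU6(x+3)/6;

- Replacing swish with h-swish improves efficiency by about 15% in quantized mode, and the benefit of h-swish is more pronounced in the deeper layers of the network;

The paper also presents MobileNetV3-Large, a variant of MobileNetV3 aimed at high-resource use cases;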
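The approximation is easy to write down directly. The sketch below defines the hard sigmoid ReLU6(x+3)/6 and the resulting h-swish, then compares h-swish with the smooth swish on a small grid. The function names `h_sigmoid` and `h_swish` are chosen for this example; PyTorch also ships a built-in `torch.nn.functional.hardswish`.

```python
import torch
import torch.nn.functional as F


def h_sigmoid(x):
    """Piecewise-linear approximation of sigmoid: ReLU6(x + 3) / 6."""
    return F.relu6(x + 3.0) / 6.0


def h_swish(x):
    """Hard swish: x * ReLU6(x + 3) / 6."""
    return x * h_sigmoid(x)


x = torch.linspace(-6.0, 6.0, steps=13)     # the integers from -6 to 6
swish = x * torch.sigmoid(x)
gap = (h_swish(x) - swish).abs().max()

print(h_swish(x))
print(gap)   # largest difference from the smooth swish on this grid
```

Below -3 the function is exactly zero and above 3 it is exactly x, so only the middle range needs any arithmetic beyond a clamp.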

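3. SENet

Basic principle:

- Squeeze: features are compressed along the spatial dimensions, turning each two-dimensional feature channel into a single real number. That number has, in a sense, a global receptive field, and the output dimension matches the number of input feature channels;

- Excitation: a mechanism similar to the gates in recurrent neural networks. Learned parameters generate a weight for each feature channel, and they are trained to model the correlations between feature channels explicitly;

- Reweight: the weights output by the excitation step are treated as the importance of each feature channel after feature selection. They are multiplied channel by channel onto the earlier features, which recalibrates the original features along the channel dimension;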
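The three operations map onto a few lines of PyTorch. The sketch below is one possible implementation under assumptions: the names `SELayer` and `reduction` are chosen here, and the `BasicBlock` shown is a hypothetical residual block whose constructor takes `(in_channels, out_channels, stride)`, which is the signature that the `_make_layer` method in the code section expects. The notes do not show the block actually used, so this is not necessarily the same one.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SELayer(nn.Module):
    """Squeeze, excitation, and reweighting of the channels of a feature map."""

    def __init__(self, channels, reduction=16):
        super().__init__()
        self.fc1 = nn.Linear(channels, channels // reduction)
        self.fc2 = nn.Linear(channels // reduction, channels)

    def forward(self, x):
        b, c, _, _ = x.shape
        # Squeeze: global average pooling turns each channel into one number.
        w = x.mean(dim=(2, 3))
        # Excitation: two fully connected layers give a gate in (0, 1) per channel.
        w = torch.sigmoid(self.fc2(F.relu(self.fc1(w))))
        # Reweight: scale every channel of the input by its gate.
        return x * w.view(b, c, 1, 1)


class BasicBlock(nn.Module):
    """Residual block with an SE layer applied before the shortcut is added."""

    def __init__(self, in_channels, out_channels, stride=1):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, 3, stride, 1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.conv2 = nn.Conv2d(out_channels, out_channels, 3, 1, 1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.se = SELayer(out_channels)
        # Match the shortcut to the main branch when the shape changes.
        self.shortcut = nn.Sequential()
        if stride != 1 or in_channels != out_channels:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, 1, stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.se(self.bn2(self.conv2(out)))
        return F.relu(out + self.shortcut(x))


x = torch.randn(4, 64, 32, 32)
gated = SELayer(64)(x)
block_out = BasicBlock(64, 128, stride=2)(x)
print(gated.shape)       # unchanged shape
print(block_out.shape)   # halved spatial size, 128 channels
```

Because each gate lies between 0 and 1, the SE layer can only attenuate channels. The network learns which ones to keep close to full strength.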

II. Code Study

1. Classifying CIFAR10 with SENet

1.1 Defining the network

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim

    # Use the GPU when one is available.
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    # Build the SENet network.
    # BasicBlock, the residual block that contains the SE module, must already be defined.
    class SENet(nn.Module):
        def __init__(self):
            super(SENet, self).__init__()
            # Number of output classes
            self.num_classes = 10
            # Number of channels entering the first residual stage
            self.in_channels = 64
            # Stem: 64 kernels of size 3x3
            self.conv1 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False)
            self.bn1 = nn.BatchNorm2d(64)
            # Residual stages built from BasicBlock;
            # each stage has 2 blocks (2, 2, 2, 2, as in ResNet-18)
            self.layer1 = self._make_layer(BasicBlock, 64, 2, stride=1)
            self.layer2 = self._make_layer(BasicBlock, 128, 2, stride=2)
            self.layer3 = self._make_layer(BasicBlock, 256, 2, stride=2)
            self.layer4 = self._make_layer(BasicBlock, 512, 2, stride=2)
            # Fully connected classifier
            self.linear = nn.Linear(512, self.num_classes)

        # Build one stage of `blocks` residual blocks;
        # only the first block in a stage uses the given stride
        def _make_layer(self, block, out_channels, blocks, stride):
            strides = [stride] + [1] * (blocks - 1)
            layers = []
            for stride in strides:
                layers.append(block(self.in_channels, out_channels, stride))
                self.in_channels = out_channels
            return nn.Sequential(*layers)

        # Forward pass
        def forward(self, x):
            out = F.relu(self.bn1(self.conv1(x)))
            out = self.layer1(out)
            out = self.layer2(out)
            out = self.layer3(out)
            out = self.layer4(out)
            out = F.avg_pool2d(out, 4)
            out = out.view(out.size(0), -1)
            out = self.linear(out)
            return out

    # Move the network to the selected device
    net = SENet().to(device)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(net.parameters(), lr=0.001)

1.2 Training

    net.train()  # make sure BatchNorm is in training mode
    for epoch in range(10):  # train for several epochs
        for i, (inputs, labels) in enumerate(trainloader):
            inputs = inputs.to(device)
            labels = labels.to(device)
            # Reset the gradients held by the optimizer
            optimizer.zero_grad()
            # Forward pass + backward pass + parameter update
            outputs = net(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            # Print progress every 100 mini-batches
            if i % 100 == 0:
                print('Epoch: %d Minibatch: %5d loss: %.3f' % (epoch + 1, i + 1, loss.item()))
    print('Finished Training')

1.3 Testing

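    correct = 0
    total = 0
    net.eval()  # use the BatchNorm running statistics during evaluation
    with torch.no_grad():  # gradients are not needed for testing
        for data in testloader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)
            outputs = net(images)
            _, predicted = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy of the network on the 10000 test images: %.2f %%' % (
        100 * correct / total))

The network trained with SENet clearly reaches a much higher accuracy than a plain CNN, so it performs better.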

2. HybridSN hyperspectral classification

The completed network code:

    import torch.nn as nn

    class_num = 16

    class HybridSN(nn.Module):
        def __init__(self):
            super(HybridSN, self).__init__()
            # 3D convolution layers (kernel order: spectral depth, height, width)
            self.conv1 = nn.Conv3d(1, 8, (7, 3, 3))
            self.conv2 = nn.Conv3d(8, 16, (5, 3, 3))
            self.conv3 = nn.Conv3d(16, 32, (3, 3, 3))
            # 2D convolution layer
            self.conv4 = nn.Conv2d(576, 64, (3, 3))
            # Fully connected layers
            self.liner1 = nn.Linear(18496, 256)
            self.liner2 = nn.Linear(256, 128)
            self.liner3 = nn.Linear(128, class_num)
            # Dropout
            self.dropout = nn.Dropout(0.4)
            # Activation functions (note that self.relu is a LeakyReLU)
            self.relu = nn.LeakyReLU()
            self.logsoftmax = nn.LogSoftmax(dim=1)

        def forward(self, x):
            out = self.conv1(x)
            out = self.relu(out)
            out = self.conv2(out)
            out = self.relu(out)
            out = self.conv3(out)
            out = self.relu(out)
            # Merge the channel and spectral axes so the 2D convolution can be applied
            out = out.view(-1, out.shape[1] * out.shape[2], out.shape[3], out.shape[4])
            out = self.conv4(out)
            out = self.relu(out)
            # Flatten to (batch, features) for the fully connected layers
            out = out.view(x.size(0), -1)
            out = self.liner1(out)
            out = self.relu(out)
            out = self.dropout(out)
            out = self.liner2(out)
            out = self.relu(out)
            out = self.dropout(out)
            out = self.liner3(out)
            out = self.logsoftmax(out)
            return out
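The constants 576 and 18496 in the network follow from the layer shapes. The short calculation below traces them, assuming an input patch of 30 spectral bands and 25 x 25 pixels. That input size is not stated in the text; it is inferred here because it is consistent with both constants. The helper `valid_conv_shape` is introduced only for this example.

```python
def valid_conv_shape(shape, kernel):
    """Output size of a stride-1 convolution without padding, per dimension."""
    return tuple(s - k + 1 for s, k in zip(shape, kernel))


# Assumed input patch: 30 spectral bands, 25 x 25 pixels (not stated in the text).
shape = (30, 25, 25)
channels = 1
for out_channels, kernel in [(8, (7, 3, 3)), (16, (5, 3, 3)), (32, (3, 3, 3))]:
    shape = valid_conv_shape(shape, kernel)
    channels = out_channels
    print(channels, shape)

# Fold the remaining spectral depth into the channel axis for the 2D convolution.
channels_2d = channels * shape[0]
spatial = valid_conv_shape(shape[1:], (3, 3))
flattened = 64 * spatial[0] * spatial[1]
print(channels_2d, spatial, flattened)
```

With a different patch size or number of bands, the `in_channels` of `conv4` and the input size of `liner1` would have to change accordingly.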

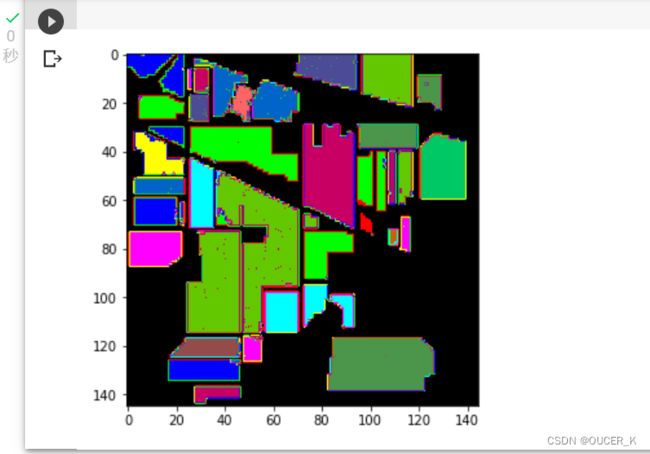

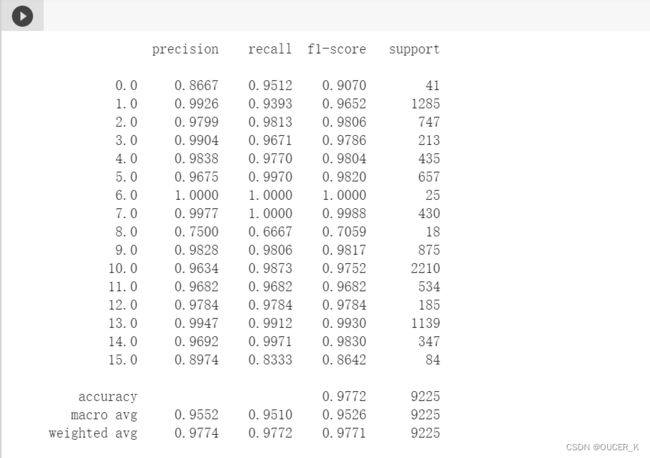

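2.1 Classification results from the first test run

2.2 Classification results from the second test run

2.3 Classification results from the third test run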

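Questions

1. What is the difference between 3D and 2D convolution?

- Hyperspectral images are volumetric data with a spectral dimension. A 2D-CNN convolves only over the two spatial dimensions, so it extracts 2D features and cannot learn discriminative feature maps from the spectral dimension. A 3D convolution kernel also slides along the spectral axis;

- A 3D-CNN is computationally more expensive, and on its own it seems to perform worse for classes that have similar textures across many spectral bands. A 2D-CNN needs less computation than a 3D-CNN;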
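The difference shows up directly in the tensor shapes. The sketch below applies one 2D and one 3D convolution to the same random patch. The patch size (30 bands, 25 x 25 pixels) and the layer sizes are illustrative assumptions, and the multiply-add counts are a rough estimate computed from the output size and the weights per output value.

```python
import torch
import torch.nn as nn

# A hyperspectral patch with 30 bands and 25 x 25 pixels (sizes are illustrative).
patch = torch.randn(1, 30, 25, 25)

# 2D convolution: bands are input channels, so each kernel spans all 30 bands
# and the spectral axis is collapsed in a single step.
conv2d = nn.Conv2d(in_channels=30, out_channels=8, kernel_size=3)
out2d = conv2d(patch)

# 3D convolution: the bands form an extra depth axis, and a 7 x 3 x 3 kernel
# slides along it, so the output keeps a (shorter) spectral axis.
conv3d = nn.Conv3d(in_channels=1, out_channels=8, kernel_size=(7, 3, 3))
out3d = conv3d(patch.unsqueeze(1))

# Multiply-adds: one per weight of a kernel, for every output value.
macs2d = out2d.numel() * conv2d.weight[0].numel()
macs3d = out3d.numel() * conv3d.weight[0].numel()

print(out2d.shape)   # (batch, channels, height, width)
print(out3d.shape)   # (batch, channels, bands, height, width)
print(macs2d, macs3d)
```

The 3D layer preserves spectral structure for later layers to use, at the cost of several times more computation for the same number of output channels.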

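2. Why does the classification result differ from run to run?

- The weights and biases are initialized randomly, so every training run starts from a different point and ends with a different result. Dropout and data shuffling add further randomness;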
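One common way to make runs repeatable is to fix the random seeds before building the model. The sketch below is a general PyTorch recipe rather than something taken from the original experiment. The helper name `set_seed` is chosen here, and full determinism on a GPU can still depend on the operations used.

```python
import random

import torch


def set_seed(seed):
    """Seed the Python and PyTorch random number generators."""
    random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)   # safe to call when CUDA is unavailable
    # Prefer deterministic cuDNN kernels over auto-tuned ones.
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False


set_seed(0)
first = torch.nn.Linear(4, 2).weight.detach().clone()
set_seed(0)
second = torch.nn.Linear(4, 2).weight.detach().clone()
print(torch.equal(first, second))   # same initial weights after reseeding

# Dropout is random in training mode but does nothing in evaluation mode.
dropout = torch.nn.Dropout(0.4)
ones = torch.ones(8)
dropout.eval()
print(torch.equal(dropout(ones), ones))
```

Calling `eval()` on the network before testing matters for the same reason: if the model stays in training mode, dropout remains active and repeated test runs can give different results.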

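3. How could the classification performance on hyperspectral images be improved further?

- Attention is one direction, covering both spatial attention and channel attention. Adding an attention mechanism to the network often improves performance noticeably, for example the SENet studied this week;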