【机器学习实战】对加州住房价格数据集进行数据清洗

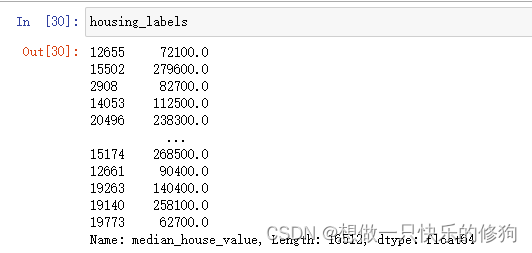

1. 先将X和Y(标签值)分开

# 预测器

housing = start_train_set.drop("median_house_value", axis=1)

#标签

housing_labels = start_train_set["median_house_value"].copy()

- 预测器:

2. 对缺失值进行处理

2.1 通常对缺失值进行处理的三种方法

# 1. 放弃这些相应的地区,即删掉包含缺失值的每一行样本

housing.dropna(subset=["total_bedrooms"])

# 2. 放弃这个属性,即删除total_bedrooms这个特征列

housing.drop("total_bedrooms", axis=1)

# 3. 将缺失的值设置为某个值(例如:中位数)

median = housing["total_bedrooms"].median()

housing["total_bedrooms"].fillna(median)

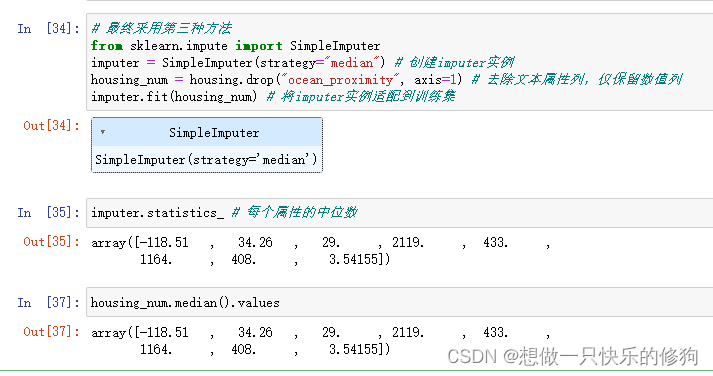

2.2 书中使用中位数替换

# 最终采用第三种方法

from sklearn.impute import SimpleImputer

imputer = SimpleImputer(strategy="median") # 创建imputer实例

housing_num = housing.drop("ocean_proximity", axis=1) # 去除文本属性列,仅保留数值列

imputer.fit(housing_num) # 将imputer实例适配到训练集

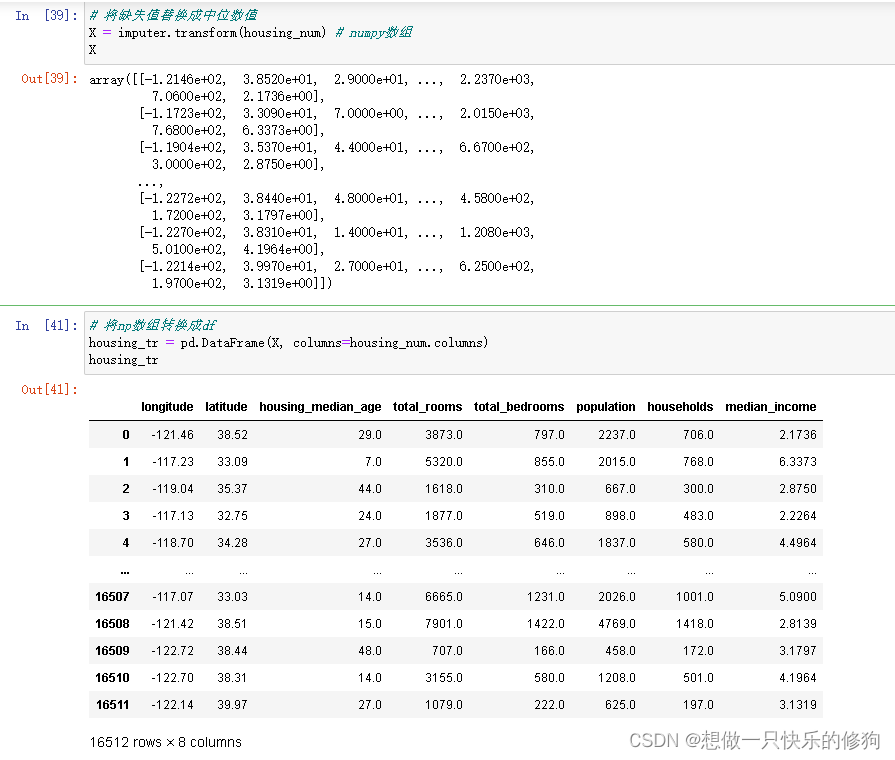

# 将缺失值替换成中位数值

X = imputer.transform(housing_num) # numpy数组

# 将np数组转换成df

housing_tr = pd.DataFrame(X, columns=housing_num.columns)

housing_tr

3. 对文本属性进行处理(One-hot编码)

# 用来添加组合后的属性

from sklearn.base import BaseEstimator, TransformerMixin

rooms_ix, bedrooms_ix, population_ix, household_ix = 3, 4, 5, 6

class CombinedAttributesAdder(BaseEstimator, TransformerMixin):

def __init__(self, add_bedrooms_per_room = True):

self.add_bedrooms_per_room = add_bedrooms_per_room

def fit(self, X, y=None):

return self

def transform(self, X, y=None):

rooms_per_household = X[:, rooms_ix] / X[:, household_ix]

population_per_household = X[:, population_ix] / X[:, household_ix]

if self.add_bedrooms_per_room:

bedrooms_per_room = X[:, bedrooms_ix] / X[:, rooms_ix]

return np.c_[X, rooms_per_household, population_per_household, bedrooms_per_room]

else:

return np.c_[X, rooms_per_household, population_per_household]

attr_adder = CombinedAttributesAdder(add_bedrooms_per_room=False)

housing_extra_attribs = attr_adder.transform(housing.values)

class CustomLabelBinarizer(BaseEstimator, TransformerMixin):

def __init__(self, sparse_output=False):

self.sparse_output = sparse_output

def fit(self, X, y=None):

return self

def transform(self, X, y=None):

enc = LabelBinarizer(sparse_output=self.sparse_output)

return enc.fit_transform(X)

# 自定义转换器

class DataFrameSelector(BaseEstimator, TransformerMixin):

def __init__(self, attribute_names):

self.attribute_names = attribute_names

def fit(self, X, y=None):

return self

def transform(self, X):

return X[self.attribute_names].values

from sklearn.pipeline import Pipeline

# from sklearn import DataFrameSelector

from sklearn.preprocessing import StandardScaler

from sklearn.pipeline import FeatureUnion

from sklearn.preprocessing import LabelBinarizer

num_attribs = list(housing_num)

# num_attribs

cat_attribs = ["ocean_proximity"]

# cat_attribs

num_pipeline = Pipeline([

('selector', DataFrameSelector(num_attribs)),

('imputer', SimpleImputer(strategy='median')),

('attribs_adder', CombinedAttributesAdder()),

('std_scalar', StandardScaler())

])

cat_pipeline = Pipeline([

('selector', DataFrameSelector(cat_attribs)),

('label_binarizer', CustomLabelBinarizer())

])

full_pipeline = FeatureUnion(transformer_list = [

('num_pipeline', num_pipeline),

('cat_pipeline', cat_pipeline)

])

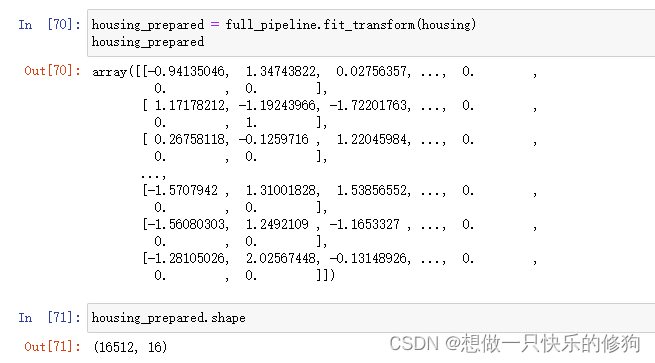

housing_prepared = full_pipeline.fit_transform(housing)

housing_prepared

这部分的代码可以看原作者发布的jupyter book的源码:02_end_to_end_machine_learning_project.ipynb

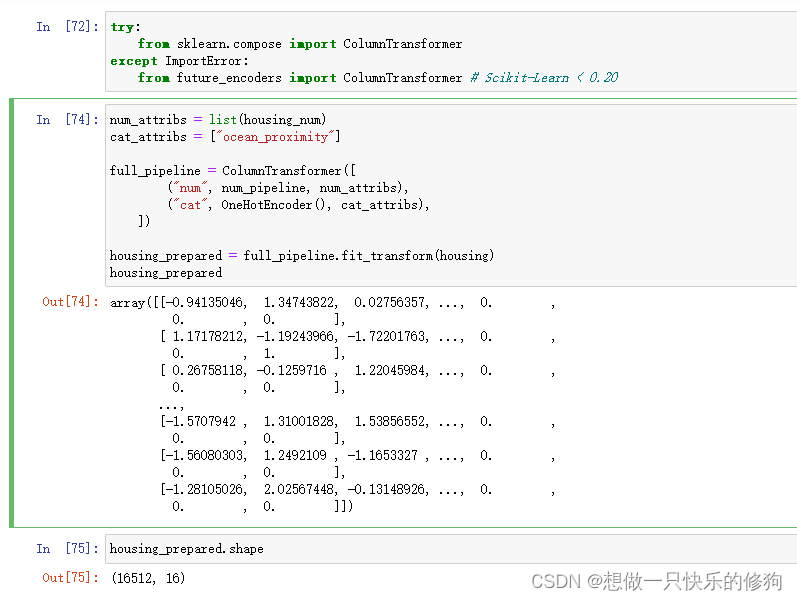

我对我的数据也将原作者的代码复制过来跑了一下,结果如下:

4. 参考

fit_transform() takes 2 positional arguments but 3 were given with LabelBinarizer