C++ Implementations of Machine Learning Algorithms

Contents

Perceptron

main.cpp

perceptron.cpp

perceptron.h

model_base.h

K-Nearest Neighbors

main.cpp

knn.cpp

knn.h

Naive Bayes

main.cpp

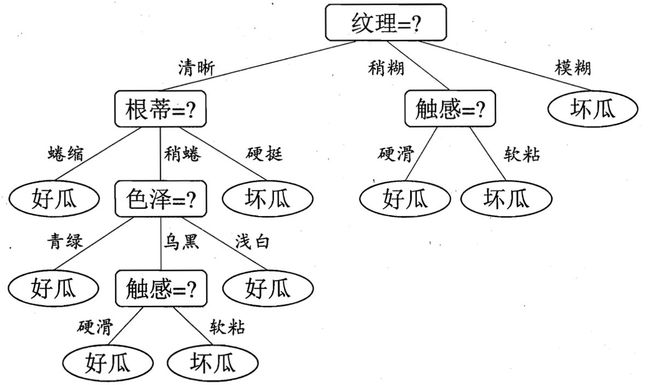

Decision Tree

main.h

decisiontree.cpp

DecisionTree.h

Logistic Regression

main.h

logistic.cpp

Logistic.h

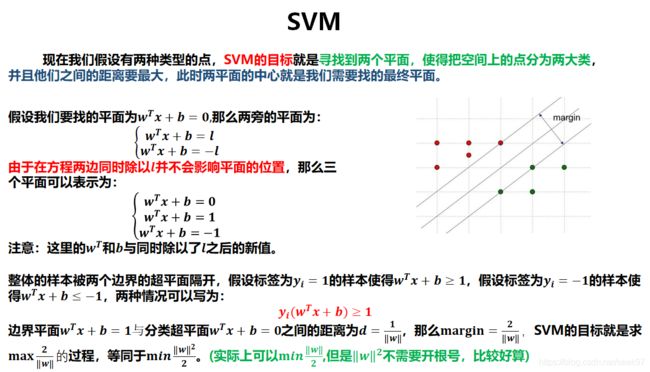

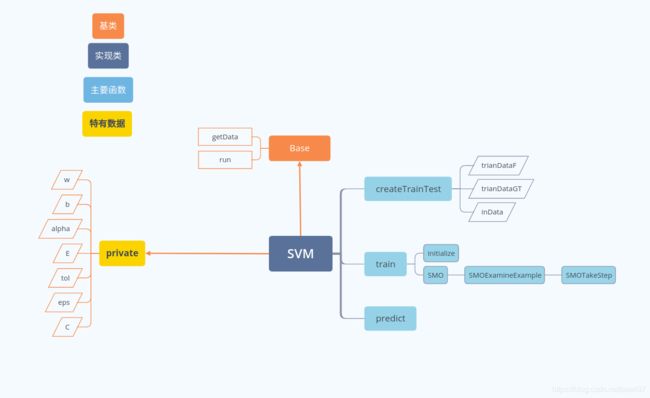

Support Vector Machine

main.cpp

svm.cpp

SVM.h

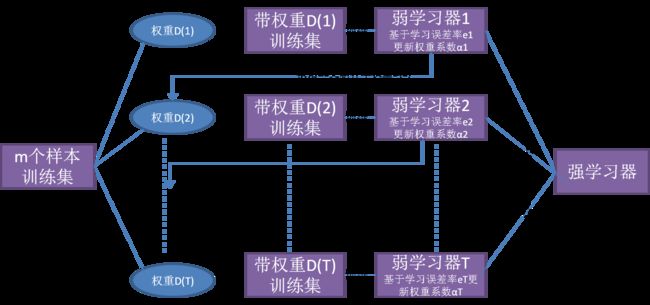

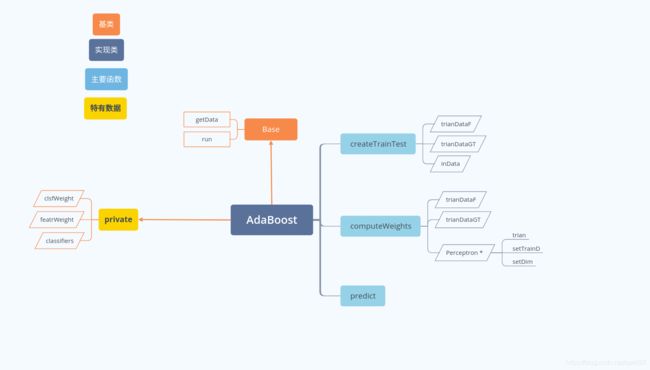

AdaBoost

main.h

AdaBoost.cpp

AdaBoost.h

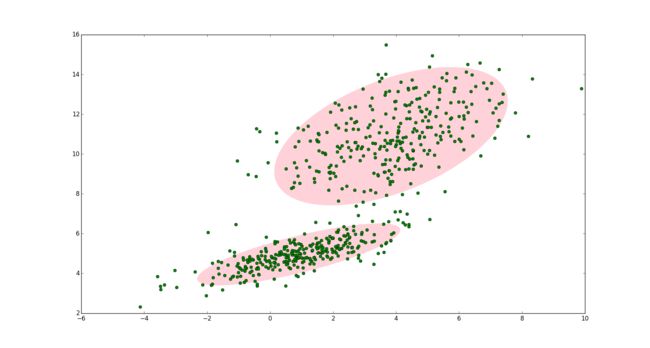

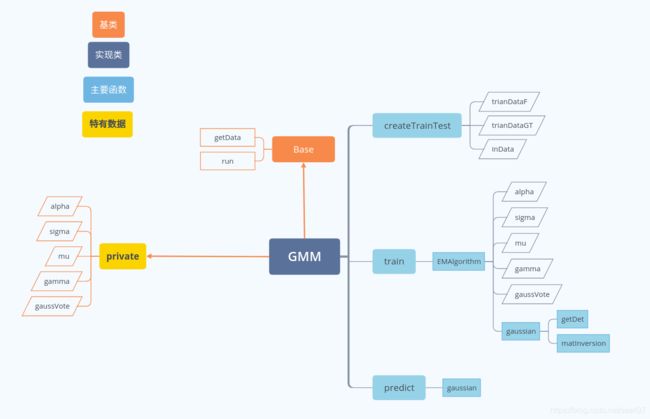

GMM

main.h

GMM.cpp

GMM.h

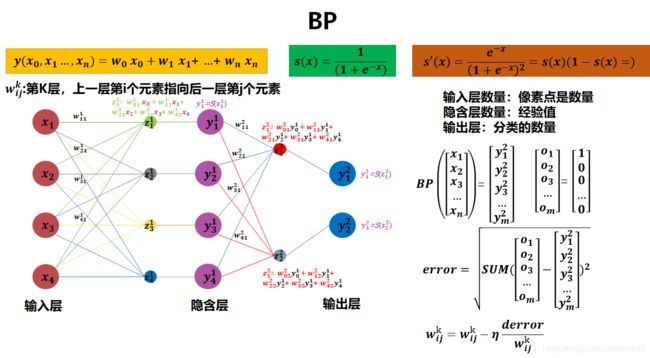

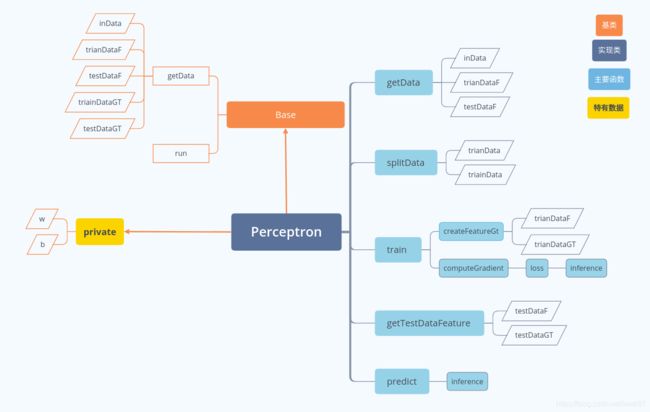

Perceptron

https://www.cnblogs.com/liuhuacai/p/11973036.html

main.cpp

#include <iostream>

#include <vector>

#include "perceptron.h"

using std::vector;

using std::cout;

using std::endl;

int main() {

Base* obj = new Perceptron();

obj->run();

delete obj;

return 0;

}

perceptron.cpp

#include "perceptron.h"

using std::string;

using std::vector;

using std::pair;

void Perceptron::getData(const std::string &filename) {

//load data to a vector

std::vector<double> temData;

double onepoint;

std::string line;

inData.clear();

std::ifstream infile(filename);

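std::cout<<"reading ..."<<std::endl;

while(!infile.eof()){

temData.clear();

std::getline(infile, line);

if(line.empty())

continue;

std::stringstream stringin(line);

while(stringin >> onepoint){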

temData.push_back(onepoint);

}

indim = temData.size();

indim -= 1;

inData.push_back(temData);

}

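std::cout<<"total data is "<<inData.size()<<std::endl;

}

void Perceptron::createFeatureGt() {

//create feature for test,using trainData, testData

for (const auto& data:trainData){

std::vector<double> trainf;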

trainf.assign(data.begin(), data.end()-1);

trainDataF.push_back(trainf);

trainDataGT.push_back(*(data.end()-1));

}

for (const auto& data:testData){

std::vector<double> testf;

testf.assign(data.begin(), data.end()-1);

testDataF.push_back(testf);

testDataGT.push_back(*(data.end()-1));

}

}
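`splitData` is declared in perceptron.h and called from `run()` with a ratio of 0.6, but its definition does not appear in this listing. The following is a minimal sketch of a shuffle-then-cut split, not the author's code; `splitRows`, the `Rows` alias, and the `seed` parameter are hypothetical names introduced for illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <random>
#include <utility>
#include <vector>

using Rows = std::vector<std::vector<double>>;

// Shuffle the rows, then put the first `ratio` fraction into the training set
// and the remainder into the test set. Assumes 0 <= ratio <= 1.
std::pair<Rows, Rows> splitRows(Rows rows, double ratio, unsigned seed = 42) {
    std::mt19937 gen(seed);  // fixed seed so that the split is reproducible
    std::shuffle(rows.begin(), rows.end(), gen);
    const std::size_t trainSize = static_cast<std::size_t>(rows.size() * ratio);
    Rows train(rows.begin(), rows.begin() + trainSize);
    Rows test(rows.begin() + trainSize, rows.end());
    return {train, test};
}
```

Each row keeps its label in the last column, so the result can be fed to a step like `createFeatureGt` that separates features from ground truth.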

void Perceptron::initialize(std::vector<double>& init) {

// must initialize parameter first, using vector to initialize

if(init.size()!=indim+1) {

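std::cout<<"input dimension should be "+std::to_string(indim+1)<<std::endl;

throw init.size();

}

//the first indim values initialize w, the last one initializes b

w.assign(init.begin(), init.end()-1);

b = *(init.end()-1);

}

double Perceptron::inference(const std::vector<double>& inputData){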

//just compute wx+b , for compute loss and predict.

if (inputData.size()!=indim){

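std::cout<<"input dimension is incorrect. "<<std::endl;

throw inputData.size();

}

double out = inputData * w + b;

return out;

}

double Perceptron::loss(const std::vector<double>& inputData, const double& groundTruth){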

double infer = inference(inputData);

double loss = -1.0 * groundTruth * infer;

std::cout<<"loss is "<< loss <<std::endl;

return loss;

}

std::pair<std::vector<double>, double> Perceptron::computeGradient(const std::vector<double>& inputData, const double& groundTruth) {

double lossVal = loss(inputData, groundTruth);

std::vector<double> wi;

double bi;

if (lossVal >= 0.0)

{

for(auto indata:inputData) {

wi.push_back(indata*groundTruth);

}

bi = groundTruth;

}

else{

for(auto indata:inputData) {

wi.push_back(0.0);

}

bi = 0.0;

}

return std::pair<std::vector<double>, double>(wi, bi);//for readability, a pair represents w and b.

//you also could return a vector which contains w and b.

}

void Perceptron::train(const int & step, const float & lr) {

std::vector<double> init = {1.0,1.0,1.0};

initialize(init);

int count = 0;

for(int i=0; i<step; ++i){

std::vector<double> inputData = trainDataF[count];

double groundTruth = trainDataGT[count];

auto grad = computeGradient(inputData, groundTruth);

auto grad_w = grad.first;

double grad_b = grad.second;

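for (int j=0; j<indim; ++j){

w[j] += lr * grad_w[j];

}

b += lr * grad_b;

count = (count + 1) % trainDataF.size();//move to the next training sample, wrapping around

}

}

int Perceptron::predict(const std::vector<double>& inputData) {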

double out = inference(inputData);

if(out>=0.0){

return 1;

}

else{

return -1;

}

}
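The update rule used by `computeGradient` and `train` (when a sample is misclassified, add `lr * y * x` to `w` and `lr * y` to `b`) can be tried in isolation. Below is a minimal self-contained sketch under that assumption; `LinearModel`, `score`, `trainPerceptron`, and `predictLabel` are illustrative names, not part of the classes above, and the weights start at zero rather than at the `{1.0, 1.0, 1.0}` used in `train`.

```cpp
#include <cstddef>
#include <vector>

struct LinearModel {
    std::vector<double> w;
    double b = 0.0;
};

// Computes w.x + b.
double score(const LinearModel& m, const std::vector<double>& x) {
    double s = m.b;
    for (std::size_t j = 0; j < x.size(); ++j) s += m.w[j] * x[j];
    return s;
}

// Labels in y must be +1 or -1. A sample is updated on only when it is
// misclassified or lies on the boundary, i.e. y * (w.x + b) <= 0.
LinearModel trainPerceptron(const std::vector<std::vector<double>>& X,
                            const std::vector<double>& y,
                            int epochs, double lr) {
    LinearModel m;
    m.w.assign(X.empty() ? 0 : X[0].size(), 0.0);
    for (int e = 0; e < epochs; ++e) {
        for (std::size_t i = 0; i < X.size(); ++i) {
            if (y[i] * score(m, X[i]) <= 0.0) {
                for (std::size_t j = 0; j < X[i].size(); ++j) m.w[j] += lr * y[i] * X[i][j];
                m.b += lr * y[i];
            }
        }
    }
    return m;
}

int predictLabel(const LinearModel& m, const std::vector<double>& x) {
    return score(m, x) >= 0.0 ? 1 : -1;
}
```

On linearly separable data the loop stops changing the weights once every sample satisfies `y * (w.x + b) > 0`.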

/*perceptrondata.txt

3 4 1

1 1 -1

2 4 1

1 2 -1

1 5 1

2 0.5 -1

1 6 1

1 2.5 -1

0.5 6 1

0 1 -1

2 2.5 1

0.5 1 -1

1 4 1

1.5 1 -1

2.7 1 1

2 3.5 1

0.8 3 -1

0.1 4 -1

*/

void Perceptron::run(){

//remember to change the sample data path

getData("../data/perceptrondata.txt");

splitData(0.6);//below is split data , and store it in trainData, testData

createFeatureGt();

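train(200, 1.0);//200 is steps and 1.0 is learning rate

std::vector<std::vector<double>> testData = getTestDataFeature();

std::vector<double> testGT = getTestGT();

for(int i=0; i<testData.size(); ++i){

std::cout<<i<<" true is "<<testGT[i]<<" predict is "<<predict(testData[i])<<std::endl;

}

}

perceptron.h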

#ifndef MACHINE_LEARNING_PERCEPTRON_H

#define MACHINE_LEARNING_PERCEPTRON_H

#include <string>

#include <utility>

#include <vector>

#include "model_base.h"

class Perceptron: public Base{

private:

std::vector<double> w;

double b;

public:

virtual void getData(const std::string& filename);

virtual void run();

void splitData(const float& );

void createFeatureGt();//create feature for test,using trainData, testData

void setDim(const unsigned long& iDim){indim = iDim;}

double inference(const std::vector<double>&) ;

void initialize(std::vector<double>& init);

void train(const int& step,const float& lr);

int predict(const std::vector<double>& inputData);

double loss(const std::vector<double>& inputData, const double& groundTruth);

std::pair<std::vector<double>, double> computeGradient(const std::vector<double>& inputData, const double& groundTruth);

std::vector<std::vector<double>> getTestDataFeature(){return testDataF;}

std::vector<double> getTestGT(){ return testDataGT;}

};

#endif //MACHINE_LEARNING_PERCEPTRON_H

model_base.h

#ifndef MACHINE_LEARNING_MODEL_BASE_H

#define MACHINE_LEARNING_MODEL_BASE_H

#include <algorithm>

#include <fstream>

#include <iostream>

#include <sstream>

#include <string>

#include <vector>

using std::vector;

using std::cout;

using std::endl;

//this base class is for run

class Base{

protected:

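std::vector<double> trainDataGT;//ground truth

std::vector<double> testDataGT;

std::vector<std::vector<double>> inData;//data read from the file

std::vector<std::vector<double>> trainData;//training split, ground truth included

std::vector<std::vector<double>> testData;

unsigned long indim = 0;

std::vector<std::vector<double>> trainDataF;//the actual training data: features only

std::vector<std::vector<double>> testDataF;

public:

void setTrainD(vector<vector<double>>& trainF, vector<double>& trainGT) {trainDataF = trainF; trainDataGT=trainGT;}

void setTestD(vector<vector<double>>& testF, vector<double>& testGT) {testDataF = testF; testDataGT=testGT;}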

virtual void getData(const std::string& filename)=0;

virtual void run()=0;

virtual ~Base(){};

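template <class T1, class T2>

friend auto operator + (const vector<T1>& v1, const vector<T2>& v2)->vector<decltype(v1[0] + v2[0])>;

template <class T1, class T2>

friend auto operator - (const vector<T1>& v1, const vector<T2>& v2)->vector<decltype(v1[0] - v2[0])>;

template <class T1, class T2>

friend double operator * (const vector<T1>& v1, const vector<T2>& v2);

template <class T1, class T2>

friend auto operator / (const vector<T1>& v1, const vector<T2>& v2)->vector<decltype(v1[0] / v2[0])>;

template <class T1, class T2>

friend auto operator + (const T1& arg1, const vector<T2>& v2)->vector<decltype(arg1 + v2[0])>;

template <class T1, class T2>

friend auto operator - (const T1& arg1, const vector<T2>& v2)->vector<decltype(arg1 - v2[0])>;

template <class T1, class T2>

friend auto operator * (const T1& arg1, const vector<T2>& v2)->vector<decltype(arg1 * v2[0])>;

template <class T1, class T2>

friend auto operator / (const T1& arg1, const vector<T2>& v2)->vector<decltype(arg1 / v2[0])>;

template <class T1, class T2>

friend auto operator + (const vector<T1>& v1, const T2& arg2)->vector<decltype(v1[0] + arg2)>;

template <class T1, class T2>

friend auto operator - (const vector<T1>& v1, const T2& arg2)->vector<decltype(v1[0] - arg2)>;

template <class T1, class T2>

friend auto operator * (const vector<T1>& v1, const T2& arg2)->vector<decltype(v1[0] * arg2)>;

template <class T1, class T2>

friend auto operator / (const vector<T1>& v1, const T2& arg2)->vector<decltype(v1[0] / arg2)>;

template <class T>

friend vector<vector<T>> transpose(const vector<vector<T>>& mat);

template <class T>

friend vector<vector<T>> vecMulVecToMat(const vector<T>& vec1, const vector<T>& vec2);

template <class T1, class T2>

friend auto operator + (const vector<vector<T1>>& v1, const vector<vector<T2>>& v2)

->vector<vector<decltype(v1[0][0] + v2[0][0])>>;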

};

template <class T1, class T2>

auto operator + (const vector<T1>& v1, const vector<T2>& v2) ->vector<decltype(v1[0] + v2[0])> {

if (v1.size() != v2.size()) {

cout << "two vector must have same size." << endl;

throw v1.size() != v2.size();

}

if (v1.empty()) {

cout << "vector must not empty." << endl;

throw v1.empty();

}

vector<decltype(v1[0] + v2[0])> re(v1.size());

for (int i = 0; i < v1.size(); ++i) {

re[i] = v1[i] + v2[i];

}

return re;

}

template <class T1, class T2>

auto operator - (const vector<T1>& v1, const vector<T2>& v2)->vector<decltype(v1[0] - v2[0])> {

if (v1.size() != v2.size()) {

cout << "two vector must have same size." << endl;

throw v1.size() != v2.size();

}

if (v1.empty()){

cout << "vector must not empty." << endl;

throw v1.empty();

}

vector<decltype(v1[0] - v2[0])> re(v1.size());

for (int i = 0; i < v1.size(); ++i) {

re[i] = v1[i] - v2[i];

}

return re;

}

template <class T1, class T2>

double operator * (const vector<T1>& v1, const vector<T2>& v2) {

if (v1.size() != v2.size()) {

cout << "two vector must have same size." << endl;

throw v1.size() != v2.size();

}

if (v1.empty()){

cout << "vector must not empty." << endl;

throw v1.empty();

}

decltype(v1[0] * v2[0]) re = 0;

for (int i = 0; i < v1.size(); ++i) {

re += v1[i] * v2[i];

}

return re;

}

template <class T1, class T2>

auto operator / (const vector<T1>& v1, const vector<T2>& v2)->vector<decltype(v1[0] / v2[0])> {

if (v1.size() != v2.size()) {

cout << "two vector must have same size." << endl;

throw v1.size() != v2.size();

}

if (v1.empty()){

cout << "vector must not empty." << endl;

throw v1.empty();

}

vector<decltype(v1[0] / v2[0])> re(v1.size());

for (int i = 0; i < v1.size(); ++i) {

re[i] = v1[i] / v2[i];

}

return re;

}

template <class T1, class T2>

auto operator + (const T1& arg1, const vector<T2>& v2)->vector<decltype(arg1 + v2[0])>{

if (v2.empty()){

cout << "vector must not empty." << endl;

throw v2.empty();

}

vector<decltype(arg1 + v2[0])> re(v2.size());

for (int i = 0; i < v2.size(); ++i) {

re[i] = arg1 + v2[i];

}

return re;

}

template <class T1, class T2>

auto operator - (const T1& arg1, const vector<T2>& v2)->vector<decltype(arg1 - v2[0])>{

if (v2.empty()){

cout << "vector must not empty." << endl;

throw v2.empty();

}

vector<decltype(arg1 - v2[0])> re(v2.size());

for (int i = 0; i < v2.size(); ++i) {

re[i] = arg1 - v2[i];

}

return re;

}

template <class T1, class T2>

auto operator * (const T1& arg1, const vector<T2>& v2)->vector<decltype(arg1 * v2[0])>{

if (v2.empty()){

cout << "vector must not empty." << endl;

throw v2.empty();

}

vector<decltype(arg1 * v2[0])> re(v2.size());

for (int i = 0; i < v2.size(); ++i) {

re[i] = arg1 * v2[i];

}

return re;

}

template <class T1, class T2>

auto operator / (const T1& arg1, const vector<T2>& v2)->vector<decltype(arg1 / v2[0])>{

if (v2.empty()){

cout << "vector must not empty." << endl;

throw v2.empty();

}

vector<decltype(arg1 / v2[0])> re(v2.size());

for (int i = 0; i < v2.size(); ++i) {

re[i] = arg1 / v2[i];

}

return re;

}

template <class T1, class T2>

auto operator + (const vector<T1>& v1, const T2& arg2)->vector<decltype(v1[0] + arg2)>{

return arg2+v1;

}

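template <class T1, class T2>

auto operator - (const vector<T1>& v1, const T2& arg2)->vector<decltype(v1[0] - arg2)>{

vector<decltype(v1[0] - arg2)> re(v1.size());

for (int i = 0; i < v1.size(); ++i)

re[i] = v1[i] - arg2; //element minus scalar; returning arg2-v1 would flip the sign

return re;

}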

template <class T1, class T2>

auto operator * (const vector<T1>& v1, const T2& arg2)->vector<decltype(v1[0] * arg2)>{

return arg2*v1;

}

template <class T1, class T2>

auto operator / (const vector<T1>& v1, const T2& arg2)->vector<decltype(v1[0] / arg2)>{

if (v1.empty()){

cout << "vector must not empty." << endl;

throw v1.empty();

}

vector<decltype(v1[0] / arg2)> re(v1.size());

for (int i = 0; i < v1.size(); ++i) {

re[i] = v1[i]/arg2;

}

return re;

}

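template <class T>

vector<vector<T>> transpose(const vector<vector<T>>& mat) {

if (mat.empty())

return {};

//the result is cols x rows, so non-square matrices are handled as well

vector<vector<T>> newMat (mat[0].size(), vector<T> (mat.size(), 0));

for (int i = 0; i < mat[0].size(); ++i) {

for (int j = 0; j < mat.size(); ++j)

newMat[i][j] = mat[j][i];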

}

return newMat;

}

template <class T>

vector<vector<T>> vecMulVecToMat(const vector<T>& vec1, const vector<T>& vec2) {

if (vec1.size() != vec2.size())

cout << "The two vectors have different dimensions!" << endl;

vector<vector<T>> newMat (vec1.size(), vector<T> (vec2.size(), 0));

for (int i = 0; i < vec1.size(); ++i) {

for (int j = 0; j < vec2.size(); ++j){

newMat[i][j] = vec1[i] * vec2[j];

}

}

return newMat;

}

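template <class T1, class T2>

auto operator + (const vector<vector<T1>>& v1, const vector<vector<T2>>& v2)

->vector<vector<decltype(v1[0][0] + v2[0][0])>> {

if (v1.size() != v2.size())

std::cerr<< "The two matrices have different dimensions!" << endl;

vector<vector<decltype(v1[0][0] + v2[0][0])>> newMat;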

for (int i = 0; i < v1.size(); ++i)

newMat.push_back(v1[i] + v2[i]);

return newMat;

}

#endif //MACHINE_LEARNING_MODEL_BASE_H
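A transpose for a general rows × cols matrix stored as a vector of rows can be sketched as follows. This is an illustrative standalone helper, not part of model_base.h; `transposeRect` is a hypothetical name, and the sketch assumes every row has the same length.

```cpp
#include <cstddef>
#include <vector>

// Returns the cols x rows transpose of a rows x cols matrix.
template <class T>
std::vector<std::vector<T>> transposeRect(const std::vector<std::vector<T>>& mat) {
    if (mat.empty()) return {};
    const std::size_t rows = mat.size();
    const std::size_t cols = mat[0].size();
    std::vector<std::vector<T>> out(cols, std::vector<T>(rows));
    for (std::size_t i = 0; i < rows; ++i)
        for (std::size_t j = 0; j < cols; ++j)
            out[j][i] = mat[i][j];
    return out;
}
```

The output dimensions are taken from `mat.size()` and `mat[0].size()` separately, which is what lets a 2 × 3 input produce a 3 × 2 result.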

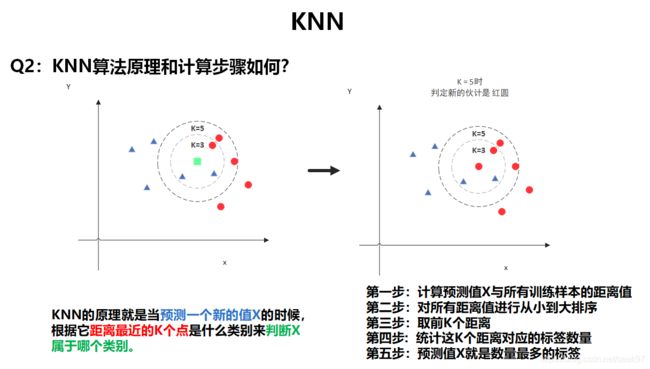

K-Nearest Neighbors

main.cpp

#include <iostream>

#include <vector>

#include "knn.h"

using std::vector;

using std::cout;

using std::endl;

int main() {

Base* obj = new Knn();

obj->run();

delete obj;

return 0;

}

knn.cpp

#include "knn.h"

using std::string;

using std::vector;

using std::pair;

using std::priority_queue;

using std::stack;

void Knn::getData(const std::string &filename) {

//load data to a vector

std::vector<double> temData;

double onepoint;

std::string line;

inData.clear();

std::ifstream infile(filename);

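std::cout<<"reading ..."<<std::endl;

while(!infile.eof()){

temData.clear();

std::getline(infile, line);

if(line.empty())

continue;

std::stringstream stringin(line);

while(stringin >> onepoint){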

temData.push_back(onepoint);

}

indim = temData.size();

indim -= 1;

inData.push_back(temData);

}

std::cout<<"total data is "<> varianceVec;

auto sumv = trainData[0];

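for(unsigned long i=1;i<trainData.size();++i){

sumv = sumv + trainData[i];

}

auto meanv = sumv/trainData.size();

vector<vector<double>> subMean;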

for(const auto& c:trainData)

subMean.push_back(c-meanv);

for (unsigned long i = 0; i < trainData.size(); ++i) {

for (unsigned long j = 0; j < indim; ++j) {

subMean[i][j] *= subMean[i][j];

}

}

auto varc = subMean[0];

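for(unsigned long i=1;i<subMean.size();++i){

varc = varc + subMean[i];

}

auto var = varc/subMean.size();

for(unsigned long i=0;i<indim;++i){//the last column is the class, so it is skipped

varianceVec.push_back(pair<unsigned long, double>(i, var[i]));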

}

std::sort(varianceVec.begin(), varianceVec.end(), [](pair<unsigned long, double> &left, pair<unsigned long, double> &right) {

return left.second < right.second;

});

for(const auto& variance:varianceVec){

axisVec.push(variance.first);//the maximum variance is on the top

}

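cout<<"createSplitAxis over"<<endl;

}

void Knn::setRoot(){

auto axis = axisVec.top();

axisVec.pop();

std::sort(trainData.begin(), trainData.end(), [&axis](vector<double> &left, vector<double> &right) {

return left[axis]<right[axis];

});

unsigned long mid = trainData.size()/2;

for(unsigned long i = 0; i < trainData.size(); ++i){

if(i!=mid){

if (i<mid)

root->leftTreeVal.push_back(trainData[i]);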

else

root->rightTreeVal.push_back(trainData[i]);

} else{

root->val.assign(trainData[i].begin(),trainData[i].end()-1);

root->splitVal = trainData[i][axis];

root->axis = axis;

root->cls = *(trainData[i].end()-1);

}

}

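cout<<"root node set over"<<endl;

}

KdtreeNode* Knn::buildTree(KdtreeNode*root, vector<vector<double>>& data, stack<unsigned long>& axisStack) {

stack<unsigned long> aS;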

if(axisStack.empty())

aS=axisVec;

else

aS=axisStack;

auto node = new KdtreeNode();

node->parent = root;

auto axis2 = aS.top();

aS.pop();

std::sort(data.begin(), data.end(), [&axis2](vector<double> &left, vector<double> &right) {

return left[axis2]<right[axis2];

});

unsigned long mid = data.size()/2;

if(node->leftTreeVal.empty()&&node->rightTreeVal.empty()){

for(unsigned long i = 0; i < data.size(); ++i){

if(i!=mid){

if (i<mid)

node->leftTreeVal.push_back(data[i]);

else

node->rightTreeVal.push_back(data[i]);

} else{

node->val.assign(data[i].begin(),data[i].end()-1);

node->splitVal = data[i][axis2];

node->axis = axis2;

node->cls = *(data[i].end()-1);

}

}

}

if(!node->leftTreeVal.empty()){

node->left = buildTree(node, node->leftTreeVal, aS);

}

if(!node->rightTreeVal.empty()){

node->right = buildTree(node, node->rightTreeVal, aS);

}

return node;

}
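`createSplitAxis` orders the split axes by variance so that the axis with the largest variance ends up on top of the stack. The ordering step on its own can be sketched as below; `axesByVariance` is a hypothetical helper name, and the sketch assumes each row stores the features followed by the class label in the last column, as in the data files used here.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// Returns the feature-axis indices ordered from largest to smallest variance.
// The last column of every row is treated as the label and ignored.
std::vector<std::size_t> axesByVariance(const std::vector<std::vector<double>>& rows) {
    if (rows.empty() || rows[0].size() < 2) return {};
    const std::size_t dim = rows[0].size() - 1;
    std::vector<double> mean(dim, 0.0), var(dim, 0.0);
    for (const auto& r : rows)
        for (std::size_t j = 0; j < dim; ++j) mean[j] += r[j] / rows.size();
    for (const auto& r : rows)
        for (std::size_t j = 0; j < dim; ++j)
            var[j] += (r[j] - mean[j]) * (r[j] - mean[j]) / rows.size();
    std::vector<std::size_t> axes(dim);
    std::iota(axes.begin(), axes.end(), 0);  // 0, 1, ..., dim-1
    std::sort(axes.begin(), axes.end(),
              [&var](std::size_t a, std::size_t b) { return var[a] > var[b]; });
    return axes;
}
```

Pushing the returned indices onto a stack in reverse order would reproduce the "maximum variance on top" layout.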

void Knn::showTree(KdtreeNode* root) {

if(root == nullptr)

return;

cout<<"the feature is ";

for(const auto& c:root->val)

cout<<c<<" ";

cout<<"the class is "<<root->cls<<endl;

showTree(root->left);

showTree(root->right);

}

void Knn::findKNearest(vector<double>& testD){

cout<<"the test data is(the last is class) ";

for(const auto& c:testD)

cout<<c<<" ";

cout<<endl;

stack<KdtreeNode*> path;

auto curNode = root;

while(curNode!= nullptr){

path.push(curNode);

if(testD[curNode->axis]<=curNode->splitVal)

curNode = curNode->left;

else

curNode = curNode->right;

}

while(!path.empty()){

auto curN = path.top();

path.pop();

vector<double> testDF(testD.begin(),testD.end()-1);

double dis=0.0;

dis = computeDis(testDF, curN->val);

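if(maxHeap.size()<K){

maxHeap.push(std::pair<double, KdtreeNode*>(dis, curN));

}

else{

if(dis<maxHeap.top().first){

maxHeap.pop();

maxHeap.push(std::pair<double, KdtreeNode*>(dis, curN));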

}

}

if(path.empty())

continue;

auto curNparent = path.top();

KdtreeNode* curNchild;

if(testDF[curNparent->axis]<=curNparent->splitVal)

curNchild = curNparent->right;

else

curNchild = curNparent->left;

if(curNchild == nullptr)

continue;

double childDis = computeDis(testDF, curNchild->val);

if(childDis<maxHeap.top().first){

maxHeap.pop();

maxHeap.push(std::pair<double, KdtreeNode*>(childDis, curNchild));

while(curNchild!= nullptr){//add subtree to path

path.push(curNchild);

if(testD[curNchild->axis]<=curNchild->splitVal)

curNchild = curNchild->left;

else

curNchild = curNchild->right;

}

}

}

}

double Knn::computeDis(const vector<double>& v1, const vector<double>& v2){

auto v = v1 - v2;

double di = v*v;

return di;

}
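`findKNearest` keeps its candidates in a max-heap keyed on the squared distance returned by `computeDis`, so the farthest current candidate is always the one to evict. The same bookkeeping, without the kd-tree, can be sketched as a brute-force search; `squaredDistance` and `kNearestBruteForce` are hypothetical names, and the sketch returns point indices rather than tree nodes.

```cpp
#include <cstddef>
#include <queue>
#include <utility>
#include <vector>

double squaredDistance(const std::vector<double>& a, const std::vector<double>& b) {
    double d = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) d += (a[i] - b[i]) * (a[i] - b[i]);
    return d;
}

// Returns the indices of the k points closest to `query`, nearest first.
std::vector<std::size_t> kNearestBruteForce(const std::vector<std::vector<double>>& points,
                                            const std::vector<double>& query,
                                            std::size_t k) {
    // Max-heap on distance: the top element is the farthest kept candidate.
    std::priority_queue<std::pair<double, std::size_t>> heap;
    for (std::size_t i = 0; i < points.size(); ++i) {
        const double d = squaredDistance(points[i], query);
        if (heap.size() < k) {
            heap.push({d, i});
        } else if (k > 0 && d < heap.top().first) {
            heap.pop();  // evict the farthest candidate
            heap.push({d, i});
        }
    }
    // Popping yields farthest first, so fill the result from the back.
    std::vector<std::size_t> result(heap.size());
    for (std::size_t pos = heap.size(); pos > 0; --pos) {
        result[pos - 1] = heap.top().second;
        heap.pop();
    }
    return result;
}
```

A brute-force version like this is also a convenient reference for checking the output of a kd-tree search on small data sets.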

void Knn::DeleteRoot(KdtreeNode *pRoot) //delete the whole tree, starting from the root node

{

if (pRoot == nullptr) {

return;

}

KdtreeNode *pLeft = pRoot->left;

KdtreeNode *pRight = pRoot->right;

delete pRoot;

pRoot = nullptr;

if (pLeft) {

DeleteRoot(pLeft);

}

if (pRight) {

DeleteRoot(pRight);

}

return;

}

Knn::~Knn(){

DeleteRoot(root);

}

/*perceptrondata.txt

3 4 1

1 1 -1

2 4 1

1 2 -1

1 5 1

2 0.5 -1

1 6 1

1 2.5 -1

0.5 6 1

0 1 -1

2 2.5 1

0.5 1 -1

1 4 1

1.5 1 -1

2.7 1 1

2 3.5 1

0.8 3 -1

0.1 4 -1

*/

void Knn::run(){

getData("../data/perceptrondata.txt");

createTrainTest(0.6);

createSplitAxis();

setRoot();

root->left = buildTree(root, root->leftTreeVal, axisVec);

root->right = buildTree(root, root->rightTreeVal, axisVec);

cout<<"show the tree in preorder traversal."<val)

cout << c << " ";

cout << endl;

maxHeap.pop();

}

}

}

knn.h

#ifndef MACHINE_LEARNING_KNN_H

#define MACHINE_LEARNING_KNN_H

#include <queue>

#include <stack>

#include <utility>

#include <vector>

#include "model_base.h"

struct KdtreeNode {

std::vector<double> val;//store val for feature

int cls;//store class

unsigned long axis;//split axis

double splitVal;//mid val for axis

std::vector<std::vector<double>> leftTreeVal;

std::vector<std::vector<double>> rightTreeVal;

KdtreeNode* parent;

KdtreeNode* left;

KdtreeNode* right;

KdtreeNode(): cls(0), axis(0), splitVal(0.0), parent(nullptr), left(nullptr), right(nullptr){};

};

class Knn: public Base{

private:

std::stack<unsigned long> axisVec;

KdtreeNode* root = new KdtreeNode();

unsigned long K;

std::priority_queue<std::pair<double, KdtreeNode*>> maxHeap;

public:

virtual void getData(const std::string& filename);

virtual void run();

void createTrainTest(const float& trainTotalRatio);

KdtreeNode* buildTree(KdtreeNode*root, std::vector<std::vector<double>>& data, std::stack<unsigned long>& axisstack);

void setRoot();

void createSplitAxis();

KdtreeNode* getRoot(){return root;}

void setK(unsigned long k){K = k;}

void findKNearest(std::vector<double>& testD);

double computeDis(const std::vector<double>& v1, const std::vector<double>& v2);

void DeleteRoot(KdtreeNode *pRoot);

void showTree(KdtreeNode* root);

~Knn();

};

#endif //MACHINE_LEARNING_KNN_H

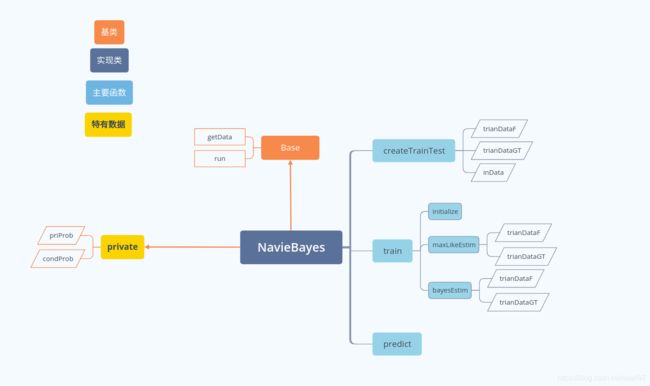

Naive Bayes

main.cpp

#include <iostream>

#include <vector>

#include "NavieBayes.h"

using std::vector;

using std::cout;

using std::endl;

int main() {

Base* obj = new NavieBayes();

obj->run();

delete obj;

return 0;

}

naviebayes.cpp

#include "NavieBayes.h"

using std::string;

using std::vector;

using std::pair;

using std::map;

using std::set;

void NavieBayes::getData(const std::string &filename) {

//load data to a vector

std::vector<double> temData;

double onepoint;

std::string line;

inData.clear();

std::ifstream infile(filename);

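std::cout<<"reading ..."<<std::endl;

while(!infile.eof()){

temData.clear();

std::getline(infile, line);

if(line.empty())

continue;

std::stringstream stringin(line);

while(stringin >> onepoint){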

temData.push_back(onepoint);

}

indim = temData.size();

indim -= 1;

inData.push_back(temData);

}

std::cout<<"total data is "< trainf;

trainf.assign(data.begin(), data.end()-1);

trainDataF.push_back(trainf);

trainDataGT.push_back(*(data.end()-1));

}

for (const auto& data:testData){

std::vector<double> testf;

testf.assign(data.begin(), data.end()-1);

testDataF.push_back(testf);

testDataGT.push_back(*(data.end()-1));

}

}

void NavieBayes::maxLikeEstim(){

for(const auto& gt: trainDataGT){

priProb[std::to_string(gt)] += 1;

}

for(unsigned long i=0;i, double> m;

for(const auto& xval : xVal[i])

for(const auto& yval:yVal){

auto cond = std::make_pair(std::to_string(xval), std::to_string(yval));

m[cond]=0;

}

condProb.push_back(m);

}

for(const auto& val:yVal)

priProb[std::to_string(val)]=0;

}

void NavieBayes::train(const string& estim="mle"){

//train actually is create priProb and condProb.

if(xVal.empty() && yVal.empty()){

cout<<"please set range of x and y first."<pre){

pre = pr;

y_t = y;

}

}

cout<<"the test data predict class is "<> x {{1,2,3},{4,5,6}};//in the book's example the second feature takes letter values; they are replaced here with 4, 5, 6 for convenience

vector y {-1,1};

setInVal(x);

setOutVal(y);

train("byse");

vector<double> testDF {2, 4};

testDataF.push_back(testDF);

testDataGT.push_back(-1);

predict();

}

NavieBayes.h

#ifndef MACHINE_LEARNING_NAVIEBAYES_H

#define MACHINE_LEARNING_NAVIEBAYES_H

#include

#include

#include

#include

Decision Tree

main.h

#include <iostream>

#include <vector>

#include "DecisionTree.h"

using std::vector;

using std::cout;

using std::endl;

int main() {

Base* obj = new DecisionTree();

obj->run();

delete obj;

return 0;

}

decisiontree.cpp

#include "DecisionTree.h"

using std::string;

using std::vector;

using std::pair;

using std::map;

using std::priority_queue;

using std::set;

void DecisionTree::getData(const string &filename) {

// load data to a vector

std::vector<double> temData;

double onepoint;

std::string line;

inData.clear();

std::ifstream infile(filename);

std::cout << "reading ..." << std::endl;

while (!infile.eof()) {

temData.clear();

std::getline(infile, line);

if (line.empty())

continue;

std::stringstream stringin(line);

while (stringin >> onepoint) {

temData.push_back(onepoint);

}

indim = temData.size();

indim -= 1;

inData.push_back(temData);

}

for (int i = 0; i < indim; ++i)

features.push_back(i); // initialize features

std::cout << "total data is " << inData.size() < trainf;

trainf.assign(data.begin(), data.end()-1);

trainDataF.push_back(trainf);

trainDataGT.push_back(*(data.end()-1));

}

for (const auto& data:testData){

std::vector<double> testf;

testf.assign(data.begin(), data.end()-1);

testDataF.push_back(testf);

testDataGT.push_back(*(data.end()-1));

}

}

void DecisionTree::initializeRoot(){

root = new DtreeNode();

}

DtreeNode* DecisionTree::buildTree(DtreeNode* node, vector<vector<double>>& valRange) {

if (!node)

return nullptr;

if (features.empty())

return nullptr;

pair<int, double> splitFeatureAndValue = createSplitFeature(valRange);

node->axis = splitFeatureAndValue.first;

node->splitVal = splitFeatureAndValue.second;

set<double> cls_left;

set<double> cls_right;

for (const auto& data : valRange){

if (data[node->axis] == node->splitVal) {

node->leftTreeVal.push_back(data);

cls_left.insert(data.back());

}

else {

node->rightTreeVal.push_back(data);

cls_right.insert(data.back());

}

}

if (cls_left.size()<=1){ //belong to the same class

node->left = new DtreeNode();

node->left->isLeaf = true;

node->left->leafValue = node->leftTreeVal;

}

else if (!node->leftTreeVal.empty()) {

node->left = new DtreeNode();

node->left = buildTree(node->left, node->leftTreeVal);

} else{

return nullptr;

}

if (cls_right.size()<=1){ //belong to the same class

node->right = new DtreeNode();

node->right->isLeaf = true;

node->right->leafValue = node->rightTreeVal;

}

else if (!node->rightTreeVal.empty()){

node->right = new DtreeNode();

node->right = buildTree(node->right, node->rightTreeVal);

} else{

return nullptr;

}

if (!node->right&&!node->left){

node->isLeaf=true;

node->leafValue = valRange;

}

return node;

}

pair<int, double> DecisionTree::createSplitFeature(vector<vector<double>>& valRange){

priority_queue<pair<double, pair<int, double>>, vector<pair<double, pair<int, double>>>, std::greater<pair<double, pair<int, double>>>> minheap;

//pair<double, pair<int, double>>: the first value is the Gini value; in the inner pair the first value is the split

//axis and the second value is the split value

vector<map<double, int>> dataDivByFeature(indim); //one map per axis: the key is a feature value, the value is

//the number of samples that take that feature value

vector<set<double>> featureVal(indim); //store value for each axis

vector<map<pair<double, double>, int>> datDivByFC(indim); //one map per axis: the key is (feature value, class value), the value is

//the number of samples with that feature value and class

set<double> cls; //the set of class labels

for(const auto& featureId:features) {

if (featureId<0)

continue;

map<double, int> dataDivByF;

map<pair<double, double>, int> dtDivFC;

set<double> fVal;

for (auto& data:valRange){ //below data[featureId] is the value of one feature axis, data.back() is class value

cls.insert(data.back());

fVal.insert(data[featureId]);

if (dataDivByF.count(data[featureId]))

dataDivByF[data[featureId]] += 1;

else

dataDivByF[data[featureId]] = 1; //the first occurrence counts as one sample

if (dtDivFC.count(std::make_pair(data[featureId], data.back())))

dtDivFC[std::make_pair(data[featureId], data.back())] += 1;

else

dtDivFC[std::make_pair(data[featureId], data.back())] = 1; //the first occurrence counts as one sample

}

featureVal[featureId] = fVal;

dataDivByFeature[featureId] = dataDivByF;

datDivByFC[featureId] = dtDivFC;

}

for (auto& featureId: features) { // for each feature axis

if (featureId<0)

continue;

for (auto& feVal: featureVal[featureId]){ //for each feature value

double gini1 = 0 ;

double gini2 = 0 ;

double prob1 = dataDivByFeature[featureId][feVal]/double(valRange.size());

double prob2 = 1 - prob1;

for (auto& c : cls){ //for each class

double pro1 = double(datDivByFC[featureId][std::make_pair(feVal, c)])/dataDivByFeature[featureId][feVal];

gini1 += pro1*(1-pro1);

int numC = 0;

for (auto& feVal2: featureVal[featureId])

numC += datDivByFC[featureId][std::make_pair(feVal2, c)];

double pro2 = double(numC-datDivByFC[featureId][std::make_pair(feVal, c)])/(valRange.size()-dataDivByFeature[featureId][feVal]);

gini2 += pro2*(1-pro2);

}

double gini = prob1*gini1+prob2*gini2;

minheap.push(std::make_pair(gini, std::make_pair(featureId, feVal)));

}

}

features[minheap.top().second.first]=-1;

return minheap.top().second;

}

void DecisionTree::showTree(DtreeNode* node) {

if(node == nullptr)

return;

cout<<"the leaf class is "<< bool(node->isLeaf) <<" the axis is "<<node->axis<<" the split value is "<<node->splitVal<<endl;

if(node->isLeaf){

for (auto& data : node->leafValue){

cout<<"leaf value are(the last value is class): ";

for (auto& d:data)

cout<<d<<" ";

cout<<endl;

}

}

showTree(node->left);

showTree(node->right);

}

/*decisiontree.txt

1 2 2 3 2

1 2 2 2 2

1 1 2 2 1

1 1 1 3 1

1 2 2 3 2

2 2 2 3 2

2 2 2 2 2

2 1 1 2 1

2 2 1 1 1

2 2 1 1 1

3 2 1 1 1

3 2 1 2 1

3 1 2 2 1

3 1 2 3 1

3 2 2 2 2

*/

void DecisionTree::run() {

//remember to change the sample data path

getData("../data/decisiontree.txt");

createTrainTest();

initializeRoot();

buildTree(root, trainData);

showTree(root);

}

DecisionTree.h

#ifndef MACHINE_LEARNING_DECISIONTREE_H

#define MACHINE_LEARNING_DECISIONTREE_H

#include

#include

#include

#include

Logistic Regression

https://github.com/tobeprozy/MachineLearning_Python

main.h

#include <iostream>

#include <vector>

#include "Logistic.h"

using std::vector;

using std::cout;

using std::endl;

int main() {

Base* obj = new Logistic();

obj->run();

delete obj;

return 0;

}

logistic.cpp

#include "Logistic.h"

using std::string;

using std::vector;

using std::pair;

void Logistic::getData(const string &filename){

//load data to a vector

std::vector<double> temData;

double onepoint;

std::string line;

inData.clear();

std::ifstream infile(filename);

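std::cout<<"reading ..."<<std::endl;

while(!infile.eof()){

temData.clear();

std::getline(infile, line);

if(line.empty())

continue;

std::stringstream stringin(line);

while(stringin >> onepoint){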

temData.push_back(onepoint);

}

indim = temData.size();

inData.push_back(temData);

}

std::cout<<"total data is "< trainf;

trainf.assign(data.begin(), data.end()-1);

trainf.push_back(1.0);

trainDataF.push_back(trainf);

trainDataGT.push_back(*(data.end()-1));

}

for (const auto& data:testData){

std::vector<double> testf;

testf.assign(data.begin(), data.end()-1);

testf.push_back(1.0);

testDataF.push_back(testf);

testDataGT.push_back(*(data.end()-1));

}

}

double Logistic::logistic(const vector<double>& data){

double expval = exp(w * data);

return expval/(1.0+expval);

}
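The form exp(z) / (1 + exp(z)) overflows for large positive z: `exp` returns infinity and the quotient becomes NaN. A minimal sketch of an algebraically equivalent evaluation that avoids this is shown below; `stableSigmoid` is a hypothetical name, and `z` stands for the inner product `w * data`.

```cpp
#include <cmath>

// Logistic function evaluated so that exp() is only ever called on a
// non-positive argument, which cannot overflow.
double stableSigmoid(double z) {
    if (z >= 0.0) return 1.0 / (1.0 + std::exp(-z));
    const double e = std::exp(z);
    return e / (1.0 + e);
}
```

Both branches compute the same function; they differ only in which side of zero the exponent falls on.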

void Logistic::initialize(const vector<double>& wInit){

w = wInit;

}

vector<double> Logistic::computeGradient(const vector<double>& trainFeature, double trainGrT){

return -1*trainFeature*(trainGrT-logistic(trainFeature));

}
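`computeGradient` returns −x·(y − σ(w·x)), which is the gradient of the per-sample negative log-likelihood for labels y in {0, 1}. A gradient-descent step therefore subtracts the learning rate times that gradient, regardless of the label. A minimal sketch of one such step, with the hypothetical name `sgdStep` and the bias folded into `x` as a trailing 1.0 (as the feature construction above does):

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// One stochastic gradient-descent step for logistic regression.
// x: feature vector (including the trailing 1.0 for the bias), y: label 0 or 1.
void sgdStep(std::vector<double>& w, const std::vector<double>& x, double y, double lr) {
    double z = 0.0;
    for (std::size_t j = 0; j < w.size(); ++j) z += w[j] * x[j];
    const double p = 1.0 / (1.0 + std::exp(-z));  // predicted P(y = 1 | x)
    // gradient of the loss is (p - y) * x, so step in the opposite direction
    for (std::size_t j = 0; j < w.size(); ++j) w[j] -= lr * (p - y) * x[j];
}
```

Starting from w = (0, 0) with x = (1, 1) and lr = 1, a positive sample moves w to (0.5, 0.5) and a negative sample moves it to (−0.5, −0.5), so the score w·x rises for class 1 and falls for class 0.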

void Logistic::train(const int& step, const double& lr) {

int count = 0;

for(int i=0; i<step; ++i){

vector<double> grad = computeGradient(trainDataF[count], trainDataGT[count]);

//gradient descent: step against the gradient for both classes

w = w - lr*grad;

auto val = trainDataF[count]*w;

double loss = -1*trainDataGT[count]*val + log(1 + exp(val));

cout<<"step "<& inputData, const double& GT){

cout<<"The right class is "<=0.5){

std::cout<<"The predict class is 1"< init (indim, 0.5);

initialize(init);

train(20, 1.0);//20 is steps and 1.0 is learning rate

for(int i=0; i Logistic.h

#ifndef MACHINE_LEARNING_LOGISTIC_H

#define MACHINE_LEARNING_LOGISTIC_H

#include <cmath>

#include <vector>

#include "model_base.h"

class Logistic: public Base{

private:

vector<double> w;

public:

virtual void getData(const std::string &filename);

virtual void run();

double logistic(const std::vector<double>& data);

void createTrainTest();

void initialize(const std::vector<double>& );

void train(const int& step, const double& lr);

std::vector<double> computeGradient(const std::vector<double>& trainFeature, double trainGrT);

double predict(const std::vector<double>& inputData, const double& GT);

};

#endif //MACHINE_LEARNING_LOGISTIC_H

Support Vector Machine

main.cpp

#include <iostream>

#include <vector>

#include "SVM.h"

using std::vector;

using std::cout;

using std::endl;

int main() {

Base* obj = new SVM();

obj->run();

delete obj;

return 0;

}

svm.cpp

#include "SVM.h"

using std::string;

using std::vector;

using std::pair;

void SVM::getData(const string &filename){

//load data to a vector

std::vector<double> temData;

double onepoint;

std::string line;

inData.clear();

std::ifstream infile(filename);

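std::cout<<"reading ..."<<std::endl;

while(!infile.eof()){

temData.clear();

std::getline(infile, line);

if(line.empty())

continue;

std::stringstream stringin(line);

while(stringin >> onepoint){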

temData.push_back(onepoint);

}

indim = temData.size()-1;

inData.push_back(temData);

}

std::cout<<"total data is "< trainf;

trainf.assign(data.begin(), data.end()-1);

trainDataF.push_back(trainf);

trainDataGT.push_back(*(data.end()-1));

}

for (const auto& data:testData){

std::vector<double> testf;

testf.assign(data.begin(), data.end()-1);

testDataF.push_back(testf);

testDataGT.push_back(*(data.end()-1));

}

}

void SVM::SMO() {

/*

* this function reference the Platt J. Sequential minimal optimization: A fast algorithm for training support vector machines[J]. 1998.

*/

int numChanged = 0;

int examineAll = 1;

while(numChanged > 0 || examineAll){

numChanged = 0;

if (examineAll){

for (int i=0; i & x1, vector & x2) {

//here use linear kernel

return x1 * x2;

}
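The kernel here is linear. As an illustration of what a drop-in alternative could look like, the sketch below computes a Gaussian (RBF) kernel; `rbfKernel` and its `gamma` parameter are assumptions made for this example and do not appear in SVM.h.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Gaussian (RBF) kernel: exp(-gamma * ||x1 - x2||^2), with gamma > 0.
double rbfKernel(const std::vector<double>& x1, const std::vector<double>& x2, double gamma) {
    double sq = 0.0;
    for (std::size_t i = 0; i < x1.size(); ++i) sq += (x1[i] - x2[i]) * (x1[i] - x2[i]);
    return std::exp(-gamma * sq);
}
```

With a non-linear kernel there is no explicit weight vector, so a prediction has to be computed from the kernel expansion, the sum over training samples of `alpha[j] * y[j] * K(x[j], x)` plus `b`, rather than from `w * x + b`.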

double SVM::computeE(int& i) {

double e = 0;

for(int j =0 ; j < trainDataF.size(); ++j){

e += alpha[j]*trainDataGT[j]*kernel(trainDataF[j], trainDataF[i]);

}

e += b;

e -= trainDataGT[i];

//e = w*trainDataF[i]+b-trainDataGT[i];

return e;

}

pair<double, double> SVM::SMOComputeOB(int& i1, int& i2, double&L, double& H) {

double y1 = trainDataGT[i1];

double y2 = trainDataGT[i2];

double s = y1 * y2;

double f1 = y1 * (E[i1] + b) - alpha[i1] * kernel(trainDataF[i1], trainDataF[i1]) -

s * alpha[i2] * kernel(trainDataF[i1], trainDataF[i2]);

double f2 = y2 * (E[i2] + b) - s * alpha[i1] * kernel(trainDataF[i1], trainDataF[i2]) -

alpha[i2] * kernel(trainDataF[i2], trainDataF[i2]);

double L1 = alpha[i1] + s * (alpha[i2] - L);

double H1 = alpha[i1] + s * (alpha[i2] - H);

double obL = L1 * f1 + L * f2 + 0.5 * L1 * L1 * kernel(trainDataF[i1], trainDataF[i1]) +

0.5 * L * L * kernel(trainDataF[i2], trainDataF[i2]) +

s * L * L1 * kernel(trainDataF[i1], trainDataF[i2]);

double obH = H1 * f1 + H * f2 + 0.5 * H1 * H1 * kernel(trainDataF[i1], trainDataF[i1]) +

0.5 * H * H * kernel(trainDataF[i2], trainDataF[i2]) +

s * H * H1 * kernel(trainDataF[i1], trainDataF[i2]);

return std::make_pair(obL, obH);

}

int SVM::SMOTakeStep(int& i1, int& i2) {

if (i1 == i2)

return 0;

double y1 = trainDataGT[i1];

double y2 = trainDataGT[i2];

double s = y1 * y2;

double L, H;

if (y1 != y2) {

//bounds on the new alpha[i2]: L = max(0, a2 - a1), H = min(C, C + a2 - a1)

L = (alpha[i2] - alpha[i1]) > 0 ? alpha[i2] - alpha[i1] : 0;

H = (alpha[i2] - alpha[i1] + C) < C ? alpha[i2] - alpha[i1] + C : C;

} else {

L = (alpha[i1] + alpha[i2] - C) > 0 ? alpha[i1] + alpha[i2] - C : 0;

H = (alpha[i1] + alpha[i2]) < C ? alpha[i1] + alpha[i2] : C;

}

if (L == H)

return 0;

double k11 = kernel(trainDataF[i1], trainDataF[i1]);

double k12 = kernel(trainDataF[i1], trainDataF[i2]);

double k22 = kernel(trainDataF[i2], trainDataF[i2]);

double eta = k11 + k22 - 2 * k12;

double a2;

if (eta > 0) {

a2 = alpha[i2] + y2 * (E[i1] - E[i2]) / eta;

if (a2 < L)

a2 = L;

else {

if (a2 > H)

a2 = H;

}

} else {

pair<double, double> ob = SMOComputeOB(i1, i2, L, H);

double Lobj = ob.first;

double Hobj = ob.second;

if (Lobj < Hobj - eps)

a2 = L;

else {

if (Lobj > Hobj + eps)

a2 = H;

else

a2 = alpha[i2];

}

}

if (std::abs(a2 - alpha[i2]) < eps * (a2 + alpha[i2] + eps))

return 0;

double a1 = alpha[i1] + s * (alpha[i2] - a2);

double b1;

//please note that the update equation is from Statistical Learning Methods (统计学习方法), p. 130, not the equation in the paper

b1= -E[i1] - y1 * (a1 - alpha[i1]) * kernel(trainDataF[i1], trainDataF[i1]) -

y2 * (a2 - alpha[i2]) * kernel(trainDataF[i1], trainDataF[i2]) + b;

double b2;

b2 = -E[i2] - y1 * (a1 - alpha[i1]) * kernel(trainDataF[i1], trainDataF[i2]) -

y2 * (a2 - alpha[i2]) * kernel(trainDataF[i2], trainDataF[i2]) + b;

double bNew = (b1 + b2) / 2;

b = bNew;

w = w + y1 * (a1 - alpha[i1]) * trainDataF[i1] + y2 * (a2 - alpha[i2]) *

trainDataF[i2];

//this is the linear SVM case, this equation are from the paper equation 22

alpha[i1] = a1;

alpha[i2] = a2;

// vector wtmp (indim);

// for (int i=0; itol && alph2>0)){

int alphNum = 0;

for (auto& a:alpha){

if (a != 0 && a != C)

alphNum++;

}

if (alphNum>1){

double dis = 0;

int i1 ;

for(int j=0;jdis){

i1 = j;

dis = std::abs(E[j]-E[i2]);

}

}

if (SMOTakeStep(i1,i2))

return 1;

}

for (int i = 0; i < alpha.size();++i){

if (alpha[i] != 0 && alpha[i] != C){

int i1 = i;

if (SMOTakeStep(i1, i2))

return 1;

}

}

for(int i = 0; i < trainDataF.size();++i){

int i1 = i;

if (SMOTakeStep(i1, i2))

return 1;

}

}

return 0;

}

void SVM::initialize() {

b = 0;

for(int i=0;i &inputData) {

double p = w*inputData+b;

if(p>0)

return 1.0;

else

return -1.0;

}

/*perceptrondata.txt

3 4 1

1 1 -1

2 4 1

1 2 -1

1 5 1

2 0.5 -1

1 6 1

1 2.5 -1

0.5 6 1

0 1 -1

2 2.5 1

0.5 1 -1

1 4 1

1.5 1 -1

2.7 1 1

2 3.5 1

0.8 3 -1

0.1 4 -1

*/

void SVM::run() {

getData("../data/perceptrondata.txt");

createTrainTest();

train();

cout<<"w and b is: "< SVM.h

#ifndef MACHINE_LEARNING_SVM_H

#define MACHINE_LEARNING_SVM_H

#include <cmath>

#include <utility>

#include <vector>

#include "model_base.h"

class SVM : public Base{

private:

std::vector<double> w;

std::vector<double> alpha;

double b;

std::vector<double> E;

double tol=0.001;

double eps=0.0005;

double C=1.0;

public:

virtual void getData(const std::string &filename);

virtual void run();

void createTrainTest();

void SMO();

int SMOTakeStep(int& i1, int& i2);

int SMOExamineExample(int i2);

double kernel(std::vector<double>& , std::vector<double>&);

double computeE(int& i);

std::pair<double, double> SMOComputeOB(int& i1, int& i2, double&L, double& H);

void initialize();

void train();

double predict(const std::vector<double>& inputData);

};

#endif //MACHINE_LEARNING_SVM_H
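
The threshold update in SMOTakeStep always takes the midpoint of b1 and b2. The usual SMO rule is slightly finer: use b1 when the new alpha1 lies strictly inside (0, C), use b2 when the new alpha2 does, and fall back to the midpoint only when both sit on a bound. The following is a minimal standalone sketch of that rule, not the author's implementation; the `SmoStep` struct and the `updateThreshold` helper are hypothetical names that do not exist in SVM.h.

```cpp
#include <cassert>

// Hypothetical container for the quantities known at the end of one SMO step.
struct SmoStep {
    double E1, E2;         // prediction errors before the step
    double y1, y2;         // labels, +1 or -1
    double da1, da2;       // alpha1_new - alpha1_old, alpha2_new - alpha2_old
    double k11, k12, k22;  // kernel values K(x1,x1), K(x1,x2), K(x2,x2)
    double a1, a2;         // new multipliers
    double bOld;           // threshold before the step
    double C;              // box constraint
};

// Returns the new threshold for the decision function f(x) = sum_i alpha_i y_i K(x_i, x) + b.
double updateThreshold(const SmoStep& s) {
    assert(s.C > 0.0);
    double b1 = -s.E1 - s.y1 * s.da1 * s.k11 - s.y2 * s.da2 * s.k12 + s.bOld;
    double b2 = -s.E2 - s.y1 * s.da1 * s.k12 - s.y2 * s.da2 * s.k22 + s.bOld;
    if (s.a1 > 0.0 && s.a1 < s.C) return b1;  // alpha1 is a free support vector
    if (s.a2 > 0.0 && s.a2 < s.C) return b2;  // alpha2 is a free support vector
    return 0.5 * (b1 + b2);                   // both at a bound: any value in between is valid
}
```

When both multipliers are free, b1 and b2 coincide, so the order of the two checks does not matter.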

adaBoost

https://www.cnblogs.com/pinard/p/6133937.html

main.h

#include <iostream>

#include <vector>

#include "AdaBoost.h"

using std::vector;

using std::cout;

using std::endl;

int main() {

Base* obj = new AdaBoost();

obj->run();

delete obj;

return 0;

}
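
The `new` / `delete` pair in main() is only safe if `Base` declares a virtual destructor, which cannot be checked here because model_base.h is not shown in this part. A hedged sketch of the same pattern with `std::unique_ptr` follows; `ModelBase`, `DemoModel` and `runOnce` are hypothetical stand-ins, not the classes from this repository.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for Base.
struct ModelBase {
    virtual ~ModelBase() = default;  // makes deletion through a ModelBase* well defined
    virtual void run() = 0;
};

// Hypothetical concrete model that records what happened to it.
struct DemoModel : ModelBase {
    bool* destroyed;
    int* runs;
    DemoModel(bool* d, int* r) : destroyed(d), runs(r) {}
    ~DemoModel() override { *destroyed = true; }
    void run() override { ++*runs; }
};

// Runs the model once; the unique_ptr releases it when the function returns.
void runOnce(std::unique_ptr<ModelBase> model) {
    assert(model != nullptr);
    model->run();
}
```

With this shape, main() would reduce to a single call such as `runOnce(std::unique_ptr<ModelBase>(new DemoModel(...)))`, and no explicit `delete` is needed.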

AdaBoost.cpp

#include "AdaBoost.h"

using std::string;

using std::vector;

using std::pair;

void AdaBoost::getData(const string &filename){

//load data to a vector

std::vector<double> temData;

double onepoint;

std::string line;

inData.clear();

std::ifstream infile(filename);

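std::cout<<"reading ..."<<std::endl;

while (std::getline(infile, line)){

temData.clear();

if(line.empty())

continue;

std::stringstream stringin (line);

while (stringin >> onepoint){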

temData.push_back(onepoint);

}

indim = temData.size()-1;

inData.push_back(temData);

}

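std::cout<<"total data is "<<inData.size()<<std::endl;

}

void AdaBoost::createTrainTest() {

// (the statements that split inData into trainData and testData were lost in extraction)

for (const auto& data:trainData){

std::vector<double> trainf;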

trainf.assign(data.begin(), data.end()-1);

featrWeight.push_back(1.0);

trainDataF.push_back(trainf);

trainDataGT.push_back(*(data.end()-1));

}

for (const auto& data:testData){

std::vector<double> testf;

testf.assign(data.begin(), data.end()-1);

testDataF.push_back(testf);

testDataGT.push_back(*(data.end()-1));

}

featrWeight = featrWeight / featrWeight.size();

}

int AdaBoost::computeWeights(Perceptron* classifier) {

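vector<double> trainGT;

// Assumption: the loop body was lost in extraction; here the labels are scaled by the

// sample weights so that the weak learner sees the current distribution.

for(int i = 0; i < trainDataGT.size(); ++i)

trainGT.push_back(trainDataGT[i] * featrWeight[i]);

classifier->setTrainD(trainDataF, trainGT);

classifier->setDim(indim);

classifier->train(100, 0.9);

// weighted training error of this weak classifier

double errorRate = 0;

for(int i = 0; i < trainDataF.size(); ++i) {

if (classifier->predict(trainDataF[i]) != int(trainDataGT[i]))

errorRate += featrWeight[i];

}

if(errorRate==0){

if(clsfWeight.size()==0)

clsfWeight.push_back(1);

return 0;

}

// classifier weight: alpha_m = 0.5 * ln((1 - e_m) / e_m)

double clsW;

clsW = 0.5*std::log((1-errorRate)/errorRate);

clsfWeight.push_back(clsW);

// normalization factor Z_m

double zm=0;

for(int i = 0; i < featrWeight.size(); ++i) {

zm += featrWeight[i] * std::exp(-clsW * trainDataGT[i] * classifier->predict(trainDataF[i]));

}

// w_{m+1,i} = w_{m,i} / Z_m * exp(-alpha_m * y_i * G_m(x_i))

for(int i = 0; i < featrWeight.size(); ++i) {

featrWeight[i] = featrWeight[i] / zm * std::exp(-clsW * trainDataGT[i] * classifier->predict(trainDataF[i]));

}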

return 1;

}

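int AdaBoost::predict(vector<double> &testF) {

double out = 0;

// weighted vote of all weak classifiers

for(int i = 0; i < classifiers.size(); ++i) {

out += clsfWeight[i] * classifiers[i]->predict(testF);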

}

if (out > 0)

return 1;

else

return -1;

}

/*perceptrondata.txt

3 4 1

1 1 -1

2 4 1

1 2 -1

1 5 1

2 0.5 -1

1 6 1

1 2.5 -1

0.5 6 1

0 1 -1

2 2.5 1

0.5 1 -1

1 4 1

1.5 1 -1

2.7 1 1

2 3.5 1

0.8 3 -1

0.1 4 -1

*/

void AdaBoost::run() {

// remember to update the path to the sample data

getData("../data/perceptrondata.txt");

createTrainTest();

int isContinue = 1;

while(isContinue){

Perceptron* cls = new Perceptron();

isContinue = computeWeights(cls);

if(isContinue || classifiers.size()==0)

classifiers.push_back(cls);

else

delete cls; // this classifier is not kept, so release it

}

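// Assumption: only the loop header survived extraction; evaluating the test set as below is a reconstruction.

for(int i=0; i<testDataF.size(); ++i) {

std::cout << "the true class is " << testDataGT[i] << ", the predicted class is " << predict(testDataF[i]) << std::endl;

}

}

AdaBoost.h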

#ifndef MACHINE_LEARNING_ADABOOST_H

#define MACHINE_LEARNING_ADABOOST_H

#include <cmath>

#include "model_base.h"

#include "perceptron.h"

class AdaBoost: public Base {

private:

vector<double> clsfWeight;

vector<double> featrWeight;

vector<Perceptron*> classifiers;

public:

virtual void getData(const std::string &filename);

virtual void run();

void createTrainTest();

int computeWeights(Perceptron* classifier);

int predict(vector<double>& testF);

};

#endif //MACHINE_LEARNING_ADABOOST_H
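
The core of computeWeights is the standard AdaBoost reweighting step: compute the weighted error e, set the classifier weight to 0.5 * ln((1 - e) / e), then multiply each sample weight by exp(-alpha * y * G(x)) and renormalize. A minimal standalone sketch of that step is given below. The function name `reweight` and its signature are assumptions made for illustration, and the predictions are passed in as plain integers rather than coming from a Perceptron.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: returns the classifier weight alpha and rescales `weights` in place.
// labels and predictions hold +1 or -1; weights are expected to sum to 1.
double reweight(std::vector<double>& weights,
                const std::vector<int>& labels,
                const std::vector<int>& predictions) {
    assert(weights.size() == labels.size());
    assert(labels.size() == predictions.size());

    // weighted error rate of the weak classifier
    double err = 0.0;
    for (std::size_t i = 0; i < weights.size(); ++i)
        if (predictions[i] != labels[i]) err += weights[i];
    assert(err > 0.0 && err < 1.0);  // err == 0 needs separate handling, as in computeWeights

    double alpha = 0.5 * std::log((1.0 - err) / err);

    // raise the weight of misclassified samples, lower the rest, then normalize
    double z = 0.0;
    for (std::size_t i = 0; i < weights.size(); ++i) {
        weights[i] *= std::exp(-alpha * labels[i] * predictions[i]);
        z += weights[i];
    }
    for (double& w : weights) w /= z;
    return alpha;
}
```

A useful sanity check on this update is that the misclassified samples always carry a total weight of 0.5 afterwards, so the next weak learner cannot succeed by repeating the previous one.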

GMM

main.h

#include <iostream>

#include <vector>

#include "GMM.h"

using std::vector;

using std::cout;

using std::endl;

int main() {

Base* obj = new GMM();

obj->run();

delete obj;

return 0;

}

GMM.cpp

#include "GMM.h"

using std::string;

using std::vector;

using std::cout;

using std::endl;

void GMM::getData(const std::string &filename) {

//load data to a vector

std::vector<double> temData;

double onepoint;

std::string line;

inData.clear();

std::ifstream infile(filename);

std::cout << "reading ..." << std::endl;

// reading in the loop condition stops cleanly at end of file

while (std::getline(infile, line)) {

temData.clear();

if(line.empty())

continue;

std::stringstream stringin (line);

while (stringin >> onepoint) {

temData.push_back(onepoint);

}

indim = temData.size();

indim -= 1;

inData.push_back(temData);

}

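std::cout<<"total data is "<<inData.size()<<std::endl;

}

void GMM::createTrainTest() {

// (the statements that split inData into trainData and testData were lost in extraction)

for (const auto& data:trainData){

std::vector<double> trainf;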

trainf.assign(data.begin(), data.end()-1);

trainDataF.push_back(trainf);

trainDataGT.push_back(*(data.end()-1));

}

for (const auto& data:testData){

std::vector<double> testf;

testf.assign(data.begin(), data.end()-1);

testDataF.push_back(testf);

testDataGT.push_back(*(data.end()-1));

}

}

double GMM::getDet(const vector<vector<double>> &mat, int ignoreCol=-1) {

// compute determinant of a matrix

if (mat.empty())

throw "mat must be a Square array";

if (mat.size() == 1) {

return mat[0][0];

}

if (mat.size() == 2) {

if (mat[0].size() != 2 || mat[1].size() != 2)

throw "mat must be a Square array";

return mat[0][0]*mat[1][1] - mat[0][1]*mat[1][0];

}

double det = 0;

for (int numCol = 0; numCol < mat.size(); ++numCol) {

// below is to compute sub mat.

vector<vector<double>> newMat;

ignoreCol = numCol;

for (int i = 0 ; i < mat.size(); ++i) {

vector<double> matRow;

for (int j = 0; j < mat.size(); ++j) {

if (i == 0 || j == ignoreCol)

continue;

matRow.push_back(mat[i][j]);

}

if (matRow.size()!=0)

newMat.push_back(matRow);

}

int factor;

factor = numCol%2 == 0 ? 1 : -1;

det += factor*mat[0][numCol]*getDet(newMat, numCol);

}

return det;

}

vector<vector<double>> GMM::matInversion(vector<vector<double>> &mat) {

// compute Inversion of a matrix

double det = getDet(mat);

if (std::abs(det)<1e-10)

std::cerr<< "det of mat must not be 0" << endl;

vector<vector<double>> invMat (mat.size(), vector<double>(mat.size(), 0));

for (int i = 0 ; i < invMat.size(); ++i) {

for (int j = 0; j < invMat.size(); ++j) {

// below is to compute sub mat.

vector<vector<double>> newMat;

for (int x = 0; x < mat.size(); ++x) {

vector<double> matRow;

for (int y = 0; y < mat.size(); ++y) {

if (x == i || y == j)

continue;

// copy the entry at (x, y); the minor excludes row i and column j

matRow.push_back(mat[x][y]);

}

if (!matRow.empty())

newMat.push_back(matRow);

}

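// adjugate entry: the cofactor of (i, j) carries the sign (-1)^(i+j) and lands at the transposed position

double sign = ((i + j) % 2 == 0) ? 1.0 : -1.0;

invMat[j][i] = sign * getDet(newMat) / det;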

}

}

return invMat;

}

double GMM::gaussian(vector<double>& muI, vector<vector<double>>& sigmaI,

vector<double>& observeValue) {

vector<double> xMinusMu = observeValue - muI;

vector<double> rightMul;

vector<vector<double>> matInvers = transpose(matInversion(sigmaI));

// for convenience, the inverse is transposed so that operator* (a dot product) can be applied row by row

for (auto& vec : matInvers) {

rightMul.push_back(xMinusMu*vec);

}

double finalMul = rightMul*xMinusMu;

double det = getDet(sigmaI);

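double gaussianVal;

const double pi = 3.14159265358979323846;

// indim / 2.0 avoids integer division when indim is odd

gaussianVal = 1 / (std::pow(2 * pi, indim / 2.0) * std::pow(det, 0.5)) * std::exp(-0.5 * finalMul);

return gaussianVal;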

}

void GMM::EMAlgorithm(vector<double> &alphaOld, vector<vector<vector<double>>> &sigmaOld,

vector<vector<double>> &muOld) {

// compute gamma

for (int i = 0; i < trainDataF.size(); ++i) {

double probSum = 0;

for (int l = 0; l < alpha.size(); ++l) {

double gas = gaussian(muOld[l], sigmaOld[l], trainDataF[i]);

probSum += alphaOld[l] * gas;

}

for (int k = 0; k < alpha.size(); ++k) {

double gas = gaussian(muOld[k], sigmaOld[k], trainDataF[i]);

gamma[i][k] = alphaOld[k] * gas / probSum;

}

}

// update mu, sigma, alpha

for (int k = 0; k < alpha.size(); ++k) {

vector<double> muNew;

vector<vector<double>> sigmaNew;

double alphaNew;

vector<double> muNumerator;

double sumGamma = 0.0;

for (int i = 0; i < trainDataF.size(); ++i) {

sumGamma += gamma[i][k];

if (i==0) {

muNumerator = gamma[i][k] * trainDataF[i];

}

else {

muNumerator = muNumerator + gamma[i][k] * trainDataF[i];

}

}

muNew = muNumerator / sumGamma;

for (int i = 0; i < trainDataF.size(); ++i) {

if (i==0) {

auto temp1 = gamma[i][k]/ sumGamma * (trainDataF[i] - muNew);

auto temp2 = trainDataF[i] - muNew;

sigmaNew = vecMulVecToMat(temp1, temp2);

}

else {

auto temp1 = gamma[i][k] / sumGamma * (trainDataF[i] - muNew);

auto temp2 = trainDataF[i] - muNew;

sigmaNew = sigmaNew + vecMulVecToMat(temp1, temp2);

}

}

alphaNew = sumGamma / trainDataF.size();

mu[k] = muNew;

sigma[k] = sigmaNew;

alpha[k] = alphaNew;

}

}

void GMM::train(int steps, int k) {

// Initialize the variable

if (alpha.empty() && mu.empty() && sigma.empty() && gamma.empty()) {

for (int i = 0; i < k; ++i) {

alpha.push_back(1.0/k);

for (int index = 0; index < trainDataGT.size(); ++index){

if((int)trainDataGT[index] == i+1) {

mu.push_back(trainDataF[index]);

break;

}

}

vector<vector<double>> sigm (indim, vector<double> (indim));

for (int row = 0; row < indim; ++row) {

for (int col = 0; col < indim; ++col){

if (row == col)

sigm[row][col] = 0.1;

}

}

sigma.push_back(sigm);

}

for (int i = 0; i < trainDataF.size(); ++i) {

vector<double> gammaTemp;

for (int j = 0; j < k; ++j)

gammaTemp.push_back(1.0/(trainDataF.size() * k));

gamma.push_back(gammaTemp);

}

}

for (int step = 0; step < steps ; ++step)

EMAlgorithm(alpha, sigma, mu);

vector<vector<double>> vote (alpha.size(), vector<double> (alpha.size()));

for (int i = 0; i < trainDataF.size(); ++i) {

double prob = 0.0;

int index = -1;

for (int l = 0; l < alpha.size(); ++l) {

double probk = gaussian(mu[l], sigma[l], trainDataF[i]);

if (probk > prob) {

prob = probk;

index = l;

}

}

int cls = (int)trainDataGT[i]-1;

vote[index][cls] += 1;

}

gaussVote = vote;

}

int GMM::predict(vector<double>& testF, double& testGT) {

cout << "the true class is " << testGT << endl;

double prob = 0.0;

int index = -1;

for (int k = 0; k < alpha.size(); ++k) {

double probk = gaussian(mu[k], sigma[k], testF);

if (probk > prob) {

prob = probk;

index = k;

}

}

int pred = std::distance(gaussVote[index].begin(),

std::max_element(gaussVote[index].begin(), gaussVote[index].end()));

cout << "the predicted class is " << pred+1 << endl;

return pred;

}

/*GMM.txt

1 1 1

1 1.5 1

1 2.5 1

2.5 4 2

2.5 5 2

3 3 2

4 1.5 3

4 2 3

5 1 3

2 2 1

1.5 2 1

1.5 4 1

2.5 6 2

3 4 2

3 5 2

4 5 2

4.5 1 3

4.5 1.5 3

4.5 2 3

5 2 3

2.5 1 1

*/

void GMM::run() {

// remember to update the path to the sample data

getData("../data/GMM.txt");

createTrainTest();

train(10, 3);

for(int i = 0; i < testDataF.size(); ++i) {

predict(testDataF[i], testDataGT[i]);

}

}

GMM.h

//

// Created by wyb on 19-2-27.

//

#ifndef MACHINE_LEARNING_GMM_H

#define MACHINE_LEARNING_GMM_H

#include <string>

#include <vector>

#include "model_base.h"

class GMM : public Base {

private:

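std::vector<double> alpha;

std::vector<std::vector<std::vector<double>>> sigma;

std::vector<std::vector<double>> mu;

std::vector<std::vector<double>> gamma;

std::vector<std::vector<double>> gaussVote;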

public:

virtual void getData(const std::string &filename);

virtual void run();

void createTrainTest();

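void EMAlgorithm(std::vector<double>& alphaOld,

std::vector<std::vector<std::vector<double>>>& sigmaOld,

std::vector<std::vector<double>>& muOld);

void train(int steps, int k);

double gaussian(std::vector<double>& muI, std::vector<std::vector<double>>& sigmaI,

std::vector<double>& observeValue);

double getDet(const std::vector<std::vector<double>>& mat, int ignoreCol);

std::vector<std::vector<double>> matInversion(std::vector<std::vector<double>>& mat);

int predict(std::vector<double>& testF, double& testGT);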

};

#endif //MACHINE_LEARNING_GMM_H
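
The Gaussian density used in the E-step depends on three pieces that are easy to get subtly wrong: the cofactor determinant, the adjugate inverse with its (-1)^(i+j) sign, and the normalization constant (2*pi)^(d/2) * sqrt(det(Sigma)). The sketch below puts them together in a self-contained form so each piece can be checked in isolation. It is an illustration under stated assumptions rather than the GMM class itself: `Matrix`, `minorOf`, `det`, `inverse` and `gaussianPdf` are hypothetical free functions, and plain loops replace the overloaded vector operators from model_base.h.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Copy of m with row r and column c removed.
Matrix minorOf(const Matrix& m, std::size_t r, std::size_t c) {
    Matrix out;
    for (std::size_t i = 0; i < m.size(); ++i) {
        if (i == r) continue;
        std::vector<double> row;
        for (std::size_t j = 0; j < m.size(); ++j)
            if (j != c) row.push_back(m[i][j]);
        out.push_back(row);
    }
    return out;
}

// Determinant by Laplace expansion along the first row (factorial cost, fine for tiny matrices).
double det(const Matrix& m) {
    assert(!m.empty());
    if (m.size() == 1) return m[0][0];
    double d = 0.0;
    for (std::size_t c = 0; c < m.size(); ++c) {
        double sign = (c % 2 == 0) ? 1.0 : -1.0;
        d += sign * m[0][c] * det(minorOf(m, 0, c));
    }
    return d;
}

// Inverse as adjugate divided by determinant.
Matrix inverse(const Matrix& m) {
    double d = det(m);
    assert(std::fabs(d) > 1e-12);  // singular matrices have no inverse
    std::size_t n = m.size();
    Matrix inv(n, std::vector<double>(n, 0.0));
    if (n == 1) { inv[0][0] = 1.0 / d; return inv; }
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j) {
            double sign = ((i + j) % 2 == 0) ? 1.0 : -1.0;
            inv[j][i] = sign * det(minorOf(m, i, j)) / d;  // note the transposed index
        }
    return inv;
}

// Multivariate normal density N(x | mu, sigma).
double gaussianPdf(const std::vector<double>& x, const std::vector<double>& mu,
                   const Matrix& sigma) {
    const double pi = 3.14159265358979323846;
    std::size_t n = x.size();
    Matrix inv = inverse(sigma);
    double quad = 0.0;  // (x - mu)^T * sigma^-1 * (x - mu)
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j)
            quad += (x[i] - mu[i]) * inv[i][j] * (x[j] - mu[j]);
    return std::exp(-0.5 * quad) /
           std::sqrt(std::pow(2.0 * pi, static_cast<double>(n)) * det(sigma));
}
```

For covariance matrices larger than a handful of dimensions, a Cholesky or LU factorization would be the usual replacement for the cofactor expansion, since the expansion's cost grows factorially.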