Implementing Simple Linear Regression in MATLAB

Contents

I. Cost function (CostFunction)

II. Gradient descent (gradientDescent)

III. Fitting a straight line

IV. Plot result

Apology: the derivation of the formulas calls for LaTeX, which I am not very good at, so ...

I. Cost function (CostFunction)
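For reference, the quantity that myCostFunction below computes can be written out as follows. This is a sketch in standard notation, not the author's own derivation; the symbols are assumptions introduced here: m is the number of training examples, X is the m-by-2 design matrix with a leading column of ones, and theta is the parameter column vector.

```latex
\[
\hat{y} = X\theta, \qquad
J(\theta) = \frac{1}{2m}\sum_{i=1}^{m}\left(\hat{y}^{(i)} - y^{(i)}\right)^{2}
          = \frac{1}{2m}\,(X\theta - y)^{\top}(X\theta - y)
\]
```

The code follows the summation form step by step: yhat, then the residual, then its element-wise square, then the sum divided by 2m.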

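function J = myCostFunction(X, y, theta)

% Squared-error cost: J = sum((X*theta - y).^2) / (2*m)

m = size(y, 1);        % number of training examples

yhat = X * theta;      % predictions

delta = yhat - y;      % residuals

delta = delta.^2;      % squared residuals

dsum = sum(delta);     % sum over the column vector

J = dsum / (2*m);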

end

II. Gradient descent (gradientDescent)
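The update that gradientDescent performs can be read off from the gradient of the squared-error cost. The following is a sketch in standard notation (the symbols are assumptions, with X the design matrix, m the number of examples, and alpha the step size), not a derivation given by the author.

```latex
\[
\frac{\partial J}{\partial \theta_j}
  = \frac{1}{m}\sum_{i=1}^{m}\left(\hat{y}^{(i)} - y^{(i)}\right)x_j^{(i)}
\quad\Longrightarrow\quad
\nabla_{\theta} J = \frac{1}{m}\,X^{\top}(X\theta - y)
\]
\[
\theta \leftarrow \theta - \alpha\,\nabla_{\theta} J
\]
```

In the code, X'*(yhat - y) divided by m is the gradient vector, and the last line of the loop is the update.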

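function theta = gradientDescent(X, y, theta, alpha, iter)

% Batch gradient descent: repeat theta = theta - alpha * gradient, iter times

m = size(y, 1);                 % number of training examples

for i = 1:iter

    yhat = X * theta;           % predictions with the current theta

    err = yhat - y;             % residuals (renamed from error, which shadows a built-in)

    delta = (1/m) * (X' * err); % gradient of the cost with respect to theta

    theta = theta - alpha * delta;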

    end

end

III. Fitting a straight line

function myLinearRegression()

data=load('ex1data1.txt');

x=data(:,1); % the x data (first column)

y=data(:,2); % the y data (second column)

figure,axis equal ,scatter(x,y,'r*');

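m = size(y, 1);          % number of training examples

%n=length(y)

X = [ones(m, 1), x];     % design matrix with a leading column of ones

theta = [0; 0];          % 2-by-1 matrix (two rows, one column)

J = myCostFunction(X, y, theta)   % initial cost, printed to the command window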

iter=1500;

alpha=0.01;

theta=gradientDescent(X,y,theta,alpha,iter);

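xval = max(x);

X = 1:0.1:xval;                  % x values at which to draw the fitted line (reuses the name X)

XX = [ones(size(X,2), 1), X'];   % design matrix for those x values

YY = XX * theta;                 % fitted values

hold on

scatter(X, YY, 'ko');            % draw the fit as black circles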

end

1. size()

m=size(y,1) returns the number of rows of the column vector y.

2. theta=[0;0]

Creates a 2-by-1 matrix (a column vector of zeros).

>> theta=[0;0]

theta =

0

0
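The two notes above can be tried out directly. The snippet below is a minimal sketch with made-up values (the vector y and the variable names n, row, r and c are chosen only for this illustration); it shows how size() reports rows and columns and how a semicolon inside brackets starts a new row.

```matlab
y = [1; 2; 3];          % 3-by-1 column vector (illustrative values)
m = size(y, 1);         % number of rows: 3
n = size(y, 2);         % number of columns: 1

theta = [0; 0];         % semicolon separates rows: 2-by-1
row = [0, 0];           % comma separates columns: 1-by-2

X = [ones(m, 1), y];    % prepend a column of ones: 3-by-2
[r, c] = size(X);       % both dimensions at once
```

This is the same pattern used in myLinearRegression to build the design matrix from the data column.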

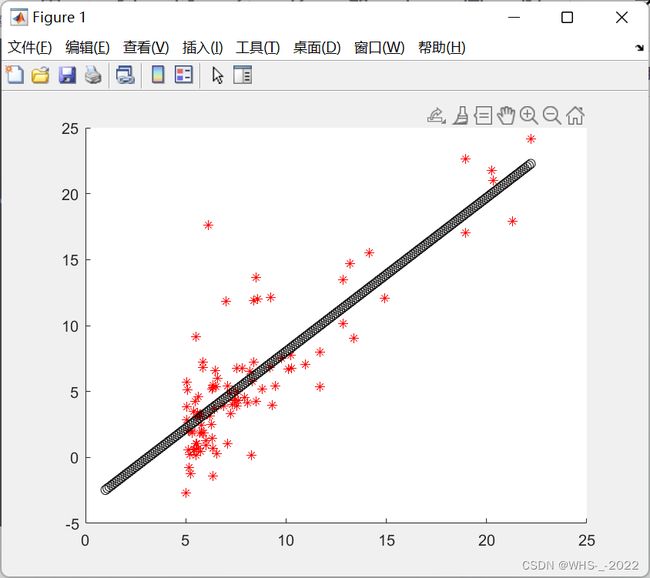

四、绘图效果

V. Regression with a quadratic curve
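The quadratic fit uses the same machinery as the straight-line fit, because the model is still linear in the parameters. A sketch of the model in standard notation (the symbols are assumptions introduced here, not the author's):

```latex
\[
\hat{y} = \theta_0 + \theta_1 x + \theta_2 x^{2}
        = \begin{bmatrix} 1 & x & x^{2} \end{bmatrix}
          \begin{bmatrix} \theta_0 \\ \theta_1 \\ \theta_2 \end{bmatrix}
\]
```

Each row of the design matrix therefore gains a third entry, the square of x, and theta becomes a 3-by-1 vector; the cost and the gradient keep the same form.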

%% Main function

function LinearTest()

% Load the data

data = load('ex1data1.txt');

% figure, scatter(data(:,1), data(:,2));

x = data(:,1);

y = data(:,2);

X = [ones(size(x,1), 1),x];

t = x.^2;   % squared feature column (element-wise), sized to match the data

X = [X, t];

theta = zeros(3,1);

% J = costF(X, y, theta);

iter = 1500;

alpha = 0.0001;

theta = gradientD(X, y, theta, alpha, iter);

pre = X*theta;

figure, scatter(data(:,1), data(:,2));

hold on

% scatter(x, pre,'go');

xval = max(x);

X = 1:0.1:xval;

XX = [ones(size(X,2), 1),X', X'.^2];

prenew = XX*theta;

plot(X,prenew);

% plot(x,pre, 'rx', 'MarkerSize', 10)

end

function J = costF(X, y, theta)

prediction = X*theta;

k1 = (prediction - y);

k2 = k1.^2;

csum = sum(k2);

J = csum/size(y,1)*0.5;

end

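function theta = gradientD(X, y, theta, alpha, iter)

m = size(y, 1);                 % number of training examples

for i = 1:iter

    pre = X * theta;            % predictions with the current theta

    err = (pre - y) / m;        % residuals, already scaled by 1/m

    pJ = 1/m * (X' * err);      % scaled by 1/m again, so this is the gradient divided by m

    % The effective step size is therefore alpha/m. Dropping the extra 1/m

    % gives the standard update, but alpha would then need to be retuned.

    theta = theta - alpha * pJ;

end

end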
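A quick way to sanity-check the standard update rule, theta = theta - alpha * (X' * (X*theta - y)) / m, is to run it on synthetic data where the answer is known. The sketch below is self-contained and does not use ex1data1.txt; the data, the step size and the iteration count are assumptions chosen only for this illustration.

```matlab
% Noise-free points on the line y = 2 + 3x, so the exact parameters are known.
x = (0:0.1:2)';
m = size(x, 1);
X = [ones(m, 1), x];            % design matrix with a leading column of ones
y = 2 + 3 * x;

theta = zeros(2, 1);            % start from zero, as in the article
alpha = 0.1;                    % assumed step size for this small example
iter = 5000;                    % assumed iteration count
J = zeros(iter, 1);             % cost recorded before each update

for k = 1:iter
    err = X * theta - y;                     % residuals
    J(k) = sum(err.^2) / (2 * m);            % squared-error cost
    theta = theta - alpha * (X' * err) / m;  % gradient step
end

disp(theta');                   % should be close to [2 3]
```

Plotting J against the iteration number is a simple way to see whether a given alpha is small enough: the curve should fall steadily rather than grow.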