Configuring YOLO v3 on the Jetson Nano (CUDA + cuDNN + OpenCV + TensorRT)

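- I. Running YOLO v3
  - 1. Introduction to YOLO
  - 2. Checking CUDA
  - 3. Checking OpenCV
  - 4. Checking cuDNN
  - 5. Installing YOLO v3
  - 6. Common YOLO commands
    - (1) Single-image test
    - (2) Multi-image test
    - (3) Changing the threshold
    - (4) Live camera
    - (5) Local video detection
    - (6) Continuing training from a pretrained model
- II. Running TensorRT
  - 1. Introduction to TensorRT
- III. Running TensorFlow
  - 1. Introduction to TensorFlow
  - 2. Installing TensorFlow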

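I. Running YOLO v3

1. Introduction to YOLO

Here is a reference for learning YOLO:

Series: YOLO object detector in PyTorch (a collection of 5 posts).

Before installing YOLO v3, check the system components that are already in place. The Jetson Nano OS image ships with JetPack, so CUDA, cuDNN, OpenCV, and the rest are preinstalled; the following sections verify each of them in turn.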

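2. Checking CUDA

CUDA is preinstalled on the Jetson Nano, but its path must first be added to the environment variables. The exports below assume version 10.0, although the nvcc output further down reports release 10.2, so adjust the directory name to the version actually installed.

vim ~/.bashrc

Append at the end: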

export CUDA_HOME=/usr/local/cuda-10.0

export LD_LIBRARY_PATH=/usr/local/cuda-10.0/lib64:$LD_LIBRARY_PATH

export PATH=/usr/local/cuda-10.0/bin:$PATH

Then reload the file:

source ~/.bashrc

Running the following command should now print the CUDA version:

nvidia@nvidia-desktop:~$ nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2019 NVIDIA Corporation

Built on Wed_Oct_23_21:14:42_PDT_2019

Cuda compilation tools, release 10.2, V10.2.89

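However, the first time I ran it I got:

bash: nvcc: command not found

A likely cause is that the versioned directory in the exports above does not match the installed release; the unversioned /usr/local/cuda path avoids this. First change to the home directory: cd ~

Then open the .bashrc file: vim .bashrc

Add the following lines at the end of the file: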

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

export CUDA_ROOT=/usr/local/cud

Press Esc, then type :wq! to write the file and quit (note that :q! would quit without saving). Then run:

source ~/.bashrc

Running nvcc -V again should now print the version.
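The check above can also be scripted. The following is a minimal sketch, not part of the original setup: the helper names `cuda_export_lines` and `find_nvcc` are hypothetical, and the two candidate directories are simply the ones mentioned in this section. It prints which CUDA directory exists and whether `nvcc` is reachable on the current PATH.

```python
import os
import shutil


def cuda_export_lines(cuda_home="/usr/local/cuda"):
    """Return the three export lines for ~/.bashrc for a given CUDA directory."""
    return [
        "export CUDA_HOME={}".format(cuda_home),
        "export LD_LIBRARY_PATH={}/lib64:$LD_LIBRARY_PATH".format(cuda_home),
        "export PATH={}/bin:$PATH".format(cuda_home),
    ]


def find_nvcc(search_path=None):
    """Return the full path of nvcc if it can be found, otherwise None."""
    return shutil.which("nvcc", path=search_path)


if __name__ == "__main__":
    # Directories taken from the text above; only one of them may exist.
    for candidate in ("/usr/local/cuda-10.0", "/usr/local/cuda"):
        state = "exists" if os.path.isdir(candidate) else "missing"
        print(candidate, state)
    print("nvcc:", find_nvcc() or "not found on PATH")
    print("\n".join(cuda_export_lines()))
```

If the versioned directory is reported as missing while /usr/local/cuda exists, the unversioned exports are the safer choice.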

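3. Checking OpenCV

The following command prints the installed OpenCV version:

pkg-config opencv --modversion

If OpenCV is set up correctly, the version number is shown. On my system it was not; pkg-config reported that OpenCV had to be added to the system environment. In that case, run:

pkg-config opencv4 --modversion

OpenCV 4 registers its pkg-config module under the name opencv4, so this time the version is shown:

nvidia@nvidia-desktop:~$ pkg-config opencv4 --modversion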

4.1.1
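The two attempts above (first `opencv`, then `opencv4`) can be folded into one lookup. This is a small sketch under the assumption that `pkg-config` is installed; the function name `opencv_version` and its `run` parameter (there only so the function can be exercised without a real pkg-config) are hypothetical.

```python
import subprocess


def opencv_version(run=subprocess.run, names=("opencv4", "opencv")):
    """Try each pkg-config module name in turn; return (name, version) or None."""
    for name in names:
        try:
            result = run(
                ["pkg-config", "--modversion", name],
                stdout=subprocess.PIPE,
                stderr=subprocess.PIPE,
                universal_newlines=True,
            )
        except FileNotFoundError:
            return None  # pkg-config itself is not installed
        if result.returncode == 0:
            return name, result.stdout.strip()
    return None


if __name__ == "__main__":
    print(opencv_version())
```

On the system described here, the expected result would be the `opencv4` module with version 4.1.1, matching the output above.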

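4. Checking cuDNN

cuDNN is preinstalled on the Jetson Nano. Build and run the bundled sample to confirm that it works:

cd /usr/src/cudnn_samples_v8/mnistCUDNN # enter the sample directory

sudo make # build the sample

sudo chmod a+x mnistCUDNN # make the binary executable

./mnistCUDNN # run it

Output like the following, ending in "Test passed!", indicates success: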

nvidia@nvidia-desktop:/usr/src/cudnn_samples_v8/mnistCUDNN$ ./mnistCUDNN

Executing: mnistCUDNN

cudnnGetVersion() : 8000 , CUDNN_VERSION from cudnn.h : 8000 (8.0.0)

Host compiler version : GCC 7.5.0

There are 1 CUDA capable devices on your machine :

device 0 : sms 1 Capabilities 5.3, SmClock 921.6 Mhz, MemSize (Mb) 3962, MemClock 12.8 Mhz, Ecc=0, boardGroupID=0

Using device 0

Testing single precision

Loading binary file data/conv1.bin

Loading binary file data/conv1.bias.bin

Loading binary file data/conv2.bin

Loading binary file data/conv2.bias.bin

Loading binary file data/ip1.bin

Loading binary file data/ip1.bias.bin

Loading binary file data/ip2.bin

Loading binary file data/ip2.bias.bin

Loading image data/one_28x28.pgm

Performing forward propagation ...

Testing cudnnGetConvolutionForwardAlgorithm_v7 ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: -1.000000 time requiring 2057744 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: -1.000000 time requiring 184784 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: -1.000000 time requiring 178432 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 6: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 3: -1.000000 time requiring 0 memory

Testing cudnnFindConvolutionForwardAlgorithm ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: 0.379532 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: 0.407083 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: 1.107760 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: 15.830000 time requiring 178432 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: 28.929480 time requiring 2057744 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: 160.134064 time requiring 184784 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 6: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 3: -1.000000 time requiring 0 memory

Testing cudnnGetConvolutionForwardAlgorithm_v7 ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: -1.000000 time requiring 1433120 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: -1.000000 time requiring 2450080 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: -1.000000 time requiring 4656640 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 6: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 3: -1.000000 time requiring 0 memory

Testing cudnnFindConvolutionForwardAlgorithm ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: 2.277968 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: 2.286458 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: 2.821927 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: 3.238802 time requiring 2450080 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: 8.895677 time requiring 1433120 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: 9.272969 time requiring 4656640 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 6: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 3: -1.000000 time requiring 0 memory

Resulting weights from Softmax:

0.0000000 0.9999399 0.0000000 0.0000000 0.0000561 0.0000000 0.0000012 0.0000017 0.0000010 0.0000000

Loading image data/three_28x28.pgm

Performing forward propagation ...

Testing cudnnGetConvolutionForwardAlgorithm_v7 ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: -1.000000 time requiring 2057744 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: -1.000000 time requiring 184784 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: -1.000000 time requiring 178432 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 6: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 3: -1.000000 time requiring 0 memory

Testing cudnnFindConvolutionForwardAlgorithm ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: 0.197396 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: 0.205937 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: 0.399844 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: 2.132292 time requiring 184784 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: 4.073125 time requiring 178432 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: 7.371354 time requiring 2057744 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 6: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 3: -1.000000 time requiring 0 memory

Testing cudnnGetConvolutionForwardAlgorithm_v7 ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: -1.000000 time requiring 1433120 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: -1.000000 time requiring 2450080 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: -1.000000 time requiring 4656640 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 6: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 3: -1.000000 time requiring 0 memory

Testing cudnnFindConvolutionForwardAlgorithm ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: 1.505469 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: 1.527083 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: 1.528802 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: 2.178020 time requiring 2450080 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 7: 6.266927 time requiring 1433120 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: 10.913073 time requiring 4656640 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 6: -1.000000 time requiring 0 memory

^^^^ CUDNN_STATUS_NOT_SUPPORTED for Algo 3: -1.000000 time requiring 0 memory

Resulting weights from Softmax:

0.0000000 0.0000000 0.0000000 0.9999288 0.0000000 0.0000711 0.0000000 0.0000000 0.0000000 0.0000000

Loading image data/five_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000008 0.0000000 0.0000002 0.0000000 0.9999820 0.0000154 0.0000000 0.0000012 0.0000006

Result of classification: 1 3 5

Test passed!

root@nvidia-desktop:/usr/src/cudnn_samples_v8/mnistCUDNN# cd

root@nvidia-desktop:~# cat /usr/include/cudnn_version.h | grep CUDNN_MAJOR -A 2

#define CUDNN_MAJOR 8

#define CUDNN_MINOR 0

#define CUDNN_PATCHLEVEL 0

--

#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)

#endif /* CUDNN_VERSION_H */
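The header shown above defines CUDNN_VERSION as CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL, which is where the value 8000 printed by mnistCUDNN comes from. A short sketch of that arithmetic follows; the function name `cudnn_version` is hypothetical, and the sample text is copied from the grep output above.

```python
import re


def cudnn_version(header_text):
    """Return ((major, minor, patch), combined) parsed from cudnn_version.h text."""
    parts = {}
    for key in ("MAJOR", "MINOR", "PATCHLEVEL"):
        match = re.search(r"#define\s+CUDNN_%s\s+(\d+)" % key, header_text)
        if match is None:
            raise ValueError("CUDNN_%s not found" % key)
        parts[key] = int(match.group(1))
    # Same formula as the CUDNN_VERSION macro in the header.
    combined = parts["MAJOR"] * 1000 + parts["MINOR"] * 100 + parts["PATCHLEVEL"]
    return (parts["MAJOR"], parts["MINOR"], parts["PATCHLEVEL"]), combined


sample = """#define CUDNN_MAJOR 8
#define CUDNN_MINOR 0
#define CUDNN_PATCHLEVEL 0
#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)
"""
print(cudnn_version(sample))  # ((8, 0, 0), 8000)
```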

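5. Installing YOLO v3

1. First clone the source from the official repository:

git clone https://github.com/pjreddie/darknet.git

2. Then configure it:

cd darknet

sudo vim Makefile # edit the Makefile

3. Change the first three lines of the Makefile: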

GPU=1

CUDNN=1

OPENCV=1

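4. Then compile:

make -j4

This is where things went wrong; the build kept failing with:

error: 'CUDNN_CONVOLUTION_FWD_SPECIFY_WORKSPACE_LIMIT' undeclared (first use in this function); did you mean 'CUDNN_CONVOLUTION_FWD_ALGO_DIRECT'?

This identifier belongs to the cuDNN 7 API and is no longer present in cuDNN 8, the version installed here. None of the fixes I found worked, so I switched to the AlexeyAB fork of darknet:

git clone https://github.com/AlexeyAB/darknet

5. Then configure it:

cd darknet/AlexeyAB

sudo vim Makefile # edit the Makefile

6. Edit the Makefile as follows: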

GPU=1

CUDNN=1

CUDNN_HALF=1

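# Set OPENCV=1 only if OpenCV is installed (comment kept on its own line: in make, a trailing comment leaves a space in the value)

OPENCV=1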

AVX=0

OPENMP=0

LIBSO=0

ZED_CAMERA=0
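Editing the flags by hand in vim works, but the same change can be applied programmatically. The sketch below is an illustration only: `set_make_flags` is a hypothetical helper, and it assumes each flag appears in the Makefile as a `NAME=value` line starting in the first column, as in the listing above.

```python
import re


def set_make_flags(makefile_text, flags):
    """Rewrite the first `NAME=value` line for every requested flag."""
    for name, value in flags.items():
        # Anchor at line start so CUDNN does not also match CUDNN_HALF.
        pattern = re.compile(r"^%s\s*=.*$" % re.escape(name), flags=re.MULTILINE)
        makefile_text, count = pattern.subn(
            "%s=%s" % (name, value), makefile_text, count=1
        )
        if count == 0:
            raise KeyError("flag %s not found in Makefile" % name)
    return makefile_text


original = "GPU=0\nCUDNN=0\nCUDNN_HALF=0\nOPENCV=0\nAVX=0\n"
wanted = {"GPU": 1, "CUDNN": 1, "CUDNN_HALF": 1, "OPENCV": 1}
print(set_make_flags(original, wanted))
```

To apply it to a real file, read the Makefile into a string, pass it through the function, and write the result back.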

7. Then compile:

make install

Download the weight files.

YOLOv3-tiny:

wget https://pjreddie.com/media/files/yolov3-tiny.weights

YOLOv3:

wget https://pjreddie.com/media/files/yolov3.weights

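The two differ as follows:

YOLOv3-tiny is a simplified version of YOLOv3. Its backbone is a 7-layer conv + max-pool network for feature extraction (similar to darknet19), and its detection head predicts at only two resolutions, 13×13 and 26×26, so its accuracy is lower. An important reason for the lower accuracy is that the tiny backbone is shallow (7 layers) and cannot extract higher-level semantic features.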
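The 13×13 and 26×26 grids follow directly from the 416×416 network input divided by the downsampling stride. The sketch below works through that arithmetic; the strides (32, 16, 8), the 3 anchors per scale, and the 80 COCO classes are assumptions taken from the standard YOLOv3 configuration files rather than from this text, and the function names are hypothetical.

```python
def grid_size(input_size, stride):
    """Side length of the detection grid for a given total stride."""
    return input_size // stride


def yolo_channels(num_classes, anchors_per_scale=3):
    """Channels of the conv layer feeding a yolo layer."""
    # Per anchor: 4 box coordinates + 1 objectness score + one score per class.
    return anchors_per_scale * (4 + 1 + num_classes)


print([grid_size(416, s) for s in (32, 16)])     # [13, 26]
print([grid_size(416, s) for s in (32, 16, 8)])  # [13, 26, 52]
print(yolo_channels(80))                         # 255
```

These numbers agree with the layer tables printed by darknet in this section: the tiny model has yolo layers at 13×13 and 26×26, the full model adds 52×52, and the convolution before each yolo layer has 255 filters.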

8. Check that the installation works:

./darknet detector test data/detect.data data/yolov3.cfg data/yolov3.weight

9. Run YOLO v3-tiny:

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detect cfg/yolov3-tiny.cfg yolov3-tiny.weights data/dog.jpg

CUDA-version: 10020 (10020), cuDNN: 8.0.0, CUDNN_HALF=1, GPU count: 1

CUDNN_HALF=1

OpenCV version: 4.1.1

0 : compute_capability = 530, cudnn_half = 0, GPU: NVIDIA Tegra X1

net.optimized_memory = 0

mini_batch = 1, batch = 1, time_steps = 1, train = 0

layer filters size/strd(dil) input output

0 conv 16 3 x 3/ 1 416 x 416 x 3 -> 416 x 416 x 16 0.150 BF

1 max 2x 2/ 2 416 x 416 x 16 -> 208 x 208 x 16 0.003 BF

2 conv 32 3 x 3/ 1 208 x 208 x 16 -> 208 x 208 x 32 0.399 BF

3 max 2x 2/ 2 208 x 208 x 32 -> 104 x 104 x 32 0.001 BF

4 conv 64 3 x 3/ 1 104 x 104 x 32 -> 104 x 104 x 64 0.399 BF

5 max 2x 2/ 2 104 x 104 x 64 -> 52 x 52 x 64 0.001 BF

6 conv 128 3 x 3/ 1 52 x 52 x 64 -> 52 x 52 x 128 0.399 BF

7 max 2x 2/ 2 52 x 52 x 128 -> 26 x 26 x 128 0.000 BF

8 conv 256 3 x 3/ 1 26 x 26 x 128 -> 26 x 26 x 256 0.399 BF

9 max 2x 2/ 2 26 x 26 x 256 -> 13 x 13 x 256 0.000 BF

10 conv 512 3 x 3/ 1 13 x 13 x 256 -> 13 x 13 x 512 0.399 BF

11 max 2x 2/ 1 13 x 13 x 512 -> 13 x 13 x 512 0.000 BF

12 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

13 conv 256 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 256 0.089 BF

14 conv 512 3 x 3/ 1 13 x 13 x 256 -> 13 x 13 x 512 0.399 BF

15 conv 255 1 x 1/ 1 13 x 13 x 512 -> 13 x 13 x 255 0.044 BF

16 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, cls_norm: 1.00, scale_x_y: 1.00

17 route 13 -> 13 x 13 x 256

18 conv 128 1 x 1/ 1 13 x 13 x 256 -> 13 x 13 x 128 0.011 BF

19 upsample 2x 13 x 13 x 128 -> 26 x 26 x 128

20 route 19 8 -> 26 x 26 x 384

21 conv 256 3 x 3/ 1 26 x 26 x 384 -> 26 x 26 x 256 1.196 BF

22 conv 255 1 x 1/ 1 26 x 26 x 256 -> 26 x 26 x 255 0.088 BF

23 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, cls_norm: 1.00, scale_x_y: 1.00

Total BFLOPS 5.571

avg_outputs = 341534

Allocate additional workspace_size = 0.00 MB

Loading weights from yolov3-tiny.weights...

seen 64, trained: 32013 K-images (500 Kilo-batches_64)

Done! Loaded 24 layers from weights-file

Detection layer: 16 - type = 27

Detection layer: 23 - type = 27

data/dog.jpg: Predicted in 2663.842000 milli-seconds.

dog: 81%

bicycle: 38%

car: 71%

truck: 41%

truck: 62%

car: 39%

Run YOLO v3:

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg

CUDA-version: 10020 (10020), cuDNN: 8.0.0, CUDNN_HALF=1, GPU count: 1

CUDNN_HALF=1

OpenCV version: 4.1.1

0 : compute_capability = 530, cudnn_half = 0, GPU: NVIDIA Tegra X1

net.optimized_memory = 0

mini_batch = 1, batch = 1, time_steps = 1, train = 0

layer filters size/strd(dil) input output

0 conv 32 3 x 3/ 1 416 x 416 x 3 -> 416 x 416 x 32 0.299 BF

1 conv 64 3 x 3/ 2 416 x 416 x 32 -> 208 x 208 x 64 1.595 BF

2 conv 32 1 x 1/ 1 208 x 208 x 64 -> 208 x 208 x 32 0.177 BF

3 conv 64 3 x 3/ 1 208 x 208 x 32 -> 208 x 208 x 64 1.595 BF

4 Shortcut Layer: 1, wt = 0, wn = 0, outputs: 208 x 208 x 64 0.003 BF

5 conv 128 3 x 3/ 2 208 x 208 x 64 -> 104 x 104 x 128 1.595 BF

6 conv 64 1 x 1/ 1 104 x 104 x 128 -> 104 x 104 x 64 0.177 BF

7 conv 128 3 x 3/ 1 104 x 104 x 64 -> 104 x 104 x 128 1.595 BF

8 Shortcut Layer: 5, wt = 0, wn = 0, outputs: 104 x 104 x 128 0.001 BF

9 conv 64 1 x 1/ 1 104 x 104 x 128 -> 104 x 104 x 64 0.177 BF

10 conv 128 3 x 3/ 1 104 x 104 x 64 -> 104 x 104 x 128 1.595 BF

11 Shortcut Layer: 8, wt = 0, wn = 0, outputs: 104 x 104 x 128 0.001 BF

12 conv 256 3 x 3/ 2 104 x 104 x 128 -> 52 x 52 x 256 1.595 BF

13 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

14 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

15 Shortcut Layer: 12, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

16 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

17 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

18 Shortcut Layer: 15, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

19 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

20 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

21 Shortcut Layer: 18, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

22 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

23 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

24 Shortcut Layer: 21, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

25 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

26 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

27 Shortcut Layer: 24, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

28 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

29 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

30 Shortcut Layer: 27, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

31 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

32 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

33 Shortcut Layer: 30, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

34 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

35 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

36 Shortcut Layer: 33, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

37 conv 512 3 x 3/ 2 52 x 52 x 256 -> 26 x 26 x 512 1.595 BF

38 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

39 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

40 Shortcut Layer: 37, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

41 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

42 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

43 Shortcut Layer: 40, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

44 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

45 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

46 Shortcut Layer: 43, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

47 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

48 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

49 Shortcut Layer: 46, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

50 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

51 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

52 Shortcut Layer: 49, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

53 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

54 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

55 Shortcut Layer: 52, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

56 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

57 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

58 Shortcut Layer: 55, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

59 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

60 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

61 Shortcut Layer: 58, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

62 conv 1024 3 x 3/ 2 26 x 26 x 512 -> 13 x 13 x1024 1.595 BF

63 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

64 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

65 Shortcut Layer: 62, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

66 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

67 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

68 Shortcut Layer: 65, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

69 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

70 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

71 Shortcut Layer: 68, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

72 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

73 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

74 Shortcut Layer: 71, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

75 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

76 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

77 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

78 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

79 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

80 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

81 conv 255 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 255 0.088 BF

82 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, cls_norm: 1.00, scale_x_y: 1.00

83 route 79 -> 13 x 13 x 512

84 conv 256 1 x 1/ 1 13 x 13 x 512 -> 13 x 13 x 256 0.044 BF

85 upsample 2x 13 x 13 x 256 -> 26 x 26 x 256

86 route 85 61 -> 26 x 26 x 768

87 conv 256 1 x 1/ 1 26 x 26 x 768 -> 26 x 26 x 256 0.266 BF

88 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

89 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

90 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

91 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

92 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

93 conv 255 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 255 0.177 BF

94 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, cls_norm: 1.00, scale_x_y: 1.00

95 route 91 -> 26 x 26 x 256

96 conv 128 1 x 1/ 1 26 x 26 x 256 -> 26 x 26 x 128 0.044 BF

97 upsample 2x 26 x 26 x 128 -> 52 x 52 x 128

98 route 97 36 -> 52 x 52 x 384

99 conv 128 1 x 1/ 1 52 x 52 x 384 -> 52 x 52 x 128 0.266 BF

100 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

101 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

102 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

103 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

104 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

105 conv 255 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 255 0.353 BF

106 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, cls_norm: 1.00, scale_x_y: 1.00

Total BFLOPS 65.879

avg_outputs = 532444

Allocate additional workspace_size = 0.00 MB

Loading weights from yolov3.weights...

seen 64, trained: 32013 K-images (500 Kilo-batches_64)

Done! Loaded 107 layers from weights-file

Detection layer: 82 - type = 27

Detection layer: 94 - type = 27

Detection layer: 106 - type = 27

data/dog.jpg: Predicted in 7466.562000 milli-seconds.

bicycle: 99%

dog: 100%

truck: 94%

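YOLO v3-tiny took about 2.66 s while YOLO v3 took about 7.47 s. In terms of accuracy, however, YOLO v3 is far ahead: it reports bicycle 99%, dog 100%, and truck 94%, whereas YOLO v3-tiny reaches only 81% for the dog and 38% for the bicycle.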
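The timing comparison can be read straight out of the darknet logs. A minimal sketch follows, assuming the "Predicted in ... milli-seconds" line format shown in the two runs above; `predicted_ms` is a hypothetical helper name.

```python
import re


def predicted_ms(log_text):
    """Return the inference time in milliseconds from a darknet log, or None."""
    match = re.search(r"Predicted in ([\d.]+) milli-seconds", log_text)
    return float(match.group(1)) if match else None


tiny = predicted_ms("data/dog.jpg: Predicted in 2663.842000 milli-seconds.")
full = predicted_ms("data/dog.jpg: Predicted in 7466.562000 milli-seconds.")
print(round(full / tiny, 2))  # 2.8
```

On this single image the full model is therefore roughly 2.8 times slower than the tiny one.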

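TIP: if memory runs short, add swap space.

1. Disable the existing swap file first

sudo swapoff /swapfile

2. Resize the swap file to 4 GB

sudo dd if=/dev/zero of=/swapfile bs=1M count=4096

3. Format the file as swap

sudo mkswap /swapfile

4. Enable the swap file

sudo swapon /swapfile

Output of df -hl afterwards (df lists mounted filesystems only; free -h or swapon --show reports the swap size):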

nvidia@nvidia-desktop:~$ df -hl

Filesystem Size Used Avail Use% Mounted on

/dev/mmcblk0p1 30G 22G 6.0G 79% /

none 1.8G 0 1.8G 0% /dev

tmpfs 2.0G 628M 1.4G 32% /dev/shm

tmpfs 2.0G 47M 1.9G 3% /run

tmpfs 5.0M 4.0K 5.0M 1% /run/lock

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

tmpfs 397M 12K 397M 1% /run/user/120

tmpfs 397M 108K 397M 1% /run/user/1000
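Because df does not list swap, one way to confirm that the new swap file is active is to read the SwapTotal entry from /proc/meminfo. The following is a sketch under that assumption; `swap_total_mib` is a hypothetical helper, and the script simply prints nothing useful on systems without /proc/meminfo.

```python
import os


def swap_total_mib(meminfo_text):
    """Return SwapTotal in MiB from /proc/meminfo-style text, or None."""
    for line in meminfo_text.splitlines():
        if line.startswith("SwapTotal:"):
            # The value is reported in kB, e.g. "SwapTotal:  4194304 kB".
            return int(line.split()[1]) // 1024
    return None


if __name__ == "__main__":
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as handle:
            print("SwapTotal (MiB):", swap_total_mib(handle.read()))
    else:
        print("/proc/meminfo not available on this system")
```

After the four steps above, the reported value should be close to 4096 MiB.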

6. Common YOLO commands

The Darknet commands below are run directly in a terminal, using the stock /home/nvidia/darknet/cfg/coco.data file unmodified:

(1) Single-image test

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg

(2) Multi-image test

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detect cfg/yolov3.cfg yolov3.weights

Enter Image Path: data/dog1.jpg

Enter Image Path: data/dog2.jpg

(3) Changing the threshold

YOLO's default detection threshold is 0.25. It can be set to any value in [0, 1]:

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg -thresh 0
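To illustrate what the threshold does, the sketch below filters the six detections that YOLO v3-tiny reported for data/dog.jpg earlier in this section. It is a simplified model of the idea (keep a box only if its confidence exceeds the threshold), not darknet's actual implementation, and `filter_by_threshold` is a hypothetical name.

```python
# Confidences copied from the YOLO v3-tiny output for data/dog.jpg.
detections = [
    ("dog", 0.81), ("bicycle", 0.38), ("car", 0.71),
    ("truck", 0.41), ("truck", 0.62), ("car", 0.39),
]


def filter_by_threshold(items, thresh=0.25):
    """Keep only detections whose confidence is above the threshold."""
    return [(label, score) for label, score in items if score > thresh]


print(len(filter_by_threshold(detections)))         # 6
print(filter_by_threshold(detections, thresh=0.5))
# [('dog', 0.81), ('car', 0.71), ('truck', 0.62)]
```

Lowering the threshold toward 0, as in the command above, lets through progressively weaker boxes; raising it keeps only the most confident ones.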

(4) Live camera

Real-time video detection requires Darknet built with CUDA and OpenCV. The camera is selected with -c <index>; OpenCV uses camera 0 by default:

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detector demo cfg/coco.data cfg/yolov3.cfg yolov3.weights

(5) Local video detection

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detector demo cfg/coco.data cfg/yolov3.cfg yolov3.weights <video file>

With Darknet:

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detector demo cfg/coco.data cfg/yolov3.cfg yolov3.weights data/xxx.mp4

With OpenCV:

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ python3 object_detection_yolo.py --video=xxx.mp4

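(6) Continuing training from a pretrained model

Training without the -gpus flag (this runs on the CPU when Darknet is built with GPU=0):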

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg darknet53.conv.74

Training on multiple GPUs (for multi-GPU machines; the Nano itself reports GPU count: 1):

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detector train cfg/coco.data cfg/yolov3.cfg darknet53.conv.74 -gpus 0,1,2,3

Resuming training from a checkpoint:

nvidia@nvidia-desktop:~/darknet/AlexeyAB/darknet$ ./darknet detector train cfg/coco.data cfg/yolov3.cfg backup/yolov3.backup -gpus 0,1,2,3

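II. Running TensorRT

1. Introduction to TensorRT

Some reference material:

- TensorRT (1): Introduction.

1. First download the latest TensorRT 7 package from the official site: NVIDIA TensorRT 7.x Download

2. Extract it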

tar xzvf TensorRT-7.0.0.11.Ubuntu-18.04.x86_64-gnu.cuda-10.2.cudnn7.6.tar.gz

3. Add the library path

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/nvidia/TensorRT-7.0.0.11/lib

4. Install TensorRT

cd TensorRT-7.0.0.11/python

pip3 install tensorrt-7.0.0.11-cp36-none-linux_x86_64.whl

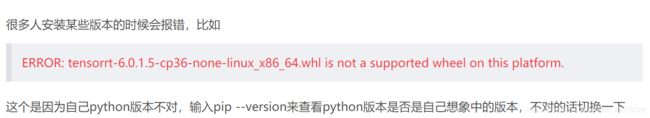

However, the installation failed with the following error:

nvidia@nvidia-desktop:~/TensorRT-7.0.0.11/python$ pip install tensorrt-7.0.0.11-cp36-none-linux_x86_64.whl

Defaulting to user installation because normal site-packages is not writeable

ERROR: tensorrt-7.0.0.11-cp36-none-linux_x86_64.whl is not a supported wheel on this platform.

nvidia@nvidia-desktop:~/TensorRT-7.0.0.11/python$ pip3 --version

pip 20.2.2 from /home/nvidia/.local/lib/python3.6/site-packages/pip (python 3.6)

Two versions of Python are installed and switching between them every time is tedious, so the system default python can be set as follows:

sudo update-alternatives --install /usr/bin/python python /usr/bin/python2 100

sudo update-alternatives --install /usr/bin/python python /usr/bin/python3 150

The default python is now 3.6. To switch back, just run:

sudo update-alternatives --config python

Running the install again still produced the same error, so I tried TensorRT 6, but that installation failed in the same way:

nvidia@nvidia-desktop:~$ pip3 install TensorRT-6.0.1.8/python/tensorrt-6.0.1.8-cp36-none-linux_x86_64.whl

Defaulting to user installation because normal site-packages is not writeable

ERROR: tensorrt-6.0.1.8-cp36-none-linux_x86_64.whl is not a supported wheel on this platform.
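A note on the "is not a supported wheel on this platform" message: a wheel's filename ends in three tags (Python, ABI, platform), and pip refuses wheels whose platform tag does not match the host. Both TensorRT wheels above are tagged linux_x86_64, while the TensorFlow wheel installed later in this document is tagged linux_aarch64, which suggests the architecture mismatch is what pip is rejecting here. The sketch below only parses filenames; `wheel_tags` and `built_for_arch` are hypothetical helpers, and the suffix comparison is a deliberate simplification of pip's real compatibility check.

```python
import platform


def wheel_tags(filename):
    """Split a wheel filename into its (python, abi, platform) tags."""
    stem = filename[: -len(".whl")]
    python_tag, abi_tag, platform_tag = stem.split("-")[-3:]
    return python_tag, abi_tag, platform_tag


def built_for_arch(filename, machine):
    """True if the wheel's platform tag ends with the given machine name."""
    return wheel_tags(filename)[2].endswith(machine)


trt = "tensorrt-7.0.0.11-cp36-none-linux_x86_64.whl"
print(wheel_tags(trt))                 # ('cp36', 'none', 'linux_x86_64')
print(built_for_arch(trt, "aarch64"))  # False
print(platform.machine())              # machine name of the current host
```

On the Jetson Nano, `platform.machine()` would be expected to report aarch64, so an x86_64 wheel cannot be installed regardless of which Python is the default.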

After going through the steps above, importing the module failed as follows:

nvidia@nvidia-desktop:~$ python

Python 3.6.9 (default, Jul 17 2020, 12:50:27)

[GCC 8.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorrt

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/nvidia/.local/lib/python3.6/site-packages/tensorrt/__init__.py", line 1, in <module>

from .tensorrt import *

ImportError: /home/nvidia/.local/lib/python3.6/site-packages/tensorrt/tensorrt.so: cannot open shared object file: No such file or directory

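After a long search I concluded that this was because TensorFlow was not set up correctly, so I turned to configuring TensorFlow. But importing TensorFlow produced yet another error: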

>>> import tensorflow

Traceback (most recent call last):

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/pywrap_tensorflow.py", line 58, in <module>

from tensorflow.python.pywrap_tensorflow_internal import *

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 28, in <module>

_pywrap_tensorflow_internal = swig_import_helper()

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 24, in swig_import_helper

_mod = imp.load_module('_pywrap_tensorflow_internal', fp, pathname, description)

File "/usr/lib/python3.6/imp.py", line 243, in load_module

return load_dynamic(name, filename, file)

File "/usr/lib/python3.6/imp.py", line 343, in load_dynamic

return _load(spec)

ImportError: libcublas.so.10.0: cannot open shared object file: No such file or directory

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/local/lib/python3.6/dist-packages/tensorflow/__init__.py", line 24, in <module>

from tensorflow.python import pywrap_tensorflow # pylint: disable=unused-import

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/__init__.py", line 49, in <module>

from tensorflow.python import pywrap_tensorflow

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/pywrap_tensorflow.py", line 74, in <module>

raise ImportError(msg)

ImportError: Traceback (most recent call last):

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/pywrap_tensorflow.py", line 58, in <module>

from tensorflow.python.pywrap_tensorflow_internal import *

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 28, in <module>

_pywrap_tensorflow_internal = swig_import_helper()

File "/usr/local/lib/python3.6/dist-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 24, in swig_import_helper

_mod = imp.load_module('_pywrap_tensorflow_internal', fp, pathname, description)

File "/usr/lib/python3.6/imp.py", line 243, in load_module

return load_dynamic(name, filename, file)

File "/usr/lib/python3.6/imp.py", line 343, in load_dynamic

return _load(spec)

ImportError: libcublas.so.10.0: cannot open shared object file: No such file or directory

Failed to load the native TensorFlow runtime.

See https://www.tensorflow.org/install/errors

for some common reasons and solutions. Include the entire stack trace

above this error message when asking for help.
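The key line in the traceback is the one naming the shared object that could not be opened. The version suffix in libcublas.so.10.0 suggests this TensorFlow build expects CUDA 10.0 libraries, whereas nvcc reported release 10.2 earlier; that reading is an inference from the logs, not something the traceback states. The sketch below extracts the library name from such a message; `missing_library` is a hypothetical helper, and `ctypes.util.find_library` is used only to show what the loader can see on the current host.

```python
import ctypes.util
import re


def missing_library(error_message):
    """Return the shared object named in a 'cannot open shared object file' error."""
    match = re.search(
        r"(\S+\.so[\w.]*): cannot open shared object file", error_message
    )
    return match.group(1) if match else None


msg = ("ImportError: libcublas.so.10.0: cannot open shared object file: "
       "No such file or directory")
print(missing_library(msg))  # libcublas.so.10.0

if __name__ == "__main__":
    # Shows which cuBLAS library, if any, the dynamic loader can locate here.
    print(ctypes.util.find_library("cublas"))
```

Comparing the extracted name with the libraries actually present under the CUDA lib64 directory shows quickly whether the wheel and the installed CUDA release match.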

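III. Running TensorFlow

1. Introduction to TensorFlow

TensorFlow is an open-source software library for numerical computation using data-flow graphs. It is used for research in machine learning and deep neural networks, and the generality of the system makes it widely applicable in other fields as well. TensorFlow lets people get started with neural networks quickly and greatly lowers the cost and difficulty of deep-learning development; its features include flexibility, portability, multi-language support, and performance optimization.

2. Installing TensorFlow

Search for TensorFlow on the official website, download the tf_gpu-2.2.0+nv20.6-py3 build, and install it. Note that the log below comes from installing a different wheel, tensorflow-1.15.3+nv20.7.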

nvidia@nvidia-desktop:~$ sudo -H pip3 install ~/下载/tensorflow-1.15.3+nv20.7-cp36-cp36m-linux_aarch64.whl

Processing ./下载/tensorflow-1.15.3+nv20.7-cp36-cp36m-linux_aarch64.whl

Requirement already satisfied: protobuf>=3.6.1 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: keras-applications>=1.0.8 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: wheel>=0.26; python_version >= "3" in /usr/lib/python3/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: astor>=0.6.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Collecting tensorflow-estimator==1.15.1 (from tensorflow==1.15.3+nv20.7)

Using cached https://files.pythonhosted.org/packages/de/62/2ee9cd74c9fa2fa450877847ba560b260f5d0fb70ee0595203082dafcc9d/tensorflow_estimator-1.15.1-py2.py3-none-any.whl

Requirement already satisfied: six>=1.10.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: absl-py>=0.7.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: gast==0.2.2 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: termcolor>=1.1.0 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: google-pasta>=0.1.6 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: wrapt>=1.11.1 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: grpcio>=1.8.6 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Collecting opt-einsum>=2.3.2 (from tensorflow==1.15.3+nv20.7)

Using cached https://files.pythonhosted.org/packages/bc/19/404708a7e54ad2798907210462fd950c3442ea51acc8790f3da48d2bee8b/opt_einsum-3.3.0-py3-none-any.whl

Collecting tensorboard<1.16.0,>=1.15.0 (from tensorflow==1.15.3+nv20.7)

Using cached https://files.pythonhosted.org/packages/1e/e9/d3d747a97f7188f48aa5eda486907f3b345cd409f0a0850468ba867db246/tensorboard-1.15.0-py3-none-any.whl

Collecting numpy<2.0,>=1.16.0 (from tensorflow==1.15.3+nv20.7)

Using cached https://files.pythonhosted.org/packages/2c/2f/7b4d0b639a42636362827e611cfeba67975ec875ae036dd846d459d52652/numpy-1.19.1.zip

Requirement already satisfied: keras-preprocessing>=1.0.5 in /usr/local/lib/python3.6/dist-packages (from tensorflow==1.15.3+nv20.7)

Requirement already satisfied: setuptools in /usr/local/lib/python3.6/dist-packages (from protobuf>=3.6.1->tensorflow==1.15.3+nv20.7)

Requirement already satisfied: h5py in /usr/local/lib/python3.6/dist-packages (from keras-applications>=1.0.8->tensorflow==1.15.3+nv20.7)

Requirement already satisfied: markdown>=2.6.8 in /usr/local/lib/python3.6/dist-packages (from tensorboard<1.16.0,>=1.15.0->tensorflow==1.15.3+nv20.7)

Requirement already satisfied: werkzeug>=0.11.15 in /usr/local/lib/python3.6/dist-packages (from tensorboard<1.16.0,>=1.15.0->tensorflow==1.15.3+nv20.7)

Requirement already satisfied: importlib-metadata; python_version < "3.8" in /usr/local/lib/python3.6/dist-packages (from markdown>=2.6.8->tensorboard<1.16.0,>=1.15.0->tensorflow==1.15.3+nv20.7)

Requirement already satisfied: zipp>=0.5 in /usr/local/lib/python3.6/dist-packages (from importlib-metadata; python_version < "3.8"->markdown>=2.6.8->tensorboard<1.16.0,>=1.15.0->tensorflow==1.15.3+nv20.7)

Building wheels for collected packages: numpy

Running setup.py bdist_wheel for numpy ... error

Complete output from command /usr/bin/python3 -u -c "import setuptools, tokenize;__file__='/tmp/pip-build-1g5p2fm1/numpy/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" bdist_wheel -d /tmp/tmpca5hou2rpip-wheel- --python-tag cp36:

Running from numpy source directory.

Cythonizing sources

Processing numpy/random/_bounded_integers.pxd.in

Processing numpy/random/_philox.pyx

Traceback (most recent call last):

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 59, in process_pyx

from Cython.Compiler.Version import version as cython_version

ModuleNotFoundError: No module named 'Cython'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 235, in <module>

main()

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 231, in main

find_process_files(root_dir)

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 222, in find_process_files

process(root_dir, fromfile, tofile, function, hash_db)

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 188, in process

processor_function(fromfile, tofile)

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 64, in process_pyx

raise OSError('Cython needs to be installed in Python as a module')

OSError: Cython needs to be installed in Python as a module

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/tmp/pip-build-1g5p2fm1/numpy/setup.py", line 499, in <module>

setup_package()

File "/tmp/pip-build-1g5p2fm1/numpy/setup.py", line 479, in setup_package

generate_cython()

File "/tmp/pip-build-1g5p2fm1/numpy/setup.py", line 274, in generate_cython

raise RuntimeError("Running cythonize failed!")

RuntimeError: Running cythonize failed!

----------------------------------------

Failed building wheel for numpy

Running setup.py clean for numpy

Complete output from command /usr/bin/python3 -u -c "import setuptools, tokenize;__file__='/tmp/pip-build-1g5p2fm1/numpy/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" clean --all:

Running from numpy source directory.

`setup.py clean` is not supported, use one of the following instead:

- `git clean -xdf` (cleans all files)

- `git clean -Xdf` (cleans all versioned files, doesn't touch

files that aren't checked into the git repo)

Add `--force` to your command to use it anyway if you must (unsupported).

----------------------------------------

Failed cleaning build dir for numpy

Failed to build numpy

Installing collected packages: tensorflow-estimator, numpy, opt-einsum, tensorboard, tensorflow

Found existing installation: tensorflow-estimator 1.13.0

Uninstalling tensorflow-estimator-1.13.0:

Successfully uninstalled tensorflow-estimator-1.13.0

Found existing installation: numpy 1.13.3

Not uninstalling numpy at /usr/lib/python3/dist-packages, outside environment /usr

Running setup.py install for numpy ... error

Complete output from command /usr/bin/python3 -u -c "import setuptools, tokenize;__file__='/tmp/pip-build-1g5p2fm1/numpy/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" install --record /tmp/pip-d81_pz_r-record/install-record.txt --single-version-externally-managed --compile:

Running from numpy source directory.

Note: if you need reliable uninstall behavior, then install

with pip instead of using `setup.py install`:

- `pip install .` (from a git repo or downloaded source

release)

- `pip install numpy` (last NumPy release on PyPi)

Cythonizing sources

numpy/random/_bounded_integers.pxd.in has not changed

Processing numpy/random/_philox.pyx

Traceback (most recent call last):

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 59, in process_pyx

from Cython.Compiler.Version import version as cython_version

ModuleNotFoundError: No module named 'Cython'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 235, in <module>

main()

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 231, in main

find_process_files(root_dir)

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 222, in find_process_files

process(root_dir, fromfile, tofile, function, hash_db)

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 188, in process

processor_function(fromfile, tofile)

File "/tmp/pip-build-1g5p2fm1/numpy/tools/cythonize.py", line 64, in process_pyx

raise OSError('Cython needs to be installed in Python as a module')

OSError: Cython needs to be installed in Python as a module

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/tmp/pip-build-1g5p2fm1/numpy/setup.py", line 499, in <module>

setup_package()

File "/tmp/pip-build-1g5p2fm1/numpy/setup.py", line 479, in setup_package

generate_cython()

File "/tmp/pip-build-1g5p2fm1/numpy/setup.py", line 274, in generate_cython

raise RuntimeError("Running cythonize failed!")

RuntimeError: Running cythonize failed!

----------------------------------------

Can't rollback numpy, nothing uninstalled.

Command "/usr/bin/python3 -u -c "import setuptools, tokenize;__file__='/tmp/pip-build-1g5p2fm1/numpy/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" install --record /tmp/pip-d81_pz_r-record/install-record.txt --single-version-externally-managed --compile" failed with error code 1 in /tmp/pip-build-1g5p2fm1/numpy/

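Troubleshooting:

1. RuntimeError: Running cythonize failed! pip tried to build numpy from source, and that build needs Cython, which is not installed for Python 3 (the log shows `ModuleNotFoundError: No module named 'Cython'`). Install Cython, then rerun the TensorFlow install: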

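sudo apt install cython3 # Cython for Python 3; if the packaged version is too old for the numpy build, try: sudo pip3 install Cython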
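
Before rerunning the long pip install, it can save time to confirm that the interpreter pip uses can actually see Cython. The following is a minimal sketch, not part of the original steps; the helper name `missing_build_modules` is made up for illustration, and it should be run with the same `python3` that appears in the log.

```python
import importlib.util


def missing_build_modules(modules=("Cython",)):
    """Return the top-level module names that this interpreter cannot import."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_build_modules()
    if missing:
        print("Missing build dependencies:", ", ".join(missing))
    else:
        print("Cython is importable; the numpy build should get past cythonize.")
```

If Cython is reported as missing even after installing it, it was probably installed for a different Python than `/usr/bin/python3`.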

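2. ImportError: libcublas.so.10.0: cannot open shared object file: No such file or directory. The "Build from source" page on the TensorFlow website lists the CUDA and cuDNN versions that each TensorFlow release was tested with. Checking against it, the cause is a version mismatch: this TensorFlow build looks for the CUDA 10.0 libraries, while `nvcc -V` earlier reported CUDA 10.2.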
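
The mismatch can be read directly from the two messages already shown in this post. The sketch below is only an illustration with hypothetical helper names: it pulls the version out of the missing library name in the ImportError and compares it with the `release` field of the `nvcc -V` output.

```python
import re


def required_cuda_version(import_error_message):
    """Extract the version from a missing library name, e.g. libcublas.so.10.0."""
    match = re.search(r"lib\w+\.so\.(\d+\.\d+)", import_error_message)
    return match.group(1) if match else None


def installed_cuda_version(nvcc_output):
    """Extract the toolkit version from the 'release X.Y' field of nvcc -V output."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


# Both strings are copied from the output shown in this post.
error = "ImportError: libcublas.so.10.0: cannot open shared object file: No such file or directory"
nvcc = "Cuda compilation tools, release 10.2, V10.2.89"

wanted = required_cuda_version(error)
found = installed_cuda_version(nvcc)
print(f"TensorFlow build expects CUDA {wanted}, nvcc reports CUDA {found}")
if wanted != found:
    print("Version mismatch: install a TensorFlow wheel built for the installed CUDA version.")
```

This only compares version strings; it does not check which libraries are actually present on disk.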

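I suspect the JetPack version I downloaded is the problem: its CUDA and cuDNN versions do not match the ones that TensorRT and TensorFlow support. I plan to reflash the board and start over.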
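
Before and after reflashing, it helps to record which L4T release the board is running, since each JetPack version is built on a specific L4T release. The sketch below assumes the image provides `/etc/nv_tegra_release` and that its first line has the form `# R32 (release), REVISION: 4.3, ...`; both the path and the line format are assumptions to verify on your own board, and `parse_l4t_release` is a hypothetical helper.

```python
import re
from pathlib import Path


def parse_l4t_release(text):
    """Return the L4T version as 'major.revision' (e.g. '32.4.3'), or None if not found."""
    match = re.search(r"R(\d+)\s*\(release\),\s*REVISION:\s*([\d.]+)", text)
    return f"{match.group(1)}.{match.group(2)}" if match else None


if __name__ == "__main__":
    release_file = Path("/etc/nv_tegra_release")  # assumed location on L4T images
    if release_file.exists():
        print("L4T version:", parse_l4t_release(release_file.read_text()))
    else:
        print("No /etc/nv_tegra_release found; this may not be a Jetson L4T image.")
```

The reported L4T version can then be looked up in NVIDIA's JetPack release notes to find the matching CUDA, cuDNN, and TensorRT versions before choosing a TensorFlow wheel.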