【18】基于pytorch的二分类网络模型的搭建

【1】模型简介

搭建了一个简单的神经网络,完成猫狗的分类,主要目的是是分析整个分类模型的流程。

分类模型的流程包含:(1)数据加载(2)模型搭建(3)模型训练(4)模型测试(5)模型优化

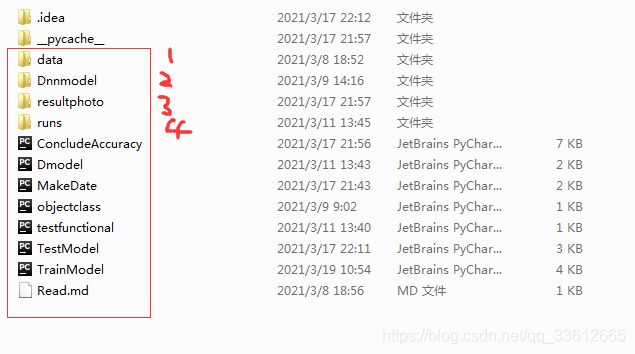

【2】文件简介

文件夹1:训练和测试的数据

文件夹2:模型结果存储

文件夹3: 训练过程中的图片存储

文件夹4:tensorboard可视化文件存储

【3】代码展示

ConcludeAccuracy.py

# -*- coding: utf-8 -*-#

#-------------------------------------------------------------------------------

# Name: testgraph

# Description:

# Author: Administrator

# Date: 2021/3/17

#-------------------------------------------------------------------------------

#导入依赖包

import numpy as np

import seaborn as sns

from sklearn.metrics import accuracy_score

from sklearn.metrics import precision_score

from sklearn.metrics import recall_score

from sklearn.metrics import confusion_matrix

from sklearn.metrics import roc_curve, auc

import matplotlib.pyplot as plt

import copy

import os

'''

文件简介:

该模块主要评估神经网络的计算结果,包含如下:

(1)计算分类的准确率、精确率、召回率;

(2)绘制ROC曲线;

reference data:

(1)https://blog.csdn.net/hfutdog/article/details/88085878

(2)https://blog.csdn.net/hfutdog/article/details/88079934

(3)https://www.jianshu.com/p/5df19746daf9

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~精确率~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

(1)Macro Average:宏平均是指在计算均值时使每个类别具有相同的权重,最后结果是每个类别的指标的算术平均值。

(2)Micro Average:微平均是指计算多分类指标时赋予所有类别的每个样本相同的权重,将所有样本合在一起计算各个指标

(3)如果每个类别的样本数量差不多,那么宏平均和微平均没有太大差异

(4)如果每个类别的样本数量差异很大,那么注重样本量多的类时使用微平均,注重样本量少的类时使用宏平均

(5)如果微平均大大低于宏平均,那么检查样本量多的类来确定指标表现差的原因

(6)如果宏平均大大低于微平均,那么检查样本量少的类来确定指标表现差的原因

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

'''

y_pred = [0, 1, 1, 1]

y_true = [0, 1, 0,1]

#计算真阳性 假阳性

def concludescore(y_pred,y_true):

score_result=[]

#计算准确率

Accuracy=accuracy_score(y_true, y_pred)

#计算精确率

Precision=precision_score(y_true, y_pred, average='macro')

#precision_score(y_true, y_pred, average='micro')

# precision_score(y_true, y_pred, average='weighted')

# precision_score(y_true, y_pred, average=None)

#计算召回率

Recall=recall_score(y_true, y_pred, average='macro')

# recall_score(y_true, y_pred, average='micro')

# recall_score(y_true, y_pred, average='weighted')

# recall_score(y_true, y_pred, average=None)

score_result.append(Accuracy)

score_result.append(Precision)

score_result.append(Recall)

return score_result

#y_true:真实值,y_score:预测值的得分,比如实际值为1,预测值为0.5

#二分类AUC曲线

def drawAUC_TwoClass(y_true,y_score):

fpr, tpr, thresholds =roc_curve(y_true,y_score)

roc_auc = auc(fpr, tpr) #auc为Roc曲线下的面积

#开始画ROC曲线

plt.plot(fpr, tpr, 'b',label='AUC = %0.2f'% roc_auc)

plt.legend(loc='lower right')#设定图例的位置,右下角

plt.plot([0,1],[0,1],'r--')

plt.xlim([-0.1,1.1])

plt.ylim([-0.1,1.1])

plt.xlabel('False Positive Rate') #横坐标是fpr

plt.ylabel('True Positive Rate') #纵坐标是tpr

plt.title('Receiver operating characteristic example')

if os.path.exists('./resultphoto')==False:

os.makedirs('./resultphoto')

plt.savefig('resultphoto/AUC_TwoClass.png', format='png')

plt.show()

#多分类AUC曲线

def drawAUC_ManyClass(y_true,y_score,num_class):

#将每类单独看作0/1二分类器,分别描述每类auc;

plt.figure()

for cls in range(num_class):

#计算每一个类别的auc

on_y_true=copy.deepcopy(y_true)

for on_class in range(len(on_y_true)):

if on_y_true[on_class]==cls:

on_y_true[on_class]=1

else:

on_y_true[on_class]=0

fpr, tpr, thresholds =roc_curve(on_y_true,y_score)

roc_auc = auc(fpr, tpr) #auc为Roc曲线下的面积

labelauc="AUC--"+str(cls)

#开始画ROC曲线

if cls/2==0:

color='g'

elif cls/2==1:

color='b'

else:

color='cyan'

plt.plot(fpr, tpr, color,label= labelauc+'= %0.2f'% roc_auc)

on_y_true.clear()

plt.legend(loc='lower right')#设定图例的位置,右下角

plt.plot([0,1],[0,1],'r--')

plt.xlim([-0.1,1.1])

plt.ylim([-0.1,1.1])

plt.xlabel('False Positive Rate') #横坐标是fpr

plt.ylabel('True Positive Rate') #纵坐标是tpr

plt.title('Receiver operating characteristic example')

if os.path.exists('./resultphoto')==False:

os.makedirs('./resultphoto')

plt.savefig('resultphoto/AUC_ManyClass.png', format='png')

plt.show()

#绘制混淆矩阵

def plot_confusion_matrix(cm, labels_name, title):

cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis] # 归一化

plt.imshow(cm, interpolation='nearest') # 在特定的窗口上显示图像

plt.title(title) # 图像标题

plt.colorbar()

num_local = np.array(range(len(labels_name)))

plt.xticks(num_local, labels_name, rotation=90) # 将标签印在x轴坐标上

plt.yticks(num_local, labels_name) # 将标签印在y轴坐标上

plt.ylabel('True label')

plt.xlabel('Predicted label')

if os.path.exists('./resultphoto')==False:

os.makedirs('./resultphoto')

plt.savefig('resultphoto/HAR_cm.png', format='png')

plt.show()

if __name__=="__main__":

#测试二分类

# y = np.array([0, 0, 0, 1])

# scores = np.array([0.1, 0.4, 0.35, 0.8])

# drawAUC_TwoClass(y,scores)

#测试多分类

# y_true=[0,1,2,3,0,1,2,3,1,2,0]

# y_score=[0.7,0.8,0.9,0.4,0.5,0.5,0.9,0.6,0.7,0.3,0.8]

# num_class=4

# drawAUC_ManyClass(y_true,y_score,num_class)

#绘制混淆矩阵

test_y=[0,1,0,0,0,1,1,1]

pred_y=[0,0,1,0,1,0,1,1]

cm = confusion_matrix(test_y, pred_y)

labels_name=[0,1]

plot_confusion_matrix(cm, labels_name, "HAR Confusion Matrix")Dmodel.py

# -*- coding: utf-8 -*-#

#-------------------------------------------------------------------------------

# Name: Dmodel

# Description:

# Author: Administrator

# Date: 2021/3/8

#-------------------------------------------------------------------------------

'''

模块简介:该模块完成了深度学习模型的搭建

'''

import torch

import torchvision

import torchvision.transforms as transforms

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

import torch.optim as optim

import matplotlib.pyplot as plt

import numpy as np

class Net(nn.Module): # 定义网络,继承torch.nn.Module

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5) # 卷积层

self.pool = nn.MaxPool2d(2, 2) # 池化层

self.conv2 = nn.Conv2d(6, 16, 5) # 卷积层

self.fc1 = nn.Linear(16 * 5 * 5, 120) # 全连接层

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 2) # 10个输出

def forward(self, x):

x = self.pool(F.relu(self.conv1(x))) # F就是torch.nn.functional

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1, 16 * 5 * 5)

# 从卷基层到全连接层的维度转换

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

if __name__=="__main__":

net=Net()

print(net)

MakeDate.py

# -*- coding: utf-8 -*-#

#-------------------------------------------------------------------------------

# Name: MakeDate

# Description:

# Author: Administrator

# Date: 2021/3/8

#-------------------------------------------------------------------------------

'''

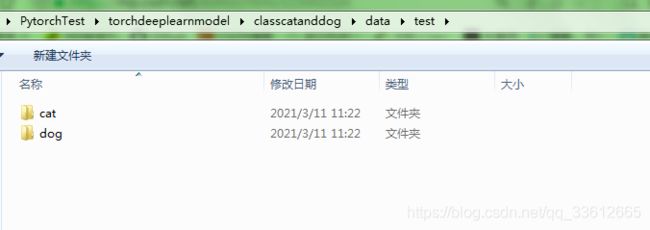

模块简介:该模块主要完成数据的加载,包含训练模型和测试数据的加载

(1)训练的数据:将不同类型的图像放置到不同的文件夹中,文件夹的名字为类别的名字

(2)测试的数据:将需要测试的数据放置到测试的文件夹中,同样也是相同的图像放置到相同的文件夹中。

测试的时候回打乱排布顺序。

'''

import torch

import torchvision

import torchvision.transforms as transforms

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

import torch.optim as optim

import matplotlib.pyplot as plt

import numpy as np

#加载训练数据

def loadtraindata(path):

trainset = torchvision.datasets.ImageFolder(path,

transform=transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor()]) )

trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)

return trainloader

#加载测试数据

def loadtestdata(path):

testset = torchvision.datasets.ImageFolder(path,

transform=transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor()]))

testloader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=True, num_workers=2)

return testloaderobjectclass.py

# -*- coding: utf-8 -*-#

#-------------------------------------------------------------------------------

# Name: class

# Description:

# Author: Administrator

# Date: 2021/3/8

#-------------------------------------------------------------------------------

#模块简介:该模块是待分类的名称,通过后面加载索引可以显示出相应的类别。

class_names = '''cat

dog

'''.split("\n")

#print(class_names[0])testfunctional.py

# -*- coding: utf-8 -*-#

#-------------------------------------------------------------------------------

# Name: testfunctional

# Description:

# Author: Administrator

# Date: 2021/3/11

#-------------------------------------------------------------------------------

import cv2 as cv

import numpy as np

import matplotlib.pyplot as plt

img=cv.imread("01.jpg")

npimg =np.array(img)

npimg[200:330][200:330]=[255,0,0]

print(npimg.shape)

plt.imshow(npimg)

plt.show()

TestModel.py

# -*- coding: utf-8 -*-#

#-------------------------------------------------------------------------------

# Name: TestModel

# Description:

# Author: Administrator

# Date: 2021/3/8

#-------------------------------------------------------------------------------

'''

模块简介:该模块完成的模型的测试,通过加载相应的函数,测试模型分类的结果,绘制AUC图和混淆矩阵

'''

import torch

import torchvision

import torchvision.transforms as transforms

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

import torch.optim as optim

import matplotlib.pyplot as plt

import numpy as np

import objectclass

from MakeDate import *

from ConcludeAccuracy import *

def reload_net():

trainednet = torch.load('net.pkl')

return trainednet

def imshow(img):

img = img / 2 + 0.5 # unnormalize

npimg = img.numpy()

plt.imshow(np.transpose(npimg, (1, 2, 0)))#改变每个轴对应的数值

plt.show()

def test(path):

testloader = loadtestdata(path)

net = reload_net()

dataiter = iter(testloader)

images, labels = dataiter.next()

#imshow(torchvision.utils.make_grid(images,nrow=5)) # nrow是每行显示的图片数量,缺省值为8

#%5s中的5表示占位5个字符

#print('GroundTruth: ' , " ".join('%5s' % objectclass.class_names[labels[j]] for j in range(25))) # 打印前25个GT(test集里图片的标签)

outputs = net(Variable(images))

_, predicted = torch.max(outputs.data, 1)

#获取分类的预测分数

sum_score=outputs.data.numpy()

row,col=sum_score.shape

score=[]

for i in range(row):

score.append(np.max(sum_score[i],axis=0))

true_label=labels.tolist()#张量转换为列表

#print('Predicted: ', " ".join('%5s' % objectclass.class_names[predicted[j]] for j in range(25)))

pre_value=predicted.tolist()

score_result=concludescore(pre_value,true_label)

print('准确率 精确率 召回率:\n',score_result)

#绘制ROC曲线

drawAUC_TwoClass(true_label, score)

#绘制混淆矩阵

cm = confusion_matrix(true_label, pre_value)

print('混淆矩阵:\n',cm)

labels_name=['cat','dog']

plot_confusion_matrix(cm, labels_name, "HAR Confusion Matrix")

# 打印前25个预测值

if __name__=="__main__":

path='F:\\PytorchTest\\torchdeeplearnmodel\\classcatanddog\\data\\test\\'

test(path)TrainModel.py

# -*- coding: utf-8 -*-#

#-------------------------------------------------------------------------------

# Name: TrainModel

# Description:

# Author: Administrator

# Date: 2021/3/8

#-------------------------------------------------------------------------------

'''

模块简介:完成模型的训练并保存模型

'''

import torch

import torchvision

import torchvision.transforms as transforms

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

import torch.optim as optim

import matplotlib.pyplot as plt

import numpy as np

from MakeDate import *

from Dmodel import Net

from tensorboardX import SummaryWriter

def trainandsave(TRAINPATH,SAVEPATH,EPOCHS):

trainloader = loadtraindata(TRAINPATH)

# 神经网络结构

net = Net()

optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9) # 学习率为0.001

criterion = nn.CrossEntropyLoss() # 损失函数也可以自己定义,我们这里用的交叉熵损失函数

writer=SummaryWriter('./runs')

# 训练部分

for epoch in range(EPOCHS): # 训练的数据量为5个epoch,每个epoch为一个循环

# 每个epoch要训练所有的图片,每训练完成200张便打印一下训练的效果(loss值)

running_loss = 0.0 # 定义一个变量方便我们对loss进行输出

for i, data in enumerate(trainloader, 0): # 这里我们遇到了第一步中出现的trailoader,代码传入数据

# enumerate是python的内置函数,既获得索引也获得数据

# get the inputs

inputs, labels = data # data是从enumerate返回的data,包含数据和标签信息,分别赋值给inputs和labels

# wrap them in Variable

inputs, labels = Variable(inputs), Variable(labels) # 转换数据格式用Variable

optimizer.zero_grad() # 梯度置零,因为反向传播过程中梯度会累加上一次循环的梯度

# forward + backward + optimize

outputs = net(inputs) # 把数据输进CNN网络net

loss = criterion(outputs, labels) # 计算损失值

#创建可视化图

writer.add_scalar("Loss/train",loss)

loss.backward() # loss反向传播

optimizer.step() # 反向传播后参数更新

#print(loss.data.item())

losssum=round(loss.data.item(),2)

running_loss += losssum # loss累加

#加入参数

writer.add_scalar("Loss/train_losssum",losssum)

# writer.add_histogram("Param/weight",outputs.weight,epoch)

if i % 50 == 0:

print('[%d, %5d] loss: %.3f' %(epoch + 1, i + 1, running_loss / 200)) # 然后再除以200,就得到这两百次的平均损失值

running_loss = 0.0 # 这一个200次结束后,就把running_loss归零,下一个200次继续使用

print('Finished Training')

# 保存神经网络,一种类型是保存参数,一种类型为保存模型

savemodelname='net.pkl'

savemodelname_params='net_params.pkl'

savepath1=SAVEPATH+savemodelname

savepath2=SAVEPATH+savemodelname_params

torch.save(net,savepath1) # 保存整个神经网络的结构和模型参数

torch.save(net.state_dict(), savepath2) # 只保存神经网络的模型参数

if __name__=="__main__":

#设置训练和模型保存路径

trainpath='F:\\PytorchTest\\torchdeeplearnmodel\\classcatanddog\\data\\train\\'

savepath='F:\\PytorchTest\\torchdeeplearnmodel\\classcatanddog\\Dnnmodel\\'

#设置超参数

EPOCHS=100 #训练的轮数

trainandsave(trainpath,savepath,EPOCHS)【4】结果展示