Multi-Modal Knowledge Graph Construction and Application: A Survey

Abstract:

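Problems: 1. Real-world knowledge is growing explosively; 2. Existing KGs are represented with pure symbols, which makes them hard for machines to understand.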

-> Proposed solution: Multi-Modal KGs, a key step toward human-level machine intelligence.

-> Result: MMKG

Overview:

1.definition of MMKGs;

2.the preliminaries on multi-modal tasks and techniques;

3.systematically review the challenges, progress, and opportunities in the construction and application of MMKGs;

4.analyses of the strengths and weaknesses of different solutions.

1.Introduction

On the one hand: the symbol "dog" versus the experience of dogs. An MMKG links a symbol to its meaning in the physical world;

On the other hand:

1.Relations and attributes can be extracted from images more effectively (e.g., PartOf: a keyboard and a screen are parts of a laptop);

2.With an MMKG, a model can produce a more informative entity-level sentence instead of a vague concept-level one (e.g., with an MMKG: "Donald Trump is making a speech"; without an MMKG: "A tall man with blond hair is making a speech").

Construction: (covers two opposite directions) [challenges, progress, opportunities]

- One is from images to symbols, i.e., labeling images with symbols in the KG

- The other is from symbols to images, i.e., grounding symbols in the KG to images

Application:

- In-MMKG: applications that aim to resolve quality or integration issues of the MMKG itself;

- Out-of-MMKG: general multi-modal tasks that an MMKG can help with.

2.Definition and Preliminaries

2.1 first defines two ways of representing MMKGs;

2.2 reviews some preliminaries on multi-modal tasks and techniques;

2.3 closes with a discussion of the connections between MMKGs and the existing multi-modal tasks and techniques.

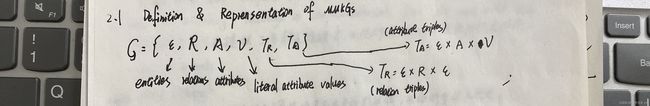

2.1 Definitions and Representation of MMKGs

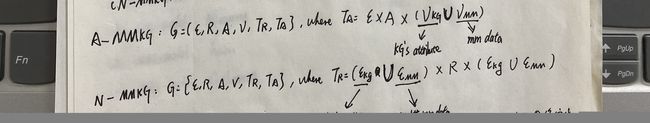

Two different ways of representing MMKGs:

- A-MMKG: takes multi-modal data as particular attribute values of entities/concepts

- N-MMKG: takes multi-modal data as entities in the KG
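To make the difference concrete, here is a minimal sketch of the two representations as plain triples. The `Triple` class, the relation names (`hasImage`, `imageOf`, `similarTo`, `contains`) and the file and node identifiers are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    head: str
    relation: str
    tail: str


# A-MMKG: the image is only an attribute value of an entity.
# It appears as the tail of a triple and has no edges of its own.
a_mmkg = [
    Triple("Laptop", "hasPart", "Keyboard"),
    Triple("Laptop", "hasImage", "laptop_01.jpg"),
]

# N-MMKG: the image is itself an entity (node), so it can also be
# the head of a triple and take part in image-to-image relations.
n_mmkg = [
    Triple("Laptop", "hasPart", "Keyboard"),
    Triple("img:laptop_01", "imageOf", "Laptop"),
    Triple("img:laptop_01", "similarTo", "img:laptop_02"),
    Triple("img:laptop_01", "contains", "img:keyboard_01"),
]


def heads(triples):
    """Return every identifier that has at least one outgoing edge."""
    return {t.head for t in triples}
```

In this toy data, `"laptop_01.jpg"` never appears in `heads(a_mmkg)`, while `"img:laptop_01"` does appear in `heads(n_mmkg)`, which is the structural difference between the two representations.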

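An N-MMKG usually abstracts an image into several image descriptors, which are typically feature vectors summarizing the image entity at the pixel level. Relations between images can therefore be obtained by simple computation (e.g., image similarity as the inner product of the image descriptor vectors).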
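The inner-product similarity can be sketched as follows. A gray-level histogram is used here purely as a stand-in pixel-level descriptor; the function names, the bin count, and the synthetic "images" (flat lists of gray values) are assumptions made for this example.

```python
import math


def gray_histogram(pixels, bins=8):
    """L2-normalised histogram of gray levels (0-255) over a flat pixel list."""
    width = 256 // bins
    hist = [0.0] * bins
    for p in pixels:
        hist[min(p // width, bins - 1)] += 1.0
    norm = math.sqrt(sum(h * h for h in hist))
    return [h / norm for h in hist] if norm > 0 else hist


def inner_product(a, b):
    """Similarity of two descriptors as their inner product."""
    return sum(x * y for x, y in zip(a, b))


# Synthetic images: two dark ones and one bright one (illustrative data only).
dark_a = [i % 64 for i in range(256)]
dark_b = [(i * 7) % 48 for i in range(256)]
bright = [192 + i % 64 for i in range(256)]

desc_dark_a = gray_histogram(dark_a)
desc_dark_b = gray_histogram(dark_b)
desc_bright = gray_histogram(bright)

sim_dark_dark = inner_product(desc_dark_a, desc_dark_b)
sim_dark_bright = inner_product(desc_dark_a, desc_bright)
```

Because the descriptors are L2-normalised, the inner product equals the cosine similarity: the two dark images score close to 1, while the dark and bright images share no histogram bins and score 0.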

2.2 Preliminaries on Multi-Modal Tasks and Techniques

- well-studied multi-modal tasks

- multi-modal learning techniques

- important progress on multi-modal pretrained language models

Multi-Modal tasks

(a problem is characterized as multi-modal if it involves data of multiple modalities)

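Multi-modal tasks integrate and model multiple communicative modalities in order to acquire knowledge or understanding from multi-modal data.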

Multi-Modal Learning

Multi-modal learning mainly models the correspondences between modalities in order to understand multi-modal data.

Challenges:

- Multi-Modal Representation

- Multi-Modal Translation

- Multi-Modal Alignment

- Multi-Modal Fusion

- Multi-Modal Co-Learning

Multi-Modal Pretrained Language Model

In recent years, researchers have designed a number of self-supervised pretraining tasks.

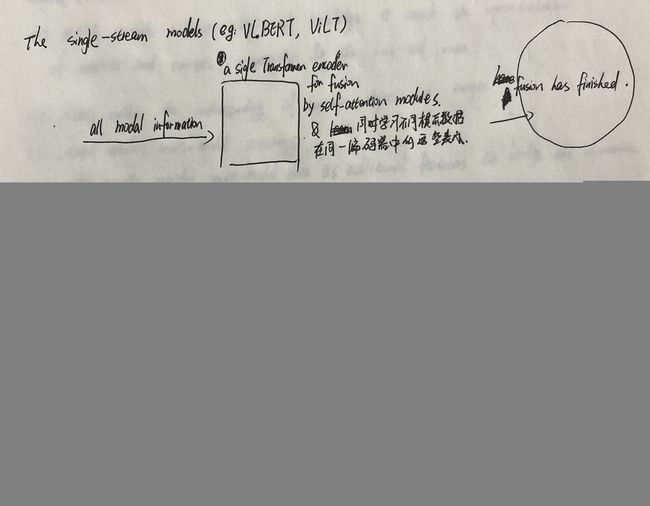

In terms of the Transformer-based fusion process across modalities, multi-modal pretrained language models can be divided into:

- single-stream models

- two-stream models
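The two families can be contrasted with a toy sketch. It assumes the common reading of the terms: a single-stream model runs self-attention over one joint sequence of text and image tokens, while a two-stream model encodes each modality separately and then exchanges information through cross-attention. The attention here has no learned projections, and all function names and token values are made up for illustration.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention without learned projections (a simplification)."""
    dim = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def single_stream(text_tokens, image_tokens):
    # One joint sequence: every token attends to tokens of both modalities.
    joint = text_tokens + image_tokens
    return attention(joint, joint, joint)


def two_stream(text_tokens, image_tokens):
    # Separate self-attention per modality, then cross-attention between them.
    text = attention(text_tokens, text_tokens, text_tokens)
    image = attention(image_tokens, image_tokens, image_tokens)
    text_out = attention(text, image, image)   # text queries attend to image
    image_out = attention(image, text, text)   # image queries attend to text
    return text_out, image_out


text_tokens = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
image_tokens = [[0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0], [0.5, 0.5, 0.0, 0.0]]

joint_out = single_stream(text_tokens, image_tokens)
text_out, image_out = two_stream(text_tokens, image_tokens)
```

The single-stream call returns one fused sequence of five tokens, whereas the two-stream call keeps the text (two tokens) and image (three tokens) sequences separate after fusion.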

2.3 Discussion

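Although a great deal of research has applied multi-modal learning techniques and multi-modal pretrained language models to a variety of multi-modal tasks, introducing multi-modal knowledge to improve the performance of existing multi-modal tasks is still an emerging trend. MMKGs can benefit these downstream tasks in the following ways:

- MMKG provides sufficient background knowledge to enrich the representation of entities and concepts, especially for the long-tail ones.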

- MMKG enables the understanding of unseen objects in images

- MMKG enables multi-modal reasoning

- MMKG usually provides multi-modal data as additional features to bridge the information gaps in some NLP tasks.

PS:

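The long-tail problem:

The long tail is a data distribution commonly seen in real production data. Its key feature is that the portion accounting for a relatively small share of the total mass contains a large number of distinct instances. For example, take 1,000,000 Weibo posts on a given topic and rank their characters by frequency: apart from the head, there is a very large number of low-frequency characters.

Common solutions to the long-tail problem:

- High-frequency part: manual filtering plus manual annotation, producing high-quality usable data.

- Low-frequency part: automated construction, producing usable data that meets a specified quality level.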
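A minimal sketch of splitting a corpus into a head and a tail by frequency, so that the two parts can be routed to manual and automated processing respectively. The function name, the `head_coverage` threshold, and the toy corpus are assumptions for illustration; real data would need a threshold chosen for the task.

```python
from collections import Counter


def split_head_tail(tokens, head_coverage=0.75):
    """Split distinct tokens into a head covering `head_coverage` of all
    occurrences and a tail holding the remaining low-frequency tokens."""
    counts = Counter(tokens)
    total = sum(counts.values())
    head, tail = [], []
    covered = 0
    for item, freq in counts.most_common():
        if covered < head_coverage * total:
            head.append(item)
            covered += freq
        else:
            tail.append(item)
    return head, tail


# Toy corpus: two frequent characters and eight that occur once each.
corpus = list("a" * 16 + "b" * 8 + "cdefghij")
head, tail = split_head_tail(corpus, head_coverage=0.75)
```

Here the head contains only two distinct characters but covers three quarters of all occurrences, while the tail holds four times as many distinct characters with one occurrence each.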

To sum up:

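Without the support of large-scale MMKGs, earlier efforts to exploit multi-modal information remained limited. We envision that many tasks can be further improved once large-scale, high-quality MMKGs become available.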