架构垂直伸缩和水平伸缩区别

巨型图上的深度学习 (Deep learning on giant graphs)

TL;DR: One of the challenges that have so far precluded the wide adoption of graph neural networks in industrial applications is the difficulty to scale them to large graphs such as the Twitter follow graph. The interdependence between nodes makes the decomposition of the loss function into individual nodes’ contributions challenging. In this post, we describe a simple graph neural network architecture developed at Twitter that can work on very large graphs.

TL; DR: 迄今为止,阻碍图神经网络在工业应用中广泛采用的挑战之一是难以将它们缩放到大型图(例如Twitter跟随图)。 节点之间的相互依赖性使损失函数分解成单个节点的贡献变得困难。 在这篇文章中,我们描述了一种在Twitter上开发的简单图神经网络架构,该架构可以处理非常大的图。

This post was co-authored with Fabrizo Frasca and Emanuele Rossi.

这篇文章与 Fabrizo Frasca 和 Emanuele Rossi 合着 。

Graph Neural Networks (GNNs) are a class of ML models that have emerged in recent years for learning on graph-structured data. GNNs have been successfully applied to model systems of relation and interactions in a variety of different domains, including social science, computer vision and graphics, particle physics, chemistry, and medicine. Until recently, most of the research in the field has focused on developing new GNN models and testing them on small graphs (with Cora, a citation network containing only about 5K nodes, still being widely used [1]); relatively little effort has been invested in dealing with large-scale applications. On the other hand, industrial problems often deal with giant graphs, such as Twitter or Facebook social networks containing hundreds of millions of nodes and billions of edges. A big part of methods described in the literature are unsuitable for these settings.

摹拍摄和神经网络(GNNS)是一类ML车型已经出现在最近几年的学习上图的结构化数据。 GNN已成功应用于各种不同领域的关系和相互作用的模型系统,包括社会科学,计算机视觉和图形,粒子物理学,化学和医学。 直到最近,该领域的大多数研究都集中在开发新的GNN模型并在小图形上对其进行测试(使用仅包含约5K节点的引用网络Cora,至今仍在广泛使用[1]); 在处理大规模应用程序上投入了相对较少的精力。 另一方面,工业问题通常涉及巨型图,例如包含数亿节点和数十亿边缘的Twitter或Facebook社交网络。 文献中描述的方法的很大一部分不适用于这些设置。

In a nutshell, graph neural networks operate by aggregating the features from local neighbour nodes. Arranging the d-dimensional node features into an n×d matrix X (here n denotes the number of nodes), the simplest convolution-like operation on graphs implemented in the popular GCN model [2] combines node-wise transformations with feature diffusion across adjacent nodes

简而言之,图神经网络通过聚集来自本地邻居节点的特征进行操作。 将d维节点特征排列到n × d矩阵X中 (此处n表示节点数),流行的GCN模型 [2]中实现的对图的最简单的类似卷积运算将结点变换与整个特征扩散相邻节点

Y = ReLU(AXW).

Y = ReLU( AXW )。

Here W is a learnable matrix shared across all nodes and A is a linear diffusion operator amounting to a weighted average of features in a neighbourhood [3]. Multiple layers of this form can be applied in sequence like in traditional CNNs. Graph neural networks can be designed to make predictions at the level of nodes (e.g. for applications such as detecting malicious users in a social network), edges (e.g. for link prediction, a typical scenario in recommender systems), or the entire graphs (e.g. predicting chemical properties of molecular graphs). The node-wise classification task can be carried out, for instance, by a two-layer GCN of the form

这里W是在所有节点上共享的可学习矩阵, A是线性扩散算子,等于邻域中特征的加权平均值[3]。 这种形式的多层可以像传统的CNN一样顺序应用。 可以将图神经网络设计为在节点(例如,用于诸如检测社交网络中的恶意用户的应用程序),边缘(例如,用于链接预测,推荐系统中的典型场景),或整个图(例如,预测分子图的化学性质)。 节点分类任务可以例如通过以下形式的两层GCN来执行

Y = softmax(A ReLU(AXW)W’).

Y = softmax( A ReLU( AXW ) W ')。

Why is scaling graph neural networks challenging? In the aforementioned node-wise prediction problem, the nodes play the role of samples on which the GNN is trained. In traditional machine learning settings, it is typically assumed that the samples are drawn from some distribution in a statistically independent manner. This, in turn, allows to decompose the loss function into the individual sample contributions and employ stochastic optimisation techniques working with small subsets (mini-batches) of the training data at a time. Virtually every deep neural network architecture is nowadays trained using mini-batches.

w ^ HY被缩放图形神经网络的挑战? 在上述的逐节点预测问题中,节点扮演训练GNN的样本的角色。 在传统的机器学习设置中,通常假设样本是从某种分布中以统计独立的方式提取的。 反过来,这又允许将损失函数分解为单个样本贡献,并采用随机优化技术来一次处理训练数据的小子集(微型批次)。 如今,几乎每个深度神经网络体系结构都使用小型批次进行培训。

In graphs, on the other hand, the fact that the nodes are inter-related via edges creates statistical dependence between samples in the training set. Moreover, because of the statistical dependence between nodes, sampling can introduce bias — for instance it can make some nodes or edges appear more frequently than on others in the training set — and this ‘side-effect’ would need proper handling. Last but not least, one has to guarantee that the sampled subgraph maintains a meaningful structure that the GNN can exploit.

另一方面,在图中,节点通过边相互关联的事实在训练集中的样本之间产生了统计依赖性。 此外,由于节点之间的统计依赖性,采样可能会引入偏差(例如,它可能使某些节点或边缘比训练集中的其他节点或边缘出现得更频繁),并且这种“副作用”需要适当处理。 最后但并非最不重要的一点是,必须保证采样的子图保持GNN可以利用的有意义的结构。

In many early works on graph neural networks, these problems were swept under the carpet: architectures such as GCN and ChebNet [2], MoNet [4] and GAT [5] were trained using full-batch gradient descent. This has led to the necessity to hold the whole adjacency matrix of the graph and the node features in memory. As a result, for example, an L-layer GCN model has time complexity (Lnd²) and memory complexity (Lnd +Ld²) [7], prohibitive even for modestly-sized graphs.

在许多关于图神经网络的早期工作中,这些问题被掩盖了:使用全梯度梯度下降训练了诸如GCN和ChebNet [2],MoNet [4]和GAT [5]之类的体系结构。 这导致必须将图形的整个邻接矩阵和节点特征保存在内存中。 其结果是,例如,一个L -层GCN模型具有时间复杂度(LND²)和存储复杂(LND + Ld的 ²)[7],望而却步即使对于适度大小的曲线图。

The first work to tackle the problem of scalability was GraphSAGE [8], a seminal paper of Will Hamilton and co-authors. GraphSAGE used neighbourhood sampling combined with mini-batch training to train GNNs on large graphs (the acronym SAGE, standing for “sample and aggregate”, is a reference to this scheme). The main idea is that in order to compute the training loss on a single node with an L-layer GCN, only the L-hop neighbours of that node are necessary, as nodes further away in the graph are not involved in the computation. The problem is that, for graphs of the “small-world” type, such as social networks, the 2-hop neighbourhood of some nodes may already contain millions of nodes, making it too big to be stored in memory [9]. GraphSAGE tackles this problem by sampling the neighbours up to the L-th hop: starting from the training node, it samples uniformly with replacement [10] a fixed number k of 1-hop neighbours, then for each of these neighbours it again samples k neighbours, and so on for L times. In this way, for every node we are guaranteed to have a bounded L-hop sampled neighbourhood of (kᴸ) nodes. If we then construct a batch with b training nodes, each with its own independent L-hop neighbourhood, we get to a memory complexity of (bkᴸ) independent of the graph size n. The computational complexity of one batch of GraphSAGE is (bLd²kᴸ).

吨他解决可扩展性的问题,第一个工作是GraphSAGE [8],威尔·汉密尔顿和共同作者的开创性论文。 GraphSAGE使用邻域采样结合小批量训练在大型图上训练GNN(首字母缩写SAGE代表“样本和集合”,是此方案的参考)。 主要思想是,为了在具有L层GCN的单个节点上计算训练损失,仅需要该节点的L跳邻居,因为图中更远的节点不参与计算。 问题在于,对于“ 小世界 ”类型的图,例如社交网络,某些节点的2跳邻域可能已经包含数百万个节点,从而使其太大而无法存储在内存中[9]。 GraphSAGE通过对直到第L跳的邻居进行采样来解决此问题:从训练节点开始,它用替换[10]固定数目k的1跳邻居进行均匀采样,然后对这些邻居中的每个邻居再次采样k L个邻居,等等。 这样,对于每个节点,我们都可以保证有( kᴸ )个节点的有界L跳采样邻域。 如果然后用b个训练节点构造一个批处理节点,每个训练节点都有自己独立的L跳邻域,我们得到的存储复杂度为( bkᴸ ),与图的大小n无关。 一批GraphSAGE的计算复杂度为(BLD²kᴸ)。

Neighbourhood sampling procedure of GraphSAGE. A batch of b nodes is subsampled from the full graph (in this example, b=2 and the red and light yellow nodes are used for training). On the right, the 2-hop neighbourhood graphs sampled with k=2, which are independently used to compute the embedding and therefore the loss for the red and light yellow nodes. GraphSAGE的邻域采样过程。 从完整图中对b个节点进行了批量采样(在此示例中,b = 2,红色和浅黄色节点用于训练)。 在右侧,以k = 2采样的2跳邻域图被独立用于计算嵌入,因此计算出红色和浅黄色节点的损耗。A notable drawback of GraphSAGE is that sampled nodes might appear multiple times, thus potentially introducing a lot of redundant computation. For instance, in the figure above the dark green node appears in both the l-hop neighbourhood for the two training nodes, and therefore its embedding is computed twice in the batch. With the increase of the batch size b and the number of samples k, the amount of redundant computation increases as well. Moreover, despite having (bkᴸ) nodes in memory for each batch, the loss is computed on only b of them, and therefore, the computation for the other nodes is also in some sense wasted.

GraphSAGE的一个显着缺点是,采样的节点可能会出现多次,因此可能引入大量的冗余计算。 例如,在上图中,深绿色节点出现在两个训练节点的l跳附近,因此在批次中两次对其进行嵌入。 随着批次大小b和样本数量k的增加,冗余计算量也增加。 此外,尽管每批内存中有( bkᴸ )个节点,但仅对其中的b个进行了损耗计算,因此,在某种意义上,其他节点的计算也被浪费了。

Multiple follow-up works focused on improving the sampling of mini-batches in order to remove redundant computation of GraphSAGE and make each batch more efficient. The most recent works in this direction are ClusterGCN [11] and GraphSAINT [12], which take the approach of graph-sampling (as opposed to neighbourhood-sampling of GraphSAGE). In graph-sampling approaches, for each batch, a subgraph of the original graph is sampled, and a full GCN-like model is run on the entire subgraph. The challenge is to make sure that these subgraphs preserve most of the original edges and still present a meaningful topological structure.

多项后续工作的重点是改进小型批次的采样,以消除GraphSAGE的冗余计算并提高每批的效率。 这方面的最新工作是ClusterGCN [11]和GraphSAINT [12],它们采用了图抽样的方法(与GraphSAGE的邻域抽样相反)。 在图采样方法中,对于每个批次,都会对原始图的一个子图进行采样,然后在整个子图上运行一个完整的类似GCN的模型。 面临的挑战是确保这些子图保留大多数原始边缘,并且仍然呈现有意义的拓扑结构。

ClusterGCN achieves this by first clustering the graph. Then, at each batch, the model is trained on one cluster. This allows the nodes in each batch to be as tightly connected as possible.

ClusterGCN通过首先对图形进行聚类来实现此目的。 然后,在每一批次中,在一个群集上训练模型。 这允许每批中的节点尽可能紧密地连接。

GraphSAINT proposes instead a general probabilistic graph sampler constructing training batches by sampling subgraphs of the original graph. The graph sampler can be designed according to different schemes: for example, it can perform uniform node sampling, uniform edge sampling, or “importance sampling” by using random walks to compute the importance of nodes and use it as the probability distribution for sampling.

图圣 提出了一个通用的概率图采样器,它通过对原始图的子图进行采样来构造训练批次。 可以根据不同的方案设计图形采样器:例如,它可以通过使用随机游走来计算节点的重要性并将其用作采样的概率分布,来执行统一节点采样,统一边缘采样或“重要性采样”。

It is also important to note that one of the advantages of sampling is that during training it acts as a sort of edge-wise dropout, which regularises the model and can help the performance [13]. However, edge dropout would require to still see all the edges at inference time, which is not feasible here. Another effect graph sampling might have is reducing the bottleneck [14] and the resulting “over-squashing” phenomenon that stems from the exponential expansion of the neighbourhood.

还需要注意的是,采样的优点之一是,在训练过程中,采样起到了某种边沿下降的作用,这使模型规则化并可以帮助提高性能[13]。 但是,边缘丢失将要求仍在推理时看到所有边缘,这在此处不可行。 效果图采样的另一个效果可能是减少瓶颈[14],以及由于邻域的指数扩展而导致的“过度挤压”现象。

In our recent paper with Ben Chamberlain, Davide Eynard, and Federico Monti [15], we investigated the extent to which it is possible to design simple, sampling-free architectures for node-wise classification problems. You may wonder why one would prefer to abandon sampling strategies in light of the indirect benefits we have just highlighted above. There are a few reasons for that. First, instances of node classification problems may significantly vary from one another and, to the best of our knowledge, no work so far has systematically studied when sampling actually provides positive effects other than just alleviating computational complexity. Second, the implementation of sampling schemes introduces additional complexity and we believe a simple, strong, sampling-free, scalable baseline architecture is appealing.

在我们与Ben Chamberlain,Davide Eynard和Federico Monti共同撰写的最新论文中[15],我们研究了针对节点分类问题设计简单,无采样架构的可能性。 您可能想知道,鉴于我们上面刚刚强调的间接好处,为什么有人更愿意放弃抽样策略。 有几个原因。 首先,节点分类问题的实例可能彼此之间有很大的不同,据我们所知,到目前为止, 当采样实际上提供了积极的效果而不仅仅是减轻计算复杂性时 ,还没有系统地研究任何工作。 其次,采样方案的实施会带来额外的复杂性,我们相信简单,强大,无采样,可扩展的基准架构很有吸引力。

Our approach is motivated by several recent empirical findings. First, simple fixed aggregators (such as GCN) were shown to often outperform in many cases more complex ones, such as GAT or MPNN [16]. Second, while deep learning success was built on models with a lot of layers, in graph deep learning it is still an open question whether depth is needed. In particular, Wu and coauthors [17] argue that a GCN model with a single multi-hop diffusion layer can perform on par with models with multiple layers.

我们的方法是基于最近的一些经验发现。 首先,在许多情况下,简单的固定聚合器(例如GCN)在许多复杂的情况下(例如GAT或MPNN)通常都优于[16]。 第二,虽然深度学习成功是建立在具有许多层的模型上的,但在图深度学习中, 是否需要深度仍然是一个悬而未决的问题。 尤其是,Wu和合著者[17]认为具有单个多跳扩散层的GCN模型可以与具有多个层的模型媲美。

By combining different, fixed neighbourhood aggregators within a single convolutional layer, it is possible to obtain an extremely scalable model without resorting to graph sampling [18]. In other words, all the graph related (fixed) operations are in the first layer of the architecture and can therefore be precomputed; the pre-aggregated information can then be fed as inputs to the rest of the model which, due to the lack of neighbourhood aggregation, boils down to a multi-layer perceptron (MLP). Importantly, the expressivity in the graph filtering operations can still be retained even with such a shallow convolutional scheme by employing several, possibly specialised and more complex, diffusion operators. As an example, it is possible to design operators to include local substructure counting [19] or graph motifs [20].

通过在单个卷积层中组合不同的,固定的邻域聚合器,可以在不依靠图采样的情况下获得极具可扩展性的模型[18]。 换句话说,所有与图相关的(固定)操作都在体系结构的第一层中,因此可以进行预先计算。 然后,可以将预先聚合的信息作为模型其余部分的输入,由于缺少邻域聚合,因此可以归结为多层感知器(MLP)。 重要的是,即使采用这种浅卷积方案,通过采用几个可能是专门的,更复杂的扩散算子,仍可以保留图形过滤操作中的表达能力。 例如,可以将算子设计为包括局部子结构计数 [19]或图形主题[20]。

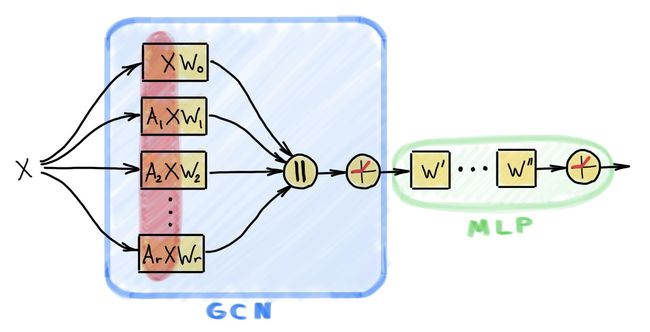

SIGN architecture comprises one GCN-like layer with multiple linear diffusion operators possibly acting on multi-hop neighbourhoods, followed by MLP applied node-wise. The key to its efficiency is the pre-computation of the diffused features (marked in red).

SIGN体系结构包括一个类似GCN的层,其中可能具有多个线性扩散算符,它们可能作用于多跳邻域,随后是在节点级应用MLP。 其效率的关键是对扩散特征(以红色标记)进行预先计算。

The proposed scalable architecture, which we call Scalable Inception-like Graph Network (SIGN) has the following form for node-wise classification tasks:

所提出的可伸缩体系结构,我们称为可伸缩初始类图网络(SIGN),具有以下形式用于节点分类任务:

Y = softmax(ReLU(XW₀ | A₁XW₁ | A₂XW₂ | … | AᵣXWᵣ) W’)

Y = SOFTMAX(RELU(XW₀|一个 ₁XW₁| A₂XW₂| ... | AᵣXWᵣ)W“)

Here Aᵣ are linear diffusion matrices (such as a normalised adjacency matrix, its powers, or a motif matrix) and Wᵣ and W’ are learnable parameters. As depicted in the figure above, the network can be made deeper with additional node-wise layers,

这里甲 ᵣ是线性的扩散矩阵(例如归一化的邻接矩阵,它的权力,或基序矩阵)和Wᵣ和W'是可学习的参数。 如上图所示,可以通过附加的节点层使网络更深,

Y = softmax(ReLU(…ReLU(XW₀ | A₁XW₁ | … | AᵣXWᵣ) W’)… W’’)

Y = SOFTMAX(RELU(... RELU(XW₀|一个 ₁XW₁| ... | AᵣXWᵣ)W“)... W '')

Finally, when employing different powers for the same diffusion operator (e.g. A₁=B¹, A₂=B², etc.), the graph operations effectively aggregate from neighbours in further and further hops, akin to having convolutional filters of different receptive fields within the same network layer. This analogy to the popular inception module in classical CNNs explains the name of the proposed architecture [21].

最后,采用不同的功率时为相同的扩散操作者(例如甲 ₁= B¹,A₂= B²等),从在进一步的和进一步的跳邻居有效聚集体,类似于具有不同接受的卷积滤波器的图操作同一网络层中的字段。 这与经典CNN中流行的初始模块的类比解释了所提议体系结构的名称[21]。

As already mentioned, the matrix products A₁X,…, AᵣX in the above equations do not depend on the learnable model parameters and can thus be pre-computed. In particular, for very large graphs this pre-computation can be scaled efficiently using distributed computing infrastructures such as Apache Spark. This effectively reduces the computational complexity of the overall model to that of an MLP. Moreover, by moving the diffusion to the pre-computation step, we can aggregate information from all the neighbours, avoiding sampling and the possible loss of information and bias that comes with it [22].

如已经提到的,矩阵产品甲 ₁X,...,AᵣX在上述等式不依赖于可学习模型参数,因此可以预先计算。 特别是对于非常大的图,可以使用分布式计算基础结构(例如Apache Spark)有效地缩放此预计算。 这有效地将整个模型的计算复杂度降低到了MLP。 此外,通过将扩散转移到预计算步骤,我们可以汇总来自所有邻居的信息,避免采样以及随之而来的信息丢失和偏差[22]。

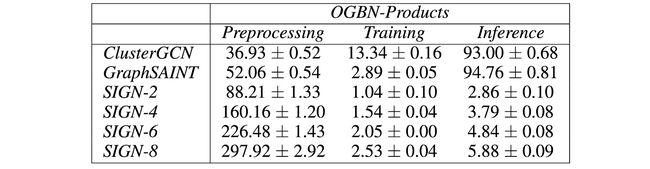

The main advantage of SIGN is its scalability and efficiency, as it can be trained using standard mini-batch gradient descent. We found our model to be up to two orders of magnitude faster than ClusterGCN and GraphSAINT at inference time, while also being significantly faster at training time (all this while maintaining accuracy performances very close to that of the state-of-the-art GraphSAINT).

SIGN的吨他主要优点是它的可扩展性和效率,因为它可以使用标准的小批量梯度下降的培训。 我们发现我们的模型在推理时比ClusterGCN和GraphSAINT快多达两个数量级,同时在训练时也快得多(所有这些都保持了精确度与最新GraphSAINT相当) )。

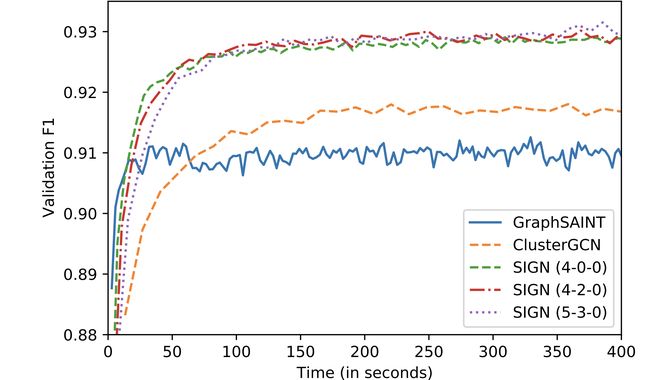

Convergence of different methods on the OGBN-Products dataset. Variants of SIGN converge faster and to a higher validation F1 score than GraphSaint and ClusterGCN. OGBN-Products数据集上不同方法的收敛性。 与GraphSaint和ClusterGCN相比,SIGN的变体收敛更快,并且验证F1得分更高。 Preprocessing, training and inference time (in seconds) of different methods on the OGBN-Products dataset. While having a slower pre-processing, SIGN is faster at training and nearly two orders of magnitude faster at inference time than other methods. OGBN-Products数据集上不同方法的预处理,训练和推断时间(以秒为单位)。 与其他方法相比,尽管SIGN的预处理速度较慢,但其在训练中的速度更快,在推理时的速度快了将近两个数量级。Moreover, our model supports any diffusion operators. For different types of graphs, different diffusion operators may be necessary, and we found some tasks to benefit from having motif-based operators such as triangle counts.

而且,我们的模型支持任何扩散算子。 对于不同类型的图形,可能需要不同的扩散算子,并且我们发现一些任务可以受益于使用基于图案的算子,例如三角形数。

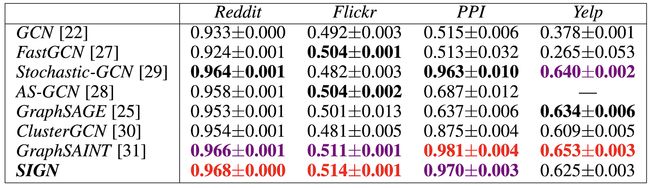

Performance of SIGN and other scalable methods on node-wise classification tasks on some popular datasets. Diffusion operators based on triangular motifs gave an interesting performance gain on Flickr and provided some improvements on PPI and Yelp. SIGN和其他可伸缩方法在某些流行数据集上的节点分类任务上的性能。 基于三角形图案的扩散算子在Flickr上获得了令人感兴趣的性能提升,并对PPI和Yelp进行了一些改进。Despite the limitation of having only a single graph convolutional layer and linear diffusion operators, SIGN performs very well in practice, achieving results on par or even better than much more complex models. Given its speed and simplicity of implementation, we envision SIGN to be a simple baseline graph learning method for large-scale applications. Perhaps more importantly, the success of such a simple model leads to a more fundamental question: do we really need deep graph neural networks? We conjecture that in many problems of learning on social networks and “small world” graphs, we should use richer local structures rather than resort to brute-force deep architectures. Interestingly, traditional CNNs architectures evolved according to an opposite trend (deeper networks with smaller filters) because of computational advantages and the ability to compose complex features of simpler ones. We are not sure if the same approach is right for graphs, where compositionality is much more complex (e.g. certain structures cannot be computed by message passing, no matter how deep the network is). For sure, more elaborate experiments are still needed to test this conjecture.

d espite在实践中仅具有单个图表卷积层和线性扩散运营商,SIGN进行很好的限制,实现媲美,甚至优于更复杂的模型的结果。 鉴于其实现的速度和简便性,我们设想SIGN是用于大型应用程序的简单基线图学习方法。 也许更重要的是,这种简单模型的成功带来了一个更根本的问题: 我们真的需要深度图神经网络吗? 我们推测在社交网络和“小世界”图学习的许多问题中,我们应该使用更丰富的本地结构,而不是采用蛮力的深度架构。 有趣的是,传统的CNN架构因其计算优势和组合较简单特征的复杂功能而根据相反的趋势(具有更小的滤波器的更深网络)发展了。 我们不确定相同的方法是否适用于图,图的组成要复杂得多(例如,无论网络有多深,某些结构都无法通过消息传递来计算)。 可以肯定的是,仍然需要进行更复杂的实验来检验这一猜想。

[1] The recently introduced Open Graph Benchmark now offers large-scale graphs with millions of nodes. It will probably take some time for the community to switch to it.

[1]最近引入的Open Graph Benchmark现在提供具有数百万个节点的大规模图形。 社区可能要花一些时间才能转向它。

[2] T. Kipf and M. Welling, Semi-supervised classification with graph convolutional networks (2017). Proc. ICLR introduced the popular GCN architecture, which was derived as a simplification of the ChebNet model proposed by M. Defferrard et al. Convolutional neural networks on graphs with fast localized spectral filtering (2016). Proc. NIPS.

[2] T. Kipf和M.Welling ,图卷积网络的半监督分类 (2017年)。 进程 ICLR引入了流行的GCN体系结构,该体系结构是M.Defferrard等人提出的ChebNet模型的简化。 具有快速局部频谱滤波的图上的卷积神经网络 (2016)。 进程 NIPS。

[3] As the diffusion operator, Kipf and Welling used the graph adjacency matrix with self-loops (i.e., the node itself contributes to its feature update), but other choices are possible as well. The diffusion operation can be made feature-dependent of the form A(X)X (i.e., it is still a linear combination of the node features, but the weights depend on the features themselves) like in MoNet [4] or GAT [5] models, or completely nonlinear, (X), like in message-passing neural networks (MPNN) [6]. For simplicity, we focus the discussion on the GCN model applied to node-wise classification.

[3]作为扩散算子,Kiff和Welling使用具有自环的图邻接矩阵(即,节点本身对其特征更新有所贡献),但是其他选择也是可能的。 可以使扩散操作取决于形式A ( X ) X (即,它仍然是节点特征的线性组合,但权重取决于特征本身),就像在MoNet [4]或GAT [5]中一样]模型或完全非线性的模型, X ( X ),就像在消息传递神经网络(MPNN)[6]中一样。 为简单起见,我们将讨论重点放在应用于节点分类的GCN模型上。

[4] F. Monti et al., Geometric Deep Learning on Graphs and Manifolds Using Mixture Model CNNs (2017). In Proc. CVPR.

[4] F.Monti等人,《 使用混合模型CNN在图形和流形上进行几何深度学习》 (2017)。 在过程中。 CVPR。

[5] P. Veličković et al., Graph Attention Networks (2018). In Proc. ICLR.

[5] P.Veličković等人, Graph Attention Networks (2018)。 在过程中。 ICLR。

[6] J. Gilmer et al., Neural message passing for quantum chemistry (2017). In Proc. ICML.

[6] J. Gilmer等人, 《量子化学的神经信息传递》 (2017年)。 在过程中。 ICML。

[7] Here we assume for simplicity that the graph is sparse with the number of edges |ℰ|=(n).

[7]在这里,为简单起见,我们假设图是稀疏的,边的数量| ℰ | =( n )。

[8] W. Hamilton et al., Inductive Representation Learning on Large Graphs (2017). In Proc. NeurIPS.

[8] W. Hamilton等人, 大图的归纳表示学习 (2017)。 在过程中。 NeurIPS。

[9] The number of neighbours in such graphs tends to grow exponentially with the neighbourhood expansion.

[9]在这种图中,邻居的数量会随着邻域扩展而呈指数增长。

[10] Sampling with replacement means that some neighbour nodes can appear more than once, in particular if the number of neighbours is smaller than k.

[10]替换抽样意味着某些邻居节点可能出现不止一次,特别是如果邻居数小于k时 。

[11] W.-L. Chiang et al., Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks (2019). In Proc. KDD.

[11] W.-L. Chiang等人,《 Cluster-GCN:一种用于训练深度图和大型图卷积网络的高效算法》 (2019年)。 在过程中。 KDD。

[12] H. Zeng et al., GraphSAINT: Graph Sampling Based Inductive Learning Method (2020) In Proc. ICLR.

[12] H. Zeng等人, GraphSAINT:《基于图采样的归纳学习方法》 (2020) ICLR。

[13] Y. Rong et al. DropEdge: Towards deep graph convolutional networks on node classification (2020). In Proc. ICLR. An idea similar to DropOut where a random subset of edges is used during training.

[13] Y. Rong等。 DropEdge:关于节点分类的深图卷积网络 (2020年)。 在过程中。 ICLR。 类似于DropOut的想法,在训练过程中使用随机的边缘子集。

[14] U. Alon and E. Yahav, On the bottleneck of graph neural networks and its practical implications (2020). arXiv:2006.05205. Identified the over-squashing phenomenon in graph neural networks, which is similar to one observed in sequential recurrent models.

[14] U. Alon和E. Yahav, 关于图神经网络的瓶颈及其实际意义 (2020年)。 arXiv:2006.05205。 确定了图神经网络中的过度挤压现象,这与在顺序递归模型中观察到的现象相似。

[15] Frasca et al., SIGN: Scalable Inception Graph Neural Networks (2020). ICML workshop on Graph Representation Learning and Beyond.

[15] Frasca等人, SIGN:可伸缩初始图神经网络 (2020)。 ICML关于图表示学习及其他的研讨会。

[16] O. Shchur et al. Pitfalls of graph neural network evaluation (2018). Workshop on Relational Representation Learning. Shows that simple GNN models perform on par with more complex ones.

[16] O. Shchur等。 图神经网络评估的陷阱 (2018)。 关系表示学习研讨会。 表明简单的GNN模型可以与更复杂的模型相提并论。

[17] F. Wu et al., Simplifying graph neural networks (2019). In Proc. ICML.

[17] F.Wu等人, 简化图神经网络 (2019)。 在过程中。 ICML。

[18] While we stress that SIGN does not need sampling for computational efficiency, there are other reasons why graph subsampling is useful. J. Klicpera et al. Diffusion improves graph learning (2020). Proc. NeurIPS show that sampled diffusion matrices improve performance of graph neural networks. We observed the same phenomenon in early SIGN experiments.

[18]虽然我们强调SIGN不需要采样就可以提高计算效率,但是还有其他原因使图子采样很有用。 J.Klicpera等。 扩散改善了图学习 (2020)。 进程 NeurIPS显示,采样扩散矩阵可提高图神经网络的性能。 我们在早期的SIGN实验中观察到了相同的现象。

[19] G. Bouritsas et al. Improving graph neural network expressivity via subgraph isomorphism counting (2020). arXiv:2006.09252. Shows how provably powerful GNNs can be obtained by structural node encoding.

[19] G. Bouritsas等。 通过子图同构计数 (2020) 提高图神经网络表达能力 。 arXiv:2006.09252。 说明如何通过结构节点编码获得功能强大的GNN。

[20] F. Monti, K. Otness, M. M. Bronstein, MotifNet: a motif-based graph convolutional network for directed graphs (2018). arXiv:1802.01572. Uses motif-based diffusion operators.

[20] F. Monti,K。Otness,MM Bronstein, MotifNet:用于有向图的基于主题的图卷积网络 (2018年)。 arXiv:1802.01572。 使用基于主题的扩散算子。

[21] C. Szegedi et al., Going deeper with convolution (2015). Proc. CVPR proposed the inception module in the already classical Google LeNet architecture. To be fair, we were not the first to think of graph inception modules. Our collaborator Anees Kazi from TU Munich, who was a visiting student at Imperial College last year, introduced them first.

[21] C. Szegedi等人, “通过卷积深入研究” (2015年)。 进程 CVPR在已经很经典的Google LeNet架构中提出了初始模块。 公平地说,我们并不是第一个想到图初始模块的人。 我们的合作者来自慕尼黑工业大学的Anees Kazi,去年曾在帝国理工学院做访问学生,他首先介绍了它们。

[22] Note that reaching higher-order neighbours is normally achieved by depth-wise stacking graph convolutional layers operating with direct neighbours; in our architecture this is directly achieved in the first layer by powers of graph operators.

[22]注意,到达高阶邻居通常是通过与直接邻居一起操作的深度堆叠图卷积层来实现的; 在我们的架构中,这是通过图运算符的力量直接在第一层实现的。

SIGN implementation is available in PyTorch Geometric. Interested in Graph Deep Learning? See other posts on Medium.

在 PyTorch Geometric中 可以使用 SIGN 实现 。 对图深度学习感兴趣吗? 请参阅 Medium上的 其他帖子 。

翻译自: https://medium.com/@michael.bronstein/simple-scalable-graph-neural-networks-7eb04f366d07

架构垂直伸缩和水平伸缩区别