A beginner's approach

Code

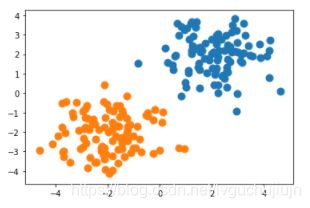

1. Generate a synthetic dataset

import torch
import matplotlib.pyplot as plt

n_data = torch.ones(100, 2)
x1 = torch.normal(2 * n_data, 1)    # class 0: 100 points centered at (2, 2)
y1 = torch.zeros(100, 1)
x2 = torch.normal(-2 * n_data, 1)   # class 1: 100 points centered at (-2, -2)
y2 = torch.ones(100, 1)
x = torch.cat((x1, x2), 0).type(torch.FloatTensor)  # features, shape (200, 2)
y = torch.cat((y1, y2), 0).type(torch.FloatTensor)  # labels, shape (200, 1)

# Visualize the data: one scatter call per class
plt.scatter(x[:len(x1), 0].numpy(), x[:len(x1), 1].numpy(), s=100, lw=0)
plt.scatter(x[len(x1):, 0].numpy(), x[len(x1):, 1].numpy(), s=100, lw=0)
plt.show()

Dataset visualization
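What `torch.normal(2*n_data, 1)` does may be easier to see without tensors: each entry is drawn independently from a normal distribution whose mean is the matching entry of the first argument (here 2) and whose standard deviation is 1. Below is a minimal standard-library sketch under that reading. `make_cluster`, `feature_mean` and the fixed seed are illustrative names and choices, not part of the original code.

```python
import random

def make_cluster(center, n_points=100, n_features=2, std=1.0, rng=None):
    """Draw n_points samples; every feature is sampled from N(center, std**2)."""
    if rng is None:
        rng = random.Random()
    return [[rng.gauss(center, std) for _ in range(n_features)]
            for _ in range(n_points)]

rng = random.Random(0)  # fixed seed (an assumption) so the sketch is repeatable
cluster_pos = make_cluster(2.0, rng=rng)    # plays the role of x1, label 0
cluster_neg = make_cluster(-2.0, rng=rng)   # plays the role of x2, label 1

# Stack the two clusters and their labels, like torch.cat((x1, x2), 0)
points = cluster_pos + cluster_neg
labels = [0.0] * len(cluster_pos) + [1.0] * len(cluster_neg)

def feature_mean(cluster):
    """Average over every feature value in a cluster."""
    values = [v for point in cluster for v in point]
    return sum(values) / len(values)

print(feature_mean(cluster_pos), feature_mean(cluster_neg))
```

The two printed means should land close to 2 and -2, which is why the two classes separate so cleanly in the scatter plot.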

2. Initialize the parameters w and b

w = torch.ones(2, 1, requires_grad=True)
b = torch.ones(1, 1, requires_grad=True)

3. The sigmoid function of the logistic regression model

def sigmoid(w, b):
    # Predicted probability of class 1 for every row of the global tensor x
    y_hat = 1 / (1 + torch.exp(-(torch.mm(x, w) + b)))
    return y_hat
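The hand-written formula `1/(1+exp(-z))` is fine for the moderate values in this example. For very negative `z`, however, `exp(-z)` becomes huge, and in plain Python it overflows outright. A common workaround branches on the sign of `z`, sketched here with the standard library only (`naive_sigmoid` and `stable_sigmoid` are illustrative names). In PyTorch itself, the built-in `torch.sigmoid` could be used in place of the manual formula.

```python
import math

def naive_sigmoid(z):
    """Direct translation of the formula; math.exp overflows for very negative z."""
    return 1.0 / (1.0 + math.exp(-z))

def stable_sigmoid(z):
    """Same function, but only ever exponentiates a non-positive number."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))   # here exp(-z) <= 1
    e = math.exp(z)                          # here z < 0, so exp(z) < 1
    return e / (1.0 + e)

for z in (-1000.0, 0.0, 1000.0):
    print(z, stable_sigmoid(z))

try:
    naive_sigmoid(-1000.0)
except OverflowError:
    print("naive formula overflows at z = -1000")
```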

4. Cross-entropy loss function

def loss(y_hat, y):
    # Per-sample binary cross-entropy
    loss_func = -y * torch.log(y_hat) - (1 - y) * torch.log(1 - y_hat)
    return loss_func

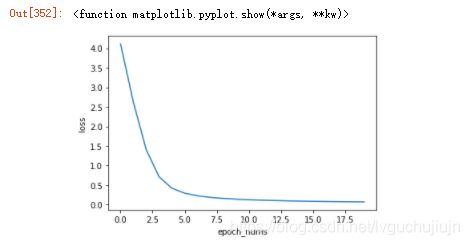
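One thing to watch in the cross-entropy formula: if a prediction ever reaches exactly 0 or 1, the logarithm returns negative infinity and the loss becomes `inf` or `nan`. A common guard is to clamp the prediction into `[eps, 1 - eps]` before taking the log. The sketch below shows the idea on plain floats. `binary_cross_entropy` and `eps = 1e-7` are illustrative choices, not taken from the original code.

```python
import math

def binary_cross_entropy(y_hat, y, eps=1e-7):
    """Cross-entropy for one sample, with y_hat clamped away from 0 and 1."""
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    return -y * math.log(y_hat) - (1.0 - y) * math.log(1.0 - y_hat)

print(binary_cross_entropy(0.9, 1.0))  # confident and right: small loss
print(binary_cross_entropy(0.9, 0.0))  # confident and wrong: large loss
print(binary_cross_entropy(1.0, 0.0))  # stays finite thanks to the clamp
```

The same guard could be applied to the tensor version with `torch.clamp(y_hat, eps, 1 - eps)`.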

5. Run the iterations

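lr = 0.2
epoch_nums = 20  # number of iterations
graph = []  # loss values, plotted after training

for i in range(epoch_nums):
    y_hat = sigmoid(w, b)
    J = torch.mean(loss(y_hat, y))  # cost: mean loss over all samples
    graph.append(J.item())  # store a plain float so matplotlib can plot it
    J.backward()  # backpropagation
    # Gradient descent update of w and b
    w.data = w.data - lr * w.grad.data
    b.data = b.data - lr * b.grad.data
    # Reset the gradients so they do not accumulate across iterations
    w.grad.data.zero_()
    b.grad.data.zero_()
    print("epoch:{} loss:{:.4}".format(i + 1, J.item()))

plt.plot(graph)
plt.xlabel("epoch_nums")
plt.ylabel("loss")
plt.show()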
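For reference, the gradients that `J.backward()` fills into `w.grad` and `b.grad` have a well-known closed form for this model. The notation below is introduced only for illustration: $m$ is the number of samples (200 here), $X$ is the $m \times 2$ data matrix, and $\sigma$ is the sigmoid function.

```latex
J(w, b) = -\frac{1}{m} \sum_{i=1}^{m}
  \Big[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \Big],
\qquad \hat{y}_i = \sigma(x_i^\top w + b)

\frac{\partial J}{\partial w} = \frac{1}{m} X^\top (\hat{y} - y),
\qquad
\frac{\partial J}{\partial b} = \frac{1}{m} \sum_{i=1}^{m} (\hat{y}_i - y_i)

w \leftarrow w - \mathrm{lr} \cdot \frac{\partial J}{\partial w},
\qquad
b \leftarrow b - \mathrm{lr} \cdot \frac{\partial J}{\partial b}
```

The last line is the update that the loop applies on every epoch.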

Results

epoch:1 loss:4.111

epoch:2 loss:2.619

epoch:3 loss:1.409

epoch:4 loss:0.714

epoch:5 loss:0.421

epoch:6 loss:0.2933

epoch:7 loss:0.2267

epoch:8 loss:0.1865

epoch:9 loss:0.1595

epoch:10 loss:0.1401

epoch:11 loss:0.1255

epoch:12 loss:0.1141

epoch:13 loss:0.1048

epoch:14 loss:0.09721

epoch:15 loss:0.09079

epoch:16 loss:0.08532

epoch:17 loss:0.08059

epoch:18 loss:0.07645

epoch:19 loss:0.0728

epoch:20 loss:0.06955

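(With a learning rate of 0.2 the loss falls steadily from 4.111 to 0.06955 over 20 epochs, so this value appears to be a reasonable choice.)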
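To get a feel for how the learning rate changes the picture, here is a small torch-free sketch that repeats the same batch gradient descent on a hand-made toy dataset and compares two rates. The six points, the helper `train`, and the comparison rate 0.01 are all assumptions made for illustration. The gradients are written out by hand as the mean of `(y_hat - y) * x` instead of coming from autograd.

```python
import math

# Hypothetical toy data laid out like the article's dataset:
# points near (2, 2) get label 0, points near (-2, -2) get label 1.
X = [(2.5, 1.5), (1.5, 2.5), (2.0, 2.0),
     (-2.5, -1.5), (-1.5, -2.5), (-2.0, -2.0)]
Y = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(lr, epochs=20):
    """Batch gradient descent from w = (1, 1), b = 1; returns per-epoch losses."""
    w, b = [1.0, 1.0], 1.0
    m = len(X)
    losses = []
    for _ in range(epochs):
        preds = [sigmoid(w[0] * x0 + w[1] * x1 + b) for x0, x1 in X]
        # Mean cross-entropy, recorded before the update as in the article
        losses.append(sum(-y * math.log(p) - (1 - y) * math.log(1 - p)
                          for p, y in zip(preds, Y)) / m)
        # Hand-written gradients: mean of (y_hat - y) * x and mean of (y_hat - y)
        errors = [p - y for p, y in zip(preds, Y)]
        grad_w = [sum(e * x[j] for e, x in zip(errors, X)) / m for j in range(2)]
        grad_b = sum(errors) / m
        w = [w[j] - lr * grad_w[j] for j in range(2)]
        b -= lr * grad_b
    return losses

for lr in (0.01, 0.2):
    losses = train(lr)
    print("lr={} first loss:{:.4} last loss:{:.4}".format(lr, losses[0], losses[-1]))
```

On this toy data both rates should lower the loss on every epoch, but the smaller one is expected to remain far from the minimum after 20 epochs, which is consistent with the remark that 0.2 suits this problem.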