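Classifying Flowers with a CNN in TensorFlow and Visualizing the Training with TensorBoard

Dataset download

Trained model download

Reference blog: https://blog.csdn.net/u014281392/article/details/74316028

Environment: TensorFlow 1.14

Here is the code: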

import os
import numpy as np
import tensorflow as tf
import time


# Collect the path and label of every image
def get_file(file_dir):
    image_list = []
    label_list = []
    for train_class in os.listdir(file_dir):
        for pic in os.listdir(file_dir + '/' + train_class):
            image_list.append(file_dir + '/' + train_class + '/' + pic)
            label_list.append(train_class)
    temp = np.array([image_list, label_list])
    temp = temp.transpose()
    np.random.shuffle(temp)  # shuffle the (path, label) rows together
    # Read both lists back from the shuffled array; otherwise the shuffle has no effect
    image_list = list(temp[:, 0])
    label_list = [int(i) for i in temp[:, 1]]  # class folder names must be integers
    return image_list, label_list

# Read the images and build training batches
def get_batch(image, label, image_W, image_H, batch_size, capacity):
    image = tf.cast(image, tf.string)
    label = tf.cast(label, tf.int32)
    input_queue = tf.train.slice_input_producer([image, label])
    label = input_queue[1]
    image_contents = tf.read_file(input_queue[0])
    image = tf.image.decode_jpeg(image_contents, channels=3)
    # The argument order is (image, target_height, target_width)
    image = tf.image.resize_image_with_crop_or_pad(image, image_H, image_W)
    image = tf.image.per_image_standardization(image)
    image_batch, label_batch = tf.train.batch([image, label], batch_size=batch_size, num_threads=16, capacity=capacity)
    label_batch = tf.reshape(label_batch, [batch_size])
    image_batch = tf.cast(image_batch, tf.float32)
    return image_batch, label_batch

def weight_variable(shape, n):
    # Initial weight values: truncated normal with standard deviation n
    initial = tf.truncated_normal(shape, stddev=n, dtype=tf.float32)
    return initial


def bias_variable(shape):
    # Initial bias values: constant 0.1
    initial = tf.constant(0.1, shape=shape, dtype=tf.float32)
    return initial


def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding="SAME")


def max_pool_2x2(x, name):
    # Despite the name, the window is 3x3; the stride of 2 halves the height and width
    return tf.nn.max_pool(x, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding="SAME", name=name)

# Define the model
def inference(images, batch_size, n_classes):
    # First convolutional layer
    with tf.variable_scope('conv1') as scope:
        w_conv1 = tf.Variable(weight_variable([3, 3, 3, 16], 1.0), name="weights", dtype=tf.float32)
        # "blases" (sic): the variable name is left unchanged so existing checkpoints still load
        b_conv1 = tf.Variable(bias_variable([16]), name="blases", dtype=tf.float32)
        h_conv1 = tf.nn.relu(conv2d(images, w_conv1) + b_conv1, name="conv1")

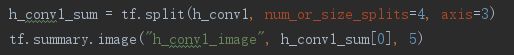

        h_conv1_sum = tf.split(h_conv1, num_or_size_splits=4, axis=3)  # split the 16 channels into 4 tensors of shape [20, 56, 56, 4]
        tf.summary.image("h_conv1_image", h_conv1_sum[0], 5)  # log the feature maps after convolution

    # First pooling layer
    with tf.variable_scope('pooling1_lrn') as scope:
        pool1 = max_pool_2x2(h_conv1, "pooling1")
        norm1 = tf.nn.lrn(pool1, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name="norm1")
        pool1_sum = tf.split(pool1, num_or_size_splits=4, axis=3)
        tf.summary.image("pooling1", pool1_sum[0], 5)  # log the feature maps after pooling

    # Second convolutional layer
    with tf.variable_scope('conv2') as scope:
        w_conv2 = tf.Variable(weight_variable([3, 3, 16, 32], 1.0), name="weights", dtype=tf.float32)
        b_conv2 = tf.Variable(bias_variable([32]), name="blases", dtype=tf.float32)
        h_conv2 = tf.nn.relu(conv2d(norm1, w_conv2) + b_conv2, name="conv2")
        h_conv2_sum = tf.split(h_conv2, num_or_size_splits=8, axis=3)  # 32 channels -> 8 tensors of 4 channels
        tf.summary.image("h_conv2_image", h_conv2_sum[0], 5)

    # Second pooling layer
    with tf.variable_scope('pooling2_lrn') as scope:
        pool2 = max_pool_2x2(h_conv2, "pooling2")
        norm2 = tf.nn.lrn(pool2, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name="norm2")
        pool2_sum = tf.split(pool2, num_or_size_splits=8, axis=3)
        tf.summary.image("pooling2", pool2_sum[0], 5)

    # Third convolutional layer
    with tf.variable_scope('conv3') as scope:
        w_conv3 = tf.Variable(weight_variable([3, 3, 32, 64], 1.0), name="weights", dtype=tf.float32)
        b_conv3 = tf.Variable(bias_variable([64]), name="blases", dtype=tf.float32)
        h_conv3 = tf.nn.relu(conv2d(norm2, w_conv3) + b_conv3, name="conv3")
        h_conv3_sum = tf.split(h_conv3, num_or_size_splits=16, axis=3)  # 64 channels -> 16 tensors of 4 channels
        tf.summary.image("h_conv3_image", h_conv3_sum[0], 5)

    # Third pooling layer
    with tf.variable_scope('pooling3_lrn') as scope:
        pool3 = max_pool_2x2(h_conv3, "pooling3")
        norm3 = tf.nn.lrn(pool3, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name="norm3")
        pool3_sum = tf.split(pool3, num_or_size_splits=16, axis=3)
        tf.summary.image("pooling3", pool3_sum[0], 5)

    # Fourth layer: fully connected
    with tf.variable_scope('local3') as scope:
        reshape = tf.reshape(norm3, shape=[batch_size, -1])  # flatten each sample to a vector
        dim = reshape.get_shape()[1].value
        w_fc1 = tf.Variable(weight_variable([dim, 128], 0.005), name="weights", dtype=tf.float32)
        b_fc1 = tf.Variable(bias_variable([128]), name="blases", dtype=tf.float32)
        h_fc1 = tf.nn.relu(tf.matmul(reshape, w_fc1) + b_fc1, name=scope.name)

    # Fifth layer: fully connected
    with tf.variable_scope('local4') as scope:
        w_fc2 = tf.Variable(weight_variable([128, 128], 0.005), name="weights", dtype=tf.float32)
        b_fc2 = tf.Variable(bias_variable([128]), name="blases", dtype=tf.float32)
        h_fc2 = tf.nn.relu(tf.matmul(h_fc1, w_fc2) + b_fc2, name=scope.name)
        h_fc2_dropout = tf.nn.dropout(h_fc2, 0.5)  # keep_prob=0.5: randomly drop units to reduce overfitting

    # Output layer (produces the logits fed to softmax)
    with tf.variable_scope("sofemax_liner") as scope:
        weights = tf.Variable(weight_variable([128, n_classes], 0.005), name="softmax_linear", dtype=tf.float32)
        biases = tf.Variable(bias_variable([n_classes]), name="biases", dtype=tf.float32)
        softmax_linear = tf.add(tf.matmul(h_fc2_dropout, weights), biases, name="softmax_linear")
    return softmax_linear

# Compute the loss
def losses(logits, labels):
    with tf.variable_scope("loss") as scope:
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits, labels=labels, name="xentropy_per_example")
        loss = tf.reduce_mean(cross_entropy, name="loss")
        tf.summary.scalar(scope.name + "/loss", loss)  # log the loss
    return loss


# Minimize the loss
def trainning(loss, learning_rate):
    with tf.name_scope("oprimizer"):
        optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
        global_step = tf.Variable(0, name="global_step", trainable=False)
        train_op = optimizer.minimize(loss, global_step=global_step)
    return train_op


# Compute the accuracy
def evaluation(logits, labels):
    with tf.variable_scope("accuracy") as scope:
        correct = tf.nn.in_top_k(logits, labels, 1)  # True where the top-1 prediction matches the label
        accuracy = tf.reduce_mean(tf.cast(correct, tf.float16))
        tf.summary.scalar(scope.name + "/accuracy", accuracy)  # log the accuracy
    return accuracy

# ******************************************************************************************************************* #

N_CLASSES = 5  # number of flower classes
IMG_W = 56  # image width
IMG_H = 56  # image height
BATCH_SIZE = 20  # number of images per batch
CAPACITY = 200  # maximum capacity of the input queue

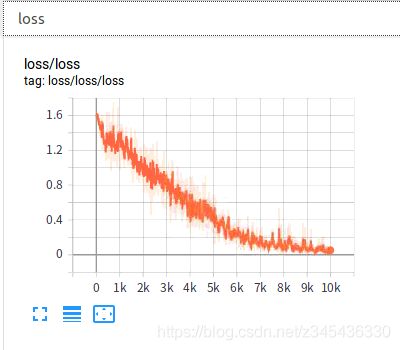

MAX_STEP = 10000  # number of training steps
learning_rate = 0.0001  # learning rate
train_dir = "flowers"  # dataset directory
logs_train_dir = "CK-part/"  # directory for logs and checkpoints

# ******************************************************************************************************************* #

train, train_label = get_file(train_dir)  # collect image paths and labels
train_batch, train_label_batch = get_batch(train, train_label, IMG_W, IMG_H, BATCH_SIZE, CAPACITY)  # build image and label batches

# Build the training graph
train_logits = inference(train_batch, BATCH_SIZE, N_CLASSES)
train_loss = losses(train_logits, train_label_batch)
train_op = trainning(train_loss, learning_rate)
train_acc = evaluation(train_logits, train_label_batch)

image_summary = tf.summary.image("image", train_batch, 5)  # log the input images
summary_op = tf.summary.merge_all()  # merge every summary into a single op
saver = tf.train.Saver()  # saves model checkpoints

# ******************************************************************************************************************* #

# Train the model and print the loss and accuracy along the way
if __name__ == "__main__":
    sess = tf.Session()  # open a session
    train_writer = tf.summary.FileWriter(logs_train_dir, sess.graph)  # writes the TensorBoard logs
    sess.run(tf.global_variables_initializer())  # initialize all model variables
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)  # start the input queue threads
    try:
        print(time.strftime('%Y-%m-%d %H:%M:%S'))
        for step in np.arange(MAX_STEP):
            if coord.should_stop():
                break
            _, tra_loss, tra_acc = sess.run([train_op, train_loss, train_acc])
            if step % 10 == 0:
                print("Step %d, train loss = %.2f, train accuracy = %.2f%%" % (step, tra_loss, tra_acc * 100.0))
                summary_str = sess.run(summary_op)
                train_writer.add_summary(summary_str, step)
        # Save a checkpoint once the loop finishes
        checkpoint_path = os.path.join(logs_train_dir, "thing.ckpt")
        saver.save(sess, checkpoint_path)
        print(time.strftime('%Y-%m-%d %H:%M:%S'))
    except tf.errors.OutOfRangeError:
        print("Done training -- epoch limit reached")
    finally:
        coord.request_stop()
    coord.join(threads)
    train_writer.close()  # flush any pending summaries to disk
    sess.close()
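
The value of `dim` in the first fully connected layer follows from the layer settings, and it can help to trace it by hand. The sketch below assumes the configuration used in the code above: a 56x56 input, three stride-1 SAME convolutions with 16, 32 and 64 filters, each followed by a stride-2 SAME pooling. The helpers `same_out` and `flattened_dim` are hypothetical names for illustration, not TensorFlow functions.

```python
import math


def same_out(size, stride):
    """Output length of a SAME-padded conv or pool: ceil(size / stride)."""
    return math.ceil(size / stride)


def flattened_dim(height, width, filters):
    """Trace height and width through conv (stride 1) + pool (stride 2) per layer."""
    channels = None
    for channels in filters:
        height, width = same_out(height, 1), same_out(width, 1)  # convolution keeps the size
        height, width = same_out(height, 2), same_out(width, 2)  # pooling halves it, rounding up
    return height * width * channels


dim = flattened_dim(56, 56, [16, 32, 64])
print(dim)  # 7 * 7 * 64 = 3136
```

The spatial size goes 56, 28, 14, 7, so each sample is flattened to 3136 values before the 128-unit layer. Local response normalization does not change the shape, so it is left out of the trace.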

How to visualize:

1. Choose a log directory

2. Record the data you need (tf.summary.***)

3. Merge the summaries and write them to the log


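4. View the data

From a command prompt, change into the log directory and run: tensorboard --logdir=./

Alternatively, put the command in a .bat file.

Open a browser and go to: http://localhost:6006

The result looks like this: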

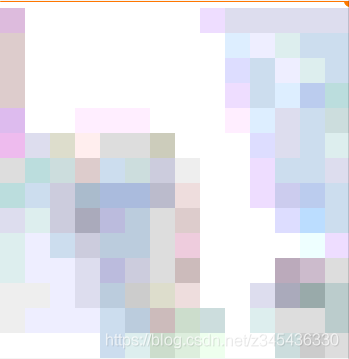

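Original input images:

First convolutional layer

First pooling layer

Second convolutional layer

Second pooling layer

Third convolutional layer

Third pooling layer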
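
As an alternative to a .bat file, the TensorBoard launch command from step 4 could be assembled in Python. This is only a sketch: `tensorboard_command` is a hypothetical helper, and the log directory `CK-part/` and port 6006 are taken from the code and URL above.

```python
def tensorboard_command(logdir, port=6006):
    """Build the argument list for launching TensorBoard on a log directory."""
    return ["tensorboard", "--logdir", logdir, "--port", str(port)]


cmd = tensorboard_command("CK-part/")
print(" ".join(cmd))

# To actually launch TensorBoard from Python (blocks until it is stopped):
#     import subprocess
#     subprocess.run(cmd, check=True)
```

Passing the log directory explicitly means the command works from any working directory, not only from inside the log folder.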

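Problems encountered:

1. InvalidArgumentError (see above for traceback): Tensor must be 4-D with last dim 1, 3, or 4, not [20,56,56,16]

The last dimension (the channel count) passed to tf.summary.image must be 1, 3, or 4. Fix:

Split the tensor into 4 slices of shape [20,56,56,4] each and save the first slice.

Alternatively, split it into 16 single-channel slices and save them as grayscale images.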
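
The two splitting options for problem 1 can be checked on plain arrays before touching the graph. The sketch below uses NumPy's `np.split`, which divides an axis into equal parts in the same way as `tf.split` does when given an integer; the zero-filled array is only a stand-in for the real conv1 output.

```python
import numpy as np

# Stand-in for the conv1 output: batch of 20, 56x56 pixels, 16 channels
feature_maps = np.zeros((20, 56, 56, 16), dtype=np.float32)

# Option 1: 4 slices of 4 channels each (a 4-channel image is treated as RGBA)
rgba_slices = np.split(feature_maps, 4, axis=3)
print(rgba_slices[0].shape)  # (20, 56, 56, 4)

# Option 2: 16 single-channel slices (a 1-channel image is treated as grayscale)
gray_slices = np.split(feature_maps, 16, axis=3)
print(len(gray_slices), gray_slices[0].shape)  # 16 (20, 56, 56, 1)
```

With option 1 only 4 of the 16 channels are shown per summary; option 2 shows one channel at a time but needs a separate summary for each slice you want to see.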

2. tensorboard

The SCALARS tab reports KeyError: None, and loss and accuracy cannot be displayed (the error occurred on Windows).

Fix: install the tensorflow and tensorboard packages on Linux and view the scalars there.

3. W tensorflow/core/framework/op_kernel.cc:1401] OP_REQUIRES failed at save_restore_v2_ops.cc:137 : Unknown: Failed to rename: CK-part/thing.ckpt.index.tempstate10496770459040915805 to: CK-part/thing.ckpt.index : Access is denied.

; Input/output error

Fix: close the running TensorBoard process and its browser page, then save again.